Abstract

In order to change the control approach that commercially available Unmanned Aerial Vehicles (UAVs) use to execute a flight plan, which relies on the Global Positioning System (GPS) and assumes an obstacle-free environment, we propose a hierarchical multi-layered control system that allows a UAV to define its flight plan during flight and to locate itself by means other than GPS. The work presented in this article aims to lay the foundation for an autonomous airborne agent capable of locating itself with the aid of computer vision, modeling its environment, and planning and executing a three-dimensional trajectory. At the current stage of development, we locate the vehicle using a board of artificial markers, and the flight plan to execute is defined as either a cubic spline or a lemniscate. As results, we present the flight data recorded while the proposed control architecture drives the vehicle autonomously.


1 Introduction

An autonomous agent acts on its environment by its own means, relying little or not at all on other parties. An agent has to model its environment and locate itself in it; only then can it create a plan and execute it to achieve a certain goal.

This translates into a set of subproblems that must be solved to turn a UAV into an agent: (a) model its environment and estimate its current state; (b) given a certain goal, generate a feasible plan for the UAV to execute; and (c) control the vehicle so that it executes the plan. In the following sections we describe the hierarchical multi-layered approach we propose to solve all these subproblems; more importantly, we describe the use of computer vision to locate the vehicle and the possibility of operating different kinds of aerial vehicles. The results show how this approach allowed the operation of heterogeneous vehicles while describing different three-dimensional trajectories.

2 Related Work

A robot consists of a series of highly heterogeneous systems that are complex in nature and require orchestrated integration to function properly. For a robot with given mechanical features, there are many approaches to control it, depending on the problem it is intended to solve, but there are only three archetypes [5]: hierarchical, behavioral and hybrid. The hierarchical approach follows a sense-plan-act scheme, prioritizing deliberative control above all else, which makes it inflexible. Behavioral approaches follow a bottom-up priority scheme and use behaviors to react to the environment in real time; because of their reactive nature, achieving complex objectives is often difficult. Hybrid architectures try to make the best of both, combining the deliberative skills of the former with the flexibility of the latter.

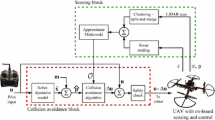

Some examples of high-level, computationally demanding features are path planning, human interaction and multi-robot coordination; examples of low-level routines are sensor reading, actuator control and localization. Hybrid multi-layered architectures have proven successful in mobile robotics because they make it possible to properly interface, upgrade and coordinate high-level and low-level routines [14], to interact with humans [6], and to coordinate groups of identical robots [10, 18]. This kind of architecture, like the one shown in Fig. 1 [3], consists of three layers: (1) the low-level control layer directly manages and accesses all hardware peripherals in real time, and also implements some reactive behaviors; (2) the planner is a set of high-level processes that, given the current status of the robot and its environment, create a plan for the robot to achieve a certain goal; and (3) the sequencer is the intermediate layer between the low-level control and the planner, which executes the steps of the plan in sequence. If an error occurs, the sequencer updates the planner with the current status and asks for a new course of action.
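The interaction between the three layers can be illustrated with a toy Python sketch. All class and method names (`Planner`, `Sequencer`, `LowLevelControl`, `execute`) are hypothetical, and the "plan" is just a list of named steps; the sketch only shows the control flow, in which the sequencer runs the steps in order and, when one fails, reports the current status back to the planner and asks for a new plan.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    """A named action the low-level layer can execute (hypothetical)."""
    name: str


@dataclass
class LowLevelControl:
    """Bottom layer: would drive the hardware; here it only records executed steps."""
    failing_steps: set = field(default_factory=set)  # steps that fail once (simulated fault)
    executed: List[str] = field(default_factory=list)

    def execute(self, step: Step) -> bool:
        if step.name in self.failing_steps:
            self.failing_steps.discard(step.name)  # fail only on the first attempt
            return False
        self.executed.append(step.name)
        return True


class Planner:
    """Top layer: builds a plan from the current status (steps already done) and a goal."""

    def __init__(self) -> None:
        self.calls = 0

    def plan(self, completed: List[str], goal: str) -> List[Step]:
        self.calls += 1
        mission = ["takeoff", f"fly_to_{goal}", "land"]
        return [Step(n) for n in mission if n not in completed]


class Sequencer:
    """Middle layer: executes the plan step by step and requests a new plan on error."""

    def __init__(self, planner: Planner, control: LowLevelControl, max_replans: int = 3) -> None:
        self.planner, self.control, self.max_replans = planner, control, max_replans

    def run(self, goal: str) -> bool:
        plan = self.planner.plan(self.control.executed, goal)
        replans = 0
        while plan:
            step = plan.pop(0)
            if not self.control.execute(step):
                if replans == self.max_replans:
                    return False  # give up: the planner could not recover
                replans += 1
                # Report the current status (completed steps) and ask for a new course of action.
                plan = self.planner.plan(self.control.executed, goal)
        return True
```

With a simulated one-time fault on `fly_to_B`, the sequencer consults the planner twice and the mission still completes with the steps `takeoff`, `fly_to_B`, `land`.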

In the field of UAVs, control schemes have been tested following a reactive approach, i.e., they act proportionally to an error metric, usually defined by tracking and triangulating salient features with computer vision [19, 20]. There are two schemes for exploiting the payload of a UAV: in the first, all information generated during the flight is stored in a non-volatile storage system for analysis after landing [9, 13]; in the second, all gathered information is sent to the Ground Control Station (GCS) for further analysis and decision making [1, 2]. In either scheme, the UAV acts as a teleoperated entity with little autonomy to react to its flying conditions, whether adverse or not. Even so, these schemes are popular and good enough for most civilian and military applications.

Nowadays, a growing research community is working on a third scheme, in which the flight plan of the UAV is not merely dictated by a set of georeferenced waypoints or by a remote pilot. Instead, the UAV processes the information collected by its onboard sensors to better understand its environment and to react to or interact with it. This problem is known as Simultaneous Localization and Mapping (SLAM) [11, 17]. Solving the SLAM problem means that a robot can navigate autonomously through its environment while creating a virtual representation of its surroundings: the map [4, 12]. The work presented in this article is related to the third scheme. In this article, we describe how we plan to go one step beyond the reactive approach by introducing a three-layer architecture for the control of UAVs, and the first steps we have taken.

3 Hardware Description

This work was successfully tested with two different UAVs: the Solo from 3D Robotics and the AR-Drone v2 manufactured by Parrot (see Fig. 2). Both vehicles are ready-to-fly UAVs and feature an onboard monoscopic camera. To communicate with these vehicles, we only had to adapt the low-level control routines. For the AR-Drone, we used the ROS package created to communicate with it. To gain access to the Solo, we used GStreamer (Note 1) to receive the video feed and DroneKit, the software library for interfacing with UAVs compatible with the MAVLink protocol [15]. As a physical interface to operate the vehicle, we used a hardware remote control, and pilot commands were given priority over autonomous control: in unforeseen situations, the pilot can immediately bypass the autonomous control by operating the hardware controller. The software was developed on the Linux operating system and the Robot Operating System (ROS) [16].
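The priority of pilot commands over autonomous control can be expressed as a simple arbitration rule. This is a hedged sketch, not the authors' code: the `Command` type, the normalised stick range of [-1, 1] and the 0.05 deadband are assumptions. The deadband keeps small stick noise from triggering an override, while any deliberate pilot input takes over immediately.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Command:
    """Normalised stick-style command in [-1, 1] (hypothetical representation)."""
    roll: float = 0.0
    pitch: float = 0.0
    climb: float = 0.0
    yaw: float = 0.0

    def magnitude(self) -> float:
        # Largest absolute deflection over all four axes.
        return max(abs(self.roll), abs(self.pitch), abs(self.climb), abs(self.yaw))


def arbitrate(pilot: Command, autonomous: Command, deadband: float = 0.05) -> Tuple[Command, str]:
    """Pilot input outside the deadband always overrides the autonomous command."""
    if pilot.magnitude() > deadband:
        return pilot, "pilot"
    return autonomous, "autonomous"
```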

4 Proposed Approach and Methodology

The test scenario is shown in Fig. 4: the UAV follows the desired trajectory (marked in blue) while overflying artificial markers fixed on the ground, and its downward-looking camera captures the aerial view and transmits the video feed to the GCS. Figure 4 also shows the reference frames attached to the monoscopic camera, the world, the center of gravity (CoG) of the vehicle, and the North-East-Down (NED) frame (X: North, Y: East, Z: Down).

At the top level, the proposed hierarchical multi-layer architecture defines the desired trajectory \(\mathbf {r}_d(t)\) with respect to the world frame as a flight plan; at the bottom level, it makes noisy estimates of the position of the vehicle's CoG with respect to the world frame using computer vision. These noisy estimates are filtered with a Kalman filter and then compared with the desired position, yielding an error that is minimized by driving the vehicle towards the desired position.

Figure 3 shows the structure of the architecture proposed in this paper. At the top is the high-level planner node, in charge of computing the desired flight plan for the UAV. At the bottom are the low-level nodes: the hardware interface to the vehicle and the camera, the computer vision localization nodes and the controller. The trajectory generator node defines the desired position of the UAV according to the parameters set by the planner. Currently, the trajectory tracker can generate either a lemniscate or a spline trajectory; the sequencer is in charge of switching between the two, depending on the flight plan.

To express spatial relationships between reference frames, we used rigid body transformations in homogeneous coordinates, denoted as:

\[\mathbf {T} = \begin{bmatrix} \mathbf {R} & \mathbf {t} \\ \mathbf {0}^{\top } & 1 \end{bmatrix},\]

where \(\mathbf {R} \in SO(3)\) and \(\mathbf {t} \in \mathbb {R}^3\) are the rotation and translation components, respectively. Within the multi-layered architecture, we used the tf library by Foote [7] to manage all rigid body transformations.
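The idea of keeping all rigid body transformations in a graph, as the tf library [7] does and as Fig. 5 depicts, can be sketched in a few lines of Python with NumPy. This is not the tf API: `FrameGraph`, `set_transform`, `lookup` and the frame names are hypothetical. Each edge stores the pose of a child frame in its parent frame; a lookup searches for a path between two frames and composes the transforms along it, using the closed-form inverse \(\mathbf{T}^{-1} = [\mathbf{R}^\top, -\mathbf{R}^\top\mathbf{t}; \mathbf{0}^\top, 1]\) whenever an edge is traversed against its direction.

```python
from collections import deque

import numpy as np


def make_transform(R, t):
    """4x4 homogeneous transform [[R, t], [0, 1]]."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert(T):
    """Closed-form inverse [[R^T, -R^T t], [0, 1]] of a rigid body transform."""
    R, t = T[:3, :3], T[:3, 3]
    return make_transform(R.T, -R.T @ t)


def rot_z(psi):
    """Rotation by psi radians about the z axis."""
    c, s = np.cos(psi), np.sin(psi)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


class FrameGraph:
    """Toy tf-like store: edge (parent, child) holds the pose of child in parent."""

    def __init__(self):
        self.edges = {}

    def set_transform(self, parent, child, T):
        self.edges[(parent, child)] = T

    def lookup(self, target, source):
        """Pose of `source` expressed in `target` (maps source coords to target coords)."""
        adj = {}
        for (parent, child), T in self.edges.items():
            adj.setdefault(parent, []).append((child, T))
            adj.setdefault(child, []).append((parent, invert(T)))  # edge walked backwards
        queue, seen = deque([(target, np.eye(4))]), {target}
        while queue:  # breadth-first search, composing transforms along the path
            frame, T_acc = queue.popleft()
            if frame == source:
                return T_acc
            for nxt, T in adj.get(frame, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, T_acc @ T))
        raise KeyError(f"no path between {target} and {source}")
```

For example, with a camera 2 m above the world origin (shifted 1 m along x and yawed by 90°) and a body frame 0.1 m behind the camera along the camera's negative z axis, `lookup("world", "base")` places the body origin at (1, 0, 1.9).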

Note that if we estimate the pose of the camera with respect to the world frame using computer vision, we can also locate the vehicle's CoG with respect to the world frame, because the camera is rigidly mounted on the UAV (the rigid body transformation from the camera to the CoG of the vehicle is known beforehand). To estimate the camera pose, we used the technique developed by Garrido et al. [8], which consists of segmenting the artificial markers in the images and estimating the pose of the camera from all detected corners. The location of the CoG with respect to the world frame can then be computed by composing the estimated camera pose with the known camera-to-CoG transformation.
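The localization chain described above can be sketched as follows. The sketch assumes, as is common for marker-based pose estimators (OpenCV's `solvePnP` uses this convention), that the detector returns the board pose in camera coordinates as an axis-angle vector `rvec` and a translation `tvec`, so that \(\mathbf{x}_{cam} = \mathbf{R}\mathbf{x}_{board} + \mathbf{t}\), and that the board frame coincides with the world frame. The function names and the example numbers are hypothetical.

```python
import numpy as np


def rodrigues(rvec):
    """Axis-angle vector -> rotation matrix (Rodrigues' formula)."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K


def make_transform(R, t):
    """4x4 homogeneous transform [[R, t], [0, 1]]."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def vehicle_in_world(rvec, tvec, T_cam_base):
    """Pose of the CoG frame in the world (board) frame.

    rvec, tvec: board pose in camera coordinates, as returned by the marker detector.
    T_cam_base: fixed, calibrated pose of the CoG frame in the camera frame.
    """
    T_cam_world = make_transform(rodrigues(rvec), tvec)
    T_world_cam = np.linalg.inv(T_cam_world)  # camera pose in the world frame
    return T_world_cam @ T_cam_base           # compose with the rigid camera-to-CoG offset
```

In the example below the camera looks straight down (a 180° rotation about x) from 1.5 m above the board, and the CoG sits 0.1 m above the camera, so the vehicle is located at a height of 1.6 m.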

As discussed earlier, we applied a Kalman filter to the vision-based pose estimate to improve its accuracy and to reduce the effect of errors in the camera parameters, corner detection, image rectification and pose estimation. The state vector of the Kalman filter defines the position, orientation and velocities of the vehicle's CoG with respect to the world frame. From the onboard inertial measurement unit (IMU), we receive the horizontal velocity components, the flight altitude and the heading; the a priori estimate of the Kalman filter was updated using only these inertial measurements. The state transition model, with k denoting the time instant, is:

The a posteriori step runs at 24 Hz, a lower rate than the a priori step, and uses as measurement the camera pose estimated by computer vision [8]. After the update (innovation) step of the Kalman filter, the state vector holds the latest estimate of the pose of the UAV.
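A minimal sketch of such a two-rate filter, in Python with NumPy, is given below. It deliberately simplifies the model and is not the authors' state transition model: the state is only \([x, y, z, \psi]\), the IMU-derived velocities enter the prediction as a control input (\(F = I\), \(B = \Delta t\, I\)), and the vision pose is measured directly (\(H = I\)). The class name `PoseKF` and the noise values are assumptions.

```python
import numpy as np


class PoseKF:
    """Linear Kalman filter over s = [x, y, z, psi] (hypothetical simplification)."""

    def __init__(self, q=0.01, r=0.05):
        self.s = np.zeros(4)
        self.P = np.eye(4)
        self.Q = q * np.eye(4)  # process noise added at every prediction
        self.R = r * np.eye(4)  # vision measurement noise

    def predict(self, u, dt):
        """A priori step at IMU rate: integrate velocities u = [vx, vy, vz, psi_dot]."""
        self.s = self.s + dt * np.asarray(u, dtype=float)  # F = I, B = dt * I
        self.P = self.P + self.Q

    def update(self, z):
        """A posteriori step at camera rate with the vision pose z = [x, y, z, psi]."""
        y = np.asarray(z, dtype=float) - self.s          # innovation (H = I)
        y[3] = (y[3] + np.pi) % (2 * np.pi) - np.pi      # wrap yaw error to [-pi, pi)
        S = self.P + self.R                              # innovation covariance
        K = self.P @ np.linalg.inv(S)                    # Kalman gain
        self.s = self.s + K @ y
        self.s[3] = (self.s[3] + np.pi) % (2 * np.pi) - np.pi
        self.P = (np.eye(4) - K) @ self.P
```

In a flight loop, `predict` would be called for every IMU message and `update` whenever a new vision pose arrives. Wrapping the yaw innovation matters near ±π: without it, a heading of 3.1 rad corrected by a measurement of -3.1 rad would be pulled the long way around the circle.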

The desired trajectory \(\mathbf {r}_d(t)\) to be described by the vehicle is computed dynamically from the waypoints delivered by the planner, joined together by a cubic spline or by a lemniscate. The spline is constructed so that \(\mathbf {\dot{r}}_d(t)\) is continuous, producing a smooth trajectory, while the lemniscate is defined by the parametric equation:

Figure 5 shows the resulting directed graph of spatial relationships: nodes represent reference frames, and edge labels indicate the architecture modules that update the spatial relationship between two frames. The direction of each edge gives the source and target frames of the homogeneous transformation. In turn, the error measurement with respect to \(\mathbf {B}\) is given by the rigid body transformation defined by:

After decomposing \(\mathbf {R}_e\) into its three Euler angles \((\theta ,\phi , \psi )_e\), we compute a control command using a Proportional-Derivative (PD) controller:

where \(\mathbf {x}=[x, y, z, \psi ]\) and \(\mathbf {\dot{x}}=[\dot{x}, \dot{y}, \dot{z}, \dot{\psi }]\) are estimated by the Kalman filter described above, and \(\mathbf {K}_P\) and \(\mathbf {K}_D\) are diagonal matrices in \(\mathbb {R}^{4 \times 4}\).
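The control step can be sketched in Python with NumPy under two assumptions that the text does not spell out: the error transform is taken as the desired pose expressed in \(\mathbf{B}\), \(\mathbf{T}_e = \mathbf{T}_{WB}^{-1}\mathbf{T}_{WD}\) (the frame names W and D are hypothetical), and the PD law is taken in the common form \(\mathbf{u} = \mathbf{K}_P\mathbf{e} - \mathbf{K}_D\dot{\mathbf{x}}\), where the derivative term damps the estimated velocity. Only the yaw \(\psi_e\) of a ZYX Euler decomposition is needed for \(\mathbf{e} = [x_e, y_e, z_e, \psi_e]\); the gains are illustrative.

```python
import numpy as np


def make_transform(R, t):
    """4x4 homogeneous transform [[R, t], [0, 1]]."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def rot_z(psi):
    """Rotation by psi radians about the z axis."""
    c, s = np.cos(psi), np.sin(psi)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def yaw_from_R(R):
    """Yaw angle psi of a ZYX Euler decomposition of rotation matrix R."""
    return np.arctan2(R[1, 0], R[0, 0])


def error_transform(T_world_base, T_world_desired):
    """Desired pose expressed in the vehicle frame B (assumed error definition)."""
    return np.linalg.inv(T_world_base) @ T_world_desired


def pd_command(T_e, x_dot, kp, kd):
    """u = K_P e - K_D x_dot, with e = [x_e, y_e, z_e, psi_e].

    x_dot is assumed to be expressed in the same frame as e.
    """
    e = np.array([T_e[0, 3], T_e[1, 3], T_e[2, 3], yaw_from_R(T_e[:3, :3])])
    K_P, K_D = np.diag(kp), np.diag(kd)  # diagonal 4x4 gain matrices
    return K_P @ e - K_D @ np.asarray(x_dot, dtype=float)
```

Expressing the error in \(\mathbf{B}\) makes the translational terms map directly onto body-frame commands: with the vehicle yawed by 90°, a target 1 m along the world y axis appears as 1 m straight ahead.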

5 Results

The proposed approach was tested with the AR-Drone 2.0 and the 3DR Solo. We pointed the front camera of the AR-Drone downwards to obtain a higher-quality aerial image. The Solo had a gimbal installed; as a result, we had to update the camera-to-CoG transformation using the navigation data received from the UAV. The settings of the GoPro camera carried by the Solo are very versatile; for this exercise, we used a narrow field of view with a resolution of \(1028\times 720\) pixels. Furthermore, to better estimate the pose of the camera, the video feed was rectified using the camera intrinsic parameters. The computer vision algorithm was set to track a board of artificial markers: for the Solo the board measured \(1.4\times 2.4\) m (\(2\times 5\) artificial markers), and for the AR-Drone it measured \(4\times 4\) m (\(20\times 21\) markers, see Fig. 6a).

To observe the current status of the vehicle and its environment, we used RViz to display a virtual representation of the world. Figure 7 shows a screenshot of RViz displaying the location of the vehicle, the trajectory being followed and the detected board. The tests were run on a computer with Ubuntu Linux, an Intel Core i5 processor and 8 GB of RAM.

Flight path along the spline. The markers are displayed as white squares on the ground (\(z=0\)) at the moment they are detected by the computer vision system. The desired position and the estimated location of the drone are displayed as two coordinate frames; they almost always overlap because the trajectory is tracked closely.

Figures 8 and 9 display the results, as measured by the computer vision system, while executing the spline and lemniscate maneuvers, in x and y coordinates with respect to the world frame. The \(\mathbf {r}_d\) plot is the desired trajectory, corresponding to the spline generated from the waypoints. For completeness, we also display the error plot. The maximum measured error was 30 cm for the lemniscate trajectory and 22 cm for the spline trajectory. The waypoints used for the spline were the following coordinates in 3D space: \(p_1=\begin{bmatrix}-1.3, -1.3, 1.0\end{bmatrix}\), \(p_2=\begin{bmatrix}-0.9, 0.0, 1.0 \end{bmatrix}\), \(p_3=\begin{bmatrix}-0.3, 0.9, 1.0\end{bmatrix}\), \(p_4=\begin{bmatrix}0.45,-0.1, 0.0\end{bmatrix}\), \(p_5=\begin{bmatrix}1.7,1.0,1.0\end{bmatrix}\). The parameters for the lemniscate trajectory with the AR-Drone were \(a=1.0\), \(b=0.8\), \(c=0.2\), \(\epsilon =30.0\), with a height offset of \(z=1.2\).
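A desired trajectory through these waypoints can be sketched with a natural cubic spline, which is \(C^2\) and therefore also satisfies the continuity of \(\dot{\mathbf{r}}_d(t)\) required in Sect. 4. This is an illustration under assumptions: the text does not state the spline's boundary conditions or timing, so natural end conditions (zero second derivative at both ends) and a hypothetical interval of one second between consecutive waypoints are used. The class name `NaturalCubicSpline` is likewise hypothetical.

```python
import numpy as np


class NaturalCubicSpline:
    """C2 natural cubic spline r_d(t) through 3D waypoints (one spline per axis)."""

    def __init__(self, knots, points):
        self.t = np.asarray(knots, dtype=float)
        self.y = np.asarray(points, dtype=float)  # shape (N, 3)
        n = len(self.t) - 1                        # number of segments
        self.h = np.diff(self.t)
        h = self.h
        # Tridiagonal system for the interior second derivatives M_1 .. M_{n-1}.
        A = np.zeros((n - 1, n - 1))
        rhs = np.zeros((n - 1, self.y.shape[1]))
        for i in range(1, n):
            r = i - 1
            if r > 0:
                A[r, r - 1] = h[i - 1]
            A[r, r] = 2.0 * (h[i - 1] + h[i])
            if r < n - 2:
                A[r, r + 1] = h[i]
            rhs[r] = 6.0 * ((self.y[i + 1] - self.y[i]) / h[i]
                            - (self.y[i] - self.y[i - 1]) / h[i - 1])
        self.M = np.zeros_like(self.y)  # natural ends: M_0 = M_n = 0
        if n >= 2:
            self.M[1:n] = np.linalg.solve(A, rhs)

    def _segment(self, t):
        # Index of the segment containing t (clamped to the first/last segment).
        i = int(np.clip(np.searchsorted(self.t, t, side="right") - 1, 0, len(self.h) - 1))
        return i, self.h[i], self.t[i + 1] - t, t - self.t[i]

    def position(self, t):
        i, h, a, b = self._segment(t)
        M0, M1, y0, y1 = self.M[i], self.M[i + 1], self.y[i], self.y[i + 1]
        return (M0 * a**3 + M1 * b**3) / (6 * h) + (y0 / h - M0 * h / 6) * a + (y1 / h - M1 * h / 6) * b

    def velocity(self, t):
        i, h, a, b = self._segment(t)
        M0, M1, y0, y1 = self.M[i], self.M[i + 1], self.y[i], self.y[i + 1]
        return (-M0 * a**2 + M1 * b**2) / (2 * h) - (y0 / h - M0 * h / 6) + (y1 / h - M1 * h / 6)


waypoints = [[-1.3, -1.3, 1.0], [-0.9, 0.0, 1.0], [-0.3, 0.9, 1.0], [0.45, -0.1, 0.0], [1.7, 1.0, 1.0]]
spline = NaturalCubicSpline([0.0, 1.0, 2.0, 3.0, 4.0], waypoints)
```

A trajectory generator would then sample `spline.position(t)` (and, for a feed-forward term, `spline.velocity(t)`) at the controller rate; the curve passes exactly through every waypoint, and its velocity is continuous across them.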

6 Conclusions and Future Work

We have presented a three-layer architecture for the control of UAVs that successfully guided the vehicle along the lemniscate and spline trajectories. Because the framework we used for this development runs on multiple platforms, including ARM-based embedded computers, it is plausible to execute it onboard the UAV. Further development of the Sequencer and Planner layers would turn the UAV into an autonomous agent and lead the way towards a swarm of UAVs.

This document presents the results of the first step in our development and implementation roadmap. The next step is to execute the system onboard the UAV. We also plan to extend the computer vision system with a visual odometry approach.

Notes

1. GStreamer webpage: http://gstreamer.freedesktop.org/.

References

[1] Ax, M., Thamke, S., Kuhnert, L., Schlemper, J., Kuhnert, K.-D.: Optical position stabilization of an UAV for autonomous landing. In: Proceedings of ROBOTIK; 7th German Conference on Robotics, pp. 1–6 (2012)

[2] Blösch, M., Weiss, S., Scaramuzza, D., Siegwart, R.: Vision based MAV navigation in unknown and unstructured environments. In: IEEE International Conference on Robotics and Automation (ICRA), pp. 21–28 (2010)

[3] Chen, H., Wang, X.M., Li, Y.: A survey of autonomous control for UAV. In: International Conference on Artificial Intelligence and Computational Intelligence (AICI 2009), vol. 2, pp. 267–271, November 2009

[4] Correa, D.S.O., Sciotti, D.F., Prado, M.G., Sales, D.O., Wolf, D.F., Osorio, F.S.: Mobile robots navigation in indoor environments using Kinect sensor. In: Second Brazilian Conference on Critical Embedded Systems (CBSEC), pp. 36–41 (2012)

[5] Coste-Maniere, E., Simmons, R.: Architecture, the backbone of robotic systems. In: Proceedings ICRA. Millennium Conference. IEEE International Conference on Robotics and Automation. Symposia Proceedings (Cat. No.00CH37065), vol. 1, pp. 67–72 (2000)

[6] Dumonteil, G., Manfredi, G., Devy, M., Confetti, A., Sidobre, D.: Reactive planning on a collaborative robot for industrial applications. In: 2015 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO), vol. 02, pp. 450–457, July 2015

[7] Foote, T.: tf: The transform library. In: 2013 IEEE International Conference on Technologies for Practical Robot Applications (TePRA), Open-Source Software Workshop, pp. 1–6, April 2013

[8] Garrido-Jurado, S., Muñoz Salinas, R., Madrid-Cuevas, F.J., Marín-Jiménez, M.J.: Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 47(6), 2280–2292 (2014)

[9] Gong, J., Zheng, C., Tian, J., Wu, D.: An image-sequence compressing algorithm based on homography transformation for unmanned aerial vehicle. In: International Symposium on Intelligence Information Processing and Trusted Computing (IPTC), pp. 37–40 (2010)

[10] Goryca, J., Hill, R.C.: Formal synthesis of supervisory control software for multiple robot systems. In: 2013 American Control Conference, pp. 125–131, June 2013

[11] Grzonka, S., Grisetti, G., Burgard, W.: A fully autonomous indoor quadrotor. IEEE Trans. Robot. PP(99), 1–11 (2011)

[12] Kamarudin, K., Mamduh, S.M., Md Shakaff, A.Y., Saad, S.M., Zakaria, A., Abdullah, A.H., Kamarudin, L.M.: Method to convert Kinect's 3D depth data to a 2D map for indoor SLAM. In: IEEE 9th International Colloquium on Signal Processing and its Applications (CSPA), pp. 247–251 (2013)

[13] Lou, L., Zhang, F.-M., Xu, C., Li, F., Xue, M.-G.: Automatic registration of aerial image series using geometric invariance. In: IEEE International Conference on Automation and Logistics, ICAL, pp. 1198–1203 (2008)

[14] Luna-Gallegos, K.L., Palacios-Hernandez, E.R., Hernandez-Mendez, S., Marin-Hernandez, A.: A proposed software architecture for controlling a service robot. In: 2015 IEEE International Autumn Meeting on Power, Electronics and Computing (ROPEC), pp. 1–6, November 2015

[15] Meier, L., Honegger, D., Pollefeys, M.: PX4: a node-based multithreaded open source robotics framework for deeply embedded platforms. In: 2015 IEEE International Conference on Robotics and Automation (ICRA), May 2015

[16] Quigley, M., Conley, K., Gerkey, B.P., Faust, J., Foote, T., Leibs, J., Wheeler, R., Ng, A.Y.: ROS: an open-source robot operating system. In: ICRA Workshop on Open Source Software (2009)

[17] Saska, M., Krajnik, T., Pfeucil, L.: Cooperative UAV-UGV autonomous indoor surveillance. In: 9th International Multi-conference on Systems, Signals and Devices (SSD), pp. 1–6 (2012)

[18] Schöpfer, M., Schmidt, F., Pardowitz, M., Ritter, H.: Open source real-time control software for the Kuka light weight robot. In: 2010 8th World Congress on Intelligent Control and Automation, pp. 444–449, July 2010

[19] Vanegas, F., Gonzalez, F.: Uncertainty based online planning for UAV target finding in cluttered and GPS-denied environments. In: 2016 IEEE Aerospace Conference, pp. 1–9, March 2016

[20] Yang, L., Xiao, B., Zhou, Y., He, Y., Zhang, H., Han, J.: A robust real-time vision based GPS-denied navigation system of UAV. In: 2016 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), pp. 321–326, June 2016


© 2017 Springer International Publishing AG

About this paper

Cite this paper

Soto-Guerrero, D., Ramírez-Torres, J.G. (2017). An Airborne Agent. In: Carrasco-Ochoa, J., Martínez-Trinidad, J., Olvera-López, J. (eds) Pattern Recognition. MCPR 2017. Lecture Notes in Computer Science(), vol 10267. Springer, Cham. https://doi.org/10.1007/978-3-319-59226-8_21


DOI: https://doi.org/10.1007/978-3-319-59226-8_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59225-1

Online ISBN: 978-3-319-59226-8

eBook Packages: Computer Science, Computer Science (R0)