Abstract

After reading this chapter you know:

- what matrices are and how they can be used,
- how to perform addition, subtraction and multiplication of matrices,
- that matrices represent linear transformations,
- the most common special matrices,
- how to calculate the determinant, inverse and eigendecomposition of a matrix, and
- what the decomposition methods SVD, PCA and ICA are, how they are related and how they can be applied.

References

Online Sources of Information: History

Online Sources of Information: Methods

http://comnuan.com/cmnn01004/ (one of many online matrix calculators)

http://andrew.gibiansky.com/blog/mathematics/cool-linear-algebra-singular-value-decomposition/ (examples of information compression using SVD)

Books

R.A. Horn, C.R. Johnson, Topics in Matrix Analysis (Cambridge University Press, Cambridge, 1994)

W.H. Press, B.P. Flannery, S.A. Teukolsky, W.T. Vetterling, Numerical Recipes, the Art of Scientific Computing. (Multiple Versions for Different Programming Languages) (Cambridge University Press, Cambridge)

Y. Saad, Iterative Methods for Sparse Linear Systems (SIAM, New Delhi, 2003). http://www-users.cs.umn.edu/~saad/IterMethBook_2ndEd.pdf

Papers

V.D. Calhoun, T. Adali, Multisubject independent component analysis of fMRI: a decade of intrinsic networks, default mode, and neurodiagnostic discovery. IEEE Rev. Biomed. Eng. 5, 60–73 (2012)

M.K. Islam, A. Rastegarnia, Z. Yang, Methods for artifact detection and removal from scalp EEG: a review. Neurophysiol. Clin. 46, 287–305 (2016)

Appendices

Symbols Used in This Chapter (in Order of Their Appearance)

\( \mathbf{M} \) or \( M \) | Matrix (bold and capital letter in text, italic and capital letter in equations) |

\( {\left(\cdot \right)}_{ij} \) | Element at position (i,j) in a matrix |

\( \sum \limits_{k=1}^n \) | Sum over k, from 1 to n |

\( \overrightarrow{\cdot} \) | Vector |

θ | Angle |

∘ | Hadamard product, Schur product or pointwise matrix product |

⊗ | Kronecker matrix product |

\( {\cdot}^T \) | (Matrix or vector) transpose |

\( {\cdot}^{\ast} \) | (Matrix or vector) conjugate transpose |

† | Used instead of * to indicate conjugate transpose in quantum mechanics |

\( {\cdot}^{-1} \) | (Matrix) inverse |

\( \left|\cdot \right| \) | (Matrix) determinant |

Overview of Equations, Rules and Theorems for Easy Reference

Addition, subtraction and scalar multiplication of matrices

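Addition of matrices A and B (of the same size): \( {\left(A+B\right)}_{ij}={a}_{ij}+{b}_{ij} \)

Subtraction of matrices A and B (of the same size): \( {\left(A-B\right)}_{ij}={a}_{ij}-{b}_{ij} \)

Multiplication of a matrix A by a scalar s: \( {\left( sA\right)}_{ij}=s{a}_{ij} \)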
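The three element-wise rules above can be sketched in a few lines of Python, with a matrix stored as a list of rows. The function names `add`, `subtract` and `scale` and the example matrices are assumptions made for illustration; they are not taken from the chapter.

```python
def same_size(A, B):
    """Return True if A and B have the same number of rows and columns."""
    return len(A) == len(B) and all(len(ra) == len(rb) for ra, rb in zip(A, B))


def add(A, B):
    """Element-wise sum: (A + B)_ij = a_ij + b_ij."""
    if not same_size(A, B):
        raise ValueError("matrices must have the same size")
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def subtract(A, B):
    """Element-wise difference: (A - B)_ij = a_ij - b_ij."""
    if not same_size(A, B):
        raise ValueError("matrices must have the same size")
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def scale(s, A):
    """Scalar multiple: (sA)_ij = s * a_ij."""
    return [[s * a for a in row] for row in A]


# Hypothetical example matrices, chosen only for illustration.
A = [[3, 4], [-1, 8]]
B = [[2, -2], [3, 7]]

total = add(A, B)
difference = subtract(A, B)
doubled = scale(2, A)
```

Adding or subtracting matrices of different sizes raises a `ValueError` in this sketch, which mirrors the "of the same size" condition in the rules.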

Basis vector principle

Any vector \( \left(\begin{array}{c}\hfill a\hfill \\ {}\hfill b\hfill \end{array}\right) \) (in 2D space) can be built from the basis vectors \( \left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill 0\hfill \end{array}\right) \) and \( \left(\begin{array}{c}\hfill 0\hfill \\ {}\hfill 1\hfill \end{array}\right) \) by a linear combination as follows: \( \left(\begin{array}{c}\hfill a\hfill \\ {}\hfill b\hfill \end{array}\right)=a\left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill 0\hfill \end{array}\right)+b\left(\begin{array}{c}\hfill 0\hfill \\ {}\hfill 1\hfill \end{array}\right) \).

The same principle holds for vectors in higher dimensions.

Rotation matrix (2D)

The transformation matrix that rotates a vector around the origin (in 2D) over an angle θ (counter clockwise) is given by \( \left(\begin{array}{cc}\hfill \cos \theta \hfill & \hfill -\sin \theta \hfill \\ {}\hfill \sin \theta \hfill & \hfill \cos \theta \hfill \end{array}\right) \).
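As a quick numerical check of the rotation matrix, the sketch below rotates the basis vector (1, 0) over 90 degrees. The helper names `rotation_matrix` and `apply` are assumptions for this example; the angle is given in radians.

```python
import math


def rotation_matrix(theta):
    """2D matrix for a counter-clockwise rotation over theta (in radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def apply(M, v):
    """Matrix-vector product M v for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


# Rotating (1, 0) over 90 degrees should give (0, 1), up to rounding error.
rotated = apply(rotation_matrix(math.pi / 2), [1.0, 0.0])

# A rotation leaves the length of a vector unchanged.
w = apply(rotation_matrix(0.7), [3.0, 4.0])
length_after = math.hypot(w[0], w[1])
```

Because of floating-point rounding, the first component of `rotated` is a very small number rather than exactly zero, so comparisons should use a tolerance.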

Shearing matrix (2D)

\( \left(\begin{array}{cc}\hfill 1\hfill & \hfill k\hfill \\ {}\hfill 0\hfill & \hfill 1\hfill \end{array}\right) \): shearing along the x-axis (y-coordinate remains unchanged)

\( \left(\begin{array}{cc}\hfill 1\hfill & \hfill 0\hfill \\ {}\hfill k\hfill & \hfill 1\hfill \end{array}\right) \): shearing along the y-axis (x-coordinate remains unchanged)
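The effect of the two shearing matrices can be illustrated with a small sketch. The function names `shear_x`, `shear_y` and `apply`, as well as the value k = 2 and the test vectors, are assumptions chosen for this example.

```python
def shear_x(k):
    """Shearing along the x-axis: the y-coordinate remains unchanged."""
    return [[1, k], [0, 1]]


def shear_y(k):
    """Shearing along the y-axis: the x-coordinate remains unchanged."""
    return [[1, 0], [k, 1]]


def apply(M, v):
    """Matrix-vector product M v for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


v = [1, 3]
sheared_along_x = apply(shear_x(2), v)   # x becomes x + k*y, y is kept
sheared_along_y = apply(shear_y(2), v)   # y becomes k*x + y, x is kept

# A vector on the x-axis is not changed by shearing along the x-axis.
on_x_axis = apply(shear_x(2), [4, 0])
```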

Matrix product

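Multiplication AB of an m × n matrix A with an n × p matrix B: \( {(AB)}_{ij}=\sum \limits_{k=1}^n{a}_{ik}{b}_{kj} \), which yields an m × p matrix.

Hadamard product, Schur product or pointwise product (of matrices A and B of the same size): \( {\left(A\circ B\right)}_{ij}={a}_{ij}{b}_{ij} \)

Kronecker product of an m × n matrix A with a matrix B: \( A\otimes B=\left(\begin{array}{ccc} {a}_{11}B & \cdots & {a}_{1n}B \\ \vdots & \ddots & \vdots \\ {a}_{m1}B & \cdots & {a}_{mn}B \end{array}\right) \)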
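To make the difference between the three products concrete, here is a minimal Python sketch that implements each of them for matrices stored as lists of rows. The function names and the two example matrices are assumptions for illustration only.

```python
def matmul(A, B):
    """Matrix product: (AB)_ij = sum over k of a_ik * b_kj."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must match rows of B")
    return [[sum(A[i][k] * B[k][j] for k in range(n))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def hadamard(A, B):
    """Pointwise product of two equally sized matrices: a_ij * b_ij."""
    return [[a * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def kronecker(A, B):
    """Kronecker product: every element a_ij is replaced by the block a_ij * B."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]


# Hypothetical example matrices.
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]

product = matmul(A, B)
pointwise = hadamard(A, B)
blocks = kronecker(A, B)
```

For two 2 × 2 matrices the matrix product and the Hadamard product are again 2 × 2, whereas the Kronecker product is 4 × 4.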

Special matrices

Hermitian matrix: \( A={A}^{\ast} \)

normal matrix: \( {A}^{\ast}A=A{A}^{\ast} \)

unitary matrix: \( A{A}^{\ast}=I \)

where \( {\left({A}^{\ast}\right)}_{ij}={\overline{a}}_{ji} \) defines the conjugate transpose of A.
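The three definitions can be tested numerically with Python's built-in complex numbers. The sketch below is only an illustration: the helper names, the tolerance of 1e-12 and the example matrices `H` and `U` are assumptions, not part of the chapter.

```python
def conjugate_transpose(A):
    """(A*)_ij is the complex conjugate of a_ji."""
    return [[A[i][j].conjugate() for i in range(len(A))]
            for j in range(len(A[0]))]


def matmul(A, B):
    """Matrix product of A and B (lists of rows)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def close(A, B, tol=1e-12):
    """Element-wise comparison of two equally sized matrices with a tolerance."""
    return all(abs(a - b) < tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def is_hermitian(A):
    return close(A, conjugate_transpose(A))


def is_normal(A):
    A_star = conjugate_transpose(A)
    return close(matmul(A_star, A), matmul(A, A_star))


def is_unitary(A):
    return close(matmul(A, conjugate_transpose(A)), identity(len(A)))


# Hypothetical examples: H is Hermitian (hence normal) but not unitary,
# U is unitary (hence normal) but not Hermitian.
H = [[2, 1 + 1j], [1 - 1j, 3]]
U = [[0, 1j], [1j, 0]]
```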

Matrix inverse

For a square matrix A with det(A) ≠ 0 the inverse is \( {A}^{-1}=\frac{1}{\det (A)}\mathrm{adj}(A) \), where det(A) is the determinant of A and adj(A) is the adjoint of A, i.e. the transpose of its cofactor matrix (see Sect. 5.3.1).
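The formula can be turned into a short, exact computation. The sketch below assumes integer matrix entries and uses cofactor expansion along the first row for the determinant, which is fine for small matrices but inefficient for large ones. All function names and the example matrix are assumptions for illustration.

```python
from fractions import Fraction


def minor(A, i, j):
    """Matrix that remains after removing row i and column j from A."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Determinant by cofactor expansion along the first row."""
    if not A:
        return 1  # determinant of the empty (0 x 0) matrix
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def adjoint(A):
    """Transpose of the cofactor matrix of A."""
    n = len(A)
    cof = [[(-1) ** (i + j) * det(minor(A, i, j)) for j in range(n)]
           for i in range(n)]
    return [[cof[j][i] for j in range(n)] for i in range(n)]


def inverse(A):
    """Inverse as adj(A) / det(A), with exact fractions (integer entries assumed)."""
    d = det(A)
    if d == 0:
        raise ValueError("matrix is singular: det(A) = 0")
    return [[Fraction(x, d) for x in row] for row in adjoint(A)]


# Hypothetical example matrix.
A = [[2, 1], [1, 2]]
A_inv = inverse(A)
```

Using `Fraction` avoids rounding errors, so the product of `A` and `A_inv` is exactly the identity matrix.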

Eigendecomposition

An eigenvector \( \overrightarrow{v} \) of a square matrix M is a non-zero vector determined by: \( M\overrightarrow{v}=\lambda \overrightarrow{v} \)

where λ is a scalar known as the eigenvalue.
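For a 2 × 2 matrix the eigenvalues follow from the characteristic polynomial λ² − (trace)λ + (determinant) = 0. The sketch below solves this equation for real eigenvalues and then checks whether a candidate vector satisfies the eigenvector condition. The function names, the tolerance and the example matrix are assumptions for illustration.

```python
import math


def eigenvalues_2x2(M):
    """Real eigenvalues of a 2 x 2 matrix from its characteristic polynomial."""
    (a, b), (c, d) = M
    trace, determinant = a + d, a * d - b * c
    discriminant = trace * trace - 4 * determinant
    if discriminant < 0:
        raise ValueError("eigenvalues are complex")
    root = math.sqrt(discriminant)
    return (trace + root) / 2, (trace - root) / 2


def is_eigenpair(M, v, lam, tol=1e-12):
    """Check M v = lam v element by element for a non-zero vector v."""
    if all(x == 0 for x in v):
        return False
    Mv = [sum(m * x for m, x in zip(row, v)) for row in M]
    return all(abs(p - lam * x) < tol for p, x in zip(Mv, v))


# Hypothetical example matrix.
M = [[2, 1], [1, 2]]
lam1, lam2 = eigenvalues_2x2(M)
```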

Diagonalization

Decomposition of a square matrix M such that: \( M= VD{V}^{-1} \)

where V is an invertible matrix and D is a diagonal matrix.
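A small worked example may help here. The matrix M below is an assumption chosen for illustration; the columns of V are eigenvectors of M and the diagonal of D holds the corresponding eigenvalues 3 and 1.

```latex
\[
M=\begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix},\quad
V=\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix},\quad
D=\begin{pmatrix} 3 & 0 \\ 0 & 1 \end{pmatrix},\quad
V^{-1}=\frac{1}{2}\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}
\]
\[
VDV^{-1}
=\begin{pmatrix} 3 & 1 \\ 3 & -1 \end{pmatrix}
 \cdot \frac{1}{2}\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}
=\frac{1}{2}\begin{pmatrix} 4 & 2 \\ 2 & 4 \end{pmatrix}
=\begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}
=M
\]
```

One practical consequence is that powers of M become cheap to compute, since \( {M}^k=V{D}^k{V}^{-1} \) and raising a diagonal matrix to a power only requires raising its diagonal elements to that power.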

Singular value decomposition

Decomposition of an m × n rectangular matrix M such that: \( M=U\Sigma {V}^{\ast} \)

where U is a unitary m × m matrix, Σ an m × n diagonal matrix with non-negative real entries and V another unitary n × n matrix.

Answers to Exercises

5.1.

(a)

(b)

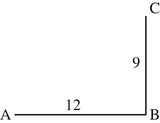

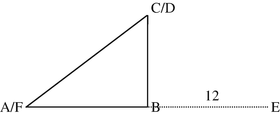

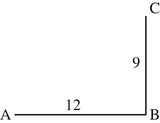

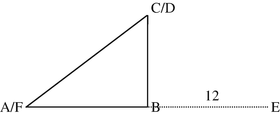

The direct distance between cities A and C can be calculated according to Pythagoras’ theorem as \( \sqrt{12^2+{9}^2}=\sqrt{144+81}=\sqrt{225}=15 \). Hence, the distance matrix becomes \( \left(\begin{array}{ccc}\hfill 0\hfill & \hfill 12\hfill & \hfill 15\hfill \\ {}\hfill 12\hfill & \hfill 0\hfill & \hfill 9\hfill \\ {}\hfill 15\hfill & \hfill 9\hfill & \hfill 0\hfill \end{array}\right) \).
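The same distance matrix can be generated from coordinates. The sketch below assumes that the roads A–B (length 12) and B–C (length 9) meet at a right angle, as implied by the use of Pythagoras' theorem; the coordinates themselves are hypothetical and chosen only to satisfy that assumption.

```python
import math

# Hypothetical coordinates: A-B is 12 long, B-C is 9 long, right angle at B.
cities = {"A": (0.0, 0.0), "B": (12.0, 0.0), "C": (12.0, 9.0)}
names = sorted(cities)


def distance(p, q):
    """Straight-line (Euclidean) distance between two points in the plane."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


# Element (i, j) holds the distance between city i and city j.
distance_matrix = [[distance(cities[p], cities[q]) for q in names] for p in names]
```

The resulting matrix is symmetric with zeroes on the diagonal, because the distance from a city to itself is zero and the distance from i to j equals the distance from j to i.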

(c)

5.2.

The sum and difference of the pairs of matrices are:

(a) \( \left(\begin{array}{cc} 5 & 2 \\ 2 & 15 \end{array}\right) \) and \( \left(\begin{array}{cc} 1 & 6 \\ -4 & 1 \end{array}\right) \)

(b) \( \left(\begin{array}{ccc} 7 & -4 & 6 \\ -1 & 4 & 1 \\ -4 & 6 & 2 \end{array}\right) \) and \( \left(\begin{array}{ccc} -1 & -10 & 2 \\ -3 & 8 & 9 \\ 6 & -10 & -20 \end{array}\right) \)

(c) \( \left(\begin{array}{ccc} 2 & 1.6 & -1 \\ 5.1 & 1 & -2 \end{array}\right) \) and \( \left(\begin{array}{ccc} 0.4 & 4.8 & -2 \\ 1.7 & 3.6 & -4.4 \end{array}\right) \)

5.3.

(a) \( \left(\begin{array}{cc} 6 & 0 \\ 7 & -4 \\ 8 & 10 \end{array}\right) \)

(b) \( \left(\begin{array}{cc} 3.5 & 0 \\ 2.9 & 0.7 \end{array}\right) \)

5.4.

Possibilities for multiplication are AB, AC, BD, CB and DA.

5.5.

(a) 2 × 7

(b) 2 × 1

(c) 1 × 1

5.6.

(a) AB = \( \left(\begin{array}{cc} 18 & 22 \\ 22 & 58 \end{array}\right) \), BA = \( \left(\begin{array}{cc} 8 & -8 \\ 2 & 68 \end{array}\right) \)

(b) BA = \( \left(\begin{array}{cc} 8 & -14 \\ 3 & -11 \\ -20 & 61 \end{array}\right) \)

(c) no matrix product possible

5.7.

(a) \( \left(\begin{array}{cc} 2 & 6 \\ -4 & 1 \end{array}\right) \)

(b) \( \left(\begin{array}{ccc} 2.8 & -2.7 & 0.5 \\ 12 & -0.7 & -4 \end{array}\right) \)

5.8.

(a) \( \left(\begin{array}{cccccc} 3 & -1 & 4 & 6 & -2 & 8 \\ -3 & 1 & -4 & 3 & -1 & 4 \end{array}\right) \)

(b) \( \left(\begin{array}{cccccc} 0 & -2 & -4 & 0 & -3 & -6 \\ -6 & -8 & -10 & -9 & -12 & -15 \\ -12 & -14 & -16 & -18 & -21 & -24 \end{array}\right) \)

5.9.

(a) symmetric, logical

(b) sparse, upper-triangular

(c) skew-symmetric

(d) upper-triangular

(e) diagonal, sparse

(f) identity, diagonal, logical, sparse

5.10.

(a) \( \left(\begin{array}{ccc} 1 & i & 5 \\ 2 & 1 & 4-5i \\ 3 & -3+2i & 3 \end{array}\right) \)

(b) \( \left(\begin{array}{ccc} 1 & -1 & 5 \\ 2 & 1 & 4 \\ 3 & -3 & 0 \end{array}\right) \)

(c) \( \left(\begin{array}{ccc} 4 & 19-i & 8i \\ 0 & -3 & -11+i \\ 3+2i & -3 & 17 \end{array}\right) \)

5.11.

Using Cramer’s rule we find that \( x=\frac{D_x}{D}=\left|\begin{array}{cc}\hfill c\hfill & \hfill b\hfill \\ {}\hfill f\hfill & \hfill e\hfill \end{array}\right|/\left|\begin{array}{cc}\hfill a\hfill & \hfill b\hfill \\ {}\hfill d\hfill & \hfill e\hfill \end{array}\right|=\frac{ce- bf}{ae- bd} \) and \( y=\frac{D_y}{D}=\left|\begin{array}{cc}\hfill a\hfill & \hfill c\hfill \\ {}\hfill d\hfill & \hfill f\hfill \end{array}\right|/\left|\begin{array}{cc}\hfill a\hfill & \hfill b\hfill \\ {}\hfill d\hfill & \hfill e\hfill \end{array}\right|=\frac{af- cd}{ae- bd} \). From Sect. 5.3.1 we obtain that the inverse of the matrix \( \left(\begin{array}{cc}\hfill a\hfill & \hfill b\hfill \\ {}\hfill d\hfill & \hfill e\hfill \end{array}\right) \) is equal to \( \frac{1}{ae- bd}\left(\begin{array}{cc}\hfill e\hfill & \hfill -b\hfill \\ {}\hfill -d\hfill & \hfill a\hfill \end{array}\right) \) and the solution to the system of linear equations is \( \frac{1}{ae- bd}\left(\begin{array}{cc}\hfill e\hfill & \hfill -b\hfill \\ {}\hfill -d\hfill & \hfill a\hfill \end{array}\right)\left(\begin{array}{c}\hfill c\hfill \\ {}\hfill f\hfill \end{array}\right)=\left(\begin{array}{c}\hfill \left( ce- bf\right)/\left( ae- bd\right)\hfill \\ {}\hfill \left( af- cd\right)/\left( ae- bd\right)\hfill \end{array}\right) \) which is the same as the solution obtained using Cramer’s rule.
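The equivalence of the two routes can also be checked numerically. The sketch below solves the system ax + by = c, dx + ey = f once with Cramer's rule and once with the inverse matrix, using exact fractions and assuming integer coefficients. The function names and the numerical example are assumptions for illustration.

```python
from fractions import Fraction


def solve_cramer(a, b, c, d, e, f):
    """Solve a*x + b*y = c, d*x + e*y = f with Cramer's rule."""
    D = a * e - b * d
    if D == 0:
        raise ValueError("determinant is zero: no unique solution")
    return Fraction(c * e - b * f, D), Fraction(a * f - c * d, D)


def solve_inverse(a, b, c, d, e, f):
    """Solve the same system by multiplying the inverse matrix with (c, f)."""
    D = a * e - b * d
    if D == 0:
        raise ValueError("determinant is zero: no unique solution")
    inv = [[Fraction(e, D), Fraction(-b, D)],
           [Fraction(-d, D), Fraction(a, D)]]
    return inv[0][0] * c + inv[0][1] * f, inv[1][0] * c + inv[1][1] * f


# Hypothetical system: 2x + y = 5 and x + 3y = 10.
coefficients = (2, 1, 5, 1, 3, 10)
by_cramer = solve_cramer(*coefficients)
by_inverse = solve_inverse(*coefficients)
```

Both functions refuse to divide by a zero determinant, which corresponds to a system without a unique solution.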

5.12.

(a) x = −11, \( y=-5\frac{3}{5} \)

(b) \( D=\left|\begin{array}{ccc} 4 & -2 & -2 \\ 2 & 8 & 4 \\ 30 & 12 & -4 \end{array}\right|=4\left(8\cdot (-4)-4\cdot 12\right)-(-2)\left(2\cdot (-4)-30\cdot 4\right)+(-2)\left(2\cdot 12-30\cdot 8\right)=-144 \)

\( {D}_x=\left|\begin{array}{ccc} 10 & -2 & -2 \\ 32 & 8 & 4 \\ 24 & 12 & -4 \end{array}\right|=-1632 \), \( {D}_y=\left|\begin{array}{ccc} 4 & 10 & -2 \\ 2 & 32 & 4 \\ 30 & 24 & -4 \end{array}\right|=2208 \) and \( {D}_z=\left|\begin{array}{ccc} 4 & -2 & 10 \\ 2 & 8 & 32 \\ 30 & 12 & 24 \end{array}\right|=-4752 \)

Thus, \( x=\frac{D_x}{D}=\frac{-1632}{-144}=11\frac{1}{3} \), \( y=\frac{D_y}{D}=\frac{2208}{-144}=-15\frac{1}{3} \) and \( z=\frac{D_z}{D}=\frac{-4752}{-144}=33 \).
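The determinants in answer (b) are easy to get wrong by hand, so a short script is a useful cross-check. The coefficient matrix and right-hand side below are read off from the determinants D and D_x given above; the function names `det3` and `replace_column` are assumptions for this sketch.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3 x 3 matrix by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def replace_column(m, col, rhs):
    """Copy of m in which column `col` is replaced by the vector rhs."""
    return [[rhs[r] if j == col else m[r][j] for j in range(3)] for r in range(3)]


A = [[4, -2, -2], [2, 8, 4], [30, 12, -4]]
rhs = [10, 32, 24]

D = det3(A)
Dx, Dy, Dz = (det3(replace_column(A, k, rhs)) for k in range(3))

# Cramer's rule: each unknown is the ratio of two determinants.
x, y, z = (Fraction(Dk, D) for Dk in (Dx, Dy, Dz))

# Substituting the solution back should reproduce the right-hand side.
residual = [sum(A[r][j] * s for j, s in enumerate((x, y, z))) - rhs[r]
            for r in range(3)]
```

With exact fractions the solution comes out as x = 34/3, y = −46/3 and z = 33, which matches the mixed fractions above, and every entry of `residual` is zero.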

5.13.

(a) x = 4, y = 0

(b) x = 2, y = −1, z = 1

5.14.

This shearing matrix shears along the x-axis and leaves y-coordinates unchanged (see Sect. 5.2.2). Hence, all vectors along the x-axis remain unchanged due to this transformation. The eigenvector is thus \( \left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill 0\hfill \end{array}\right) \) with eigenvalue 1 (since the length of the eigenvector is unchanged due to the transformation).

5.15.

(a) \( {\lambda}_1=7 \) with eigenvector \( \left(\begin{array}{c} 1 \\ 0 \\ 0 \end{array}\right) \) (x = x, y = 0, z = 0), \( {\lambda}_2=-19 \) with eigenvector \( \left(\begin{array}{c} 0 \\ 1 \\ 0 \end{array}\right) \) (x = 0, y = y, z = 0) and \( {\lambda}_3=2 \) with eigenvector \( \left(\begin{array}{c} 0 \\ 0 \\ 1 \end{array}\right) \) (x = 0, y = 0, z = z).

(b) λ = 3 (double) with eigenvector \( \left(\begin{array}{c} 1 \\ 1 \end{array}\right) \) (y = x).

(c) \( {\lambda}_1=1 \) with eigenvector \( \left(\begin{array}{c} -1 \\ 1 \\ 1 \end{array}\right) \) (x = −z, y = z), \( {\lambda}_2=-1 \) with eigenvector \( \left(\begin{array}{c} 1 \\ 1 \\ 5 \end{array}\right) \) (5x = z, 5y = z) and \( {\lambda}_3=3 \) with eigenvector \( \left(\begin{array}{c} 1 \\ 1 \\ 1 \end{array}\right) \) (x = y = z).

(d) \( {\lambda}_1=3 \) with eigenvector \( \left(\begin{array}{c} 1 \\ -2 \end{array}\right) \) (y = −2x) and \( {\lambda}_2=7 \) with eigenvector \( \left(\begin{array}{c} 1 \\ 2 \end{array}\right) \) (y = 2x).

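5.16.

(a) To determine the SVD, we first determine the eigenvalues and eigenvectors of \( {M}^TM \) to get the singular values and right-singular vectors of M. With \( M=\left(\begin{array}{cc} 2 & 1 \\ 1 & 2 \end{array}\right) \) we have \( {M}^TM=\left(\begin{array}{cc} 5 & 4 \\ 4 & 5 \end{array}\right) \). Thus, we determine its characteristic equation as the determinant \( \left|{M}^TM-\lambda I\right|=\left|\begin{array}{cc} 5-\lambda & 4 \\ 4 & 5-\lambda \end{array}\right|={\left(5-\lambda \right)}^2-16={\lambda}^2-10\lambda +9=\left(\lambda -9\right)\left(\lambda -1\right)=0 \), which has the roots \( {\lambda}_1=9 \) and \( {\lambda}_2=1 \).

Thus, the singular values are \( {\sigma}_1=\sqrt{9}=3 \) and \( {\sigma}_2=\sqrt{1}=1 \) and \( \Sigma =\left(\begin{array}{cc} 3 & 0 \\ 0 & 1 \end{array}\right) \).

The eigenvector belonging to the first eigenvalue of \( {M}^TM \) follows from: \( \left({M}^TM-9I\right)\left(\begin{array}{c} x \\ y \end{array}\right)=\left(\begin{array}{cc} -4 & 4 \\ 4 & -4 \end{array}\right)\left(\begin{array}{c} x \\ y \end{array}\right)=\left(\begin{array}{c} 0 \\ 0 \end{array}\right) \), i.e. x = y, so that \( \left(\begin{array}{c} 1 \\ 1 \end{array}\right) \) is an eigenvector.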

To determine the first column of V, this eigenvector must be normalized (divided by its length; see Sect. 4.2.2.1) and thus \( {\overrightarrow{v}}_1=\frac{1}{\sqrt{2}}\left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill 1\hfill \end{array}\right) \).

Similarly, the eigenvector belonging to the second eigenvalue of M T M can be derived to be \( {\overrightarrow{v}}_2=\frac{1}{\sqrt{2}}\left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill -1\hfill \end{array}\right) \), making \( V=\frac{1}{\sqrt{2}}\left(\begin{array}{cc}\hfill 1\hfill & \hfill 1\hfill \\ {}\hfill 1\hfill & \hfill -1\hfill \end{array}\right) \). In this case, \( {\overrightarrow{v}}_1 \) and \( {\overrightarrow{v}}_2 \) are already orthogonal (see Sect. 4.2.2.1), making further adaptations to arrive at an orthonormal set of eigenvectors unnecessary.

To determine U we use that \( {\overrightarrow{u}}_1=\frac{1}{\sigma_1}M{\overrightarrow{v}}_1=\frac{1}{3}\left(\begin{array}{cc}\hfill 2\hfill & \hfill 1\hfill \\ {}\hfill 1\hfill & \hfill 2\hfill \end{array}\right)\frac{1}{\sqrt{2}}\left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill 1\hfill \end{array}\right)=\frac{1}{\sqrt{2}}\left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill 1\hfill \end{array}\right) \) and \( {\overrightarrow{u}}_2=\frac{1}{\sigma_2}M{\overrightarrow{v}}_2=\frac{1}{1}\left(\begin{array}{cc}\hfill 2\hfill & \hfill 1\hfill \\ {}\hfill 1\hfill & \hfill 2\hfill \end{array}\right)\frac{1}{\sqrt{2}}\left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill -1\hfill \end{array}\right)=\frac{1}{\sqrt{2}}\left(\begin{array}{c}\hfill 1\hfill \\ {}\hfill -1\hfill \end{array}\right) \), making \( U=\frac{1}{\sqrt{2}}\left(\begin{array}{cc}\hfill 1\hfill & \hfill 1\hfill \\ {}\hfill 1\hfill & \hfill -1\hfill \end{array}\right) \). You can now verify yourself that indeed M = UΣV ∗.
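The suggested verification can be carried out numerically. The sketch below multiplies out the U, Σ and V found in answer (a); because all entries are real, the conjugate transpose of V reduces to the ordinary transpose. The helper names and the tolerance are assumptions for illustration.

```python
import math


def matmul(A, B):
    """Matrix product of A and B (lists of rows)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(col) for col in zip(*A)]


def close(A, B, tol=1e-12):
    """Element-wise comparison with a tolerance for rounding errors."""
    return all(abs(a - b) < tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))


s = 1 / math.sqrt(2)
U = [[s, s], [s, -s]]
Sigma = [[3, 0], [0, 1]]
V = [[s, s], [s, -s]]

# Reconstruct M from its factors: M = U Sigma V^T (all entries are real).
M = matmul(matmul(U, Sigma), transpose(V))

# U and V should be unitary (here: orthogonal), i.e. U^T U = I.
UtU = matmul(transpose(U), U)
```

The comparison uses a small tolerance because 1/√2 cannot be represented exactly in floating-point arithmetic.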

(b) Taking a similar approach, we find that \( U=\left(\begin{array}{cc} 1 & 0 \\ 0 & 1 \end{array}\right) \), \( \Sigma =\left(\begin{array}{cc} 2 & 0 \\ 0 & 1 \end{array}\right) \) and \( V=\left(\begin{array}{cc} 1 & 0 \\ 0 & 1 \end{array}\right) \).

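5.17.

If \( M=U\Sigma {V}^{\ast} \) and both U and V are unitary (see Table 5.1), then \( M{M}^{\ast}=U\Sigma {V}^{\ast}{\left(U\Sigma {V}^{\ast}\right)}^{\ast}=U\Sigma {V}^{\ast}V{\Sigma}^{\ast}{U}^{\ast}=U{\Sigma}^2{U}^{\ast} \), where \( {\Sigma}^2 \) is shorthand for the diagonal matrix \( \Sigma {\Sigma}^{\ast} \), which has the squared singular values on its diagonal. Right-multiplying both sides of this equation with U and using \( {U}^{\ast}U=I \) yields \( M{M}^{\ast}U=U{\Sigma}^2 \). Because \( {\Sigma}^2 \) is diagonal, the i-th column \( {\overrightarrow{u}}_i \) of U satisfies \( M{M}^{\ast}{\overrightarrow{u}}_i={\sigma}_i^2{\overrightarrow{u}}_i \). Hence, the columns of U are eigenvectors of \( M{M}^{\ast} \) (with eigenvalues equal to the diagonal elements of \( {\Sigma}^2 \)).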

Glossary

- Adjacency matrix: Matrix with binary entries (i,j) describing the presence (1) or absence (0) of a path between nodes i and j.
- Adjoint: Transpose of the cofactor matrix.
- Airfoil: Shape of an airplane wing, propeller blade or sail.
- Ataxia: A movement disorder or symptom involving loss of coordination.
- Basis vector: A set of (N-dimensional) basis vectors is linearly independent and any vector in N-dimensional space can be built as a linear combination of these basis vectors.
- Boundary conditions: Constraints for a solution to an equation on the boundary of its domain.
- Cofactor matrix: The (i,j)-element of this matrix is given by the determinant of the matrix that remains when the i-th row and j-th column are removed from the original matrix, multiplied by −1 if i + j is odd.
- Conjugate transpose: Generalization of transpose; a transformation of a matrix A indicated by \( {A}^{\ast} \) with elements defined by \( {\left({A}^{\ast}\right)}_{ij}={\overline{a}}_{ji} \).
- Dense matrix: A matrix whose elements are almost all non-zero.
- Determinant: A scalar calculated from the elements of a square matrix; the matrix has an inverse only if its determinant is non-zero. Can be seen as a scaling factor when calculating the inverse of a matrix.
- Diagonalization: Decomposition of a matrix M such that it can be written as \( M= VD{V}^{-1} \) where V is an invertible matrix and D is a diagonal matrix.
- Diagonal matrix: A matrix in which non-zero elements occur only on the diagonal; all other elements are zero.
- Discretize: To represent an equation on a grid.

- EEG: Electroencephalography; a measurement of electrical brain activity.
- Eigendecomposition: To determine the eigenvalues and eigenvectors of a matrix.
- Element: As in ‘matrix element’: one of the entries in a matrix.
- Gaussian: Normally distributed.
- Graph: A collection of nodes or vertices with paths or edges between them whenever the nodes are related in some way.
- Hadamard product: Element-wise matrix product.
- Identity matrix: A square matrix with ones on the diagonal and zeroes elsewhere, often referred to as I. The identity matrix is a special diagonal matrix.
- Independent component analysis: A method to determine independent components of non-Gaussian signals by minimizing higher order moments of the data.
- Inverse: The matrix \( {A}^{-1} \) such that \( A{A}^{-1}={A}^{-1}A=I \).
- Invertible: A matrix that has an inverse.
- Kronecker product: Generalization of the outer product (or tensor product or dyadic product) for vectors to matrices.
- Kurtosis: Fourth-order moment of data, describing how much of the data variance is in the tail of its distribution.
- Laplace equation: Partial differential equation describing the behavior of potential fields.

- Left-singular vector: Columns of U in the SVD of M: \( M=U\Sigma {V}^{\ast} \).
- Leslie matrix: Matrix with probabilities to transfer from one age class to the next in a population ecological model of population growth.
- Logical matrix: A matrix that only contains zeroes and ones (also: binary or Boolean matrix).
- Matrix: A rectangular array of (usually) numbers.
- Network theory: The study of graphs as representing relations between different entities, such as in a social network, brain network, gene network etcetera.
- Order: Size of a matrix.
- Orthonormal: Orthogonal vectors of length 1.
- Partial differential equation: An equation that contains functions of multiple variables and their partial derivatives (see also Chap. 6).
- Principal component analysis: Method that transforms data to a new coordinate system such that the largest variance is found along the first new coordinate (first PC), the next-largest variance along the second new coordinate (second PC), etcetera.
- Right-singular vector: Columns of V in the SVD of M: \( M=U\Sigma {V}^{\ast} \).
- Root: Here: a value of λ that makes the characteristic polynomial \( \left|M-\lambda I\right| \) of the matrix M equal to zero.
- Scalar function: Function with scalar values.
- Shearing: To shift along one axis.

- Singular value: Diagonal elements of Σ in the SVD of M: \( M=U\Sigma {V}^{\ast} \).
- Singular value decomposition: The decomposition of an m × n rectangular matrix M into a product of three matrices such that \( M=U\Sigma {V}^{\ast} \) where U is a unitary m × m matrix, Σ an m × n diagonal matrix with non-negative real entries and V another unitary n × n matrix.
- Skewness: Third-order moment of data, describing asymmetry of its distribution.
- Skew-symmetric matrix: A matrix A for which \( {a}_{ij}=-{a}_{ji} \).
- Sparse matrix: A matrix with most of its elements equal to zero.
- Stationary: Time-dependent data for which the most important statistical properties (such as mean and variance) do not change over time.
- Symmetric matrix: A matrix A that is symmetric around the diagonal, i.e. for which \( {a}_{ij}={a}_{ji} \).
- Transformation: Here: linear transformation as represented by matrices. A function mapping a set onto itself (e.g. 2D space onto 2D space).
- Transpose: A transformation of a matrix A indicated by \( {A}^T \) with elements defined by \( {\left({A}^T\right)}_{ij}={a}_{ji} \).
- Triangular matrix: A square matrix whose non-zero elements occur only on and above the diagonal (upper-triangular) or only on and below the diagonal (lower-triangular).
- Unit matrix: Identity matrix.

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Maurits, N. (2017). Matrices. In: Math for Scientists. Springer, Cham. https://doi.org/10.1007/978-3-319-57354-0_5

DOI: https://doi.org/10.1007/978-3-319-57354-0_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-57353-3

Online ISBN: 978-3-319-57354-0

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)