Appendix: Proofs

Proof

(Proof of Theorem 1) We divide the proof into two steps. The argument closely follows the proof of Theorem 2.2 given in [13]. We repeat it here because it introduces the notation needed to express the coefficients given in Theorem 2, which is essential for deriving the closed form of the estimator.

(i) Construction of a representor \(\psi _a(\equiv \psi _a^0)\). For simplicity, let us set \(\mathscr {Q}^1\equiv \left[ 0,1\right] \). For functions of one variable we have \(\left\langle g,h\right\rangle _{Sob,m}=\sum _{k=0}^{m}\int _{\mathscr {Q}^1}g^{(k)}(x)h^{(k)}(x)\text {d}x\). We are constructing a representor \( \psi _{a}\in \mathscr {H}^m\left[ 0,1\right] \) such that \(\left\langle \psi _a,f\right\rangle _{Sob,m}=f(a) \) for all \(f\in \mathscr {H}^m\left[ 0,1\right] \). It suffices to demonstrate the result for all \(f\in \mathscr {C}^{2m}\) because of the denseness of \(\mathscr {C}^{2m}\). The representor is defined as

$$\begin{aligned} \psi _a(x) = \left\{ \begin{array}{ll} L_{a}(x) &{} 0\le x\le a,\\ R_{a}(x) &{} a\le x\le 1, \end{array} \right. \end{aligned}$$

(30)

where \(L_{a}(x)\in \mathscr {C}^{2m}\left[ 0,a\right] \) and \(R_{a}(x)\in \mathscr {C}^{2m}\left[ a,1\right] \). As \(\psi _{a}\in \mathscr {H}^m\left[ 0,1\right] \), it suffices that \(L_a^{(k)}(a)=R_a^{(k)}(a)\), \(0\le k\le m-1\). We get

$$\begin{aligned} f(a)=\left\langle \psi _a,f\right\rangle _{Sob,m}=\int _0^a\sum _{k=0}^m L_a^{(k)}(x)f^{(k)}(x)\text {d}x+\int _a^1\sum _{k=0}^m R_a^{(k)}(x)f^{(k)}(x)\text {d}x. \end{aligned}$$

(31)

Integrating by parts and setting \(i=k-j-1\), we obtain

$$\begin{aligned}&\sum _{k=0}^m\int _0^a L_a^{(k)}(x)f^{(k)}(x)\text {d}x =\sum _{i=0}^{m-1}f^{(i)}(a)\left\{ \sum _{k=i+1}^{m}\left( -1\right) ^{k-i-1}L_a^{(2k-i-1)}(a)\right\} \nonumber \\ -&\sum _{i=0}^{m-1}f^{(i)}(0)\left\{ \sum _{k=i+1}^{m}\left( -1\right) ^{k-i-1}L_a^{(2k-i-1)}(0)\right\} +\int _0^a\left\{ \sum _{k=0}^m\left( -1\right) ^k L_a^{(2k)}(x)\right\} f(x)\text {d}x \end{aligned}$$

(32)

and, similarly,

$$\begin{aligned}&\sum _{k=0}^m\int _a^1 R_a^{(k)}(x)f^{(k)}(x)\text {d}x {=}\sum _{i=0}^{m-1}f^{(i)}(1)\left\{ \sum _{k=i+1}^{m}\left( -1\right) ^{k-i-1}R_a^{(2k-i-1)}(1)\right\} \nonumber \\ -&\sum _{i{=}0}^{m-1}f^{(i)}(a)\left\{ \sum _{k{=}i{+}1}^{m}\left( -1\right) ^{k-i-1}R_a^{(2k-i-1)}(a)\right\} {+}\int _a^1\left\{ \sum _{k{=}0}^m\left( -1\right) ^k R_a^{(2k)}(x)\right\} f(x)\text {d}x. \end{aligned}$$

(33)

This holds for all \(f(x)\in \mathscr {C}^{m}\left[ 0,1\right] \). We require that both \(L_a\) and \(R_a\) are solutions of the constant coefficient differential equation

$$\begin{aligned} \sum _{k=0}^m\left( -1\right) ^k \varphi ^{(2k)}(x)=0. \end{aligned}$$

(34)

The boundary conditions are obtained from the equality of the values of \(L_a^{(i)}(x)\) and \(R_a^{(i)}(x)\) at a and from comparing the coefficients of \(f^{(i)}(0)\), \(f^{(i)}(1)\) and \(f^{(i)}(a)\); compare (31) with (32) and (33). Let \(f^{(i)}(x)\bowtie c\) denote that the term \(f^{(i)}(x)\) has the coefficient c in a given equation. We can write

$$\begin{aligned} \psi _a\in \mathscr {H}^m\left[ 0,1\right]\Rightarrow & {} L_a^{(i)}(a)=R_a^{(i)}(a),\quad 0\le i\le m-1,\end{aligned}$$

(35)

$$\begin{aligned} f^{(i)}(0)\bowtie 0\Rightarrow & {} \sum _{k=i+1}^{m}\left( -1\right) ^{k-i-1}L_a^{(2k-i-1)}(0)=0,\quad 0\le i\le m-1,\end{aligned}$$

(36)

$$\begin{aligned} f^{(i)}(1)\bowtie 0\Rightarrow & {} \sum _{k=i+1}^{m}\left( -1\right) ^{k-i-1}R_a^{(2k-i-1)}(1)=0,\quad 0\le i\le m-1,\end{aligned}$$

(37)

$$\begin{aligned} f^{(i)}(a)\bowtie 0\Rightarrow & {} \sum _{k=i+1}^{m}\left( -1\right) ^{k-i-1}\Big \{L_a^{(2k-i-1)}(a)-R_a^{(2k-i-1)}(a)\Big \}=0,\nonumber \\&\qquad \qquad \qquad \qquad \quad \!\! 1\le i\le m-1,\end{aligned}$$

(38)

$$\begin{aligned} f(a)\bowtie 1\Rightarrow & {} \sum _{k=1}^{m}\left( -1\right) ^{k-1}\Big \{L_a^{(2k-1)}(a)-R_a^{(2k-1)}(a)\Big \}=1; \end{aligned}$$

(39)

together \(m+m+m+(m-1)+1=4m\) boundary conditions. To obtain the general solution of this differential equation, we need to find the roots of its characteristic polynomial \(P_m(\lambda )=\sum _{k=0}^m(-1)^k\lambda ^{2k}\). Summing this finite geometric series gives

$$\begin{aligned} (1+\lambda ^2)P_m(\lambda )=1+(-1)^m \lambda ^{2m+2}, \quad \lambda \ne \pm i. \end{aligned}$$

(40)

Solving (40), we get the characteristic roots \(\lambda _k=e^{i\theta _k}\), where

$$\begin{aligned} \theta _k\in \left\{ \begin{array}{lll} \frac{(2k+1)\pi }{2m+2}&{}m\text { even,}&{} k\in \left\{ 0,1,\ldots ,2m+1\right\} \backslash \left\{ \frac{m}{2},\frac{3m+2}{2}\right\} ,\\ \\ \frac{k\pi }{m+1}&{}m\text { odd,}&{} k\in \left\{ 0,1,\ldots ,2m+1\right\} \backslash \left\{ \frac{m+1}{2},\frac{3m+3}{2}\right\} . \end{array} \right. \end{aligned}$$
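The case distinction for \(\theta _k\) can be checked numerically. The following is a minimal sketch, using only the Python standard library, that builds the roots \(e^{i\theta _k}\) from the formula above and evaluates \(P_m\) at them; the function names `char_poly` and `char_roots` are illustrative and do not come from the paper.

```python
import cmath
import math


def char_poly(lam, m):
    """Evaluate P_m(lambda) = sum_{k=0}^m (-1)^k lambda^(2k)."""
    return sum((-1) ** k * lam ** (2 * k) for k in range(m + 1))


def char_roots(m):
    """Roots exp(i*theta_k) from the displayed formula, with +-i excluded."""
    roots = []
    for k in range(2 * m + 2):
        if m % 2 == 0:
            # m even: theta_k = (2k+1)*pi/(2m+2), skipping k = m/2, (3m+2)/2
            if k in (m // 2, (3 * m + 2) // 2):
                continue
            theta = (2 * k + 1) * math.pi / (2 * m + 2)
        else:
            # m odd: theta_k = k*pi/(m+1), skipping k = (m+1)/2, (3m+3)/2
            if k in ((m + 1) // 2, (3 * m + 3) // 2):
                continue
            theta = k * math.pi / (m + 1)
        roots.append(cmath.exp(1j * theta))
    return roots


for m in (1, 2, 3, 4):
    roots = char_roots(m)
    residual = max(abs(char_poly(lam, m)) for lam in roots)
    # Each m should give 2m roots on the unit circle with a residual
    # at the level of floating-point rounding.
    print(m, len(roots), residual)
```

For odd m the list contains the two real roots \(\pm 1\) (from \(k=0\) and \(k=m+1\)); for even m all roots are non-real.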

We have altogether \((2m+2)-2=2m\) distinct complex roots, and they occur in complex conjugate pairs. Thus, for \(\underline{m {\mathrm{even}}}\), we have m pairs of complex conjugate roots, each root with multiplicity one. Correspondingly, there are 2m base elements, as many as there are roots:

$$\begin{aligned} \varphi _k(x)&=\exp \Big \{\big (\mathfrak {R}(\lambda _k)\big )x\Big \}\cos \Big [\big (\mathfrak {I}(\lambda _k)\big )x\Big ],\, k\in \left\{ 0,1,\ldots ,m\right\} \backslash \left\{ \frac{m}{2}\right\} ; \end{aligned}$$

(41)

$$\begin{aligned} \varphi _{m+1+k}(x)&=\exp \Big \{\big (\mathfrak {R}(\lambda _k)\big )x\Big \}\sin \Big [\big (\mathfrak {I}(\lambda _k)\big )x\Big ],\, k\in \left\{ 0,1,\ldots ,m\right\} \backslash \left\{ \frac{m}{2}\right\} . \end{aligned}$$

(42)

If \(\underline{m\, {\mathrm{is\, odd}}}\), we have \(2m-2\) distinct non-real roots (occurring in complex conjugate pairs) and two real roots, \(\pm 1\). The \(m-1\) pairs of complex conjugate roots have multiplicity one. Again, there are \(2(m-1)+2=2m\) base elements, as many as there are roots. These base elements are

$$\begin{aligned} \varphi _0(x)&=\exp \left\{ x\right\} ;\end{aligned}$$

(43)

$$\begin{aligned} \varphi _k(x)&=\exp \Big \{\big (\mathfrak {R}(\lambda _k)\big )x\Big \}\cos \Big [\big (\mathfrak {I}(\lambda _k)\big )x\Big ],\, k\in \left\{ 1,2,\ldots ,m\right\} \backslash \left\{ \frac{m+1}{2}\right\} ;\end{aligned}$$

(44)

$$\begin{aligned} \varphi _{m+1}(x)&=\exp \left\{ -x\right\} ;\end{aligned}$$

(45)

$$\begin{aligned} \varphi _{m+1+k}(x)&=\exp \Big \{\big (\mathfrak {R}(\lambda _k)\big )x\Big \}\sin \Big [\big (\mathfrak {I}(\lambda _k)\big )x\Big ],\, k\in \left\{ 1,2,\ldots ,m\right\} \backslash \left\{ \frac{m+1}{2}\right\} . \end{aligned}$$

(46)

These vectors generate the subspace of \(\mathscr {C}^m\left[ 0,1\right] \) of solutions of the differential equation (34). The general solution is given by the linear combination

$$\begin{aligned}&L_a(x) = \sum _{\begin{array}{l} k=0 \\ k\ne \frac{m}{2} \end{array}}^m \gamma _k(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\cos \Big [\mathfrak {I}(\lambda _k)x\Big ]\nonumber \\&+ \sum _{\begin{array}{l} k=0 \\ k\ne \frac{m}{2} \end{array}}^m \gamma _{m+1+k}(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\sin \Big [\mathfrak {I}(\lambda _k)x\Big ],\ \text { for } m\text { even};\end{aligned}$$

(47)

$$\begin{aligned}&L_a(x) = \gamma _0(a)\exp \left\{ x\right\} + \sum _{\begin{array}{l} k=1 \\ k\ne \frac{m+1}{2} \end{array}}^m \gamma _k(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\cos \Big [\mathfrak {I}(\lambda _k)x\Big ]\nonumber \\&+ \gamma _{m+1}(a)\exp \left\{ -x\right\} + \sum _{\begin{array}{l} k=1 \\ k\ne \frac{m+1}{2} \end{array}}^m \gamma _{m+1+k}(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\sin \Big [\mathfrak {I}(\lambda _k)x\Big ],\ \text { for } m\text { odd}; \end{aligned}$$

(48)

$$\begin{aligned}&R_a(x) =\sum _{\begin{array}{l} k=0 \\ k\ne \frac{m}{2} \end{array}}^m \gamma _{2m+2+k}(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\cos \Big [\mathfrak {I}(\lambda _k)x\Big ]\nonumber \\&+ \sum _{\begin{array}{l} k=0 \\ k\ne \frac{m}{2} \end{array}}^m \gamma _{3m+3+k}(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\sin \Big [\mathfrak {I}(\lambda _k)x\Big ],\ \text { for } m\text { even};\end{aligned}$$

(49)

$$\begin{aligned}&R_a(x) =\gamma _{2m+2}(a)\exp \left\{ x\right\} + \sum _{\begin{array}{l} k=1 \\ k\ne \frac{m+1}{2} \end{array}}^m \gamma _{2m+2+k}(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\cos \Big [\mathfrak {I}(\lambda _k)x\Big ]\nonumber \\&+ \gamma _{3m+3}(a)\exp \left\{ -x\right\} + \sum _{\begin{array}{l} k=1 \\ k\ne \frac{m+1}{2} \end{array}}^m \gamma _{3m+3+k}(a)\exp \Big \{\mathfrak {R}(\lambda _k)x\Big \}\sin \Big [\mathfrak {I}(\lambda _k)x\Big ],\ \text { for } m\text { odd}; \end{aligned}$$

(50)

where the coefficients \(\gamma _k(a)\) are constants determined by the boundary conditions (35)–(39). There are \(4(m+1)-4=4m\) coefficients \(\gamma _k(a)\): the indices run from 0 to \(4m+3\), and four of them are skipped. Thus, we have 4m boundary conditions and 4m unknowns \(\gamma _k(a)\), which yields a square \(4m\times 4m\) system of linear equations. Does \(\psi _a\) exist and is it unique? To show this, it suffices to prove that the only solution of the associated homogeneous system of linear equations is the zero vector. Suppose \(L_a(x)\) and \(R_a(x)\) are functions corresponding to a solution of the homogeneous system, i.e., of the system (35)–(39) with all right-hand sides equal to zero, so that the coefficient of f(a) in the last boundary condition is 0 instead of 1. Then, by exactly the same integration by parts, it follows that \(\left\langle \psi _a,f\right\rangle _{Sob,m}=0\) for all \(f\in \mathscr {C}^m\left[ 0,1\right] \). Hence, \(\psi _a(x)\), \(L_a(x)\) and \(R_a(x)\) are zero almost everywhere and, by the linear independence of the base elements \(\varphi _k(x)\), we obtain the uniqueness of the coefficients \(\gamma _k(a)\).
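For \(m=1\) the construction can be carried out by hand, which gives a convenient sanity check. The sketch below assumes the closed form \(L_a(x)=\cosh (x)\cosh (1-a)/\sinh (1)\) and \(R_a(x)=\cosh (a)\cosh (1-x)/\sinh (1)\); this form was obtained for this illustration by solving (35)–(39) with \(m=1\) and is not quoted from the paper. The code checks numerically that \(\left\langle \psi _a,f\right\rangle _{Sob,1}=f(a)\) for one smooth test function. All helper names and the test function are illustrative.

```python
import math


def left(a, x):
    """L_a(x) and L_a'(x) for m = 1 (assumed closed form, valid on [0, a])."""
    c = math.cosh(1.0 - a) / math.sinh(1.0)
    return c * math.cosh(x), c * math.sinh(x)


def right(a, x):
    """R_a(x) and R_a'(x) for m = 1 (assumed closed form, valid on [a, 1])."""
    c = math.cosh(a) / math.sinh(1.0)
    return c * math.cosh(1.0 - x), -c * math.sinh(1.0 - x)


def simpson(g, lo, hi, n=2000):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for j in range(1, n):
        total += (4.0 if j % 2 else 2.0) * g(lo + j * h)
    return total * h / 3.0


def sobolev_inner(a, f, df):
    """<psi_a, f>_{Sob,1}, integrated separately on [0, a] and [a, 1]."""
    def integrand(branch):
        def g(x):
            value, derivative = branch(a, x)
            return value * f(x) + derivative * df(x)
        return g
    return simpson(integrand(left), 0.0, a) + simpson(integrand(right), a, 1.0)


# Illustrative test function and its derivative.
f = lambda x: x ** 3 + math.sin(2.0 * x)
df = lambda x: 3.0 * x ** 2 + 2.0 * math.cos(2.0 * x)

a = 0.3
print(sobolev_inner(a, f, df), f(a))  # the two numbers should agree closely
```

The integral is split at a because \(\psi _a'\) jumps there; the size of that jump, \(L_a'(a)-R_a'(a)=1\), is exactly condition (39) for \(m=1\).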

(ii) Producing a representor \(\psi _{\mathbbm {a}}^{\mathbbm {w}}\). Let us define the representor \(\psi _{\mathbbm {a}}^{\mathbbm {w}}\) by setting \(\psi _{\mathbbm {a}}^{\mathbbm {w}}(\mathbbm {x})=\prod _{i=1}^q\psi _{a_i}^{w_i}(x_i)\ \text {for all }\mathbbm {x}\in \mathscr {Q}^q\), where \(\psi _{a_i}^{w_i}(x_i)\) is the representor at \(a_i\) in \(\mathscr {H}^m\left( \mathscr {Q}^1\right) \). We know that \(\mathscr {C}^m\) is dense in \(\mathscr {H}^m\), so it is sufficient to show the result for \(f\in \mathscr {C}^m(\mathscr {Q}^q)\). For simplicity, let us suppose \(\mathscr {Q}^q\equiv \left[ 0,1\right] ^q\). After rewriting the inner product and using Fubini's theorem, we have

$$\begin{aligned} \big \langle&\psi _{\mathbbm {a}}^{\mathbbm {w}},f\big \rangle _{Sob,m}=\bigg \langle \prod _{i=1}^q\psi _{a_i}^{w_i},f\bigg \rangle _{Sob,m}\\&=\sum _{i_1=0}^m\int _0^1\frac{\partial ^{i_1}\psi _{a_1}^{w_1}(x_1)}{\partial x_1^{i_1}} \Bigg [\cdots \Bigg [\sum _{i_q=0}^m\int _0^1\frac{\partial ^{i_q}\psi _{a_q}^{w_q}(x_q)}{\partial x_q^{i_q}}. \frac{\partial ^{i_1,\ldots ,i_q}f(\mathbbm {x})}{\partial x_1^{i_1}\ldots \partial x_q^{i_q}}\text {d}x_q\Bigg ]\ldots \Bigg ]\text {d}x_1. \end{aligned}$$

According to Definition 3 and the notation in Definition 1, we can rewrite the innermost bracket as

$$\begin{aligned} \sum _{i_q=0}^m\int _0^1\frac{\partial ^{i_q}\psi _{a_q}^{w_q}(x_q)}{\partial x_q^{i_q}}. \frac{\partial ^{i_1,\ldots ,i_q}f(\mathbbm {x})}{\partial x_1^{i_1}\ldots \partial x_q^{i_q}}\text {d}x_q&=\Big \langle \psi _{a_q}^{w_q},D^{(i_1,\ldots ,i_{q-1})}f(x_1,\ldots ,x_{q-1},\cdot )\Big \rangle _{Sob,m}\\&=D^{(i_1,\ldots ,i_{q-1},w_q)}f(\mathbbm {x}_{-q},a_q). \end{aligned}$$

Proceeding sequentially in the same way, we obtain that the value of the above expression is \(D^{\mathbbm {w}}f(\mathbbm {a})\). \(\square \)

Proof

(Proof of Theorem 2) Existence and uniqueness of the coefficients \(\gamma _k(a)\) have already been proved in the proof of Theorem 1. Let us define

$$\begin{aligned} \varLambda _{a,I}^{(l)}:=\left\{ \begin{array}{ll} L_a^{(l)}(0), &{} \text {for } I=L;\\ R_a^{(l)}(1), &{} \text {for } I=R;\\ L_a^{(l)}(a)-R_a^{(l)}(a), &{} \text {for } I=D.\\ \end{array} \right. \end{aligned}$$

(51)

From the boundary conditions (36)–(39), we easily see that

$$\begin{aligned} \sum _{k=i+1}^{m}(-1)^{k-i-1}\varLambda _{a,I}^{(2k-i-1)}= & {} 0,\ 0\le i\le m-1,\,I\in \left\{ L,R,D\right\} ,\ \left[ i,I\right] \ne \left[ 0,D\right] ;\end{aligned}$$

(52)

$$\begin{aligned} \sum _{k=1}^{m}(-1)^{k-1}\varLambda _{a,D}^{(2k-1)}= & {} 1. \end{aligned}$$

(53)

For \(m=1\), it follows from (52) and (53) that \(\varLambda _{a,I}^{(1)}=0,\ I\in \left\{ L,R\right\} \) and \(\varLambda _{a,D}^{(1)}=1\). For \(m=2\), we have from (52) and (53): \(\varLambda _{a,I}^{(2)}=0,\ \forall I\); \(\varLambda _{a,I}^{(1)}-\varLambda _{a,I}^{(3)}=0,\ I\in \left\{ L,R\right\} \); and \(\varLambda _{a,D}^{(1)}-\varLambda _{a,D}^{(3)}=1\). Let us now suppose that \(m\ge 3\). We would like to prove the next important step:

$$\begin{aligned} \varLambda _{a,I}^{(m-j)}+(-1)^j\varLambda _{a,I}^{(m+j)}= & {} 0,\quad j=0,\ldots ,m-2,\,\forall I,\end{aligned}$$

(54)

$$\begin{aligned} \varLambda _{a,I}^{(1)}+(-1)^{m-1}\varLambda _{a,I}^{(2m-1)}= & {} 0,\quad I\in \left\{ L,R\right\} ,\end{aligned}$$

(55)

$$\begin{aligned} \varLambda _{a,D}^{(1)}+(-1)^{m-1}\varLambda _{a,D}^{(2m-1)}= & {} 1, \end{aligned}$$

(56)

where \(j:=m-i-1\). For \(j=0\), we obtain \(i=m-1\), and (52) and (53) imply

$$\begin{aligned} \varLambda _{a,I}^{(m)}=0, \quad \forall I, \end{aligned}$$

(57)

which is correct according to (54). Consider \(j=1\) and thus \(i=m-2\). In the same way we get

$$\begin{aligned} \varLambda _{a,I}^{(m-1)}-\varLambda _{a,I}^{(m+1)}=0,\quad \forall I. \end{aligned}$$

(58)

For \(j=2\) and thus \(i=m-3\), we have \(\varLambda _{a,I}^{(m-2)}-\varLambda _{a,I}^{(m)}+\varLambda _{a,I}^{(m+2)}=0,\ \forall I\), and we can use (57). For \(j=3\) and thus \(i=m-4\), we have \(\varLambda _{a,I}^{(m-3)}-\varLambda _{a,I}^{(m-1)}+\varLambda _{a,I}^{(m+1)}-\varLambda _{a,I}^{(m+3)}=0,\ \forall I\), where we can apply (58). We can continue in this way until \(j=m-1\). This last step yields (55) when \(I\in \left\{ L,R\right\} \) and (56) when \(I=D\), in place of (54).

To finish the proof, we only need to keep in mind (35). From (35), it follows that \(\varLambda _{a,D}^{(j)}=0,\ j\in \left\{ 0,\ldots ,m-1\right\} \). According to (54) for \(I=D\) and (56), we further see

$$\begin{aligned} \varLambda _{a,D}^{(j)}= & {} 0,\quad j\in \left\{ m+1,\ldots ,2m-2\right\} ;\\ \varLambda _{a,D}^{(2m-1)}= & {} (-1)^{m-1}. \end{aligned}$$

Altogether we have obtained the following system of 4m linear equations:

$$\begin{aligned} \varLambda _{a,L}^{(m-j)}+(-1)^j\varLambda _{a,L}^{(m+j)}= & {} 0,\quad j=0,\ldots ,m-1,\\ \varLambda _{a,R}^{(m-j)}+(-1)^j\varLambda _{a,R}^{(m+j)}= & {} 0,\quad j=0,\ldots ,m-1,\\ \varLambda _{a,D}^{(j)}= & {} 0,\quad j=0,\ldots ,2m-2,\\ \varLambda _{a,D}^{(2m-1)}= & {} (-1)^{m-1}, \end{aligned}$$

which, after rewriting them using (51), (47)–(50) and (41)–(46), completes the proof. \(\square \)
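To make the resulting linear system concrete, the following sketch writes it out for \(m=1\), where by (48) and (50) the only base elements are \(e^{x}\) and \(e^{-x}\) and the unknowns are \(\gamma _0,\gamma _2,\gamma _4,\gamma _6\). The ordering of the rows, the small Gaussian-elimination helper `solve`, and the function name `gamma_m1` are choices made for this illustration and are not taken from the paper.

```python
import math


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def gamma_m1(a):
    """Solve the 4 x 4 system for m = 1.

    L_a(x) = g0*exp(x) + g2*exp(-x),  R_a(x) = g4*exp(x) + g6*exp(-x).
    Rows (each Lambda as in (51)):
      Lambda_L^(1) = 0   (the j = 0 equation, divided by 2)
      Lambda_R^(1) = 0
      Lambda_D^(0) = 0
      Lambda_D^(1) = (-1)^(m-1) = 1
    """
    ea, ema = math.exp(a), math.exp(-a)
    A = [
        [1.0, -1.0, 0.0, 0.0],               # L_a'(0) = 0
        [0.0, 0.0, math.e, -1.0 / math.e],   # R_a'(1) = 0
        [ea, ema, -ea, -ema],                # L_a(a) - R_a(a) = 0
        [ea, -ema, -ea, ema],                # L_a'(a) - R_a'(a) = 1
    ]
    b = [0.0, 0.0, 0.0, 1.0]
    return solve(A, b)


g0, g2, g4, g6 = gamma_m1(0.3)
print(g0, g2, g4, g6)
```

For larger m the same pattern applies with 4m unknowns, but the rows then involve higher derivatives of the exponential-trigonometric base elements (41)–(46), which this sketch does not attempt.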

Proof

(Proof of Theorem 3) Let \(M=span\left\{ \psi _{\mathbbm {x}_i}:\,i=1,\ldots ,n\right\} \) and let \(M^{\perp }=\left\{ h\in \mathscr {H}^m:\,\left\langle \psi _{\mathbbm {x}_i},h\right\rangle _{Sob,m}=0,\,i=1,\ldots ,n\right\} \) be its orthogonal complement. Representors exist by Theorem 1 and, since \(\mathscr {H}^m\) is a Hilbert space, we can write the Sobolev space as a direct sum of these orthogonal subspaces, i.e., \(\mathscr {H}^m=M\oplus M^{\perp }\). Functions \(h\in M^{\perp }\) take on the value zero at \(\mathbbm {x}_1,\ldots ,\mathbbm {x}_n\). Each \(f\in \mathscr {H}^m\) can be written as \(f=\sum _{j=1}^nc_j\psi _{\mathbbm {x}_j}+h,\ h\in M^{\perp }\). Then,

$$\begin{aligned}&\left[ \mathbbm {Y}-\varvec{f}(\mathbbm {x})\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}-\varvec{f}(\mathbbm {x})\right] +\chi \left\| f\right\| _{Sob,m}^2\\&=\left[ \mathbbm {Y}_{\bullet }-\left\langle \psi _{\mathbbm {x}_{\bullet }},\sum _{j=1}^nc_j\psi _{x_j}+h\right\rangle _{Sob,m}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}_{\bullet }-\left\langle \psi _{\mathbbm {x}_{\bullet }},\sum _{j=1}^nc_j\psi _{x_j}+h\right\rangle _{Sob,m}\right] \\&\quad +\chi \left\| \sum _{j=1}^nc_j\psi _{\mathbbm {x}_j}+h\right\| _{Sob,m}^2\\&=\left[ \mathbbm {Y}_{\bullet }-\sum _{j=1}^n\left\langle \psi _{\mathbbm {x}_{\bullet }},c_j\psi _{x_j}\right\rangle _{Sob,m}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}_{\bullet }-\sum _{j=1}^n\left\langle \psi _{\mathbbm {x}_{\bullet }},c_j\psi _{x_j}\right\rangle _{Sob,m}\right] \\&\quad +\chi \left\| \sum _{j=1}^nc_j\psi _{\mathbbm {x}_j}\right\| _{Sob,m}^2+\chi \left\| h\right\| _{Sob,m}^2\\&=\left[ \mathbbm {Y}_{\bullet }-\sum _{j=1}^nc_j\left\langle \psi _{\mathbbm {x}_{\bullet }},\psi _{x_j}\right\rangle _{Sob,m}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}_{\bullet }-\sum _{j=1}^nc_j\left\langle \psi _{\mathbbm {x}_{\bullet }},\psi _{x_j}\right\rangle _{Sob,m}\right] \\&\qquad +\chi \left\langle \sum _{j=1}^nc_j\psi _{\mathbbm {x}_j},\sum _{j=1}^nc_j\psi _{\mathbbm {x}_j}\right\rangle _{Sob,m}+\chi \left\| h\right\| _{Sob,m}^2\\&=\left[ \mathbbm {Y}_{\bullet }-\sum _{j=1}^n\varPsi _{\bullet ,j}c_j\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}_{\bullet }-\sum _{j=1}^n\varPsi _{\bullet ,j}c_j\right] \\&\qquad +\chi \sum _{j=1}^n\sum _{k=1}^nc_j\left\langle \psi _{\mathbbm {x}_j},\psi _{\mathbbm {x}_k}\right\rangle _{Sob,m}c_k+\chi \left\| h\right\| _{Sob,m}^2\\&=\left[ \mathbbm {Y}-{\varvec{\varPsi }}\mathbbm {c}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}-{\varvec{\varPsi }}\mathbbm {c}\right] +\chi \mathbbm {c}^{\top 
}{\varvec{\varPsi }}\mathbbm {c}+\chi \left\| h\right\| _{Sob,m}^2, \end{aligned}$$

where \(\left\langle \psi _{\mathbbm {x}_{\bullet }},g\right\rangle _{Sob,m}=\left[ \left\langle \psi _{x_1},g\right\rangle _{Sob,m},\ldots ,\left\langle \psi _{x_n},g\right\rangle _{Sob,m}\right] ^{\top }\) for an arbitrary \(g\in \mathscr {H}^m\). Hence, there exists a function \(f^*\), minimizing the infinite-dimensional optimization problem, that is a linear combination of the representors. We note also that \(\left\| f^*\right\| _{Sob,m}^2=\mathbbm {c}^{\top }{\varvec{\varPsi }}\mathbbm {c}\).

Uniqueness is clear, since \(\psi _{\mathbbm {x}_i}\) are the base elements of M, and adding a function that is orthogonal to the space spanned by the representors will increase the norm. \(\square \)

Proof

(Proof of Corollary 1) It follows directly from (30) and from Theorem 3. \(\square \)

Proof

(Proof of Theorem 4) The representor matrix is symmetric by Definition 4 since

$$\begin{aligned} \varPsi _{i,j}=\left\langle \psi _{\mathbbm {x}_i},\psi _{\mathbbm {x}_j}\right\rangle _{Sob,m}=\left\langle \psi _{\mathbbm {x}_j},\psi _{\mathbbm {x}_i}\right\rangle _{Sob,m}=\varPsi _{j,i}, \end{aligned}$$

i.e., \({\varvec{\varPsi }}={\varvec{\varPsi }}^{\top }\).

We give the proof of positive definiteness of the representor matrix only for a one-dimensional variable x. The extension to the multivariate case is straightforward. For an arbitrary \(\mathbbm {c}\in \mathbb {R}^n\), we obtain

$$\begin{aligned} \mathbbm {c}^{\top }{\varvec{\varPsi }}\mathbbm {c}&=\sum _ic_i\sum _j\varPsi _{ij}c_j=\sum _i\sum _jc_i\left\langle \psi _{x_i},\psi _{x_j}\right\rangle _{Sob,m}c_j=\sum _i\sum _j\left\langle c_i\psi _{x_i},c_j\psi _{x_j}\right\rangle _{Sob,m}\\&=\left\langle \sum _ic_i\psi _{x_i},\sum _jc_j\psi _{x_j}\right\rangle _{Sob,m}=\left\| \sum _ic_i\psi _{x_i}\right\| _{Sob,m}^2\ge 0. \end{aligned}$$

Hence, \(\mathbbm {c}^{\top }{\varvec{\varPsi }}\mathbbm {c}=0\) iff \(\sum _ic_i\psi _{x_i}=0\) a.e.

For \(x>x_i\), we define

$$ {\varvec{\gamma }}(x_i)=\left[ \gamma _0,\ldots ,\gamma _{\kappa -1},\gamma _{\kappa +1},\ldots ,\gamma _{m+\kappa },\gamma _{m+2+\kappa },\ldots ,\gamma _{2m+1}\right] ^{\top }(x_i). $$

Otherwise, we define

$$ {\varvec{\gamma }}(x_i)= \left[ \gamma _{2m+2},\ldots ,\gamma _{2m+1+\kappa }, \gamma _{2m+3+\kappa },\ldots ,\gamma _{3m+2+\kappa }, \gamma _{3m+4+\kappa },\ldots ,\gamma _{4m+3}\right] ^{\top }(x_i). $$

Similarly, we look at elements of the vector \(\left[ \left\{ {\varvec{\varGamma }}(x_i)\right\} ^{-1}\right] _{\bullet ,4m}\). According to (47)–(50), (41)–(46) and (7), we have

$$\begin{aligned} \psi _{x_i}(x)={\varvec{\gamma }}(x_i)^{\top }{\varvec{\varphi }}(x)=(-1)^{m-1}\left[ \left\{ {\varvec{\varGamma }}(x_i)\right\} ^{-1}\right] _{\bullet ,4m}^{\top }{\varvec{\varphi }}(x) \end{aligned}$$

where \({\varvec{\varphi }}(x)\) is the vector containing the base elements of the space of solutions of the differential equation (34), i.e., \(\varphi _k(x)\), cf. (41)–(46). From the linear independence of \(\varphi _k(x)\) it follows that

$$\begin{aligned} \sum _ic_i\psi _{x_i}&= (-1)^{m-1}\sum _ic_i\left[ \left\{ {\varvec{\varGamma }}(x_i)\right\} ^{-1}\right] _{\bullet ,4m}^{\top }{\varvec{\varphi }}\nonumber \\&= (-1)^{m-1}\sum _i\sum _kc_i\left[ \left\{ {\varvec{\varGamma }}(x_i)\right\} ^{-1}\right] _{4m,k}\varphi _{k}=0\quad \text {a.e.}\\&\Updownarrow \nonumber \\ \varphi _{k}&=0\quad \text {a.e.}\quad k\in \left\{ 0,1,\ldots ,2m+1\right\} \backslash \left\{ \begin{array}{ll}\left\{ \frac{m}{2},\frac{3m+2}{2}\right\} &{} m\text { even,}\\ \quad &{} \quad \\ \left\{ \frac{m+1}{2},\frac{3m+3}{2}\right\} &{} m\text { odd;} \end{array} \right. \\&\Downarrow \nonumber \\ \psi _{x_i}&= 0\quad \text {a.e.}\quad i=1,\ldots ,n. \end{aligned}$$

And \(\psi _{x_i}=0\) a.e. is a zero element of the space \(\mathscr {H}^m\). \(\square \)
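Symmetry and positive definiteness can be observed numerically in the simplest case. The sketch below assumes \(m=1\) on \([0,1]\), with the representor taken in the closed form \(\psi _a(x)=\cosh (\min \{a,x\})\cosh (1-\max \{a,x\})/\sinh (1)\) (an assumption of this illustration, not a formula from the paper), and uses \(\varPsi _{i,j}=\left\langle \psi _{x_i},\psi _{x_j}\right\rangle _{Sob,1}=\psi _{x_i}(x_j)\), which follows from the reproducing property. A Cholesky factorization succeeds exactly when the symmetric matrix is positive definite. The design points are arbitrary.

```python
import math


def psi(a, x):
    """Assumed closed-form representor for m = 1 on [0, 1]."""
    lo, hi = min(a, x), max(a, x)
    return math.cosh(lo) * math.cosh(1.0 - hi) / math.sinh(1.0)


def representor_matrix(xs):
    """Psi[i][j] = <psi_{x_i}, psi_{x_j}> = psi_{x_i}(x_j)."""
    return [[psi(xi, xj) for xj in xs] for xi in xs]


def cholesky(A):
    """Lower-triangular factor of A; raises if A is not positive definite."""
    n = len(A)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                low[i][j] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return low


xs = [0.1, 0.35, 0.6, 0.9]          # arbitrary distinct design points
Psi = representor_matrix(xs)
factor = cholesky(Psi)              # succeeds for these points
print([round(factor[i][i], 4) for i in range(len(xs))])
```

If two design points coincide, two rows of the matrix are identical and the factorization is expected to fail, so distinct design points matter for this check.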

Proof

(Proof of Theorem 5) According to Theorem 3, we want to minimize the function

$$\begin{aligned} \mathscr {L}(\mathbbm {c}):=\frac{1}{n}\left[ \mathbbm {Y}-{\varvec{\varPsi }}\mathbbm {c}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}-{\varvec{\varPsi }}\mathbbm {c}\right] +\chi \mathbbm {c}^{\top }{\varvec{\varPsi }}\mathbbm {c}. \end{aligned}$$

Therefore, the first partial derivatives of \(\mathscr {L}(\mathbbm {c})\) have to equal zero at the minimizer \(\widehat{\mathbbm {c}}\), i.e., \(\frac{\partial }{\partial c_i}\mathscr {L}(\mathbbm {c})\mathop {=}\limits ^{!}0,\ i=1,\ldots ,n\). Denoting \({\varvec{\varSigma }}^{-1}=:\left[ \phi _{ij}\right] _{i,j=1}^{n,n}\), we can write

$$\begin{aligned} n\mathscr {L}(\mathbbm {c})&=\mathbbm {Y}^{\top }{\varvec{\varSigma }}^{-1}\mathbbm {Y}-2\mathbbm {Y}^{\top }{\varvec{\varSigma }}^{-1}{\varvec{\varPsi }}\mathbbm {c}+\mathbbm {c}^{\top }{\varvec{\varPsi }}{\varvec{\varSigma }}^{-1}{\varvec{\varPsi }}\mathbbm {c}+n\chi \mathbbm {c}^{\top }{\varvec{\varPsi }}\mathbbm {c}\\&=\sum _{r=1}^n\sum _{s=1}^n Y_r\phi _{rs}Y_s-2\sum _{r=1}^n\sum _{s=1}^n\sum _{t=1}^nY_r\phi _{rs}\varPsi _{st}c_t+\sum _{r=1}^n\sum _{s=1}^n\sum _{t=1}^n\sum _{u=1}^nc_r\varPsi _{rs}\phi _{st}\varPsi _{tu}c_u\\&\qquad +n\chi \sum _{r=1}^n\sum _{s=1}^nc_r\varPsi _{rs}c_s \end{aligned}$$

and, hence,

$$\begin{aligned} 0&\mathop {=}\limits ^{!}-2\sum _{r=1}^n\sum _{s=1}^nY_r\phi _{rs}\varPsi _{si}+2\sum _{\begin{array}{l}r=1\\ r\ne i\end{array}}^n\sum _{s=1}^n\sum _{t=1}^nc_r\varPsi _{rs}\phi _{st}\varPsi _{ti}\\&\qquad +2\sum _{s=1}^n\sum _{t=1}^nc_i\varPsi _{is}\phi _{st}\varPsi _{ti}+2n\chi \sum _{\begin{array}{l}r=1\\ r\ne i\end{array}}^nc_r\varPsi _{ri}+2n\chi c_i\varPsi _{ii}\\&=-2\mathbbm {Y}^{\top }{\varvec{\varSigma }}^{-1}{\varvec{\varPsi }}_{\bullet ,i}+2\mathbbm {c}^{\top }{\varvec{\varPsi }}{\varvec{\varSigma }}^{-1}{\varvec{\varPsi }}_{\bullet ,i}+2n\chi \mathbbm {c}^{\top }{\varvec{\varPsi }}_{\bullet ,i},\quad i=1,\ldots ,n. \end{aligned}$$

Then, we obtain the system of normal equations

$$\begin{aligned} \mathbbm {c}^{\top }\left( {\varvec{\varPsi }}{\varvec{\varSigma }}^{-1}{\varvec{\varPsi }}_{\bullet ,i}+n\chi {\varvec{\varPsi }}_{\bullet ,i}\right) =\mathbbm {Y}^{\top }{\varvec{\varSigma }}^{-1}{\varvec{\varPsi }}_{\bullet ,i},\quad i=1,\ldots ,n. \end{aligned}$$

\(\square \)
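The normal equations can be solved directly once \({\varvec{\varPsi }}\) is available. The following is a minimal sketch under several assumptions made only for illustration: \(m=1\) on \([0,1]\) with the closed-form representor \(\psi _a(x)=\cosh (\min \{a,x\})\cosh (1-\max \{a,x\})/\sinh (1)\), \({\varvec{\varSigma }}^{-1}\) defaulting to the identity, and made-up data. It solves the transposed system \(\left( {\varvec{\varPsi }}{\varvec{\varSigma }}^{-1}{\varvec{\varPsi }}+n\chi {\varvec{\varPsi }}\right) \mathbbm {c}={\varvec{\varPsi }}{\varvec{\varSigma }}^{-1}\mathbbm {Y}\), which is equivalent because \({\varvec{\varPsi }}\) and \({\varvec{\varSigma }}^{-1}\) are symmetric. The names `fit_coefficients`, `matmul` and `solve` are hypothetical helpers.

```python
import math


def psi(a, x):
    """Assumed closed-form representor for m = 1 on [0, 1]."""
    lo, hi = min(a, x), max(a, x)
    return math.cosh(lo) * math.cosh(1.0 - hi) / math.sinh(1.0)


def matmul(A, B):
    """Product of two small dense matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def solve(A, b):
    """Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_coefficients(xs, ys, chi, sigma_inv=None):
    """Solve (Psi S Psi + n*chi*Psi) c = Psi S Y, with S = Sigma^{-1}."""
    n = len(xs)
    Psi = [[psi(xi, xj) for xj in xs] for xi in xs]
    if sigma_inv is None:
        # Assumption for the illustration: uncorrelated unit-variance errors.
        sigma_inv = [[float(i == j) for j in range(n)] for i in range(n)]
    psi_s = matmul(Psi, sigma_inv)
    lhs = matmul(psi_s, Psi)
    lhs = [[lhs[i][j] + n * chi * Psi[i][j] for j in range(n)] for i in range(n)]
    rhs = [sum(psi_s[i][j] * ys[j] for j in range(n)) for i in range(n)]
    return solve(lhs, rhs), Psi


xs = [0.1, 0.35, 0.6, 0.9]   # made-up design points
ys = [1.0, 0.5, -0.2, 0.3]   # made-up responses
chi = 0.05
c_hat, Psi = fit_coefficients(xs, ys, chi)
fitted = [sum(Psi[i][j] * c_hat[j] for j in range(len(xs))) for i in range(len(xs))]
print(c_hat, fitted)
```

With an identity \({\varvec{\varSigma }}^{-1}\) and an invertible \({\varvec{\varPsi }}\), the system reduces to \(\left( {\varvec{\varPsi }}+n\chi {\varvec{I}}\right) \mathbbm {c}=\mathbbm {Y}\), which is better conditioned and would be the natural form to solve in practice.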

Proof

(Proof of Theorem 6) Let \(\chi >0\) be fixed. Then we obtain a unique \(\widehat{f}\) and hence also a unique \(\widehat{\mathbbm {c}}\) according to Theorem 3. By Theorem 3 and the method of Lagrange multipliers, there exists a unique \(L>0\) such that \(\widehat{\mathbbm {c}}\) is also the unique solution of the optimization problem

$$\begin{aligned} \widehat{\mathbbm {c}}=\arg \min _{\mathbbm {c}\in \mathbb {R}^n}\frac{1}{n}\left[ \mathbbm {Y}-{\varvec{\varPsi }}\mathbbm {c}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \mathbbm {Y}-{\varvec{\varPsi }}\mathbbm {c}\right] \quad \text {s.t. }\mathbbm {c}^{\top }{\varvec{\varPsi }}\mathbbm {c}=L. \end{aligned}$$

Let us define

$$\begin{aligned} \widetilde{\varvec{f}}(\mathbbm {x}):= & {} {\varvec{\varXi }}{\varvec{f}}(\mathbbm {x}),\quad \widetilde{\mathbbm {Y}}:={\varvec{\varXi }}\mathbbm {Y},\\ \widehat{\widetilde{\mathbbm {c}}}:= & {} \arg \min _{\widetilde{\mathbbm {c}}\in \mathbb {R}^n}\frac{1}{n}\left[ \widetilde{\mathbbm {Y}}-{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \widetilde{\mathbbm {Y}}-{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\right] \quad \text {s.t.}\ \widetilde{\mathbbm {c}}^{\top }{\varvec{\varPsi }}{\varvec{\varXi }}^{-1}{\varvec{\varPsi }}^{-1}{\varvec{\varXi }}^{-1}{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\le L. \end{aligned}$$

It is easy to verify that \(\widehat{\widetilde{\mathbbm {c}}}={\varvec{\varPsi }}^{-1}{\varvec{\varXi }}{\varvec{\varPsi }}\widehat{\mathbbm {c}}\) and, hence, \(\widehat{\widetilde{\varvec{f}}}(\mathbbm {x})={\varvec{\varXi }}\widehat{\widetilde{\mathbbm {c}}}\). Finally, there must exist \(\widetilde{L}>0\) such that

$$\begin{aligned} \widehat{\widetilde{\mathbbm {c}}}=\arg \min _{\widetilde{\mathbbm {c}}\in \mathbb {R}^n}\frac{1}{n}\left[ \widetilde{\mathbbm {Y}}-{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \widetilde{\mathbbm {Y}}-{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\right] \quad \text {s.t. }\widetilde{\mathbbm {c}}^{\top }{\varvec{\varPsi }}\widetilde{\mathbbm {c}}=\widetilde{L} \end{aligned}$$

and hence this \(\widehat{\widetilde{\mathbbm {c}}}\) has to be the unique solution of the optimization problem

$$\begin{aligned} \widehat{\widetilde{\mathbbm {c}}}=\arg \min _{\widetilde{\mathbbm {c}}\in \mathbb {R}^n}\frac{1}{n}\left[ \widetilde{\mathbbm {Y}}-{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \widetilde{\mathbbm {Y}}-{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\right] \quad \text {s.t. }\widetilde{\mathbbm {c}}^{\top }{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\le \widetilde{L} \end{aligned}$$

since \({\varvec{\varPsi }}\) is a positive definite matrix (\(\widetilde{\mathbbm {c}}^{\top }{\varvec{\varPsi }}\widetilde{\mathbbm {c}}\) is the volume of n-dimensional ellipsoid).

Now, consider the model

$$\begin{aligned} \widetilde{Y_i}=\widetilde{f}(\mathbbm {x}_i)+\widetilde{\varepsilon }_i,\quad \widetilde{\varepsilon }_i\sim iid,\quad i=1,\ldots ,n \end{aligned}$$

with least-squares estimator \(\widehat{\widetilde{f}}\). As in the proof of Lemma 1 in [13], using [9], it can be shown that there exists \(A>0\) such that for \(\delta >0\), we have \(\log N(\delta ;\mathscr {F})<A\delta ^{-q/m}\), where \(N(\delta ;\mathscr {F})\) denotes the minimum number of balls of radius \(\delta \) in sup-norm required to cover the set of functions \(\mathscr {F}\). Consequently, applying [11, Lemma 3.5], we obtain that there exist positive constants \(C_0,K_0\) such that for all \(K>K_0\)

$$\begin{aligned} \mathsf {P}\left[ \sup _{\left\| g\right\| _{Sob,m}^2\le \widetilde{L}}\frac{\sqrt{n}\left| -\frac{2}{n}\sum _{i=1}^n\widetilde{\varepsilon }_i\left( \widetilde{f}(x_i)-g(x_i)\right) \right| }{\left( \frac{1}{n}\sum _{i=1}^n\left( \widetilde{f}(x_i)-g(x_i)\right) ^2\right) ^{\frac{1}{2}-\frac{q}{4m}}}\ge KA^{1/2}\right] \le \exp \left\{ -C_0K^2\right\} . \end{aligned}$$

Since \(\widetilde{f}\in \widetilde{\mathscr {F}}=\left\{ g\in \mathscr {H}^m(\mathscr {Q}^q):\left\| g\right\| _{Sob,m}^2\le \widetilde{L}\right\} \) and \(\widehat{\widetilde{f}}\) minimizes the sum of squared residuals over \(g\in \widetilde{\mathscr {F}}\),

$$\begin{aligned} \frac{1}{n}\sum _{i=1}^n\left[ \widetilde{Y}_i-\widehat{\widetilde{f}}(x_i)\right] ^2&\le \frac{1}{n}\sum _{i=1}^n\left[ \widetilde{Y}_i-g(x_i)\right] ^2,\quad g\in \widetilde{\mathscr {F}}\nonumber \\ \frac{1}{n}\sum _{i=1}^n\left[ \left( \widetilde{f}(x_i)-\widehat{\widetilde{f}}(x_i)\right) +\widetilde{\varepsilon }_i\right] ^2&\le \frac{1}{n}\sum _{i=1}^n\left[ \left( \widetilde{f}(x_i)-g(x_i)\right) +\widetilde{\varepsilon }_i\right] ^2,\quad g\in \widetilde{\mathscr {F}}\nonumber \\&\Downarrow \text { realize that }\widetilde{f}\in \widetilde{\mathscr {F}}\nonumber \\ \frac{1}{n}\sum _{i=1}^n\left( \widetilde{f}(x_i)-\widehat{\widetilde{f}}(x_i)\right) ^2&\le -\frac{2}{n}\sum _{i=1}^n\widetilde{\varepsilon }_i\left( \widetilde{f}(x_i)-\widehat{\widetilde{f}}(x_i)\right) . \end{aligned}$$

(59)

Now combine (8) and (59) to obtain the result that \(\forall K>K_0\)

$$\begin{aligned} \mathsf {P}\left[ \frac{1}{n}\sum _{i=1}^n\left( \widetilde{f}(x_i)-\widehat{\widetilde{f}}(x_i)\right) ^2\ge \left( \frac{K^2A}{n}\right) ^{\frac{2m}{2m+q}}\right] \le \exp \left\{ -C_0K^2\right\} . \end{aligned}$$

Thus,

$$\begin{aligned} \frac{1}{n}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] ^{\top }{\varvec{\varSigma }}^{-1}&\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] \\&\ =\frac{1}{n}\sum _{i=1}^n\left( \widetilde{f}(x_i)-\widehat{\widetilde{f}}(x_i)\right) ^2=\mathscr {O}_{\mathsf {P}}\left( n^{-\frac{2m}{2m+q}}\right) ,\quad n\rightarrow \infty . \end{aligned}$$

\(\square \)

Lemma 1

Suppose \(\left( f_n\right) _{n=1}^{\infty }\) are nonnegative Lipschitz functions on the interval \(\left[ a,b\right] \) with a common Lipschitz constant \(T>0\) for all \(n\in \mathbbm {N}\). If \(f_n\xrightarrow [n\rightarrow \infty ]{\mathsf {L_1}}0\) then

$$ \left\| f_n\right\| _{\infty ,\left[ a,b\right] }:=\sup _{x\in \left[ a,b\right] }\left| f_n(x)\right| \xrightarrow [n\rightarrow \infty ]{}0. $$

Proof

(Proof of Lemma 1) Suppose that

$$\begin{aligned} \exists \rho >0\quad \forall n_0\in \mathbbm {N}\quad \exists n\ge n_0 \quad \exists x\in \left[ a,b\right] \quad f_n(x)\ge \rho . \end{aligned}$$

Then, by the Lipschitz property of each \(f_n\ge 0\), we have for fixed \(\rho ,n_0,n\) and \(x\in \left[ a,b\right] \) that

$$\begin{aligned}&\left\| f_n\right\| _{\mathsf {L_1}\left[ a,b\right] }=\int _a^bf_n(t)\text {d}t\\&\ge \min \left\{ \frac{f_n(x)}{2}(x-a)+\frac{f_n(x)}{2}(b-x),\frac{f_n(x)}{2}(x-a)+\frac{f_n(x)}{2}\frac{f_n(x)}{T},\right. \\&\quad \left. \frac{f_n(x)}{2}\frac{f_n(x)}{T}+\frac{f_n(x)}{2}(b-x),\frac{f_n(x)}{2}\frac{f_n(x)}{T}+\frac{f_n(x)}{2}\frac{f_n(x)}{T}\right\} \\&\ge \min \left\{ \frac{\rho }{2}(b-a),\frac{\rho }{2}(x-a)+\frac{\rho ^2}{2T},\frac{\rho ^2}{2T}+\frac{\rho }{2}(b-x),\frac{\rho ^2}{T}\right\} =:K>0. \end{aligned}$$

But K is a positive constant which does not depend on n and its existence would contradict the assumptions of this lemma, i.e.,

$$ \forall \delta >0\quad \exists n_1\in \mathbbm {N}\quad \forall n\ge n_1\quad \left\| f_n\right\| _{\mathsf {L_1}\left[ a,b\right] }<\delta . $$

\(\square \)

Proof

(Proof of Theorem 7) We divide the proof into two steps.

(i) \(s=0\). The covariance matrix \(\varvec{\varSigma }\) is symmetric and positive definite with equibounded eigenvalues for all n. Hence, it can be decomposed using the Schur decomposition: \( {\varvec{\varSigma }}={\varvec{\varGamma }}{\varvec{\varUpsilon }}{\varvec{\varGamma }}^{\top } \), where \(\varvec{\varGamma }\) is orthogonal and \(\varvec{\varUpsilon }\) is diagonal (with the eigenvalues on its diagonal) such that \(0<\varUpsilon _{ii}\le \vartheta \), \(i=1,\ldots ,n\), \(\forall n\). Hence \({\varvec{\varSigma }}^{-1}={\varvec{\varGamma }}diag\left\{ \varUpsilon _{11}^{-1},\ldots ,\varUpsilon _{nn}^{-1}\right\} {\varvec{\varGamma }}^{\top }\). Then

$$\begin{aligned}&\ \frac{1}{n}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] \nonumber \\&\ge \frac{1}{n}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] ^{\top }{\varvec{\varGamma }}\vartheta ^{-1}{\varvec{I}}{\varvec{\varGamma }}^{\top }\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] =\frac{1}{n\vartheta }\sum _{i=1}^n\left[ \hat{f}(x_i)-f(x_i)\right] ^2. \end{aligned}$$

(60)
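The inequality in (60) can be spelled out as follows. Here \(\varvec{v}:=\widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\) and \(\varvec{w}:={\varvec{\varGamma }}^{\top }\varvec{v}\) are shorthand introduced only for this remark. Using \(0<\varUpsilon _{ii}\le \vartheta \) and \({\varvec{\varGamma }}{\varvec{\varGamma }}^{\top }={\varvec{I}}\),

$$\begin{aligned} \varvec{v}^{\top }{\varvec{\varSigma }}^{-1}\varvec{v}=\sum _{i=1}^n\frac{w_i^2}{\varUpsilon _{ii}}\ge \frac{1}{\vartheta }\sum _{i=1}^n w_i^2=\frac{1}{\vartheta }\varvec{v}^{\top }{\varvec{\varGamma }}{\varvec{\varGamma }}^{\top }\varvec{v}=\frac{1}{\vartheta }\sum _{i=1}^n v_i^2. \end{aligned}$$

Dividing by n gives the right-hand side of (60).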

Let us define \(h_n:=\big |\hat{f}-f\big |\). We know \(\big \Vert \hat{f}\big \Vert _{Sob,m}^2\le L\) for all n and \(\big \Vert f\big \Vert _{Sob,m}^2\le L\). For every function \(t\in \mathscr {H}^m[a,b]\) with \(\big \Vert t\big \Vert _{Sob,m}^2\le L\), it holds that

$$\begin{aligned} \left\| t'\right\| _{\mathsf {L_2}[a,b]}\le \left\| t\right\| _{Sob,1}\le \left\| t\right\| _{Sob,m}\le \sqrt{L}. \end{aligned}$$

(61)
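The chain (61) can be checked directly from the definition of the Sobolev inner product recalled in the proof of Theorem 1, assuming the same definition is used on \([a,b]\) and \(m\ge 1\). Each step only adds non-negative terms:

$$\begin{aligned} \left\| t'\right\| _{\mathsf {L_2}[a,b]}^2\le \left\| t\right\| _{\mathsf {L_2}[a,b]}^2+\left\| t'\right\| _{\mathsf {L_2}[a,b]}^2=\left\| t\right\| _{Sob,1}^2\le \sum _{k=0}^{m}\big \Vert t^{(k)}\big \Vert _{\mathsf {L_2}[a,b]}^2=\left\| t\right\| _{Sob,m}^2\le L. \end{aligned}$$

Taking square roots yields (61).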

Then t has an equibounded derivative, and hence there exists a Lipschitz constant \(T>0\) such that

$$\begin{aligned} \left| t(\xi )-t(\zeta )\right| <T\left| \xi -\zeta \right| ,\quad \xi ,\zeta \in [a,b]. \end{aligned}$$

We easily see

$$\begin{aligned} \frac{\left| h_n(\xi )-h_n(\zeta )\right| }{\left| \xi -\zeta \right| }&=\frac{\left| \big |\hat{f}(\xi )-f(\xi )\big |-\big |\hat{f}(\zeta )-f(\zeta )\big |\right| }{\left| \xi -\zeta \right| }\\&\le \frac{\left| \big [\hat{f}(\xi )-f(\xi )\big ]-\big [\hat{f}(\zeta )-f(\zeta )\big ]\right| }{\left| \xi -\zeta \right| }\\&\le \frac{\big |\hat{f}(\xi )-\hat{f}(\zeta )\big |+\big |f(\xi )-f(\zeta )\big |}{\left| \xi -\zeta \right| }<2T,\quad \xi ,\zeta \in [a,b]. \end{aligned}$$

Since \(h_n\) is a \(2T\)-Lipschitz function for all n and

$$\begin{aligned} \left\| h_n\right\| _{\mathsf {L_2}[a,b]}=\big \Vert \hat{f}-f\big \Vert _{\mathsf {L_2}[a,b]}\le \big \Vert \hat{f}-f\big \Vert _{Sob,1}&\le \big \Vert \hat{f}-f\big \Vert _{Sob,m}\\&\!\!\!\!\!\! \le \big \Vert \hat{f}\big \Vert _{Sob,m}+\big \Vert f\big \Vert _{Sob,m}\le 2\sqrt{L},\quad \forall n, \end{aligned}$$

we obtain that the \(h_n\) are equibounded, i.e., there is a positive constant M such that \(\left\| h_n\right\| _{\infty ,[a,b]}\le M,\ \forall n\). Hence, \(h_n^2\) is also a Lipschitz function for all n, because for \(\xi ,\zeta \in [a,b]\), \(\xi \ne \zeta \),

$$\begin{aligned} \frac{\left| h_n^2(\xi )-h_n^2(\zeta )\right| }{\left| \xi -\zeta \right| }=\frac{\left| h_n(\xi )-h_n(\zeta )\right| }{\left| \xi -\zeta \right| }&\left[ h_n(\xi )+h_n(\zeta )\right] \\&\!\! \le 2T\times 2\left\| h_n\right\| _{\infty ,[a,b]}\le 4MT=:U>0,\quad \forall n. \end{aligned}$$

Since \(h_n^2\) is a U-Lipschitz function for all n and the design points \((x_i)_{i=1}^n\) are equidistant on [a, b], we can write

$$\begin{aligned} \int _a^b h_n^2(u)\text {d}u&\le \sum _{i=1}^{n-1}\frac{x_{i+1}-x_i}{2}\left\{ h_n^2(x_i)+\left[ h_n^2(x_i)+U(x_{i+1}-x_i)\right] \right\} \nonumber \\&\le \frac{1}{2n}\left[ 2\sum _{i=1}^{n-1}h_n^2(x_i)+U(b-a)\right] \le \frac{1}{n}\sum _{i=1}^n h_n^2(x_i)+\frac{U(b-a)}{2n}. \end{aligned}$$

(62)
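A sketch of where each summand in the first line of (62) comes from, assuming \(x_1=a\) and \(x_n=b\) so that the subintervals \([x_i,x_{i+1}]\) cover \([a,b]\): the U-Lipschitz property gives \(h_n^2(u)\le h_n^2(x_i)+U(u-x_i)\) for \(u\in [x_i,x_{i+1}]\), and integrating this linear majorant yields

$$\begin{aligned} \int _{x_i}^{x_{i+1}}h_n^2(u)\text {d}u&\le (x_{i+1}-x_i)h_n^2(x_i)+\frac{U}{2}(x_{i+1}-x_i)^2\\&=\frac{x_{i+1}-x_i}{2}\left\{ h_n^2(x_i)+\left[ h_n^2(x_i)+U(x_{i+1}-x_i)\right] \right\} . \end{aligned}$$

Summing over \(i=1,\ldots ,n-1\) gives the first inequality in (62).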

According to Theorem 6,

$$\begin{aligned} \forall \rho>0\quad \mathsf {P}\left\{ \frac{1}{n}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] >\rho \right\} \xrightarrow [n\rightarrow \infty ]{}0, \end{aligned}$$

that is,

$$\begin{aligned} \forall \rho>0\quad \forall \delta>0\quad&\exists n_0\in \mathbbm {N}\quad \forall n\ge n_0:\nonumber \\&\mathsf {P}\left\{ \frac{1}{n}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] >\rho \right\} <\delta . \end{aligned}$$

(63)

Let us fix an arbitrary \(\rho >0\) and \(\delta >0\). Next, we fix \(n_0:=\Bigg \lceil \frac{U}{\rho ^2}\Bigg \rceil \) and for all \(n\ge n_0\) we can write

$$\begin{aligned} \delta&>\mathsf {P}\left\{ \frac{1}{n}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right] ^{\top }{\varvec{\varSigma }}^{-1}\left[ \widehat{\varvec{f}}(\mathbbm {x})-{\varvec{f}}(\mathbbm {x})\right]>\frac{\rho ^2(b-a)}{2\vartheta }\right\}&\text {by (63)}\nonumber \\&\ge \mathsf {P}\left\{ \frac{1}{n}\sum _{i=1}^n\left[ \hat{f}(x_i)-f(x_i)\right] ^2>\frac{\rho ^2(b-a)}{2}\right\}&\text {by (60)} \nonumber \\&\ge \mathsf {P}\left\{ \left\| h_n\right\| _{\mathsf {L_2}[a,b]}^2>\underbrace{\frac{\rho ^2(b-a)}{2}+\frac{U(b-a)}{2n}}_{\tilde{\rho }}\right\}&\text {by (62)} \nonumber \\&\ge \mathsf {P}\left\{ \left\| h_n\right\| _{\mathsf {L_1}[a,b]}>\frac{\sqrt{\tilde{\rho }}}{\left\| 1\right\| _{\mathsf {L_2}[a,b]}}\right\} \ge \mathsf {P}\left\{ \left\| h_n\right\| _{\mathsf {L_1}[a,b]}>\rho \right\}&\!\!\!\text {Cauchy--Schwarz}. \end{aligned}$$

Thus, \(\left\| h_n\right\| _{\mathsf {L_1}[a,b]}\xrightarrow [n\rightarrow \infty ]{\mathsf {P}}0\). According to Lemma 1 and the fact that almost sure convergence implies convergence in probability, we have

$$\begin{aligned} \sup _{x\in \left[ a,b\right] }\left| \widehat{f}(x)-f(x)\right| \xrightarrow [n\rightarrow \infty ]{\mathsf {P}}0. \end{aligned}$$

(ii) \(s\ge 1\). If \(m=2\), we are done. Let \(g_n:=\widehat{f}-f\). According to the assumptions of our model, \(g_n\in \mathscr {H}^m[a,b]\). By [13, Theorem 2.3], all functions in the estimating set have derivatives up to order \(m-1\) uniformly bounded in sup-norm. In particular, for \(m\ge 3\), the second derivatives \(g''_n\) are uniformly bounded in sup-norm:

$$\begin{aligned} \exists M>0 \quad \forall n\in \mathbb {N} \quad \left\| g''_n\right\| _{\infty ,[a,b]}<M. \end{aligned}$$

Fix such an \(M>0\). For any fixed \(\rho >0\), define \(\tilde{\rho }:=M\rho \). Then there exists \(n_0\in \mathbb {N}\) such that, for all \(n\ge n_0\), there is an interval \([c_n,d_n]\subset [a,b]\) with

$$ \begin{aligned} g'_n(c_n)=g'_n(d_n)=\tilde{\rho }\quad \& \quad g'_n(\xi )>\tilde{\rho },\,\xi \in (c_n,d_n) \end{aligned}$$

because \(g'_n\) is continuous on \([c_n,d_n]\) (drawing a picture is helpful). If such \([c_n,d_n]\) does not exist, the proof is finished.

Otherwise, there exists \(n_1\ge n_0\) such that for all \(n\ge n_1\)

$$\begin{aligned} \left| \tilde{\rho }(d_n-c_n)\right| \le \left| \int _{c_n}^{d_n}g'_n(\xi )\text {d}\xi \right| =\left| g_n(d_n)-g_n(c_n)\right| \le 2\rho ^2 \end{aligned}$$

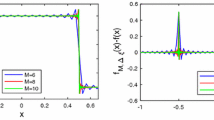

because \(g_n\xrightarrow {n\rightarrow \infty }0\) uniformly in sup-norm on the interval [a, b]. Hence, \(\left| d_n-c_n\right| \le \frac{2\rho }{M}\). The uniform boundedness of \(g''_n\) implies the Lipschitz property of \(g'_n\) (see Fig. 6):

$$\begin{aligned} \left| g'_n(x)\right| \le \left| \tilde{\rho }+M\frac{d_n-c_n}{2}\right| \le M\rho +M\frac{\rho }{M}\le \rho (M+1). \end{aligned}$$
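In more detail, for \(x\in [c_n,d_n]\) let \(e\in \{c_n,d_n\}\) denote the endpoint nearer to x (the symbol e is introduced only for this remark), so that \(\left| x-e\right| \le \frac{d_n-c_n}{2}\) and \(g'_n(e)=\tilde{\rho }\). With \(\left\| g''_n\right\| _{\infty ,[a,b]}<M\) and \(\left| d_n-c_n\right| \le \frac{2\rho }{M}\), the bound can be sketched as

$$\begin{aligned} \left| g'_n(x)\right| \le \left| g'_n(e)\right| +\left| g'_n(x)-g'_n(e)\right| \le \tilde{\rho }+M\left| x-e\right| \le M\rho +M\cdot \frac{1}{2}\cdot \frac{2\rho }{M}=\rho (M+1). \end{aligned}$$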

We can continue in this way finitely many times (formally, we can proceed by a finite induction). In fact, if the \((m-1)\)-th derivatives are uniformly bounded (\(g_n\in \mathscr {H}^m[a,b]\)), then this ensures that \(\widehat{f}^{(s)}\) for \(s\le m-2\) converges in sup-norm. Finally, recall that almost sure convergence implies convergence in probability, and that each sequence converging in probability has a subsequence that converges almost surely. \(\square \)

Proof

(Proof of Theorem 8) The proof is very similar to the proof of the Infinite to Finite Theorem 3 and the same arguments can be used. Each \(f,g\in \mathscr {H}^m\) can be written in the form:

$$\begin{aligned} f= & {} \sum _{\left\{ i\,|\,n_{i}\ge 1\right\} }c_i\psi _{x_i}+h_f,\quad h_f\in \left\{ span\left\{ \psi _{\mathbbm {x}_i}:\,n_{i}\ge 1\right\} \right\} ^{\perp },\\ g= & {} \sum _{\left\{ j\,|\,m_{j}\ge 1\right\} }d_j\phi _{x_j}+h_g,\quad h_g\in \left\{ span\left\{ \phi _{\mathbbm {x}_j}:\,m_{j}\ge 1\right\} \right\} ^{\perp }. \end{aligned}$$

For \(1\le \iota \le n\), we easily note that

$$\begin{aligned}&\left[ \left( \begin{array}{c}\mathbbm {Y}\\ \mathbbm {Z} \end{array}\right) - \left( \begin{array}{cc} {\varvec{\varDelta }} &{} {\varvec{0}}\\ {\varvec{0}} &{} {\varvec{\varTheta }} \end{array}\right) \left( \begin{array}{c} \varvec{f}\left( \mathbbm {x}_{\alpha }\right) \\ \varvec{g}\left( \mathbbm {x}_{\beta }\right) \end{array}\right) \right] _{\iota } =Y_{\iota }-\left\{ \sum _{\left\{ i\,|\,n_{i}\ge 1\right\} }\varDelta _{\iota i}f(x_i)+\sum _{\left\{ i\,|\,m_{i}\ge 1\right\} }\varTheta _{\iota i}g(x_i)\right\} \\&=Y_{\iota }-\sum _{\left\{ i\,|\,n_{i}\ge 1\right\} }\varDelta _{\iota i}\left\langle \psi _{x_i},\sum _{\left\{ j\,|\,n_{j}\ge 1\right\} }c_j\psi _{x_j}+h_f\right\rangle _{Sob,m}\\&\quad -\sum _{\left\{ i\,|\,m_{i}\ge 1\right\} }\varTheta _{\iota i}\left\langle \phi _{x_i},\sum _{\left\{ j\,|\,m_{j}\ge 1\right\} }d_j\phi _{x_j}+h_g\right\rangle _{Sob,m}\\&=Y_{\iota }-\sum _{\left\{ i\,|\,n_{i}\ge 1\right\} }\varDelta _{\iota i}\sum _{\left\{ j\,|\,n_{j}\ge 1\right\} }\varPsi _{ij}c_j-\sum _{\left\{ i\,|\,m_{i}\ge 1\right\} }\varTheta _{\iota i}\sum _{\left\{ j\,|\,m_{j}\ge 1\right\} }\varPhi _{ij}d_j\\&=\left[ \left( \begin{array}{c} \mathbbm {Y}\\ \mathbbm {Z} \end{array}\right) - \left( \begin{array}{cc} {\varvec{\varDelta }} &{} {\varvec{0}}\\ {\varvec{0}} &{} {\varvec{\varTheta }} \end{array}\right) \left( \begin{array}{cc} {\varvec{\varPsi }} &{} {\varvec{0}}\\ {\varvec{0}} &{} {\varvec{\varPhi }} \end{array}\right) \left( \begin{array}{c} \mathbbm {c}\\ \mathbbm {d} \end{array}\right) \right] _{\iota }. \end{aligned}$$

We can proceed in the same way also for \(n<\iota \le n+m\).
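The passage from the inner products to the matrices \({\varvec{\varPsi }}\) and \({\varvec{\varPhi }}\) in the display above rests on linearity and orthogonality. As a sketch, assuming (as in Theorem 3, whose statement lies outside this appendix) that \(\varPsi _{ij}\) denotes \(\left\langle \psi _{x_i},\psi _{x_j}\right\rangle _{Sob,m}\):

$$\begin{aligned} \left\langle \psi _{x_i},\sum _{\left\{ j\,|\,n_{j}\ge 1\right\} }c_j\psi _{x_j}+h_f\right\rangle _{Sob,m}=\sum _{\left\{ j\,|\,n_{j}\ge 1\right\} }c_j\left\langle \psi _{x_i},\psi _{x_j}\right\rangle _{Sob,m}+\left\langle \psi _{x_i},h_f\right\rangle _{Sob,m}=\sum _{\left\{ j\,|\,n_{j}\ge 1\right\} }\varPsi _{ij}c_j, \end{aligned}$$

where the term with \(h_f\) vanishes because \(h_f\) is orthogonal to the span of the representors. The same computation with \(\phi _{x_i}\), \(d_j\) and \(h_g\) gives the \({\varvec{\varPhi }}\) term.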

Finally, it remains to rewrite the constraints using (2) from Theorem 1:

$$\begin{aligned} f'(x_{\iota })=\left\langle \psi _{x_{\iota }},\sum _{\left\{ i\,|\,n_{i}\ge 1\right\} }c_i\psi '_{x_i}+h_f\right\rangle _{Sob,m}=\left[ {\varvec{\varPsi }}^{(1)}\mathbbm {c}\right] _{\iota }\quad \forall \iota :n_{\iota }\ge 1. \end{aligned}$$

Similarly, we obtain \( g'(x_{\iota })=\left[ {\varvec{\varPhi }}^{(1)}\mathbbm {d}\right] _{\iota }\ \forall \iota :m_{\iota }\ge 1\); \(f''(x_{\iota })=\left[ {\varvec{\varPsi }}^{(2)}\mathbbm {c}\right] _{\iota }\ \forall \iota :n_{\iota }\ge 1\); and \(g''(x_{\iota })=\left[ {\varvec{\varPhi }}^{(2)}\mathbbm {d}\right] _{\iota }\ \forall \iota :m_{\iota }\ge 1\). \(\square \)