Abstract

The visual appearance of a person is easily affected by many factors, such as pose variations, viewpoint changes and camera parameter differences. This makes person Re-Identification (ReID) across multiple cameras a very challenging task. This work aims to learn mid-level human attributes that are robust to such visual appearance variations. We propose a semi-supervised attribute learning framework that progressively boosts the accuracy of attributes using only a limited amount of labeled data. Specifically, this framework involves three stages of training. A deep Convolutional Neural Network (dCNN) is first trained on an independent dataset labeled with attributes. It is then fine-tuned on another dataset labeled only with person IDs, using our defined triplet loss. Finally, the updated dCNN predicts attribute labels for the target dataset, which is combined with the independent dataset for a final round of fine-tuning. The predicted attributes, namely deep attributes, exhibit superior generalization ability across different datasets. By directly using the deep attributes with a simple Cosine distance, we obtain surprisingly good accuracy on four person ReID datasets. Experiments also show that a simple distance metric learning module further boosts our method, making it significantly outperform many recent works.




1 Introduction

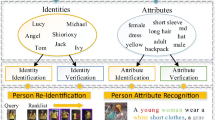

Person Re-Identification (ReID) aims to identify the same person across different cameras, datasets, or time stamps. As illustrated in Fig. 1, factors like viewpoint variations, illumination conditions, camera parameter differences, and body pose changes make person ReID a very challenging task. Due to its important applications in public security, e.g., cross-camera pedestrian searching, tracking, and event detection, person ReID has attracted much attention from both the academic and industrial communities. Current research on this topic mainly focuses on two aspects: (a) extracting and coding local invariant features to represent the visual appearance of a person [1–7] and (b) learning a discriminative distance metric so that features from the same person have a smaller distance [8–25].

Although previous studies have made significant progress, person ReID methods are still not mature enough for real applications. Local features mostly describe low-level visual appearance and hence are not robust to variations in viewpoint, body pose, etc. On the other hand, distance metric learning suffers from poor generalization ability and quadratic computational complexity; e.g., different datasets present different visual characteristics and thus call for different metrics. Compared with low-level visual features, human attributes like long hair, blue shirt, etc., represent mid-level semantics of a person. As illustrated in Fig. 1, attributes are more consistent for the same person and more robust to the above-mentioned variations. Some recent works have hence started to use attributes for person ReID [29–34]. Because human attributes are expensive to annotate manually, it is difficult to acquire enough training data for a large set of attributes, which limits the performance of current attribute features. Consequently, low-level visual features still play a key role, and attributes are mostly used as auxiliary features [31–34].

Recently, deep learning has exhibited promising performance and generalization ability in various visual tasks. For example, in [35], an eight-layer deep Convolutional Neural Network (dCNN) is trained with large-scale images for visual classification. Modified versions of this network also perform impressively in object detection [36] and segmentation [37]. Motivated by the limitations of low-level visual features and the success of dCNNs, our work aims to learn a dCNN that detects a large set of human attributes discriminative enough for person ReID. Due to the diversity and complexity of human attributes, manually labeling enough attributes for dCNN training is laborious. The key issues are hence how to train this dCNN from a partially labeled dataset while ensuring its discriminative power and generalization ability in person ReID tasks.

To address these issues, we propose a Semi-supervised Deep Attribute Learning (SSDAL) algorithm. As illustrated in Fig. 2, this algorithm involves three stages. The first stage uses an independent dataset with attribute labels to perform fully-supervised dCNN training. The resulting dCNN produces initial attribute labels for the target dataset. To improve the discriminative power of these attributes for the ReID task, the second stage fine-tunes the network using person ID labels and our defined attributes triplet loss. Training data for this fine-tuning are easy to collect, because person ID labels are readily available in many person tracking datasets. The attributes triplet loss updates the network so that the same person has more similar attributes and different persons have less similar ones. The fine-tuned dCNN then predicts attribute labels for the target dataset. Finally, in the third stage, the labeled target dataset and the original independent dataset are combined for the final stage of fine-tuning. The attributes predicted by the final dCNN model are named deep attributes. In this manner, the dCNN is first trained with the independent dataset and then refined to acquire more discriminative power for the person ReID task. Because this procedure involves one dataset with attribute labels and another without, we call it semi-supervised learning.

To validate the performance of deep attributes, we test them on four popular person ReID datasets without combining them with local visual features. The experimental results show that deep attributes perform impressively, e.g., they significantly outperform many recent works that combine both attributes and local features [31–34]. Note that predicting and matching deep attributes makes the person ReID system significantly faster, because it no longer needs to extract and code local features, compute a distance metric, or fuse with other features.

Our contributions can be summarized as follows: (1) we propose a three-stage semi-supervised deep attribute learning algorithm, which makes it possible to learn a large set of human attributes from a limited amount of attribute-labeled data; (2) deep attributes achieve promising performance and generalization ability on four person ReID datasets; and (3) deep attributes remove the previous dependence on local features, thus making the person ReID system more robust and efficient. To the best of our knowledge, this is an original work that predicts human attributes using a dCNN for person ReID tasks. The promising results of this work warrant further investigation in this direction.

2 Related Work

This work learns a dCNN for attribute prediction and person ReID. It is closely related to works using deep learning for attribute prediction and person ReID.

Many studies have applied deep learning to attribute learning [38, 39]. Shankar et al. [38] propose a deep-carving neural net to learn attributes for natural scene images. Chen et al. [39] use a double-path deep domain adaptation network to obtain fine-grained clothing attributes. Our work differs from them in both motivation and methodology. We are motivated by the problem of learning attributes of humans cropped from surveillance videos when only a small set of data is labeled with attributes. Our semi-supervised learning framework consistently boosts the discriminative power of the dCNN and of the attributes for person ReID.

Inspired by the promising performance of deep learning, some researchers have begun to use it to learn visual features and distance metrics for person ReID [24, 40–42]. In [40], Li et al. use a deep filter pairing neural network for person ReID, where two paired filters of two cameras are used to automatically learn optimal features. In [41], Yi et al. present a “siamese” convolutional network for deep distance metric learning. In [42], Ahmed et al. devise a deep neural network structure that transforms person re-identification into a binary classification problem, i.e., judging whether a pair of images from two cameras shows the same person. In [24], Ding et al. present a scalable distance learning framework based on a deep neural network with the triplet loss. Despite their efforts to find better visual features and distance metrics, these works are designed for specific datasets and depend on their camera settings. In contrast, we use deep learning to acquire general, camera-independent mid-level representations. As a result, our algorithm is more flexible, e.g., it can handle person ReID tasks on datasets containing different numbers of cameras.

Some recent works also use the triplet loss for person ReID [19, 43]. Our work uses an attributes triplet loss for dCNN fine-tuning, whereas these works use the triplet loss to learn a distance metric on low-level features. Therefore, these works also suffer from low flexibility and quadratic complexity.

3 Proposed Approach

3.1 Framework

Our goal is to learn a large set of human attributes for person ReID through dCNN training. We define \(A = \{a_1,a_2,...,a_K\}\) as an attribute label containing K attributes, where \(a_i\in \{0,1\}\) is the binary indicator of the i-th attribute. Our goal is hence to learn an attribute detector \(\mathcal {O}\), which predicts the attribute label \(A_I\) for any input image I, i.e.,

\[A_I = \mathcal {O}(I). \tag{1}\]

Because of its promising discriminative power and generalization ability, we use a dCNN model as the detector \(\mathcal {O}(\cdot )\). However, dCNN training requires large-scale training data labeled with human attributes, and collecting such data manually is prohibitively expensive. To learn an effective dCNN model for person ReID from only a small amount of labeled training data, we propose the Semi-supervised Deep Attribute Learning (SSDAL) algorithm.

As illustrated in Fig. 2, the basic idea of SSDAL is to first train an initial dCNN on an independent dataset labeled with attributes. The limited scale and label accuracy of the independent dataset motivate a second stage of training, which uses easily acquired person ID labels to refine the initial dCNN. The updated dCNN then labels the target dataset by predicting its attributes. Finally, the independent dataset and the newly labeled target dataset are combined for a final stage of fine-tuning. In the following, we introduce the three stages of training in detail.

3.2 Fully-Supervised dCNN Training

We define the independent training set with attribute labels as \(T=\{t_1,t_2,...,t_N\}\), where N is the number of samples. In T, each sample is labeled with a binary attribute label, i.e., the label of the n-th instance \(t_n\) is \(A_n\).

In the first stage of training, we use T for fully-supervised learning. We follow AlexNet [35] to build our dCNN model because of its promising performance in various vision tasks. Specifically, our dCNN is also an 8-layer network with 5 convolutional layers and 3 fully connected layers, where the 3rd fully connected layer predicts the attribute labels. The kernel and filter sizes of each layer are the same as those in [35, 38]. The only difference from AlexNet is that we replace the softmax loss layer with a sigmoid cross-entropy loss layer, which performs better for multi-label prediction. We denote the dCNN model learned in this stage as \(\mathcal {O}^{S1}\). \(\mathcal {O}^{S1}\) can predict attribute labels for any test sample. However, as shown in our experiments, its discriminative power is weak because of the limited scale and label accuracy of the independent training set. We therefore introduce a second stage of training.
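
Section 3.2 replaces AlexNet's softmax loss with a sigmoid cross-entropy loss because one image can carry many positive attributes. The plain-Python sketch below computes that loss for a single image. It assumes the last fully connected layer emits one raw score (logit) per attribute, and the function name is illustrative rather than taken from the authors' code. Unlike softmax, each attribute is scored as an independent binary problem, so several attributes can be positive at once.

```python
import math


def sigmoid_cross_entropy(logits, labels):
    """Mean sigmoid cross-entropy over K independent binary attributes.

    logits: K raw scores from the last fully connected layer (assumed input).
    labels: K binary indicators a_i in {0, 1}.
    """
    if len(logits) != len(labels):
        raise ValueError("logits and labels must have the same length")
    total = 0.0
    for x, y in zip(logits, labels):
        # Numerically stable form of
        # -[y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))]
        total += max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))
    return total / len(logits)


# Two attributes, both scored 0 (probability 0.5): the loss is log(2) per attribute.
print(sigmoid_cross_entropy([0.0, 0.0], [1, 0]))
```

The stable formulation avoids overflow in `exp` for large negative logits, which a direct `log(sigmoid(x))` would not.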

3.3 dCNN Fine-Tuning with Attributes Triplet Loss

In the second stage, a larger dataset is used to fine-tune the previous dCNN model \(\mathcal {O}^{S1}\). Because the goal of our dCNN model is to predict attribute labels for person ReID, the predicted attribute labels should be similar for the same person. Motivated by this, we use person ID labels to fine-tune \(\mathcal {O}^{S1}\) so that it produces similar attribute labels for the same person and dissimilar ones for different persons. We denote the dataset with person ID labels as \(U=\{u_1,u_2,...,u_M\}\), where M is the number of samples and each sample has a person ID label l, i.e., the m-th instance \(u_m\) has person ID \(l_m\).

In the second stage of training, we first use \(\mathcal {O}^{S1}\) to predict the attribute label \(\tilde{A}\) of each sample in U. For the attribute label \(\tilde{A}_m\) of the m-th sample, we set the indicators of the p attributes with the highest confidence scores to 1 and the others to 0. Note that p can be chosen according to the average number of positive attributes in person ReID tasks; it is experimentally set to 10 in this paper. We then use the person ID labels to measure the annotation errors of \(\mathcal {O}^{S1}\).
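
The top-p initialization of \(\tilde{A}_m\) can be sketched as follows. This is a minimal illustration assuming the K confidence scores of one sample are available as a Python list; the tie-breaking rule is our own assumption, since the text does not specify one.

```python
def top_p_binarize(scores, p=10):
    """Set the indicators of the p highest-scoring attributes to 1, others to 0.

    scores: K confidence scores predicted by O^S1 for one sample of U.
    Ties are broken in favor of the lower attribute index (an arbitrary choice).
    """
    order = sorted(range(len(scores)), key=lambda i: (-scores[i], i))
    keep = set(order[:p])
    return [1 if i in keep else 0 for i in range(len(scores))]


print(top_p_binarize([0.9, 0.1, 0.5, 0.7], p=2))  # [1, 0, 0, 1]
```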

The annotation error of \(\mathcal {O}^{S1}\) is computed over triplets of samples, which are randomly selected from U as follows: (1) select an anchor sample \(u_{(a)}\), (2) select a positive sample \(u_{(p)}\) with the same person ID as \(u_{(a)}\), and (3) select a negative sample \(u_{(n)}\) with a different person ID. This yields a triplet \([u_{(a)}, u_{(p)}, u_{(n)}]\), where the subscripts (a), (p), and (n) denote the anchor, positive, and negative samples, respectively. At the beginning of fine-tuning, the attributes of the e-th triplet predicted by \(\mathcal {O}^{S1}\) are \(\tilde{A}_{(a)}^{(e)}\), \(\tilde{A}_{(p)}^{(e)}\), and \(\tilde{A}_{(n)}^{(e)}\), respectively.
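
The three-step random triplet construction can be sketched as below. The sketch assumes the person IDs of U are stored in a list indexed by sample. Restricting anchors to persons with at least two samples is our assumption, needed so that a positive sample exists, and `sample_triplet` is a hypothetical helper.

```python
import random
from collections import defaultdict


def sample_triplet(person_ids, rng=random):
    """Randomly draw (anchor, positive, negative) sample indices from U.

    person_ids: list where person_ids[m] is the ID label l_m of sample u_m.
    Assumes at least one person has two or more samples and that at least
    two distinct IDs exist.
    """
    by_id = defaultdict(list)
    for m, label in enumerate(person_ids):
        by_id[label].append(m)
    # (1) anchor: drawn from a person with at least two samples (assumption)
    eligible = [label for label, idx in by_id.items() if len(idx) >= 2]
    anchor_id = rng.choice(eligible)
    # (2) positive: another sample with the same person ID
    a, p = rng.sample(by_id[anchor_id], 2)
    # (3) negative: any sample with a different person ID
    neg_id = rng.choice([label for label in by_id if label != anchor_id])
    n = rng.choice(by_id[neg_id])
    return a, p, n


ids = [7, 7, 7, 3, 3, 5]
print(sample_triplet(ids, random.Random(0)))
```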

The objective of fine-tuning is to minimize the triplet loss by updating \(\mathcal {O}^{S1}\), i.e., to minimize the distance between the attributes of \(u_{(a)}\) and \(u_{(p)}\) while maximizing the distance between the attributes of \(u_{(a)}\) and \(u_{(n)}\). We call this triplet loss the attributes triplet loss. The objective function for fine-tuning can hence be formulated as:

\[\mathbf {D}\left( A_{(a)}^{(e)}, A_{(p)}^{(e)}\right) < \mathbf {D}\left( A_{(a)}^{(e)}, A_{(n)}^{(e)}\right) , \tag{2}\]

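where \(\mathbf {D}(\cdot )\) denotes the distance between two binary attribute vectors, and \(A_{(a)}^{(e)}\), \(A_{(p)}^{(e)}\) and \(A_{(n)}^{(e)}\) are the attributes of the e-th triplet predicted during fine-tuning. The corresponding loss function can then be formulated as:

\[\mathcal {L} = \sum _{e=1}^{E} \max \left( 0,\ \theta + \mathbf {D}\left( A_{(a)}^{(e)}, A_{(p)}^{(e)}\right) - \mathbf {D}\left( A_{(a)}^{(e)}, A_{(n)}^{(e)}\right) \right) , \tag{3}\]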

where E is the number of triplets. In Eq. (3), if \(\mathbf {D}\left( A_{(a)}^{(e)},A_{(n)}^{(e)}\right) -\mathbf {D}\left( A_{(a)}^{(e)}, A_{(p)}^{(e)}\right) \) is larger than \(\theta \), the loss of the e-th triplet is zero. The margin \(\theta \) therefore largely controls the strictness of the loss.
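
A minimal sketch of the hinge in Eq. (3) follows. The text does not fix the distance \(\mathbf {D}\), so this sketch assumes the Euclidean distance. It operates on plain lists rather than network outputs, so it illustrates the loss value only, not back-propagation.

```python
import math


def euclidean(u, v):
    """Assumed choice of D(.): Euclidean distance between attribute vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def attributes_triplet_loss(triplets, theta=1.0, dist=euclidean):
    """Sum over triplets of max(0, theta + D(A_a, A_p) - D(A_a, A_n)).

    triplets: iterable of (A_a, A_p, A_n) attribute vectors.
    A triplet contributes zero once D(A_a, A_n) - D(A_a, A_p) >= theta.
    """
    loss = 0.0
    for a, p, n in triplets:
        loss += max(0.0, theta + dist(a, p) - dist(a, n))
    return loss


easy = ([1, 0, 0, 0], [1, 0, 0, 0], [0, 1, 1, 1])  # margin already satisfied
hard = ([1, 0], [0, 1], [1, 0])                     # negative closer than positive
print(attributes_triplet_loss([easy, hard]))
```

In the example, the first triplet has \(\mathbf {D}(a,n) - \mathbf {D}(a,p) = 2 > \theta\) and contributes nothing, while the second is penalized by \(1 + \sqrt{2}\).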

The above loss function essentially forces the dCNN to produce similar attributes for the same person. However, person ID labels alone are too weak to train the dCNN to produce accurate attributes. Without proper constraints, the above loss function may generate meaningless attribute labels and easily over-fit the training dataset U. For example, assigning a large number of meaningless attributes to two samples of the same person may decrease the distance between their attribute labels, but it does not improve the discriminative power of the dCNN. Therefore, we add several regularization terms and modify the original loss function as:

\[\mathcal {L} = \sum _{e=1}^{E} \max \left( 0,\ \theta + \mathbf {D}\left( A_{(a)}^{(e)}, A_{(p)}^{(e)}\right) - \mathbf {D}\left( A_{(a)}^{(e)}, A_{(n)}^{(e)}\right) \right) + \gamma \, \mathcal {E}, \tag{4}\]

where \(\mathcal {E}\) denotes the amount of change in attributes caused by fine-tuning, and \(\gamma \) weights this term. The loss in Eq. (4) not only ensures that the same person has similar attributes, but also avoids meaningless attributes. We use this loss to update \(\mathcal {O}^{S1}\) with back-propagation and denote the resulting updated dCNN as \(\mathcal {O}^{S2}\).
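
The regularized loss in Eq. (4) can be sketched the same way. The exact definition of \(\mathcal {E}\) is not spelled out here, so this sketch assumes, purely for illustration, that \(\mathcal {E}\) sums the distances between each sample's current attributes and its initial attributes \(\tilde{A}\) predicted by \(\mathcal {O}^{S1}\). The Euclidean \(\mathbf {D}\) is likewise an assumption.

```python
import math


def euclidean(u, v):
    """Assumed D(.): Euclidean distance between attribute vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def regularized_triplet_loss(current, initial, theta=1.0, gamma=0.01,
                             dist=euclidean):
    """Triplet hinge loss plus gamma * E, with a hypothetical choice of E.

    current: list of (A_a, A_p, A_n) predicted by the network being fine-tuned.
    initial: list of (~A_a, ~A_p, ~A_n) predicted by O^S1 before fine-tuning.
    ASSUMPTION: E is the total distance between each sample's current and
    initial attributes, penalizing drift away from the O^S1 predictions.
    """
    hinge, change = 0.0, 0.0
    for (a, p, n), (a0, p0, n0) in zip(current, initial):
        hinge += max(0.0, theta + dist(a, p) - dist(a, n))
        change += dist(a, a0) + dist(p, p0) + dist(n, n0)
    return hinge + gamma * change
```

With \(\gamma = 0.01\) (the value used in Sect. 4.2), drifting one attribute of the anchor away from its initial prediction costs 0.01 under this assumed \(\mathcal {E}\).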

3.4 Fine-Tuning on the Combined Dataset

The fine-tuning in the previous stage produces more accurate attribute labels. We therefore combine T and U for a final round of fine-tuning. As illustrated in Fig. 2, in the third stage we first predict the attribute labels of dataset U with \(\mathcal {O}^{S2}\). Merging T and the newly labeled U yields a larger dataset with attribute labels. We then fine-tune \(\mathcal {O}^{S2}\) with the sigmoid cross-entropy loss on the combined dataset \( T \& U\), which outputs the final attribute detector \(\mathcal {O}\).
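
Assembling the stage-3 training set amounts to pseudo-labeling U and concatenating the result with T. A minimal sketch follows, where `predict_o_s2` is a hypothetical stand-in for \(\mathcal {O}^{S2}\) that returns a binary attribute vector.

```python
def build_combined_set(T, U_images, predict_o_s2):
    """Stage-3 data: manually labeled T plus U labeled by O^S2.

    T: list of (image, attribute_vector) pairs with manual attribute labels.
    U_images: images of U, which originally carry only person ID labels.
    predict_o_s2: hypothetical callable mapping an image to binary attributes.
    """
    pseudo_labeled = [(img, predict_o_s2(img)) for img in U_images]
    return list(T) + pseudo_labeled


combined = build_combined_set([("t1", [1, 0])], ["u1", "u2"], lambda img: [0, 1])
print(len(combined))
```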

For any test image, we predict its K-dimensional attribute label with Eq. (1). In our implementation, only attributes whose confidence values predicted by \(\mathcal {O}\) exceed a threshold are selected as positive; the threshold is experimentally set to 0. This keeps only the more reliably predicted attributes. As a result, \(\mathcal {O}\) produces a sparse binary K-dimensional attribute vector. Our person ReID system uses this binary vector as the feature and compares vectors with the Cosine distance to identify the same person. The validity of this three-stage training procedure and the performance of the selected attributes are evaluated in Sect. 4.
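
Test-time feature extraction and matching can be sketched as below. This assumes the confidence values are raw pre-sigmoid outputs, so a threshold of 0 corresponds to a sigmoid probability of 0.5. Treating an all-zero vector as maximally distant is also our own convention, since cosine similarity is undefined there.

```python
import math


def binarize(scores, threshold=0.0):
    """Mark an attribute positive only if its confidence exceeds the threshold."""
    return [1 if s > threshold else 0 for s in scores]


def cosine_distance(u, v):
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(u, v))
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(y * y for y in v))
    if nu == 0 or nv == 0:
        return 1.0
    return 1.0 - dot / (nu * nv)


def rank_gallery(probe_scores, gallery_scores):
    """Return gallery indices ordered from most to least similar to the probe."""
    q = binarize(probe_scores)
    g = [binarize(s) for s in gallery_scores]
    return sorted(range(len(g)), key=lambda i: cosine_distance(q, g[i]))


probe = [2.0, -1.0, 0.5, -3.0]
gallery = [[1.0, 1.0, -1.0, -1.0], [0.3, -0.2, 0.9, -0.1], [-1.0, -1.0, -1.0, -1.0]]
print(rank_gallery(probe, gallery))  # [1, 0, 2]
```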

4 Experiments

4.1 Datasets for Training and Testing

To conduct the first stage of training, we choose the PETA [44] dataset as the training set. Each image in PETA is labeled with 61 binary attributes and 4 multi-class attributes. The 4 multi-class attributes are footwear, hair, lowerbody and upperbody, each of which has 11 color labels: Black, Blue, Brown, Green, Grey, Orange, Pink, Purple, Red, White, and Yellow. We hence expand the 4 multi-class attributes into 44 binary attributes, resulting in a 105-dimensional binary attribute label. For the second stage of training, we choose the MOT challenge [45] dataset to fine-tune the dCNN \(\mathcal {O}^{S1}\) with the attributes triplet loss. MOT challenge is a dataset designed for multi-target tracking and provides the trajectory of each person, from which we obtain the bounding box and ID label of each person. We use more than 20,000 images from MOT challenge.
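
The expansion of PETA's 4 multi-class color attributes into 44 binary ones (61 + 4 × 11 = 105) can be sketched as below. The input format is hypothetical. Allowing a body part to carry more than one color (a multi-hot encoding) is an assumption, not a statement about PETA's annotation.

```python
COLORS = ["Black", "Blue", "Brown", "Green", "Grey", "Orange",
          "Pink", "Purple", "Red", "White", "Yellow"]
PARTS = ["footwear", "hair", "lowerbody", "upperbody"]


def expand_color_attributes(binary_attrs, part_colors):
    """Build the 105-dim label: 61 binary attributes + 4 x 11 color indicators.

    binary_attrs: list of 61 values in {0, 1}.
    part_colors: hypothetical dict mapping a part name to the set of color
                 names labeled for that part (possibly empty).
    """
    if len(binary_attrs) != 61:
        raise ValueError("expected 61 binary attributes")
    expanded = []
    for part in PARTS:
        colors = part_colors.get(part, set())
        expanded += [1 if c in colors else 0 for c in COLORS]
    return list(binary_attrs) + expanded


label = expand_color_attributes([0] * 61, {"hair": {"Black"}})
print(len(label))  # 105
```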

To evaluate our model, we choose VIPeR [26], PRID [27], GRID [28], and Market [46] as test sets. Note that VIPeR, GRID and PRID are included in the PETA dataset. When we test our algorithm on one of them, it is excluded from the training set. For example, when we use VIPeR for the person ReID test, none of its images is used for dCNN training. We do not use CUHK for testing, because it accounts for nearly one third of the images in PETA, and excluding it would leave too few samples for dCNN training.

4.2 Implementation Details

We select AlexNet [35] as our base dCNN architecture and use the same kernel and filter sizes for all hidden layers. For the loss layers of the first-stage dCNN \(\mathcal {O}^{S1}\) and the third-stage dCNN \(\mathcal {O}\), we use the sigmoid cross-entropy loss layer, because each input sample has multiple positive attribute labels. We learn 105 binary attributes from PETA. When we fine-tune the dCNN with the attributes triplet loss, we follow the standard triplet loss algorithm [47] to select samples. We first randomly select the anchor samples \(u_{(a)}\). Then, samples with the same person ID as \(u_{(a)}\) but substantially different attribute labels are selected as positive samples \(u_{(p)}\), and samples from other persons with attribute labels similar to those of \(u_{(a)}\) are selected as negative samples \(u_{(n)}\). On the training datasets, each person has on average only 15 positive attributes out of 105. We select only \(p=10\) attributes for initialization in Stage 2, because these can be predicted with higher accuracy: with an average test classification accuracy of about \(60\,\%\), only about \(15 \times 60\,\% = 9\) of a person's 15 positive attributes are expected to be predicted correctly. Moreover, we set the confidence threshold to 0 so that most test images have close to 15 positive attributes. Parameters for learning are empirically set via cross-validation. The \(\theta \) and \(\gamma \) in Eq. (4) are set to 1 and 0.01, respectively. We implement our approach on a machine with a GTX TITAN X GPU, an Intel i7 CPU, and 32 GB of memory.
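
The hard triplet selection described above can be sketched as follows. It assumes Hamming distance between binary attribute vectors, and it reads "substantially different" and "similar" as picking the most different positive and the most similar negative. Both choices are illustrative assumptions, not the authors' implementation of [47].

```python
def hamming(u, v):
    """Number of attributes on which two binary vectors disagree."""
    return sum(x != y for x, y in zip(u, v))


def select_hard_triplet(anchor, person_ids, attrs):
    """Pick a hard positive and a hard negative for a given anchor index.

    Hard positive: same person ID, most different attribute vector.
    Hard negative: different person ID, most similar attribute vector.
    person_ids[m] and attrs[m] are the ID and binary attributes of u_m.
    """
    same = [m for m in range(len(person_ids))
            if person_ids[m] == person_ids[anchor] and m != anchor]
    other = [m for m in range(len(person_ids))
             if person_ids[m] != person_ids[anchor]]
    pos = max(same, key=lambda m: hamming(attrs[anchor], attrs[m]))
    neg = min(other, key=lambda m: hamming(attrs[anchor], attrs[m]))
    return anchor, pos, neg


ids = [0, 0, 0, 1, 1]
attrs = [[1, 1, 0, 0], [1, 1, 0, 1], [0, 0, 1, 1], [1, 1, 0, 0], [0, 0, 0, 0]]
print(select_hard_triplet(0, ids, attrs))  # (0, 2, 3)
```

Hard triplets like these keep the hinge in Eq. (3) active. Random triplets often satisfy the margin already and contribute no gradient.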

4.3 Accuracy of Predicted Attributes

In the first experiment, we test the accuracy of predicted attributes on three datasets, VIPeR, PRID and GRID, and examine the effects of combining different training stages. For an input image whose ground truth has n positive attributes, we compare the top n predicted attributes against the ground truth to compute the classification accuracy. The results are summarized in Fig. 3. \(Stage_1\) denotes the baseline dCNN \(\mathcal {O}^{S1}\). \( Stage_{1 \& 3}\) first labels U with \(\mathcal {O}^{S1}\), then combines U and T to fine-tune \(\mathcal {O}^{S1}\). \( Stage_{1 \& 2}\) denotes the updated dCNN \(\mathcal {O}^{S2}\) after the second stage of training. SSDAL denotes our final dCNN after the third stage of training. From the experimental results, we draw the following conclusions:

(1) Although \( Stage_{1 \& 3}\) uses a larger training set, it does not consistently outperform the baseline. This is because the expanded training data is labeled by \(\mathcal {O}^{S1}\) itself, so it provides no new cues for fine-tuning \(\mathcal {O}^{S1}\) in stage 3.

(2) \(\mathcal {O}^{S2}\) produced by \( Stage_{1 \& 2}\) does not consistently outperform the baseline either. This may be because person ID labels provide only weak supervision. Also, updating only the easily over-fitted fully connected layers with the triplet loss may degrade the generalization ability of \(\mathcal {O}^{S2}\) on datasets other than U.

(3) SSDAL improves the accuracy of the baseline by \(1.2\,\%\) on average over the three datasets. This demonstrates that our three-stage training framework learns more robust semantic attributes. To show the accuracy of predicted attributes intuitively, we use the dCNN trained by SSDAL to predict attributes on the MOT challenge dataset. Some examples are illustrated in Fig. 4.
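
The top-n accuracy measure used in this experiment can be sketched for one image as below; averaging it over all test images of a dataset would give a per-dataset figure. The function name and the handling of images without positive attributes are our own assumptions.

```python
def top_n_accuracy(scores, ground_truth):
    """Fraction of the n ground-truth positives recovered by the top-n scores.

    scores: K predicted confidence scores for one image.
    ground_truth: K binary indicators; n is the number of positives.
    """
    n = sum(ground_truth)
    if n == 0:
        return None  # accuracy is undefined without positive attributes
    top = sorted(range(len(scores)), key=lambda i: -scores[i])[:n]
    return sum(ground_truth[i] for i in top) / n


print(top_n_accuracy([0.9, 0.8, 0.1, 0.7], [1, 0, 0, 1]))  # 0.5
```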

4.4 Performance on Two-Camera Datasets

This experiment tests deep attributes on two-camera person ReID tasks using three datasets. For each dataset, 10 random tests are performed, and the average Cumulative Match Characteristic (CMC) curve over these tests is used for performance evaluation. The experimental settings on the three datasets are as follows:

VIPeR: The VIPeR dataset contains 632 persons. Two images of size \(48\times 128\) are taken of each person by camera A and camera B, respectively, under different illumination, postures and viewpoints. Unlike most existing algorithms, our SSDAL does not need training on the target dataset. For a fair comparison with other algorithms, we use similar evaluation settings, i.e., we randomly select 10 test sets, each containing 316 persons.

PRID: This dataset is specifically designed for single-shot person ReID. It contains two image sets with 385 and 749 persons captured by camera A and camera B, respectively, and 200 persons appear in both. For a fair comparison with other algorithms, we follow the protocol in [27] and create a probe set and a gallery set from which all training samples are excluded. The probe set includes images of 100 persons from camera A, and the gallery set consists of images of 649 persons captured by camera B.

GRID: This dataset includes images collected by 8 non-adjacent cameras fixed at a subway station. The probe set contains images of about 250 persons. The gallery set contains images of about 1025 persons, among which 775 persons do not match anyone in the probe set. For the purpose of fair comparison, images of 125 persons shared by the two sets are employed for training. The remaining 125 persons and 775 distracters are used for the testing.
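
The averaged single-shot CMC evaluation can be sketched as follows. The sketch assumes a precomputed probe-by-gallery distance matrix and that every probe identity has a true match in the gallery; gallery-only distracters, as in GRID, are fine. Helper names are hypothetical.

```python
def cmc_curve(dist, probe_ids, gallery_ids, max_rank=20):
    """Single-shot CMC: cmc[r - 1] is the fraction of probes matched within rank r.

    dist[i][j]: distance between probe i and gallery item j (smaller = closer).
    Assumes every probe ID occurs in gallery_ids; otherwise next() raises.
    """
    hits = [0] * max_rank
    for i, pid in enumerate(probe_ids):
        order = sorted(range(len(gallery_ids)), key=lambda j: dist[i][j])
        rank = next(r for r, j in enumerate(order) if gallery_ids[j] == pid)
        for r in range(rank, max_rank):
            hits[r] += 1
    return [h / len(probe_ids) for h in hits]


def average_cmc(curves):
    """Average CMC curves from repeated random trials (e.g. 10 test splits)."""
    return [sum(vals) / len(curves) for vals in zip(*curves)]


dist = [[0.1, 0.5, 0.9], [0.05, 0.1, 0.3]]
print(cmc_curve(dist, [0, 1], [1, 0, 2], max_rank=3))  # [0.5, 1.0, 1.0]
```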

Compared Algorithms: We compare our approach with many recent works. Compared works that learn distance metrics for person ReID include RPML [10], PRDC [17], RSVM [52], Salmatch [48], LMF [49], PCCA [9], KISSME [13], kLFDA [14], KCCA [50], TSR [51], EPKFM [19], LOMO + XQDA [20], MRank-PRDC [28], MRank-RSVM [28], RQDA [53], MLAPG [23] and CSL [22]. Compared works based on traditional attribute learning are AIR [29], OAR [31] and LOREA [34]. Related works that leverage deep learning include DML [41], IDLA [42] and Deep-RDC [24]. The CMC scores at different ranks on the three datasets are compared in Tables 1, 2 and 3, respectively.

The three tables clearly show that, even without fine-tuning on extra data, the baseline dCNN \(\mathcal {O}^{S1}\) achieves fairly good results on the three datasets, especially on PRID and GRID. Furthermore, fine-tuning the baseline dCNN with our attributes triplet loss yields an additional rank-1 improvement of \(3.4\,\%\) on VIPeR, \(1.4\,\%\) on PRID, and \(5.3\,\%\) on GRID. This indicates that our three-stage training framework improves performance by progressively adding more information to the training procedure.

Our SSDAL algorithm outperforms all existing algorithms on the PRID and GRID datasets. Some recent works, such as AIR [29], OAR [31], and LOREA [34], also learn attributes for person ReID. The comparison in Table 1 clearly shows the advantages of our deep model in attribute prediction. Other previous works, such as DML [41], IDLA [42] and Deep-RDC [24], take advantage of deep learning for person ReID. Unlike them, our work generates camera-independent mid-level attributes, which can be used as discriminative features for identifying persons across different datasets. The experimental results in Table 1 also show that our method outperforms these works.

Because we use the predicted binary attributes as features for person ReID, we can also learn a distance metric on them to further improve ReID accuracy. We select XQDA [20] for distance metric learning. As the three tables show, our approach combined with XQDA [20], i.e., SSDAL + XQDA, achieves the best rank-1 accuracy on all three datasets. It also consistently outperforms all the other algorithms at various ranks on PRID and GRID. This shows that our method can easily be combined with existing distance metric learning methods to further boost performance.

4.5 Performance on Multi-camera Dataset

We further test our approach on a more challenging multi-camera person ReID task using the Market dataset [46], in which more than 25,000 images of 1501 labeled persons are collected from 6 cameras. Each person has 17 images on average, which show substantially different appearances due to variations in viewpoint, illumination, background, etc. This dataset is also larger than most existing person ReID datasets. Because Market provides a predefined training set, we use the images in the training set and their person ID labels to fine-tune our dCNN \(\mathcal {O}^{S2}\).

In contrast to the two-camera person ReID task, multi-camera person ReID aims to identify the query person across image sets from multiple cameras. Our task is therefore to rank all images from these cameras according to a given probe image (i.e., Single Query) or tracklet (i.e., Multiple Query) of a person. Because this process is similar to image retrieval, we evaluate performance by mean Average Precision (mAP) and Rank-1 accuracy, following the protocol in [46]. The results are shown in Fig. 5. MultiQ_avg and MultiQ_max denote applying average and max pooling, respectively, to obtain the final feature of a person’s tracklet. More details about feature pooling can be found in [46].
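
A minimal sketch of the retrieval metrics and tracklet pooling follows. It assumes each query's gallery has already been ranked and converted into a list of match flags. It does not reproduce the official Market evaluation's rules for excluding same-camera gallery images, so it is not a drop-in replacement for the protocol in [46].

```python
def average_precision(ranked_is_match):
    """AP of one ranked list; ranked_is_match[k] is True if item k is a true match."""
    hits, precisions = 0, []
    for k, is_match in enumerate(ranked_is_match, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / hits if hits else 0.0


def mean_ap(ranked_lists):
    """mAP over queries; Rank-1 accuracy would instead average ranked[0]."""
    return sum(average_precision(r) for r in ranked_lists) / len(ranked_lists)


def pool_tracklet(features, mode="avg"):
    """Element-wise average or max pooling of a tracklet's feature vectors."""
    if mode == "avg":
        return [sum(col) / len(features) for col in zip(*features)]
    if mode == "max":
        return [max(col) for col in zip(*features)]
    raise ValueError("mode must be 'avg' or 'max'")


print(mean_ap([[True], [False, True]]))  # 0.75
```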

From Fig. 5, we observe that our approach outperforms all the compared methods by a large margin in both single-query and multi-query scenarios. In the multi-query scenario, our method boosts the mAP from 18.5 % to 25.8 %, a 7.3 % absolute improvement. This indicates that our method also outperforms other methods on more challenging multi-camera person ReID tasks, and that our learned deep attributes are robust to significant appearance variations among multiple cameras.

4.6 Discussions

In this part, we further discuss some interesting aspects of our method that may have been missed in the above experimental evaluations.

Using attribute features of only 105 dimensions, our method achieves promising performance on four public datasets. It is interesting to examine the ReID performance after combining these compact attribute features with classic visual features. To this end, we integrate appearance-based features with attribute features for better discriminative power. Table 4 shows the performance of fusing deep attributes with the appearance-based feature LOMO [20], i.e., LOMO + XQDA + SSDAL. Fusing appearance-based features clearly improves SSDAL further, e.g., the CMC score reaches 45.3 at Rank-1. Therefore, combining deep attributes with visual features could further improve performance in real applications.

Many image retrieval works use the output of the FC-7 layer in AlexNet as an image feature. Therefore, another way of learning mid-level features for person ReID is to fine-tune the FC-7 layer with a triplet loss similar to the one in SSDAL, i.e., to update the dCNN so that images of the same person have similar FC-7 features and images of different persons have dissimilar ones. FC-7 features learned in this way are not limited to 105 dimensions and thus might be more discriminative than attributes. To test this strategy, we fine-tune the FC-7 layer of AlexNet using person ID labels on different datasets, i.e., T, U, and \(T+U\), respectively. The experimental results in Table 4 indicate that deep attributes outperform such FC-7 features, which validates the contribution and importance of attributes.

5 Conclusions and Future Work

In this paper, we address the person ReID problem using deeply learned human attribute features. We propose a novel Semi-supervised Deep Attribute Learning (SSDAL) algorithm. With our attributes triplet loss, images labeled only with person IDs can be used for training attribute detectors in a dCNN framework. Extensive experiments on four benchmark datasets demonstrate that our method is robust in attribute detection and substantially outperforms previous person ReID methods. In addition, our algorithm does not need further training on the target datasets. This means the attribute prediction dCNN model can be trained only once and then applied to person ReID tasks on different datasets. Fine-tuning the dCNN model only requires images with person ID labels, which can easily be obtained by multi-target tracking algorithms. Considering the spatial locations and correlations of attributes might further improve the accuracy of attribute detection; we leave this to future work.

References

1. Farenzena, M., Bazzani, L., Perina, A., Murino, V., Cristani, M.: Person re-identification by symmetry-driven accumulation of local features. In: CVPR (2010)

2. Cheng, D.S., Cristani, M., Stoppa, M., Bazzani, L., Murino, V.: Custom pictorial structures for re-identification. In: BMVC (2011)

3. Ma, B., Su, Y., Jurie, F.: BiCov: a novel image representation for person re-identification and face verification. In: BMVC (2012)

4. Liu, C., Gong, S., Loy, C.C., Lin, X.: Person re-identification: what features are important? In: ECCV (2012)

5. Zhao, R., Ouyang, W., Wang, X.: Unsupervised salience learning for person re-identification. In: CVPR (2013)

6. Wang, X., Zhao, R.: Person re-identification: system design and evaluation overview. In: Gong, S., Cristani, M., Yan, S., Loy, C.C. (eds.) Person Re-Identification, pp. 351–370. Springer, Heidelberg (2014)

7. Zheng, L., Wang, S., Tian, L., He, F., Liu, Z., Tian, Q.: Query-adaptive late fusion for image search and person re-identification. In: CVPR (2015)

8. Ma, A.J., Yuen, P.C., Li, J.: Domain transfer support vector ranking for person re-identification without target camera label information. In: ICCV (2013)

9. Dikmen, M., Akbas, E., Huang, T.S., Ahuja, N.: Pedestrian recognition with a learned metric. In: Kimmel, R., Klette, R., Sugimoto, A. (eds.) ACCV 2010, Part IV. LNCS, vol. 6495, pp. 501–512. Springer, Heidelberg (2011)

10. Hirzer, M., Roth, P.M., Köstinger, M., Bischof, H.: Relaxed pairwise learned metric for person re-identification. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012, Part VI. LNCS, vol. 7577, pp. 780–793. Springer, Heidelberg (2012)

11. Pedagadi, S., Orwell, J., Velastin, S., Boghossian, B.: Local Fisher discriminant analysis for pedestrian re-identification. In: CVPR (2013)

12. Yan, S., Xu, D., Zhang, B., Zhang, H.J., Yang, Q., Lin, S.: Graph embedding and extensions: a general framework for dimensionality reduction. PAMI 29, 40–51 (2007)

13. Köstinger, M., Hirzer, M., Wohlhart, P., Roth, P.M., Bischof, H.: Large scale metric learning from equivalence constraints. In: CVPR (2012)

14. Xiong, F., Gou, M., Camps, O., Sznaier, M.: Person re-identification using kernel-based metric learning methods. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014, Part VII. LNCS, vol. 8695, pp. 1–16. Springer, Heidelberg (2014)

15. Liu, C., Loy, C.C., Gong, S., Wang, G.: POP: person re-identification post-rank optimisation. In: ICCV (2013)

16. Li, Z., Chang, S., Liang, F., Huang, T.S., Cao, L., Smith, J.R.: Learning locally-adaptive decision functions for person verification. In: CVPR (2013)

17. Zheng, W.S., Gong, S., Xiang, T.: Re-identification by relative distance comparison. In: CVPR (2013)

18. Wang, T., Gong, S., Zhu, X., Wang, S.: Person re-identification by video ranking. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014, Part IV. LNCS, vol. 8692, pp. 688–703. Springer, Heidelberg (2014)

19. Chen, D., Yuan, Z., Hua, G., Zheng, N., Wang, J.: Similarity learning on an explicit polynomial kernel feature map for person re-identification. In: CVPR (2015)

20. Liao, S., Hu, Y., Zhu, X., Li, S.Z.: Person re-identification by local maximal occurrence representation and metric learning. In: CVPR (2015)

21. Chen, Y.C., Zheng, W.S., Lai, J.: Mirror representation for modeling view-specific transform in person re-identification. In: IJCAI (2015)

22. Shen, Y., Lin, W., Yan, J., Xu, M., Wu, J., Wang, J.: Person re-identification with correspondence structure learning. In: ICCV (2015)

23. Liao, S., Li, S.Z.: Efficient PSD constrained asymmetric metric learning for person re-identification. In: ICCV (2015)

24. Ding, S., Lin, L., Wang, G., Chao, H.: Deep feature learning with relative distance comparison for person re-identification. Pattern Recogn. 48(10), 2993–3003 (2015)

25. Peng, P., Xiang, T., Wang, Y., Pontil, M., Gong, S., Huang, T., Tian, Y.: Unsupervised cross-dataset transfer learning for person re-identification. In: CVPR (2016)

26. Gray, D., Brennan, S., Tao, H.: Evaluating appearance models for recognition, reacquisition, and tracking. In: PETS (2007)

27. Hirzer, M., Beleznai, C., Roth, P.M., Bischof, H.: Person re-identification by descriptive and discriminative classification. In: Heyden, A., Kahl, F. (eds.) SCIA 2011. LNCS, vol. 6688, pp. 91–102. Springer, Heidelberg (2011)

28. Loy, C.C., Liu, C., Gong, S.: Person re-identification by manifold ranking (2013)

29. Layne, R., Hospedales, T.M., Gong, S.: Person re-identification by attributes. In: BMVC (2012)

30. Layne, R., Hospedales, T.M., Gong, S.: Towards person identification and re-identification with attributes. In: ECCV Workshops (2012)

31. Layne, R., Hospedales, T.M., Gong, S.: Attributes-based re-identification. In: Gong, S., Cristani, M., Yan, S., Loy, C.C. (eds.) Person Re-Identification, pp. 93–117. Springer, Heidelberg (2014)

32. Layne, R., Hospedales, T.M., Gong, S.: Re-id: hunting attributes in the wild. In: BMVC (2014)

33. Su, C., Yang, F., Zhang, G., Tian, Q., Gao, W., Davis, L.: Tracklet-to-tracklet person re-identification by attributes with discriminative latent space mapping. In: ICMS (2015)

34. Su, C., Yang, F., Zhang, S., Tian, Q., Davis, L.S., Gao, W.: Multi-task learning with low rank attribute embedding for person re-identification. In: ICCV (2015)

35. Krizhevsky, A., Sutskever, I., Hinton, G.: ImageNet classification with deep convolutional neural networks. In: NIPS (2012)

36. Girshick, R., Donahue, J., Darrell, T., Malik, J.: Region-based convolutional networks for accurate object detection and segmentation. In: PAMI (2015)

37. Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: CVPR (2015)

38. Shankar, S., Garg, V.K., Cipolla, R.: Deep-carving: discovering visual attributes by carving deep neural nets. In: CVPR (2015)

39. Chen, Q., Huang, J., Feris, R., Brown, L.M., Dong, J., Yan, S.: Deep domain adaptation for describing people based on fine-grained clothing attributes. In: CVPR (2015)

40. Li, W., Zhao, R., Xiao, T., Wang, X.: DeepReID: deep filter pairing neural network for person re-identification. In: CVPR (2014)

41. Yi, D., Lei, Z., Li, S.Z.: Deep metric learning for practical person re-identification. In: ICPR (2014)

42. Ahmed, E., Jones, M., Marks, T.K.: An improved deep learning architecture for person re-identification. In: CVPR (2015)

43. Paisitkriangkrai, S., Shen, C., van den Hengel, A.: Learning to rank in person re-identification with metric ensembles. In: CVPR (2015)

44. Deng, Y., Luo, P., Loy, C.C., Tang, X.: Pedestrian attribute recognition at far distance. In: ACM MM (2014)

45. Leal-Taixé, L., Milan, A., Reid, I., Roth, S., Schindler, K.: MOTChallenge 2015: towards a benchmark for multi-target tracking. arXiv preprint arXiv:1504.01942 (2015)

46. Zheng, L., Shen, L., Tian, L., Wang, S., Wang, J., Tian, Q.: Scalable person re-identification: a benchmark. In: ICCV (2015)

47. Schroff, F., Kalenichenko, D., Philbin, J.: FaceNet: a unified embedding for face recognition and clustering. In: CVPR (2015)

48. Zhao, R., Ouyang, W., Wang, X.: Person re-identification by salience matching. In: ICCV (2013)

49. Zhao, R., Ouyang, W., Wang, X.: Learning mid-level filters for person re-identification. In: CVPR (2014)

50. Lisanti, G., Masi, I., Del Bimbo, A.: Matching people across camera views using kernel canonical correlation analysis. In: ICDSC (2014)

51. Shi, Z., Hospedales, T.M., Xiang, T.: Transferring a semantic representation for person re-identification and search. In: CVPR (2015)

52. Prosser, B., Zheng, W.S., Gong, S., Xiang, T.: Person re-identification by support vector ranking. In: BMVC (2010)

53. Liao, S., Hu, Y., Li, S.Z.: Joint dimension reduction and metric learning for person re-identification. arXiv preprint arXiv:1406.4216 (2014)

Acknowledgements

This work was supported in part by ARO grant W911NF-15-1-0290 and by Faculty Research Gift Awards from NEC Laboratories of America and Blippar to Dr. Qi Tian. It was also supported in part by the National Natural Science Foundation of China (NSFC) under grants 61429201 and 61303178, and by NSFC grants 61572050 and 91538111 to Dr. Shiliang Zhang.


Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Su, C., Zhang, S., Xing, J., Gao, W., Tian, Q. (2016). Deep Attributes Driven Multi-camera Person Re-identification. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds) Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science, vol 9906. Springer, Cham. https://doi.org/10.1007/978-3-319-46475-6_30


DOI: https://doi.org/10.1007/978-3-319-46475-6_30


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46474-9

Online ISBN: 978-3-319-46475-6

eBook Packages: Computer Science (R0)