Abstract

This chapter begins with a brief history of optical communication before describing the main components of a modern optical communication system. Specific attention is paid to the development of low-loss optical fibers as they played an essential role after 1975. The evolution of fiber-optic communication systems is described through its six generations over a 40-year time period ranging from 1975 to 2015. The adoption of wavelength-division multiplexing (WDM) during the 1990s to meet the demand fueled by the advent of the Internet is discussed together with the bursting of the telecom bubble in 2000. Recent advances brought by digital coherent technology and space-division multiplexing are also described briefly.

1 Historical Perspective

The use of light for communication purposes dates back to antiquity if we interpret optical communication in a broad sense, implying any communication scheme that makes use of light. Most civilizations have used mirrors, fire beacons, or smoke signals to convey a single piece of information (such as victory in a war). For example, it is claimed that the Greeks constructed in 1084 b.c. a 500-km-long line of fire beacons to convey the news of the fall of Troy [1]. The chief limitation of such a scheme is that the information content is inherently limited and should be agreed upon in advance. Attempts were made throughout history to increase the amount of transmitted information. For example, the North American Indians changed the color of a smoke signal for this purpose. Similarly, shutters were used inside lighthouses to turn the beacon signal on and off at predetermined intervals. This idea is not too far from our modern schemes in which information is coded on the light emitted by a laser by modulating it at a high speed [2].

In spite of such clever schemes, the distance as well as the rate at which information could be transmitted using semaphore devices was quite limited even during the eighteenth century. A major advance occurred in 1792 when Claude Chappe came up with the idea of transmitting mechanically coded messages over long distances through the use of intermediate relay stations (10–15 km apart) that acted as repeaters in the modern-day language [3]. Figure 8.1 shows the inventor and his basic idea schematically. Chappe called his invention optical telegraph and developed a coding scheme shown in Fig. 8.1 to represent the entire alphabet through different positions of two needles. This allowed transmission of whole sentences over long distances. The first such optical telegraph was put in service in July 1794 between Paris and Lille (two French cities about 200 km apart). By 1830, the network had expanded throughout Europe [4]. The role of light in such systems was simply to make the coded signals visible so that they could be intercepted by the relay stations. The opto-mechanical communication systems of the nineteenth century were naturally slow. In modern-day terminology, the effective bit rate of such systems was less than 1 bit/s; a bit is the smallest unit of information in a binary system.

The advent of the electrical telegraph in the 1830s replaced the use of light by electricity and began the era of electrical communications [5]. The bit rate B could be increased to a few bits/s by using new coding techniques such as the Morse code. The use of intermediate relay stations allowed communication over long distances. Indeed, the first successful transatlantic telegraph cable went into operation in 1866. Telegraphy employed a digital scheme through two electrical pulses of different durations (dots and dashes of the Morse code). The invention of the telephone in 1876 brought a major change inasmuch as electric signals were transmitted in an analog form through a continuously varying electric current [6]. Analog electrical techniques dominated communication systems until the switch to optical schemes 100 years later.

The development of a worldwide telephone network during the twentieth century led to many advances in electrical communication systems. The use of coaxial cables in place of twisted wires increased system capacity considerably. The first coaxial-cable link, put into service in 1940, was a 3-MHz system capable of transmitting 300 voice channels (or a single television channel). The bandwidth of such systems was limited by cable losses, which increase rapidly for frequencies beyond 10 MHz. This limitation led to the development of microwave communication systems that employed electromagnetic waves at frequencies in the range of 1–10 GHz. The first microwave system operating at the carrier frequency of 4 GHz was put into service in 1948. Both the coaxial and microwave systems can operate at bit rates \( \sim \) 100 Mbit/s. The most advanced coaxial system was put into service in 1975 and operated at a bit rate of 274 Mbit/s. A severe drawback of high-speed coaxial systems was their small repeater spacing (\( \sim \) 1 km), requiring excessive regeneration of signals and making such systems expensive to operate. Microwave communication systems generally allowed for a larger repeater spacing but their bit rate was also limited to near 100 Mbit/s.

All of the preceding schemes are now classified under the general heading of telecommunication systems. A telecommunication system transmits information from one place to another, whether separated by a few kilometers or by transoceanic distances. It may but does not need to involve optics. The optical telegraph of Claude Chappe can be called the first optical telecommunication system that spread throughout Europe over a 40-year period from 1800 to 1840. However, it was soon eclipsed by electrical telecommunication systems based on telegraph and telephone lines. By 1950, scientists were again looking toward optics to provide solutions for enhancing the capacity of telecommunication systems. However, neither a coherent optical source nor a suitable transmission medium was available during the 1950s. The invention of the laser and its demonstration in 1960 solved the first problem. Attention was then focused on finding ways for using laser light for optical communication. Many ideas were advanced during the 1960s [7], the most noteworthy being the idea of light confinement using a sequence of gas lenses [8].

Optical fibers were available during the 1960s and were being used for making gastroscopes and other devices that required only a short length of the fiber [9]. However, no one was serious about using them for optical communication. The main problem was that optical fibers available during the 1960s had such high losses that only 10 % of light entering at one end emerged from the other end of a fiber that was only a few meters long. Most engineers ignored them for telecommunication applications where light had to be transported over at least a few kilometers. It was suggested in 1966 that losses of optical fibers could be reduced drastically by removing impurities from silica glass used to make them, and that such low-loss fibers might be the best choice for optical communication [10]. Indeed, Charles Kao was awarded one half of the 2009 Nobel Prize in Physics for his groundbreaking achievements concerning the transmission of light in fibers for optical communication [11]. The idea of using glass fibers for optical communication was revolutionary since fibers are capable of guiding light in a manner similar to the confinement of electrons inside copper wires. As a result, they can be used in the same fashion as electric wires are used routinely.

However, before optical fibers could be used for optical communication, their losses had to be reduced to an acceptable level. This challenge was taken up by Corning, an American company located not far from Rochester, New York, where I work. A breakthrough occurred in 1970 when three Corning scientists published a paper indicating that they were able to reduce fiber losses to below 20 dB/km in the wavelength region near 630 nm [12]. Two years later, the same Corning team produced a fiber with a loss of only 4 dB/km by replacing titanium with germanium as a dopant inside the fiber’s silica core. Soon after, many industrial laboratories entered the race for reducing fiber losses even further. The race was won in 1979 by a Japanese group that was able to reduce the loss of an optical fiber to near 0.2 dB/km in the infrared wavelength region near 1.55 μm [13]. This value was close to the fundamental limit set by the phenomenon of Rayleigh scattering. Even modern fibers exhibit loss values similar to those first reported in 1979.

In addition to low-loss optical fibers, switching from microwaves to optical waves also required a compact and efficient laser, whose output could be modulated to impose the information that needed to be transmitted over such fibers. The best type of laser for this purpose was the semiconductor laser. Fortunately, at about the same time Corning announced its low-loss fiber in 1970, GaAs semiconductor lasers, operating continuously at room temperature, were demonstrated by two groups working in Russia [14] and at Bell Laboratories [15]. The simultaneous availability of compact optical sources and low-loss optical fibers led to a worldwide effort for developing fiber-optic communication systems [16].

The first-generation systems were designed to operate at a bit rate of 45 Mbit/s in the near-infrared spectral region because GaAs semiconductor lasers used for making them emit light at wavelengths near 850 nm. Since the fiber loss at that wavelength was close to 3 dB/km, the optical signal needed to be regenerated every 10 km or so using the so-called repeaters. This may sound like a major limitation, but it was better than the prevailing coaxial-cable technology that required regeneration every kilometer or so. Extensive laboratory development soon led to several successful field trials. AT&T sent its first test signals on April 1, 1977 in Chicago’s Loop district. Three weeks later, General Telephone and Electronics sent live telephone traffic at 6 Mbit/s in Long Beach, California. It was followed by the British Post Office that began sending live telephone traffic through fibers near Martlesham Heath, UK. These trials were followed by further development, and commercial systems began to be installed in 1980. The new era of fiber-optic communication systems had finally arrived. Although not realized at that time, it was poised to revolutionize how humans lived and interacted. This became evident only after the advent of the Internet during the 1990s.

A commonly used figure of merit for communication systems is the bit rate–distance product BL, where B is the bit rate and L is the repeater spacing, the distance after which an optical signal must be regenerated to maintain its fidelity [2]. Figure 8.2 shows how the BL product has increased by a factor of \( 10^{18} \) through technological advances during the last 180 years. The acronym WDM in this figure stands for wavelength-division multiplexing, a technique used after 1992 to transmit multiple channels at different wavelengths through the same fiber. Its use enhanced the capacity of fiber-optic communication systems so dramatically that data transmission at 1 Tbit/s was realized by 1996. The acronym SDM stands for space-division multiplexing, a technique used after 2010 to further enhance the capacity of fiber-optic systems in response to continuing increase in the Internet data traffic (with the advent of video streaming by companies such as YouTube and Netflix) and fundamental capacity limitations of single-mode fibers (see Sect. 8.5). Two features of Fig. 8.2 are noteworthy. First, a straight line in this figure indicates an exponential growth because of the use of logarithmic scale for the data plotted on the y axis. Second, a sudden change in the line’s slope around 1977 indicates that the use of optical fibers accelerated the rate of exponential growth and signaled the emergence of a new optical communication era.

2 Basic Concepts Behind Optical Communication

Before describing the technologies used to advance the state of the art of fiber-optic communication systems, it is useful to look at the block diagram of a generic communication system in Fig. 8.3a. It consists of an optical transmitter and an optical receiver connected to the two ends of a communication channel that can be a coaxial cable (or simply air) for electric communication systems but takes the form of an optical fiber for all fiber-optic communication systems.

2.1 Optical Transmitters and Receivers

The role of optical transmitters is to convert the information available in an electrical form into an optical form, and to launch the resulting optical signal into a communication channel. Figure 8.3b shows the block diagram of an optical transmitter consisting of an optical source, a data modulator, and electronic circuitry used to drive them. Semiconductor lasers are commonly used as optical sources, although light-emitting diodes (LEDs) may also be used for some less-demanding applications. In both cases, the source output is in the form of an electromagnetic wave of constant amplitude. The role of the modulator is to impose the electrical data on this carrier wave by changing its amplitude, or phase, or both of them. In the case of some less-demanding applications, the current injected into a semiconductor laser itself is modulated directly, eliminating the need for an expensive modulator.

The role of optical receivers is to recover the original electrical data from the optical signal received at the output end of the communication channel. Figure 8.3c shows the block diagram of an optical receiver. It consists of a photodetector and a demodulator, together with the electronic circuitry used to drive them. Semiconductor photodiodes are used as detectors because of their compact size and low cost. The design of the demodulator depends on the modulation scheme used at the transmitter. Many optical communication systems employ a binary scheme referred to as intensity modulation with direct detection. Demodulation in this case is done by a decision circuit that identifies incoming bits as 1 or 0, depending on the amplitude of the electric signal. All optical receivers make some errors because of degradation of any optical signal during its transmission and detection, shot noise being the most fundamental source of noise. The performance of a digital lightwave system is characterized through the bit-error rate. It is customary to define it as the average probability of identifying a bit incorrectly. Error-correction codes are sometimes used to improve the raw bit-error rate of an optical communication system.

2.2 Optical Fibers and Cables

Most people are aware from listening to radios or watching televisions that electromagnetic waves can be transmitted through air. However, optical communication systems require electromagnetic waves whose frequencies lie in the visible or near-infrared region. Although such waves can propagate through air over short distances in good weather conditions, this approach is not suitable for making optical communication networks spanning the whole world. Optical fibers solve this problem and transmit light over long distances, irrespective of weather conditions, by confining the optical wave to the vicinity of a microscopic cylindrical glass core through a phenomenon known as total internal reflection.

Figure 8.4 shows the structure of an optical fiber designed to support a single spatial mode by reducing its core diameter to below 10 μm. In the case of a graded-index multimode fiber the core diameter is typically 50 μm. The core is made of silica glass and is doped with germania to enhance its refractive index slightly (by about 0.5 %) compared to the surrounding cladding that is also made of silica glass. A buffer layer is added on top of the cladding before putting a plastic jacket. The outer diameter of the entire structure, only a fraction of a millimeter, is so small that the fiber is barely visible. Before it can be used to transmit information, one or more optical fibers are enclosed inside a cable whose diameter may vary from 1 to 20 mm, depending on the intended application.

What happens to an optical signal transmitted through an optical fiber? Ideally, it should not be modified by the fiber at all. In practice, it becomes weaker because of unavoidable losses and is distorted through phenomena such as chromatic dispersion and the Kerr nonlinearity [2]. As discussed earlier, losses were the limiting factor until 1970 when a fiber with manageable losses was first produced [12]. Losses were reduced further during the 1970s, and by 1979 they had been reduced to a level as low as 0.2 dB/km at wavelengths near 1.55 μm. Figure 8.5 shows the wavelength dependence of power losses measured for such a fiber [13]. Multiple peaks in the experimental curve are due to the presence of residual water vapor. The dashed line, marked Rayleigh scattering, indicates that, besides water vapor, most of the loss can be attributed to the fundamental phenomenon of Rayleigh scattering, the same one responsible for the blue color of our sky. Indeed, although water peaks have nearly disappeared in modern fibers, their losses have not changed much as they are still limited by Rayleigh scattering.
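The loss figures quoted in this chapter are easier to compare once they are converted from decibels to a fraction of transmitted power. The following is a minimal sketch of that conversion; the function name and the 1-km and 100-km link lengths are illustrative choices, not values taken from a specific experiment.

```python
def transmitted_fraction(loss_db_per_km: float, length_km: float) -> float:
    """Fraction of the launched optical power that survives the fiber span."""
    total_loss_db = loss_db_per_km * length_km
    # A loss of X dB corresponds to a power ratio of 10^(-X/10).
    return 10 ** (-total_loss_db / 10)


# Illustrative links built from loss values quoted in the text.
examples = [
    ("20 dB/km fiber (1970), 1 km", 20.0, 1.0),
    ("3 dB/km at 850 nm, 10 km repeater spacing", 3.0, 10.0),
    ("0.2 dB/km at 1.55 um (1979), 100 km", 0.2, 100.0),
]

for label, loss, length in examples:
    fraction = transmitted_fraction(loss, length)
    print(f"{label}: {loss * length:.0f} dB total, {fraction:.2%} of power remains")
```

Under these assumptions, a 100-km span of 0.2-dB/km fiber delivers about as much power as a 1-km span of the 1970 fiber, which is one way to see why the loss reduction mattered so much for repeater spacing.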

2.3 Modulation Formats

The first step in the design of any optical communication system is to decide how the electrical binary data would be converted into an optical bit stream. As mentioned earlier, an electro-optic modulator is used for this purpose. The simplest technique employs optical pulses such that the presence of a pulse in the time slot of a bit corresponds to 1, and its absence indicates a 0 bit. This is referred to as on–off keying since the optical signal is either “off” or “on” depending on whether a 0 or 1 bit is being transmitted.

There are still two choices for the format of the resulting optical bit stream. These are shown in Fig. 8.6 and are known as the return-to-zero (RZ) and nonreturn-to-zero (NRZ) formats. In the RZ format, each optical pulse representing bit 1 is shorter than the bit slot, and its amplitude returns to zero before the bit duration is over. In the NRZ format, the optical pulse remains on throughout the bit slot, and its amplitude does not drop to zero between two or more successive 1 bits. As a result, the temporal width of pulses varies depending on the bit pattern, whereas it remains the same in the case of the RZ format. An advantage of the NRZ format is that the bandwidth associated with the bit stream is smaller by about a factor of 2 simply because on–off transitions occur fewer times. Electrical communication systems employed the NRZ format for this reason in view of their limited bandwidth. The bandwidth of optical communication systems is large enough that the RZ format can be used without much concern. However, the NRZ format was employed initially. The switch to the RZ format was made only after 1999 when it was found that its use helps in designing high-capacity lightwave systems. By now, the RZ format is used almost exclusively for WDM systems whose individual channels are designed to operate at bit rates exceeding 10 Gbit/s.
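The difference between the two formats can be made concrete by sampling each bit slot and counting on–off transitions. The sketch below is only an idealized illustration: it assumes rectangular pulses, four samples per bit slot, and a 50 % duty cycle for RZ pulses, none of which is specified in the text.

```python
def encode_ook(bits, fmt="NRZ", samples_per_bit=4, duty_cycle=0.5):
    """Return a sampled on-off-keyed waveform (1 = light on, 0 = light off)."""
    if fmt not in ("NRZ", "RZ"):
        raise ValueError("fmt must be 'NRZ' or 'RZ'")
    # NRZ keeps the pulse on for the whole slot; RZ only for part of it.
    on_samples = samples_per_bit if fmt == "NRZ" else int(samples_per_bit * duty_cycle)
    waveform = []
    for bit in bits:
        if bit:
            waveform += [1] * on_samples + [0] * (samples_per_bit - on_samples)
        else:
            waveform += [0] * samples_per_bit
    return waveform


def count_transitions(waveform):
    """Count the on-off and off-on transitions in a sampled waveform."""
    return sum(1 for a, b in zip(waveform, waveform[1:]) if a != b)


bits = [1, 1, 0, 1]
for fmt in ("NRZ", "RZ"):
    wave = encode_ook(bits, fmt)
    print(fmt, "".join(map(str, wave)), "transitions:", count_transitions(wave))
```

For this bit pattern the NRZ waveform switches twice while the RZ waveform switches five times, which reflects the qualitative argument above that NRZ needs less bandwidth because transitions occur less often.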

2.4 Channel Multiplexing

Before the advent of the Internet, telephones were used most often for communicating information. When an analog electric signal representing human voice is digitized, the resulting digital signal contains 64,000 bits over each one second duration. The bit rate of such an optical bit stream is clearly 64 kbit/s. Since fiber-optic communication systems are capable of transmitting at bit rates of up to 40 Gbit/s, it would be a huge waste of bandwidth if a single telephone call was sent over an optical fiber. To utilize the system capacity fully, it is necessary to transmit many voice channels simultaneously through multiplexing. This can be accomplished through time-division multiplexing (TDM) or WDM. In the case of TDM, bits associated with different channels are interleaved in the time domain to form a composite bit stream. For example, the bit slot is about 15 μs for a single voice channel operating at 64 kb/s. Five such channels can be multiplexed through TDM if the bit streams of successive channels are delayed by 3 μs. Figure 8.7a shows the resulting bit stream schematically at a composite bit rate of 320 kb/s. In the case of WDM, the channels are spaced apart in the frequency domain. Each channel is carried by its own carrier wave. The carrier frequencies are spaced more than the channel bandwidth so that the channel spectra do not overlap, as seen in Fig. 8.7b. WDM is suitable for both analog and digital signals and is used in broadcasting of radio and television channels. TDM is readily implemented for digital signals and is commonly used for telecommunication networks.
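The bit interleaving used in TDM can be sketched in a few lines. The example below is a simplified illustration that assumes equal-rate channels and one bit per channel per frame, and it ignores the control bits that real systems add; the function names are hypothetical.

```python
def tdm_multiplex(channels):
    """Interleave equal-length bit streams, taking one bit per channel in turn."""
    if len({len(channel) for channel in channels}) != 1:
        raise ValueError("all channels must carry the same number of bits")
    return [bit for frame in zip(*channels) for bit in frame]


def tdm_demultiplex(stream, n_channels):
    """Recover the individual channels from an interleaved bit stream."""
    return [stream[i::n_channels] for i in range(n_channels)]


# Timing for the five-channel example in the text.
channel_rate = 64_000                        # bit/s per voice channel
n_channels = 5
bit_slot_us = 1e6 / channel_rate             # 15.625 us, "about 15 us"
composite_rate = n_channels * channel_rate   # 320,000 bit/s
composite_slot_us = 1e6 / composite_rate     # 3.125 us, "about 3 us"

print(f"single-channel bit slot: {bit_slot_us} us")
print(f"composite rate: {composite_rate / 1000:.0f} kb/s, slot: {composite_slot_us} us")

channels = [[1, 0], [0, 0], [1, 1]]
stream = tdm_multiplex(channels)
print(stream, tdm_demultiplex(stream, len(channels)))
```

The exact slot durations (15.625 μs and 3.125 μs) are what the rounded values of 15 μs and 3 μs in the text correspond to.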

The concept of TDM has been used to form digital hierarchies. In North America and Japan, the first level corresponds to multiplexing of 24 voice channels with a composite bit rate of 1.544 Mb/s (hierarchy DS-1), whereas in Europe 30 voice channels are multiplexed, resulting in a composite bit rate of 2.048 Mb/s. The bit rate of the multiplexed signal is slightly larger than the simple product of 64 kb/s with the number of channels because of extra control bits that are added for separating channels at the receiver end. The second-level hierarchy is obtained by multiplexing four DS-1 channels. This results in a bit rate of 6.312 Mb/s (hierarchy DS-2) for North America and 8.448 Mb/s for Europe. This procedure is continued to obtain higher-level hierarchies.
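The size of the extra control-bit overhead follows directly from the rates quoted above. The short calculation below uses only those numbers; the dictionary labels are descriptive names chosen for this sketch.

```python
VOICE_KBPS = 64  # one digitized voice channel, in kb/s

# (composite rate in kb/s, payload carried by the multiplexed tributaries in kb/s)
levels = {
    "DS-1, North America and Japan (24 voice channels)": (1544, 24 * VOICE_KBPS),
    "first level, Europe (30 voice channels)": (2048, 30 * VOICE_KBPS),
    "DS-2, North America (4 x DS-1)": (6312, 4 * 1544),
    "second level, Europe (4 x first level)": (8448, 4 * 2048),
}


def overhead_kbps(composite_kbps, payload_kbps):
    """Extra bit rate added on top of the multiplexed tributaries."""
    return composite_kbps - payload_kbps


for name, (composite, payload) in levels.items():
    extra = overhead_kbps(composite, payload)
    print(f"{name}: payload {payload} kb/s, overhead {extra} kb/s")
```

With these figures the overhead is 8 kb/s for DS-1 and 128 kb/s for the European first level, and it grows to 136 kb/s and 256 kb/s at the second level.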

The lack of an international standard in the telecommunication industry during the 1980s led to the advent of a new standard, first called the synchronous optical network (SONET) and later termed the synchronous digital hierarchy (SDH). It defines a synchronous frame structure for transmitting TDM digital signals. The basic building block of the SONET has a bit rate of 51.84 Mbit/s. The corresponding optical signal is referred to as OC-1, where OC stands for optical carrier. The basic building block of the SDH has a bit rate of 155.52 Mbit/s and is referred to as STM-1, where STM stands for a synchronous transport module. A useful feature of the SONET and SDH is that higher levels have a bit rate that is an exact multiple of the basic bit rate. Table 8.1 lists the correspondence between SONET and SDH bit rates for several levels. Commercial STM-256 (OC-768) systems operating near 40 Gbit/s became available by 2002. One such optical channel transmits more than half a million telephone conversations over a single optical fiber. If the WDM technique is employed to transmit 100 channels at different wavelengths, one fiber can transport more than 50 million telephone conversations at the same time.
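Because every SONET and SDH level is an exact multiple of its basic rate, the line rates and the telephone-call figures quoted above can be checked with integer arithmetic. The sketch below works in kbit/s to avoid rounding; dividing the raw line rate by 64 kbit/s ignores framing overhead, so the call counts should be read as rough upper bounds rather than exact capacities.

```python
OC1_KBPS = 51_840     # SONET basic rate, 51.84 Mbit/s
STM1_KBPS = 155_520   # SDH basic rate, 155.52 Mbit/s
VOICE_KBPS = 64       # one digitized voice channel


def oc_rate_kbps(n):
    """Line rate of SONET level OC-n in kbit/s."""
    return n * OC1_KBPS


def stm_rate_kbps(m):
    """Line rate of SDH level STM-m in kbit/s."""
    return m * STM1_KBPS


def voice_channel_bound(rate_kbps):
    """Upper bound on 64-kbit/s voice channels, ignoring all overhead."""
    return rate_kbps // VOICE_KBPS


rate = oc_rate_kbps(768)
print(f"OC-768: {rate / 1e6:.5f} Gbit/s, same as STM-256: {rate == stm_rate_kbps(256)}")
print(f"voice channels per wavelength: {voice_channel_bound(rate):,}")
print(f"voice channels with 100 WDM channels: {100 * voice_channel_bound(rate):,}")
```

The result, roughly 622,000 calls per channel and 62 million for 100 WDM channels, agrees with the "more than half a million" and "more than 50 million" figures in the text.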

3 Evolution of Optical Communication from 1975 to 2000

As mentioned earlier, initial development of fiber-optic communication systems started around 1975. The enormous progress realized over the 40-year period extending from 1975 to 2015 can be grouped into several distinct generations. Figure 8.8 shows the increase in the BL product over the period 1975–2000 as quantified through various laboratory experiments [17]. The straight line corresponds to a doubling of the BL product every year. The first four generations of lightwave systems are indicated in Fig. 8.8. In every generation, the BL product increases initially but then begins to saturate as the technology matures. Each new generation brings a fundamental change that helps to improve the system performance further.

Fig. 8.8 Increase in the BL product over the period 1975–2000 through several generations of optical communication systems. Different symbols are used for successive generations (from [2]; ©2010 Wiley)

3.1 The First Three Generations

The first generation of optical communication systems employed inside their optical transmitters GaAs semiconductor lasers operating at a wavelength near 850 nm. The optical bit stream was transmitted through graded-index multimode fibers before reaching an optical receiver, where it was converted back to the electric domain using a silicon photodetector. After several field trials during the period 1977–1979, such systems became available commercially in the year 1980. They operated at a bit rate of 45 Mbit/s and allowed repeater spacings of up to 10 km. The larger repeater spacing compared with 1-km spacing of coaxial systems was an important motivation for system designers because it decreased the installation and maintenance costs associated with each repeater. It is important to stress that even the first-generation systems transmitted nearly 700 telephone calls simultaneously over a single fiber through the use of TDM.

It was evident to system designers that the repeater spacing could be increased considerably by operating the system in the infrared region near 1.3 μm, where fiber losses were below 1 dB/km (see Fig. 8.5). Furthermore, optical fibers exhibit minimum dispersion in this wavelength region. This realization led to a worldwide effort for the development of new semiconductor lasers and detectors based on the InP material and operating near 1.3 μm. The second generation of fiber-optic communication systems became available in the early 1980s, but their bit rate was initially limited to 100 Mbit/s because of dispersion in multimode fibers. This limitation was overcome by the use of single-mode fibers. In such fibers the core diameter is reduced to near 10 μm (see Fig. 8.4) so that the fiber supports a single spatial mode. A laboratory experiment in 1981 demonstrated transmission at 2 Gbit/s over 44 km of a single-mode fiber. The introduction of commercial systems soon followed. By 1987, such second-generation commercial systems were operating at bit rates of up to 1.7 Gbit/s with a repeater spacing of about 50 km.
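As a rough illustration of the BL figure of merit, the bit rates and repeater spacings quoted in this chapter can be multiplied directly. The sketch below pairs the 274-Mbit/s coaxial system with its roughly 1-km spacing, the first-generation 45-Mbit/s system with 10 km, and the 1987 second-generation system with 50 km; the labels are shorthand chosen for this example.

```python
# (label, bit rate B in Mbit/s, repeater spacing L in km), values from the text
systems = [
    ("coaxial cable, 1975", 274, 1),
    ("first generation, 1980", 45, 10),
    ("second generation, 1987", 1700, 50),
]


def bl_product(bit_rate_mbps, spacing_km):
    """Bit rate-distance product in (Mbit/s)-km."""
    return bit_rate_mbps * spacing_km


baseline = bl_product(systems[0][1], systems[0][2])
for label, rate, spacing in systems:
    bl = bl_product(rate, spacing)
    print(f"{label}: BL = {bl:,} (Mbit/s)-km, {bl / baseline:.1f}x the coaxial system")
```

On these numbers the first-generation fiber systems already exceeded the best coaxial system in BL despite a lower bit rate, and the second generation improved on it by a factor of a few hundred.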

The repeater spacing of the second-generation systems was still limited by the fiber loss at their operating wavelength of 1.3 μm. As seen in Fig. 8.5, losses of silica fibers become the smallest at wavelengths near 1.55 μm. However, the introduction of third-generation optical communication systems operating at 1.55 μm was considerably delayed by a relatively large dispersion of single-mode fibers in this spectral region. Conventional InGaAsP semiconductor lasers could not be used because of pulse spreading occurring as a result of simultaneous oscillation of multiple longitudinal modes. This dispersion problem could be solved either by using dispersion-shifted fibers designed to have minimum dispersion near 1.55 μm, or by designing lasers such that their spectrum contained a dominant single longitudinal mode. Both approaches were followed during the 1980s. By 1985, laboratory experiments indicated the possibility of transmitting information at bit rates of up to 4 Gbit/s over distances in excess of 100 km. Third-generation optical communication systems operating at 2.5 Gbit/s became available commercially in 1990, and their bit rate was soon extended to 10 Gbit/s. The best performance was achieved using dispersion-shifted fibers in combination with lasers oscillating in a single longitudinal mode.

The relatively large repeater spacing of the third-generation 1.55-μm systems reduced the need for signal regeneration considerably. However, economic pressures demanded a further increase beyond its value of close to 100 km. It was not immediately obvious how to proceed since losses of silica fibers at 1.55 μm were limited to near 0.2 dB/km by the fundamental process of Rayleigh scattering. One solution was to develop more sensitive optical receivers that could work reliably at reduced power levels. It was realized by many scientists that repeater spacing could be increased by making use of a heterodyne-detection scheme (similar to that used for radio- and microwaves) because its use would require less power at the optical receiver. Such systems were referred to as coherent lightwave systems and were under development worldwide during the 1980s. However, the deployment of such systems was postponed with the advent of fiber amplifiers in 1989 that were pumped optically using semiconductor lasers and were capable of boosting the signal power by a factor of several hundreds.

3.2 The Fourth Generation

By 1990 the attention of system designers shifted toward using three new ideas: (1) periodic optical amplification for managing fiber losses, (2) periodic dispersion compensation for managing fiber dispersion, and (3) WDM for enhancing the system capacity. As seen in Fig. 8.9, the WDM technique employs multiple lasers at slightly different wavelengths such that multiple data streams are transmitted simultaneously over the same optical fiber. The basic idea of WDM was not new as this technique was already being used at the radio- and microwave frequencies (e.g., by the television industry). However, its adoption at optical wavelengths required the development of a large number of new devices over a time span of a few years. For example, optical multiplexers and demultiplexers that could combine and separate individual channels at the two ends of a fiber link were critical components for the advent of the WDM systems.

Fig. 8.9 Schematic of a WDM communication system. Multiple transmitters operating at different wavelengths are combined using a multiplexer and all channels are sent simultaneously over the same optical fiber. A demultiplexer at the receiving end separates individual channels and sends them to different receivers

The fourth generation of optical communication systems made use of optical amplifiers for increasing the repeater spacing, in combination with the WDM technique for increasing the system capacity. As seen in Fig. 8.8, the advent of the WDM technique around 1992 started a revolution that resulted in doubling of the system capacity every 8 months or so and led to lightwave systems operating at a bit rate of 1 Tbit/s by 1996. In most WDM systems, fiber losses are compensated periodically using erbium-doped fiber amplifiers spaced 60–80 km apart. Such WDM systems operating at bit rates of up to 80 Gbit/s were available commercially by the end of 1995. Researchers worldwide were pushing the limit of WDM technology. By 1996, three research groups reported during a post-deadline session of the Optical Fiber Communications conference that they were able to operate WDM systems with a total capacity of more than 1 Tbit/s. This represented an increase in the system capacity by a factor of 400 over a period of just 6 years!
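The two growth figures in this paragraph, a factor of 400 in 6 years and a doubling roughly every 8 months, can be checked against each other. The sketch below assumes smooth exponential growth over the whole period, which is an idealization; the factor of 400 is consistent with going from the 2.5-Gbit/s systems of 1990 to 1 Tbit/s in 1996.

```python
import math


def doubling_time_months(growth_factor, years):
    """Doubling time implied by a total growth factor over a number of years."""
    return 12 * years / math.log2(growth_factor)


def growth_factor(doubling_months, years):
    """Total growth after the given number of years at a fixed doubling time."""
    return 2 ** (12 * years / doubling_months)


print(f"factor of 400 in 6 years -> doubling every {doubling_time_months(400, 6):.1f} months")
print(f"doubling every 8 months for 6 years -> factor of {growth_factor(8, 6):.0f}")
```

A factor of 400 over 6 years implies a doubling time of about 8.3 months, while an exact 8-month doubling would give a factor of 512, so the two statements agree to within the precision of "8 months or so".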

The emphasis of most WDM systems is on transmitting as many optical channels as possible over a single fiber by adding more and more lasers operating at different wavelengths. The frequency spacing between two neighboring channels is chosen to be as small as possible but it has to be larger than the bandwidth of each channel. At a bit rate of 40 Gbit/s, a channel spacing of 50 GHz is the smallest that can be used. The standards-setting agency, ITU, has assigned a set of fixed frequencies for commercial WDM systems using this 50-GHz channel spacing. All these frequencies lie in the wavelength region near 1550 nm where fiber losses are the smallest. The wavelength range of 1530–1570 nm is called the C band (C standing for conventional), and most commercial WDM systems are designed to work in this band. However, the S and L bands lying on the short- and long-wavelength side of the C band, respectively, are also used if necessary. This approach led in 2001 to an 11-Tbit/s experiment in which 273 channels, each operating at 40 Gbit/s, were transmitted over a distance of 117 km [18]. Given that the first-generation systems had a capacity of 45 Mbit/s in 1980, it is remarkable that the use of WDM increased the system capacity by a factor of more than 200,000 over a period of 21 years.
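The capacity figures in this paragraph follow from simple multiplication, as the short check below shows. It uses only the channel count, per-channel rate, and channel spacing quoted above; the spectral-efficiency line is an added illustration of what a 40-Gbit/s channel on a 50-GHz grid implies.

```python
def wdm_capacity_gbps(n_channels, channel_rate_gbps):
    """Total capacity of a WDM system with identical channels, in Gbit/s."""
    return n_channels * channel_rate_gbps


capacity = wdm_capacity_gbps(273, 40)     # 2001 experiment
first_generation_mbps = 45                # commercial systems of 1980
improvement = capacity * 1000 / first_generation_mbps

# Bits per second carried per hertz of spacing for a 40-Gbit/s channel on a 50-GHz grid.
spectral_efficiency = 40 / 50

print(f"total capacity: {capacity / 1000:.2f} Tbit/s")
print(f"improvement over 45 Mbit/s: {improvement:,.0f}x")
print(f"spectral efficiency: {spectral_efficiency} bit/s/Hz")
```

The total of 10.92 Tbit/s rounds to the 11 Tbit/s quoted, and the ratio of about 243,000 matches the "more than 200,000" in the text.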

3.3 Bursting of the Telecom Bubble in 2000

It should be clear from Fig. 8.8 and the preceding discussion that WDM, as adopted during the fourth generation of optical communication systems, was a disruptive technology. Fortunately, its adoption coincided with the advent and commercialization of the Internet around 1994. Just as the explosive growth of websites all around the world increased the volume of data flowing through the telecommunication networks, the fourth generation of optical communication systems making use of the WDM technology became available. Its advent allowed telecom operators to manage the data traffic by simply adding more channels to an existing WDM system. The demand went through the roof during the 4-year span of 1996–2000, and it led to a stock-market bubble that is now referred to as the telecom bubble.

The formation of the telecom bubble was the result of a rapid growth after 1995 in the telecommunication business. The stocks of companies dealing with the manufacturing and delivery of telecom services soared after 1995. As an example, consider the company JDS–Fitel, which sold various optical devices needed for telecom systems. Its stock value was around $8 in June 1994, jumped to near $20 in June 1995, and exceeded $70 in June 1996 when the stock was split by 2:1 to bring the price near $35. The stock was split again in November 1997 when its price doubled a second time. In early 1999 the company announced a merger with Uniphase, another fast-growing optics company, resulting in the formation of JDSU. During that year, the stock of JDSU was increasing so rapidly that it was split two more times. Figure 8.10 shows how the stock price of JDSU varied over the 8-year period ranging from 1996 to 2004 after taking into account multiple splits. A nearly exponential growth during 1999 indicates the formation of the telecom bubble during that year. A similar growth occurred in the stock price of many other telecommunication and Internet companies.

The telecom bubble burst during the year 2000, and the stock prices of all telecommunication companies collapsed soon after, including that of JDSU as seen in Fig. 8.10. Several companies went out of business and many of the survivors were in financial trouble. Commercial WDM systems capable of operating at 1 Tbit/s were still being sold, but there were no buyers for them. The research and development of fiber-optic communications systems slowed down to a crawl as everyone waited for the revival of the telecom industry. It took nearly 5 years before the US economy recovered, only to crash again in August 2008 owing to the formation of another bubble, this time in the real-estate market. One can say that the decade of 2000–2009 was not a kind one as far as the telecommunication industry is concerned.

4 The Fifth Generation

In spite of the two severe economic downturns, considerable progress has occurred since 2000 in designing advanced optical communication systems, leading to the fifth and sixth generations of such systems. The focus of fifth-generation systems was on making the WDM systems more efficient spectrally. This was accomplished by reviving the coherent detection scheme that was studied in the late 1980s but abandoned soon after fiber-based optical amplifiers became available. Coherent receivers capable of detecting both the amplitude and phase of an optical signal through a heterodyne scheme were developed soon after the year 2000. Their commercial availability near the end of the decade allowed system designers to employ advanced modulation formats in which information is encoded using both the amplitude and phase of an optical carrier.

The basic concept can be understood from Fig. 8.11, showing four modulation formats using the so-called constellation diagram that displays the real and imaginary parts of the complex electric field along the x and y axes, respectively. The first configuration represents the standard binary format, called amplitude-shift keying (ASK), in which the amplitude or intensity of the electric field takes two values, marked by circles and representing 0 and 1 bits of a digital signal. The second configuration is another binary format, called phase-shift keying (PSK), in which the amplitude remains constant but the phase of the electric field takes two values, say 0 and π, that represent the 0 and 1 bits of a digital signal. The third configuration in part (c) of Fig. 8.11 shows the quaternary PSK (or QPSK) format in which the optical phase takes four possible values. This format reduces the signal bandwidth because two bits are transmitted during each time slot, so the effective bit rate is halved. Borrowing from microwave communication terminology, the reduced bit rate is called the symbol rate (or baud). The last example in Fig. 8.11 shows how the symbol concept can be extended to multilevel signaling such that each symbol carries 4 bits or more. An additional factor of two can be gained if one transmits two orthogonally polarized symbols simultaneously during each symbol slot, a technique referred to as polarization-division multiplexing.
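The relation between the number of constellation points, the bits carried per symbol, and the symbol rate can be illustrated with a short script. It is a sketch under stated assumptions: the function names are hypothetical, the 40-Gbit/s bit rate is chosen only for illustration, and a 16-point constellation is assumed as one example of a format carrying 4 bits per symbol.

```python
import math


def bits_per_symbol(constellation_points: int, polarizations: int = 1) -> float:
    """Bits carried in one symbol slot: log2(points) per polarization."""
    return polarizations * math.log2(constellation_points)


def symbol_rate_baud(bit_rate_bps: float, constellation_points: int,
                     polarizations: int = 1) -> float:
    """Symbol rate needed to carry a given bit rate."""
    return bit_rate_bps / bits_per_symbol(constellation_points, polarizations)


BIT_RATE = 40e9  # illustrative assumption, not a value tied to Fig. 8.11

# Number of constellation points for each format (16 points is an assumed example).
formats = {"ASK": 2, "PSK": 2, "QPSK": 4, "16-point multilevel": 16}

for name, points in formats.items():
    single = symbol_rate_baud(BIT_RATE, points)
    dual = symbol_rate_baud(BIT_RATE, points, polarizations=2)
    print(f"{name:>20}: {bits_per_symbol(points):.0f} bit/symbol, "
          f"{single / 1e9:.1f} Gbaud (single pol.), {dual / 1e9:.1f} Gbaud (dual pol.)")
```

For QPSK the symbol rate is half the bit rate, and adding the second polarization halves it again, which is the factor of two mentioned above.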

The concept of spectral efficiency, defined as the number of bits transmitted in 1 s within a 1-Hz bandwidth, is quite useful in understanding the impact of coherent detection in combination with phase-encoded modulation formats. The spectral efficiency of fourth-generation WDM systems that employed ASK as the modulation format was limited to below 0.8 bit/s/Hz since at most 40 billion bits/s could be transmitted over a 50-GHz bandwidth of each WDM channel. This value for the fifth-generation systems can easily exceed 3 bit/s/Hz by using polarization multiplexing in combination with the QPSK format. Figure 8.12 shows how the spectral efficiency of optical communication systems has evolved since 1990 when its value was near 0.05 bit/s/Hz. Values near 2 bit/s/Hz were realized by 2005 and they approached 10 bit/s/Hz by the year 2010 [19].
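The definition of spectral efficiency can be turned into a small calculation. The sketch below uses hypothetical function names; the 40-Gbit/s rate, the 50-GHz spacing, and the 3-bit/s/Hz target are the values quoted above, while the per-channel bit rate it prints for that target follows from the definition alone and is not a figure given in the text.

```python
def spectral_efficiency(bit_rate_bps: float, channel_spacing_hz: float) -> float:
    """Spectral efficiency in bit/s/Hz for one WDM channel."""
    return bit_rate_bps / channel_spacing_hz


def required_bit_rate_bps(target_se: float, channel_spacing_hz: float) -> float:
    """Per-channel bit rate needed to reach a target spectral efficiency."""
    return target_se * channel_spacing_hz


# Fourth-generation ASK channel: 40 Gbit/s inside a 50-GHz slot.
se_ask = spectral_efficiency(40e9, 50e9)

# Bit rate a 50-GHz channel must carry to reach 3 bit/s/Hz.
rate_for_se3 = required_bit_rate_bps(3.0, 50e9)

print(f"ASK at 40 Gbit/s over 50 GHz: {se_ask:.1f} bit/s/Hz")
print(f"Rate needed for 3 bit/s/Hz over 50 GHz: {rate_for_se3 / 1e9:.0f} Gbit/s")
```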

Evolution of spectral efficiency after 1990 through laboratory demonstrations. The red star shows the fundamental capacity limit of optical fibers (after [19]; ©2012 IEEE)

The availability of coherent receivers and increased computing speeds led to another advance after it was realized that one can use digital signal processing to improve the signal-to-noise ratio (SNR) of an optical signal arriving at the receiver. Since a coherent receiver detects both the amplitude and the phase of an optical signal, together with its state of polarization, one has in essence a digital representation of the electric field associated with the optical signal. As a result, special electronic chips can be designed to process this digital signal and compensate for the degradation caused by such unavoidable factors as fiber dispersion. One can also implement error-correcting codes and employ encoder and decoder chips to improve the bit-error rate at the receiver end. A new record was set in 2011 when 64-Tbit/s transmission was realized over 320 km of a single-mode fiber using 640 WDM channels that spanned both the C and L bands with 12.5-GHz channel spacing [20]. Each channel contained two polarization-multiplexed 107-Gbit/s signals coded with a modulation format known as quadrature amplitude modulation. Such techniques are routinely implemented in modern optical communication systems.

5 The Sixth Generation

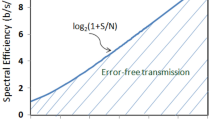

As the capacity of WDM systems approached 10 Tbit/s, indicating that 10 trillion bits could be transmitted each second over a single piece of optical fiber supporting a single optical mode inside its tiny core (diameter about 10 μm), scientists began to think about the ultimate information capacity of a single-mode fiber. The concept of the channel capacity C was first introduced by Shannon in a 1948 paper [21] in which he showed that the SNR sets the fundamental limit for any linear communication channel with a finite bandwidth W through the remarkably simple relation \( C = W \log_2\left(1+\mathrm{SNR}\right) \). The spectral efficiency, defined as \( \mathrm{SE} = C/W \), is thus only limited by the SNR of the received signal and can, in principle, be increased indefinitely by sending more and more powerful signals over the channel. Unfortunately, this conclusion does not hold for optical fibers that are inherently nonlinear and affect the bit stream propagating through them in a nonlinear fashion [2].
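Shannon's relation for a linear channel is simple enough to evaluate directly. The following sketch uses hypothetical function names, and the SNR values of 10, 20, and 30 dB are illustrative assumptions; it shows only the linear-channel prediction and does not model the fiber nonlinearity discussed next.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert an SNR expressed in decibels to a linear power ratio."""
    return 10.0 ** (snr_db / 10.0)


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon capacity C = W * log2(1 + SNR) of a linear channel."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


def shannon_spectral_efficiency(snr_linear: float) -> float:
    """Spectral efficiency SE = C / W in bit/s/Hz."""
    return math.log2(1.0 + snr_linear)


# Illustrative SNR values (assumptions, not taken from the text).
for snr_db in (10, 20, 30):
    se = shannon_spectral_efficiency(db_to_linear(snr_db))
    print(f"SNR = {snr_db} dB -> SE = {se:.2f} bit/s/Hz")
```

In this linear model the spectral efficiency keeps growing with SNR, by roughly 3.3 bit/s/Hz for every additional 10 dB once the SNR is large.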

5.1 Capacity Limit of Single-Mode Fibers

Considerable attention was paid during the 2000s to estimating the ultimate capacity of single-mode fibers in the presence of various nonlinear effects. In a paper published in 2010, Essiambre et al. developed a general formalism for calculating it [22]. Figure 8.13 shows how the nonlinear effects reduce the spectral efficiency from its value predicted by Shannon’s relation, when high signal powers are launched to ensure a high SNR at the receiver. As one may expect, the spectral efficiency depends on the transmission distance, and it becomes worse as this distance increases. However, the most noteworthy feature of Fig. 8.13 is that, for any transmission distance, spectral efficiency is maximum at an optimum value of SNR that changes with distance. For example, the spectral efficiency of a 1000-km-long link is limited to 8 bit/s/Hz for single polarization, irrespective of the modulation format employed. This is in sharp contrast to the prediction of Shannon and reflects a fundamental limitation imposed by the nonlinear effects.

Spectral efficiency as a function of SNR calculated numerically including the nonlinear effects over transmission distances ranging from 500 to 8000 km (after [22]; ©2010 IEEE)

We can use the results shown in Fig. 8.13 to estimate the ultimate capacity of a single-mode fiber. The usable bandwidth of silica fibers in the low-loss window centered around 1550 nm is about 100 nm. This value translates into a channel bandwidth of 12.5 THz. Using this value and a peak spectral efficiency of about 16 bit/s/Hz (assuming polarization-division multiplexing), the maximum capacity of a single-mode fiber is estimated to be 200 Tb/s. This is an enormous number, and until recently it was thought to be high enough that system designers did not worry about running out of capacity. However, data traffic over fiber-optic networks has experienced a steady growth, doubling every 18 months, since the advent of the Internet in the early 1990s. The growth has even accelerated in recent years owing to new activities such as video streaming. One way to meet the demand would be to deploy more and more fiber cables. However, this approach will result in a larger and larger fraction of the total electrical power being devoted to supporting optical transport networks. It is estimated that by 2025 the energy demand of modern telecommunication systems will consume a very large fraction of the total US energy budget, unless a way is found to design energy-efficient optical networks.
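The conversion from a 100-nm wavelength window to a frequency bandwidth, and the resulting capacity estimate, can be reproduced as follows. This is a sketch with a hypothetical function name; it assumes the usual first-order relation Δν ≈ cΔλ/λ², which is adequate when the window is narrow compared with the center wavelength.

```python
C_LIGHT = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_span_to_bandwidth_hz(center_wavelength_m: float, span_m: float) -> float:
    """First-order conversion of a wavelength span to a frequency bandwidth."""
    return C_LIGHT * span_m / center_wavelength_m ** 2


# Values quoted in the text: a 100-nm window centered near 1550 nm.
bandwidth_hz = wavelength_span_to_bandwidth_hz(1550e-9, 100e-9)

# Peak spectral efficiency quoted in the text (with polarization-division multiplexing).
PEAK_SE = 16.0  # bit/s/Hz

max_capacity_bps = PEAK_SE * bandwidth_hz

print(f"Usable bandwidth: {bandwidth_hz / 1e12:.2f} THz")
print(f"Estimated maximum capacity: {max_capacity_bps / 1e12:.0f} Tbit/s")
```

The script gives a bandwidth close to 12.5 THz and a capacity close to 200 Tbit/s, in agreement with the rounded values used above.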

5.2 Space-Division Multiplexing

One proposed solution makes use of space-division multiplexing (SDM) to increase the capacity of fiber-optic communication networks at a reduced energy cost per transmitted bit [23–26]. The basic idea is to employ multimode fibers such that several WDM bit streams can be transmitted over different modes of the same fiber. The energy advantage comes from integrating the functionalities of key optical components into a smaller number of devices. For instance, if a single multimode optical amplifier is used to amplify all spatially multiplexed bit streams, power consumption is likely to be lower compared to using separate amplifiers. For this reason, the SDM technique has attracted increasing attention since 2010, and several record-setting experiments have already been performed. Most of them employ multicore fibers in which several cores share the same cladding. Each core is typically designed to support a single mode, but that is not a requirement. Figure 8.14 shows schematically the basic idea behind SDM using the case of a three-core fiber as an example.

Similar to the case of WDM technology, the implementation of SDM requires not only new types of fibers but also many other active and passive optical components such as mode multiplexers/demultiplexers and fiber amplifiers that can amplify signals in all modes/cores simultaneously. A lot of progress has been made since 2010 in realizing such devices and many laboratory demonstrations have shown the potential of SDM for enhancing the system capacity [23–26]. Figure 8.15 shows how the capacity of optical communication systems has evolved over a period ranging from 1980 to 2015 and covering all six generations. Single-wavelength systems, employing TDM in the electrical domain, started with a capacity of under 100 Mbit/s in the 1980s and were operating at 10 Gb/s around 1990. The advent of WDM in the early 1990s led to a big jump in the system capacity, and subsequent adoption of coherent detection with digital signal processing allowed the capacity to reach 64 Tbit/s by the year 2010 [20]. Further increase in system capacity required the adoption of SDM. In a 2012 experiment, SDM was used to demonstrate data transmission at 1000 Tbit/s (or 1 Pbit/s) by employing a 12-core fiber [24]. Each fiber core carried 222 WDM channels, and each wavelength transmitted a 380-Gbit/s bit stream over a 52-km-long multicore fiber with a spectral efficiency of 7.6 bit/s/Hz.
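The 1-Pbit/s figure follows directly from multiplying the three numbers quoted for the 2012 experiment. The short sketch below uses a hypothetical function name and assumes that every core carries the same number of identical channels.

```python
def sdm_capacity_bps(num_cores: int, channels_per_core: int,
                     channel_rate_bps: float) -> float:
    """Aggregate capacity of a multicore fiber with identical WDM channels in each core."""
    return num_cores * channels_per_core * channel_rate_bps


# Values quoted in the text for the 2012 experiment [24].
total_bps = sdm_capacity_bps(num_cores=12, channels_per_core=222, channel_rate_bps=380e9)

print(f"Aggregate capacity: {total_bps / 1e15:.2f} Pbit/s")
```

The product is slightly above 1 Pbit/s, which matches the 1.01-Pb/s value in the title of Ref. [24].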

The simplest SDM case corresponds to a multicore fiber whose cores are far enough apart that they experience little coupling. In this situation, WDM signals in each core travel independently, and the situation is analogous to using separate fibers. Indeed, most high-capacity experiments have employed this configuration through multicore fibers with 7, 12, or 19 cores. In a second category of experiments, single-core fibers supporting a few spatial modes are employed [23]. In this case, modes invariably become coupled, both linearly and nonlinearly, since all channels share the same physical path. Degradations induced by linear coupling are then removed at the receiver end through digital signal processing. In a 2015 experiment, a fiber supporting 15 spatial modes was used to transmit 30 polarization-multiplexed channels over 23 km [27].

6 Worldwide Fiber-Optic Communication Network

The advent of the Internet in the early 1990s made it necessary to develop a worldwide network capable of connecting all computers (including cell phones) in a transparent manner. Such a network required deployment of fiber-based submarine cables across all oceans. The first such cable was installed in 1988 across the Atlantic Ocean (TAT–8), but it was designed to operate at only 280 Mbit/s using the second-generation technology. The same technology was used for the first transpacific fiber-optic cable (TPC–3), which became operational in 1989. By 1990 the third-generation lightwave systems had been developed. The TAT–9 submarine system used this technology in 1991; it was designed to operate near 1.55 μm at a bit rate of 560 Mb/s with a repeater spacing of about 80 km. The increasing traffic across the Atlantic Ocean led to the deployment of the TAT–10 and TAT–11 cables by 1993 with the same technology. A submarine cable must be strong enough to withstand bites from large sea animals. Figure 8.16 shows, as an example, the internal structure of a submarine cable containing several fibers for carrying bidirectional traffic. Optical fibers are immersed in a water-resistant jelly that is surrounded with many steel rods to provide strength. The steel rods are kept inside a copper tube that is itself covered with insulating polyethylene. As the scale on the right side of Fig. 8.16 shows, the outer diameter of the entire cable is still only 1.7 cm.

After 1990, many laboratory experiments investigated whether the amplifier technology could be deployed for submarine cables such that the signal retained its optical form all along the cable length, thus avoiding the use of expensive in-line regenerators. As early as 1991, an experiment employed a recirculating-loop configuration to demonstrate the possibility of data transmission in this manner over 14,300 km at 5 Gbit/s. This experiment indicated that an all-optical, submarine transmission system was feasible for intercontinental communication. The TAT–12 cable, installed in 1995, employed optical amplifiers in place of in-line regenerators and operated at a bit rate of 5.3 Gbit/s with an amplifier spacing of about 50 km. The actual bit rate was slightly larger than the data rate of 5 Gbit/s because of the overhead associated with the forward-error correction that was necessary for the system to work. The design of such lightwave systems becomes quite complex because of the cumulative effects of fiber dispersion and nonlinearity, which must be controlled over long distances.
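The size of the overhead on TAT–12 follows from the two rates quoted above. The sketch below uses a hypothetical function name and, following the text, attributes the entire difference between the line rate and the data rate to forward-error correction.

```python
def overhead_fraction(line_rate_bps: float, data_rate_bps: float) -> float:
    """Fraction of extra bits transmitted on the line relative to the payload data rate."""
    return line_rate_bps / data_rate_bps - 1.0


# Values quoted in the text for TAT-12.
tat12_overhead = overhead_fraction(line_rate_bps=5.3e9, data_rate_bps=5.0e9)

print(f"Overhead relative to the data rate: {tat12_overhead:.1%}")
```

The overhead comes to about 6% of the data rate.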

International submarine cable network of fiber-optic communication systems around 2015 (source: dataset encoded by Greg Mahlknecht, http://www.cablemap.info)

The use of the WDM technique after 1996 in combination with optical amplifiers, dispersion management, and error correction revolutionized the design of submarine fiber-optic systems. In 1998, a submarine cable known as AC–1 was deployed across the Atlantic Ocean with a capacity of 80 Gb/s using the WDM technology. An identically designed system (PC–1) crossed the Pacific Ocean. The use of dense WDM, in combination with multiple fiber pairs per cable, resulted in systems with large capacities. After 2000, several submarine systems with a capacity of more than 1 Tbit/s became operational (see Table 8.2). Figure 8.17 shows the international submarine cable network of fiber-optic communication systems. The VSNL transatlantic submarine system installed in 2001 had a total capacity of 2.56 Tbit/s and spanned a total distance of 13,000 km. A submarine system, known as India-ME-WE and installed in 2009, is capable of operating bidirectionally at 3.84 Tb/s through four fiber pairs. By 2012, submarine systems with a total capacity of 5 Tbit/s for traffic in each direction became operational. A proposed system, known as Arctic Fiber, will be capable of carrying traffic at speeds of up to 8 Tbit/s by transmitting 50 channels (each operating at 40 Gb/s) over four fiber pairs. It is estimated that more than 400 million kilometers of optical fiber have already been deployed worldwide, a number that is close to three times the distance to the Sun.

7 Conclusions

This chapter began with a brief history of optical communication before describing the main components of a modern optical communication system. Specific attention was paid to the development of low-loss optical fibers as they played an essential role after 1975. I described in detail the evolution of fiber-optic communication systems through its six generations over a 40-year time period ranging from 1975 to 2015. I also discussed how the adoption of WDM during the 1990s was fueled by the advent of the Internet and how it eventually led in 2000 to the bursting of the telecom bubble in the stock markets worldwide. However, the telecommunication industry recovered by 2005, and researchers have come up with new techniques during the last 10 years. Recent advances brought by digital coherent technology and space-division multiplexing were also described briefly. Figure 8.17 shows the international submarine cable network of fiber-optic communication systems that allows the Internet to operate transparently, interconnecting computers worldwide on demand. Such a global high-speed network would not have been possible without the development of fiber-optic communication technology during the 1980s and the adoption of the WDM technique during the decade of the 1990s. One can only wonder what the future holds, especially if the potential of the SDM technology is realized by the year 2020.

References

Huurdeman AA (2003) The worldwide history of telecommunications. Wiley, Hoboken, NJ

Agrawal GP (2010) Fiber-optic communication systems, 4th edn. Wiley, Hoboken, NJ

Chappe I (1824) Histoire de la télégraphie (in French). University of Michigan Library

Holzmann GJ, Pehrson B (2003) The early history of data networks. Wiley, Hoboken, NJ

Jones A (1852) Historical sketch of the electrical telegraph. Putnam, New York

Bell AG (1876) Improvement in telegraphy, U.S. Patent No. 174,465

Pratt WK (1969) Laser communication systems. Wiley, Hoboken, NJ

Miller SE (1966) Communication by laser. Sci Am 214:19–25

Hecht J (1999) City of light: the story of fiber optics. Oxford University Press, New York

Kao KC, Hockham GA (1966) Dielectric-fiber surface waveguides for optical frequencies. Proc IEE 113:1151–1158

http://www.nobelprize.org/nobel_prizes/physics/laureates/2009/

Kapron FP, Keck DB, Maurer RD (1970) Radiation losses in glass optical waveguides. Appl Phys Lett 17:423–425

Miya T, Terunuma Y, Hosaka T, Miyoshita T (1979) Ultimate low-loss single-mode fiber at 1.55 μm. Electron Lett 15:106–108

Alferov Z (2000) Double heterostructure lasers: early days and future perspectives. IEEE J Sel Top Quant Electron 6:832–840

Hayashi I, Panish MB, Foy PW, Sumski S (1970) Junction lasers which operate continuously at room temperature. Appl Phys Lett 17:109–111

Willner AE (ed.) (2000) Several historical articles in this millennium issue cover the development of lasers and optical fibers. IEEE J Sel Top Quant Electron 6:827–1513

Kogelnik H (2000) High-capacity optical communications: personal recollections. IEEE J Sel Top Quant Electron 6:1279–1286

Fukuchi K, Kasamatsu T, Morie M, Ohhira R, Ito T, Sekiya K, Ogasahara D, Ono T (2001) 10.92-Tb/s (273 × 40-Gb/s) triple-band/ultra-dense WDM optical-repeatered transmission experiment. In: Proceedings of the Optical Fiber Communication (OFC) conference, paper PD24

Essiambre R-J, Tkach RW (2012) Capacity trends and limits of optical communication networks. Proc IEEE 100:1035–1055

Zhou X et al (2011) 64-Tb/s, 8 b/s/Hz, PDM-36QAM transmission over 320 km using both pre- and post-transmission digital signal processing. J Lightwave Technol 29:571–577

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27:379–423

Essiambre R-J, Kramer G, Winzer PJ, Foschini GJ, Goebel B (2010) Capacity limits of optical fiber networks. J Lightwave Technol 28:662–701

Ryf R et al (2012) Mode-division multiplexing over 96 km of few-mode fiber using coherent 6 × 6 MIMO processing. J Lightwave Technol 30:521–531

Takara H et al (2012) 1.01-Pb/s crosstalk-managed transmission with 91.4-b/s/Hz aggregated spectral efficiency. In: Proceedings of the European conference on optical communications, paper Th3.C.1

Richardson DJ, Fini JM, Nelson LE (2013) Space-division multiplexing in optical fibres. Nat Photon 7:354–362

Li G, Bai N, Zhao N, Xia C (2014) Space-division multiplexing: the next frontier in optical communication. Adv Opt Photon 6:413–487

Fontaine NK et al (2015) 30 × 30 MIMO transmission over 15 spatial modes. In: Proceedings of the Optical Fiber Communication (OFC) conference, post-deadline paper Th5C.1

Acknowledgements

The author thanks Dr. R.-J. Essiambre of Alcatel–Lucent Bell Laboratories for helpful suggestions. The financial support of US National Science Foundation is also gratefully acknowledged.

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, duplication, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, a link is provided to the Creative Commons license and any changes made are indicated.

The images or other third party material in this chapter are included in the work’s Creative Commons license, unless indicated otherwise in the credit line; if such material is not included in the work’s Creative Commons license and the respective action is not permitted by statutory regulation, users will need to obtain permission from the license holder to duplicate, adapt or reproduce the material.

Copyright information

© 2016 The Author(s)

About this chapter

Cite this chapter

Agrawal, G.P. (2016). Optical Communication: Its History and Recent Progress. In: Al-Amri, M., El-Gomati, M., Zubairy, M. (eds) Optics in Our Time. Springer, Cham. https://doi.org/10.1007/978-3-319-31903-2_8

DOI: https://doi.org/10.1007/978-3-319-31903-2_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-31902-5

Online ISBN: 978-3-319-31903-2

eBook Packages: Physics and Astronomy (R0)