Abstract

We propose a practical method for calibrating the position and the Radiant Intensity Distribution (RID) of light sources from images of Lambertian planes. In contrast with existing techniques that rely on the presence of specularities, we prove a novel geometric property of the brightness of Lambertian planes that allows the illuminant parameters to be calibrated robustly, without the detrimental effects of view-dependent reflectance and with a large reduction in complexity. We further derive closed-form solutions for the position and RID of common types of light sources. The proposed method can be seamlessly integrated within the camera calibration pipeline, and its validity is demonstrated against the state of the art on both synthetic and real data.




1 Introduction

The problem of devising a usable, accurate calibration routine for the illumination properties of a scene is becoming increasingly relevant. As techniques for 3D reconstruction become more accurate, the focus of the community has shifted towards more complex scenarios where many of the traditional lighting assumptions are no longer valid, e.g., 3D reconstruction from hand-held devices with on-board lighting [4] or from endoscopic images, which are becoming increasingly popular in the community [6]. In these cases, anisotropic lighting combined with unstable or scarce features makes traditional feature-based methods prone to errors. On the other hand, especially in medical environments, there has been a resurgence of photometric techniques such as Shape-from-Shading (SFS) and Photometric Stereo (PS) [1, 2, 7, 20, 22, 26], owing to their inherent suitability to textureless environments.

However, improvements in algorithmic and mathematical techniques have not been matched by a commensurate improvement in modelling, and in virtually all of the aforementioned scenarios realistic modelling of the light sources has been largely ignored. Indeed, the SFS and PS literature considers either directional [10, 23] or ‘omnilight’ [20, 22, 26] light sources. While these models can perform well in controlled conditions, they are not suitable for the challenging environments where these techniques are now being applied, in which focused lights exhibiting anisotropic Radiant Intensity Distributions (RID) are placed close to the surface. This is also due to a lack of enabling technologies that allow the estimation of illumination parameters to be easily integrated within the standard camera calibration pipeline, as most existing approaches require costly and time-intensive hardware solutions [14, 16].

Specifically, in this work we address the lack of cheap, practical vision-based techniques for RID calibration by introducing a fully image-based approach to calibration of light position and RID that can be easily integrated in camera calibration routines. Inspired by the first approach to the problem in [11], we extend it with the following contributions:

1. A novel geometric property of shading of Lambertian planes, which relates the light position to global maxima in the intensity.
2. An algebraic method for robustly finding the dominant light axis from global maxima in the intensity only, without the need for complex symmetry search as in [11].
3. Closed-form solutions for position and RID for classes of (an-)isotropic sources.
4. A complete pipeline for joint camera, light position and RID calibration using standard Lambertian calibration boards.

1.1 Related Work

Calibrating the position of a light source is a well-studied problem in computer vision. For distant light sources, all light rays are parallel and it is therefore sufficient to estimate a single direction vector, which can be accomplished from the specular highlights produced on shiny surfaces [3, 25]. For point light sources, where the assumption of parallel light rays is no longer valid and the light position in space must therefore be estimated, current strategies still employ reflective objects of known geometry such as spheres [13, 15, 18, 27] or planes [17], or non-reflective objects of known geometry [24]. Alternative methods requiring specialised hardware such as LEDs and translucent screens [9] or flatbed scanners [19] have also been proposed. Sources with anisotropic RIDs have recently been used in PS for generic vision [8] as well as in medical endoscopy applications [2, 21]; however, the cited works propose either no calibration method or an ad hoc one.

While the approaches above only characterise the positional information of the light source, only a limited number of techniques investigate RID calibration, i.e., determining the angle-dependent attenuation factor arising from the anisotropy of the light source. The only vision-based approach in the literature was proposed by Park et al. [11]. In this method, geometric properties holding under the Lambertian assumption are exploited to calibrate the light source. However, the method is effectively a two-stage approach in which the light position is first estimated using specularities from shiny surfaces, thus contradicting the initial Lambertian assumption. When only Lambertian surfaces were considered, the method was shown to have large errors in the position estimate. The contradiction between the Lambertian assumption and the use of shiny surfaces can also cause the assumptions to break down, depending on the light/camera configuration [12]. Finally, from a computational perspective the method relies on the symmetry of the projected light pattern, which on Lambertian surfaces leads to a sensitive optimisation requiring a full 2D search.

In contrast, we improve on the state of the art by proving an additional geometric property of the shading of Lambertian planes. This allows the dominant light axis to be found by inspecting the global intensity maxima, without expensive symmetry searches. Moreover, we derive closed-form solutions for position and RID for widely used classes of realistic light sources. Finally, we show how the proposed method can be easily integrated within the camera calibration pipeline by using AR markers instead of the traditional checkerboard pattern.

(a) Geometric setup under consideration. The Lambertian plane \(\varPi \) with surface normal \(\varvec{n}\) lit by the point light source L is projected by the camera C on the image plane I. Each 3D point \(M \in \varPi \) is projected to a point \(m \in I\), and is related to L by the light vector \(\varvec{l}\). Two special points are defined on \(\varPi \): V, which is the intersection of the dominant light axis \(\varvec{v}\) with \(\varPi \), and W, which is the closest point to L. The triplet (V, W, L) defines the plane \(\varSigma \), which intersects \(\varPi \) at the line \(\varvec{s}\). (b) Supporting figure for the proof of Lemma 1.

2 Shading of Lambertian Planes Under Near Illumination

In this section, we analyse the reflectance properties of textureless Lambertian planes under near illumination from point light sources. Let \(\varPi \) be a plane in space with known position and orientation \(( \varvec{R}, \varvec{t})\) and unit surface normal \(\hat{\varvec{n}} = (n_x,n_y,n_z)\), illuminated by a nearby point light source L. The light source is located at position \(\varvec{a}=(a_x,a_y,a_z)\) relative to the world origin, which in our formulation coincides with the position of the camera C. The light source is characterised by a dominant unit direction vector \(\hat{\varvec{v}}\), and the light vector between a 3D point \(M = (x,y,z)\in \varPi \) and L is denoted by \(\varvec{l}\). In our notation, 3D points are given as uppercase letters, 2D points as lowercase letters, direction vectors as boldface lowercase letters, matrices as boldface uppercase letters and scalars as Greek letters.

This configuration is shown graphically in Fig. 1a. Under a generic Lambertian reflectance model with an infinitely distant light source, the brightness at pixel (u, v) in the captured image I corresponding to the projection of M is independent of the viewing direction:

\[ I(u,v) = \rho \, \gamma \, \hat{\varvec{n}}\cdot \hat{\varvec{l}} \qquad (1) \]

where \(\rho \) is the surface albedo and \(\gamma \) is the power of the light incident on the surface. This is the scenario traditionally considered in the photometric stereo literature for Lambertian surfaces. However, when a more realistic setup is considered, the factors related to the nearby light source can no longer be ignored. More specifically, while for infinitely distant light sources two light rays \(\varvec{l}_i,\varvec{l}_j\) would be parallel, here they are no longer parallel and intersect at L. Likewise, the incident light power \(\gamma \) is replaced by the Radiant Intensity Distribution (RID) function \(g(\phi )\) of the angle \(\phi \) between the normalised light vector \(\hat{\varvec{l}}\) and the dominant light direction. Finally, the attenuation term \(r^2 = \Vert \varvec{l}\Vert ^2\) models the light fall-off, which is inversely proportional to the square of the distance between M and L:

\[ I(u,v) = \rho \, g(\phi )\, \frac{\hat{\varvec{n}}\cdot \hat{\varvec{l}}}{\Vert \varvec{l}\Vert ^2} \qquad (2) \]

In the equation above, the shape of \(g(\phi )\) is determined by the physical characteristics of the light source. Generally, focused lights such as those found on camera flashes, spotlights and endoscopes exhibit some degree of anisotropy. Fig. 2 shows examples of common RIDs. As in [11], our single assumption is that the RID is radially symmetric about the dominant light axis, so that every RID can be represented as a 1D function of the angle \(\phi \).
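To make the near-light model concrete, the following minimal NumPy sketch evaluates the shading of Eq. (2) at a set of 3D points. The function names `near_light_intensity` and `cos_power_rid` are hypothetical, and the sign conventions are assumptions: \(\varvec{l}\) points from M to L, and \(\phi \) is measured between the emitted direction (from L to M) and \(\hat{\varvec{v}}\).

```python
import numpy as np

def near_light_intensity(points, normal, light_pos, light_dir, rid, albedo=1.0):
    """Lambertian shading under a near point light with RID `rid`.

    points:    (K, 3) 3D points M on the plane
    normal:    (3,) unit plane normal facing the light
    light_pos: (3,) position of L
    light_dir: (3,) unit dominant light axis v
    rid:       callable g(phi), phi in radians from the axis
    """
    l = light_pos - points                        # light vectors l = L - M
    r = np.linalg.norm(l, axis=1)                 # distances ||l||
    l_hat = l / r[:, None]
    # phi: angle between the emitted direction (M - L) and the axis v
    phi = np.arccos(np.clip(-l_hat @ light_dir, -1.0, 1.0))
    n_dot_l = np.clip(l_hat @ normal, 0.0, None)  # no light from behind
    return albedo * rid(phi) * n_dot_l / r**2     # inverse-square fall-off

def cos_power_rid(mu):
    """Hypothetical helper for a regular anisotropic RID g = cos(phi)**mu."""
    return lambda phi: np.clip(np.cos(phi), 0.0, None) ** mu

# Plane z = 1 facing a light at the origin that points along +z.
pts = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 1.0]])
I = near_light_intensity(pts, np.array([0.0, 0.0, -1.0]), np.zeros(3),
                         np.array([0.0, 0.0, 1.0]), cos_power_rid(2.0))
```

Any radially symmetric RID can be passed as `rid`, e.g. `lambda phi: np.ones_like(phi)` for an isotropic source.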

2.1 Geometric Properties of Illumination Model

Consider the setup shown in Fig. 1a. The plane \(\varSigma \) can be constructed from the triplet of points (V, W, L), where V is the intersection of \(\varvec{v}\) and \(\varPi \), and W is the closest point to L on \(\varPi \). The intersection of \(\varPi \) and \(\varSigma \) defines the line \(\varvec{s}\). The orientation and position of the calibration plane \(\varPi \) are known to the observer.

In [11] it was shown that for any RID radially symmetric about the main light axis \(\varvec{v}\), the observed brightness on a Lambertian plane is bilaterally symmetric. This key result was further extended by noting that the observed brightness is in fact radially symmetric whenever the RID under consideration is isotropic. Thus, \(\varvec{s}\) and \(\varSigma \) could be detected through a 2D search for the axis of symmetry, or a 1D search whenever the light position was already known.

In this section, we prove a further property of this geometric setup: given the shading image of a Lambertian plane, its global maximum will invariably lie on the line \(\varvec{s}\). This allows us to recover the dominant light direction and, for several classes of RID, the light source position directly from the position of the maximal points, without the need for computationally expensive and error-prone symmetry search. More formally, we prove the following:

Lemma 1

(Location of Global Maximum). Let \(\varPi \) be a Lambertian plane of constant albedo illuminated by a nearby point light source L with dominant light direction \(\varvec{v}\) and radially symmetric RID \(g(\phi )\), and let \(\varvec{s}\) be the line on \(\varPi \) through W, the closest point to L on \(\varPi \), and V, the point of intersection between \(\varPi \) and \(\varvec{v}\). Then the point Q at which the intensity reaches its global maximum also lies on \(\varvec{s}\).

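Proof of Lemma 1 (by contradiction). Consider the Lambertian reflectance function in Eq. (2). This can be represented as the product of two functions \(f_1(\rho ,\varvec{l},\varvec{v}),f_2(\varvec{l},\varvec{n})\):

\[ I(u,v) = f_1(\rho ,\varvec{l},\varvec{v})\, f_2(\varvec{l},\varvec{n}), \qquad f_1 = \rho \, g(\phi ), \qquad f_2 = \frac{\hat{\varvec{n}}\cdot \hat{\varvec{l}}}{\Vert \varvec{l}\Vert ^2} \qquad (3) \]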

By construction, \(f_1(\rho ,\varvec{l},\varvec{v})\) is an elliptical conic section on \(\varvec{\varPi }\) with centre V, symmetric about \(\varvec{s}\). Also, \(f_2(\varvec{l},\varvec{n})\) is globally maximised at W: since W is by definition the closest point to L, the denominator of \(f_2(\varvec{l},\varvec{n})\) is minimised at W. Moreover, since W is the closest point to L, at W we have \(\varvec{l} \parallel \varvec{n}\), which also maximises the numerator. Since both the distance from L and the angle between \(\varvec{l}\) and \(\varvec{n}\) are radially symmetric about W, we conclude that \(f_2(\varvec{l},\varvec{n})\) is also radially symmetric about W.

The two functions form isocurves centred at V and W respectively (Fig. 1b). Now consider a globally maximal point \(Q^*\) not lying on \(\varvec{s}\). Without loss of generality, let the distance between V and W be \(\alpha \), and let \(Q^*\) lie on the \(\beta \)-isocurve of \(f_1(\rho ,\varvec{l},\varvec{v})\), i.e., on a circumference of radius \(\beta \) centred at V. The three points form a triangle \(\triangle VWQ^*\), where the lengths of the two known sides are \(\overline{VW}=\alpha \) and \(\overline{VQ^*} = \beta \), while the length of the third side \(\overline{WQ^*} = \zeta \) can be expressed as:

\[ \zeta = \sqrt{\alpha ^2 + \beta ^2 - 2\alpha \beta \cos \angle {WVQ^*}} \qquad (4) \]

Since both \(\alpha \) and \(\beta \) are of fixed length, \(\zeta \) will have its minimum length when \(\angle {WVQ^*}=0\), in other words when \(Q^*\) lies on \(\varvec{s}\). Let us call this point Q. Since \(\overline{WQ} < \overline{WQ^*}\), Q lies on a higher isocurve of \(f_2(\varvec{l},\varvec{n})\) while lying on the same isocurve of \(f_1(\rho ,\varvec{l},\varvec{v})\) as \(Q^*\). However, this implies that the product of the two functions will yield a higher value for Q than for \(Q^*\), contradicting our initial assumption that the global maximum does not lie on \(\varvec{s}\). \(\Box \)

Corollary 1

When the light source is isotropic, there is no dominant light direction; then \(\alpha = 0\) and \(Q = W\).
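Lemma 1 and Corollary 1 can be checked numerically. The sketch below renders the plane z = 1 under a near light with an illustrative RID \(g(\phi )=\cos ^\mu \phi \) (any radially symmetric RID should do), takes the brightest grid sample, and measures its distance to the line \(\varvec{s}\) through W and V. The scene values and helper names are hypothetical, and the grid spacing (0.005) bounds the achievable accuracy.

```python
import numpy as np

def shade_plane(xs, ys, light_pos, light_dir, mu):
    """Intensity on the plane z = 1 (normal (0, 0, -1), albedo 1) under a near
    point light with the illustrative RID g(phi) = cos(phi)**mu."""
    X, Y = np.meshgrid(xs, ys)
    P = np.stack([X, Y, np.ones_like(X)], axis=-1)
    D = P - light_pos                              # emitted direction L -> M
    r = np.linalg.norm(D, axis=-1)
    cos_phi = np.clip((D @ light_dir) / r, 0.0, None)
    n_dot_l = D[..., 2] / r                        # n . l_hat with l = L - M
    return cos_phi**mu * n_dot_l / r**2, X, Y

def distance_to_line(p, a, b):
    """Distance from the 2D point p to the line through a and b."""
    d, w = b - a, p - a
    return abs(d[0] * w[1] - d[1] * w[0]) / np.hypot(d[0], d[1])

xs = ys = np.linspace(-1.5, 1.5, 601)
L = np.array([0.2, -0.1, 0.0])
v = np.array([0.4, 0.3, 1.0]) / np.linalg.norm([0.4, 0.3, 1.0])
W = L[:2]                                          # foot of L on z = 1
V = (L + (1.0 - L[2]) / v[2] * v)[:2]              # axis crossing z = 1

def grid_maximum(mu):
    I, X, Y = shade_plane(xs, ys, L, v, mu)
    k = np.unravel_index(np.argmax(I), I.shape)
    return np.array([X[k], Y[k]])

Q = grid_maximum(6.0)       # anisotropic: expected on the line s through W, V
Q_iso = grid_maximum(0.0)   # isotropic: expected at W (Corollary 1)
```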

3 Illuminant Properties Estimation

We estimate light position and RID from N purely Lambertian plane images \(I_i\). Perspective-corrected images \(\check{I}_i\) are first created from the plane positions and orientations \((\varvec{R}_i,\varvec{t}_i)\) obtained during AR-marker-based camera calibration. Since all images are Lambertian, there are no view-dependent effects to compensate for during the perspective correction.
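A minimal sketch of the perspective correction, assuming the board is the plane Z = 0 of its own frame and \((\varvec{R}_i,\varvec{t}_i)\) maps board coordinates to camera coordinates, so that metric plane coordinates map to pixels through the homography \(\varvec{H}=\varvec{K}[\varvec{r}_1\;\varvec{r}_2\;\varvec{t}]\). The intrinsic matrix `K`, the helper names and the nearest-neighbour sampling are illustrative choices, not the authors' implementation.

```python
import numpy as np

def plane_to_image_homography(K, R, t):
    """H = K [r1 r2 t]: maps board coordinates (X, Y, 1) with Z = 0 to pixels."""
    return K @ np.column_stack([R[:, 0], R[:, 1], t])

def rectify(image, K, R, t, xs, ys):
    """Nearest-neighbour resampling of `image` on a metric grid (xs, ys) of the
    board plane; samples falling outside the image are set to NaN."""
    H = plane_to_image_homography(K, R, t)
    X, Y = np.meshgrid(xs, ys)
    uvw = H @ np.stack([X.ravel(), Y.ravel(), np.ones(X.size)])
    u = np.rint(uvw[0] / uvw[2]).astype(int)      # column index
    v = np.rint(uvw[1] / uvw[2]).astype(int)      # row index
    out = np.full(X.size, np.nan)
    ok = (u >= 0) & (u < image.shape[1]) & (v >= 0) & (v < image.shape[0])
    out[ok] = image[v[ok], u[ok]]
    return out.reshape(X.shape)

# Hypothetical intrinsics and a board 2 units in front of the camera.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.array([0.0, 0.0, 2.0])
rows, cols = np.mgrid[0:480, 0:640]
image = cols + 1000.0 * rows                      # each pixel encodes (u, v)
rect = rectify(image, K, R, t, np.array([0.0, 0.2, 10.0]), np.array([0.0]))
```

Bilinear interpolation would give smoother rectified images; nearest-neighbour sampling keeps the sketch short.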

3.1 Dominant Light Axis Estimation

We estimate the dominant light axis \(\varvec{v}\) by observing that all planes \(\varSigma _i\) form a pencil around it, each with normal vector \(\varvec{v}\times \hat{\varvec{n}}_i\). As the symmetry line \(\varvec{s}_i\) is the intersection of \(\varSigma _i\) and \(\varPi _i\), the segment \(L-Q_i\) between the light source and the \(i^{th}\) maximal point also lies on \(\varSigma _i\), and therefore:

\[ \left( L - Q_i\right) \cdot \left( \varvec{v}\times \hat{\varvec{n}}_i\right) = 0 \qquad (5) \]

After expansion, and by stacking the observations of the maximal points and the plane normals from each of the \(\varPi _i\) planes, we obtain a homogeneous system \(\varvec{A}\varvec{u}=0\), where \(\varvec{A}\) is an \(N\times 9\) matrix of rank 5 and \(\varvec{u}\) is a vector of unknowns. Each row \(\varvec{A}_i\) of the matrix \(\varvec{A}\) and \(\varvec{u}\) correspond to:

The elements of \(\varvec{v}\) are encoded in the last three entries of the null-space vector of \(\varvec{A}\), which is found by SVD after a rank 5 approximation of \(\varvec{A}\) that minimises the effect of noise. At this stage, any candidate point \(L_c\) along \(\varvec{v}\) satisfies Eq. 5, so while the three directional components of \(\varvec{v}\) can be obtained from the estimate of \(\varvec{u}\), the location of L along the axis is still unknown and is estimated with the methods presented in the next sections. We can, however, fix \(\varvec{v}\) in space by finding a generic point \(V_0\) on it: we stack the Hessian normal forms of the planes \(\varSigma _i\), \(\left( \hat{\varvec{n}}_i\times \varvec{v}\right) ^\top V_0=\left( \hat{\varvec{n}}_i\times \varvec{v}\right) \cdot Q_i\), and solve for \(V_0\).
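The sketch below estimates the axis from plane normals and maxima, but it uses a smaller linearisation than the \(N\times 9\) system described in the text: by the scalar triple product, the coplanarity constraint \((L-Q_i)\cdot (\varvec{v}\times \hat{\varvec{n}}_i)=0\) becomes \(\hat{\varvec{n}}_i\cdot \varvec{w} - (\hat{\varvec{n}}_i\times Q_i)\cdot \varvec{v} = 0\) with \(\varvec{w} = L\times \varvec{v}\) (the Plücker moment of the axis), which is linear in the six unknowns \((\varvec{w},\varvec{v})\). The point \(V_0\) is then found from the stacked Hessian normal forms as in the text, with one extra row \(\varvec{v}\cdot V_0 = 0\) (an assumption) to remove the rank deficiency. Function and variable names are hypothetical.

```python
import numpy as np

def estimate_light_axis(normals, maxima):
    """Dominant light axis (unit v) and a point V0 on it from plane normals
    n_i and maximal points Q_i (hypothetical helper, 6-unknown linearisation)."""
    N = np.asarray(normals, float)
    Q = np.asarray(maxima, float)
    # (L - Q_i) . (v x n_i) = 0  <=>  n_i . w - (n_i x Q_i) . v = 0, w = L x v
    A = np.hstack([N, -np.cross(N, Q)])
    _, _, Vt = np.linalg.svd(A)
    v = Vt[-1, 3:] / np.linalg.norm(Vt[-1, 3:])   # null vector (w, v), keep v
    # Stacked Hessian normal forms (n_i x v)^T V0 = (n_i x v) . Q_i have rank 2
    # (all rows are orthogonal to v); the extra row v . V0 = 0 picks one point.
    M = np.cross(N, v)
    rhs = np.einsum("ij,ij->i", M, Q)
    V0, *_ = np.linalg.lstsq(np.vstack([M, v]), np.append(rhs, 0.0), rcond=None)
    if np.dot(Q.mean(axis=0) - V0, v) < 0:        # orient v towards the planes
        v = -v
    return v, V0

# Synthetic check (hypothetical scene): each Q_i is placed on its plane Sigma_i.
rng = np.random.default_rng(0)
L_true = np.array([0.05, -0.03, 0.0])
v_true = np.array([0.1, -0.2, 1.0]) / np.linalg.norm([0.1, -0.2, 1.0])
normals = np.column_stack([rng.normal(0, 0.3, (12, 2)), -np.ones(12)])
normals /= np.linalg.norm(normals, axis=1, keepdims=True)
maxima = (L_true + rng.uniform(1.0, 2.0, (12, 1)) * v_true
          + rng.uniform(-0.5, 0.5, (12, 1)) * normals)
v_est, V0 = estimate_light_axis(normals, maxima)
```

With noisy maxima, the smallest right singular vector still gives the least-squares estimate of \((\varvec{w},\varvec{v})\); the Plücker constraint \(\varvec{w}\cdot \varvec{v}=0\) is not enforced in this sketch.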

Nine or more observations are sufficient to obtain a reliable estimate of \(\varvec{v}\) from the maximal points only. The maximal points themselves are estimated by thresholding the top percentile of the pixel intensities and computing the intensity-weighted centroid of the retained pixels. Given the smoothness of the reflectance function, this simple procedure is generally sufficient for a reliable estimate.
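A minimal sketch of this maximal-point estimate on a rectified plane image. The function name and the 1 % default threshold are assumptions; (x, y) is returned in pixel units, and non-finite pixels (e.g. outside the board) are ignored.

```python
import numpy as np

def maximal_point(image, top_percent=1.0):
    """Sub-pixel intensity maximum: keep the brightest `top_percent` % of the
    valid pixels and return their intensity-weighted centroid (x, y)."""
    img = np.asarray(image, float)
    valid = np.isfinite(img)
    thresh = np.percentile(img[valid], 100.0 - top_percent)
    rows, cols = np.nonzero(valid & (img >= thresh))
    weights = img[rows, cols]
    return np.array([np.average(cols, weights=weights),
                     np.average(rows, weights=weights)])

# Synthetic smooth blob with its peak between pixel centres.
yy, xx = np.mgrid[0:64, 0:64]
blob = np.exp(-((xx - 30.3) ** 2 + (yy - 20.7) ** 2) / (2 * 5.0**2))
q = maximal_point(blob, top_percent=1.0)
```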

Corollary 2

When the light position is known, either by design or because of prior specular calibration, the orientation of the dominant light axis can be obtained from only two images and their maximal points. This is particularly important for practical systems.
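A minimal sketch of Corollary 2, assuming L is known: each \(\varSigma _i\) contains L, \(Q_i\) and the direction \(\hat{\varvec{n}}_i\) (because \(W_i - L\) is parallel to \(\hat{\varvec{n}}_i\)), so the axis is the line common to two such planes. The function name and scene values are hypothetical; the construction degenerates when the two planes \(\varSigma _i\) coincide or when \(Q_i = W_i\).

```python
import numpy as np

def axis_from_two_planes(L, n1, Q1, n2, Q2):
    """Dominant light axis from two planes when the light position L is known.

    The normal of Sigma_i is m_i = (Q_i - L) x n_i; the axis lies in both
    planes, so it is parallel to m_1 x m_2.
    """
    m1 = np.cross(Q1 - L, n1)
    m2 = np.cross(Q2 - L, n2)
    v = np.cross(m1, m2)
    v /= np.linalg.norm(v)                      # degenerate if m1 || m2
    return v if np.dot(v, Q1 - L) > 0 else -v   # orient towards the planes

# Synthetic check (hypothetical values): maxima placed on Sigma_1 and Sigma_2.
L = np.array([0.1, 0.0, 0.0])
v_true = np.array([0.2, 0.1, 1.0]) / np.linalg.norm([0.2, 0.1, 1.0])
n1 = np.array([0.1, 0.0, -1.0]) / np.linalg.norm([0.1, 0.0, -1.0])
n2 = np.array([0.0, 0.3, -1.0]) / np.linalg.norm([0.0, 0.3, -1.0])
Q1 = L + 1.2 * v_true + 0.1 * n1
Q2 = L + 1.5 * v_true - 0.2 * n2
v_est = axis_from_two_planes(L, n1, Q1, n2, Q2)
```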

3.2 Closed-Form Estimation of Light Position and RID

In this section we derive the procedure necessary for estimating light position and RID parameters directly in closed form, with no additional information required apart from the location of the maximal points. This procedure is applicable to both isotropic and regular anisotropic sources, approximating a wide variety of practical scenarios.

(1) Isotropic sources. For isotropic light sources, according to Corollary 1 the maximal points coincide with the points on the planes closest to the light source. Therefore, there is no need to estimate \(\varvec{v}\) as in the previous section, and the maximal point \(Q_i\) on a plane \((\hat{\varvec{n}}_i,\varvec{t}_i)\), i.e., the point closest to the light source L, is:

\[ Q_i = L - \left( \left( L - \varvec{t}_i\right) \cdot \hat{\varvec{n}}_i\right) \hat{\varvec{n}}_i \qquad (7) \]

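Hence, dropping the index i for clarity, we can build a linear system from one maximal point:

\[ \left( \varvec{I}_3 - \hat{\varvec{n}}\hat{\varvec{n}}^\top \right) L = Q - \left( \hat{\varvec{n}}\cdot \varvec{t}\right) \hat{\varvec{n}} \qquad (8) \]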

Since the matrix above has rank 2, at least two observations must be stacked together to remove the ambiguity. Therefore, for isotropic sources the light position can be calculated from two observations. This mirrors the specular case, where at least two views of a specular highlight are necessary to triangulate the light position.
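For the isotropic case the stacked system can be solved directly by least squares. A minimal sketch (the function name is hypothetical), assuming each plane is given by its unit normal and any point \(\varvec{t}_i\) on it, and using the foot of the perpendicular \(Q_i = L - ((L-\varvec{t}_i)\cdot \hat{\varvec{n}}_i)\,\hat{\varvec{n}}_i\), i.e. \((\varvec{I}_3-\hat{\varvec{n}}_i\hat{\varvec{n}}_i^\top )L = Q_i - (\hat{\varvec{n}}_i\cdot \varvec{t}_i)\hat{\varvec{n}}_i\):

```python
import numpy as np

def isotropic_light_position(normals, points, maxima):
    """Least-squares light position for an isotropic source (hypothetical helper).

    Each plane (unit normal n, any point t on it) with maximal point Q gives the
    rank-2 block (I - n n^T) L = Q - (n . t) n; stacking two or more non-parallel
    planes makes the system full rank.
    """
    A, b = [], []
    for n, t, q in zip(normals, points, maxima):
        n = np.asarray(n, float) / np.linalg.norm(n)
        A.append(np.eye(3) - np.outer(n, n))
        b.append(np.asarray(q, float) - np.dot(n, t) * n)
    L, *_ = np.linalg.lstsq(np.vstack(A), np.concatenate(b), rcond=None)
    return L

# Synthetic check: the maxima are the feet of the perpendiculars from L_true.
L_true = np.array([0.1, 0.2, -0.3])
normals = [np.array([0.0, 0.0, 1.0]), np.array([1.0, 0.0, 1.0]) / np.sqrt(2.0)]
points = [np.array([0.0, 0.0, 1.0]), np.array([0.5, 0.0, 1.2])]
maxima = [L_true - np.dot(L_true - t, n) * n for n, t in zip(normals, points)]
L_est = isotropic_light_position(normals, points, maxima)
```

Geometrically, each block constrains L to the line through \(Q_i\) along \(\hat{\varvec{n}}_i\), so two non-parallel planes intersect in a single point, as in highlight triangulation.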

(2) Regular anisotropic sources. For regular anisotropic light sources, we consider RID functions of the form \(g(\varvec{l},\varvec{v}) = \cos \left( \angle \left( \varvec{l},\varvec{v}\right) \right) ^\mu = \left( \left( \varvec{l}\cdot \varvec{v}\right) /\Vert \varvec{l}\Vert \right) ^\mu \), which closely approximates the distributions of the spotlights commonly found in halogen or LED light sources, examples of which are shown in Fig. 2a, b. We concentrate on this form because it has been shown to work well in practical scenarios in the photometric stereo literature, e.g., in [8].

To locate L, we first recover the dominant light axis \(\varvec{v}\) with the procedure outlined earlier. This leaves one degree of freedom for the position of L along the known axis. To simplify the mathematical treatment, we transform the system so that this degree of freedom becomes a single unknown: we rotate the complete frame by a matrix \(\varvec{R}^*\) so that the resulting vector \(\varvec{v}^*\) is parallel to \(\hat{\varvec{z}} = \begin{pmatrix}0&0&1\end{pmatrix}^\intercal \) (see Note 1).
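One possible construction of \(\varvec{R}^*\) (an illustrative choice, not necessarily the authors') is Rodrigues' formula about the axis \(\varvec{v}\times (0,0,1)^\top \) by the angle between \(\varvec{v}\) and the z-axis. The function name is hypothetical; the same matrix is then applied to plane normals, plane translations and the light position.

```python
import numpy as np

def rotation_to_z(v):
    """Rotation R* with R* @ v_hat = (0, 0, 1), via Rodrigues' formula."""
    v = np.asarray(v, float) / np.linalg.norm(v)
    z = np.array([0.0, 0.0, 1.0])
    axis = np.cross(v, z)
    s, c = np.linalg.norm(axis), float(np.dot(v, z))   # sin and cos of angle
    if s < 1e-12:                                      # v already along +-z
        return np.eye(3) if c > 0 else np.diag([1.0, -1.0, -1.0])
    k = axis / s
    Kx = np.array([[0.0, -k[2], k[1]],
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])                # cross-product matrix
    return np.eye(3) + s * Kx + (1.0 - c) * (Kx @ Kx)

# Hypothetical axis; normals, translations and L are rotated the same way,
# e.g. n_star = R_star @ n, t_star = R_star @ t, L_star = R_star @ L.
v = np.array([0.3, -0.4, 0.8])
R_star = rotation_to_z(v)
```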

The rotated system consists of the new unknown light source position \(L^*\) with dominant light vector \(\varvec{v}^*\), and the rotated planes \(\varPi _i^*\) with unit normals \(\hat{\varvec{n}}_i^*\) and translations \(\varvec{t}_i^*\). After rotating the reference frame, the intersection points \(V_i^*\) between the dominant light axis and the planes lie on a line parallel to the \(z\)-axis, with (x, y) coordinates equal to \((L_x^*,L_y^*)\), leaving a single unknown \(L_z^*\) to be calculated. We now parametrise a point P on the intersection line \(\varvec{s}^*\) between each plane \(\varPi _i^*\) and \(\varSigma _i^*\):

where we drop the index i for clarity. The intensity function along the intersection line can then be derived as:

At maximal points \(W^*\), \(\frac{\partial I(\lambda )}{\partial \lambda }=0\). Noting that at these points \(\lambda = 1\), we obtain:

where \(k_1,k_2,k_3\) are polynomials in \(L_z^*\) of degree 1, 2 and 1 respectively (see Note 1). The zeros of this function occur either when one of the \(\mu +1\) repeated roots \(\left( W_z^* - L_z^*\right) \) is zero or when the numerator is zero. Since the former can never be zero, as \(L_z^* \ne W_z^*\), the problem of finding the unknowns \(\mu \) and \(L_z^*\) reduces to finding the zeros of the numerator. This is linear in \(\mu \) and cubic in \(L_z^*\), irrespective of the value of \(\mu \). Given that at least 9 observations are available from the estimation of \(\varvec{v}\), the two unknowns can be efficiently estimated using a numerical equation solver. Therefore, from the maximal points alone we can retrieve the dominant light direction, the light location and the RID parameters of regular anisotropic light sources.
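Since the full expressions for \(k_1,k_2,k_3\) are only given in the supplementary material, the sketch below is a numerical stand-in rather than the authors' closed form. It assumes the model \(I \propto \cos ^\mu \phi \,(\hat{\varvec{n}}\cdot \varvec{l})/\Vert \varvec{l}\Vert ^3\); because \(\hat{\varvec{n}}\cdot \varvec{l}\) is constant over a plane, stationarity of \(\log I\) along \(\varvec{s}_i\) at \(Q_i\) (with \(\varvec{D}=Q_i-L\) and \(\varvec{e}_i\) the unit direction of \(\varvec{s}_i\)) reads \(\mu \,a_i = 3c_i\), where \(a_i = \frac{\varvec{e}_i\cdot \varvec{v}}{\varvec{D}\cdot \varvec{v}} - \frac{\varvec{D}\cdot \varvec{e}_i}{\Vert \varvec{D}\Vert ^2}\) and \(c_i = \frac{\varvec{D}\cdot \varvec{e}_i}{\Vert \varvec{D}\Vert ^2}\). For a fixed candidate this is linear in \(\mu \), as in the text. Instead of rotating the frame, the sketch parametrises \(L = V_0 + s\,\varvec{v}\) (an equivalent single unknown) and scans s; all names and scene values are hypothetical.

```python
import numpy as np

def stationarity_terms(s, V0, v, normals, maxima):
    """a_i, c_i such that d/de log I(Q_i) = mu * a_i - 3 * c_i along s_i,
    for a candidate light L = V0 + s * v (v unit) and RID cos(phi)**mu."""
    D = maxima - (V0 + s * v)                      # L -> Q_i
    r2 = np.einsum("ij,ij->i", D, D)
    e = v - (normals @ v)[:, None] * normals       # direction of s_i in plane i
    e /= np.linalg.norm(e, axis=1, keepdims=True)
    De = np.einsum("ij,ij->i", D, e)
    a = (e @ v) / (D @ v) - De / r2                # derivative of log cos(phi)
    c = De / r2                                    # derivative of log r
    return a, c

def fit_mu(s, V0, v, normals, maxima):
    """Least-squares mu for a fixed s, plus a scale-free relative residual."""
    a, c = stationarity_terms(s, V0, v, normals, maxima)
    mu = 3.0 * np.dot(a, c) / np.dot(a, a)
    res = np.sum((mu * a - 3.0 * c) ** 2) / np.sum((3.0 * c) ** 2)
    return mu, res

def estimate_regular_anisotropic(V0, v, normals, maxima, s_grid):
    """Coarse 1D scan of L along the axis, then one finer scan around the best."""
    res = [fit_mu(s, V0, v, normals, maxima)[1] for s in s_grid]
    k = int(np.argmin(res))
    step = s_grid[1] - s_grid[0]
    fine = np.linspace(s_grid[k] - step, s_grid[k] + step, 201)
    res = [fit_mu(s, V0, v, normals, maxima)[1] for s in fine]
    s_best = fine[int(np.argmin(res))]
    return V0 + s_best * v, fit_mu(s_best, V0, v, normals, maxima)[0]

# Synthetic check (hypothetical scene): maxima found by a dense 1D search.
rng = np.random.default_rng(1)
L_true, mu_true = np.zeros(3), 4.0
v = np.array([0.1, 0.0, 1.0]) / np.linalg.norm([0.1, 0.0, 1.0])
lam = np.linspace(-1.0, 1.0, 200001)
normals, maxima = [], []
for _ in range(10):
    n = np.append(rng.uniform(-0.3, 0.3, 2), -1.0)
    n /= np.linalg.norm(n)
    t = np.array([0.0, 0.0, rng.uniform(0.8, 1.5)])       # a point on the plane
    V = L_true + (n @ (t - L_true)) / (n @ v) * v          # axis/plane crossing
    e = v - (n @ v) * n
    e /= np.linalg.norm(e)
    D = V + lam[:, None] * e - L_true
    r = np.linalg.norm(D, axis=1)
    log_I = mu_true * np.log(D @ v / r) - 3.0 * np.log(r)  # constants dropped
    normals.append(n)
    maxima.append(V + lam[np.argmax(log_I)] * e)
normals, maxima = np.array(normals), np.array(maxima)
V0 = L_true + 0.7 * v                                      # any point on the axis
L_est, mu_est = estimate_regular_anisotropic(V0, v, normals, maxima,
                                             np.linspace(-2.0, 0.0, 401))
```

Normalising the residual by \(\sum (3c_i)^2\) prevents the trivial solution at infinity, where both \(a_i\) and \(c_i\) vanish.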

3.3 Optimisation Procedure for Complex Anisotropic Sources

Light Position Estimation. For lights with complex RIDs, it is more difficult to obtain an analytical expression for the position as a function of the maximal points, so we use an optimisation procedure instead. Rather than optimising the full reflectance function, which requires knowledge of the RID, we extend the work in [5] by noting that, given N Lambertian planes crossed by a ray \(\varvec{l}\), the intensities \(I_i(u_i,v_i)\) of the points on \(\varvec{l}\) obey the following relationship, independently of albedo or RID:

We start our search for L by picking the first crossing point \(L_0\) between the planes and \(\varvec{v}\) (since we assume that all planes are in front of the light source) and proceeding backwards. For each candidate point \(L_{c}\), we trace J random rays starting from it, compute their intersections with the planes and obtain the corresponding pixel intensities by projecting using the known calibration parameters. From Eq. (12), we compute the set of observations \(\varvec{r}_j = \{r_{1j},...,r_{Nj}\}\) relative to each ray. An initial approximation for L is found by minimising the cost based on the variance of the sets \(\varvec{r}_j\):

Owing to the RID independence of our formulation, in our experiments we have invariably found E(j) to be convex, as shown in the supplementary material. The single unknown for the light position is found through a Levenberg-Marquardt optimisation of E(j).
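The sketch below assumes a concrete form for the relationship and the cost: with rays \(\varvec{d}_j\) cast from a candidate \(L_c\), \(r_{ij} = I_i(P_{ij})\Vert P_{ij}-L_c\Vert ^2/(\hat{\varvec{n}}_i\cdot \hat{\varvec{l}})\), which under the model of Eq. (2) equals \(\rho \,g(\phi _j)\) on every plane when \(L_c = L\) (assuming equal albedo), and a scale-free cost (variance over squared mean, averaged over rays). A plain grid scan backwards from \(L_0\) replaces the Levenberg-Marquardt step, and `observe` is a hypothetical stand-in for projecting points into the calibrated images and sampling them.

```python
import numpy as np

def ray_plane(origin, dirs, n, t):
    """Intersections origin + tau * dirs with the plane n . (X - t) = 0."""
    tau = ((t - origin) @ n) / (dirs @ n)
    return origin + tau[:, None] * dirs, tau

def consistency_cost(Lc, v, planes, observe, n_rays=64, seed=0):
    """Mean normalised variance over rays of r_ij = I_i * tau^2 / (n_i . l_hat).
    For the true light, r_ij = rho * g(phi_j) is the same on every plane."""
    rng = np.random.default_rng(seed)            # same rays for every candidate
    d = v + 0.25 * rng.normal(size=(n_rays, 3))  # rays in a cone around v
    d /= np.linalg.norm(d, axis=1, keepdims=True)
    R = []
    for i, (n, t) in enumerate(planes):
        P, tau = ray_plane(Lc, d, n, t)
        R.append(observe(i, P) * tau**2 / -(d @ n))   # l_hat = -d
    R = np.array(R)                              # shape (planes, rays)
    return np.mean(R.var(axis=0) / R.mean(axis=0) ** 2)

# Hypothetical scene: true light at the origin, axis +z, four tilted planes.
L_true, v = np.zeros(3), np.array([0.0, 0.0, 1.0])
planes = [(np.array(n) / np.linalg.norm(n), np.array([0.0, 0.0, z]))
          for n, z in [((0.1, 0.0, -1.0), 1.0), ((0.0, -0.15, -1.0), 1.4),
                       ((-0.1, 0.1, -1.0), 1.8), ((0.05, 0.05, -1.0), 2.2)]]

def observe(i, P, albedo=0.8, mu=5.0):
    """Stand-in for sampling image i at the projections of P (Eq. (2) model)."""
    l = L_true - P
    r = np.linalg.norm(l, axis=1)
    return albedo * (-(l @ v) / r) ** mu * (l @ planes[i][0]) / r**3

L0 = np.array([0.0, 0.0, 1.0])          # first crossing of the axis with a plane
steps = np.linspace(0.05, 2.0, 196)     # move backwards from L0 along -v
costs = [consistency_cost(L0 - s * v, v, planes, observe) for s in steps]
L_est = L0 - steps[int(np.argmin(costs))] * v
```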

RID Parameters Optimisation.

Once the position of the light source is known, for complex RIDs the distribution must be estimated from the observed intensities. Given the view-invariant Lambertian images, we model the RID with a \(4^{th}\) order polynomial with five unknown coefficients:

\[ g(\phi ) = p_4\phi ^4 + p_3\phi ^3 + p_2\phi ^2 + p_1\phi + p_0 \qquad (14) \]

Since the RID is constrained to \(g_{\max }=1\), the albedo is found as the normalising value of the estimated function. We solve the system from Eq. (2) by stacking K observations in the vector \(\varvec{b} = I(u,v)\Vert \varvec{l}\Vert ^3/(\varvec{l}\cdot \hat{\varvec{n}})\) and solving the system \(\varvec{A}\varvec{p} = \varvec{b}\), where \(\varvec{A}\) is a \(K\times 5\) matrix whose rows \([\phi ^4\;\phi ^3\;\phi ^2\;\phi \;1]\) contain the powers of the angle \(\phi \) between the estimated \(\varvec{v}\) and the light vector to the 3D position of each plane pixel, and \(\varvec{p}\) is the \(5\times 1\) vector of unknown coefficients.
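A minimal sketch of this polynomial RID fit, assuming points back-projected onto a plane with known normal, the estimated L and \(\varvec{v}\), and \(\phi \) measured from the axis. It divides by \(\varvec{l}\cdot \hat{\varvec{n}}\), which under the model \(I = \rho \,g(\phi )(\hat{\varvec{n}}\cdot \varvec{l})/\Vert \varvec{l}\Vert ^3\) isolates \(\rho \,g(\phi )\). `np.vander` produces exactly the \([\phi ^4\;\phi ^3\;\phi ^2\;\phi \;1]\) rows, and taking the albedo as the maximum of the fitted curve over the observed angles is one way to enforce \(g_{\max }=1\). The function name is hypothetical.

```python
import numpy as np

def fit_polynomial_rid(points, normal, intensities, L, v):
    """Fit a 4th-order polynomial RID g(phi) and the albedo rho.

    b = I ||l||^3 / (l . n) = rho * g(phi) removes shading and fall-off; the
    rows of A are [phi^4, phi^3, phi^2, phi, 1]; rho is the maximum of the
    fitted curve over the samples, so that the returned g has g_max = 1.
    """
    l = L - points                                     # light vectors M -> L
    r = np.linalg.norm(l, axis=1)
    b = intensities * r**3 / (l @ normal)
    phi = np.arccos(np.clip(-(l @ v) / r, -1.0, 1.0))  # angle from the axis
    A = np.vander(phi, 5)                              # columns phi^4 ... phi^0
    p, *_ = np.linalg.lstsq(A, b, rcond=None)
    rho = np.polyval(p, phi).max()
    return p / rho, rho

# Synthetic check (hypothetical): plane z = 1, light at the origin along +z.
X, Y = np.meshgrid(np.linspace(-0.6, 0.6, 25), np.linspace(-0.6, 0.6, 25))
pts = np.column_stack([X.ravel(), Y.ravel(), np.ones(X.size)])
L, v, n = np.zeros(3), np.array([0.0, 0.0, 1.0]), np.array([0.0, 0.0, -1.0])
r = np.linalg.norm(pts, axis=1)
phi = np.arccos(pts[:, 2] / r)
g_true = np.array([2.0, 0.0, -3.0, 0.0, 1.0])          # 1 - 3 phi^2 + 2 phi^4
I = 0.7 * np.polyval(g_true, phi) / r**3               # n . l = 1 on this plane
g_est, rho_est = fit_polynomial_rid(pts, n, I, L, v)
```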

Images from the two experiments with real data. (a) The AR board used for joint camera and light calibration. (b) The lights tested, left to right: narrow, medium and wide cones. (c) Experimental setup. (d) Head of the scope used for the experiment, with the three coloured LEDs around it. (e) Calibration procedure (Color figure online).

4 Results

4.1 Synthetic Data

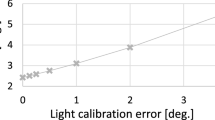

We generated 20 datasets for each of the 6 RID types in Fig. 2. Each dataset contains a plane observed at 20 positions/orientations. In our experiments, we measure the angular error of the estimated dominant light axis, the position error of the source, and the MSE between the estimated and ground-truth RIDs. The error measures in Fig. 4 are averaged over the 20 datasets for each RID type. The proposed method is compared with the state of the art [11]. For fairness, although it is not a requirement of our method, we ensured that the generated orientations showed enough of the symmetric pattern to avoid failure cases of [11]. Finally, we explore the noise robustness of the technique by injecting increasing percentages of uniformly distributed noise.

Results from [11] and this work shown as dashed and solid lines respectively. (a), Top tables: light position estimation error in mm. (b), Middle tables: angular estimation error of dominant light vector in degrees. (c), Bottom tables: MSE of estimated RID. All errors measured against different noise levels.

As shown in Fig. 4, the proposed method (solid lines) drastically reduces the errors compared with [11] (dashed lines) for all RID types; numerical results are given in the tables, and line colours denote the RID types. Whenever the closed-form solution can be used (i.e., for Circular, Elliptical and Isotropic RIDs), the estimate is very robust to noise, with errors close to zero in both position and RID. This robustness stems from the fact that only the intensity maxima need to be found, and these can be estimated very reliably. Whenever the optimisation of Sect. 3.3 has to be used, the errors increase, albeit at a slower rate than for [11]. Extending the closed-form solution to more classes to avoid the optimisation will be the subject of our future work. The method in [11], on the other hand, suffers from inaccuracies in the position estimate, which adversely affect the RID estimate as well. It is also important to stress the difference in complexity between the two methods: mainly due to the 2D symmetry search, our implementation of [11] requires several minutes per plane, while the proposed method processes the full set in a few seconds. In all cases, at a given noise level our worst performance is better than the best performance of [11].

4.2 Real Data

We test the proposed technique in two separate scenarios: first, with three halogen light bulbs of equal power but different beam widths (Fig. 3b); second, with three external (red, blue and green) LEDs with a housing diameter of 7.9 mm, mounted in a triangular configuration on a standard medical endoscope with an onboard camera (Fig. 3d). Instead of the traditional checkerboard used for camera calibration, we print AR markers on a matte sheet of paper for camera calibration, leaving the centre blank to visualise the projected light pattern for light calibration (Fig. 3a, c, e). This allows camera and light calibration to be performed jointly in the same procedure without elaborate setups. Crucially, while the range of feasible plane orientations is limited when using shiny planes and specular highlight triangulation, Lambertian planes allow the full range of plane orientations needed for accurate camera calibration to be used.

In the first experiment, we captured 17 images for each of the three light bulbs, which were placed approximately 25 cm from the camera. Validation data for the light position were obtained by specular highlight triangulation using a shiny checkerboard, and RID validation data from the light manufacturer’s datasheet. The position errors with respect to the triangulated positions (18.3 mm, 12.9 mm and 13.5 mm for the narrow, medium and wide beam lights respectively) were within the uncertainty of the specular highlight triangulation itself, since the triangulated rays do not all intersect in a single point. The estimated RIDs are shown in Fig. 5. While the polynomial approximation faithfully reproduces the RID for the wide and medium beams, it gives a slightly loose fit to the sharp spike of the narrow-beam light. Our future work will concentrate on extending the closed-form solution to more general classes of light sources, thus avoiding the sensitive light position optimisation, as well as on more general representations of the RID.

In the second experiment, we captured 7 images for each LED using a scaled-down version of our calibration board. The small baseline between light and camera makes it difficult to observe the symmetry of the projected pattern needed by [11], highlighting the advantage of our method, which requires only the brightness maxima. Position calibration results are summarised in Fig. 6b, which shows the reconstructed positions and orientations of the 3 LEDs, reflecting their triangular configuration around the camera (black square in the figure) shown in Fig. 3d. The positions were checked by hand, and the vergence angle between the dominant vectors of the 3 LEDs also matches our setup, which was built so that the LED beams would focus at a distance of 7–8 cm. The estimated RID is shown in Fig. 6a.
While we have no ground truth from the manufacturer, we can see that the fitted RID function shows a curve reaching 10 % of its maximal brightness at an angle of \({\approx }25^\circ \), corresponding to an approximately elliptical RID, which is expected from a focused light source.

5 Conclusions

In this paper we propose an approach for calibrating light RID and position that can be integrated within the standard camera calibration pipeline. In particular, we prove a novel property of near lighting on Lambertian planes which allows the dominant orientation of the light source to be calculated by observing only the point of maximum intensity on each plane, thus constraining the light position to a single degree of freedom. Combined with closed-form solutions for particular classes of light sources, this yields demonstrably better results for all types of light sources considered than the state of the art, which instead relies on computationally expensive and sensitive symmetry searches. The method was validated on synthetic data as well as on real data with a selection of different light sources and camera types, including an example application with endoscopic data.

Notes

1. Full expressions given in the supplementary material.

References

[1] Ciuti, G., Visentini-Scarzanella, M., Dore, A., Menciassi, A., Dario, P., Yang, G.Z.: Intra-operative monocular 3D reconstruction for image-guided navigation in active locomotion capsule endoscopy. In: IEEE RAS EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), pp. 768–774 (2012)

[2] Collins, T., Bartoli, A.: 3D reconstruction in laparoscopy with close-range photometric stereo. In: Ayache, N., Delingette, H., Golland, P., Mori, K. (eds.) MICCAI 2012, Part II. LNCS, vol. 7511, pp. 634–642. Springer, Heidelberg (2012)

[3] Drbohlav, O., Chantler, M.: Can two specular pixels calibrate photometric stereo? In: IEEE International Conference on Computer Vision (ICCV), vol. 2, pp. 1850–1857, October 2005

[4] Higo, T., Matsushita, Y., Joshi, N., Ikeuchi, K.: A hand-held photometric stereo camera for 3-D modeling. In: IEEE International Conference on Computer Vision (ICCV), pp. 1234–1241, September 2009

[5] Liao, M., Wang, L., Yang, R., Gong, M.: Light fall-off stereo. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1–8, June 2007

[6] Maier-Hein, L., Groch, A., Bartoli, A., Bodenstedt, S., Boissonnat, G., Chang, P.L., Clancy, N., Elson, D., Haase, S., Heim, E., Hornegger, J., Jannin, P., Kenngott, H., Kilgus, T., Muller-Stich, B., Oladokun, D., Rohl, S., dos Santos, T., Schlemmer, H.P., Seitel, A., Speidel, S., Wagner, M., Stoyanov, D.: Comparative validation of single-shot optical techniques for laparoscopic 3-D surface reconstruction. IEEE Trans. Med. Imag. 33(10), 1913–1930 (2014)

[7] Malti, A., Bartoli, A., Collins, T.: Template-based conformal shape-from-motion-and-shading for laparoscopy. In: Abolmaesumi, P., Joskowicz, L., Navab, N., Jannin, P. (eds.) IPCAI 2012. LNCS, vol. 7330, pp. 1–10. Springer, Heidelberg (2012)

[8] Mecca, R., Wetzler, A., Bruckstein, A.M., Kimmel, R.: Near field photometric stereo with point light sources. SIAM J. Imag. Sci. 7(4), 2732–2770 (2014)

[9] Moreno, I., Sun, C.C.: Three-dimensional measurement of light-emitting diode radiation pattern: a rapid estimation. Measur. Sci. Technol. 20(7), 075306 (2009). http://stacks.iop.org/0957-0233/20/i=7/a=075306

[10] Park, J., Sinha, S., Matsushita, Y., Tai, Y.W., Kweon, I.S.: Multiview photometric stereo using planar mesh parameterization. In: IEEE International Conference on Computer Vision (ICCV), pp. 1161–1168, December 2013

[11] Park, J., Sinha, S., Matsushita, Y., Tai, Y.W., Kweon, I.S.: Calibrating a non-isotropic near point light source using a plane. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2267–2274, June 2014

[12] Park, J., Sinha, S., Matsushita, Y., Tai, Y.W., Kweon, I.S.: Supplementary material for: ‘Calibrating a non-isotropic near point light source using a plane’, June 2014

[13] Powell, M., Sarkar, S., Goldgof, D.: A simple strategy for calibrating the geometry of light sources. IEEE Trans. Pattern Anal. Mach. Intell. 23(9), 1022–1027 (2001)

[14] Rykowski, R., Kostal, H.: Novel approach for LED luminous intensity measurement. In: Conference of Society of Photo-Optical Instrumentation Engineers (SPIE), vol. 6910, February 2008

[15] Schnieders, D., Wong, K.Y.K.: Camera and light calibration from reflections on a sphere. Comput. Vis. Image Underst. 117(10), 1536–1547 (2013)

[16] Simons, R., Bean, A.: Lighting Engineering: Applied Calculations. Routledge, London (2012)

[17] Stoyanov, D., Elson, D., Yang, G.Z.: Illumination position estimation for 3D soft-tissue reconstruction in robotic minimally invasive surgery. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2628–2633, October 2009

[18] Takai, T., Niinuma, K., Maki, A., Matsuyama, T.: Difference sphere: an approach to near light source estimation. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 1, pp. I-98–I-105, June 2004

[19] Tan, H.Y., Ng, T.W.: Light-emitting-diode inspection using a flatbed scanner. Opt. Eng. 47(10), 103602 (2008)

[20] Tankus, A., Sochen, N., Yeshurun, Y.: Reconstruction of medical images by perspective shape-from-shading. In: International Conference on Pattern Recognition (ICPR), vol. 3, pp. 778–781 (2004)

[21] Visentini-Scarzanella, M., Hanayama, T., Masutani, R., Yoshida, S., Kominami, Y., Sanomura, Y., Tanaka, S., Furukawa, R., Kawasaki, H.: Tissue shape acquisition with a hybrid structured light and photometric stereo endoscopic system. In: Luo, X., Reichl, T., Reiter, A., Mariottini, G.L. (eds.) Computer-Assisted and Robotic Endoscopy (CARE). LNCS, pp. 26–37. Springer, Heidelberg (2015)

[22] Visentini-Scarzanella, M., Stoyanov, D., Yang, G.Z.: Metric depth recovery from monocular images using shape-from-shading and specularities. In: IEEE International Conference on Image Processing (ICIP), Orlando, USA, pp. 25–28 (2012)

[23] Vogiatzis, G.: Self-calibrated, multi-spectral photometric stereo for 3D face capture. Int. J. Comput. Vis. 97(1), 91–103 (2012)

[24] Weber, M., Cipolla, R.: A practical method for estimation of point light-sources. In: British Machine Vision Conference (BMVC), pp. 1–10, September 2001

[25] Wong, K.-Y.K., Schnieders, D., Li, S.: Recovering light directions and camera poses from a single sphere. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008, Part I. LNCS, vol. 5302, pp. 631–642. Springer, Heidelberg (2008)

[26] Wu, C., Narasimhan, S.G., Jaramaz, B.: A multi-image shape-from-shading framework for near-lighting perspective endoscopes. Int. J. Comput. Vis. 86, 211–228 (2010)

[27] Zhou, W., Kambhamettu, C.: A unified framework for scene illuminant estimation. Image Vis. Comput. 26(3), 415–429 (2008)

Acknowledgments

This work was supported by the Japan Society for the Promotion of Science (JSPS), Grant-in-Aid for JSPS Fellows no. 26.04041.

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Visentini-Scarzanella, M., Kawasaki, H. (2016). Simultaneous Camera, Light Position and Radiant Intensity Distribution Calibration. In: Bräunl, T., McCane, B., Rivera, M., Yu, X. (eds) Image and Video Technology. PSIVT 2015. Lecture Notes in Computer Science, vol. 9431. Springer, Cham. https://doi.org/10.1007/978-3-319-29451-3_44

DOI: https://doi.org/10.1007/978-3-319-29451-3_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-29450-6

Online ISBN: 978-3-319-29451-3
