Abstract

An unexpected finding of previous psychophysical studies is that listeners show highly replicable, individualistic patterns of decision weights on frequencies affecting their performance in spectral discrimination tasks—what has been referred to as individual listening styles. We, like many other researchers, have attributed these listening styles to peculiarities in how listeners attend to sounds, but we now believe they partially reflect irregularities in cochlear micromechanics modifying what listeners hear. The most striking evidence for cochlear irregularities is the presence of low-level spontaneous otoacoustic emissions (SOAEs) measured in the ear canal and the systematic variation in stimulus frequency otoacoustic emissions (SFOAEs), both of which result from back-propagation of waves in the cochlea. SOAEs and SFOAEs vary greatly across individual ears and have been shown to affect behavioural thresholds, behavioural frequency selectivity and judged loudness for tones. The present paper reports pilot data providing evidence that SOAEs and SFOAEs are also predictive of the relative decision weight listeners give to a pair of tones in a level discrimination task. In one condition the frequency of one tone was selected to be near that of an SOAE and the frequency of the other was selected to be in a frequency region for which there was no detectable SOAE. In a second condition the frequency of one tone was selected to correspond to an SFOAE maximum, the frequency of the other tone, an SFOAE minimum. In both conditions a statistically significant correlation was found between the average relative decision weight on the two tones and the difference in OAE levels.

Keywords

- Behavioural decision weights

- Level discrimination

- Spontaneous otoacoustic emissions

- Stimulus frequency otoacoustic emission

1 Introduction

People with normal hearing can usually follow a conversation with friends at a noisy party, a phenomenon known as the “cocktail party effect” (Cherry 1953). This remarkable ability to attend to target sounds in background noise deteriorates with age and hearing loss. Yet people who have been diagnosed in the clinic with a very mild hearing loss, or even with normal hearing, on the basis of their pure-tone audiogram often still report considerable difficulty communicating with others in such noisy environments (King and Stephens 1992). The conventional pure-tone audiogram, the clinical gold standard for identifying hearing loss, is therefore not always the best predictor of these kinds of difficulties.

Perturbation analysis has become a popular approach in psychoacoustic research for measuring how listeners hear out a target sound in background noise (cf. Berg 1990; Lutfi 1995; Richards 2002). Studies using this paradigm show that listeners have highly replicable, individualistic patterns of decision weights on frequencies that affect their ability to hear out specific targets in noise, what has been referred to as individual listening styles (Doherty and Lutfi 1996; Alexander and Lutfi 2004; Lutfi and Liu 2007; Jesteadt et al. 2014). Unfortunately, the paradigm is extremely time-consuming, which makes it impractical for clinical use. A quick, objective way to measure effective listening in noisy environments would substantially improve clinical assessment, potentially leading to better diagnosis and treatment.

In the clinic, otoacoustic emissions (OAEs) provide a fast, noninvasive means of assessing auditory function. OAEs are faint sounds that travel from the cochlea back through the middle ear and are measured in the external auditory canal. Since their discovery by David Kemp in the late 1970s (Kemp 1978), they have been used clinically to evaluate the health of outer hair cells (OHCs) and in research to gain insight into cochlear mechanics. Behaviourally, they have been shown to predict the pattern of pure-tone thresholds in quiet (Long and Tubis 1988; Lee and Long 2012; Dewey and Dhar 2014), auditory frequency selectivity (Baiduc et al. 2014), and loudness perception (Mauermann et al. 2004).

The effect of threshold microstructure (as measured by OAEs) on loudness perception is particularly noteworthy because relative loudness is also known to be one of the most important factors affecting the decision weights listeners place on the different information-bearing components of sounds (Berg 1990; Lutfi and Jesteadt 2006; Epstein and Silva 2009; Thorson et al. 2012; Rasetshwane et al. 2013). This suggests that OAEs might be used to diagnose difficulty in target-in-noise listening tasks through their impact on decision weights. OAEs may be evoked by external sound stimulation (evoked OAEs, EOAEs) or may occur spontaneously (SOAEs). Stimulus frequency OAEs (SFOAEs), which are evoked by a single-frequency tone, are among the most diagnostic OAEs with respect to cochlear function. When measured with sufficiently fine frequency resolution, they show a highly replicable, individualistic pattern of amplitude maxima and minima, called SFOAE fine structure. The level difference between maxima and minima can be as large as 30 dB. SOAEs usually occur near the maxima of the SFOAE fine structure (Bergevin et al. 2012; Dewey and Dhar 2014). Given that loudness varies with SFOAE maxima and minima, and that loudness is a strong predictor of listener decision weights, both SFOAEs and SOAEs might be used to predict individual differences in behavioural decision weights.

2 Methods

2.1 Listeners

Data are presented from seven individuals (mean age: 27.42 yrs) with pure tone air-conduction hearing thresholds better than 15 dB HL at all frequencies between 0.5 and 4 kHz, normal tympanograms, and no history of middle ear disease or surgery.

2.2 Measurement and Analysis of Otoacoustic Emissions

SOAEs were evaluated from 3-min recordings of sound in the ear canal, obtained after subjects had been seated comfortably for 15 min in a double-walled Industrial Acoustics sound-attenuating chamber. The signal from the ER10B+ microphone was amplified by an Etymotic preamplifier (20 dB gain) and digitized by a Fireface UC (16 bit, 44,100 samples/s). The recording was then segmented into 1-s analysis windows (1-Hz frequency resolution) with a step size of 250 ms. The half of the segments with the highest power was discarded to reduce the impact of subject-generated noise. An estimate of the ear-canal spectrum was then obtained by averaging the FFT magnitudes in each frequency bin and converting the averages to dB SPL. SOAEs were identified as very narrow peaks of energy at least 3 dB above the average background level at adjacent frequencies.
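
The SOAE analysis described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: the Hann window, the calibration offset `cal_db`, and the background-estimation parameters `guard` and `span` are hypothetical choices, because the text specifies only the 1-s windows, the 250-ms step, the discarding of the louder half of the segments, and the 3-dB criterion.

```python
import numpy as np

def soae_spectrum(x, fs=44100, win_s=1.0, hop_s=0.25, cal_db=0.0):
    """Mean FFT-magnitude spectrum (dB) of an ear-canal recording.

    1-s windows give 1-Hz bins; the half of the segments with the highest
    power is discarded before averaging. cal_db is a hypothetical offset
    converting dB re 1 (digital amplitude) to dB SPL for a given mic chain.
    """
    n_win = int(round(win_s * fs))
    hop = int(round(hop_s * fs))
    starts = range(0, len(x) - n_win + 1, hop)
    segs = np.stack([x[s:s + n_win] for s in starts])
    # Keep the quieter half of the segments (reduces subject-generated noise)
    power = np.mean(segs ** 2, axis=1)
    quiet = segs[np.argsort(power)[: max(1, len(segs) // 2)]]
    # Hann window (assumption); scaled so a sinusoid of amplitude A reads as A
    w = np.hanning(n_win)
    mags = np.abs(np.fft.rfft(quiet * w, axis=1)) / (np.sum(w) / 2)
    freqs = np.fft.rfftfreq(n_win, d=1.0 / fs)
    level_db = 20 * np.log10(np.mean(mags, axis=0) + 1e-20) + cal_db
    return freqs, level_db

def find_soaes(freqs, level_db, min_excess_db=3.0, guard=2, span=10):
    """Frequencies of narrow local peaks at least min_excess_db above the
    mean level of `span` bins on each side, skipping the `guard` bins next
    to the peak (guard and span are illustrative, not from the study)."""
    peaks = []
    for k in range(guard + span, len(level_db) - guard - span):
        if level_db[k] < level_db[k - 1] or level_db[k] < level_db[k + 1]:
            continue  # not a local maximum
        below = level_db[k - guard - span:k - guard]
        above = level_db[k + guard + 1:k + guard + 1 + span]
        background = np.mean(np.concatenate([below, above]))
        if level_db[k] - background >= min_excess_db:
            peaks.append(float(freqs[k]))
    return peaks
```

The guard bins keep the window's own main-lobe leakage out of the background estimate; widening `span` steadies that estimate at the cost of blurring closely spaced SOAEs into the background.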

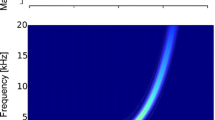

SFOAEs were measured using an adaptation of a swept-tone paradigm (cf. Long and Talmadge 2007; Long et al. 2008). A 35-dB SPL probe tone was swept logarithmically from 500 to 8000 Hz at a rate of 2 s per octave (8 s per sweep). Stimuli were delivered to the subject’s ear canal through ER2 tube phones attached to the ER10B+ probe microphone used to record the OAEs. The sweep was repeated 32 times to permit reduction of noise and intermittent artifacts. Each recording was multiplied by a 0.5-s sliding window with a step size of 25 ms, giving successive windowed segments \(x_{m}^{n}\), where \(n=1,2,\ldots,32\) indexes the sweep repetition and \(m\) indexes the segment. An artifact-reduced segment \(\bar{x}_{m}\) was obtained by averaging those of the 32 segments whose power fell within 75 % of the median. The magnitude coefficients \(\bar{X}_{m}\), at frequency \(F_{m}=500\cdot 2^{(0.025/2)m}\) Hz, were then estimated by a least-squares fit (LSF) to the delayed probe stimulus (Long and Talmadge 1997; Talmadge et al. 1999; Naghibolhosseini et al. 2014). Real-time data processing was controlled via the Fireface UC using MATLAB and Psychtoolbox (Kleiner et al. 2007) in a Windows programming environment. The resulting SFOAE fine structure was high-pass filtered with a cutoff of 1 cycle/octave to reduce the impact of any slow changes in overall SFOAE level (Fig. 1). The levels at the fine-structure maxima (MAX) and minima (MIN) were determined from the filtered SFOAE fine structure.
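
The per-segment steps above can be sketched as follows. This is a simplified illustration, not the authors' LSF implementation. It fits a single sinusoidal component whose phase follows the (optionally delayed) log sweep, and it ignores the shape of the 0.5-s window. It also reads "within 75 % of the median" as |P − median| ≤ 0.75 × median. The helper names and the `delay` parameter are hypothetical.

```python
import numpy as np

F0 = 500.0          # sweep start frequency (Hz)
OCT_PER_S = 0.5     # sweep rate: 2 s per octave
STEP_S = 0.025      # 25-ms step between successive windows

def segment_frequency(m):
    """F_m = 500 * 2**((0.025 / 2) * m): frequency assigned to segment m."""
    return F0 * 2.0 ** (OCT_PER_S * STEP_S * m)

def sweep_phase(t):
    """Phase (rad) of a log sweep whose frequency is F0 * 2**(OCT_PER_S * t)."""
    r = OCT_PER_S * np.log(2.0)
    return 2.0 * np.pi * F0 * (np.exp(r * t) - 1.0) / r

def robust_average(segs):
    """Average repeated segments (rows, one per sweep repetition), keeping
    only those whose power is within 75 % of the median power
    (interpreted here as |P - median| <= 0.75 * median)."""
    p = np.mean(segs ** 2, axis=1)
    med = np.median(p)
    keep = np.abs(p - med) <= 0.75 * med
    return segs[keep].mean(axis=0)

def lsf_magnitude(seg, t, delay=0.0):
    """Least-squares fit of seg ~ a*cos(phi) + b*sin(phi), with
    phi = sweep_phase(t - delay); returns the amplitude sqrt(a^2 + b^2)."""
    phi = sweep_phase(t - delay)
    basis = np.column_stack([np.cos(phi), np.sin(phi)])
    (a, b), *_ = np.linalg.lstsq(basis, seg, rcond=None)
    return float(np.hypot(a, b))
```

In use, with `segs_m` holding the 32 repetitions of window m and `t_m` its sample times, `lsf_magnitude(robust_average(segs_m), t_m, delay)` would give an estimate of \(|\bar{X}_m|\) at \(F_m\).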

Fig. 1. An example of raw (light blue) and filtered (blue) SFOAE fine structure. The raw SFOAE fine structure was high-pass filtered with a cutoff of 1 cycle/octave to reduce the impact of any slow changes in overall SFOAE level on estimates of fine-structure depth. The red line represents SOAEs and the grey line the noise floor.
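
The detrending step can be illustrated with a brick-wall FFT high-pass filter applied to the SFOAE level (dB) sampled along the log-frequency axis, followed by a simple search for local maxima and minima. The filter type is an assumption, since the text gives only the 1 cycle/octave cutoff. With a 25-ms step and a 2 s/octave sweep, segments are 1/80 octave apart, hence `samples_per_octave=80`.

```python
import numpy as np

def highpass_fine_structure(level_db, samples_per_octave=80.0, cutoff=1.0):
    """Remove components slower than `cutoff` cycles/octave from an SFOAE
    level curve sampled uniformly in octaves (brick-wall FFT filter)."""
    x = np.asarray(level_db, dtype=float)
    spec = np.fft.rfft(x - x.mean())
    cycles_per_octave = np.fft.rfftfreq(x.size, d=1.0 / samples_per_octave)
    spec[cycles_per_octave < cutoff] = 0.0
    return np.fft.irfft(spec, n=x.size)

def fine_structure_extrema(y):
    """Indices of local maxima (MAX) and minima (MIN) of a filtered curve."""
    d = np.diff(y)
    maxima = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
    return maxima, minima
```

A brick-wall FFT filter implicitly treats the curve as periodic over the analysed range. A zero-phase IIR alternative (for example, forward-backward Butterworth filtering) would reduce artefacts at the ends of the sweep.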

2.3 Behavioural Task: Two-Tone Level Discrimination

A two-interval, forced-choice procedure was used: on each trial, two-tone complexes were presented in two intervals, a standard and a target. All stimuli were presented monaurally with a duration of 300 ms (SOAE experiment) or 400 ms (SFOAE experiment) and 5-ms cosine-squared rise/fall ramps. In the target interval, the level of each tone was always 3 dB greater than in the standard interval. Small, independent, random perturbations in the level of each tone were applied on every presentation; the perturbations were normally distributed with a standard deviation (σ) of 3 dB. The order of the standard and target intervals was selected at random on each trial. Listeners were asked to choose the interval containing the target (higher-level) sound by pressing a mouse button, and correct-answer feedback was given immediately after each response. Decision weights on the tones were then estimated for each listener from the coefficients of a logistic regression (a generalized linear model) in which the level perturbations were the predictor variables for the listener’s trial-by-trial responses (Berg 1990).

In experiment 1, the frequencies of the two-tone complex were chosen from each listener’s SOAE measurements: one tone was at the frequency of an SOAE and the other at a non-SOAE frequency, either lower or higher than the chosen SOAE frequency. The level of each tone in the standard interval was 50 dB SPL.

SOAEs usually occur near maxima of the SFOAE fine structure (Bergevin et al. 2012; Dewey and Dhar 2014), but SOAEs are not always detectable at such maxima. We therefore also measured the SFOAE fine structure and selected frequencies at its maxima and minima for the behavioural level-discrimination task. In experiment 2, two frequencies were chosen from each listener’s SFOAE fine structure: one at a maximum and the other at a minimum. The level of the standard stimuli was 35 dB SPL. During each testing session, SOAEs and SFOAEs were recorded both before and after the behavioural task.
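
The weight-estimation step (Berg 1990) can be illustrated with a simulated observer. The sketch below is an illustration under assumptions, not the analysis code used in the study. The simulated listener's weights `true_w` and internal noise `noise_db` are hypothetical. The predictors are the interval-2 minus interval-1 perturbation differences for each tone. The logistic regression is fitted by Newton-Raphson in NumPy rather than with a statistics package. The relative weight on tone a is \(W_a/(W_a+W_b)\), the same form used in the Results.

```python
import numpy as np

def simulate_trials(n_trials, true_w=(0.7, 0.3), sigma_db=3.0, inc_db=3.0,
                    noise_db=2.0, rng=None):
    """Simulate a 2IFC two-tone level-discrimination run.

    Returns the per-tone perturbation differences (interval 2 minus
    interval 1, dB) and the responses (1 = chose interval 2).
    """
    rng = np.random.default_rng() if rng is None else rng
    target_in_2 = rng.random(n_trials) < 0.5
    # Independent N(0, sigma) level perturbations: trial x interval x tone
    pert = rng.normal(0.0, sigma_db, size=(n_trials, 2, 2))
    delta = pert[:, 1, :] - pert[:, 0, :]
    # Actual level difference adds the 3-dB increment to the target interval
    sign = np.where(target_in_2, 1.0, -1.0)
    level_diff = delta + (sign * inc_db)[:, None]
    # Hypothetical observer: weighted sum of level differences plus noise
    decision = level_diff @ np.asarray(true_w, dtype=float)
    decision += rng.normal(0.0, noise_db, n_trials)
    return delta, (decision > 0).astype(float)

def fit_logistic(X, y, n_iter=25):
    """Maximum-likelihood logistic regression (intercept + slopes) by
    Newton-Raphson; returns [b0, b1, ..., bk]."""
    X1 = np.column_stack([np.ones(len(X)), X])
    beta = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X1 @ beta)))
        grad = X1.T @ (y - p)
        hess = X1.T @ (X1 * (p * (1.0 - p))[:, None])
        beta = beta + np.linalg.solve(hess, grad)
    return beta

def relative_weight(w_a, w_b):
    """Relative weight of tone a: W_a / (W_a + W_b)."""
    return w_a / (w_a + w_b)
```

For example, `delta, resp = simulate_trials(400)` followed by `b = fit_logistic(delta, resp)` yields the two decision weights `b[1]` and `b[2]`. Running the simulation repeatedly at a few hundred trials shows how much such estimates vary from run to run.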

3 Results

Figure 2 shows the mean relative decision weight for the tone at an SOAE frequency as a function of the mean difference in OAE level between the SOAE and non-SOAE frequencies for six ears. There was a statistically significant positive correlation between the relative decision weights and the OAE level differences (r² = 0.759, p = 0.0148).

Fig. 2. The mean relative decision weight for the tone at an SOAE frequency as a function of the mean OAE level difference between the SOAE and non-SOAE (~SOAE) frequencies. Each decision weight was based on at least 400 trials. The relative decision weight was calculated as \(W_{\mathrm{SOAE}}/(W_{\mathrm{SOAE}}+W_{\mathrm{nonSOAE}})\), where \(W_{\mathrm{SOAE}}\) and \(W_{\mathrm{nonSOAE}}\) are the logistic-regression coefficients for the tones at the SOAE and non-SOAE frequencies. Each colour represents a different ear.

For two listeners, the relative decision weights were compared with the SFOAE level differences between tones at fine-structure maxima and tones at minima (Fig. 3). Both listeners have detectable SOAEs, which occur near SFOAE maxima.

The SFOAE levels obtained at the beginning of the session are associated with the decision weights from the first half of the behavioural trials (filled symbols), and those obtained at the end of the session with the decision weights from the second half (open symbols). The correlation between the relative decision weight and the level difference between tones near fine-structure maxima and tones near minima is statistically significant (r² = 0.48, p = 0.000014). This outcome suggests that the association between decision weights and OAEs does not depend on the detection of SOAEs.

The positions of the frequency pairs used in the behavioural task, together with the decision-weight analyses, are shown in Figs. 4 and 5 for subjects 1 and 2, respectively. The relative weights were transformed into level differences using the regression line fitted in the left panel, and the transformed level differences (an indication of fine-structure depth) are plotted in the right panel as a function of SFOAE frequency.
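
The transformation from relative weights to level differences amounts to inverting a straight-line fit. A minimal sketch follows. It assumes an ordinary least-squares line of relative weight on OAE level difference, since the text does not state the fitting method. The numbers in the usage lines are made up for illustration and are not the study's data.

```python
import numpy as np

def fit_weight_vs_level(level_diff_db, rel_weight):
    """Least-squares line rel_weight = slope * level_diff + intercept,
    plus the squared Pearson correlation r^2."""
    x = np.asarray(level_diff_db, dtype=float)
    y = np.asarray(rel_weight, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    r = np.corrcoef(x, y)[0, 1]
    return slope, intercept, r ** 2

def weight_to_level_diff(rel_weight, slope, intercept):
    """Invert the fitted line: level difference (dB) implied by a relative weight."""
    return (np.asarray(rel_weight, dtype=float) - intercept) / slope

# Illustrative (made-up) values lying exactly on a line
slope, intercept, r2 = fit_weight_vs_level([0.0, 5.0, 10.0, 20.0],
                                           [0.50, 0.55, 0.60, 0.70])
print(round(slope, 3), round(intercept, 3), round(r2, 3))
print(weight_to_level_diff(0.65, slope, intercept))
```

`scipy.stats.linregress` returns the same slope and intercept together with r and a two-sided p-value, which is one way to obtain significance values of the kind reported above.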

Fig. 4. Left panel: relative weight of the tone at an SFOAE fine-structure maximum as a function of the level difference between SFOAE maxima and minima for subject 1. Right panel: the frequencies used in each comparison are represented by number pairs. The level difference between the maximum and minimum (represented by the same number in a pair) is a direct transformation of the relative weight into a level difference using the regression line fitted in the left panel. The rank of the relative weights of the frequency pairs is represented by the font size of the numbers. Weights from the first half (black) and second half (orange) of the behavioural trials are both shown.

Fig. 5. Same as Fig. 4, for subject 2.

4 Discussion

Given that loudness varies with SFOAE maxima and minima, and that loudness is a strong predictor of listener decision weights, we hypothesized that both SFOAEs and SOAEs could be used to predict individual differences in decision weights in a level-discrimination task. As expected, the data showed a significant positive correlation between the OAE level difference at the SOAE and non-SOAE frequencies and the relative decision weights in the two-tone level-discrimination task (see Fig. 2). A similar positive correlation was found with the level difference between SFOAE maxima and minima (see Fig. 3). These results suggest that OAE levels might be used to predict individual differences in more complex target-in-noise listening tasks, and possibly even in diagnosing difficulties in understanding speech in specific noise backgrounds. For clinical applications, swept-frequency SFOAEs might provide a better measure of cochlear fine structure, inasmuch as they are less time-consuming than SOAE measurements and provide a clearer indication of regions of threshold microstructure and variations in loudness.

References

Alexander JM, Lutfi RA (2004) Informational masking in hearing-impaired and normal-hearing listeners: sensation level and decision weights. J Acoust Soc Am 116(4):2234–2247

Baiduc RR, Lee J, Dhar S (2014) Spontaneous otoacoustic emissions, threshold microstructure, and psychophysical tuning over a wide frequency range in humans. J Acoust Soc Am 135(1):300–314

Berg BG (1990) Observer efficiency and weights in a multiple observation task. J Acoust Soc Am 88:149–158

Bergevin C, Fulcher A, Richmond S, Velenovsky D, Lee J (2012) Interrelationships between spontaneous and low-level stimulus-frequency otoacoustic emissions in humans. Hear Res 285:20–28

Cherry EC (1953) Some experiments on the recognition of speech, with one and two ears. J Acoust Soc Am 25(5):975–979

Dewey JB, Dhar S (2014) Comparing behavioral and otoacoustic emission fine structures. 7th Forum Acusticum, Krakow, Poland

Doherty KA, Lutfi RA (1996) Spectral weights for overall discrimination in listeners with sensorineural hearing loss. J Acoust Soc Am 99(2):1053–1058

Epstein M, Silva I (2009) Analysis of parameters for the estimation of loudness from tone-burst otoacoustic emissions. J Acoust Soc Am 125(6):3855–3810. doi:10.1121/1.3106531

Jesteadt W, Valente DL, Joshi SN, Schmid KK (2014) Perceptual weights for loudness judgments of six-tone complexes. J Acoust Soc Am 136(2):728–735

Kemp DT (1978) Stimulated otoacoustic emissions from within the human auditory system. J Acoust Soc Am 64(5):1386–1391

King K, Stephens D (1992) Auditory and psychological factors in auditory disability with normal hearing. Scand Audiol 21:109–114

Kleiner M, Brainard D, Pelli D (2007) What’s new in Psychtoolbox-3. Perception 36:14

Lee J, Long GR (2012) Stimulus characteristics which lessen the impact of threshold fine structure on estimates of hearing status. Hear Res 283:24–32

Long GR, Talmadge CL (1997) Spontaneous otoacoustic emission frequency is modulated by heartbeat. J Acoust Soc Am 102:2831–2848

Long GR, Talmadge CL (2007) New procedure for measuring stimulus frequency otoacoustic emissions. J Acoust Soc Am 122:2969

Long GR, Tubis A (1988) Investigations into the nature of the association between threshold microstructure and otoacoustic emissions. Hear Res 36:125–138

Long GR, Talmadge CL, Jeung C (2008) New procedure for evaluating SFOAEs without suppression or vector subtraction. Assoc Res Otolaryngol 31

Lutfi RA (1995) Correlation coefficients and correlation ratios as estimates of observer weights in multiple-observation tasks. J Acoust Soc Am 97:1333–1334

Lutfi RA, Jesteadt W (2006) Molecular analysis of the effect of relative tone level on multitone pattern discrimination. J Acoust Soc Am 120(6):3853–3860

Lutfi RA, Liu CJ (2007) Individual differences in source identification from synthesized impact sounds. J Acoust Soc Am 122:1017–1028

Mauermann M, Long GR, Kollmeier B (2004) Fine structure of hearing threshold and loudness perception. J Acoust Soc Am 116(2):1066–1080

Naghibolhosseini M, Hajicek J, Henin S, Long GR (2014) Discrete and swept-frequency SFOAE with and without suppressor tones. Assoc Res Otolaryngol 37(73)

Rasetshwane DM, Neely ST, Kopun JG, Gorga MP (2013) Relation of distortion-product otoacoustic emission input-output functions to loudness. J Acoust Soc Am 134(1):369–315. doi:10.1121/1.4807560

Richards VM (2002) Effects of a limited class of nonlinearities on estimates of relative weights. J Acoust Soc Am 111:1012–1017

Talmadge CL, Long GR, Tubis A, Dhar S (1999) Experimental confirmation of the two-source interference model for the fine structure of distortion product otoacoustic emissions. J Acoust Soc Am 105:275–292

Thorson MJ, Kopun JG, Neely ST, Tan H, Gorga MP (2012) Reliability of distortion-product otoacoustic emissions and their relation to loudness. J Acoust Soc Am 131(2):1282–1214. doi:10.1121/1.3672654

Acknowledgments

This research was supported by NIDCD grant R01 DC001262-21. The authors thank Simon Henin and Joshua Hajicek for providing MATLAB code for the LSF analysis.

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution-Noncommercial 2.5 License (http://creativecommons.org/licenses/by-nc/2.5/), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

The images or other third party material in this chapter are included in the work's Creative Commons license, unless indicated otherwise in the credit line; if such material is not included in the work's Creative Commons license and the respective action is not permitted by statutory regulation, users will need to obtain permission from the license holder to duplicate, adapt or reproduce the material.

Copyright information

© 2016 The Author(s)

About this paper

Cite this paper

Lee, J. et al. (2016). Individual Differences in Behavioural Decision Weights Related to Irregularities in Cochlear Mechanics. In: van Dijk, P., Başkent, D., Gaudrain, E., de Kleine, E., Wagner, A., Lanting, C. (eds) Physiology, Psychoacoustics and Cognition in Normal and Impaired Hearing. Advances in Experimental Medicine and Biology, vol 894. Springer, Cham. https://doi.org/10.1007/978-3-319-25474-6_48

DOI: https://doi.org/10.1007/978-3-319-25474-6_48

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-25472-2

Online ISBN: 978-3-319-25474-6

eBook Packages: Biomedical and Life Sciences (R0)