Abstract

Reconsidering the function allocation between automation and the pilot in the flight deck is the next step in improving aviation safety. The current allocation, based on who does what best, makes poor use of the pilot’s resources and abilities. In some cases, it may actually hinder pilots in performing their role. Improving pilot performance begins with defining the role of the pilot – why a human is needed in the first place. The next step is allocating functions based on the needs of that role (rather than fitness), then using automation to target specific human weaknesses in performing that role. Examples are provided (some of which could be implemented in conventional cockpits now). Along the way, the definition of human error and the idea that eliminating or automating the pilot will reduce instances of human error will be challenged.

1 Introduction

There is no doubt that commercial aviation is one of the safest forms of long-distance travel available today. The annual accident rate per million departures has, on average, continued to decrease over time and is now so close to zero that the results are perhaps driven more by chance than by any trend in operational factors. Indeed, there have been years with no hull losses or fatalities [1]. Many factors have contributed to this level of safety: advances in design and materials; advances in sensors; Cockpit/Crew Resource Management (CRM) [2]; enhancements in operational management, procedures and regulations; improvements in navigation aids; and advances in automation (flight control, flight navigation, systems control). Automation is often singled out as the major contributor to improved safety. It has made flying more efficient and precise. The automation rarely fails, and it always behaves as programmed. Automation always remembers what it is supposed to do and when to do it, and automation remains ever vigilant. Automation is not subject to many human frailties.

For many years, The Boeing Company has compiled and published statistics on commercial aircraft accidents worldwide. Until 2005–6, Boeing listed the primary causes of accidents, and year after year, the flight crew was named responsible for between 55 and 80% of all accidents [3]. Unfortunately, this has led to the belief that the flight crew is more of a liability than an asset when it comes to aviation safety. Indeed, many have used this statistic to call for fully automated aircraft, their logic being that if humans are the cause of a significant portion of the accidents, then removing the human from the flight deck will dramatically increase safety. For years, human factors researchers have shown that the interaction between pilots and automation can lead to human error [4–7], but the “remove the human” argument still stands – without the human, there is no need for human-automation interaction, so that problem goes away.

Closer inspection reveals several flaws in this logic. First, while there is significant correlation between the increase in automation and the increase in safety, there does not appear to be much proof of causality. Indeed, from 1980 to 1990 – the advent of advanced automation such as the flight management system – the accident rate actually increased [8]. The trend reversed itself in the nineties as automation became more the norm, but CRM training and practices also became the norm during this period. Secondly, while human failures may have been the primary causes in accidents, in most cases, there is no evidence that automation would have fared any better. It’s time to stop worrying about who is better and work on synergizing pilot and automation.

The first section of this paper will describe how removing pilots will not eliminate human error and how the negative effects of human error might become more problematic and dangerous without pilots. The second part briefly describes how the primary role of the pilot on the flight deck is not mission monitoring/management but avoiding and compensating for the complex, unanticipated, and dangerous situations that arise. The third part argues that the current allocation of tasks and functions between the flight crew and automation can significantly hinder the pilot’s ability to perform their primary role. Finally, suggestions are provided for potentially better function allocation schemes, largely applicable to new flight deck designs, but that could also be implemented in current aircraft designs.

2 “To err is human, …”

Alexander Pope succinctly described humans’ propensity for error in his 1711 poem, “An Essay on Criticism” [9]. Humans, by their very nature, will make errors. Errors, in this context, are acts that the human was not ‘supposed’ to do yet did, or acts that they were supposed to do and didn’t. Making these errors is part of being human. It’s part of our programming – part of the package. Pilots are human. They will make errors. This is the price that comes with humans’ creativity, adaptability, and common sense.

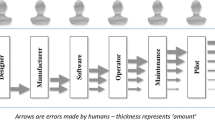

Pilots are not the only humans in the aviation system. Aircraft designers, aircraft manufacturers, software programmers, company owners, air traffic controllers, maintenance crews, and dispatchers are all human, and they will all make errors. Design flaws will occur, bugs will be found in software, bolts will not be tightened properly, call signs will be confused, and procedural steps will be skipped. Given all the error-making humans in the system, it is surprising that the system operates at all, let alone so remarkably well. There are two reasons that it does. First, while humans will make errors, they generally do not; they do the right thing most of the time. Second, while humans do commit errors, they also have the capacity to catch and remediate errors caused by themselves and others. Figure 1 is a notional representation of human errors flowing through the chain of humans, with each link reducing the impact of earlier errors while introducing new ones. It is important to note that the last human in the chain will almost necessarily appear to be the largest source of error, because no later link remains to catch that person’s errors.
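The reasoning behind that last observation can be sketched numerically. The following is a minimal illustration only, under the assumption (not made in the paper) that every link introduces the same amount of error and every later link catches the same fraction of what reaches it; the function name and all numbers are hypothetical.

```python
from __future__ import annotations


def surviving_errors(num_links: int, introduced_per_link: float, catch_rate: float) -> list[float]:
    """For each link, return how much of the error it introduced survives to the end.

    Hypothetical model: each link introduces `introduced_per_link` units of error,
    and each later link catches the fraction `catch_rate` of whatever reaches it.
    """
    survivors = []
    for position in range(num_links):
        later_links = num_links - 1 - position  # links that still get a chance to catch it
        survivors.append(introduced_per_link * (1.0 - catch_rate) ** later_links)
    return survivors


if __name__ == "__main__":
    # Six notional links (for example, designer through pilot); values are illustrative.
    for position, amount in enumerate(surviving_errors(6, 1.0, 0.5), start=1):
        print(f"link {position}: {amount:.3f} units of its own error survive")
```

Under these assumptions the final link always shows the largest surviving share, even though every link errs at the same rate, simply because nothing downstream filters its errors.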

If human pilots are removed from the process, there will still be human error in the system. However, these errors can be more problematic. For example, a design error can affect an entire fleet of aircraft [10], whereas a pilot error generally affects only one or two aircraft. A software error [11] may take quite a long time to manifest itself, whereas a pilot error generally manifests itself within the duration of the flight. An error made by the pilot, if manifested or identified, can often be corrected or compensated for in short order. However, errors earlier in the chain may not be immediately detected or correctable and, indeed, resolution may take days, weeks, or months, depending on the error. A combination of these scenarios could create very difficult problems. For example, suppose that there is a latent software error and that this software is installed throughout the fleet. When the error occurs, how will it be handled? Will the fleet be grounded until it is fixed? Will the fleet need to have its flights restricted to avoid conditions that might trigger the error? Will new software patches be rushed past thorough verification and validation procedures in order to resume operation quickly? In today’s environment, if a software or database error is discovered, a Notice To Airmen (NOTAM) or some other advisory is issued to all pilots, and they quickly implement restrictions or compensate for the error. It is not uncommon for pilots to deal with numerous NOTAMs for each flight.

So, pilot errors affect fewer aircraft, may be more quickly identified and corrected, and have a shorter lifespan and latency than other human errors in the system. Removing the pilot from the system will not eliminate human error and may actually make the consequences of human error worse (e.g., grounding a fleet of aircraft or even leading to multiple aircraft accidents due to the same error). The subject of human error will be returned to in Sect. 4.

3 The Role of the Pilot

There is no doubt that the pilot’s role in the flight deck has changed drastically over the past century. Fifty years ago, a pilot had to do most of the flying manually. The pilot had to manage the systems - balancing fuel and ensuring that the engines were running within parameters. Pilots were the sole navigators on board. Pilots did most everything except provide lift, drag, and thrust. They did this because there were no alternatives. The human could do these tasks much better than the machines of the day. Pilots were hired for their unique skills and personalities.

Today, it is a much different story. Automation can do almost everything better than a human. It can fly more precisely, efficiently, and smoothly. It can monitor hundreds to thousands of pieces of information and never get bored. It will follow procedures to the letter without regard for distractions. It is for these reasons that automation does nearly everything on a modern aircraft these days. As for what the pilot does, it has been described as mission manager, automation manager [12], software programmer, and by some pilots, a glorified bus driver [13]. For these roles, the human needs only a keyboard and cursor control in front of them instead of a control inceptor and a primary flight display. Managers and software programmers are fairly removed from the actual performance of the task. Their job is to tell others (humans or computers) what to do. So why do we still have control inceptors in this highly automated flight deck? Is the task of ‘mission management’ so complex that software could not be created to handle the high level decisions? Couldn’t the management be done from the ground? Why is the human still there?

The answer lies in part in the previous section. The human pilot is there to handle errors and unforeseen situations. The human may recognize the onset of a dangerous situation more quickly than a machine and avoid it altogether. The human often has some big picture awareness or receives other sensory inputs that can lead them to say, “I have a bad feeling about this.” The human is there to adapt and to deal with the unanticipated, or with situations that are just too complicated for every possible response to have been programmed in advance. The human is there for when things go wrong and, in some circumstances, the human is there to perform as a pilot of old – grabbing the stick and throttle and manually flying the aircraft. For efficiency’s sake, this role will be called Exception Handler, although it is much broader than that. The human has exception handling abilities that over 40 years of research in machine intelligence and an exponential growth in computing power and memory have not yet been able to replicate in automation. These human abilities are creativity, adaptability, flexibility, and the ability to understand and perform unusual, unplanned tasks. These abilities are uniquely human, as is the vulnerability to making errors.

The problem is that the situations where the pilot is called on to practice these abilities are rare. Only the human can deal with them, but we don’t need the human to deal with them very often. They are firemen in a village built primarily of fireproof material. They are goalies for a team that hardly ever lets the ball past midfield. What do they do with the rest of their time? The pilot has to be given something to do. Enter the concept of a ‘manager/monitor’. Perhaps we give the goalie an additional task of watching the plays and coaching the team to keep them occupied. But the team doesn’t even need very much coaching. Unlike machines, the human cannot be switched off. They get bored watching the automation relentlessly and reliably take care of everything on the aircraft. And when a problem arises that requires their exception handling skill, they are likely to be caught off guard.

4 Humans Versus Machines

When it comes to basic aviation, navigation, and communication in an aircraft, machines are better than humans. The list of activities where machines exceed human performance continues to grow. If a designer allocates functions based on the better task performer, the machine will continue to be allocated more tasks and functions. This type of allocation has been dubbed the Fitts List approach [14]. To allocate functions using a Fitts List, the designer first performs a function or task analysis in which the overall mission is broken down into its smallest logical components. Then, for each component, the question is asked, “Which agent does this better – human or machine?” The agent that does it best is allocated that task or function. The result should be a near optimal allocation of tasks where the best performer performs every task. It is a survival of the fittest (pun intended) – and automation is winning more and more races.
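The Fitts List procedure described above can be sketched in a few lines. This is only an illustration: the function name, the task names, and the numeric ratings are hypothetical, and sending ties to the human is an arbitrary choice made for the sketch.

```python
def fitts_list_allocation(scores):
    """Allocate each task to whichever agent has the higher rating.

    `scores` maps a task name to a (human_score, machine_score) pair.
    The ratings are hypothetical; ties go to the human in this sketch.
    """
    allocation = {}
    for task, (human_score, machine_score) in scores.items():
        allocation[task] = "machine" if machine_score > human_score else "human"
    return allocation


# Illustrative ratings only; they are not measured values.
example_scores = {
    "hold altitude on a long leg": (0.6, 0.95),
    "monitor engine parameters": (0.5, 0.99),
    "handle an unanticipated failure": (0.7, 0.2),
}

if __name__ == "__main__":
    for task, agent in fitts_list_allocation(example_scores).items():
        print(f"{task}: {agent}")
```

Note that nothing in this procedure asks what the human needs in order to perform the tasks left over; each comparison is made in isolation.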

Unfortunately, when compared to automation of routine monotonous tasks, humans are proving less and less capable of performing their assigned tasks. One would think that, with a lower workload, they would become more proficient in the tasks still allocated to them, but this is not the case. There are several reasons for this state, many of which arise out of basic human characteristics. As has been stated, machines do a lot of things very well. The functions and tasks that they cannot do as well as humans tend to be very difficult, even for humans. In the Fitts List approach, humans tend to get all the hard and ill-defined tasks. This fact naturally leads to a lower success rate for humans than for machines.

Regardless of what tasks and functions have been allocated to pilots, their role as exception handler does not change. They are responsible for the mission. They must step up and step in when the rare problems do occur. Unlike machines, humans are not able to instantly turn on and function at peak performance. They do not easily shift from doing nothing to situation assessment and problem solving. The classic Yerkes-Dodson [15–17] inverted U theory of performance (Fig. 2) holds that humans do not perform well in extremely low arousal/stress, inactive states. They become bored and complacent, and their mental activities turn to non-task related things. In the current allocation scheme, where the pilot turns on the automation and then sits back to monitor it, humans are in the low stress state. The automation almost always does the right thing and, try as they might, humans become complacent. On the other end of the spectrum, when humans are in periods of high stress and high workload, their performance degrades significantly. When a problem in the flight deck does arise, the pilot often must respond quickly. They go from low workload and low stress almost immediately to performing difficult tasks with high workload and high stress. To add to their workload, the automation often does not provide as much support as in low workload periods. As such, pilots are called upon to perform under the least desirable conditions, and often they do not perform well [18]. That the pilot is the last agent who can catch and mitigate errors places even the most highly proficient pilot in a rather undesirable position.
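The shape of the inverted U can be illustrated with a simple stand-in curve. The bell-shaped function below is an assumption chosen only for illustration; it is not the curve from the cited literature, and the function name, the 0 to 1 arousal scale, the optimum, and the width are all hypothetical.

```python
import math


def notional_performance(arousal: float, optimum: float = 0.5, width: float = 0.2) -> float:
    """Bell-shaped stand-in for the inverted U.

    `arousal` is on a hypothetical 0..1 scale; the result is a relative
    performance value between 0 and 1 that peaks at `optimum`.
    """
    return math.exp(-((arousal - optimum) ** 2) / (2 * width ** 2))


if __name__ == "__main__":
    # Monitoring reliable automation (low), moderate engagement (mid), sudden upset (high).
    for label, arousal in [("low", 0.05), ("mid", 0.5), ("high", 0.95)]:
        print(f"{label} arousal: relative performance {notional_performance(arousal):.2f}")
```

In this sketch, a pilot who jumps from the low end to the high end of the scale passes through the peak without ever operating there, which mirrors the transition described above.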

As mentioned, humans are the only agents that have flexibility, creativity, adaptability, and abstract thinking. But with these abilities come a host of human frailties that cannot be removed. These include a relatively poor memory (retrospective, declarative, and prospective), difficulty in multitasking, and a number of biases such as confirmation bias, recency bias, and overreliance on highly reliable agents [4–7, 19–22]. There are physiological factors as well such as the ‘startle’ response, fatigue, and sleep inertia. There are more abstract requirements that feed in to performance: humans need meaning and motivation; they have a need to understand rather than simply respond; they can be overconfident or plagued with self-doubt; and they can become overwhelmed and confused by having the wrong mental models of the situation. The list goes on (see Reason [23] for a more in depth description). It seems that the current function allocation in the flight deck often plays into these frailties and weaknesses.

Consider the following all-too-real [24, 25] scenario. The pilot is in the flight deck monitoring the automation reliably fly the aircraft as it has always done. The pilot looks at the instruments, and they are reading spot-on as usual. Meanwhile, some phenomenon such as ice on the wings causes a change in the performance of the aircraft. The autopilot quietly and dutifully compensates as designed. Finally, the autopilot reaches the end of its authority to compensate. Now, the world changes for the pilot. The autopilot disconnects, essentially leaving no one at the controls. The autopilot blares out its warning that it has disengaged, startling the pilot. The pilot is likely not aware of the inputs that the automation has entered to counter the change. The pilot has little or no time to assess the situation, and must immediately take control of the aircraft and do something. The startle response evokes a reflexive action that is counter to the proper response. The pilot forgets that because the autopilot has disengaged, the normal envelope protections are no longer available and does not consider that the aircraft could stall. Stress has almost instantaneously increased to high levels. Workload has increased, not only physically because the pilot must control the aircraft, but also cognitively as the pilot must try to understand what is going on and how it came to pass. The pilot stalls the aircraft, loses control, and the aircraft crashes. The agency investigating the accident concludes that human error was a major factor because the pilot should have been paying constant close attention, the pilot should not have overreacted or reacted inappropriately, and the pilot should have either recalled that envelope protection was no longer available or should have noticed the reminder on the display. The pilot should have assessed all information, should have acted according to procedure, and simply should have known better. 
But we know that factors such as prolonged vigilance, emotionless responses, perfect memory, and rigid adherence to all procedures are areas where humans often fail. If a system is designed such that the human must not have human traits and weaknesses, then it is running a greater risk of failure due to human error.

As a side note, where was the automation in all of this? Would the automation have saved the aircraft? The answer is clearly that it just stopped flying the aircraft (as designed) and that it would not have saved the aircraft. It was not designed to intervene or try to save the aircraft. Yet, because it functioned exactly as designed, the design of the automation is not held culpable. It is considered more reliable than the pilot, even though the pilot was only being stereotypically human, acting as humans are designed to act. Clearly, and perhaps rightfully so, the bar has been set much higher for humans than for machines.

One could imagine other ways of handicapping the human, but it’s hard to think of methods so subtle yet so effective. There was no malicious intent in the design process. Prior to the 1980s, aircraft were filled with lights and knobs that served to confuse the pilot as much as to inform and empower. The pilot workload was excessively high in nearly all phases of flight. This high workload environment was also conducive to human error – the pilot was in the high stress/arousal portion of the Yerkes-Dodson curve. The modern automation systems introduced in the 80s and 90s dramatically decreased physical workload in the flight deck. Automation was limited by the state of the art in computing and interface technology. Much of the technology on these early flight decks was state of the art for that time. It was designed long before the concept of a graphical user interface existed. The line programming design of the flight management computer was nearly as elegant and user friendly as could be at the time. While it has been criticized as difficult to program, this criticism usually comes from the perspective of the desktop computing revolution embodied in Macintosh and Windows computers.

These gallant attempts to alleviate the pilots’ excessive workload were only partial successes. Studies have shown that workload was shifted from physical to mental and that, in general, automation can be described as silent, blindly obedient, and very brittle [26–28]. In light of what we now know about CRM, it is clear that automation is not a good crewmember. Imagine if a pilot flying failed to let the other members of the crew know that they were having difficulty controlling the aircraft, then suddenly let go of the controls, closed their eyes, folded their arms and shrieked, “I can’t do it! I can’t do it!” over and over until the other pilot was able to silence them. That pilot would not be a pilot for long.

5 Single Pilot Operations

In 2012, NASA began to explore the feasibility of allowing large passenger-carrying jets (operating under Part 121 regulations) to be flown by a single pilot in the flight deck [29]. Such operations could reduce weight, crew costs, and crew scheduling conflicts, and could ameliorate a pilot shortage if one should occur. Research and products produced in this research effort are also likely to improve most other types of aviation, for example, dual crew operations and single pilot general aviation operations. NASA conducted an extensive task analysis that assigned current flight crew tasks to Pilot Flying or Pilot Monitoring, as well as categorizing the tasks as heads-up or heads-down, continuous or discrete, aviate, navigate, communicate, manage systems, and other categories [30]. They also conducted four human-in-the-loop studies that looked at pilots flying alone with various levels of ground support [31, 32]. These studies included full mission gate-to-gate operations, and also scenarios that included system failures and diversions. The results (which shall be published in other papers) indicated that the missions could be completed by a single pilot. That is not surprising, given that these aircraft are designed so that a single pilot can fly the aircraft in the emergency case of an incapacitated pilot. But collectively, the studies suggest that there will probably be situations that will overwhelm the single pilot.

One solution to keeping the single pilot from being overwhelmed is to try to replace the second pilot with even more automation. However, as suggested above, this approach of replacing more of the crew’s duties with automation runs the risk of further exacerbating human error. The single pilot would be ill-used under the Fitts allocation model, and the pilot’s talents and skills wasted. Exception handling skills, including basic piloting skills, are likely to continue to atrophy. Operations would likely remain in the two extreme states of workload. This could be a missed opportunity to maximize the human potential.

Another way of viewing the situation is to view the single pilot as a scarce resource that must be used carefully and judiciously. We have this amazing ‘wetware’ computer in the pilot’s skull, why not make the most of it? The pilot’s skills and abilities should be optimized rather than replaced. Automation should enhance human skills and compensate for human weakness in a very precise and targeted manner. If the automation can perform a task better than a human, the automation can be crafted so as to complement the human, effectively making the human/automation collaboration better than either alone. In this way, instead of removing the pilot and allowing their skills to remain idle until needed, the pilot is used to a much greater extent in all phases of flight. Because pilots generally like to fly and be involved, this additional tasking may actually be welcomed by pilots.

6 Making the Most of Your Human

A new function allocation scheme based on the role of the human pilot may keep the pilot in the high performance region of the Yerkes-Dodson inverted U, thus resulting in better overall pilot performance. The process begins by first determining why the human is involved in the first place. What is their raison d’être for being on the flight deck? This differs from asking what they could do or what they do best. It is more a matter of asking what necessary and critical functions and performances the human brings that are unique to humans. Next, human performance in carrying out this role is examined by asking questions such as ‘What does the human need (information, control, authority) to perform this role?’, ‘What are the necessary operating conditions for the human, and what cognitive and physical state do they need to be in?’, and ‘What conditions and states will lead to poor performance?’ Only then can the function allocation actually begin. Once the human’s role has been established, it is relatively easy to make the first allocations. Anything that is specific to that role is allocated to the human. Next, the information, control, and authority requirements are addressed. What tasks or functions will assist the human in knowing what they need to know, controlling what they need to control, and having authority to perform their role? In the case of the pilot, this list is very long since the pilot may have to perform many of the tasks in the case of emergency. Each task should be rated with regard to the benefits it provides to the human, and those tasks of high benefit should be initially allocated to the pilot. For example, for the pilot to perform their role as exception handler, they need to be constantly aware of the aircraft configuration and location. Manually flying the aircraft provides significant benefits in this regard, so it might initially be assigned to the human.

At this point, the human probably has too much assigned to them and that’s where the next step comes in. All that is known about human performance and human error should now be considered to see where this proposed allocation would break down. For the task of manually flying the aircraft, there are many issues. For example, it is known that the human will quickly suffer from fatigue, that they are liable to forget to make critical decisions such as leveling off at the prescribed altitude, and that the workload is high enough to consume all their attention when their attention is also needed elsewhere. Manually flying provides benefits but it has many problems that keep it from being a practical allocation.

In this case, the entire task of controlling the aircraft is a candidate for further decomposition into lower level tasks and functions, with some tasks allocated to the human and some to the automation. In this further decomposition, each task is assessed for its value and benefit to the original goal (in this example, maintaining awareness of the aircraft’s situation). Do some tasks contribute more than others? The tasks that contribute less may be considered for allocation to the automation. One possible solution in this example is to have the pilot make major course changes, such as altitude changes and heading changes, and allow the automation to control on long route legs. This would be similar to just using the traditional autopilot controls of heading, altitude, vertical velocity, and airspeed. This allocation addresses the fatigue issue while still providing some situation awareness benefit. There is, however, the problem of forgetting to make turns or changes. This is a very common human error. To address this problem, the task of remembering to make changes could be shared with the automation as compensation for the human’s weaknesses. This process continues for all of the functions initially assigned to the human, and each decision must be considered in light of the role that the pilot must perform.
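The role-based process described in this section can be sketched as a small recursive procedure. This is a minimal illustration under stated assumptions, not the allocation method itself: the `Task` structure, the 0 to 1 benefit ratings, the 0.5 threshold, and the wording of the decisions are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    role_benefit: float  # hypothetical 0..1 rating of how much the task supports the pilot's role
    human_risks: list[str] = field(default_factory=list)  # known human weaknesses it exposes
    subtasks: list[Task] = field(default_factory=list)    # optional finer decomposition


def allocate(task: Task, benefit_threshold: float = 0.5) -> dict[str, str]:
    """Return a mapping from task name to an allocation decision."""
    if task.role_benefit < benefit_threshold:
        return {task.name: "automation"}
    if not task.human_risks:
        return {task.name: "human"}
    if task.subtasks:
        # Beneficial but impractical as a whole: decompose and decide piece by piece.
        decisions: dict[str, str] = {}
        for subtask in task.subtasks:
            decisions.update(allocate(subtask, benefit_threshold))
        return decisions
    # Beneficial leaf task with known weaknesses: keep the human, add targeted support.
    return {task.name: "human, with automation compensating for " + ", ".join(task.human_risks)}


# Illustrative ratings for the manual-flying example; the numbers are assumptions.
manual_flying = Task(
    "manually fly the aircraft",
    0.9,
    ["fatigue", "forgetting", "attention capture"],
    subtasks=[
        Task("make major course changes", 0.8, ["forgetting"]),
        Task("hold course on long route legs", 0.2, ["fatigue"]),
    ],
)

if __name__ == "__main__":
    for name, decision in allocate(manual_flying).items():
        print(f"{name}: {decision}")
```

With these assumed ratings, the sketch keeps major course changes with the pilot (with the automation sharing the task of remembering them) and hands the long route legs to the automation, matching the example in the text.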

This is certainly more involved and painstaking than the Fitts List allocation approach. In the Fitts List approach, controlling the aircraft would never be considered for allocation to the pilot because the automation can do it better. As we have seen, though, this wholesale allocation of the flight control function to automation has the effect of removing the human from the loop and allowing situation awareness to drop below acceptable levels for performing the role of exception handler. Returning to the Yerkes-Dodson law mentioned earlier, there are two extremes of stress and workload that produce severely degraded human performance: low stress, where the human has little to do or deal with, and very high stress, where the workload is approaching or is beyond the human’s limitations. In between these two extremes lies a region of high human performance. As workload/stress increases from zero, human performance climbs until it reaches something like a plateau where the human is performing at near optimal levels. The role-based function allocation process has as one of its goals to keep the human in this mid-range of stress, arousal, and workload and to do so in a way that supports the role of exception handler.

In the example given above, an allocation solution was proposed to have the pilot make all major course changes and to have the automation hold those courses. (The full description of this allocation will be outlined in a future paper.) The courses themselves consist not only of the traditional autopilot courses of heading, altitude, and airspeed, but also navigational courses such as airways, approaches, departures, and go-around procedures. The automation keeps track of when those changes need to be made (as well as other flight restrictions) and reminds the pilot to perform them. It is important to note the shift in the human-machine relationship. Returning to the goalie analogy, the pilot is moved to mid-field to work with the automation, and the automation takes the place of the goalie. This goalie does not become bored, and the types of situations that are not handled by the human/automation mid-field (e.g., forgetting, overreacting, hitting the wrong switch) are just the sort that the automation does well.

Another outcome of this allocation is that the automation becomes a better CRM team member. In this allocation, the automation never automatically transitions to a less safe state. Returning to the narrative described in Sect. 4, the automation would alert the pilot that it is having difficulty maintaining control and will ‘ask’ the pilot to take the airplane. But just as is outlined in CRM protocols, the automation must never just unilaterally relinquish control. It tries to ensure that someone is always flying the plane. This helps avoid the human startle response and allows the pilot to assess the situation before taking control of the aircraft.

While the resulting allocation requires a new flight deck design, some aspects can be put into place in current flight decks. For example, the FMS could be used to alert the flight crew of course changes, and the pilots could be required to manually disengage the autopilot, make the course change, and then reengage on the next leg. (This has the added benefit of combating skill loss by providing the pilot with frequent experiences of manual flying in various weather conditions [33].) Similarly, the auto-flight system might be reprogrammed so that it does not automatically disengage but instead alerts the pilot at one or more thresholds that it is reaching design limits; the pilot could then positively disengage it and take control.
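The threshold-based alerting idea can be sketched as follows. This is a hypothetical illustration, not a description of any existing auto-flight system: the function name, the notion of a single "authority used" fraction, the three threshold values, and the message wording are all assumptions.

```python
def autoflight_step(authority_used, pilot_took_control, thresholds=(0.6, 0.8, 0.95)):
    """Return (autopilot_engaged, message) for one control cycle.

    authority_used: fraction (0..1) of the autopilot's control authority in use.
    pilot_took_control: True once the pilot has positively disengaged the autopilot.
    thresholds: hypothetical advisory, caution, and warning levels.
    """
    if pilot_took_control:
        return False, "pilot has control"
    advisory, caution, warning = thresholds
    if authority_used >= warning:
        message = "WARNING: near design limit, please take control"
    elif authority_used >= caution:
        message = "CAUTION: compensation increasing, prepare to take control"
    elif authority_used >= advisory:
        message = "ADVISORY: compensating for changed aircraft performance"
    else:
        message = "normal"
    # The autopilot stays engaged at every level; only the pilot's action disengages it.
    return True, message


if __name__ == "__main__":
    for used in (0.3, 0.65, 0.85, 0.97):
        print(used, autoflight_step(used, pilot_took_control=False))
    print(autoflight_step(0.97, pilot_took_control=True))
```

The design choice this sketch tries to capture is that escalation happens through messages rather than through a change of control state, so someone is always flying the aircraft.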

7 Conclusions

In engineering terms, the human operating system was designed, for various reasons, not to do everything that the rational mind knows it should. We do not require computers to perform tasks that they are not programmed to perform. Yet when it comes to humans, we design systems that expect and count on the human not making typical human errors. And for the majority of the time, humans do not err. But the design and programming of a human being is such that on certain occasions, which are not easily identified, the human will not do everything as they should, and we call these events human errors.

These task “errors” are, however, the result of the normal functioning of a human being. We know a great deal beyond ‘to err is human…’ about how humans function. We ignore this knowledge at our peril. If we as designers expect human pilots to act like machines, we are the ones making the error. Perhaps what we call human error can be described as the negative consequences of normal human behavior that was not accounted for in the design of the system. The proposed scheme for function allocation will never evade all human frailties; the system is simply overconstrained. Normal human behavior will, on occasion, lead to negative consequences. However, role-based function allocation may lead to a more complementary and collaborative use of automation [34]. And it may help to get the most out of the human in the flight deck.

References

Boeing Commercial Airplanes: Statistical Summary of Commercial Jet Airplane Accidents Worldwide Operations 1959–2013. Boeing Commercial Airplanes, Seattle, WA (2014)

Kanki, B., Helmreich, R., Anca, J. (eds.): Crew Resource Management. Academic Press, San Diego (2010)

Boeing Commercial Airplanes: Statistical Summary of Commercial Jet Airplane Accidents Worldwide Operations 1959–2004. Boeing Commercial Airplanes, Seattle, WA (2005)

Sarter, N.B., Woods, D.D.: How in the world did we ever get into that mode? Mode error and awareness in supervisory control. Hum. Factors: J. Hum. Factors Ergon. Soc. 37(1), 5–19 (1995)

Parasuraman, R., Riley, V.: Humans and automation: use, misuse, disuse, abuse. Hum. Factors: J. Hum. Factors Ergon. Soc. 39(2), 230–253 (1997)

Sarter, N.B., Woods, D.D., Billings, C.E.: Automation surprises. Handb. Hum. Factors Ergon. 2, 1926–1943 (1997)

Skitka, L.J., Mosier, K., Burdick, M.D.: Accountability and automation bias. Int. J. Hum Comput Stud. 52(4), 701–717 (2000)

Aviation Safety Network. http://aviation-safety.net/statistics/period/stats.php?cat=A1

Pope, A.: An Essay on Criticism. Clarendon Press, Oxford (1909)

Williard, N., et al.: Lessons learned from the 787 Dreamliner issue on lithium-ion battery reliability. Energies 6(9), 4682–4695 (2013)

Bhana, H.: Trust but verify. AeroSafety World 5(5), 13–14 (2010)

http://aviation.about.com/od/Pilot-Training/tp/7-Reasons-You-Shouldnt-Become-a-Pilot.htm

Jordan, N.: Allocation of functions between man and machines in automated systems. J. Appl. Psychol. 47(3), 161 (1963)

Yerkes, R.M., Dodson, J.D.: The relation of strength of stimulus to rapidity of habit-formation. J. Comp. Neurol. Psychol. 18(5), 459–482 (1908)

Broadhurst, P.L.: A confirmation of the Yerkes-Dodson law and its relationship to emotionality in the rat. Acta Psychol. 15, 603–604 (1959)

Anderson, K.J.: Impulsivity, caffeine, and task difficulty: a within-subjects test of the Yerkes-Dodson law. Pers. Individ. Differ. 16(6), 813–829 (1994)

Casner, S.M., Geven, R.W., Williams, K.T.: The effectiveness of airline pilot training for abnormal events. Hum. Factors: J. Hum. Factors Ergon. Soc. (2012). doi:10.1177/0018720812466893

Funk, K., et al.: Flight deck automation issues. Int. J. Aviat. Psychol. 9(2), 109–123 (1999)

Parasuraman, R., Mouloua, M., Molloy, R., Hilburn, B.: Monitoring of automated systems. In: Parasuraman, R., Mouloua, M. (eds.) Automation and Human Performance: Theory and Applications, pp. 91–116. Lawrence Erlbaum Associates, Mahwah, NJ (1996)

Dismukes, R.K., Young, G.E., Sumwalt, R.L.: Cockpit interruptions and distractions: effective management requires a careful balancing act. ASRS Directline 10, 4–9 (1998)

Billings, C.E.: Human-centered aviation automation: principles and guidelines (1996)

Reason, J.: Human Error. Cambridge University Press, Cambridge (1990)

Bureau d'Enquêtes et d'Analyses: Final report on the accident on 1st June 2009 to the Airbus A330-203 registered F-GZCP operated by Air France flight AF 447 Rio de Janeiro–Paris. Ministère de l’Écologie, du Développement durable, des Transports et du Logement, Paris (2012)

National Transportation Safety Board: Loss of Control on Approach, Colgan Air, Inc. Operating as Continental Connection Flight 3407 Bombardier DHC-8-400, N200WQ, Clarence Center, New York, February 12, 2009. NTSB/AAR-10/01. National Transportation Safety Board, Washington, DC (2010)

Wiener, E.L.: Human factors of advanced technology (glass cockpit) transport aircraft (1989)

Wiener, E.L.: Cockpit automation. In: Wiener, E.L., Nagel, D.C. (eds.) Human Factors in Aviation, pp. 433–461. Academic Press, San Diego (1988)

Woods, D.D.: Decomposing automation: apparent simplicity, real complexity. Autom. Hum. Perform. Theory Appl. 3–17 (1996)

Comerford, D., et al.: NASA’s Single-Pilot Operations Technical Interchange Meeting: Proceedings and Findings. (Report no. NASA-CP-2013-216513). NASA Ames Research Center, Moffett Field, CA (2013)

Schutte, P.: 2015 task analysis of two crew operations in the flight deck: investigating the feasibility of using single pilot in part 121 operations (in press)

Lachter, J., et al.: Toward single pilot operations: the impact of the loss of non-verbal communication on the flight deck. In: Paper Presented at the International Conference on Human-Computer Interaction in Aerospace, Santa Clara, CA (2014)

Lachter, J., et al.: Toward single pilot operations: developing a ground station. In: Paper presented at the International Conference on Human-Computer Interaction in Aerospace, Santa Clara, CA (2014)

Lowy, J.: Automation in the sky dulls airline-pilot skill, Daily News Los Angeles. http://www.dailynews.com/business/ci_18792474. Accessed 31 August 2011

Schutte, P.: Complemation: an alternative to automation. J. Inf. Technol. Impact 1(3), 113–118 (1999)

© 2015 Springer International Publishing Switzerland

Schutte, P.C. (2015). How to Make the Most of Your Human: Design Considerations for Single Pilot Operations. In: Harris, D. (eds) Engineering Psychology and Cognitive Ergonomics. EPCE 2015. Lecture Notes in Computer Science(), vol 9174. Springer, Cham. https://doi.org/10.1007/978-3-319-20373-7_46

DOI: https://doi.org/10.1007/978-3-319-20373-7_46

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20372-0

Online ISBN: 978-3-319-20373-7

eBook Packages: Computer Science, Computer Science (R0)