Abstract

Digital twin technology has become a driving force in the transformation of the manufacturing industry, playing a crucial role in optimizing processes, increasing productivity, and enhancing product quality. A digital twin (DT) is a digital representation of a physical entity or process, modeled to improve decision-making in a safe and cost-efficient environment. Digital twins (DTs) cover a range of problems in different domains at different phases in the lifecycle of a product or process. DTs have gained momentum due to their seamless integration with technologies such as IoT, machine learning algorithms, and analytics solutions. DTs can have different scopes in the manufacturing domain, including process level, system level, asset level, and component level. This work presents the knowlEdge Digital Twin Framework (DTF), a toolkit that comprises a set of tools to create specific instances of DTs in the manufacturing process. This chapter explains how the DTF relates to other standards, such as ISO 23247. This chapter also presents the implementation done for a dairy company.


1 Introduction

1.1 Definition, Usages, and Types of Digital Twins

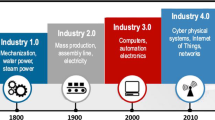

The manufacturing industry is continuously evolving, and digital twin (DT) technology has become a prominent driving force in this transformation. DTs play a crucial role in optimizing manufacturing processes, increasing productivity, and enhancing product quality.

A digital twin (DT) is a digital representation of a physical entity or process, modeled to improve decision-making in a safe and cost-efficient environment where different alternatives can be evaluated before they are implemented. The digital twin framework (DTF) for manufacturing is a set of components for making and maintaining DTs, which describe the current state of a product, process, or resource.

DTs have gained momentum due to their seamless integration and collaboration with technologies such as IoT, machine learning (ML) algorithms, and analytics solutions. DTs and ML solutions benefit each other in both directions. In one direction, DTs simulate real environments and so serve as a source of data for training data-hungry ML algorithms. They can supply data that would otherwise be costly to acquire, such as private data subject to legal and ethical constraints, labeled data, data requiring complex cleaning, abnormal data, or data whose gathering requires intrusive processes. In the other direction, ML models are a type of simulation technique that can be used to simulate processes and other entity behaviors for the DT. Some of the algorithms that can be used for simulation are deep learning neural networks, time-series-based algorithms, and reinforcement learning.
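As an illustration of the DT-to-ML direction, the following minimal sketch uses a toy simulator as a stand-in for a DT and generates labeled samples, including abnormal cases that would be rare or costly to collect on a real shop floor. The machine model, its coefficients, and all function names are hypothetical and are not part of the knowlEdge framework.

```python
import random

def simulate_motor_temperature(load, faulty, rng):
    """Toy stand-in for a DT simulator: temperature (deg C) rises with load."""
    base = 40.0 + 30.0 * load          # assumed linear load/temperature relation
    drift = 15.0 if faulty else 0.0    # assumed extra heating for a faulty unit
    return base + drift + rng.gauss(0.0, 1.0)

def generate_training_set(n_samples, fault_rate=0.2, seed=0):
    """Produce ((load, temperature), label) pairs; label 1 marks a faulty unit."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        load = rng.uniform(0.0, 1.0)
        faulty = rng.random() < fault_rate
        temperature = simulate_motor_temperature(load, faulty, rng)
        samples.append(((load, temperature), int(faulty)))
    return samples

dataset = generate_training_set(1000)
```

Because the fault rate is a parameter of the simulation, abnormal samples can be over-represented on purpose, which is usually not possible with data gathered from a healthy plant.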

DTs are not specific software solutions, but rather a range of solutions that support the improvement of physical products, assets, and processes at different levels and different stages of the lifecycle of those physical assets [3]. Therefore, in the manufacturing domain, DTs can have different scopes [1], such as the following:

1. Process level: recreates the entire manufacturing process. Plant managers use process twins to understand how the elements in a plant work together. Process DTs can detect interaction problems between processes at different departments of a company.

2. System level: monitors and improves an entire production line. System-level DTs cover different groups of assets in a specific unit and can be used for understanding and optimizing assets and processes involved in the production of a specific product [12].

3. Asset level: focuses on a single piece of equipment or product within the production line. Asset DTs can cover cases such as the optimization of energy consumption, the management of fleet performance, and the improvement of personnel assignment based on skills and performance.

4. Component level: focuses on a single component of the manufacturing process, such as an item of a product or a machine. Component-level twins help to understand the evolution and characteristics of the modeled component, such as the durability of a drill or the dynamics of a fan.

The creation of a digital copy of a physical object offers significant advantages throughout its entire life cycle [4]. This includes the design phase, such as product design and resource planning, as well as the manufacturing phase, such as production process planning and equipment maintenance. Additionally, during the service phase, benefits include performance monitoring and control, maintenance of fielded products, and path planning. Finally, during the disposal phase, the digital replica can facilitate end-of-life reuse, remanufacturing, and recycling efforts.

A DT framework is a toolkit that allows developers to create specific DT instances. As such, it is a complex system composed of several tools, such as data gathering and synchronization platforms, multi-view modelers, simulator engines, what-if analytic reporting, and extensive integration capabilities.

Data collection is a crucial aspect of feeding a digital twin in the manufacturing industry. To ensure the accuracy and reliability of the DT’s representation, a robust and efficient data collection platform is essential. Such platforms must offer flexibility, availability, and support for manufacturing communication protocols, while also ensuring efficiency and security. The data collection platform must be able to adapt to different types of data inputs, including sensor readings, machine data, process parameters, and environmental variables. This adaptability enables comprehensive data gathering, capturing a holistic view of the manufacturing process and all its interconnected elements.

Ensuring availability is essential for a data collection platform to be effective. Manufacturing operations typically run continuously, demanding a constant flow of real-time data. The platform should guarantee uninterrupted data acquisition and handle substantial data volumes promptly. It should offer dependable connectivity and resilient infrastructure to prevent data gaps or delays, thereby maintaining synchronization between the DT and its physical counterpart.

To connect to a range of devices, machines, and systems, support for manufacturing communication protocols is crucial. Networked devices that adhere to specific protocols are often utilized in production environments. The data collection platform should therefore be able to interact via well-established protocols like OPC-UA (Note 1), MQTT (Note 2), or Modbus (Note 3). Rapid data transfer, synchronization, and seamless integration are all made possible by this interoperability throughout the production ecosystem.

Finally, security is of utmost importance in data collection for DTs. Manufacturing data often includes sensitive information, trade secrets, or intellectual property. The data collection platform must implement robust security measures, including encryption, access controls, and data anonymization techniques, to protect the confidentiality, integrity, and availability of the collected data. This ensures that valuable manufacturing knowledge and insights remain protected from unauthorized access or malicious activities.
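The flexibility and protocol-support requirements above can be illustrated with a small adapter registry that converts protocol-specific raw inputs into one common record. This is only a sketch under stated assumptions: the shape of the raw dictionaries, the `Reading` record, and the adapter names are hypothetical and do not reflect the knowlEdge Data Collection Platform's actual interfaces.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

@dataclass(frozen=True)
class Reading:
    """Protocol-independent record handed to the rest of the platform."""
    source: str
    name: str
    value: float
    unit: str

def adapt_modbus(raw: Dict[str, Any]) -> Reading:
    # Assumed raw shape: an integer register value plus a scale factor.
    return Reading("modbus", raw["tag"], raw["raw"] * raw["scale"], raw["unit"])

def adapt_mqtt(raw: Dict[str, Any]) -> Reading:
    # Assumed raw shape: a topic plus an already-decoded JSON payload.
    name = raw["topic"].split("/")[-1]
    payload = raw["payload"]
    return Reading("mqtt", name, float(payload["value"]), payload["unit"])

ADAPTERS: Dict[str, Callable[[Dict[str, Any]], Reading]] = {
    "modbus": adapt_modbus,
    "mqtt": adapt_mqtt,
}

def collect(protocol: str, raw: Dict[str, Any]) -> Reading:
    """Dispatch a raw input to the adapter registered for its protocol."""
    try:
        adapter = ADAPTERS[protocol]
    except KeyError:
        raise ValueError(f"unsupported protocol: {protocol}") from None
    return adapter(raw)

reading = collect("modbus", {"tag": "temp", "raw": 235, "scale": 0.1, "unit": "degC"})
```

Supporting a further protocol then amounts to registering one more adapter, leaving downstream consumers of `Reading` unchanged.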

The rest of this chapter is organized as follows: in the next subsection, we explain DT usages in the manufacturing sector. In Sect. 2, we present the digital twin framework. In Sect. 3, we present the case study in which the framework was applied. In Sect. 4, we present the conclusions.

1.2 Digital Twin in Manufacturing

Digital twin technology has a wide range of applications in manufacturing, including predictive maintenance, quality management, supply chain management, and customer experience. This technology can help predictive maintenance break through data fatigue and turn data into a competitive advantage [7]. By monitoring equipment data in real time, the DT can predict equipment failures before they occur, reducing downtime and increasing productivity. In one study, DTs of well-functioning machines were used for predictive maintenance, and the discrepancies between each physical unit and its DT were analyzed to identify potential issues before they became critical [8].

- Predictive Maintenance and Process Optimization: DTs enable manufacturers to monitor equipment performance and predict potential failures or malfunctions, leading to timely maintenance and reduced downtime. Additionally, DTs can optimize manufacturing processes by simulating different scenarios and identifying bottlenecks and inefficiencies [9].

- Quality Control and Inspection: DTs can play a critical role in quality control and inspection processes in manufacturing. By creating a virtual replica of the manufactured product, DTs can detect deviations from the desired specifications and suggest corrective actions to ensure optimal quality [10]. Additionally, DTs can help in automating inspection processes, reducing human error, and increasing efficiency [11].

- Production Planning and Scheduling: By simulating the production environment, DTs can assist in creating optimized production schedules and plans, considering various constraints such as resource availability, lead times, and capacity utilization [13]. DTs can also support real-time adjustments to the production plan, allowing manufacturers to adapt to unforeseen events or disruptions [14].

- Workforce Training and Skill Development: The integration of DT technology in manufacturing can facilitate workforce training and skill development. By simulating the production environment and processes, DTs enable workers to practice and enhance their skills in a virtual setting, reducing the learning curve and minimizing the risk of errors during real-world operations. Furthermore, DTs can provide personalized training and feedback based on individual performance, promoting continuous improvement [15].

- Supply Chain Integration and Visibility: DTs can enhance supply chain integration and visibility in manufacturing by providing real-time information and analytics about various aspects of the supply chain, such as inventory levels, lead times, and supplier performance [16]. This increased visibility enables better decision-making and collaboration among supply chain partners, ultimately improving the overall efficiency and responsiveness of the supply chain.
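The discrepancy-based approach to predictive maintenance mentioned at the start of this subsection can be sketched as a residual check between measured values and the values predicted by the twin of a well-functioning machine. The sample values and the threshold below are illustrative assumptions, not data from the cited study.

```python
def residuals(measured, predicted):
    """Difference between each physical measurement and the twin's prediction."""
    return [m - p for m, p in zip(measured, predicted)]

def flag_discrepancies(measured, predicted, threshold):
    """Return the sample indices where |measured - predicted| exceeds the threshold."""
    return [
        index
        for index, residual in enumerate(residuals(measured, predicted))
        if abs(residual) > threshold
    ]

# Hypothetical vibration readings (mm/s) against a twin that expects 1.0 mm/s.
measured = [1.0, 1.1, 1.0, 1.9, 2.2]
predicted = [1.0, 1.0, 1.0, 1.0, 1.0]
alerts = flag_discrepancies(measured, predicted, threshold=0.5)
```

In practice the threshold would be tuned per signal, and a persistent run of flagged samples is a stronger indication of degradation than a single outlier.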


2 knowlEdge Manufacturing Digital Twin Framework

2.1 Digital Twin Standardization Initiatives

There are many articles referencing potential DT architectures, which provide different forms of naming for the main components and layers of the DT architecture [2, 5, 6].

Most of those DT architectures summarize a DT from a mathematical point of view as a five-dimensional model defined as follows [4]:

$$DT = F(PS, DS, P2V, V2P, OPT)$$

where DT refers to the digital twin, expressed as a function (F) that aggregates the physical system (PS), the digital system (DS), an updating engine that synchronizes the two worlds (P2V), a prediction engine that runs prediction algorithms (V2P), and an optimization dimension containing optimizers (OPT).
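To make the five dimensions concrete, the sketch below maps each of them to a field of a small Python class and runs one synchronize, predict, and optimize cycle. The class, the state representation, and the toy engines are assumptions made for illustration; the cited model does not prescribe any particular implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict

State = Dict[str, float]

@dataclass
class DigitalTwin:
    physical_state: State                  # PS: latest observation of the physical system
    digital_state: State                   # DS: state held by the digital system
    p2v: Callable[[State, State], State]   # P2V: updating engine (physical to virtual)
    v2p: Callable[[State], State]          # V2P: prediction engine
    opt: Callable[[State], State]          # OPT: optimizer acting on the prediction

    def step(self) -> State:
        """Synchronize the digital state, predict, then derive a recommended action."""
        self.digital_state = self.p2v(self.physical_state, self.digital_state)
        forecast = self.v2p(self.digital_state)
        return self.opt(forecast)

# Toy engines: copy observations, forecast a 5-degree rise, switch cooling above 72.
twin = DigitalTwin(
    physical_state={"temp": 70.0},
    digital_state={"temp": 0.0},
    p2v=lambda ps, ds: {**ds, **ps},
    v2p=lambda ds: {"temp": ds["temp"] + 5.0},
    opt=lambda forecast: {"cooling_on": 1.0 if forecast["temp"] > 72.0 else 0.0},
)
action = twin.step()
```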

One of the most relevant initiatives to standardize a DT’s main building blocks is the one proposed by ISO 23247 [17] comprising a DT framework that partitions a digital twinning system into layers defined by standards. The framework is based on the Internet of Things (IoT) and consists of four main layers:

- Observable Manufacturing Elements: This layer describes the items on the manufacturing floor that need to be modeled. Officially, it is not part of the framework, as it already exists.

- Device Communication Entity: This layer collates all state changes of the observable manufacturing elements and sends control programs to those elements when adjustments become necessary.

- Digital Twin Entity: This layer models the DTs, reading the data collated by the device communication entity and using the information to update its models.

- User Entities: User entities are applications that use DTs to make manufacturing processes more efficient. They include legacy applications like ERP and PLM, as well as new applications that speed up processes.
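The flow of information across these layers can be sketched as follows: observable manufacturing elements report state changes, the device communication entity collates them, the digital twin entity updates its model, and a user entity queries that model. The class and function names are hypothetical simplifications and are not taken from the standard.

```python
from collections import deque

class DeviceCommunicationEntity:
    """Collates state changes reported by observable manufacturing elements."""
    def __init__(self):
        self._changes = deque()

    def report(self, element_id, attribute, value):
        self._changes.append((element_id, attribute, value))

    def drain(self):
        """Yield collated changes in arrival order, emptying the queue."""
        while self._changes:
            yield self._changes.popleft()

class DigitalTwinEntity:
    """Keeps a model of the elements, updated from the collated changes."""
    def __init__(self):
        self.model = {}

    def synchronize(self, dce):
        for element_id, attribute, value in dce.drain():
            self.model.setdefault(element_id, {})[attribute] = value

def stopped_elements(dte):
    """Example user entity: list the elements whose status is 'stopped'."""
    return sorted(e for e, attrs in dte.model.items() if attrs.get("status") == "stopped")

dce = DeviceCommunicationEntity()
dte = DigitalTwinEntity()
dce.report("filler-1", "status", "running")
dce.report("mixer-2", "status", "stopped")
dce.report("filler-1", "status", "stopped")
dte.synchronize(dce)
report = stopped_elements(dte)
```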

The Digital Twin Capabilities Periodic Table (CPT) [16], in turn, is a framework developed by the Digital Twin Consortium to help organizations design, develop, deploy, and operate DTs based on use case capability requirements. The CPT is architecture and technology agnostic, meaning it can be used with any DT platform or technology solution. Using a periodic-table approach, the framework groups capabilities around common characteristics into the following main clusters:

- Data Management: This cluster includes capabilities related to data access, ingestion, and management across the DT platform from the edge to the cloud.

- Modeling and Simulation: This cluster includes capabilities related to the creation of virtual models and simulations of real-world entities and processes.

- Analytics and Artificial Intelligence: This cluster includes capabilities related to the use of analytics and artificial intelligence to analyze data and generate insights.

- Visualization and User Interface: This cluster includes capabilities related to the visualization of digital twin data and the user interface used to interact with the DT.

- Security and Privacy: This cluster includes capabilities related to the security and privacy of DT data and systems.

- Interoperability and Integration: This cluster includes capabilities related to the integration of DT systems with other systems and the interoperability of DT data with other data sources.

ISO 23247 and the Digital Twin Capabilities Periodic Table are generic frameworks that are worth taking into consideration when developing a digital twin framework because they provide a consistent and structured approach to digital twin implementation. Section 2.3 presents the alignment carried out between the knowlEdge Digital Twin Framework and ISO 23247.

2.2 knowlEdge Digital Twin Framework

The knowlEdge DT framework is a toolkit solution composed of a set of modeling, scheduling, visualization, analysis, and data gathering and synchronization components. It is capable of creating instances of manufacturing digital twins at different scopes and phases of the product, process, and asset lifecycle.

The components composing the solution (see Fig. 1) are described as follows:

- Sensor Reader Interface: This interface is composed of the set of field protocols needed for connecting the pilots’ sensors to the knowlEdge Data Collection Platform [18]. The interface has to be aware of the details of the protocols in terms of networking, configuration, and specific data model.

- Sensor Protocol Adapter: Once data has been read, the Sensor Protocol Adapter determines whether the data is meaningful for the Data Collection Platform or has to be collected and presented as raw data.

- Unified Data Collector: This module is responsible for adding semantics to the lower-level objects and making them available to the upper level.

- Data Model Abstractor: The Data Model Abstractor unifies the different information models, which depend on the specific field protocol, so that protocol-specific details are hidden when the data is presented to the real-time broker.

- Data Ingestion: This interface is responsible for offering different mechanisms to communicate with the DT framework, such as MQTT or REST API services.

- Platform Configurator: The Platform Configurator exposes a REST API for the configuration of all the internal and external modules. Examples of configurations are the topic where the platform publishes the data, the configuration of the platform when a new sensor is plugged into the system, its information model, etc.

- DT Designer UI Interface:

  - DT Domain Model UI: This is the UI that allows an IT person or a skilled operator to define the DT domain data model, that is, the digital assets contained in the model and their features, and to assign them their behavior and graphical representation. This UI will provide subsections to specify simulation services.

  - DT Visual Editor: This component allows editing the 3D elements that will be used to animate the 3D visualization when needed.

- DT UI Interface: This is the set of end-user interfaces used for running simulations and visualizing results through reports.

- DT User View Backend: This is the backend engine that, according to the decision view of the digital twin, renders the different widgets (indicators, tables, 3D view) that were defined at design time.

- Digital Domain Model Manager: This is the main backend of the DT. It is in charge of creating new DT instances based on data model definitions and connecting them to existing simulators and other AI algorithms (such as reinforcement learning for production scheduling or neural networks for simulating the energy consumption of manufacturing machines). Domain Data Models contain the digital entities that will be part of the digital twin model, that is, the machines, resources, places, and people. The Digital Domain Model Manager will support the decomposition of digital elements into their parts through trees, and their connection with the physical objects through the knowlEdge real-time brokering component.

- DT Data Aggregator: This is the backend component in charge of keeping the DT model synchronized with its physical counterparts and offering APIs to the rest of the components of the architecture. One of its components is the context broker, which is based on the FIWARE Stellio Context Broker (Note 4).

- 3D Visualization Engine: This component can render 3D scenes of the simulations when a design is provided. The rendered scenes can be embedded into dashboards used by the operators when running simulations.

- Behavior Manager: This component is in charge of keeping a linkage with the endpoints of the algorithms that define the behavior of digital things, for instance, a linkage to the knowlEdge HP AI component that provides a REST API to the repository of knowledge AI algorithms that are to be tested using the DT. This subcomponent is also in charge of keeping a repository of linkages, through a REST API, to simulators and other algorithms that can be third-party solutions provided by external providers. The Behavior Manager has a scheduler engine that runs simulations according to time events or data condition rules, which are executed against the DT model that is being filled with data from the IoT devices.
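The data condition rules handled by the Behavior Manager's scheduler engine can be sketched as follows. In the framework, simulators are reached through REST endpoints; in this simplified sketch they are local callables, and the rule names, thresholds, and rates are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Model = Dict[str, float]

@dataclass
class Rule:
    name: str
    condition: Callable[[Model], bool]   # data condition evaluated against the DT model
    simulator: Callable[[Model], Model]  # stand-in for a call to a simulator endpoint

class BehaviorScheduler:
    """Runs every registered simulator whose data condition holds for the model."""
    def __init__(self) -> None:
        self.rules: List[Rule] = []

    def register(self, rule: Rule) -> None:
        self.rules.append(rule)

    def on_model_update(self, model: Model) -> Dict[str, Model]:
        return {r.name: r.simulator(model) for r in self.rules if r.condition(model)}

scheduler = BehaviorScheduler()
scheduler.register(Rule(
    name="refill",
    condition=lambda m: m.get("tank_level", 100.0) < 20.0,
    # Assumed refill rate of 4 level units per minute.
    simulator=lambda m: {"refill_minutes": (100.0 - m["tank_level"]) / 4.0},
))
scheduler.register(Rule(
    name="overheat",
    condition=lambda m: m.get("temp", 0.0) > 80.0,
    simulator=lambda m: {"cooldown_minutes": m["temp"] - 80.0},
))
results = scheduler.on_model_update({"tank_level": 12.0, "temp": 65.0})
```

Time-based triggers could be handled in the same way by treating the current time as one more field of the model that conditions can inspect.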

2.3 knowlEdge Digital Twin Framework Alignment with Current Initiatives

The importance of aligning the knowlEdge DT framework to the ISO 23247 standard on DTs cannot be overstated. The ISO 23247 series defines a framework to support the creation of DTs of observable manufacturing elements, including personnel, equipment, materials, manufacturing processes, facilities, environment, products, and supporting documents. Aligning the knowlEdge DT framework to the ISO 23247 standard can help ensure that the framework follows recognized guidelines and principles for creating DTs in the manufacturing industry. The following are the specific blocks of the ISO 23247 standard that have been aligned to the knowlEdge DT framework:

- The knowlEdge DT has considered the terms and definitions provided by the ISO 23247 standard to ensure that the framework is consistent with the standard.

- The knowlEdge DT directly provides many of the ISO 23247 functional entities (see Fig. 2, where the different colors are used to emphasize which functional entity from ISO 23247 is covered by each functional block of the knowlEdge DT). The remaining functional entities are supported through the rest of the components provided by the knowlEdge project, so that all ISO 23247 functional entities are covered. This ensures that the framework meets the needs of the manufacturing industry.

- The knowlEdge DT provides a Graphical DT Domain Data Modeler Editor that has been customized with the ISO 23247 Observable Manufacturing Elements, so users define the digital things using the exact terminology of the standard, such as Personnel, Equipment, Material, Manufacturing Processes, Facilities, Environment, Products, and Supporting Documents.

- The knowlEdge DT provides integration mechanisms that make its usage in the applications and services described in ISO 23247 possible.
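A domain model that uses the standard's terminology for observable manufacturing elements, together with the tree decomposition supported by the Digital Domain Model Manager, might be represented as in the sketch below. The class names, the example plant, and its attribute values are hypothetical and do not reproduce the knowlEdge data model.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Iterator, List

class OMEType(Enum):
    """Observable manufacturing element categories named in ISO 23247."""
    PERSONNEL = "Personnel"
    EQUIPMENT = "Equipment"
    MATERIAL = "Material"
    PROCESS = "Manufacturing Process"
    FACILITY = "Facility"
    ENVIRONMENT = "Environment"
    PRODUCT = "Product"
    SUPPORTING_DOCUMENT = "Supporting Document"

@dataclass
class DigitalThing:
    name: str
    ome_type: OMEType
    attributes: Dict[str, float] = field(default_factory=dict)
    parts: List["DigitalThing"] = field(default_factory=list)

    def walk(self) -> Iterator["DigitalThing"]:
        """Depth-first traversal of this element and all of its parts."""
        yield self
        for part in self.parts:
            yield from part.walk()

# Hypothetical plant fragment with illustrative attribute values.
line = DigitalThing(
    "yoghurt-line",
    OMEType.FACILITY,
    parts=[
        DigitalThing("fermenter", OMEType.EQUIPMENT, {"capacity_l": 5000.0}),
        DigitalThing("filler", OMEType.EQUIPMENT),
    ],
)
equipment = [t.name for t in line.walk() if t.ome_type is OMEType.EQUIPMENT]
```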

3 knowlEdge Digital Twin for Process Improvement

DTs have become an essential tool for improving shop floor processes in the manufacturing industry. One specific application of a DT is scheduling process improvement. By using a DT, manufacturers can optimize their production schedules to improve efficiency and reduce costs. The following describes how the DT framework was applied to a dairy company within the knowlEdge project to improve the management and control of its processes and to automate the scheduling of the weekly production of yoghurt.

The knowlEdge Data Collection Platform (DCP) was used to connect to the shop floor and gather production and demand data. The platform was integrated with various sensors and devices to collect data in real time, and it also collected data from other sources, such as the company’s ERP system (Note 5). Combining these sources gives a complete picture of production and demand. The data was passed through the data collection platform for filtering, formatting, and normalization, which assured data quality and helped keep the DT accurate and reliable (see Fig. 3).

The processed data is pushed through an MQTT broker to the DT framework. The DT uses the data to model the behavior and performance of the manufacturing process. The DT framework was used to model the plant using ISO 23247 Observable Manufacturing Elements concepts (see Fig. 4).
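On the receiving side, each message arriving from the broker has to be applied to the twin's model. The sketch below shows one possible handler, which would typically be invoked from an MQTT client's message callback. The topic layout (`plant/<element>/<attribute>`) and the JSON payload fields (`value`, `ts`) are assumptions made for illustration, not the formats used in the knowlEdge pilot.

```python
import json

def handle_message(topic: str, payload: bytes, model: dict) -> None:
    """Apply one broker message to the in-memory twin model.

    Assumed topic layout: plant/<element>/<attribute>.
    Assumed payload: UTF-8 JSON with a 'value' and a numeric timestamp 'ts'.
    """
    parts = topic.split("/")
    if len(parts) != 3:
        raise ValueError(f"unexpected topic: {topic}")
    _, element, attribute = parts
    data = json.loads(payload.decode("utf-8"))
    attributes = model.setdefault(element, {})
    previous = attributes.get(attribute)
    # Ignore messages older than the stored one so late arrivals do not roll back state.
    if previous is None or data["ts"] >= previous["ts"]:
        attributes[attribute] = {"value": data["value"], "ts": data["ts"]}

model = {}
handle_message("plant/fermenter/temperature", b'{"value": 42.5, "ts": 100}', model)
handle_message("plant/fermenter/temperature", b'{"value": 40.0, "ts": 90}', model)
```

After both calls the model still holds the reading with timestamp 100, because the second message is older and is therefore discarded.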

The DT framework was also used to simulate different scenarios to identify opportunities for process improvement. Specifically, it computed a production schedule based on metaheuristic rules provided by the company and simulated the execution of the schedules under what-if scenarios. This allowed the manufacturing operators to select the optimal production plan according to a range of aspects such as timestamp, resource occupation, uncertainty resilience, or customer satisfaction. Figure 5 shows a partial view of the dashboard generated using the DT Decision View and populated with the information and the results of the DT simulators.
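The idea of computing alternative schedules and comparing them can be sketched with two textbook dispatching rules on a single production line. The company's actual rules are not described in this chapter, so the rules, the orders, and the tardiness criterion below are stand-ins chosen purely for illustration.

```python
def schedule(orders, rule):
    """Sequence orders on one line by a priority rule.

    Returns (sequence of order ids, total tardiness in hours).
    """
    sequence = sorted(orders, key=rule)
    clock = 0
    tardiness = 0
    for order in sequence:
        clock += order["hours"]                      # order finishes at this time
        tardiness += max(0, clock - order["due"])    # hours past its due time
    return [order["id"] for order in sequence], tardiness

RULES = {
    "earliest_due_date": lambda order: order["due"],
    "shortest_processing_time": lambda order: order["hours"],
}

# Hypothetical weekly orders: processing time and due time in hours.
orders = [
    {"id": "plain", "hours": 1, "due": 10},
    {"id": "strawberry", "hours": 2, "due": 2},
    {"id": "vanilla", "hours": 3, "due": 5},
]

results = {name: schedule(orders, rule) for name, rule in RULES.items()}
best = min(results, key=lambda name: results[name][1])
```

With these orders, the earliest-due-date rule meets every due time, while the shortest-processing-time rule accumulates two hours of tardiness, so the comparison selects the former. A what-if scenario corresponds to rerunning the comparison with modified orders or durations.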

4 Conclusions

Digital twin technology has the potential to revolutionize the manufacturing industry by optimizing processes, increasing productivity, and enhancing product quality. By leveraging advanced digital techniques, simulations, and hybrid learning-based modeling strategies, DT technology can help overcome the challenges faced by traditional manufacturing methods and pave the way for the next generation of smart manufacturing.

This chapter has presented the knowlEdge DT framework, an open-source toolkit of DT modules that supports the modeling of physical assets and processes and the execution of functional and AI-based simulators in what-if scenarios to improve decision-making. The tool has also been used to generate synthetic data for training AI algorithms. It is composed of a set of modules, such as a DT Data Modeler, 3D twin modeler, IoT Ingestion Connector, Simulator/AI Manager and Repository, Event Scheduler, DT Live Dashboard, and the Data Collection Platform.

The proposed DT framework was successfully used to create a manufacturing DT instance that generates weekly manufacturing schedules based on a rule-based simulator and a discrete event simulator. The company where it was applied has improved its responsiveness to incidents occurring on the shop floor and optimizes the rescheduling process accordingly.

As more case studies and practical implementations emerge, the true potential of DT technology in manufacturing will become increasingly apparent, driving further transformation and innovation in the industry.

Notes

- 1. OPC Unified Architecture. https://opcfoundation.org/about/opc-technologies/opc-ua/

- 2.

- 3. Modbus. https://modbus.org/

- 4.

- 5. Enterprise Resource Planning.

References

Stavropoulos, P., Mourtzis, D.: Digital twins in industry 4.0. In: Design and Operation of Production Networks for Mass Personalization in the Era of Cloud Technology, pp. 277–316. Elsevier, Amsterdam (2022)

Ogunsakin, R., Mehandjiev, N., Marin, C.A.: Towards adaptive digital twins architecture. Comput. Ind. 149, 103920 (2023)

He, B., Bai, K.J.: Digital twin-based sustainable intelligent manufacturing: a review. Adv. Manuf. 9, 1–21 (2021)

Thelen, A., Zhang, X., Fink, O., Lu, Y., Ghosh, S., Youn, B.D., et al.: A comprehensive review of digital twin—part 1: modeling and twinning enabling technologies. Struct. Multidiscip. Optim. 65(12), 354 (2022)

Kim, D.B., Shao, G., Jo, G.: A digital twin implementation architecture for wire+ arc additive manufacturing based on ISO 23247. Manuf. Lett. 34, 1–5 (2022)

Shao, G., Helu, M.: Framework for a digital twin in manufacturing: Scope and requirements. Manuf. Lett. 24, 105–107 (2020)

Farhadi, A., Lee, S.K., Hinchy, E.P., O’Dowd, N.P., McCarthy, C.T.: The development of a digital twin framework for an industrial robotic drilling process. Sensors. 22(19), 7232 (2022)

Zhong, D., Xia, Z., Zhu, Y., Duan, J.: Overview of predictive maintenance based on digital twin technology. Heliyon (2023)

Hassan, M., Svadling, M., Björsell, N.: Experience from implementing digital twins for maintenance in industrial processes. J. Intell. Manuf., 1–10 (2023)

Lee, J., Lapira, E., Bagheri, B., Kao, H.A.: Recent advances and trends in predictive manufacturing systems in big data environment. Manuf. Lett. 1(1), 38–41 (2013)

Rodionov, N., Tatarnikova, L.: Digital twin technology as a modern approach to quality management. In: E3S Web of Conferences, vol. 284, p. 04013. EDP Sciences (2021)

Fang, Y., Peng, C., Lou, P., Zhou, Z., Hu, J., Yan, J.: Digital-twin-based job shop scheduling toward smart manufacturing. IEEE Trans. Ind. Inf. 15(12), 6425–6435 (2019)

Lu, Y., Liu, C., Kevin, I., Wang, K., Huang, H., Xu, X.: Digital twin-driven smart manufacturing: connotation, reference model, applications and research issues. Robot. Comput. Integr. Manuf. 61, 101837 (2020)

Ogunseiju, O.R., Olayiwola, J., Akanmu, A.A., Nnaji, C.: Digital twin-driven framework for improving self-management of ergonomic risks. Smart Sustain. Built Environ. 10(3), 403–419 (2021)

Ivanov, D., Dolgui, A., Sokolov, B.: The impact of digital technology and Industry 4.0 on the ripple effect and supply chain risk analytics. Int. J. Prod. Res. 57(3), 829–846 (2019)

Capabilities Periodic Table – Digital Twin Consortium. Digital Twin Consortium. Published August 8, 2022. https://www.digitaltwinconsortium.org/initiatives/capabilities-periodic-table/. Accessed 2 June 2023

ISO 23247-2: Automation Systems and Integration – Digital Twin Framework for Manufacturing – Part 2: Reference Architecture. International Organization for Standardization, Geneva (2021)

Wajid, U., Nizamis, A., Anaya, V.: Towards Industry 5.0 – A Trustworthy AI Framework for Digital Manufacturing with Humans in Control. CEUR Workshop Proceedings, http://ceur-ws.org, ISSN 1613-0073 (2022)

Acknowledgment

This work has received funding from the European Union’s Horizon 2020 research and innovation programs under grant agreement No. 957331—KNOWLEDGE. This paper reflects only the authors’ views, and the Commission is not responsible for any use that may be made of the information it contains.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Anaya, V., Alberti, E., Scivoletto, G. (2024). A Manufacturing Digital Twin Framework. In: Soldatos, J. (eds) Artificial Intelligence in Manufacturing. Springer, Cham. https://doi.org/10.1007/978-3-031-46452-2_10


DOI: https://doi.org/10.1007/978-3-031-46452-2_10


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-46451-5

Online ISBN: 978-3-031-46452-2

eBook Packages: Engineering, Engineering (R0)