Abstract

This chapter discusses the integration of ethical deliberations within agile software development processes. It emphasizes the importance of considering ethical implications during the development of software, not just AI. The chapter proposes modes of reflection and deliberation that include disclosive, weighing, and applicative modes of contemplation. It argues that these three kinds of thinking are guided by different normative values. The chapter suggests that agile development is an excellent starting point for implementing ethical deliberations, as it allows for continuous reflection and learning. It also proposes that development teams can perform this task themselves up to a point with proper guidance. This section further discusses the potential of agile processes to naturally accommodate ethical deliberation. However, it also acknowledges the challenges associated with implementing agile processes, especially in the context of machine learning models.

1 Introduction

The widespread societal interest in the potential of artificial intelligence, and machine learning in particular, has sparked a renewed debate on technology ethics. Similar discussions have previously taken place in the areas of preimplantation diagnostics, cloning, and nuclear and genetic engineering. The core question in such debates is which values we should take into account while designing and developing technology and also whether and to what extent we should allow the technology at all. The basic problem is not new: the development of technology already raised philosophically relevant questions in Aristotle’s times, which he addresses in his Nicomachean Ethics. An independent systematic approach of the philosophy of technology in the modern era was introduced by Ernst Kapp (1877) in the second half of the nineteenth century. Since then, philosophy-of-technology considerations and approaches have been discussed under various names with different facets: technology assessment, value-sensitive design, responsibility-driven design, etc. (Friedman et al., 2017; Grunwald, 2010; Van den Hoeven et al., 2015). The growing recognition that software is assuming decision-making responsibilities in various aspects of life, or at the very least, providing decision-making assistance to system users, has engendered a feeling of reduced control. This realization contributes to the increasing significance of technology ethics.

To a software engineer, it comes as a surprise that the current ethics debate focuses on AI and, with few exceptions such as the ISO 4748-7000:2022 standard on addressing ethical concerns during system design (ISO, 2022), not on software in general: Doesn’t such a focus on AI falsely suggest that it is not necessary to consider values when developing first-person shooter games, cryptocurrencies, file-sharing platforms, coronavirus warning apps, or user interfaces for video platforms that are implemented without any form of AI? On the other hand, there indeed are specific ethical challenges associated with AI. However, from a systems perspective, whether a piece of software uses classic algorithms or machine-learned models seems secondary to the implementation of values. Yet, in the second case, another artifact, the training data, must of course be considered operationally. Explainability is often cited as a relevant criterion, but whether a complex algorithm in a distributed system such as a car is really that much easier to understand, and hence less dangerous and more transparent, than a learned decision tree or a trained neural network is a matter of divided opinion (see, e.g., Felderer & Ramler (2021) for classic systems and Elish (2010) for a discussion on machine learning systems). Either way, ethical values are embedded in machines through the design and programming choices made by their creators and developers. For example, when developing a machine learning model, the training data reflects past values, norms, and biases. Ignoring this will perpetuate them into the future. Additionally, the deployment and use of the technology have ethical implications, such as in the case of facial recognition technology, which has been criticized for its potential for invasion of privacy and biased outcomes. As a result, it is important for developers, organizations, and users to consider the ethical implications of their technology and make choices that align with their values and the values of society. This sort of reasoning is called an ethical deliberation.

In this chapter, we will address the question of how to implement ethical deliberations within software development. It turns out that agile development is an excellent starting point (Zuber et al., 2022).

Earlier versions of our considerations on ethics in agile development have been discussed by Pretschner et al. (2021). This chapter specifically emphasizes the philosophical foundations.

2 Codes of Conduct and Software Development

In the last 10 years, more than 100 codes of conduct for software development have been developed by professional associations, companies, NGOs, and scientists. These codes essentially state more or less universally accepted values such as participation, transparency, fairness, etc. (Gogoll et al., 2021). Values are characterized by a high degree of abstraction, which leaves their concrete implications unclear. In software engineering, these codes do not provide the degree of practical orientation that software practitioners are hoping for. They do not provide engineers with immediate instructions for action and often leave them perplexed. Interestingly, the aforementioned codes of conduct often set individual values, such as fairness or transparency, without justification, which could help explain the discomfort of engineers in the face of the lack of concreteness. The implementation of these values is also simple, as long as it does not lead to contradictions, costs, or efforts, i.e., to trade-offs: What is wrong with “transparency”? Nothing—until transparency collides with privacy (or accuracy). There is also nothing to be said against the decision not to develop a guidance system for unmanned aerial vehicles—but the discussion becomes much harder when it leads to lost sales and the need to cut jobs. Thus, the descriptive formulation of isolated values alone is clearly insufficient.

The lack of immediate applicability lies in the nature of things and cannot be avoided. On the one hand, values are formulated in an abstract way. On the other hand, software is very context-specific, ranging from pacemakers and videoconferencing software to visual pedestrian detection systems. This means that software engineering is highly dependent on the context, too (Briand et al., 2017). This implies that we have to apply abstract ideas in a concrete context. Due to their abstract nature, Codes of Conduct hence cannot be a tool that provides step-by-step instructions for an ethical implementation of values in software that fits all contexts. Therefore, the embedding of values in software (development) must always be done on a case-by-case basis and tailored to the concrete context. Such a casuistic endeavor calls for training in ethical reasoning and in practices that ensure that ethical thinking is part of the daily development routine. In this sense, Codes of Conduct address a work ethos by highlighting desirable attitudes that developers and designers need to have themselves. Hence, the focus shifts from ethically desirable products to ethically designing products.

In the following, we argue that agile software development is particularly suited to allow for a case-specific consideration of values as it can foster ethical deliberation and that ethical deliberations can, in turn, close the gap that the Codes of Conduct must leave open. This does, of course, not mean that non-agile development settings cannot embody ethical considerations, as many ideas also apply to non-agile development processes. However, it turns out that the key ideas behind agile development blend surprisingly well with ethical deliberations.

3 Ethical Deliberation

Our challenge is to effectively incorporate ethical concepts into software development processes and thus the software products. This is no straightforward task when morally desirable software is intuitively difficult to identify and examine. As outlined before, it is impossible to define general decisive measures on how to implement and evaluate technology according to ethical criteria due to the context specificity of software and underdetermination of values (Gogoll et al., 2021). This means that we cannot simply create an algorithm that will produce an ethically good outcome. Ethical considerations cannot be resolved by only using checklists or with the help of predefined answers (ibid.) either. Therefore, it remains indispensable to continuously evaluate each new design project from its inception, throughout the development process, its deployment, operations, and its maintenance. Or, to put it another way, we need to normatively weigh, judge, and practically argue throughout the entire life cycle. This is what Brey calls anticipatory ethics and the reason why Floridi and Sanders formulate a proactive ethics (Brey, 2000, 2010, 2012; Floridi & Sanders, 2005). Broadly speaking, their approaches address the necessity of an active ethical stance while designing and developing digital artifacts in contrast to an ex-post ethical technology assessment. To stress the point: even if we had ethically good software, this assessment might change if the software or the operational context changed. For example, when Instagram was launched, it was obviously not started with the goal of making teenagers feel insecure regarding their physical appearance. Yet, when the context of the app changes, the developers need to reevaluate, e.g., how they present photos or if they should show the number of likes on a given photo.

Firstly, we need to identify the values we consider desirable. In fact, codes of conduct may be a good starting point here. Secondly, we need to know how to apply values in particular cases. Hence, we need to understand how to translate ethical values into technical language. This remains the task of a trained techno-ethical judgment (Nida-Rümelin, 2017; Rohbeck, 1993). What does that mean? On the one hand, we need to venture into ethical concepts as well as specific technical know-how, and on the other hand, we need a structure, a praxis, in which we can apply this hands-on knowledge. Here, praxis refers to the practical application of knowledge or theory to real-world situations. It is relevant to highlight that we can often perform an ethical deliberation without the use of classical ethical theories. We call such an endeavor pre-theoretical ethical deliberation: it is oriented toward empirical input and is less principle-driven. However, such pre-theoretical thinking also needs to follow rational rules and standards; it cannot be a mere brainstorming process or stream of consciousness.

In the following, we argue that agile software development, especially when paired with an agile product life cycle that includes DevOps, enables a case-specific consideration of norms and values, promotes ethical deliberations, and can thus close the gap that the codes of conduct necessarily leave open. Agile development can be used to establish a desirable praxis by implementing ethical deliberations to achieve a desired outcome, namely, a morally valuable digital product.

4 Individual Responsibility of the Software Engineer

Before doing so, we must briefly consider who actually bears responsibility in the development and use of software-intensive systems. In the spectrum from complete societal systems to the single individual, there are several actors who can and must take responsibility (Nida-Rümelin, 2011, 2017; Nissenbaum, 1994, 1996): society, the organization developing the system and its subdivisions, the individual developer, the operator, and the user of that system. For example, a specific form of facial recognition may be accepted or rejected by society; an organization may choose to develop systems to identify faces from a certain ethnicity; a developer selects data and algorithms; and both the operator and user bear responsibility for possible misuse of that system. Care robots represent another classic example. Let us remember that our considerations go beyond AI as spelt out by the examples in the introduction: computer games, blockchain-based applications, warn apps, and the like.

Clearly, software engineers are not solely responsible. And above all, they are not responsible for all potential externalities: software engineers are not single-handedly responsible for the fact that the widespread use of Airbnb led to distortions in the housing market or that the existence of Uber leads to an increase in non-public transport. Yet, they do have some responsibility. The perception of their individual responsibility is what our approach is about.

Deliberation is performed by various roles: (1) persons who are well aware of technological possibilities and constraints, most often developers, who are our focus; (2) persons who are capable of making normative reasoning explicit in certain societal subsystems, most often ethicists; (3) empirical researchers, such as domain-specific experts, e.g., developmental psychologists, economists, and biologists; and (4) stakeholders, such as customers, users, or indirectly affected individuals. While we highlight normative modes of thinking and claim that those capacities need to be trained, we are well aware that even if developers are trained in normative reasoning, the deliberation teams will depend on experts from various fields to access domain-specific knowledge regarding the implementation of the product in their respective domain.

5 Agile Software Development

In addition to the guiding theme of simplicity, we argue that agile software development and especially agility as an organizational culture can be roughly simplified to four essential phenomena: planning, incrementality, empowerment, and learning (also see Farley, 2022).

First, at the famous 1968 conference on software engineering in Garmisch, central characteristics of the production of industrial goods, and hardware in particular, were transferred to software. Notably, the separation of design and the subsequent production is such a fundamental concept, the adoption of which was reflected in software development methodologies like the waterfall model and the V-model. In these contexts, design documents are long-term planning artifacts. Yet, software is generally much more flexible than industrial goods, is not subject to a complex process of mass production, and thus requires and also enables rapid reaction to changing requirements and contexts. For this reason, among others, the separation between planning/design and production was reversed in the 1990s by concepts of agility, where planning was interwoven with production. Long-term plannability was considered to be an illusion: “Developers and project managers often live (and are forced to) live a lie. They have to pretend that they can plan, predict and deliver, and then work the best way that they know to deliver the system” (Beedle et al., 1998). The focus was thus shifted from long-term planning, which was underpinned by artifacts such as requirements specifications, functional specifications, and target architectures, to very precise short-term planning at the sprint level, which was accompanied by a reduction in the number of artifacts to be developed (Beck et al., 2001).

Second, the realization that long-term planning is difficult to impossible in a world where requirements and technologies are constantly changing (and they can change because of the flexibility of software!) leads almost inevitably to incremental development. One cornerstone here is the idea to develop individual functionalities sequentially, each one to completion, and then immediately integrate them with the (possibly legacy) system developed so far. This is in contrast with a distributed approach where multiple functionalities are developed at the same time and where system integration necessarily takes place only late in the process. The idea of incremental development elegantly addresses the colossal software engineering problems of integrating subsystems, and it coincides smoothly with the ideas behind continuous integration and deployment.

Third, also as a consequence of the short-term rather than a long-term planning perspective, the organizational culture and the understanding of the role of employees is changing. In a worldview where fine-grained specification documents are handed over to “code monkeys” for implementation, there are “higher-level” activities that write specifications and “lower-level” activities that implement them. In this chapter, we will focus on the Scrum implementation of agile. Therefore, a short introduction into the framework is warranted.

Scrum is an agile framework that’s primarily used in software development and project management. It encourages cross-functional teams to self-organize and make changes quickly, with a focus on iterative and incremental progress toward the project goal.

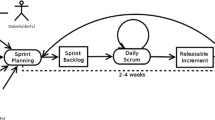

The Scrum framework is based on various key components. The Scrum Team comprises a Product Owner, Scrum Master, and Development Team. The Product Owner is tasked with maximizing the value of the product and interacts with the various stakeholders. The Scrum Master facilitates the use of Scrum within the team. The Development Team is responsible for creating the product increment. Sprints are time-boxed periods, usually lasting 2–4 weeks, within which a usable and potentially releasable product increment is created. The Product Backlog is a prioritized list of requirements, features, enhancements, and fixes to be developed, which is maintained by the Product Owner. Detailed Sprint Planning takes place at the start of each Sprint, where the team plans the work to be performed and commits to a Sprint Goal. The Daily Scrum, or Standup, is a 15-minute meeting where the team reviews progress toward the Sprint Goal and plans for the next 24 hours of work. At the end of each Sprint, a product-oriented Sprint Review takes place, where the team presents the work completed during the Sprint to stakeholders for feedback. Following this, a process-oriented Sprint Retrospective is conducted. The team reflects on the past Sprint, discussing what went well and what didn’t, and plans improvements for the next Sprint. The central idea behind Scrum is to deliver valuable, high-quality work frequently and adapt to changes rapidly.
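The relationship between the Product Backlog and Sprint Planning can be made concrete with a small sketch. The following Python fragment is only an illustration under stated assumptions: the names `BacklogItem`, `ProductBacklog`, and `plan_sprint`, the "lower number means higher priority" convention, and the use of story points as a capacity unit are all hypothetical and are not prescribed by Scrum or by this chapter.

```python
from dataclasses import dataclass, field


@dataclass
class BacklogItem:
    title: str
    priority: int  # assumed convention: lower number = more important
    estimate: int  # assumed unit: story points


@dataclass
class ProductBacklog:
    """Prioritized list of work, maintained by the Product Owner."""
    items: list[BacklogItem] = field(default_factory=list)

    def add(self, item: BacklogItem) -> None:
        self.items.append(item)
        # Keep the backlog ordered by priority (the sort is stable).
        self.items.sort(key=lambda i: i.priority)


def plan_sprint(backlog: ProductBacklog, capacity: int) -> list[BacklogItem]:
    """Greedy sketch of Sprint Planning: walk the backlog in priority
    order and take every item that still fits the team's capacity."""
    selected: list[BacklogItem] = []
    remaining = capacity
    for item in list(backlog.items):
        if item.estimate <= remaining:
            selected.append(item)
            remaining -= item.estimate
    # Selected items leave the backlog and form the sprint scope.
    for item in selected:
        backlog.items.remove(item)
    return selected


# Hypothetical usage with made-up items.
backlog = ProductBacklog()
backlog.add(BacklogItem("Export report", priority=2, estimate=8))
backlog.add(BacklogItem("User login", priority=1, estimate=5))
backlog.add(BacklogItem("Dark mode", priority=3, estimate=3))
sprint_scope = plan_sprint(backlog, capacity=8)
```

In practice, Sprint Planning is a negotiation within the team rather than a greedy selection; the sketch only shows how a prioritized backlog and a time-boxed capacity interact.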

In a Scrum-based agile environment, the primary focus is on addressing high-level requirements, known as “user stories” in the product backlog instead of module specifications. This approach empowers teams with more extensive design capabilities right from the start and across various aspects of the system, which in turn is reflected in cross-functional teams. The team is empowered when compared to the world of the waterfall or V-model and has much greater freedom in its design activities. The team decides how a feature is developed—and can thus influence ethical outcomes. This ability to make decisions, in turn, has direct consequences for the structure of the organization, as it raises the question of what the role of “managers” at different hierarchical levels is in such a world. It also explains why agile software development in non-agile corporate structures regularly does not work as one might have hoped for. It is noteworthy that the possibility of ownership through empowerment is, in our eyes, also an obligation of ownership.

Fourth, a central idea behind agile ways of working is a culture that embraces error and a culture of learning. Agile organizations can only be successful if they rely on a cybernetic feedback loop. This idea is closely related to the emphasis on short-term planning: because of a (necessary) lack of knowledge, inadequate design decisions will likely be taken, and the development of functionality may very well show that a chosen (technical) path cannot be pursued further in this way. If this is accepted and, in this sense, “mistakes” are perceived as common occurrences, mechanisms for learning from these mistakes must be established. In Scrum, this is reflected in reviews and retrospectives and results in the need for constant empirical process control. In terms of implementing values, this means that an organization continuously learns and improves how to do this.

We do not want to give the impression here that agility is the silver bullet; there is no such thing. Among others, the size of projects, domains with regulated development processes and certifications, organization and logistics of production of hardware-based systems, and the ability and possible lack of desire of employees to work independently are natural and long-known stumbling blocks. Especially in the context of creating pure machine-learned models, it is not directly obvious what agile development actually means, since the act of training models does not lend itself easily to an incremental and iterative workflow (unless training the model for one purpose becomes one sprint, which is the perspective we are taking in this chapter). It is also an open question how to map DevOps workflows, and more specific approaches like MLOps, onto agile processes. However, whenever agile processes are a fitting solution, including in situations where machine-learned models are used, it turns out that the four facets of agile development mentioned above allow ethical deliberation to happen in a very natural way. The dual perspective of how characteristics of modern (agile) software production as such have ethical consequences is explored by Gürses and Van Hoboken (Gürses & Van Hoboken, 2017).

The success factors and characteristics of agility collide to some extent with approaches such as the ISO 4748-7000:2022 standard on ethical systems development, which explicitly does not consider agile approaches and suggests that all ethical issues can be identified before software is written. Our considerations above indicate that the move toward agile development processes happened for good reasons, which is why we embed our ethical deliberation into those processes rather than confine ourselves to rather static up-front planning methods. While such up-front methods are suitable and maybe necessary in some contexts, more often than not, they slow down product development. We think that ethical deliberation needs to be part of development itself. Our approach is designed to scale with the speed of the overall process.

In agile environments, particularly in agile software development, teams operate with a high degree of autonomy within progressively flattened hierarchies. They independently develop features in brief cycles, guided more by broad user requirements than detailed system specifications. Empowerment now means that software engineers can and must have a direct influence on the consideration of values through technology. To a large extent, however, this normative procedure is only possible when concrete design decisions are pending, i.e., when software is already being developed, and not completely before development. Constant reflection and learning are almost by necessity part of an agile culture, into which ethical considerations can be seamlessly embedded.

In our approach, we have combined these pieces into an augmented Scrum process (Fig. 1). The core idea is that, before the regular agile cadence begins, in a sprint 0, we first proceed descriptively and align ourselves with societal and organizational value specifications, i.e., we start from a framework defined by society and organization. Second, in the relationship between the product owner and the client, central ethical values are identified within this framework on a project-specific basis, if necessary, and become part of the product backlog. This can be done on the basis of existing codes of conduct or with other tools and methods that are specific to culture and context. We call this the normative horizon that is established during disclosive contemplation.

Fig. 1 Embedding ethical deliberations into Scrum; based on Zuber et al. (2022), http://creativecommons.org/licenses/by/4.0/

Within each individual sprint, it is a matter of identifying new values and implementing normative demands through suitable technical or organizational mechanisms. To do so, developers must continue to be clear about their value concepts in their totality in each sprint. In particular, to avoid risks and harms, they need to think about the consequences of a chosen methodology, a chosen solution approach, a chosen architecture, a chosen implementation, or a chosen data set. At this point, this is done much more concretely than it could have been done before the start of the development, because an increasingly detailed understanding of the system emerges here. Moreover, while reflecting on the implementation of values, it may of course be realized that further values need to be considered.

Let us first focus on disclosive contemplation. Disclosive reasoning is an epistemic endeavor. In this phase, thinking means identifying ethically relevant values within an environment that is partly transparent and partly opaque. Such a reflective process requires a different form of normative orientation: on the one hand, one must take a look at the digital technology itself. On the other hand, one needs to analyze the normative demands of a specific social subsystem. Throughout the project, the product owner elicits technical requirements from the stakeholders, performing such an ethical deliberation, and adds them to the backlog, for example, as user stories. At the beginning of each sprint, the product owner, the developers, and the scrum master decide which backlog items to work on. In this process, they prioritize backlog items and focus on weighing contemplations. When a backlog item is implemented, individual developers mainly need applicative reasoning to decide on technical realizations. After each sprint, each increment is reviewed with the customer and relevant stakeholders in the sprint review meeting. Finally, the process is reviewed in the sprint retrospective. This might also be followed by backlog grooming, in which the product owner restructures and revises the backlog for future sprints.
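The assignment of contemplation modes to Scrum activities described above can be sketched as a small bookkeeping structure. The Python fragment below is a minimal sketch, not part of the approach itself: the names `Mode`, `DOMINANT_MODE`, `DeliberationNote`, and `ValueAwareItem`, as well as the activity labels, are hypothetical. It only records who deliberated about which value and why; it does not, and cannot, automate the deliberation. Because the text assigns no dominant mode to the sprint review and retrospective, the sketch requires the mode to be stated explicitly there.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Mode(Enum):
    DISCLOSIVE = "disclosive"    # identify ethically relevant values
    WEIGHING = "weighing"        # balance normative arguments, decide
    APPLICATIVE = "applicative"  # translate values into technical form


# Dominant mode per activity, following the description in the text.
# None marks activities for which the text names no dominant mode.
DOMINANT_MODE: dict[str, Optional[Mode]] = {
    "sprint_0": Mode.DISCLOSIVE,
    "backlog_elicitation": Mode.DISCLOSIVE,
    "sprint_planning": Mode.WEIGHING,
    "implementation": Mode.APPLICATIVE,
    "sprint_review": None,
    "sprint_retrospective": None,
}


@dataclass
class DeliberationNote:
    activity: str
    mode: Mode
    value: str
    rationale: str


@dataclass
class ValueAwareItem:
    """A backlog item that carries values and a deliberation trail."""
    title: str
    values: list[str] = field(default_factory=list)
    notes: list[DeliberationNote] = field(default_factory=list)

    def record(self, activity: str, value: str, rationale: str,
               mode: Optional[Mode] = None) -> DeliberationNote:
        if activity not in DOMINANT_MODE:
            raise ValueError(f"unknown activity: {activity}")
        # The modes may alternate and overlap, so an explicit mode
        # always overrides the dominant one.
        chosen = mode if mode is not None else DOMINANT_MODE[activity]
        if chosen is None:
            raise ValueError(f"{activity} has no dominant mode; pass one")
        if value not in self.values:
            self.values.append(value)  # newly disclosed value
        note = DeliberationNote(activity, chosen, value, rationale)
        self.notes.append(note)
        return note


# Hypothetical usage, loosely based on the like-count example above.
item = ValueAwareItem("Show number of likes on photos")
item.record("backlog_elicitation", "well-being",
            "Visible like counts may affect teenagers' self-image.")
item.record("sprint_planning", "transparency",
            "Hiding counts trades transparency against well-being.")
```

A trail of this kind can serve as input to reviews and retrospectives, where the team revisits earlier decisions as its understanding of the system grows.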

Not all values can be implemented in individual sprints through code alone. For instance, if transparency is a value to be implemented, then a socio-technical accountability infrastructure may be one solution. By definition, such a solution is likely to affect policies, procedures, roles and responsibilities, and ultimately the culture of an organization, none of which can be changed by code in individual sprints alone.

We hinted above that ethical arguments become interesting when they conflict with other moral, aesthetic, or economic arguments that need to be considered during deliberation. Such conflicts sometimes lead to dilemma situations, which by definition cannot be resolved, but only decided. It is therefore necessary not to prolong this discussion arbitrarily but to come to a result within the planning of a sprint.

This process is a context-specific process of reflection and deliberation, which must be structured accordingly and carried out permanently (Fig. 2). There is a proposal to permanently include an “embedded ethicist” (McLennan et al., 2020) in development teams. This seems too heavyweight to us, as it quickly seems too expensive for smaller companies, and there also is a general shortage of people with these skills that cannot be addressed quickly. With proper guidance, we believe that development teams can perform this task themselves up to a point. This is exactly what our approach aims to do: it includes the lightweight identification, localization, and critical reflection of relevant values. While the former represent a descriptive phase, the latter is already essentially normative. At the same time, technical feasibility or even intentional abstinence must always be discussed. We can systematically accompany this process in order to arrive at well-founded decisions and technical solutions. This does not always require a scientific-ethical analysis as we often reach a desirable level of ethical deliberation with pre-theoretical knowledge.

6 Ethical Deliberation in Agile Processes

The techno-ethical judgment must necessarily encompass three modes of contemplation (Fig. 2): disclosive, weighing, and applicative. First, we must recognize normatively relevant facts, i.e., we pursue an epistemic endeavor. We cannot assume that looking at Codes of Conduct, stakeholder surveys, or intuitive brainstorming is sufficient. Each of these, in itself, has its justification, but also its shortcomings (Gogoll et al., 2021). The judgment of the disclosive contemplation must be comprehensible for everyone. Only in the form of a rational argument can we justify our focus on specific facts (Blackman, 2022). The weighing mode of contemplation refers to decision-making. The objective is to balance normative arguments in order to arrive at a well-reasoned decision. It is essential that the values identified through observation can be technically executed or incorporated. The third mode is the applicative mode of contemplation: It requires contemplating how to translate values into technical functionality. Thus, the third mode requires a contemplation from values to translation activity aiming at technical functionality or technical solutionism. All three modes of contemplation are not to be thought of linearly. In fact, they can alternate and sometimes overlap: it is more like a back and forth.

First, we compile all the values from the internal ethical guidelines and other association guidelines. Initially, this is a descriptive approach. It does not make any further assessment and does not question the values found.

In the relationship between product owner and the client, if necessary, project-specific central values will be identified and become part of the product backlog. This can be based on existing codes of conduct, as well as on culturally and context-specific tools and methods. The main reason to do this early in the development life cycle is to ensure that everyone is on the same page and no one works on a project that goes against their core values. However, not only values are of interest, but moral requirements, social norms, and desirable virtues and practices must also be able to find consideration in a moral evaluation. Furthermore, possible consequences of the use of the software system play a role. Similar to the ISO 4748-7000:2022 standard (ISO, 2022) ethical theories may give some additional epistemic guidance. This means that in this case, ethical theories are more useful in identifying the significant moral aspects of software systems rather than serving as a means to decide on morally good actions at an individual level. Consequentialist ethics, such as utilitarianism, which weigh outcomes based on a specific net sum, provide a criterion for normative thinking to ensure stable and rational evaluations (Driver, 2011). Deontological ethical theories support disclosing normative aspects under some form of a universalization principle (Darwall, 1983). Normative thinking in respect to deontological demands will point to the fact that no further balancing is morally appropriate: in the case of developing some medical device, for example, the value of healing cannot be counterbalanced with economic or aesthetic values. Normative thinking is not only about trade-offs but also about understanding limitations that are morally demanded.

Additionally, it is crucial to acknowledge that normative thinking involves not only the consideration of values but also the inclusion of desirable attitudes or patterns of behavior. Such approaches are commonly discussed in virtue ethics, which involves understanding the social interactions that allow desirable attitudes to manifest themselves. Hence, this approach is an appeal to desirable character traits as well as to practices that enable such traits to be fruitful. It is less a cognitive process than a form of education and internalization of skills, attitudes, and evaluations. This, however, does not make virtue ethics less rational (Siep, 2013). Virtue ethics is of great importance for understanding technology because it can highlight the ways in which digital technology fosters or corrupts desirable character attitudes as well as practices.

However, we feel that profound knowledge of ethical theories is not part of software engineering and also not a prerequisite for developing software. Yet, as a means to pinpoint ethically relevant issues, it is useful to ask questions such as the following: Is a world desirable where everyone has access to this technology? Is it justifiable that everyone would use technology in a certain way? Such universalization tests reveal spaces that are morally questionable. Or more broadly: how does one assess the expected consequences? Is a world more desirable in which we, for instance, nudge older people to drink more water by digital means, e.g., because their television set is automatically turned off in case of insufficient water consumption or because they receive an electrical impulse? This kind of questioning belongs to the disclosive mode of contemplation but already shows the transition into the weighing mode. The weighing mode of contemplation involves prioritizing and delimiting thinking, so that we can come to a conclusion. Throughout, we intermingle technological solutionism with moral concepts: this is the applicative mode of contemplation. Hence, the identification of values, principles, and norms and their technological implementation are thought together to form reasonable technical or non-technical solutions.

Within each individual sprint, the third challenge is to implement these values through appropriate mechanisms. To do this, developers must continue to agree on the normative demands in each sprint and particularly consider the potential implications of the selected approach, methodology, architecture, or deployment scenario. This also corresponds to a work culture that favors ethical deliberations rather than suppressing thinking outside technological functionality.

7 Example

Building on these theoretical foundations, how can we now embed ethical deliberations into a practical setting?

To start with, we need to understand what kind of universe of discourse we find ourselves confronted with. Thus, it is important to consider the company’s environment and the product’s purpose. For example, a team writing software for a manufacturer of children’s toys faces different moral demands than a team that works for a manufacturer of weapons. Furthermore, a team building healthcare applications needs different normative solutions than a team that develops an application to share photos. Thus, employees must accept to a certain degree the corporate purpose and culture, i.e., the premise of the universe of discourse.

For our example, we assume that our team, consisting of four developers, Alice, Bob, Claire, and David, is working for a manufacturer of children’s dolls. The next generation of dolls will be connected to the Internet, respond to the children’s voice commands, and engage in basic conversations. Basically, we have ChatGPT behind a text-to-speech processor and in the package of a doll. Note that while the doll uses ChatGPT, it is not a pure AI application. It is a normal software application that uses ChatGPT as a service and can thus be developed just like any other classical piece of software.

7.1 Ethical Deliberation: Disclosive Contemplation

To define our normative horizon, we begin sprint 0 and identify normatively relevant aspects in a structured process. We start by using the company’s ethical codex, industry values, and stakeholder interests. These gathered preferences and statements on values and moral beliefs are already the descriptive part of a disclosive ethical contemplation. Since this will lead to a plethora of values and a mix of ethical demands, we need to work with domain ethics to specify and substantiate normative claims.

The example of a ChatGPT doll raises obvious ethical concerns that are debated in advocatory ethics. In the case of children, advocatory ethics speaks for the still immature, who cannot yet fully enter into their own rights and values, and is thus concerned with child welfare. Advocatory ethics for children is a philosophy and approach that seeks to promote ethical and moral behavior in children and protect their rights and well-being. It strives to promote values such as fairness, equality, respect, empathy, responsibility, and honesty. These values are essential for building a strong moral foundation in children and helping them become compassionate and responsible individuals. By promoting these values, advocates of ethics for children aim to create a world where all individuals are treated with kindness, fairness, and respect and where children learn to make responsible and ethical decisions. These values also justify moral rules that provide a framework for ethical behavior and are essential for promoting positive social interactions and relationships. By teaching children to follow these rules, advocates of ethics for children aim to instill important values and skills that will serve them well throughout their lives. We designate normative demands that derive from the consideration of domain ethics as structural values.

We call normative aspects that are intrinsic to digital technology, such as privacy, security, and transparency, techno-generic values. They are relevant because of the characteristics of digital technology itself and hence independent of a specific application. As a result, these values are commonly mentioned in discussions regarding digital technology. This is why ethical codes of conduct targeting developers, operators, and users typically incorporate these concepts, principles, and values, as opposed to values like “honesty” or “kindness” that are not as pertinent. It is essential to consider these techno-generic values when evaluating the ethical implications of digital technology, but not only these.

There are many educational and interactive applications to engage children in learning and conversation, and that could be part of our doll. These applications can provide a fun and engaging way for children to learn new skills and knowledge while also promoting positive social and ethical values. Some examples of educational apps for children include language learning apps that use speech recognition technology to help children improve their language skills, interactive storytelling apps that use natural language processing to respond to children’s input and encourage them to engage in creative thinking, and chatbot apps that allow children to have conversations with virtual assistants.

A toy or application can still foster moral behavior in children if it is designed and implemented in a way that promotes these positive ethical attitudes. For example, an interactive storytelling app could feature stories that promote empathy, kindness, and fairness and provide opportunities for children to engage in ethical decision-making. A chatbot app could be programmed to respond in ways that encourage respectful and honest communication and offer guidance on how to navigate difficult social situations.

To ensure that such a toy or application promotes positive moral behavior in children, it is important to consider the design and content of the media (techno-generic normative aspects), as well as the ethical implications of its use (structural normative aspects). Developers should take care to avoid reinforcing harmful stereotypes or biases and should consider the potential impact of the doll on children’s social and emotional development. Additionally, parents and caregivers should be involved in the selection and use of such tools and should provide guidance and support to help children understand the ethical implications of their interactions with AI-powered toys and applications.

This first step is crucial to ethical software development. It serves as a critical juncture where the team can approach issues in a structured and rational manner, as opposed to engaging in unstructured discussions that may yield unpredictable and insufficient outcomes. While unstructured approaches may—by chance—lead to some level of improvement, the lack of documentation, reproducibility, and evaluation hinders their effectiveness. Therefore, a structured approach regarding the disclosive contemplation within ethical software development is imperative to ensure that the thought process is transparent and accountable to the team and others. It thus increases the probability of finding the relevant ethical aspects that surround the product and its context of use.
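One way to make the result of sprint 0 documented and reproducible is to record the normative horizon as a small, reviewable data structure. The following is a minimal sketch under stated assumptions, not part of the approach described in this chapter: the class names (`NormativeValue`, `NormativeHorizon`), the fields, and the example entries are hypothetical, and the only rule it enforces is that every value carries a written rationale.

```python
from dataclasses import dataclass, field
from enum import Enum


class ValueKind(Enum):
    STRUCTURAL = "structural"          # derived from domain ethics (here: advocatory ethics)
    TECHNO_GENERIC = "techno-generic"  # intrinsic to digital technology


@dataclass(frozen=True)
class NormativeValue:
    name: str
    kind: ValueKind
    source: str     # where the value was found, e.g., a codex or a stakeholder group
    rationale: str  # the argument for why it matters for this product


@dataclass
class NormativeHorizon:
    """Hypothetical record of the values agreed on in sprint 0."""
    product: str
    values: dict[str, NormativeValue] = field(default_factory=dict)

    def add(self, value: NormativeValue) -> None:
        # A value without a stated argument cannot be reviewed by others.
        if not value.rationale.strip():
            raise ValueError(f"value '{value.name}' needs a written rationale")
        self.values[value.name] = value

    def by_kind(self, kind: ValueKind) -> list[str]:
        return sorted(v.name for v in self.values.values() if v.kind is kind)


# Illustrative entries only; a real catalog would come out of the team's deliberation.
horizon = NormativeHorizon(product="connected doll")
horizon.add(NormativeValue("honesty", ValueKind.STRUCTURAL,
                           "advocatory ethics", "children should not be deceived or manipulated"))
horizon.add(NormativeValue("kindness", ValueKind.STRUCTURAL,
                           "advocatory ethics", "the doll models respectful interaction"))
horizon.add(NormativeValue("privacy", ValueKind.TECHNO_GENERIC,
                           "company codex", "the doll records speech inside the home"))

print(horizon.by_kind(ValueKind.STRUCTURAL))
print(horizon.by_kind(ValueKind.TECHNO_GENERIC))
```

Keeping such a record under version control alongside the code would let later sprints reference and revise individual entries, although the format itself is a design choice the team would have to make.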

7.2 Ethical Deliberation: Weighing Contemplation and Sprint Planning

Once we have outlined our universe of discourse and determined our normative catalog of requirements for the product, we move into the regular agile cadence. This means that, in the case of Scrum, we will have a sprint that entails a sprint planning meeting, daily scrum meetings, a sprint review meeting, as well as a sprint retrospective. The daily meetings are short and are used to communicate to the team what everyone is working on. Ethical issues should be raised in these meetings only in very rare cases. For example, Alice might talk about problems of the architecture for the performance of the network stack to get faster replies when the doll has an unstable network connection.

Thus, most of the ethical deliberation that focuses on balancing normative aspects should be part of the sprint planning meetings, since in these meetings the development team discusses the scope of the next sprint and which backlog items will be worked on (Note: here we do not go into details of how to turn requirements into backlog items; for an example, see Vlaanderen et al. (2011)). When a backlog item gets selected for the sprint, the discussion of the item, its definition of done, and similar technical points should include a short discussion of its ethical issues. Here, the item can be analyzed in view of the normative criteria, such as privacy and honesty, so that we ensure that the item and its effect on the product do not violate the given set of moral demands. However, focusing on balancing and trade-offs of ethical demands does not mean that we do not need to switch between all three types of reasoning: while pondering how our doll may foster the value of honesty, we will obviously need to think about it in an applicative way. For example, should the doll tell the truth and back statements of fact with sources? Certainly, the doll should not be deceitful and manipulate its users. At the same time, the team needs to balance trade-offs: for example, how should the doll answer when asked whether Santa Claus is real or where babies come from? Additionally, this mode of thinking should disclose problems with the doll. For example, how can we avoid problems of animism and prevent children from seeing the doll as a living being? This would certainly lead to habits we do not find desirable.

Most backlog items, like minor features, improvements to existing features, or highly technical features, will most likely need no ethical discussion at all. However, for each feature, there should be space to deliberate normatively—also in a disclosive manner. It is equally important that the meeting is timeboxed and that a deliberation must end with a decision. If the team cannot come to a decision within the allotted timebox, it is a clear sign that the feature should not be implemented in the current sprint. The team should work on another feature and hand the problematic feature, together with the points of contention that arose in the discussion, back to the product owner and, if necessary, a dedicated ethical specialist. This approach does not impede the progress and velocity of the team and allows the ethical issues to be resolved asynchronously while the team is productive.
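The planning rule described above (no discussion for most items, a decision within the timebox, otherwise hand the item back) can be sketched as a simple triage step. This is an illustrative sketch only: the `Deliberation` record, the `triage` function, and the example items are hypothetical names introduced here, and the function is assumed to be called when the timebox has expired.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Deliberation:
    """Hypothetical record of the ethical discussion of one backlog item."""
    item: str
    points_of_contention: list[str] = field(default_factory=list)
    decision: Optional[str] = None  # None means the team has not agreed yet


def triage(deliberations: list[Deliberation]) -> tuple[list[str], list[Deliberation]]:
    """Split items into those that enter the sprint and those handed back.

    Assumed to run at the end of the timebox: an item with open points of
    contention and no decision goes back to the product owner.
    """
    sprint: list[str] = []
    handed_back: list[Deliberation] = []
    for d in deliberations:
        if not d.points_of_contention or d.decision is not None:
            sprint.append(d.item)
        else:
            handed_back.append(d)
    return sprint, handed_back


planning = [
    Deliberation("Improve reconnect logic"),  # purely technical, no discussion needed
    Deliberation("Answer factual questions",
                 ["Should the doll cite sources?"],
                 decision="Answer truthfully, keep it short, no sources for now"),
    Deliberation("Answer questions about Santa Claus",
                 ["Honesty versus not hurting the child"]),  # no decision in time
]

sprint_items, for_product_owner = triage(planning)
print(sprint_items)
print([(d.item, d.points_of_contention) for d in for_product_owner])
```

The handed-back records keep their points of contention, so the product owner or an ethical specialist can pick up the discussion asynchronously without the team having to restate it.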

7.3 Ethical Deliberation: Applicative Contemplation to the Increment

For example, when the team discusses the network capabilities of the doll in sprint N, Bob raises an issue of privacy that conflicts with the normative horizon defined in sprint 0: just knowing when the doll is active allows an attacker to infer when it is being used and that someone is home. Claire then suggests sending dummy data at random times to obscure the user’s usage patterns and habits. By applicative contemplation, the value of privacy can also be understood as predictive privacy (Mühlhoff, 2021), and threats to it can be countered by technical means. Similarly, Alice might raise the honesty issue with Santa Claus in sprint N + 1: if asked by a child whether Santa Claus exists, how should the doll answer? An honest doll might say that he is not real, thus hurting the child. Additionally, think about any kind of dialog surrounding sexuality. Furthermore, the doll should also react appropriately to malicious input: if the child playing with it shouts at it, for example, it should react accordingly. The latter implies that we do not want to guide the children toward violent behavior. Empirical input from evolutionary psychologists is needed, as well as critical reflection on the purpose the doll is supposed to serve. It may be easier to involve ethical experts to elicit and understand desirable values of such a doll. If it is a doll that, e.g., is meant to foster the children’s behavior as good parents, we need to extract the meaning of empathy, caring, and need satisfaction. These normative questions might be dealt with in a pre-development phase with an all-encompassing deliberation team to highlight the doll’s purpose such as outlined in 4. It is unlikely that the development team can answer all questions thoroughly during development alone. However, it is necessary that the developers have a certain mindset to enrich and ground normative dialogue. For example, when working on speech recognition in sprint N + 2, David might suggest also considering speech patterns of minority and non-native-speaking children to ensure that kindness and non-discrimination are technically enabled. In such a case, technological skills are combined with ethical awareness.
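Claire's suggestion of sending dummy data at random times can be illustrated with a short scheduling sketch. It is a simplified illustration under several assumptions, not a vetted privacy mechanism: the function names, the exponentially distributed gaps, and the example times are choices made here, and the sketch assumes that dummy and real packets look identical to an observer, which it does nothing to ensure.

```python
import random


def dummy_schedule(duration_s: float, mean_gap_s: float, rng: random.Random) -> list[float]:
    """Return send times (seconds) for dummy packets within [0, duration_s).

    Gaps between dummy packets are drawn from an exponential distribution,
    so the sends do not follow a fixed, recognizable rhythm.
    """
    times: list[float] = []
    t = rng.expovariate(1.0 / mean_gap_s)
    while t < duration_s:
        times.append(t)
        t += rng.expovariate(1.0 / mean_gap_s)
    return times


def observed_traffic(real: list[float], dummy: list[float]) -> list[float]:
    """What a network observer sees: send times without the real/dummy label."""
    return sorted(real + dummy)


# Fixed seed only to make the sketch reproducible; a device would rather use
# an unpredictable source such as random.SystemRandom().
rng = random.Random(42)
real_sends = [61_200.0, 61_260.0, 61_320.0]  # hypothetical: the child plays around 17:00
cover = dummy_schedule(duration_s=86_400, mean_gap_s=1_800, rng=rng)
timeline = observed_traffic(real_sends, cover)

print(f"{len(real_sends)} real sends hidden among {len(cover)} dummy sends")
```

The mean gap is the trade-off the team would have to weigh: shorter gaps obscure usage better but cost bandwidth and energy, which is itself a topic for the weighing mode of contemplation.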

7.4 Ethical Deliberation: Sprint Review and Sprint Retrospective

At the end of each sprint, we have a sprint review meeting. Here, the team presents its work to the product owner and reviews it. Now, Claire checks on Bob’s solution to the privacy problem. The team also finds that David did not have enough time to train the system on a varied selection of speech patterns and creates a backlog item to work on this in a future sprint. The product owner needs to verify that every artifact complies with the definition of done. For particularly sensitive artifacts, this should entail a focus on their ethical suitability.

During a sprint retrospective, the team discusses the work process and how to improve the development process. For example, Claire earns special praise for her idea to solve the privacy problem, or David is celebrated for identifying groups and organizations to contact for speech samples. Additionally, this is the place to make changes to the normative horizon. For example, if David finds in his experiments that the doll is prone to insulting its user or seems unhinged, similar to early versions of Bing Chat (Vincent, 2023), the team might remove the value of free access to information through the bot and consider installing some kind of filtering (or censoring) capabilities. The development continues with the next sprint.
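The filtering capability mentioned above could, in its simplest form, be a wrapper around the reply generator. The sketch below is deliberately crude and rests on assumptions: `generate` is a hypothetical stand-in for the call to the language model service, and the keyword blocklist and fallback sentence are placeholders for whatever mechanism the team actually decides on.

```python
from typing import Callable

FALLBACK = "Let's talk about something else."


def filtered_reply(prompt: str,
                   generate: Callable[[str], str],
                   blocked_terms: set[str]) -> str:
    """Return the generated reply unless it contains a blocked term."""
    reply = generate(prompt)
    # Normalize to lowercase words without surrounding punctuation.
    words = {w.strip(".,!?;:\"'").lower() for w in reply.split()}
    if words & blocked_terms:
        return FALLBACK
    return reply


def rude_model(prompt: str) -> str:
    # Hypothetical stand-in that misbehaves, as in David's experiments.
    return "That is a stupid question!"


def polite_model(prompt: str) -> str:
    return "The sky looks blue because of how sunlight scatters."


blocked = {"stupid", "idiot"}
print(filtered_reply("Why is the sky blue?", rude_model, blocked))
print(filtered_reply("Why is the sky blue?", polite_model, blocked))
```

A keyword list will miss insults it does not contain and may block harmless replies, so which replies count as acceptable remains a question for the team's deliberation rather than something the code can settle.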

8 Conclusions

Our key insights and ideas can be summarized as follows:

- More than 100 codes of conduct for software development have been developed in the last 10 years by various organizations. These codes state universal and abstract values but lack practical orientation. The lack of immediate applicability is due to both the abstract nature of values and the context-specificity of software.
- Values must be embedded in software development on a case-by-case basis tailored to the context. Ethical deliberations must be part of the daily development routine to achieve ethically designed products.
- Agile software development is particularly suited to allow for a case-specific consideration of values and can foster ethical deliberation.
- Incorporating ethical concepts into software development processes and products is a challenge. It is difficult to identify and examine morally desirable software. There are not, and cannot be, universal measures for implementing and evaluating technology according to ethical criteria, due to the context specificity of software and the underdetermination of values. Ethical considerations cannot be resolved by only using checklists or predefined answers.
- Continuously evaluating design projects from inception to maintenance is necessary. Ethically good software may need reevaluation if the context changes.
- We need to identify desirable values and translate them into technical language. Thus, some techno-ethical judgment is necessary.
- Four actors must take responsibility in the development and use of software-intensive systems: society, the organization developing the system and its subdivisions, the development team, the operator, and the user of that system.
- Software engineers are not solely responsible for every potential externality, but they do have some responsibility. The approach is about the perception of the individual responsibility of software engineers.
- There are four essential phenomena of agile software development: planning, incrementality, empowerment, and learning. Simplicity is overarching. Agile development is incremental development, sequentially developing individual functionalities and immediately integrating them with the system developed up until now.
- Agile development empowers teams and allows for extensive design capabilities. It emphasizes learning and a culture of error. Ethical deliberation needs to be part of development itself, and the approach should scale with development. A work culture that favors ethical deliberations is important.
- Ethical judgment must encompass three modes of contemplation: disclosive, weighing, and applicative. The disclosive mode is about recognizing normatively relevant facts. The weighing mode is about decision-making and balancing normative demands. The applicative mode is about translating values into technical functionality. These modes can alternate and overlap.
- Although the focus lies on the developers, ethical deliberations necessarily include the product owner, who is in direct contact with the customers and has an important role in managing requirements of systems.

Discussion Questions for Students and Their Teachers

1. What has contributed to the current significance of technology ethics?
2. Why might it be surprising to a software engineer that the current ethics debate focuses on AI and not on software in general?
3. What is suggested as an excellent starting point for implementing ethical deliberations within software development? Can you think about reasons for this claim?
4. Why are values characterized by a high degree of abstraction?
5. Why can’t codes of conduct be a tool that gives a step-by-step instruction of an ethical implementation of values in software that fits all contexts?

Learning Resources for Students

1. Blackman, R. (2022). Ethical Machines: Your Concise Guide to Totally Unbiased, Transparent, and Respectful AI. Harvard Business Review Press.

   This book provides an overview of ethical issues regarding AI. It is a good introduction for students particularly interested in the ethics of AI.

2. Gogoll, J., Zuber, N., Kacianka, S., Greger, T., Pretschner, A., & Nida-Rümelin, J. (2021). Ethics in the software development process: from codes of conduct to ethical deliberation. Philosophy & Technology. https://doi.org/10.1007/s13347-021-00451-w.

   This paper argues that codes of conduct and ethics are not enough to implement ethics in software development. Instead, ethical deliberation within software development teams is necessary.

3. Reijers, W., Wright, D., Brey, P., Weber, K., Rodrigues, R., O’Sullivan, D., & Gordijn, B. (2018). Methods for practising ethics in research and innovation: A literature review, critical analysis and recommendations. Science and Engineering Ethics, 24, 1437–1481.

   This review discusses different approaches for a systematic ethical reflection on technology.

4. Winkler, T., & Spiekermann, S. (2021). Twenty years of value sensitive design: a review of methodological practices in VSD projects. Ethics and Information Technology, 23, 17–21.

   In this paper, the VSD approach and its main concepts are discussed and compared to other process-related methodologies.

5. Vakkuri, V., Kemell, K. K., Jantunen, M., Halme, E., & Abrahamsson, P. (2021). ECCOLA - A method for implementing ethically aligned AI systems. Journal of Systems and Software, 182, 111067.

   This paper introduces ECCOLA, a method for implementing AI ethics and bridging the gap between principles and values and the requirements of AI systems. It discusses the steps developers and organizations should take to ensure ethical considerations are integrated into the development process.

References

Beck, K., Grenning, J., Martin, R., Beedle, M., Highsmith, J., Mellor, S., van Bennekum, A., Hunt, A., Schwaber, K., Cockburn, A., Jeffries, R., Sutherland, J., Cunningham, W., Kern, J., Thomas, D., Fowler, M., & Marick, B. (2001). Manifesto for agile software development. Accessed June 7, 2023, from http://agilemanifesto.org/

Beedle, M., Devos, M., Sharon, Y., Schwaber, K., & Sutherland, J. (1998). SCRUM—An extension pattern language for hyperproductive software development. Accessed June 7, 2023, from http://jeffsutherland.com/scrum/scrum_plop.pdf

Blackman, R. (2022). Ethical machines - Your concise guide to totally unbiased, transparent, and respectful AI. Harvard Business Review Press.

Brey, P. (2000). Disclosive computer ethics. ACM Sigcas Computers and Society, 30(4), 10–16.

Brey, P. (2010). Values in technology and disclosive computer ethics. The Cambridge Handbook of Information and Computer Ethics, 4, 41–58.

Brey, P. A. (2012). Anticipatory ethics for emerging technologies. NanoEthics, 6(1), 1–13.

Briand, L., Bianculli, D., Nejati, S., Pastore, F., & Sabetzadeh, M. (2017). The case for context-driven software engineering research: Generalizability is overrated. IEEE Software, 34(5), 72–75.

Darwall, S. L. (1983). Impartial reason. Cornell University Press.

Driver, J. (2011). Consequentialism. Routledge.

Elish, M. O. (2010, June). Exploring the relationships between design metrics and package understandability: A case study. In 2010 IEEE 18th international conference on program comprehension (pp. 144–147). IEEE.

Farley, D. (2022). Modern Software Engineering. O’Reilly.

Felderer, M., & Ramler, R. (2021). Quality assurance for AI-based systems: Overview and challenges (introduction to interactive session). In Software quality: Future perspectives on software engineering quality: 13th international conference, SWQD 2021, Vienna, January 19–21, 2021, Proceedings 13 (pp. 33–42). Springer International Publishing.

Floridi, L., & Sanders, J. W. (2005). Internet ethics: The constructionist values of homo poieticus.

Friedman, B., Hendry, D. G., & Borning, A. (2017). A survey of value sensitive design methods. Foundations and Trends in Human–Computer Interaction, 11(2), 63–125.

Gogoll, J., Zuber, N., Kacianka, S., Greger, T., Pretschner, A., & Nida-Rümelin, J. (2021). Ethics in the software development process: From codes of conduct to ethical deliberation. Philosophy and Technology. https://doi.org/10.1007/s13347-021-00451-w

Grunwald, A. (2010). Technikfolgenabschätzung: Eine Einführung (Bd. 1). Edition Sigma.

Gürses, S., & Van Hoboken, J. (2017). Privacy after the agile turn. In J. Polonetsky, O. Tene, & E. Selinger (Eds.), Cambridge handbook of consumer privacy. Cambridge University Press.

ISO. (2022). Standard 24748-7000:2022, Systems and software engineering – Life cycle management – Part 7000: Standard model process for addressing ethical concerns during system design.

Kapp, E. (1877). Grundlinien einer Philosophie der Technik: zur Entstehungsgeschichte der Cultur aus neuen Gesichtspunkten.

McLennan, S., Fiske, A., Celi, L. A., Müller, R., Harder, J., Ritt, K., Haddadin, S., & Buyx, A. (2020). An embedded ethics approach for AI development. Nature Machine Intelligence, 2, 488–490.

Mühlhoff, R. (2021). Predictive privacy: Towards an applied ethics of data analytics. Ethics and Information Technology. https://doi.org/10.1007/s10676-021-09606-x

Nida-Rümelin, J. (2011). Verantwortung. Reclam.

Nida-Rümelin, J. (2017). Handlung, Technologie und Verantwortung. In Berechenbarkeit der Welt? (pp. 497–513). Springer VS.

Nissenbaum, H. (1994). Computing and accountability. Communications of the ACM, 37(1), 72–81.

Nissenbaum, H. (1996). Accountability in a computerized society. Science and Engineering Ethics, 2(1), 25–42.

Pretschner, A., Zuber, N., Gogoll, J., Kacianka, S., & Nida-Rümelin, J. (2021). Ethik in der agilen Software-Entwicklung. Informatik Spektrum, 44(5), 348–354.

Rohbeck, J. (1993). Technologische Urteilskraft: Zu einer Ethik technischen Handelns. Suhrkamp.

Siep, L. (2013). Vernunft und Tugend. Mentis.

Van den Hoeven, J., Vermaas, P. E., & Van de Poel, I. (2015). Handbook of ethics, values and technological design: Sources, theory, values and application domains. Springer.

Vincent, J. (2023). Microsoft’s Bing is an emotionally manipulative liar, and people love it. The Verge. Accessed June 7, 2023, from https://www.theverge.com/2023/2/15/23599072/microsoft-ai-bing-personality-conversations-spy-employees-webcams

Vlaanderen, K., Jansen, S., Brinkkemper, S., & Jaspers, E. (2011). The agile requirements refinery: Applying SCRUM principles to software product management. Information and Software Technology, 53(1), 58–70.

Zuber, N., Gogoll, J., Kacianka, S., Pretschner, A., & Nida-Rümelin, J. (2022). Empowered and embedded: Ethics and agile processes. Humanities and Social Sciences Communications, 9(1), 1–13.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Zuber, N., Gogoll, J., Kacianka, S., Nida-Rümelin, J., Pretschner, A. (2024). Value-Sensitive Software Design: Ethical Deliberation in Agile Development Processes. In: Werthner, H., et al. Introduction to Digital Humanism. Springer, Cham. https://doi.org/10.1007/978-3-031-45304-5_22

DOI: https://doi.org/10.1007/978-3-031-45304-5_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-45303-8

Online ISBN: 978-3-031-45304-5

eBook Packages: Computer Science, Computer Science (R0)