Abstract

In industrial production, customers’ requirements are rising in various respects. Products have to be produced more economically, more flexibly, faster, and to much higher quality standards. Furthermore, especially for traditional mass production processes, shorter product cycles increase the demand for rapid production and process development. The resulting increase in product and production complexity raises additional challenges not only in development but also in setup and operation. Lastly, emerging requirements for sustainable production have to be incorporated. These conflicting aspects increase the complexity of production development and production setup, both at each individual production step and along the complete value chain. To master these challenges, digitalization and data-driven models are fundamental tools, since they allow many basic tasks to be automated and large data sets to be processed in order to gain process understanding and derive appropriate measures. This chapter illustrates the requirements that digital systems have to fulfill and the benefits derived from different novel systems. Furthermore, because modern systems have to incorporate not only single processes but complex process chains, various production processes and assembly processes are taken into account. In the following Chaps. 13, “Decision Support for the Optimization of Continuous Processes Using Digital Shadows,” 14, “Modular Control and Services to Operate Lineless Mobile Assembly Systems,” 12, “Improving Manufacturing Efficiency for Discontinuous Processes by Methodological Cross-Domain Knowledge Transfer,” and 11, “Model-Based Controlling Approaches for Manufacturing Processes,” digitalization and Industry 4.0 approaches are presented that incorporate data-driven models for a wide variety of production processes and different time scales.
Many techniques are illustrated that generate benefits on various levels through the use of data-driven, model-based systems incorporated into a digital infrastructure.

1 Introduction

Production technology has come a long way since the early days of industrial manufacturing. Starting with the First Industrial Revolution, which introduced machine-based production using steam- or water-powered machines, industrial manufacturing has steadily improved in efficiency and speed. In the Second Industrial Revolution, electrification, logistics infrastructure such as railroads, and assembly-line production boosted industrial production and extended it to a broader range of fields. The Third Industrial Revolution, which introduced electronic systems, microcontrollers, and embedded systems, further increased efficiency and laid the foundation for the Fourth Industrial Revolution. The latter is still ongoing: about 64% of companies are still at the beginning of their digital transformation (Xu et al. 2018; PwC 2022). The Fourth Industrial Revolution aims at establishing flexible production that can adapt to changing requirements regarding product complexity, quality, and speed, while increasing customer satisfaction through production on demand or individualized products. Furthermore, it aims at optimized processing regarding quality, costs, and sustainability (Ghobakhloo 2020). The main enabler for this is the use of data along the value chain (PwC 2022).

In general, this development is driven by factors such as increasing complexity (information intensity), growing demand for customizability and functionality, flexibility, efficiency gains through standardization and the substitution of competencies, resilience, and improved information exchange with partners and customers. This is underlined by investments of more than 1 Bio. € per year in digital manufacturing sites, corresponding to an annual investment of 1.8% of net revenue (Andal-Ancion et al. 2003; Christensen 2016; PwC 2022).

In the following, we discuss which challenges arise for production technology from consumer and customer requirements and which challenges have to be met to achieve production according to Industry 4.0. Furthermore, we discuss how these challenges can be overcome by novel approaches for production and assembly processes, leading to actual benefits for production.

2 Challenges for Industrial Manufacturing

In industrial production, customers’ requirements are rising in various respects. Products have to be produced more economically, more flexibly, in more variants, faster, and to much higher quality standards. Furthermore, especially for traditional mass production processes, shorter product cycles increase the demand for rapid production and process development as well as for faster product changes in production.

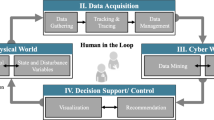

These diverse requirements result in higher complexity in all areas of production, including product design, process development and planning, and the mastering of the production processes themselves. Furthermore, each area, including all required assets, has to be coordinated and fine-tuned to the currently changing requirements. To achieve this, the right data has to be acquired at the right point in the process chain in the first place. Due to the complexity of production, this can be an extensive task, since many domains are involved, ranging from sales and order management through process development and process planning to manufacturing. Additionally, each domain involves a large variety of interfaces, protocols, and formats as well as different semantic information (Fig. 10.1).

Especially on the production shop floor, the data interfaces of machinery vary depending on the individual configuration and the age of the production machines. Connectivity therefore ranges from no usable data interface at all, through file-based storage or export and locally accessible interfaces such as RS-232/RS-485 or bus systems, to modern Ethernet-based interfaces such as OPC UA. Additionally, data entered by humans via human-machine interfaces (HMI) has to be considered. Depending on the task for which the data is intended, requirements also arise regarding acquisition speed. For real-time applications, for example, not all data acquisition methods are capable of providing data at the necessary sampling rates. If direct feedback has to be given to the process to achieve a defined task, the interface used must also be capable of accepting input data and performing actions accordingly, which, again, not every interface supports (Rostan 2014; Hopmann and Schmitz 2021; Cañas et al. 2021; OPC Foundation 2022).

Lastly, interfaces do not specify the semantics of the information they provide, so domain knowledge and experience are necessary to define which information has to be used and how. This leads to considerable manual overhead for individually configuring and establishing data pipelines, which makes data engineering a non-negligible expense. Additionally, interdisciplinary skillsets are necessary to perform these integration tasks.

Once data acquisition is established, the data has to be processed, stored, and/or provided to other systems. Data processing itself can be performed in many different ways using varying hardware and software, and the appropriate technology again has to be chosen based on the requirements of the task at hand. On the one hand, rapid development in Internet of Things (IoT) technologies provides a variety of tools; on the other hand, the landscape of tools and technologies for data integration and digitalization has become complex and diverse (Cañas et al. 2021).

For real-time applications, for example, data is often processed in close proximity to the process, since the latency introduced by the network, due to protocol overhead or cable length, cannot be accepted. For such applications, edge devices are used, which reduce latency by locating the processing power close to the data source. For other applications, such as inline optimization, higher latencies are acceptable, and processing can therefore be performed on a more economical server infrastructure (Pennekamp et al. 2019; Cao et al. 2020; Hopmann and Schmitz 2021; N.N. 2022).
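The trade-off between edge and server processing can be expressed as a simple placement rule: choose the cheapest processing tier whose round-trip latency still meets the task's deadline. The following is a minimal sketch of that rule only. The tier names, latency values, and relative costs are hypothetical placeholders, not measurements, and a real deployment would also weigh bandwidth, reliability, and data volume.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """A processing location; all numbers are user-supplied assumptions."""
    name: str
    round_trip_ms: float  # assumed worst-case network plus processing latency
    relative_cost: float  # assumed relative operating cost (lower is cheaper)


def place_task(deadline_ms: float, tiers: list[Tier]) -> Tier:
    """Return the cheapest tier whose round trip still meets the deadline."""
    feasible = [t for t in tiers if t.round_trip_ms <= deadline_ms]
    if not feasible:
        raise ValueError(f"no tier meets a deadline of {deadline_ms} ms")
    return min(feasible, key=lambda t: t.relative_cost)


# Hypothetical tiers for illustration only.
EDGE = Tier("edge device", round_trip_ms=1.0, relative_cost=3.0)
SERVER = Tier("on-premise server", round_trip_ms=40.0, relative_cost=1.0)

if __name__ == "__main__":
    # A closed-loop control task with a tight deadline must run on the edge,
    # while an inline optimization with a relaxed deadline can use the server.
    print(place_task(2.0, [EDGE, SERVER]).name)     # edge device
    print(place_task(1000.0, [EDGE, SERVER]).name)  # on-premise server
```

Minimizing cost over the feasible set keeps the rule transparent: tightening a deadline can only move a task toward the edge, never away from it.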

Processing also relies on algorithms and models that analyze the data and derive appropriate outcomes. Depending on their complexity and computational effort, the software and hardware have to be chosen to meet the requirements regarding execution time.

Finally, the data has to be stored and/or provided to other systems. To this end, suitable concepts for databases, data warehouses, or data lakes have to be selected that fulfill the requirements regarding storage capacity and database interaction speed (Nambiar and Mundra 2022).

To actually generate benefits for production, the data has to be processed appropriately. This includes aggregating all necessary data, which itself often relies on specific domain knowledge to identify and acquire the right data sources and the process-specific settings or parameters. These sources can be machine interfaces, sensors, human-machine interfaces, dedicated databases, or further sources. The data also has to be aggregated and interpreted so that it can be used as a digital representation (digital shadow) of a specific use case. Furthermore, task-specific models have to be created based on this data to represent the targeted use case and identify appropriate measures. In process technology, the range of modeling techniques is huge, ranging from physically motivated models to data-driven models, and the most suitable one has to be identified to achieve the highest benefit (Cañas et al. 2021).
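Aggregating sources with different sampling behavior is one concrete step toward a digital shadow. A common pattern is to attach to every high-rate sensor sample the most recent process setting recorded at or before its timestamp. The sketch below assumes that both sources share a common clock and uses a hypothetical parameter name (`speed`). It shows one simple alignment strategy, not the aggregation method used in the Internet of Production.

```python
import bisect
from typing import Any, Optional


def align_latest(
    samples: list[tuple[float, float]],
    settings: list[tuple[float, dict[str, Any]]],
) -> list[dict[str, Any]]:
    """Attach the latest setting snapshot (at or before each sample) to each sample.

    samples:  (timestamp, measured value) pairs from a sensor
    settings: (timestamp, parameter dict) snapshots, e.g. from a machine log
    Samples recorded before the first snapshot get params=None.
    """
    ordered = sorted(settings, key=lambda s: s[0])
    times = [t for t, _ in ordered]
    records = []
    for t, value in samples:
        # Index of the last snapshot whose timestamp is <= t (or -1 if none).
        i = bisect.bisect_right(times, t) - 1
        params: Optional[dict[str, Any]] = ordered[i][1] if i >= 0 else None
        records.append({"t": t, "value": value, "params": params})
    return records


if __name__ == "__main__":
    log = [(0.0, {"speed": 100}), (5.0, {"speed": 120})]  # hypothetical setpoints
    sensor = [(4.9, 3.0), (5.0, 4.0)]                      # hypothetical readings
    for row in align_latest(sensor, log):
        print(row)
```

Binary search over the sorted snapshot times keeps the alignment at O(log n) per sample, which matters when dense sensor streams are joined with long machine logs.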

Another requirement is increasingly flexible production, capable of changing the manufactured products more rapidly while reducing the overhead of each product change. On the one hand, this can be achieved through data availability and suitable models that optimize the use of the available machinery. On the other hand, processes and machines have to be developed further to overcome the limitations of their physical capabilities. Therefore, existing production processes have to be improved or novel manufacturing approaches developed.

3 Potential and Benefits

Production processes are becoming increasingly difficult to handle and to operate at optimal processing conditions due to the complexity of process control and machine operation as well as influencing factors such as varying material properties and ambient conditions. Furthermore, for overall process efficiency, not only the single process has to be observed but also the preceding and following processing steps. In addition to the complexity of the individual process itself, which demands a high level of skill to develop and operate, the processes interact with other processes as well as with the processed material and the environmental conditions.

One important potential of digitalization for production is improved transparency of production processes, enabling various benefits at the management and operation level. From the management point of view, transparency helps operators supervise more production processes simultaneously and react rapidly to changing states such as drifts in process quality or unforeseen production changes. This can be achieved with a wide variety of techniques, starting with the visualization of raw data, extended by the computation of KPIs that increase information density, up to complex techniques such as soft sensors, the incorporation of simulation data, or improved quality measurements. In the following chapters, use cases for these methods are illustrated for milling, extrusion, and condition monitoring of ball screws.
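Condensing raw signals into KPIs is the step that raises information density for the operator. As a hedged example, the sketch below reduces a window of quality measurements to its mean, its sample standard deviation s, the share of values outside the specification limits LSL and USL, and the process performance index Ppk = min(USL - mean, mean - LSL) / (3 s), computed here with the overall sample standard deviation. Which KPIs are meaningful depends on the process; the limits and values used are hypothetical.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowKpis:
    mean: float
    stdev: float
    ppk: float               # process performance index (overall sample stdev)
    out_of_spec_ratio: float


def condense(values: list[float], lsl: float, usl: float) -> WindowKpis:
    """Reduce a window of measurements to a few KPIs against spec limits LSL/USL."""
    if len(values) < 2:
        raise ValueError("need at least two values to estimate a spread")
    if lsl >= usl:
        raise ValueError("lower spec limit must be below upper spec limit")
    mean = statistics.fmean(values)
    stdev = statistics.stdev(values)  # sample standard deviation
    # Distance of the mean to the nearer spec limit, in units of 3 sigma.
    ppk = min(usl - mean, mean - lsl) / (3 * stdev) if stdev > 0 else float("inf")
    out_of_spec = sum(1 for v in values if v < lsl or v > usl) / len(values)
    return WindowKpis(mean, stdev, ppk, out_of_spec)


if __name__ == "__main__":
    # Hypothetical diameter measurements (mm) against hypothetical limits.
    print(condense([9.9, 10.0, 10.1], lsl=9.5, usl=10.5))
```

Tracking such a record per time window, rather than every raw value, is what allows one operator to watch many processes and notice a falling Ppk as an early sign of drift.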

The gained transparency also gives operators deeper insight into the process itself and helps them understand its behavior. The operator is thus able to set up and operate the process more efficiently and achieve higher manufacturing speed or better product quality. For this, data about the state and condition of the process has to be processed and made available to the operator in a condensed and understandable manner.

Once this potential is realized, further methods for automated decision-making can guide the operator in the form of an assistance system. In this case, the operator does not necessarily have to understand the process in detail but is guided by a model-driven system, which analyzes the process and derives a suitable measure. To this end, novel systems are developed based on physical and data-driven models in combination with machine learning approaches, which are capable of modeling complex production processes and lead to higher process efficiency or quality. In the following chapters, such methods are illustrated for the use cases of welding, laser drilling and cutting, injection molding, fine blanking, and coating.

Furthermore, systems are developed that automatically plan or interact with the process to achieve the highest efficiency or speed. The use case of assembly illustrates how novel information infrastructure, coupled with standardized formats and model-driven decision-making systems, enables an efficient, fast, and flexible assembly process while incorporating various boundary conditions.

The overall benefits can be stated as follows:

- Higher transparency in production
- Increased information availability
- Improved process understanding
- Higher process efficiency
- Higher process and product quality
- Increased flexibility
- More resilient processes

4 The Approach of the “Internet of Production”

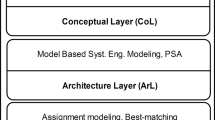

As illustrated in Fig. 10.2, within the “Internet of Production,” a holistic approach is pursued to enable production technologies for upcoming requirements.

At the process level, many different process technologies are investigated to address different process requirements and applications. These can be grouped into three areas: applications that require real-time or fast data acquisition and processing, combined with reduced models that enable real-time computation; discontinuous and continuous processes, covering the development of online and inline digitalization methods as well as ontologies and semantics for both process types; and assembly processes, which are closely connected to the prior processing steps and therefore introduce many boundary conditions and a great variety of submodels to be accessed.

For each process technology, data acquisition is performed with domain-specific industrial interfaces and formats as well as additional sensors. This area of data engineering requires knowledge of industrial data interfaces as well as in-depth domain knowledge about the process and the necessary data sources. To master data engineering and data processing efficiently, a highly interdisciplinary and broad skillset is necessary (Pinzone et al. 2017). Domains considered in the following chapters include milling, rolling, extrusion, injection molding, high-pressure die casting, open die forging, fine blanking, welding, coating, laser cutting, and industrial assembly using various approaches.

Furthermore, different information infrastructure concepts are used. For time-critical processing, edge computing enables fast signal processing for closed-loop milling control to achieve higher process and part quality (Schwenzer 2022). For complex tasks with many data sources, publish/subscribe (pub/sub) infrastructures are used to organize information, make it available, and provide it to a cloud-based modular infrastructure (Buckhorst et al. 2021). In addition, database-oriented approaches, such as continuous data storage for continuous processes like rolling or extrusion, are investigated.
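The decoupling that a publish/subscribe infrastructure provides can be illustrated with a minimal in-process broker: publishers send messages to hierarchical topics without knowing the receivers, and subscribers register for topic patterns. The sketch borrows MQTT-style wildcards (`+` for exactly one level, `#` for all remaining levels) but is a simplified illustration, neither a full MQTT implementation nor the infrastructure of Buckhorst et al. (2021). The topic names are hypothetical.

```python
from typing import Any, Callable

Handler = Callable[[str, Any], None]


def topic_matches(pattern: str, topic: str) -> bool:
    """Match a topic against a pattern with '+' (one level) and '#' (rest)."""
    p_levels = pattern.split("/")
    t_levels = topic.split("/")
    for i, p in enumerate(p_levels):
        if p == "#":
            return True  # '#' matches all remaining levels
        if i >= len(t_levels):
            return False
        if p != "+" and p != t_levels[i]:
            return False
    return len(p_levels) == len(t_levels)


class Broker:
    """In-memory broker that forwards each message to all matching subscribers."""

    def __init__(self) -> None:
        self._subscriptions: list[tuple[str, Handler]] = []

    def subscribe(self, pattern: str, handler: Handler) -> None:
        self._subscriptions.append((pattern, handler))

    def publish(self, topic: str, payload: Any) -> int:
        """Deliver payload synchronously; return the number of handlers called."""
        delivered = 0
        for pattern, handler in self._subscriptions:
            if topic_matches(pattern, topic):
                handler(topic, payload)
                delivered += 1
        return delivered


if __name__ == "__main__":
    broker = Broker()
    # A hypothetical dashboard listens to all temperatures in plant1.
    broker.subscribe("plant1/+/temperature", lambda t, p: print(t, p))
    broker.publish("plant1/extruder3/temperature", 231.5)  # delivered
    broker.publish("plant1/extruder3/pressure", 180.0)     # not delivered
```

Because publishers only know topic names, a new consumer such as a quality model can be added by subscribing, without reconfiguring the machines that produce the data.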

As stated earlier, data processing is performed according to the specific task and specific domain using suitable models. For production technology, one important branch of models is created using analytical approaches like physically motivated models or models based on finite element simulations (Hopmann et al. 2019).

To bridge the gap between individual and domain-specific knowledge, a common definition of semantic dependencies is developed based on the Web Ontology Language (OWL). Ontologies were developed for the standardized and formalized description of knowledge and can therefore be used to formalize knowledge and, in particular, relationships between all assets occurring in production, be it the material used, the manufacturing process, the order along the value chain, or the actual product. OWL builds on standardized formats such as XML, RDF, and RDF Schema (RDFS) (World Wide Web Consortium (W3C) 2003). A standardized syntax and definition allow applications to interpret the information electronically and to automate tasks that are currently performed manually, such as data aggregation or data interpretation. Furthermore, a common standard for data interchange, in terms of data exchange formats, is developed. Together with the research in the “Infrastructure” field of the “IoP,” which focuses on Asset Administration Shells (AAS), a methodology is developed to automatically connect data sources using a given ontology and available AAS. Asset Administration Shells define a standardized way of describing an Industry 4.0 asset and establishing connectivity to it. An AAS can be used either passively, providing the necessary information about an asset, or actively, as a standardized communication interface to the asset (Tantik and Anderl 2017; Sapel et al. 2022).
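The core idea behind RDF, on which OWL builds, is to express knowledge as subject-predicate-object triples that software can query without hand-written interpretation. The sketch below imitates this with a tiny in-memory triple store and a two-hop query from a product via its process step to the material used. It deliberately avoids any RDF/OWL library and omits OWL semantics such as classes and inference; the `ex:` identifiers and predicates are hypothetical.

```python
from typing import Optional

Triple = tuple[str, str, str]


class TripleStore:
    """Minimal set of (subject, predicate, object) triples with pattern queries."""

    def __init__(self, triples: tuple[Triple, ...] = ()) -> None:
        self._triples: set[Triple] = set(triples)

    def add(self, s: str, p: str, o: str) -> None:
        self._triples.add((s, p, o))

    def match(
        self, s: Optional[str] = None, p: Optional[str] = None, o: Optional[str] = None
    ) -> list[Triple]:
        """Return matching triples, sorted; None acts as a wildcard."""
        return sorted(
            t for t in self._triples
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)
        )


def materials_of(store: TripleStore, product: str) -> list[str]:
    """Follow product -producedBy-> process step -usesMaterial-> material."""
    steps = [o for _, _, o in store.match(s=product, p="ex:producedBy")]
    return sorted(
        {o for step in steps for _, _, o in store.match(s=step, p="ex:usesMaterial")}
    )


if __name__ == "__main__":
    kb = TripleStore()
    kb.add("ex:part42", "ex:producedBy", "ex:moldingRun7")
    kb.add("ex:moldingRun7", "ex:usesMaterial", "ex:PP_batchA")
    kb.add("ex:part42", "ex:belongsTo", "ex:order1001")
    print(materials_of(kb, "ex:part42"))
```

The point of the uniform triple form is that the same `match` call answers questions about materials, processes, and orders alike; a production system would use an actual RDF/OWL toolkit and a shared vocabulary instead of ad hoc predicates.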

Furthermore, data also has to be shared outside trusted boundaries such as the shop floor or the company. Therefore, suitable data exchange interfaces have to be used in combination with suitable security measures to provide information only to authorized systems and only at the necessary granularity. Additionally, approaches have to be developed that preserve the intellectual property of the party providing the data. This can be achieved, for example, using anonymization techniques or dedicated systems that process the given information and only provide the results or calculated measures (Pennekamp et al. 2019, 2020).
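As a much simpler illustration than the privacy-preserving protocols cited above, the sketch below prepares a process record for sharing by (1) replacing the machine identifier with a keyed pseudonym (HMAC-SHA256, which cannot be recomputed without the key), (2) dropping every field not explicitly allowed, and (3) coarsening the remaining numeric parameters to a fixed step. Pseudonymization and rounding reduce, but do not eliminate, re-identification risk, so this is not full anonymization. Field names, rounding steps, and the key are hypothetical.

```python
import hashlib
import hmac
from typing import Any


def pseudonymize(identifier: str, key: bytes) -> str:
    """Deterministic keyed pseudonym: stable for one key, not recomputable without it."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # truncated for readability; shorter IDs raise collision risk


def prepare_for_sharing(
    record: dict[str, Any], key: bytes, allowed: dict[str, float]
) -> dict[str, Any]:
    """Return a reduced copy of record intended for external sharing.

    allowed maps each shareable numeric field to its rounding step;
    all other fields (e.g. customer names) are dropped.
    """
    shared: dict[str, Any] = {"source": pseudonymize(str(record["machine_id"]), key)}
    for field, step in allowed.items():
        if field in record:
            # Snap the value to the nearest multiple of step to hide fine detail.
            shared[field] = round(float(record[field]) / step) * step
    return shared


if __name__ == "__main__":
    secret = b"hypothetical-shared-secret"
    raw = {"machine_id": "IMM-07", "melt_temp": 231.4, "customer": "ACME"}
    print(prepare_for_sharing(raw, secret, {"melt_temp": 5.0}))
```

An allow-list is used instead of a block-list so that fields added to the record later are withheld by default rather than leaked by default.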

Through this global connectivity, benefits can be derived throughout entire value chains, and data, an increasingly valuable good, can be used most efficiently, creating a World Wide Lab.

5 Conclusion

The increasing requirements on production processes, resulting from growing customer and consumer demands, lead to the need for increasingly complex processes and for exploiting the full potential of each process. Both aspects require handling rapidly changing, multidimensional, and complex problems. To master these problems, adaptive smart systems are necessary that process all available information and derive optimized measures. Data-driven and model-based systems in particular are capable of achieving this, especially in processing technology, since they are capable of working with small sample sizes. Furthermore, such smart systems have to be deployed economically to avoid cost overhead when introducing new products or changing production. Modern information technology along with standardization has the potential to automate and accelerate the digitalization of existing and new production assets. Domain knowledge along with data-driven modeling furthermore enables the creation of digital representations of the processes (digital shadows) to evaluate and optimize them. Nevertheless, one of the greatest challenges is to master the high degree of interdisciplinarity required and to bring together all the skillsets needed for a successful implementation.

References

Andal-Ancion A, Cartwright P, Yip GS (2003) The digital transformation of traditional businesses

Buckhorst AF, Montavon B, Wolfschläger D et al (2021) Holarchy for line-less mobile assembly systems operation in the context of the internet of production. Procedia CIRP 99:448–453. https://doi.org/10.1016/J.PROCIR.2021.03.064

Cañas H, Mula J, Díaz-Madroñero M, Campuzano-Bolarín F (2021) Implementing industry 4.0 principles. Comput Ind Eng 158. https://doi.org/10.1016/J.CIE.2021.107379

Cao K, Liu Y, Meng G, Sun Q (2020) An overview on edge computing research. IEEE Access 8:85714–85728. https://doi.org/10.1109/ACCESS.2020.2991734

Christensen C (2016) Innovator’s dilemma. 1–179

Ghobakhloo M (2020) Industry 4.0, digitization, and opportunities for sustainability. J Clean Prod 252:119869. https://doi.org/10.1016/J.JCLEPRO.2019.119869

Hopmann C, Schmitz M (2021) Plastics industry 4.0: potentials and applications in plastics technology

Hopmann C, Jeschke S, Meisen T et al (2019) Combined learning processes for injection moulding based on simulation and experimental data. AIP Conf Proc 2139:5. https://doi.org/10.1063/1.5121656

N.N. (2022) The three layers of computing – cloud, fog and edge - SCC. https://www.scc.com/insights/it-solutions/data-centre-modernisation/the-three-layers-of-computing-cloud-fog-and-edge/. Accessed 19 Jul 2022

Nambiar A, Mundra D (2022) An overview of data warehouse and data lake in modern enterprise data management. In: Big data and cognitive computing 2022, vol 6, p 132. https://doi.org/10.3390/BDCC6040132

OPC Foundation (2022) Unified architecture - OPC Foundation. https://opcfoundation.org/developer-tools/specifications-unified-architecture. Accessed 13 Nov 2022

Pennekamp J, Glebke R, Henze M et al (2019) Towards an infrastructure enabling the internet of production. In: Proceedings of the 2019 IEEE international conference on Industrial Cyber-Physical Systems (ICPS 2019), pp 31–37. https://doi.org/10.1109/ICPHYS.2019.8780276

Pennekamp J, Buchholz E, Lockner Y et al (2020) Privacy-preserving production process parameter exchange. ACM Int Conf Proc Ser 16:510–525. https://doi.org/10.1145/3427228.3427248

Pinzone M, Fantini P, Perini S et al (2017) Jobs and skills in industry 4.0: an exploratory research. IFIP Adv Inf Commun Technol 513:282–288. https://doi.org/10.1007/978-3-319-66923-6_33

PwC (2022) PwC Digital Factory Transformation Survey 2022

Rostan M (2014) Industrial ethernet technologies: overview and comparison

Sapel P, Gannouni A, Fulterer J et al (2022) Towards digital shadows for production planning and control in injection molding. CIRP J Manuf Sci Technol 38:243–251. https://doi.org/10.1016/J.CIRPJ.2022.05.003

Schwenzer M (2022) Closing the loop of model predictive force control in milling with Ensemble Kalman Filtering = Schließen des Regelkreises einer modellprädiktiven Kraftregelung beim Fräsen mit einem Ensemble Kalman Filter. Apprimus Verlag

Tantik E, Anderl R (2017) Integrated data model and structure for the Asset Administration Shell in Industrie 4.0. Procedia CIRP 60:86–91. https://doi.org/10.1016/J.PROCIR.2017.01.048

World Wide Web Consortium (W3C) (2003) OWL Web Ontology language overview. http://www.w3.org/TR/2003/PR-owl-features-20031215/. Accessed 18 Jul 2022

Xu M, David JM, Kim SH (2018) The fourth industrial revolution: opportunities and challenges. Int J Financ Res 9:90. https://doi.org/10.5430/IJFR.V9N2P90

Acknowledgments

Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy – EXC-2023 Internet of Production – 390621612. The support and sponsorship are gratefully acknowledged.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Hopmann, C., Hirt, G., Schmitz, M., Bailly, D. (2024). Internet of Production: Challenges, Potentials, and Benefits for Production Processes due to Novel Methods in Digitalization. In: Brecher, C., Schuh, G., van der Aalst, W., Jarke, M., Piller, F.T., Padberg, M. (eds) Internet of Production. Interdisciplinary Excellence Accelerator Series. Springer, Cham. https://doi.org/10.1007/978-3-031-44497-5_26

DOI: https://doi.org/10.1007/978-3-031-44497-5_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44496-8

Online ISBN: 978-3-031-44497-5

eBook Packages: Engineering, Engineering (R0)