Abstract

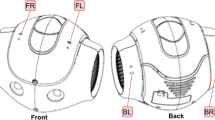

An important task for a social robot is to understand the world around it. Sensory information is key to this: it allows the robot to identify objects and people, and to listen to users and to relevant sounds in the environment. This work focuses on the latter: the detection of everyday environmental sounds. We present a system that recognises common sounds (e.g., air conditioning, car horn, water dripping) and describe how integrating it into the social robot Mini enhances interaction with users. We propose a deep learning approach to sound identification in which each sound is represented as an image through its Mel-frequency spectrogram, which allows Convolutional Neural Networks (CNNs) to distinguish between sounds. The system was integrated into a real social robot to verify that it operates properly. The resulting functional system relies on specific settings implemented in the robot to distinguish actual sounds from background noise.
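The abstract does not state the front-end parameters used on Mini. As a rough illustration of the representation it describes, the sketch below turns a mono signal into a log-Mel spectrogram "image" (frames × Mel bands, in dB) of the kind a CNN would take as input, using only the Python standard library. The frame size (`n_fft=256`), hop (`128`), number of Mel bands (`20`), the mel formula, and the RMS-based `is_sound_event` gate with its `-30` dBFS threshold are all illustrative assumptions, not the authors' settings; the gate is a hypothetical stand-in for the robot-specific noise settings mentioned above. A naive DFT replaces an FFT to keep the example dependency-free, and the CNN itself is not shown.

```python
import math


def hz_to_mel(f: float) -> float:
    # Common HTK-style mel formula: 2595 * log10(1 + f / 700) (an assumption)
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    # Inverse of hz_to_mel
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels: int, n_fft: int, sample_rate: int) -> list:
    """Triangular filters mapping the n_fft // 2 + 1 DFT bins onto n_mels Mel bands."""
    n_bins = n_fft // 2 + 1
    mel_max = hz_to_mel(sample_rate / 2.0)
    # n_mels + 2 equally spaced Mel points give each filter's left edge, centre and right edge
    hz_points = [mel_to_hz(i * mel_max / (n_mels + 1)) for i in range(n_mels + 2)]
    bin_freqs = [k * sample_rate / n_fft for k in range(n_bins)]
    bank = []
    for m in range(1, n_mels + 1):
        left, centre, right = hz_points[m - 1], hz_points[m], hz_points[m + 1]
        row = []
        for f in bin_freqs:
            if left < f <= centre:
                row.append((f - left) / (centre - left))  # rising slope
            elif centre < f < right:
                row.append((right - f) / (right - centre))  # falling slope
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def power_spectrum(frame: list) -> list:
    """|X[k]|^2 for k = 0..N/2 via a naive DFT (an FFT would be used in practice)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2.0 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = sum(x * math.sin(2.0 * math.pi * k * t / n) for t, x in enumerate(frame))
        spectrum.append(re * re + im * im)  # sign of im is irrelevant for power
    return spectrum


def log_mel_spectrogram(signal, sample_rate, n_fft=256, hop=128, n_mels=20, eps=1e-10):
    """Return an (n_frames x n_mels) log-Mel 'image' in dB, one row per frame."""
    if len(signal) < n_fft:
        raise ValueError("signal is shorter than one analysis frame")
    # Periodic Hann window to reduce spectral leakage
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * t / n_fft) for t in range(n_fft)]
    bank = mel_filterbank(n_mels, n_fft, sample_rate)
    image = []
    for start in range(0, len(signal) - n_fft + 1, hop):
        frame = [signal[start + t] * window[t] for t in range(n_fft)]
        spec = power_spectrum(frame)
        # Weighted sum of bin powers per Mel band, converted to decibels
        image.append([10.0 * math.log10(sum(w * p for w, p in zip(row, spec)) + eps)
                      for row in bank])
    return image


def is_sound_event(signal, threshold_db=-30.0):
    """Hypothetical noise gate: only classify clips whose RMS level exceeds threshold_db (dBFS)."""
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    return 20.0 * math.log10(rms + 1e-12) > threshold_db


if __name__ == "__main__":
    sr = 8000
    tone = [0.5 * math.sin(2.0 * math.pi * 1000.0 * t / sr) for t in range(800)]
    if is_sound_event(tone):
        img = log_mel_spectrogram(tone, sr)
        print(len(img), "frames x", len(img[0]), "Mel bands")  # prints: 5 frames x 20 Mel bands
```

In a real deployment a library such as librosa or torchaudio would compute the spectrogram with an FFT; the resulting 2-D array is then fed to the CNN like a single-channel image, which is what makes image-classification architectures applicable to sound.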


Notes

1. Working example video: https://youtu.be/2mO5241CKMU.


Acknowledgements

The research leading to these results has received funding from the following projects: Robots sociales para mitigar la soledad y el aislamiento en mayores [Social robots to mitigate loneliness and isolation in older people] (SOROLI), PID2021-123941OA-I00, and Robots sociales para reducir la brecha digital de las personas mayores [Social robots to reduce the digital divide among older people] (SoRoGap), TED2021-132079B-I00, both funded by the Agencia Estatal de Investigación (AEI), Spanish Ministerio de Ciencia e Innovación. This publication is part of the R&D&I project PDC2022-133518-I00, funded by MCIN/AEI/10.13039/501100011033 and by the European Union NextGenerationEU/PRTR.


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Marques-Villarroya, S., Sosa-Aleman, A., Castillo, J.C., Maroto-Gómez, M., Salichs, M.A. (2023). Environmental Sound Recognition in Social Robotics. In: Novais, P., et al. Ambient Intelligence – Software and Applications – 14th International Symposium on Ambient Intelligence. ISAmI 2023. Lecture Notes in Networks and Systems, vol 770. Springer, Cham. https://doi.org/10.1007/978-3-031-43461-7_22


DOI: https://doi.org/10.1007/978-3-031-43461-7_22


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-43460-0

Online ISBN: 978-3-031-43461-7

eBook Packages: Intelligent Technologies and Robotics, Intelligent Technologies and Robotics (R0)