Abstract

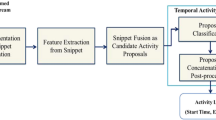

Human Activity Recognition (HAR) is a vast and complex research domain with many applications, such as healthcare, surveillance, and human-computer interaction. Several sensing technologies exist to record the data later used to recognize people’s activities. This paper focuses on the specific case of HAR based on multimodal wearable sensing devices. The corresponding HAR datasets provide information from multiple sensors placed on different body parts. Previous approaches consider each sensor’s information either separately or all together. Vision-based HAR methods consider each body segment and its position in space in order to perform activity recognition. This paper proposes a similar approach for Multimodal Wearable HAR (MW-HAR). Datasets are first re-sampled at a lower sampling frequency (i.e., a longer interval between samples) in order to both decrease the overall processing time and facilitate interpretability. Then, we propose to group the sensing features from all the sensors attached to the same body part. For each group, the proposal learns a separate representation of the group’s information. This abstracted representation depicts the different states of the corresponding body part. Finally, activity recognition is performed based on these trained abstractions of each considered body part. We tested our proposal on three benchmark datasets. Our evaluations first confirmed that a re-sampled dataset offers similar or even better activity recognition performance than usual processing, while its primary advantage is a significant decrease in training time. Finally, results show that a grouped abstraction of the sensor features improves activity recognition in most cases, without increasing training time.
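
The first two steps described in the abstract, reducing the number of samples and grouping sensor features by body part, can be illustrated with a minimal Python sketch. It is not the authors' implementation: block averaging stands in for whatever re-sampling the paper actually uses, the column naming scheme `<body part>_<sensor>_<axis>` and all function names are hypothetical, and the learned per-group representation and the final classifier are deliberately left out.

```python
from collections import defaultdict
from statistics import fmean


def downsample(rows, factor):
    """Average each run of `factor` consecutive samples, column by column.

    Block averaging is an assumption made for this sketch. Trailing samples
    that do not fill a complete block are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    usable = len(rows) - len(rows) % factor
    return [
        [fmean(col) for col in zip(*rows[start:start + factor])]
        for start in range(0, usable, factor)
    ]


def group_columns_by_body_part(column_names):
    """Map each body part to the indices of its sensor columns.

    Assumes the hypothetical naming scheme '<body part>_<sensor>_<axis>'.
    """
    groups = defaultdict(list)
    for index, name in enumerate(column_names):
        body_part = name.split("_", 1)[0]
        groups[body_part].append(index)
    return dict(groups)


def split_by_group(rows, groups):
    """Return one sub-matrix per body part, keeping only that part's columns."""
    return {
        part: [[row[i] for i in indices] for row in rows]
        for part, indices in groups.items()
    }


# Toy data: five time steps, four sensor channels on three body parts.
columns = ["hand_acc_x", "hand_gyro_x", "chest_acc_x", "ankle_acc_x"]
samples = [
    [1.0, 0.0, 10.0, 5.0],
    [3.0, 2.0, 12.0, 5.0],
    [5.0, 4.0, 14.0, 7.0],
    [7.0, 6.0, 16.0, 9.0],
    [9.0, 8.0, 18.0, 9.0],
]

reduced = downsample(samples, factor=2)       # 5 samples -> 2 averaged samples
groups = group_columns_by_body_part(columns)  # body part -> column indices
per_part = split_by_group(reduced, groups)    # one feature matrix per body part
```

Each entry of `per_part` would then be the input to a separate per-body-part representation model, whose outputs feed the activity classifier.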

Copyright information

© 2023 ICST Institute for Computer Sciences, Social Informatics and Telecommunications Engineering

About this paper

Cite this paper

Habault, G., Wada, S. (2023). Efficient Human Activity Recognition Based on Grouped Representations of Multimodal Wearable Data. In: Hou, R., Huang, H., Zeng, D., Xia, G., A. Ghany, K.K., Zawbaa, H.M. (eds) Big Data Technologies and Applications. BDTA 2022, BDTA 2021. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 480. Springer, Cham. https://doi.org/10.1007/978-3-031-33614-0_16

DOI: https://doi.org/10.1007/978-3-031-33614-0_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-33613-3

Online ISBN: 978-3-031-33614-0

eBook Packages: Computer Science, Computer Science (R0)