Abstract

Although the COVID-19 vaccine has the potential to end the pandemic, the simultaneous infodemic has led to people questioning the safety of vaccines, lowered vaccination intentions, and given rise to dangerous health-related beliefs. Unfortunately, misinformation can be highly persuasive and misleading to the extent that even the most critical reader is no longer ‘immune’. In this chapter, we provide an overview of a long-term strategy for tackling misinformation in the modern digital age through the use of psychological inoculation interventions. In recent years, research has shown that low-dose exposures to misinformation and manipulation tactics can have inoculating effects amongst news consumers, making them more resistant to media manipulation and misinformation. This chapter highlights the scientific progress in the application of the theory, from its roots as a ‘vaccine’ against brainwashing in the 1960s, to the modern advancements that have demonstrated the promising effects of gamified interventions in tackling the infodemic.

8.1 Beyond Fact-Checking: Tackling the Infodemic

The global pandemic saw a rapid rise in information regarding COVID-19 (Frenkel et al. 2020; Tardáguila 2020; Zarocostas 2020), prompting the World Health Organization (WHO) to declare an infodemic (WHO Director General 2020): a situation in which there is so much information, both offline and online, that it becomes difficult to identify trustworthy information, causing confusion (Pertwee et al. 2022). Misinformation is a dangerous part of the infodemic. It can consist of outright falsehoods, which are comparatively easy to spot or fact-check, but it can also use manipulation techniques that contort information so that it appears true and is much more difficult to identify (Roozenbeek and van der Linden 2019a). Defining misinformation has proved to be a scholarly challenge: some define it as information presented by fictitious or fake sources (Pennycook et al. 2021), while others categorise it according to whether it contains misleading information that distorts the truth, regardless of the source (Traberg 2022). Here we define misinformation in line with the latter characterisation.

Misinformation in and of itself is not inherently dangerous if nobody believes it. If everyone simply scrolled past it and gave it no attention, the problem would be much easier to contain. However, misinformation during the pandemic has been associated with a decrease in compliance with public health guidelines (Freeman et al. 2022; Imhoff and Lamberty 2020; Roozenbeek et al. 2020a), and an increase in violent behaviour (Featherstone and Zhang 2020; Jolley and Paterson 2020). Although the COVID-19 vaccine has the potential to end the pandemic, the simultaneous infodemic has led to people questioning the safety of vaccines, thereby lowering vaccination intentions (Loomba et al. 2021) and uptake (Pierri et al. 2022). Misinformation has also given rise to dangerous health-related beliefs such as the promotion of bleach as a cure for the virus (Litman et al. 2020) and conspiracy theories suggesting the purposeful manufacturing of the virus as a bioweapon (Roozenbeek et al. 2020a).

To tackle the infodemic, a central aim has therefore been to understand how we can prevent people from being persuaded by misinformation, leading to the design and testing of interventions to counter its influence. Amongst other initiatives, fact-checks have become increasingly popular – these have included either removing information that is flagged as false (Taylor 2021) or adding disclaimers to articles (RAND 2022). Although studies have found positive effects of fact-checks (Porter and Wood 2021; Walter et al. 2020), debunking is not always fully effective, as misinformation can continue to influence how we see the world even after we have been told that it is false: a psychological effect known as the ‘continued influence effect’ (Lewandowsky et al. 2012; Cook et al. 2017). For example, picture a social media user scrolling past a clip posted by a Facebook friend, which they initially perceive as true. Later, the same person notices that the clip now carries a correction noting that it contained false information. This correction may not be internalised into memory, and the individual may not update their beliefs, leaving the correction ineffective (Ecker et al. 2022). Indeed, the more time passes after the initial exposure to a message, the more likely it is that its source is forgotten, so that the message can become more persuasive over time, a phenomenon known as ‘the sleeper effect’ (Kumkale and Albarracín 2004). A final problem with fact-checking is that it does not pierce echo chambers, which form when groups of polarised social media users aggregate around different types of content; users in these online communities can respond to fact-checks negatively (Zollo et al. 2017).

If we liken the infodemic to its biological counterpart, the cost of treatment dwarfs the cost of prevention. Although the infodemic analogy has not gone without critique (Simon and Camargo 2021), researchers have successfully used models from epidemiology to understand the spread of misinformation in social networks (Vosoughi et al. 2018; Cinelli et al. 2021; Jin et al. 2013). According to social scientists, the answer to the infodemic might mirror the answer to the pandemic: psychologically inoculating (i.e. vaccinating) individuals before they are exposed to the misinformation ‘disease’. In this chapter, we detail how a psychological theory from the 1960s has been applied to tackling online misinformation, and highlight projects demonstrating that gamified ‘vaccines’ against misinformation can have inoculating effects on people (Traberg et al. 2022), making them more resistant to manipulation.

8.2 Inoculation Theory: A Vaccine Against Persuasion

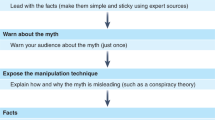

While the idea of a vaccine against persuasion techniques dates back to the 1960s, it is only in the last decade that this approach has been applied to tackling misinformation. Based on inoculation theory, psychological inoculations or ‘prebunks’ were originally proposed and tested by McGuire (1964) to train individuals to resist having their attitudes changed by persuasive messages (e.g. propaganda). His suggestion was that the psychological process involved in creating resistance against persuasion is comparable to our bodies creating biological resistance against viruses (McGuire 1961). As with biological vaccines where individuals are injected with a weakened version of a virus to generate immunity against future exposure to viral pathogens, psychological vaccines involve exposing individuals to ‘weak’ persuasive ‘attacks’ such as watered-down bite-sized versions of misleading arguments followed by a strong and persuasive rebuttal to these weak arguments.

When the body encounters a biological vaccine, the immune system responds by generating antibodies. With psychological vaccines, when an individual is pre-emptively exposed to a ‘weak’ persuasive attack followed by a strong rebuttal, mental ‘antibodies’ are generated because the individual is given the tools to spot deception. According to inoculation theory, this happens through two key mechanisms that must be present in the inoculation process: ‘threat’ (or ‘forewarning’) and refutational preemption (prebunking). Threat entails warning people that they will be exposed to a manipulative message, which motivates the ‘mental’ immune system into action. The second element, refutational preemption or prebunking, provides individuals with the means to shoot down these misleading arguments. The idea is that, once inoculated, individuals are better prepared to resist ‘stronger’ misleading arguments in the future.

While initial experiments showed that individuals were better at resisting persuasive attacks after ‘inoculation’, the theory was never tested in the context of misinformation and remained largely untouched until recently. The threat posed by online misinformation (and, more recently, the infodemic) gave rise to new potential applications of psychological vaccines (for recent reviews of the theory, see Traberg 2022; Lewandowsky and van der Linden 2021; Compton et al. 2021). Misinformation thus offered scientists a new ‘virus’ against which to inoculate people: dangerous and misleading information on the internet.

Inoculation was initially designed to be prophylactic, meaning it was intended to protect against future persuasive attacks before they occurred (McGuire 1964). However, in the context of infodemics, it may be more appropriate to discuss therapeutic inoculation, because misinformation reaches more people and spreads faster than fact-checked content (Vosoughi et al. 2018), implying that a large proportion of any inoculation will occur after exposure. Just as therapeutic vaccines can still boost immune responses in someone who has already been infected (e.g. HPV), ‘therapeutic’ inoculation occurs in a psychological sense when individuals are inoculated after being exposed to, but not yet fully convinced by, misinformation (Compton et al. 2021). Where the line falls between prebunking and debunking (post-hoc corrections) likely depends on the incubation period of the misinformation ‘virus’ in question: sometimes it may take only a single exposure to dupe someone on social media, whereas at other times it may require repeated exposure from trusted members of one’s social network over extended periods of time (van der Linden 2023).

8.2.1 Initial Vaccines Against Specific Misinformation

One of the early pioneering studies focused on misinformation about climate change (van der Linden et al. 2017), as previous research had not examined contentious issues or misinformation (Banas and Rains 2010). To test whether being inoculated against climate misinformation would reduce the likelihood of persuasion, the researchers recruited over 2000 participants online and assigned them to groups receiving either inoculation messages or simple facts. The scientists attempted to ‘vaccinate’ individuals psychologically against the Oregon Petition, a real-life petition denying anthropogenic climate change that claimed to have been signed by 31,000 ‘scientists’. This petition has been debunked (Greenberg 2017): fewer than 1% of the ‘scientists’ on the list have any degree or expertise in climate science, and the list includes names such as Dr. Geri Halliwell (of the Spice Girls). Participants in the inoculation condition were forewarned that someone would try to persuade them that climate change is a hoax (the threat element) (van der Linden et al. 2017). They were also told that 97% of climate scientists agree that humans have contributed to the global rise in temperatures, and were shown evidence that the petition contains the names of fake experts (refutational pre-emption).

Results showed that while the misinformation message negatively affected people’s beliefs about climate change, it largely persuaded only participants who had not been inoculated beforehand. In other words, the inoculation messages successfully protected individuals against the misinformation. Consequently, one of the first modern applications of inoculation theory showed that it was possible to protect individuals against future exposure to misinformation, and these results were soon replicated in several additional studies (Cook et al. 2017; Williams and Bond 2020).

8.2.2 A Broad-Spectrum Vaccine Through Gamification

In the initial studies, the goal was to protect individuals from being persuaded by specific myths (e.g. claims that climate change is a hoax). However, because misinformation covers such a wide range of topics, inoculating against specific myths limits scalability. As such, inoculation interventions were developed to educate individuals about the techniques used by peddlers of misinformation. Specifically, researchers developed entertaining and interactive games that apply the principles of inoculation theory in a new and accessible way. Firstly, the game environments expose players to the threat posed by fake news as they witness the ‘ease’ with which truths can be spun into falsehoods using misleading tactics, representing the ‘threat’ element of inoculation. Secondly, players are taught how and why fake news producers use misleading techniques. Exposing players to these tactics in a humorous way is intended to inspire them to come up with counter-arguments, representing ‘refutational pre-emption’. These games are known as technique-based inoculation interventions, as they train participants to spot the misleading tactics used across a wide range of misinformation messages, rather than focusing on a specific example of misinformation. Game-based inoculation also has practical advantages over text-based interventions: the games offer higher entertainment value and are publicly available.

One of the most well-known and thoroughly tested inoculation games is the award-winning Bad News game developed by Roozenbeek and van der Linden (2019a, b) in collaboration with the Dutch media platform DROG (DROG 2019). In Bad News, players are placed in the shoes of a misinformation producer and tasked with spreading weakened doses of their own misinformation within a simulated social media platform. Players are taught how to use six commonly used fake news tactics: (1) impersonating individuals or groups to make audiences believe the source of the information is credible; (2) polarising audiences by feeding on the divide between political groups; (3) using overly emotive language that distorts the original news to spark strong emotional reactions; (4) creating or inspiring conspiracy theories to explain recent events; (5) trolling users, famous people, or organisations, for example, to create the impression that a larger group agree or disagree with a claim; and (6) discrediting otherwise credible individuals, institutions, or well-established facts to create doubt amongst audiences.

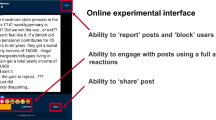

This type of inoculation is also known as active inoculation: rather than being told directly why misinformation is misleading (known as passive inoculation), players learn this by actively creating it themselves in a controlled setting. The original authors of inoculation theory (McGuire and Papageorgis 1962) believed that this type of inoculation may be more effective because participants are more involved, which may help them remember it better, that is, learning by doing (Tyler et al. 1979). Screenshots of these gamified interventions are provided in Fig. 8.1.

Fig. 8.1 Bad News, Harmony Square, and Go Viral! games. (Source: Bad News [www.getbadnews.com], Harmony Square [www.harmonysquare.game], and Go Viral! [www.goviralgame.com]. Reprinted with permission)

8.2.3 Testing the Efficacy of Inoculation Games

To assess whether such interventions are successful, that is, whether they effectively reduce the likelihood of news consumers being persuaded by fake news, scientists have tested the Bad News game in several different settings. First, the game was tested as a live card game (Roozenbeek and van der Linden 2019a). After promising results, the online version of the game was released, and it has been widely studied since. The impact of the online Bad News game was originally tested in a before-after design, meaning that researchers compared players’ ability to spot fake news after playing the game with their ability before playing (Roozenbeek and van der Linden 2019b; Roozenbeek et al. 2021; Maertens et al. 2021; Basol et al. 2020). In one of the largest studies, Roozenbeek and van der Linden (2019b) recruited 15,000 online participants whose ability to spot misleading news headlines was evaluated. Results showed that players rated misinformation headlines as significantly less reliable after gameplay, highlighting the ‘inoculating’ effects of the Bad News game. For example, after having been ‘vaccinated’ against conspiracy theories, players judged conspiratorial headlines such as ‘Scientists discovered greenhouse effect years ago but aren’t allowed to publish it, report claims’ as less reliable than they had before playing.

Although conspiracy narratives represent just one of the six misinformation techniques in the game, they remain a highly effective tool for spreading misinformation. Conspiracy theories can be used to vilify certain groups by accusing them of secretly plotting to achieve their own evil goals (Nera et al. 2022), while simultaneously placing the conspiracy believer in a morally superior victim role (Douglas et al. 2017, 2019). The believability of conspiracy theories can be explained by their perceived ability to satisfy unmet psychological needs (Douglas et al. 2017, 2019; Biddlestone et al. 2022) and by the entertainment value they can provide (van Prooijen et al. 2022). Given their complexity, conspiracy theories have received increasing attention from social scientists, yet before inoculation games were created, it remained unclear whether inoculation interventions could reduce the likelihood of believing them. Promisingly, Roozenbeek and van der Linden (2019b) showed that the Bad News game could help protect individuals against conspiratorial narratives.

8.3 Criticisms of the Initial Game Studies

8.3.1 The Use of Randomised Research Designs

One concern about testing the effectiveness of games with before-and-after measures alone is that it remains uncertain whether any improvement is the result of the specific intervention rather than, for example, of taking the test twice. This concern can be alleviated by testing the intervention in randomised controlled trials that (1) include a control condition and (2) change the test items (the false headlines) between the pre-test and the post-test. Basol et al. (2020) therefore allocated participants either to the Bad News game or to a control group that played Tetris, and showed that participants who played Bad News outperformed their Tetris-playing counterparts at spotting unreliable headlines, with even stronger effects than in prior studies. The team found that the intervention also improved participants’ confidence in their own ability to spot misinformation: a promising finding, as higher confidence can boost one’s ability to resist persuasion (Tormala and Petty 2004). Other studies have shown that even when the headlines are changed in the post-test, the Bad News game improves participants’ ability to spot previously unseen misinformation (Roozenbeek et al. 2021, 2022a).
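The logic of adding a control condition can be illustrated with a simple difference-in-differences calculation. The Python sketch below is a hypothetical illustration, not the analysis reported by Basol et al. (2020): the function names and the 1-to-7 reliability ratings in the example are invented. It subtracts the average pre-to-post change in a control group from the average change in the treatment group, so that any shift shared by both groups (for example, from simply taking the test twice) is not credited to the game.

```python
from statistics import mean


def mean_change(pre: list[float], post: list[float]) -> float:
    """Average within-participant change (post minus pre) in reliability ratings."""
    if len(pre) != len(post):
        raise ValueError("pre and post ratings must be paired by participant")
    return mean(after - before for before, after in zip(pre, post))


def controlled_effect(treat_pre: list[float], treat_post: list[float],
                      ctrl_pre: list[float], ctrl_post: list[float]) -> float:
    """Difference-in-differences: treatment-group change minus control-group change.

    A negative value means that treated participants lowered their reliability
    ratings of false headlines by more than control participants did.
    """
    return mean_change(treat_pre, treat_post) - mean_change(ctrl_pre, ctrl_post)


if __name__ == "__main__":
    # Hypothetical 1-7 reliability ratings of false headlines (invented numbers).
    game_pre, game_post = [5, 4, 6, 5], [3, 3, 4, 4]      # played the inoculation game
    tetris_pre, tetris_post = [5, 5, 4, 6], [4, 5, 4, 5]  # played Tetris (control)

    print(mean_change(game_pre, game_post))  # before-after estimate alone
    print(controlled_effect(game_pre, game_post, tetris_pre, tetris_post))
```

In this invented example, a before-after comparison alone would credit the game with a 1.5-point drop in perceived reliability, whereas the controlled estimate credits it with 1.0 point, because the control group also shifted by 0.5 points.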

8.3.2 Long-Term Effectiveness of Inoculation

Recently, researchers have begun to study the long-term effectiveness of psychological inoculation, with results showing that inoculation interventions are at least as good as, and sometimes better than, other traditional interventions at providing long-term protection against misinformation (Banas and Rains 2010; Maertens et al. 2021; Nisa et al. 2019). Effects typically last for at least a couple of weeks (Maertens et al. 2020; Maertens 2022), and sometimes for months (Pfau et al. 1992, 2006; Maertens et al. 2021). However, research also shows that the inoculation effect starts to decay within days of the intervention, so this decline needs to be accounted for (Maertens 2022). Recent research using the Bad News game indicates that the inoculation effect can last up to 2 months, but that it needs to be ‘boosted’, much as biomedical booster shots may help to prolong immunity against viruses. In practice, this means regularly engaging people with a fun quiz or a shortened version of the initial treatment to boost both their ability and their motivation to resist fake news (Maertens et al. 2021; Maertens 2022).
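The interplay between decay and boosters can be sketched with a toy model. The Python example below assumes, purely for illustration, that the inoculation effect decays exponentially with a fixed half-life and that each booster fully restores it; neither the exponential form nor the 14-day half-life is an estimate from Maertens et al. (2021) or Maertens (2022), and the function name `remaining_effect` is hypothetical.

```python
def remaining_effect(day: float, dose_days: list[float],
                     initial: float = 1.0, half_life_days: float = 14.0) -> float:
    """Toy model of inoculation decay with boosters (assumed, not empirically fitted).

    The effect equals `initial` right after the most recent dose (the original
    intervention or a booster) and halves every `half_life_days` afterwards.
    Returns 0.0 before the first dose.
    """
    past_doses = [d for d in dose_days if d <= day]
    if not past_doses:
        return 0.0
    days_since_last_dose = day - max(past_doses)
    return initial * 0.5 ** (days_since_last_dose / half_life_days)


if __name__ == "__main__":
    # Single intervention on day 0 versus the same intervention plus a booster on day 28.
    for day in (0, 28, 56):
        single = remaining_effect(day, [0])
        boosted = remaining_effect(day, [0, 28])
        print(f"day {day:>2}: single dose {single:.3f}, with booster {boosted:.3f}")
```

Under these assumptions, a booster on day 28 brings the day-56 effect back to the level that a single dose retained on day 28, which is the intuition behind periodic ‘booster’ quizzes.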

8.4 Applications and Herd Immunity

8.4.1 Policy Applications of Inoculation Theory

One of the major advantages of inoculation interventions is that they can be adapted to other topics and settings. For instance, Cranky Uncle is a humour-based inoculation game about climate misinformation (Cook 2021). In a recent study, Cook et al. (2022) showed that playing the game improved students’ ability to identify logical fallacies often used in climate misinformation. Other games have been developed in collaboration with government partners, such as Go Viral! (https://www.goviralgame.com/), a 5-minute game about COVID-19 misinformation produced in collaboration with the UK Cabinet Office (Basol et al. 2021), and Harmony Square (https://www.harmonysquare.game/), a game developed with the US Department of Homeland Security that tackles political disinformation and polarisation (Roozenbeek and van der Linden 2020). These games have been tested (Basol et al. 2021) and translated into numerous languages (Bad News, Go Viral!, and Harmony Square include the option to select a different language). Both Basol et al. (2021) and Roozenbeek et al. (2020b) found that the games were similarly effective across different (European) languages, and that after playing, people were better able to spot misinformation online and less likely to report wanting to share it with their social networks.

The games are freely accessible online and can be used as part of public health campaigns. For example, Go Viral! was part of the WHO’s ‘Stop the Spread’ campaign and the United Nations’ ‘Verified’ campaign, generating over 200 million impressions on social media (Government Communication Service 2021; WHO 2021). Another practical application of inoculation interventions is to run ad campaigns on social media platforms. Roozenbeek et al. (2022b) showed that running a video ad campaign on YouTube using inoculation videos they had created significantly improved YouTube users’ ability to correctly identify manipulative content ‘in the wild’ on YouTube, at a cost of at most US$0.05 per video view. Policymakers may thus run similar campaigns on YouTube or other social media platforms using these or other inoculation videos.

8.4.2 Can Inoculation Spread?

One limitation of vaccines against misinformation is that, much like vaccines against biological infections, it is difficult, if not impossible, to inoculate everyone. However, what if this were not necessary? Earlier research suggested that once individuals have been psychologically inoculated, talking with others about the inoculation might in turn increase their own resistance to misinformation (Compton and Pfau 2009; Ivanov et al. 2012). Recent research by Basol (2022) suggests that inoculated individuals voluntarily engage in post-inoculation talk without being instructed to, and that talking about inoculation not only strengthens its protective effects for the inoculated individual but can also vicariously inoculate the recipients of that talk. In another study, participants were more willing to share the Go Viral! game with their friends and family than to share other interventions. If enough people share the inoculation within their networks, it could outpace the spread of misinformation, or at least protect enough people within a social network that the influence of misinformation is substantially reduced, as recent computer simulations have indicated (Pilditch et al. 2022). In this way, inoculation has the potential to promote psychological herd immunity against misinformation, as inoculating one individual could end up having exponential effects.
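The herd-immunity argument can be illustrated with a textbook compartmental (SIR-style) model borrowed from epidemiology. The Python sketch below is not the agent-based model used by Pilditch et al. (2022); it is a deliberately simple, deterministic illustration in which the function name, the persuasion and ‘stop spreading’ rates, and the assumption that inoculation halves a person’s susceptibility are all hypothetical. A share of the population is inoculated before the misinformation appears, and the function reports what fraction of people ever come to believe it.

```python
def final_believers(inoculated_share: float, efficacy: float = 0.5,
                    beta: float = 0.3, gamma: float = 0.1,
                    initial_believers: float = 0.001, steps: int = 1000) -> float:
    """Fraction of the population that ever believes a piece of misinformation.

    Discrete-time SIR-style toy model (all parameters are illustrative assumptions):
      s: uninoculated people who have not yet been persuaded
      v: inoculated people, whose susceptibility is reduced by `efficacy`
      i: current believers who actively spread the claim
    Each step, believers persuade others at rate `beta` and stop spreading at rate `gamma`.
    """
    s = (1 - initial_believers) * (1 - inoculated_share)
    v = (1 - initial_believers) * inoculated_share
    i = initial_believers
    ever_believed = initial_believers
    for _ in range(steps):
        newly_persuaded_s = beta * s * i
        newly_persuaded_v = beta * (1 - efficacy) * v * i
        stopped_spreading = gamma * i
        s -= newly_persuaded_s
        v -= newly_persuaded_v
        i += newly_persuaded_s + newly_persuaded_v - stopped_spreading
        ever_believed += newly_persuaded_s + newly_persuaded_v
    return ever_believed


if __name__ == "__main__":
    for share in (0.0, 0.25, 0.5, 0.75):
        print(f"inoculated share {share:.2f}: "
              f"ever believed {final_believers(share):.3f}")
```

Under these assumptions, the share of people who ever believe the claim falls as the inoculated share rises. With fully effective inoculation (`efficacy=1.0`), protecting more than two-thirds of the population, so that beta / gamma × (1 − share) < 1, keeps the claim from taking off at all.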

8.5 Conclusion

In this chapter, we have outlined the history of inoculation theory and its applications to tackling misinformation. Given the increasing number of studies that highlight the efficacy of psychological vaccines in reducing persuasion by misinformation, it is clear that inoculation interventions represent a promising and potentially scalable tool to limit the influence of online misinformation.

Like all interventions, however, inoculation interventions are not without limitations, and unresolved questions remain. For example, news and information online are not consumed in a social vacuum; instead, news consumption increasingly takes place in a social environment where social cues are present. Furthermore, individuals hold pre-existing beliefs about the world and may be prone to additional cognitive biases that affect their perceptions and judgements of information veracity (Traberg and van der Linden 2022). As such, factors beyond the news headline itself may influence whether people are persuaded by misinformation, and these have yet to be tested in relation to inoculation interventions. Unlike biological vaccines, psychological vaccines against misinformation cannot claim to guarantee 90% efficacy against future misinformation attacks. However, given the rapid spread of misinformation and the lack of alternative scalable solutions, inoculation interventions remain one of the most powerful tools currently available.

References

Banas JA, Rains SA (2010) A meta-analysis of research on inoculation theory. Commun Monogr 77(3):281–311

Basol M (2022) Harnessing post-inoculation talk to confer intra- and interindividual resistance to persuasion. PhD Thesis, University of Cambridge

Basol M, Roozenbeek J, van der Linden S (2020) Good news about bad news: gamified inoculation boosts confidence and cognitive immunity against fake news. J Cogn 3(1):2. https://doi.org/10.5334/joc.91

Basol M, Roozenbeek J, Berriche M, Uenal F, McClanahan WP, van der Linden S (2021) Towards psychological herd immunity: cross-cultural evidence for two prebunking interventions against COVID-19 misinformation. Big Data Soc 8(1). https://doi.org/10.1177/20539517211013868

Biddlestone M, Green R, Cichocka A, Douglas K, Sutton RM (2022) A systematic review and meta-analytic synthesis of the motives associated with conspiracy beliefs. PsyArXiv. https://doi.org/10.31234/osf.io/rxjqc

Cinelli M, De Francisci Morales G, Galeazzi A, Quattrociocchi W, Starnini M (2021) The echo chamber effect on social media. Proc Natl Acad Sci 118(9). https://doi.org/10.1073/pnas.2023301118

Compton J, Pfau M (2009) Spreading inoculation: inoculation, resistance to influence, and word-of-mouth communication. Commun Theory 19(1):9–28

Compton J, van der Linden S, Cook J, Basol M (2021) Inoculation theory in the post-truth era: extant findings and new frontiers for contested science, misinformation, and conspiracy theories. Soc Personal Psychol Compass 15(6). https://doi.org/10.1111/spc3.12602

Cook J (2021) Teaching students how to spot climate misinformation using a cartoon game. Plus Lucis 3:13–16

Cook J, Lewandowsky S, Ecker UK (2017) Neutralizing misinformation through inoculation: exposing misleading argumentation techniques reduces their influence. PLoS ONE 12(5). https://doi.org/10.1371/journal.pone.0175799

Cook J, Ecker UK, Trecek-King M, Schade G, Jeffers-Tracy K, Fessmann J, Kim SC, Kinkead D, Orr M, Vraga E, Roberts K (2022) The cranky uncle game – combining humor and gamification to build student resilience against climate misinformation. Environ Educ Res. https://doi.org/10.1080/13504622.2022.2085671

Douglas KM, Sutton RM, Cichocka A (2017) The psychology of conspiracy theories. Curr Dir Psychol Sci 26(6):538–542

Douglas KM, Uscinski JE, Sutton RM, Cichocka A, Nefes T, Ang CS, Deravi F (2019) Understanding conspiracy theories. Polit Psychol 40(S1):3–35

DROG (2019) A good way to fight bad news. www.aboutbadnews.com. Accessed 17 Aug 2022

Ecker UK, Lewandowsky S, Cook J, Schmid P, Fazio LK, Brashier N, Kendeou P, Vraga EK, Amazeen MA (2022) The psychological drivers of misinformation belief and its resistance to correction. Nat Rev Psychol 1(1):13–29

Featherstone JD, Zhang J (2020) Feeling angry: the effects of vaccine misinformation and refutational messages on negative emotions and vaccination attitude. J Health Commun 25(9):692–702

Freeman D, Waite F, Rosebrock L, Petit A, Causier C, East A, Jenner L, Teale AL, Carr L, Mulhall S, Bold E (2022) Coronavirus conspiracy beliefs, mistrust, and compliance with government guidelines in England. Psychol Med 52(2):251–263

Frenkel S, Alba D, Zhong R (2020) Surge of virus misinformation stumps Facebook and Twitter. https://www.nytimes.com/2020/03/08/technology/coronavirus-misinformation-social-media.html. Accessed 25 Aug 2022

Government Communication Service (2021) GCS International joins the fight against health misinformation worldwide. https://gcs.civilservice.gov.uk/news/gcs-international-joins-the-fight-against-health-misinformation-worldwide/. Accessed 25 Aug 2022

Greenberg J (2017) No, 30,000 scientists have not said climate change is a hoax. https://www.politifact.com/factchecks/2017/sep/08/blog-posting/no-30000-scientists-have-not-said-climate-change-h/. Accessed 26 Aug 2022

Imhoff R, Lamberty P (2020) A bioweapon or a hoax? The link between distinct conspiracy beliefs about the coronavirus disease (COVID-19) outbreak and pandemic behavior. Soc Psychol Personal Sci 11(8):1110–1118

Ivanov B, Miller CH, Compton J, Averbeck JM, Harrison KJ, Sims JD, Parker KA, Parker JL (2012) Effects of postinoculation talk on resistance to influence. J Commun 62(4):701–718

Jin F, Dougherty E, Saraf P, Cao Y, Ramakrishnan N (2013) Epidemiological modeling of news and rumors on Twitter. Proceedings of the 7th workshop on social network mining and analysis – SNAKDD’13. https://doi.org/10.1145/2501025.2501027

Jolley D, Paterson JL (2020) Pylons ablaze: examining the role of 5G COVID-19 conspiracy beliefs and support for violence. Br J Soc Psychol 59(3):628–640

Kumkale GT, Albarracín D (2004) The sleeper effect in persuasion: a meta-analytic review. Psychol Bull 130(1):143–172

Lewandowsky S, van der Linden S (2021) Countering misinformation and fake news through inoculation and prebunking. Eur Rev Soc Psychol 32(2):348–384

Lewandowsky S, Ecker UK, Seifert CM, Schwarz N, Cook J (2012) Misinformation and its correction: continued influence and successful debiasing. Psychol Sci Public Interest 13(3):106–131

Litman L, Rosen Z, Rosenzweig C, Weinberger-Litman SL, Moss AJ, Robinson J (2020) Did people really drink bleach to prevent COVID-19? A tale of problematic respondents and a guide for measuring rare events in survey data. medRxiv. https://doi.org/10.1101/2020.12.11.20246694

Loomba S, de Figueiredo A, Piatek SJ, de Graaf K, Larson HJ (2021) Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat Hum Behav 5(3):337–348

Maertens R (2022) The long-term effectiveness of inoculation against misinformation: an integrated theory of memory, threat, and motivation. PhD Thesis, University of Cambridge

Maertens R, Anseel F, van der Linden S (2020) Combatting climate change misinformation: evidence for longevity of inoculation and consensus messaging effects. J Environ Psychol 70. https://doi.org/10.1016/j.jenvp.2020.101455

Maertens R, Roozenbeek J, Basol M, van der Linden S (2021) Long-term effectiveness of inoculation against misinformation: three longitudinal experiments. J Exp Psychol Appl 27(1):1–16

McGuire WJ (1961) Resistance to persuasion conferred by active and passive prior refutation of the same and alternative counterarguments. J Abnorm Soc Psychol 63(2):326–332

McGuire WJ (1964) Inducing resistance to persuasion: some contemporary approaches. In: Berkowitz L (ed) Advances in experimental social psychology, vol 1, 1st edn. Academic Press, New York, pp 191–229

McGuire WJ, Papageorgis D (1962) Effectiveness of forewarning in developing resistance to persuasion. Public Opin Q 26(1):24–34

Nera K, Bertin P, Klein O (2022) Conspiracy theories as opportunistic attributions of power. Curr Opin Psychol 47. https://doi.org/10.1016/j.copsyc.2022.101381

Nisa CF, Bélanger JJ, Schumpe BM, Faller DG (2019) Meta-analysis of randomised controlled trials testing behavioural interventions to promote household action on climate change. Nat Commun 10(1):4545. https://doi.org/10.1038/s41467-019-12457-2

Pennycook G, Epstein Z, Mosleh M, Arechar AA, Eckles D, Rand DG (2021) Shifting attention to accuracy can reduce misinformation online. Nature 592(7855):590–595

Pertwee E, Simas C, Larson HJ (2022) An epidemic of uncertainty: rumors, conspiracy theories and vaccine hesitancy. Nat Med 28(3):456–459

Pfau M, van Bockern S, Kang JG (1992) Use of inoculation to promote resistance to smoking initiation among adolescents. Commun Monogr 59(3):213–230

Pfau M, Compton J, Parker KA, An C, Wittenberg EM, Ferguson M, Horton H, Malyshev Y (2006) The conundrum of the timing of counterarguing effects in resistance: strategies to boost the persistence of counterarguing output. Commun Q 54(2):143–156

Pierri F, Perry BL, DeVerna MR, Yang KC, Flammini A, Menczer F, Bryden (2022) Online misinformation is linked to early COVID-19 vaccination hesitancy and refusal. Sci Rep 12:5966. https://doi.org/10.1038/s41598-022-10070-w

Pilditch TD, Roozenbeek J, Madsen JK, van der Linden S (2022) Psychological inoculation can reduce susceptibility to misinformation in large rational agent networks. R Soc Open Sci 9(8). https://doi.org/10.1098/rsos.211953

Porter E, Wood TJ (2021) The global effectiveness of fact-checking: evidence from simultaneous experiments in Argentina, Nigeria, South Africa, and the United Kingdom. Proc Natl Acad Sci 118(37). https://doi.org/10.1073/pnas.2104235118

RAND (2022) Captain fact. https://www.rand.org/research/projects/truth-decay/fighting-disinformation/search/items/captain-fact.html. Accessed 25 Aug 2022.

Roozenbeek J, van der Linden S (2019a) The fake news game: actively inoculating against the risk of misinformation. J Risk Res 22(5):570–580

Roozenbeek J, van der Linden S (2019b) Fake news game confers psychological resistance against online misinformation. Palgrave Commun 5(65). https://doi.org/10.1057/s41599-019-0279-9

Roozenbeek J, van der Linden S (2020) Breaking Harmony Square: a game that “inoculates” against political misinformation. Harv Kennedy Sch Misinfo Rev 1(8). https://doi.org/10.37016/mr-2020-47

Roozenbeek J, Schneider CR, Dryhurst S, Kerr J, Freeman AL, Recchia G, Van Der Bles AM, van der Linden S (2020a) Susceptibility to misinformation about COVID-19 around the world. R Soc Open Sci 7(10). https://doi.org/10.1098/rsos.201199

Roozenbeek J, van der Linden S, Nygren T (2020b) Prebunking interventions based on “inoculation” theory can reduce susceptibility to misinformation across cultures. Harv Kennedy Sch Misinfo Rev 1(2). https://doi.org/10.37016/mr-2020-008

Roozenbeek J, Maertens R, McClanahan W, van der Linden S (2021) Disentangling item and testing effects in inoculation research on online misinformation: Solomon revisited. Educ Psychol Meas 81(2):340–362

Roozenbeek J, Traberg CS, van der Linden S (2022a) Technique-based inoculation against real-world misinformation. R Soc Open Sci 9(5). https://doi.org/10.1098/rsos.211719

Roozenbeek J, van der Linden S, Goldberg B, Rathje S, Lewandowsky S (2022b) Psychological inoculation improves resilience against misinformation on social media. Sci Adv 8(34):eabo6254. https://doi.org/10.1126/sciadv.abo6254

Simon FM, Camargo CQ (2021) Autopsy of a metaphor: the origins, use and blind spots of the ‘infodemic’. New Media Soc 1–22. https://doi.org/10.1177/14614448211031908

Tardáguila C (2020) The demand for COVID-19 facts on WhatsApp is skyrocketing. https://www.poynter.org/fact-checking/2020/the-demand-for-covid-19-facts-on-whatsapp-is-skyrocketing/. Accessed 25 Aug 2022.

Taylor J (2021) Facebook removes 110,000 pieces of Covid misinformation posted by Australian users. https://www.theguardian.com/technology/2021/may/21/facebook-removes-110000-pieces-of-covid-misinformation-posted-by-australian-users. Accessed 25 Aug 2022.

Tormala ZL, Petty RE (2004) Source credibility and attitude certainty: a metacognitive analysis of resistance to persuasion. J Consum Psychol 14(4):427–442

Traberg CS (2022) Misinformation: broaden definition to curb its societal influence. Nature 606(7915):653–653

Traberg CS, van der Linden S (2022) Birds of a feather are persuaded together: perceived source credibility mediates the effect of political bias on misinformation susceptibility. Personal Individ Differ 185(14). https://doi.org/10.1016/j.paid.2021.111269

Traberg CS, Roozenbeek J, van der Linden S (2022) Psychological inoculation against misinformation: current evidence and future directions. Ann Am Acad Pol Soc Sci 700(1):136–151

Tyler SW, Hertel PT, McCallum MC, Ellis HC (1979) Cognitive effort and memory. J Exp Psychol Hum Learn Mem 5(6):607–617

van der Linden S (2023) Foolproof: why we fall for misinformation and how to build immunity. HarperCollins, London

van der Linden S, Leiserowitz A, Rosenthal S, Maibach (2017) Inoculating the public against misinformation about climate change. Global Chall 1(2). https://doi.org/10.1002/gch2.201600008

van Prooijen JW, Ligthart J, Rosema S, Xu Y (2022) The entertainment value of conspiracy theories. Br J Psychol 113(1):25–48

Vosoughi S, Roy D, Aral S (2018) The spread of true and false news online. Science 359(6380):1146–1151

Walter N, Cohen J, Holbert RL, Morag Y (2020) Fact-checking: a meta-analysis of what works and for whom. Polit Commun 37(3):350–375

WHO Director General (2020) WHO Director-General’s opening remarks at the media briefing on COVID-19 – 11 March 2020. https://www.who.int/director-general/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19%2D%2D-11-march-2020. Accessed 26 Aug 2022.

Williams MN, Bond CMC (2020) A preregistered replication of “Inoculating the public against misinformation about climate change”. J Environ Psychol 70:101456

World Health Organization (2021) What is go viral? https://www.who.int/news/item/23-09-2021-what-is-go-viral. Accessed 25 Aug 2022.

Zarocostas J (2020) How to fight an infodemic. Lancet 395(10225):676. https://doi.org/10.1016/S0140-6736(20)30461-X

Zollo F, Bessi A, Del Vicario M, Scala A, Caldarelli G, Shekhtman L, Havlin S, Quattrociocchi W (2017) Debunking in a world of tribes. PLoS ONE 12(7):e0181821. https://doi.org/10.1371/journal.pone.0181821

Rights and permissions

Open Access Some rights reserved. This chapter is an open access publication, available online and distributed under the terms of the Creative Commons Attribution-NonCommercial 3.0 IGO license, a copy of which is available at (http://creativecommons.org/licenses/by-nc/3.0/igo/). Enquiries concerning use outside the scope of the licence terms should be sent to Springer Nature Switzerland AG.

The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of WHO concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. Dotted and dashed lines on maps represent approximate border lines for which there may not yet be full agreement.

The mention of specific companies or of certain manufacturers' products does not imply that they are endorsed or recommended by WHO in preference to others of a similar nature that are not mentioned. Errors and omissions excepted, the names of proprietary products are distinguished by initial capital letters.

Copyright information

© 2023 WHO: World Health Organization

About this chapter

Cite this chapter

Traberg, C.S. et al. (2023). Prebunking Against Misinformation in the Modern Digital Age. In: Purnat, T.D., Nguyen, T., Briand, S. (eds) Managing Infodemics in the 21st Century. Springer, Cham. https://doi.org/10.1007/978-3-031-27789-4_8

DOI: https://doi.org/10.1007/978-3-031-27789-4_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-27788-7

Online ISBN: 978-3-031-27789-4

eBook Packages: Medicine, Medicine (R0)