Abstract

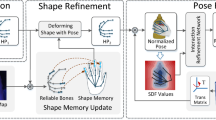

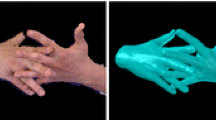

We present TOCH, a method for refining incorrect 3D hand-object interaction sequences using a correspondence-based prior learnt directly from data. Existing hand trackers, especially those that rely on very few cameras, often produce visually unrealistic results with hand-object intersections or missing contacts. Although correcting such errors requires reasoning about the temporal aspects of interaction, most previous works focus on static grasps and contacts. At the core of our method are TOCH fields, a novel spatio-temporal representation for modeling correspondences between hands and objects during interaction. TOCH fields are a point-wise, object-centric representation that encodes the hand position relative to the object. Leveraging this representation, we learn a latent manifold of plausible TOCH fields with a temporal denoising auto-encoder. Experiments demonstrate that TOCH outperforms state-of-the-art 3D hand-object interaction models, which are limited to static grasps and contacts. More importantly, our method produces smooth interactions even before and after contact. Using a single trained TOCH model, we quantitatively and qualitatively demonstrate its usefulness for correcting erroneous sequences from off-the-shelf RGB/RGB-D hand-object reconstruction methods and for transferring grasps across objects. Our code and model are available at [1].
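To make the idea of a point-wise, object-centric, spatio-temporal representation more concrete, the following is a minimal illustrative sketch and not the TOCH field construction itself, which the abstract does not specify. It assumes a nearest-neighbour correspondence between object points and hand points, and the names `frame_field`, `sequence_field`, and `max_dist` are hypothetical. For every frame and every object point it stores whether a hand point lies within `max_dist`, the distance to it, and its index.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# One feature per object point: (has_correspondence, distance, hand_index).
Feature = Tuple[bool, float, int]


def frame_field(obj_pts: Sequence[Point], hand_pts: Sequence[Point],
                max_dist: float) -> List[Feature]:
    """Object-centric field for one frame.

    Assumption: nearest-neighbour matching stands in for whatever
    correspondence rule the actual method uses.
    """
    field: List[Feature] = []
    for p in obj_pts:
        # Distance from this object point to every hand point.
        dists = [math.dist(p, h) for h in hand_pts]
        j = min(range(len(hand_pts)), key=dists.__getitem__)
        if dists[j] <= max_dist:
            field.append((True, dists[j], j))
        else:
            # No hand point nearby: mark as "no correspondence".
            field.append((False, max_dist, -1))
    return field


def sequence_field(obj_pts: Sequence[Point],
                   hand_seq: Sequence[Sequence[Point]],
                   max_dist: float = 0.05) -> List[List[Feature]]:
    """Stack per-frame fields over time: result[t][i] is the feature of
    object point i at frame t."""
    return [frame_field(obj_pts, hand_pts, max_dist) for hand_pts in hand_seq]


# Toy usage: two object points, one hand point approaching over two frames.
obj = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
hand_seq = [[(0.0, 0.0, 0.5)], [(0.0, 0.0, 0.01)]]
field = sequence_field(obj, hand_seq, max_dist=0.05)
```

In this toy sequence the first object point has no correspondence in the first frame and gains one in the second, so the approach of the hand shows up in the field even before any contact. Because every feature is attached to an object point and built from relative distances, the field does not change when the hand and object move rigidly together.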

References

Aliakbarian, S., Saleh, F.S., Salzmann, M., Petersson, L., Gould, S.: A stochastic conditioning scheme for diverse human motion prediction. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5223–5232 (2020)

Arnab, A., Doersch, C., Zisserman, A.: Exploiting temporal context for 3d human pose estimation in the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3395–3404 (2019)

Ballan, L., Taneja, A., Gall, J., Van Gool, L., Pollefeys, M.: Motion capture of hands in action using discriminative salient points. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7577, pp. 640–653. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33783-3_46

Bhatnagar, B.L., Sminchisescu, C., Theobalt, C., Pons-Moll, G.: Loopreg: self-supervised learning of implicit surface correspondences, pose and shape for 3d human mesh registration. Adv. Neural Inf. Process. Syst. 33, 12909–12922 (2020)

Bhatnagar, B.L., Xie, X., Petrov, I., Sminchisescu, C., Theobalt, C., Pons-Moll, G.: Behave: dataset and method for tracking human object interactions. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (June 2022)

Bogo, F., Kanazawa, A., Lassner, C., Gehler, P., Romero, J., Black, M.J.: Keep It SMPL: automatic estimation of 3d human pose and shape from a single image. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9909, pp. 561–578. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46454-1_34

Bohg, J., Morales, A., Asfour, T., Kragic, D.: Data-driven grasp synthesis-a survey. IEEE Trans. Robot. 30(2), 289–309 (2013)

Boukhayma, A., Bem, R.D., Torr, P.H.: 3d hand shape and pose from images in the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10843–10852 (2019)

Brahmbhatt, S., Ham, C., Kemp, C.C., Hays, J.: ContactDB: analyzing and predicting grasp contact via thermal imaging. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8709–8719 (2019)

Brahmbhatt, S., Tang, C., Twigg, C.D., Kemp, C.C., Hays, J.: ContactPose: a dataset of grasps with object contact and hand pose. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12358, pp. 361–378. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58601-0_22

Cai, Y., et al.: A unified 3d human motion synthesis model via conditional variational auto-encoder. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 11645–11655 (2021)

Cao, Z., Radosavovic, I., Kanazawa, A., Malik, J.: Reconstructing hand-object interactions in the wild. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 12417–12426 (2021)

Chen, L., Lin, S.Y., Xie, Y., Lin, Y.Y., Xie, X.: MVHM: a large-scale multi-view hand mesh benchmark for accurate 3d hand pose estimation. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 836–845 (2021)

Chen, Y., et al.: Joint hand-object 3d reconstruction from a single image with cross-branch feature fusion. IEEE Trans. Image Process. 30, 4008–4021 (2021)

Corona, E., Pumarola, A., Alenya, G., Moreno-Noguer, F., Rogez, G.: GanHand: predicting human grasp affordances in multi-object scenes. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5031–5041 (2020)

El-Khoury, S., Sahbani, A., Bidaud, P.: 3d objects grasps synthesis: a survey. In: 13th World Congress in Mechanism and Machine Science, pp. 573–583 (2011)

Elgammal, A., Lee, C.S.: The role of manifold learning in human motion analysis. In: Rosenhahn, B., Klette, R., Metaxas, D. (eds.) Human Motion. Computational Imaging and Vision, vol. 36, pp. 25–56. Springer, Dordrecht (2008). https://doi.org/10.1007/978-1-4020-6693-1_2

Garcia-Hernando, G., Yuan, S., Baek, S., Kim, T.K.: First-person hand action benchmark with RGB-D videos and 3d hand pose annotations. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 409–419 (2018)

Ge, L., et al.: 3d hand shape and pose estimation from a single RGB image. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10833–10842 (2019)

Goodfellow, I., et al.: Generative adversarial nets. Adv. Neural Inf. Process. Syst. 27 (2014)

Grady, P., Tang, C., Twigg, C.D., Vo, M., Brahmbhatt, S., Kemp, C.C.: ContactOpt: optimizing contact to improve grasps. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1471–1481 (2021)

Guzov, V., Sattler, T., Pons-Moll, G.: Visually plausible human-object interaction capture from wearable sensors. In: arXiv (May 2022)

Hamer, H., Gall, J., Weise, T., Van Gool, L.: An object-dependent hand pose prior from sparse training data. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 671–678. IEEE (2010)

Hampali, S., Rad, M., Oberweger, M., Lepetit, V.: HOnnotate: a method for 3d annotation of hand and object poses. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3196–3206 (2020)

Hasson, Y., Tekin, B., Bogo, F., Laptev, I., Pollefeys, M., Schmid, C.: Leveraging photometric consistency over time for sparsely supervised hand-object reconstruction. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 571–580 (2020)

Hasson, Y., Varol, G., Laptev, I., Schmid, C.: Towards unconstrained joint hand-object reconstruction from RGB videos. arXiv preprint arXiv:2108.07044 (2021)

Hasson, Y., et al.: Learning joint reconstruction of hands and manipulated objects. In: CVPR (2019)

Hasson, Y., et al.: Learning joint reconstruction of hands and manipulated objects. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11807–11816 (2019)

Henter, G.E., Alexanderson, S., Beskow, J.: MoGlow: probabilistic and controllable motion synthesis using normalising flows. ACM Trans. Graph. (TOG) 39(6), 1–14 (2020)

Huang, L., Zhang, B., Guo, Z., Xiao, Y., Cao, Z., Yuan, J.: Survey on depth and RGB image-based 3d hand shape and pose estimation. Virtual Reality Intell. Hardware 3(3), 207–234 (2021)

Jiang, H., Liu, S., Wang, J., Wang, X.: Hand-object contact consistency reasoning for human grasps generation. arXiv preprint arXiv:2104.03304 (2021)

Jiang, W., Kolotouros, N., Pavlakos, G., Zhou, X., Daniilidis, K.: Coherent reconstruction of multiple humans from a single image. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5579–5588 (2020)

Jiang, Z., Zhu, Y., Svetlik, M., Fang, K., Zhu, Y.: Synergies between affordance and geometry: 6-DoF grasp detection via implicit representations. Robot. Sci. Syst. (2021)

Karunratanakul, K., Yang, J., Zhang, Y., Black, M.J., Muandet, K., Tang, S.: Grasping field: Learning implicit representations for human grasps. In: 2020 International Conference on 3D Vision (3DV), pp. 333–344. IEEE (2020)

Kingma, D.P., Welling, M.: Auto-encoding variational bayes. In: Bengio, Y., LeCun, Y. (eds.) 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, 14–16 April 2014, Conference Track Proceedings (2014). http://arxiv.org/abs/1312.6114

Kocabas, M., Athanasiou, N., Black, M.J.: Vibe: video inference for human body pose and shape estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5253–5263 (2020)

Kundu, J.N., Gor, M., Babu, R.V.: BiHMP-GAN: bidirectional 3d human motion prediction GAN. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 8553–8560 (2019)

Kwon, T., Tekin, B., Stuhmer, J., Bogo, F., Pollefeys, M.: H2o: two hands manipulating objects for first person interaction recognition. arXiv preprint arXiv:2104.11181 (2021)

León, B., et al.: OpenGRASP: a toolkit for robot grasping simulation. In: Ando, N., Balakirsky, S., Hemker, T., Reggiani, M., von Stryk, O. (eds.) SIMPAR 2010. LNCS (LNAI), vol. 6472, pp. 109–120. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-17319-6_13

Li, J., et al.: Task-generic hierarchical human motion prior using vaes. arXiv preprint arXiv:2106.04004 (2021)

Liu, C.K.: Dextrous manipulation from a grasping pose. In: ACM SIGGRAPH 2009 papers, pp. 1–6 (2009)

Luo, Z., Golestaneh, S.A., Kitani, K.M.: 3d human motion estimation via motion compression and refinement. In: Proceedings of the Asian Conference on Computer Vision (2020)

Malik, J., et al.: HandVoxNet: deep voxel-based network for 3d hand shape and pose estimation from a single depth map. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7113–7122 (2020)

Miller, A.T., Allen, P.K.: Graspit! a versatile simulator for robotic grasping. IEEE Robot. Autom. Mag. 11(4), 110–122 (2004)

Mordatch, I., Popović, Z., Todorov, E.: Contact-invariant optimization for hand manipulation. In: Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation, pp. 137–144 (2012)

Mueller, F., et al.: Real-time pose and shape reconstruction of two interacting hands with a single depth camera. ACM Trans. Graph. (TOG) 38(4), 1–13 (2019)

Ng, E., Ginosar, S., Darrell, T., Joo, H.: Body2hands: learning to infer 3d hands from conversational gesture body dynamics. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11865–11874 (2021)

Oikonomidis, I., Kyriazis, N., Argyros, A.A.: Full DOF tracking of a hand interacting with an object by modeling occlusions and physical constraints. In: 2011 International Conference on Computer Vision, pp. 2088–2095. IEEE (2011)

Ormoneit, D., Sidenbladh, H., Black, M.J., Hastie, T.: Learning and tracking cyclic human motion. Adv. Neural Inf. Process. Syst. 894–900 (2001)

Panteleris, P., Argyros, A.: Back to RGB: 3d tracking of hands and hand-object interactions based on short-baseline stereo. In: Proceedings of the IEEE International Conference on Computer Vision Workshops, pp. 575–584 (2017)

Pavlakos, G., et al.: Expressive body capture: 3d hands, face, and body from a single image. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10975–10985 (2019)

Qi, C.R., Su, H., Mo, K., Guibas, L.J.: Pointnet: Deep learning on point sets for 3d classification and segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 652–660 (2017)

Romero, J., Tzionas, D., Black, M.J.: Embodied hands: modeling and capturing hands and bodies together. ACM Trans. Graph. (Proc. SIGGRAPH Asia) 36(6) (2017)

Sahbani, A., El-Khoury, S., Bidaud, P.: An overview of 3d object grasp synthesis algorithms. Robot. Auton. Syst. 60(3), 326–336 (2012)

Smith, B., et al.: Constraining dense hand surface tracking with elasticity. ACM Trans. Graph. (TOG) 39(6), 1–14 (2020)

Sridhar, S., et al.: Real-time joint tracking of a hand manipulating an object from RGB-D input. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 294–310. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_19

Sridhar, S., Rhodin, H., Seidel, H.P., Oulasvirta, A., Theobalt, C.: Real-time hand tracking using a sum of anisotropic gaussians model. In: 2014 2nd International Conference on 3D Vision, vol. 1, pp. 319–326. IEEE (2014)

Starke, S., Zhang, H., Komura, T., Saito, J.: Neural state machine for character-scene interactions. ACM Trans. Graph. 38(6), 209–210 (2019)

Taheri, O., Ghorbani, N., Black, M.J., Tzionas, D.: GRAB: a dataset of whole-body human grasping of objects. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12349, pp. 581–600. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58548-8_34

Taylor, J., et al.: Efficient and precise interactive hand tracking through joint, continuous optimization of pose and correspondences. ACM Trans. Graph. (TOG) 35(4), 1–12 (2016)

Taylor, J., Shotton, J., Sharp, T., Fitzgibbon, A.: The vitruvian manifold: Inferring dense correspondences for one-shot human pose estimation. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 103–110. IEEE (2012)

Taylor, J., et al.: Articulated distance fields for ultra-fast tracking of hands interacting. ACM Trans. Graph. (TOG) 36(6), 1–12 (2017)

Tiwari, G., Antic, D., Lenssen, J.E., Sarafianos, N., Tung, T., Pons-Moll, G.: Pose-NDF: modeling human pose manifolds with neural distance fields. In: European Conference on Computer Vision (ECCV). Springer, Cham (October 2022)

Urtasun, R., Fleet, D.J., Fua, P.: 3d people tracking with gaussian process dynamical models. In: 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2006), vol. 1, pp. 238–245. IEEE (2006)

Wang, Y., et al.: Video-based hand manipulation capture through composite motion control. ACM Trans. Graph. (TOG) 32(4), 1–14 (2013)

Xie, X., Bhatnagar, B.L., Pons-Moll, G.: Chore: contact, human and object reconstruction from a single RGB image. In: European Conference on Computer Vision (ECCV). Springer, Cham (October 2022)

Yang, L., Zhan, X., Li, K., Xu, W., Li, J., Lu, C.: CPF: learning a contact potential field to model the hand-object interaction. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 11097–11106 (2021)

Ye, Y., Liu, C.K.: Synthesis of detailed hand manipulations using contact sampling. ACM Trans. Graph. (TOG) 31(4), 1–10 (2012)

Yi, H., et al.: Human-aware object placement for visual environment reconstruction. In: Computer Vision and Pattern Recognition (CVPR), pp. 3959–3970 (Jun 2022)

Zeng, A., Yang, L., Ju, X., Li, J., Wang, J., Xu, Q.: SmoothNet: a plug-and-play network for refining human poses in videos. In: European Conference on Computer Vision. Springer, Cham (2022)

Zhang, B., et al.: Interacting two-hand 3d pose and shape reconstruction from single color image. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 11354–11363 (2021)

Zhang, H., Bo, Z.H., Yong, J.H., Xu, F.: InteractionFusion: real-time reconstruction of hand poses and deformable objects in hand-object interactions. ACM Trans. Graph. (TOG) 38(4), 1–11 (2019)

Zhang, H., Zhou, Y., Tian, Y., Yong, J.H., Xu, F.: Single depth view based real-time reconstruction of hand-object interactions. ACM Trans. Graph. (TOG) 40(3), 1–12 (2021)

Zhang, H., Ye, Y., Shiratori, T., Komura, T.: ManipNet: neural manipulation synthesis with a hand-object spatial representation. ACM Trans. Graph. (TOG) 40(4), 1–14 (2021)

Zhang, S., Zhang, Y., Bogo, F., Pollefeys, M., Tang, S.: Learning motion priors for 4d human body capture in 3d scenes. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 11343–11353 (2021)

Zhang, X., Bhatnagar, B.L., Guzov, V., Starke, S., Pons-Moll, G.: Couch: towards controllable human-chair interactions. In: European Conference on Computer Vision (ECCV). Springer, Cham (October 2022)

Zhao, R., Su, H., Ji, Q.: Bayesian adversarial human motion synthesis. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6225–6234 (2020)

Zhao, W., Zhang, J., Min, J., Chai, J.: Robust realtime physics-based motion control for human grasping. ACM Trans. Graph. (TOG) 32(6), 1–12 (2013)

Zhao, Z., Wang, T., Xia, S., Wang, Y.: Hand-3d-studio: a new multi-view system for 3d hand reconstruction. In: ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2478–2482. IEEE (2020)

Zhu, T., Wu, R., Lin, X., Sun, Y.: Toward human-like grasp: dexterous grasping via semantic representation of object-hand. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 15741–15751 (2021)

Zimmermann, C., Ceylan, D., Yang, J., Russell, B., Argus, M., Brox, T.: FreiHAND: a dataset for markerless capture of hand pose and shape from single RGB images. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 813–822 (2019)

Acknowledgements

This work is supported by the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039A. This work is funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) - 409792180 (Emmy Noether Programme, project: Real Virtual Humans). Gerard Pons-Moll is a member of the Machine Learning Cluster of Excellence, EXC number 2064/1 - Project number 390727645.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhou, K., Bhatnagar, B.L., Lenssen, J.E., Pons-Moll, G. (2022). TOCH: Spatio-Temporal Object-to-Hand Correspondence for Motion Refinement. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13663. Springer, Cham. https://doi.org/10.1007/978-3-031-20062-5_1

DOI: https://doi.org/10.1007/978-3-031-20062-5_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20061-8

Online ISBN: 978-3-031-20062-5

eBook Packages: Computer Science, Computer Science (R0)