Abstract

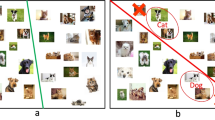

Data lies at the core of modern deep learning. The impressive performance of supervised learning rests on massive, accurately labeled datasets. In some real-world applications, however, accurate labeling is not viable; instead of one accurate label, each data sample receives multiple noisy labels from several annotators. Learning a classifier from such a noisy training set is challenging. Previous approaches usually assume that all data samples share the same set of annotator-error parameters, whereas we demonstrate that label error learning should depend on both the annotator and the data sample. Motivated by this observation, we propose a novel learning algorithm. The proposed method outperforms several state-of-the-art baseline methods on MNIST, CIFAR-100, and ImageNet-100. Our code is available at: https://github.com/zhengqigao/Learning-from-Multiple-Annotator-Noisy-Labels.
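To make the problem setting concrete, the following is a minimal sketch of multi-annotator noisy labels and of why fusion weights might vary per sample. It is not the algorithm proposed in the paper: the function names `simulate_annotations` and `fuse_labels`, the confusion-matrix noise model, and the hand-picked weights are all assumptions made for illustration only.

```python
import random


def simulate_annotations(true_labels, confusion_matrices, seed=0):
    """Draw one noisy label per (sample, annotator) pair.

    Assumption: annotator r is described by a row-stochastic matrix where
    confusion_matrices[r][y][k] is the probability of reporting class k
    when the true class is y. This noise model is sample-independent.
    """
    rng = random.Random(seed)
    num_classes = len(confusion_matrices[0])
    classes = list(range(num_classes))
    annotations = []
    for y in true_labels:
        row = [rng.choices(classes, weights=cm[y])[0] for cm in confusion_matrices]
        annotations.append(row)
    return annotations


def fuse_labels(annotations, weights, num_classes):
    """Fuse noisy labels into one soft label per sample by a weighted vote.

    weights[i][r] is the (hypothetical) trust placed in annotator r on
    sample i. Giving every sample the same weight row corresponds to the
    shared-parameter assumption; letting rows differ makes the fusion
    both annotator and sample dependent.
    """
    fused = []
    for labels, sample_weights in zip(annotations, weights):
        scores = [0.0] * num_classes
        for label, w in zip(labels, sample_weights):
            scores[label] += w
        total = sum(scores)
        fused.append([s / total for s in scores])
    return fused


# Three annotators disagree on a single two-class sample.
annotations = [[0, 1, 1]]
uniform = fuse_labels(annotations, [[1.0, 1.0, 1.0]], num_classes=2)
sample_wise = fuse_labels(annotations, [[0.8, 0.1, 0.1]], num_classes=2)
```

With uniform weights the fused label favours class 1 (a plain majority vote), while the sample-specific weights shift most of the mass to class 0, the label given by the annotator trusted most on that sample. How such weights would be learned is outside the scope of this sketch.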

Acknowledgements

This research was supported in part by Millennium Pharmaceuticals, Inc. (a subsidiary of Takeda Pharmaceuticals). The authors also acknowledge helpful feedback from the reviewers. Zhengqi Gao would like to thank Alex Gu, Suvrit Sra, Zichang He and Hangyu Lin for useful discussions, and Zihui Xue for her support.

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Gao, Z. et al. (2022). Learning from Multiple Annotator Noisy Labels via Sample-Wise Label Fusion. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13684. Springer, Cham. https://doi.org/10.1007/978-3-031-20053-3_24

DOI: https://doi.org/10.1007/978-3-031-20053-3_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20052-6

Online ISBN: 978-3-031-20053-3

eBook Packages: Computer Science, Computer Science (R0)