Abstract

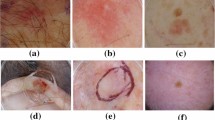

Dermoscopic image segmentation is a key step in skin cancer diagnosis and analysis. Convolutional Neural Networks (CNNs) have achieved great success in various medical image segmentation tasks. However, their repeated down-sampling operations introduce network redundancy and a loss of local detail, and CNNs are also limited in modeling long-range relationships. In contrast, Transformers show great potential in modeling global context. In this paper, we propose a novel segmentation network for skin lesions that combines Transformers and CNNs (CTCNet), improving the network's efficiency in modeling global context while retaining control over low-level details. In addition, a novel fusion technique, the Two-stream Cascaded Feature Aggregation (TCFA) module, is constructed to efficiently integrate multi-level features from the two branches. Moreover, we design a Multi-Scale Expansion-Aware (MSEA) module based on convolutions for feature perception and expansion, which extracts high-level features containing richer context information and further enhances the perception ability of the network. CTCNet combines Transformers and CNNs in parallel, so that both global dependencies and low-level spatial details can be captured efficiently. Extensive experiments demonstrate that CTCNet achieves better performance than state-of-the-art approaches.
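The abstract characterises the MSEA module only briefly. Assuming that "expansion" refers to dilated (atrous) convolution, the multi-scale mechanism popularised by DeepLab, the minimal single-channel sketch below shows how one 3×3 kernel applied at several dilation rates covers increasingly wide context without adding weights. It illustrates that general technique only and is not the authors' MSEA module; the function names `dilated_conv2d`, `multi_scale_dilated` and `effective_kernel_size`, the rates (1, 2, 4) and the averaging fusion are hypothetical choices.

```python
from typing import List, Sequence

Grid = List[List[float]]


def effective_kernel_size(k: int, rate: int) -> int:
    """Width of the window covered by a k x k kernel with dilation `rate`."""
    return k + (k - 1) * (rate - 1)


def dilated_conv2d(x: Grid, kernel: Grid, rate: int) -> Grid:
    """Single-channel 2D dilated (atrous) convolution, 'same' output size.

    Implemented as cross-correlation, as deep-learning frameworks do. Taps are
    spaced `rate` pixels apart; positions outside the input are zero-padded.
    Assumes a square kernel with odd side length.
    """
    h, w = len(x), len(x[0])
    k = len(kernel)
    half = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    # Tap offset from the centre, stretched by the dilation rate.
                    ii = i + (a - half) * rate
                    jj = j + (b - half) * rate
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += kernel[a][b] * x[ii][jj]
            out[i][j] = acc
    return out


def multi_scale_dilated(x: Grid, kernel: Grid, rates: Sequence[int] = (1, 2, 4)) -> Grid:
    """Run the same kernel at several dilation rates and average the branches.

    Hypothetical simplification: learned multi-scale blocks usually give each
    branch its own weights and fuse by summation or concat + 1x1 convolution.
    """
    branches = [dilated_conv2d(x, kernel, r) for r in rates]
    h, w = len(x), len(x[0])
    return [[sum(br[i][j] for br in branches) / len(branches) for j in range(w)]
            for i in range(h)]


if __name__ == "__main__":
    # A single bright pixel in the centre of a 9x9 map.
    x = [[0.0] * 9 for _ in range(9)]
    x[4][4] = 1.0
    ones = [[1.0] * 3 for _ in range(3)]
    for r in (1, 2, 4):
        y = dilated_conv2d(x, ones, r)
        rows = sorted({i for i in range(9) for j in range(9) if y[i][j]})
        # Rows that "see" the centre pixel spread further apart as r grows.
        print(r, effective_kernel_size(3, r), rows)
```

Running the script prints `1 3 [3, 4, 5]`, `2 5 [2, 4, 6]` and `4 9 [0, 4, 8]`: the same nine weights span a 3-, 5- and 9-pixel-wide window, which is the sense in which dilation enlarges the receptive field at no extra parameter cost.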

Acknowledgement

This research was supported by the National Natural Science Foundation of China (No. 61901537), the Research Funds for Overseas Students in Henan Province, the China Postdoctoral Science Foundation (No. 2020M672274), the Science and Technology Guiding Project of the China National Textile and Apparel Council (No. 2019059), the Postdoctoral Research Sponsorship in Henan Province (No. 19030018), the Program of Young Backbone Teachers in Zhongyuan University of Technology (No. 2019XQG04), and the Training Program of Young Master's Supervisors in Zhongyuan University of Technology (No. SD202207).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, J., Li, B., Guo, X., Huang, J., Song, M., Wei, M. (2022). CTCNet: A Bi-directional Cascaded Segmentation Network Combining Transformers with CNNs for Skin Lesions. In: Yu, S., et al. Pattern Recognition and Computer Vision. PRCV 2022. Lecture Notes in Computer Science, vol 13535. Springer, Cham. https://doi.org/10.1007/978-3-031-18910-4_18

DOI: https://doi.org/10.1007/978-3-031-18910-4_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-18909-8

Online ISBN: 978-3-031-18910-4

eBook Packages: Computer Science, Computer Science (R0)