Abstract

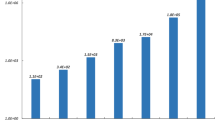

Recent advances in High Performance Computing (HPC) enable Deep Learning (DL) models to achieve state-of-the-art performance by exploiting multiple processors. Data parallelism replicates the DL model on each processor, which is impossible for models such as AmoebaNet that exceed the memory of a single NVIDIA GPU. Layer parallelism avoids this limitation by placing one or more layers on each GPU, but it still cannot train models such as AmoebaNet on high-resolution images. We propose Hy-Fi (Hybrid Five-Dimensional Parallelism), a system that combines five parallelism dimensions (data, model, spatial, pipeline, and bi-directional parallelism) to enable efficient distributed training of out-of-core models and layers. Hy-Fi also proposes communication-level optimizations to integrate these dimensions. We report up to \(2.67\times\) and \(1.68\times\) speedups over layer and pipeline parallelism, respectively. We demonstrate Hy-Fi on up to 2,048 GPUs with AmoebaNet and ResNet models. Further, we use Hy-Fi to enable DNN training on high-resolution images, including 8,192 \(\times\) 8,192 and 16,384 \(\times\) 16,384.
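To make two of these dimensions concrete, the following minimal sketch shows how layer parallelism might assign contiguous layers to GPUs and how spatial parallelism might split one high-resolution image into tiles. It is an illustration only and not Hy-Fi's implementation: the function names `partition_layers` and `spatial_tiles`, the even-split policy, and the 2 x 2 tile grid are assumptions, and the sketch ignores the boundary (halo) data that neighbouring tiles would need to exchange for convolutions.

```python
def partition_layers(num_layers, num_devices):
    """Assign contiguous layer indices to devices as evenly as possible.

    Hypothetical helper: a real system would typically balance by
    memory or compute cost per layer, not by layer count.
    """
    base, extra = divmod(num_layers, num_devices)
    parts, start = [], 0
    for device in range(num_devices):
        # The first `extra` devices take one additional layer.
        size = base + (1 if device < extra else 0)
        parts.append(list(range(start, start + size)))
        start += size
    return parts


def spatial_tiles(height, width, rows, cols):
    """Split a height x width image into a rows x cols grid of tiles.

    Returns (top, left, tile_height, tile_width) tuples in row-major
    order. Assumes the image divides evenly into the grid.
    """
    if height % rows or width % cols:
        raise ValueError("image must divide evenly into the tile grid")
    tile_h, tile_w = height // rows, width // cols
    return [(r * tile_h, c * tile_w, tile_h, tile_w)
            for r in range(rows) for c in range(cols)]


# Ten layers spread over four GPUs (layer parallelism).
layer_plan = partition_layers(10, 4)

# One 8,192 x 8,192 image split across a 2 x 2 GPU grid (spatial parallelism).
tile_plan = spatial_tiles(8192, 8192, 2, 2)
```

In this sketch each GPU holds either a subset of layers or a subset of the pixels of one sample, which is how these two dimensions reduce per-GPU memory relative to replicating the whole model and input.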

Acknowledgement

This research is supported in part by NSF grants 1818253, 1854828, 1931537, 2007991, 2018627, 2112606, and XRAC grant NCR-130002.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Jain, A., Shafi, A., Anthony, Q., Kousha, P., Subramoni, H., Panda, D.K. (2022). Hy-Fi: Hybrid Five-Dimensional Parallel DNN Training on High-Performance GPU Clusters. In: Varbanescu, AL., Bhatele, A., Luszczek, P., Marc, B. (eds) High Performance Computing. ISC High Performance 2022. Lecture Notes in Computer Science, vol 13289. Springer, Cham. https://doi.org/10.1007/978-3-031-07312-0_6

DOI: https://doi.org/10.1007/978-3-031-07312-0_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-07311-3

Online ISBN: 978-3-031-07312-0

eBook Packages: Computer Science, Computer Science (R0)