Abstract

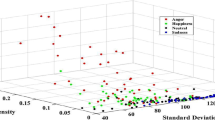

This paper investigates basic prosodic features of speech (duration, pitch/F0 and intensity) for the analysis and classification of Ibibio (New Benue Congo, Nigeria) emotions at the suprasegmental (sentence, word, and syllable) level. We begin by proposing generic hypotheses/baselines, drawn from a body of research documented over the years on emotion effects relative to neutral speech in Western languages, and adopt the circumplex model for the effective representation of emotions. Our methodology uses a standard approach to speech processing and exploits machine learning for the classification of seven emotions (anger, fear, joy, normal, pride, sadness, and surprise) obtained from male and female speakers. Analysis of feature-emotion correlates reveals that syllable (duration) units yield the least standard deviation across the selected emotions for both genders, compared to other units. There also appears to be consistency for word and syllable units, as both genders show the same duration correlate patterns. For F0 and intensity features, our findings agree with the literature: high activation effects tend to produce higher F0 and intensity values than low activation effects, while neutral and low activation effects produce the lowest pitch/F0 and intensity values (for both genders). A classification of the emotions yields interesting results: classification accuracies and errors improved markedly in emotion-F0 and emotion-intensity classification for the support vector machine (SVM) and decision tree (DT) classifiers, but the highest classification accuracies were produced by the three classifiers at the sentence unit/level for the fear emotion, with the k-nearest neighbour (k-NN) classifier leading (DT: 90%, SVM: 90%, k-NN: 92.40%).
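As a rough illustration of the k-NN classification step mentioned above, the following minimal sketch classifies an utterance from a three-value prosodic feature vector (duration, mean F0, mean intensity). It is not the authors' implementation: the feature values are invented placeholders rather than measurements from the study, the names `TRAIN`, `zscore_params`, `scale` and `knn_predict` are hypothetical, and the choices of Euclidean distance, z-score scaling and k = 3 are assumptions, since the abstract does not state them.

```python
import math
from collections import Counter

# Hypothetical toy samples: (duration_s, mean_F0_Hz, mean_intensity_dB) -> emotion.
# These numbers are invented placeholders, NOT data from the study.
TRAIN = [
    ((1.10, 260.0, 74.0), "anger"),
    ((1.05, 250.0, 73.0), "anger"),
    ((1.20, 255.0, 75.0), "anger"),
    ((1.90, 150.0, 58.0), "sadness"),
    ((2.00, 145.0, 57.0), "sadness"),
    ((1.85, 155.0, 59.0), "sadness"),
]


def zscore_params(rows):
    """Return per-feature means and (population) standard deviations."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [
        math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0  # avoid divide-by-zero
        for c, m in zip(cols, means)
    ]
    return means, stds


def scale(vec, means, stds):
    """Z-score a feature vector so seconds, Hz and dB are comparable."""
    return tuple((x - m) / s for x, m, s in zip(vec, means, stds))


def knn_predict(train, query, k=3):
    """Majority vote among the k training samples nearest to the query."""
    means, stds = zscore_params([features for features, _ in train])
    q = scale(query, means, stds)
    # Assumed metric: Euclidean distance in the scaled feature space.
    dists = sorted(
        (math.dist(scale(features, means, stds), q), label)
        for features, label in train
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


print(knn_predict(TRAIN, (1.15, 258.0, 74.5)))  # anger
```

Scaling matters here because F0 in hertz would otherwise dominate duration in seconds when distances are computed; whether the original study normalised its features is not stated in the abstract.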

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Ekpenyong, M.E., Ananga, A.J., Udoh, E.O., Umoh, N.M. (2022). Speech Prosody Extraction for Ibibio Emotions Analysis and Classification. In: Vetulani, Z., Paroubek, P., Kubis, M. (eds) Human Language Technology. Challenges for Computer Science and Linguistics. LTC 2019. Lecture Notes in Computer Science, vol 13212. Springer, Cham. https://doi.org/10.1007/978-3-031-05328-3_13

DOI: https://doi.org/10.1007/978-3-031-05328-3_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-05327-6

Online ISBN: 978-3-031-05328-3

eBook Packages: Computer Science, Computer Science (R0)