Abstract

Can recent developments in deep, artificial neural networks (ANNs), machine speed, and Big Data revolutionize scientific discovery across many fields? Sections 5.1 and 5.2 investigate the claim that deep learning fueled by Big Data is providing a methodological revolution across the sciences, one that overturns traditional methodologies. Sections 5.3 and 5.4 address the challenges of alien reasoning and the black-box problem for these novel computational methods. Section 5.5 briefly considers several responses to the problem of achieving “explainable AI” (XAI). Section 5.6 summarizes my present position. If we are at a major turning point in scientific research, it is not the one initially advertised. The alleged revolution is also self-limiting in important ways.

Notes

- 1.

In this paper the term ‘discovery’ names a traditional topic area in philosophy of science. It is not, for me, an achievement term meaning the disclosure of a final truth about the universe. I prefer the generic term ‘innovation’ as noncommittal to strong scientific realism. (I am a selective realist/nonrealist.) It is also a term that recognizes that there are many varieties of techno-scientific innovation besides empirical discoveries and the invention of new theories.

- 2.

See, e.g., Koza (1992, ch. 1). Although the successes of machines such as AlphaZero are remarkable in this regard, we must still be alert for hype. It took a tremendous amount of work to configure and tune its architecture in order to achieve the dramatic results.

- 3.

Think of evolutionary computation, say, in the form of genetic algorithms (Koza, 1992 and successor volumes), or, more recently, the use of genetic algorithms to evolve scientific models from big data, partly to overcome human cognitive limitations, e.g., the “Genetically Evolving Models in Science” (GEMS) project at the London School of Economics (Gobet, 2020). Better, think of the “endless forms most beautiful” (Darwin, 1859) that biological evolution, as a counterintuitive, exploratory process, has produced. (I don’t mean this as an exact analogy. The rough idea is that the instances of a biological species genetically incorporate the phylogenetic wisdom of long, multi-generational evolutionary processes, while a machine trained on many examples, whether historical human examples or self-generated ones, comes, ontogenetically, to embody much wisdom, e.g., as to how to play chess or to translate French into Chinese.) An open, exploratory process may not be very efficient; but, in the long run, it is more likely to produce genuinely novel results than one that is humanly goal-directed.

- 4.

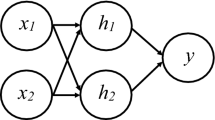

Hyperparameters are those values that set the structure of a given ANN before training, that classify the type of machine or “model” it is, e.g., the number of layers of which kinds (input, convolutional, output, etc.), the parameters that govern a specific convolutional configuration, unit biases, number of training epochs, and so on. This is as opposed to the momentary values of the activation functions of individual units or input arrays (for example).
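The contrast drawn in this note can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `Hyperparameters`, its fields, and the default values are hypothetical and are not taken from any particular machine or library. The hyperparameters are frozen before any input is processed, whereas the activation of a unit is a momentary value that changes with each input.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Hyperparameters:
    """Values fixed before training (all field names are illustrative)."""
    layer_kinds: tuple = ("input", "convolutional", "output")
    kernel_size: int = 3          # governs the convolutional configuration
    training_epochs: int = 10     # how many passes over the training set


def unit_activation(weighted_input: float) -> float:
    """Momentary activation of a single unit (logistic function)."""
    return 1.0 / (1.0 + math.exp(-weighted_input))


# The configuration is set once and cannot be changed afterwards.
config = Hyperparameters()

# Activations, by contrast, take a new value for every input presented.
activations = [unit_activation(x) for x in (-2.0, 0.0, 2.0)]
print(config)
print(activations)
```

Marking the dataclass as frozen is a design choice made here only to make the "set before training" idea visible in code: an attempt to reassign `config.kernel_size` raises an error.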

- 5.

Recall that, in Skinnerian learning theory, behavior is shaped on the basis of the reward signal alone (along with information about the new state of the environment as a result of the behavior), without explicit instruction, as an ontogenetic extension of biological evolution. Biological evolution is also exploratory in this respect, as Skinner (1981) recognized. Ontogenetic learning is an evolutionary shortcut—and more flexibly adaptive. Sejnowski (2018, 149) points out the difference that an ANN has many reward signals, one for each output unit, whereas there is just one signal to the animal brain. The brain proceeds to solve the credit-assignment problem.

- 6.

Roman Frigg (in discussion) raises the interesting question who or what should get credit for a new discovery or problem solution made principally by a machine. My answer, to a first approximation: Presumably, the machine and everyone associated with designing and building it, as in today’s movie credits, when even the person who brings the ham sandwiches for lunch gets a mention. But that does not answer the question of order: first author, last author, etc.

- 7.

White-box models such as linear regression, decision trees, and traditional production systems lack the predictive power of the new ANNs, and they do not scale well to more complex problems. ‘Black box’ and ‘white box’ are, of course, two extreme points on a continuum.

- 8.

For discussion of biases and other limitations, see the website AINow.org, Marcus and Davis (2019), Brooks (2019), Nickles (2018a), Broussard (2018), Eubanks (2018), Noble (2018), Knight (2017, 2020), Somers (2017), Sweeney (2017), Wachter-Boettcher (2017), Weinberger (2014, 2017), Zenil (2017), Arbesman (2016), Lynch (2016), O’Neil (2016), Nguyen et al. (2015), Pasquale (2015), and Szegedy et al. (2014). Worse, insofar as opaque machines shape our understanding of the phenomena or systems to which they are applied, the latter themselves become opaque (Pasquale, 2015; Ippoliti & Chen, 2017).

- 9.

Both generalization within a task domain, beyond the training set, and transfer of training to other domains are challenges. These are not the same. To be fair, there has been some recent progress on both fronts. For transfer learning see Krohn (2020, 188f, 251f). See also the Gillies paper (Chap. 4, this volume) on the failure of current deep learning to handle background knowledge.

- 10.

The generation of large databases, including training sets, can be very expensive. And the dollar and energy cost of deep learning machines is increasing according to a Moore-like exponential law. As in many domains, increasing performance only a few percent (e.g., in error reduction of translation programs) may require an exponentially large increase in computational power and expense. Here is a recent statement by Jerome Pesenti, head of the AI division of Facebook:

When you scale deep learning, it tends to behave better and to be able to solve a broader task in a better way. So, there’s an advantage to scaling. But clearly the rate of progress is not sustainable. If you look at top experiments, each year the cost is going up 10-fold. Right now, an experiment might be in seven figures, but it’s not going to go to nine or ten figures, it’s not possible, nobody can afford that.

It means that at some point we’re going to hit the wall. In many ways we already have. Not every area has reached the limit of scaling, but in most places, we’re getting to a point where we really need to think in terms of optimization, in terms of cost benefit, and we really need to look at how we get most out of the compute [sic] we have. This is the world we are going into (Knight 2019).

See also Knight (2020) and Thompson et al. (2020) on the computational limits of deep learning.
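The arithmetic behind the quoted statement can be made explicit with a short sketch. It assumes, purely for illustration, that "seven figures" means exactly $1,000,000 and that the 10-fold annual growth holds steadily; the function name `years_until` is hypothetical.

```python
def years_until(cost_now: int, target: int, growth_per_year: int = 10) -> int:
    """Count the years until a cost growing by a fixed factor reaches a target."""
    years = 0
    cost = cost_now
    while cost < target:
        cost *= growth_per_year
        years += 1
    return years


seven_figures = 1_000_000       # assumed starting cost of a top experiment
nine_figures = 100_000_000
ten_figures = 1_000_000_000

print(years_until(seven_figures, nine_figures))  # 2
print(years_until(seven_figures, ten_figures))   # 3
```

On these assumptions, the nine- and ten-figure budgets that the statement calls unaffordable would be reached within two to three years, which is why the growth rate is described as unsustainable.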

- 11.

Human beings apply common sense in real time, employing intuitive physics as well as local knowledge, whereas serious attempts to program common sense, such as Douglas Lenat’s Cyc project, based on traditional symbolic entries, make processing ever slower as more verities are added. While Cyc is an old approach, hand-crafted in the traditional way, the Paul Allen Institute in Seattle is now taking a new, crowd-sourced, machine-learning approach to common sense.

- 12.

Of course, we hardly understand how we do it either. This has been called “Polanyi’s paradox”: many of the things we do most easily, in both thought and action, are the product of “tacit” skills that we are unable to articulate. As several experts have pointed out, computers can best adults on intelligence tests and games of intelligence such as chess but cannot compete with the perception, flexibility, and learning capabilities of a one-year-old. See Walsh (2018, 136).

- 13.

At the time, this was a reasonable starting point for attempting to mechanize problem solving as the core process of scientific discovery. I am not claiming that Newell and Simon thought human intelligence was the gold standard. Recall that Simon’s Nobel Prize was for his economics work on our “bounded rationality.” For discussion of ways in which current work surpasses Simon and other developments, see Gillies and Gillies (Chap. 4, this volume) and Gonzalez (2017).

- 14.

Today’s ANNs are pretty domain-specific in their architectures and in the tuning of hyperparameters but not in the sense of containing much substantive domain-specific knowledge. Sometimes little or none is needed. More generally, it remains a problem of how ANNs can build on extant domain knowledge. Again, see Gillies and Gillies (Chap. 4, this volume) on background knowledge.

- 15.

See Gillies (1996) on the resolution principle and Prolog.

- 16.

Dorigo (2017). Thanks to Mat Trachok for the reference. In a later, 1000-game match, a later version of AlphaZero again crushed (the then-latest version of) Stockfish.

- 17.

- 18.

Does Davidson (1974) on conceptual schemes deny that we can meaningfully attribute radically alien intelligence to other entities (intelligence without intelligibility to us)? I join those who reject this idea as anthropocentric, and as containing a whiff of verificationism.

- 19.

Dennett applies “a strange inversion of reasoning” to both Darwinian evolution and Turing machines. Animals operate on a “need to know” basis, guided by “free-floating rationales” programmed in transgenerationally by evolution. On this view, rational behavior need not be backed by explicit reasoning at any level of description, nor by the possession of language. Nonhuman animals cannot explain to us why they behave as they do. Rejecting “mind first,” Dennett (1995, chs. 1, 3; 2017) implies that we also must reject “method first.” Successful methods are inductive outcomes of research, not available to us a priori. Methods are cranes, not skyhooks. Dennett reworks the above-mentioned material in Dennett (2017). See also Mercier and Sperber (2017) and Buckner (2019).

- 20.

Expert behavior produced by deep learning machines could scarcely have been imagined twenty years ago. The AlphaZero machines do not start with any human expert or domain knowledge at all, yet they achieve championship level through self-play in only three days.

- 21.

We must be careful not to spread the extension of ‘alien’ so widely that most of our own behavior is alien to us (see Wilson 2004). Although the human brain is also a highly distributed system that we understand only dimly, we do have the aforementioned evidence concerning our huge differences from machines regarding general intelligence as a basis for claiming that they operate in a way much different from us. When it comes to understanding each other, however, especially within the same culture, we rely on our high-level, belief-desire model of behavior (say, in the form of Dennett’s intentional systems model), a “theory of mind” that we can fairly reliably attribute to others. The result is that we can black box micro-biological implementation levels with much cognitive, real-time gain. Still, that many of our ordinary human epistemic activities are alien to ourselves has always been a main source of “the discovery problem.” It generalizes to the problem of understanding expert scientific practice.

- 22.

On the other hand, some AI experts are attempting to make progress by reverse-engineering the human visual system, which, like other animal sensory systems, is far more computationally intensive than automated logical reasoning is.

- 23.

Explainability or interpretability or transparency in the use of machine models is itself a complex topic that I must gloss over here. There are several different kinds of opacity, some harder to handle than others, some more relevant than others to the epistemic or moral justification questions we need to ask. (The issue becomes broadly “rhetorical” in the sense that we seek an appropriate match between what the machine can “tell” us and what questions we, the audience, need answered.) For discussion, see, e.g., Lipton (2018), Humphreys (2004, 2009), Winsberg (2010), Sullivan (2019), and Creel (2020).

- 24.

I am here talking about substantive, domain-specific models such as the Hodgkin-Huxley model of neuron action-potential transmission. In a different sense, deep learning experts often describe the specific architectures of their machines as models.

- 25.

In some cases, it is possible to simulate much of the data used to train a machine.

- 26.

See DARPA and Gunning (2016).

- 27.

- 28.

See, e.g., Tishby and Zaslavsky (2015) and Kindermans et al. (2017). While achieving a general, abstract-theoretical understanding would be a major breakthrough, there remains the question of how this could be applied to particular cases such as helping Maria understand why, specifically, her application to medical school was denied. (This question again underscores that there are distinct black-box issues.) There are still other approaches not mentioned above. Consider the idea of reverse-engineering generative image classifiers, for example, to determine which features are most significant in the machine’s categorization decisions (as in the code-audit approach). For example, when such a machine is asked to generate pictures of dogs, the length and shape of the ears turn out to be particularly important to today’s machines’ ability to discriminate dog breeds. One can also look for patterns in adversarial examples in the hope of better understanding the major processing pathways, much as we study visual illusions to determine what heuristic shortcuts our visual system is making.

- 29.

- 30.

For references and a critique of transhumanist approaches as challenging our humanity, see Schneider (2019).

- 31.

I mention also the use of fast, simple statistical algorithms, from Paul Meehl (1954) to Gerd Gigerenzer today. Success here, e.g., in rapid medical diagnosis, has led philosophers such as J. D. Trout to reject the quest for understanding insofar as it is subjective and cannot serve as a proxy for reliability. Others such as Henk de Regt defend the importance of understanding as a scientific goal (Trout, 2002; Bishop & Trout, 2005; de Regt et al., 2009; de Regt, 2017). The “fast and frugal” approach goes in the opposite direction from today’s ANNs, with their thousands of variables, instead employing just two or three principal factors. For example, Gigerenzer and Todd (1999) include several examples of such algorithms. Their aim is to contribute to “ecological rationality,” based on the sets of heuristics that animals, including people, can use in real-time behavioral decisions. In several applications, these quick algorithms produce better results than more complex ones and better than human experts. One application of this sort of approach to deep learning might be a “code audit,” a near-final stage that reports only the few variables that played major roles in the final classification. While the examples provided by Gigerenzer’s team are impressive, they offer critics little hope that they can replace anything close to the full range of black-box machines. Moreover, although their simplicity makes them easier to understand and use, these approaches themselves exhibit the black-box problem rather than solving it. For we will still lack the intuitive causal understanding that scientists have traditionally sought, as well as heuristic guidance as to how to further develop our models (but see Nickles, 2018a, b).

- 32.

Descartes once observed that, the more science teaches us about how human sensory systems work, the more skeptical we become about the knowledge of the world they seem to impart to us.

References

Anderson, C. (2008). The end of theory: The data deluge makes the scientific method obsolete. Wired, 16, 106–129.

Arbesman, S. (2016). Overcomplicated: Technology at the limits of comprehension. Penguin/Random House.

Barber, G. (2019). Artificial Intelligence confronts a ‘reproducibility’ crisis. Wired, online, September 16. Accessed 16 Sept 2019.

Batterman, R. (2002). The Devil in the details: Asymptotic reasoning in explanation, reduction, and emergence. Oxford University Press.

Batterman, R., & Rice, C. (2014). Minimal model explanations. Philosophy of Science, 81(2), 349–376.

Bengio, Y., et al. (2019). A meta-transfer objective for learning to disentangle causal mechanisms. arXiv:1901.10912v2 [cs.LG], 4 February.

Bishop, M., & Trout, J. D. (2005). Epistemology and the psychology of human judgment. Oxford University Press.

Brockman, J. (Ed.). (2015). What to think about machines that think. Harper.

Brooks, R. (2019). Forai-steps-toward-super-intelligence. https://rodneybrooks.com/2018/07/. Accessed 30 Sept 2019.

Broussard, M. (2018). Artificial unintelligence: How computers misunderstand the world. MIT Press.

Buckner, C. (2018). Empiricism without magic: Transformational abstraction in deep convolutional neural networks. Synthese (online), September 24, 2018.

Buckner, C. (2019). Rational inference: The lowest bounds. Philosophy and Phenomenological Research, 98(3), 697–724.

Buckner, C., & Garson, J. (2018). Connectionism and post-connectionist models. In M. Sprevak & M. Colombo (Eds.), The Routledge handbook of the computational mind (pp. 76–90). Routledge.

Burrell, J. (2016). How the machine ‘thinks’: Understanding opacity in machine learning algorithms. Big Data & Society, January–June, 1–12.

Creel, K. (2020). Transparency in complex computational systems. Philosophy of Science. Online 30 April 2020. https://doi.org/10.1086/709729

DARPA, & Gunning, D. (2016). Explainable Artificial Intelligence (XAI). https://www.darpa.mil/attachments/XAIIndustryDay_Final.pptx. Accessed 8 Dec 2019.

Daston, L. (2015). Simon and the sirens: A Commentary. Isis, 106(3), 669–676.

Davidson, D. (1974). On the very idea of a conceptual scheme. Proceedings and Addresses of the American Philosophical Association, 47, 5–20. Reprinted in Davidson, D., Inquiries into truth and interpretation (pp. 183–198). Oxford University Press.

de Regt, H. (2017). Understanding scientific understanding. Oxford University Press.

de Regt, H., Leonelli, S., & Eigner, K. (Eds.). (2009). Scientific understanding: Philosophical perspectives. University of Pittsburgh Press.

Dennett, D. (1971). Intentional systems. Journal of Philosophy, 68(4), 87–106.

Dennett, D. (1995). Darwin’s dangerous idea. Simon & Schuster.

Dennett, D. (2017). From bacteria to Bach and back: The evolution of minds. Norton.

Dewey, J. (1929). The quest for certainty. Putnam.

Dick, S. (2011). AfterMath: The work of proof in the age of human-machine collaboration. Isis, 102(3), 494–505.

Dick, S. (2015). Of models and machines. Isis, 106(3), 623–634.

Domingos, P. (2015). The master algorithm: How the search for the ultimate learning machine will remake our world. Basic Books.

Dorigo, T. (2017). Alpha Zero teaches itself chess 4 hours, then beats dad. Science 2.0. https://www.science20.com/tommaso_dorigo/alpha_zero_teaches_itself_chess_4_hours_then_beats_dad-229007. Accessed 25 Aug 2019.

Erickson, P., et al. (2013). How reason almost lost its mind. University of Chicago Press.

EU GDPR. (2016). Recital 71. http://www.privacy-regulation.eu/en/recital-71-GDPR.htm. Accessed 10 Dec 2019.

Eubanks, V. (2018). Automating inequality: How high-tech tools profile, police, and punish the poor. St. Martin’s Press.

Finkelstein, G. (2013). Emil du Bois-Reymond: Neuroscience, self, and society in nineteenth-century Germany. MIT Press.

Fritchman, D. (2019). Why analog lost. http://Medium.com. Accessed 30 Nov 2019.

Gigerenzer, G., & Todd, P. (Eds.). (1999). Simple heuristics that make us smart. Oxford University Press.

Gillies, D. (1996). Artificial Intelligence and scientific method. Oxford University Press.

Gillies, D., & Sudbury, A. (2013). Should causal models always be Markovian? The case of multi-causal forks in medicine. European Journal for Philosophy of Science, 3(3), 275–308.

Glymour, C., & Cooper, G. (Eds.). (1999). Computation, causation, & discovery. MIT Press.

Gobet, F. (2020). Genetically evolving models in science (GEMS). Research program at the London School of Economics. http://www.lse.ac.uk/cpnss/research/genetically-evolving-models-in-science. Accessed 26 July 2020.

Gonzalez, W. J. (2017). From intelligence to rationality of minds and machines in contemporary society: The sciences of design and the role of information. Minds & Machines, 27(3), 397–424.

Humphreys, P. (2004). Extending ourselves: Computational Science, empiricism, and scientific method. Oxford University Press.

Humphreys, P. (2009). The philosophical novelty of computer simulation methods. Synthese, 169(3), 615–626.

Ippoliti, E., & Chen, P. (Eds.). (2017). Methods and finance: A unifying view on finance, mathematics and philosophy. Springer.

Kindermans, P.-J., et al. (2017). Learning how to explain neural networks: PatternNet and PatternAttribution. ArXiv:1705.05598v2. 24 October. Accessed 28 Dec 2019.

Knight, W. (2017). The dark secret at the heart of AI: No one really knows how the most advanced algorithms do what they do. MIT Technology Review, 11 April 2017, 55–63.

Knight, W. (2019). Facebook’s head of AI says the field will soon ‘hit the wall’. Wired, December 4. Accessed 10 Dec 2019.

Knight, W. (2020). Prepare for Artificial Intelligence to produce less wizardry. Wired, July 11. Accessed 26 July 2020.

Koza, J. (1992). Genetic programming: On the programming of computers by means of natural selection [the first volume of a series]. The MIT Press.

Kozyrkov, C. (2018). Explainable AI won’t deliver. Here’s why. Hackernoon.com, September 16. https://hackernoon.com/explainable-ai-wont-deliver-here-s-why-6738f54216be. Accessed 19 Nov 2019.

Krohn, J. (with Beyleveld, G., & Bassens, A.). (2020). Deep learning illustrated. Addison-Wesley.

Langley, P., Simon, H., Bradshaw, G., & Zytkow, J. (1987). Scientific discovery: Computational explorations of the creative process. The MIT Press.

Laudan, L. (1981). Science and hypothesis. Reidel.

Lipton, Z. (2018). The mythos of model interpretability. Queue (May–June), 1–27. https://dl.acm.org/doi/pdf/10.1145/3236386.3241340. Accessed 2 Aug 2020. Original 2016 version: arXiv:1606.03490.

Lynch, M. (2016). The Internet of us: Knowing more and understanding less in the age of big data. Liveright/W. W. Norton.

Marcus, G., & Davis, E. (2019). Rebooting AI: Building artificial intelligence we can trust. Pantheon.

Meehl, P. (1954). Clinical versus statistical prediction: A theoretical analysis and a review of the evidence. University of Minnesota Press.

Mercier, H., & Sperber, D. (2017). The enigma of reason. Harvard University Press.

Mitchell, M., Wu, S., Zaldivar, A., Barnes, P., Vasserman, L., Hutchinson, B., Spitzer, E., Raji, I. D., & Gebru, T. (2019). Model cards for model reporting. In FAT* ’19: Proceedings of the Conference on Fairness, Accountability, and Transparency, 29 January 2019 (pp. 220–229). https://doi.org/10.1145/3287560.3287596

Mnih, V., et al. (2015). Human-level control through deep reinforcement learning. Nature, 518, 529–533.

Newell, A., & Simon, H. (1972). Human problem solving. Prentice-Hall.

Nguyen, A., Yosinski, J., & Clune, J. (2015). Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. In 2015 IEEE conference on computer vision and pattern recognition (CVPR), pp. 427–436.

Nickles, T. (2018a). Alien reasoning: Is a major change in scientific research underway? Topoi. Published online, 22 March. https://doi.org/10.1007/s11245-018-9557-1

Nickles, T. (2018b). TTT: A fast heuristic to new theories? In D. Danks & E. Ippoliti (Eds.), Building theories: Hypotheses and heuristics in science (pp. 169–189). Springer.

Nielsen, M. (2012). Reinventing discovery: The new era of networked science. Princeton University Press.

Noble, S. (2018). Algorithms of oppression: How search engines reinforce racism. NYU Press.

O’Neil, C. (2016). Weapons of math destruction: How big data increases inequality and threatens democracy. Crown.

Pasquale, F. (2015). The black box society: The secret algorithms that control money and information. Harvard University Press.

Pearl, J. (2000). Causality: Models, reasoning, and inference. Cambridge University Press.

Pearl, J., & Mackenzie, D. (2018). The book of why: The new science of cause and effect. Basic Books.

Pearl, J., Glymour, M., & Jewell, N. P. (2016). Causal inference in statistics: A primer. Wiley.

Polanyi, M. (1966). The tacit dimension. Doubleday.

Russell, S. (2019). Human compatible: Artificial Intelligence and the problem of control. Viking.

Schneider, S. (2019). Artificial you: AI and the future of your mind. Princeton University Press.

Sejnowski, T. (2018). The deep learning revolution. The MIT Press.

Silver, D., Hassabis, D., et al. (2017). Mastering chess and shogi by self-play with a general reinforcement learning algorithm. arxiv.org/pdf/1712.01815.pdf. Accessed 28 Aug 2019.

Szegedy, C., et al. (2014). Intriguing properties of neural networks. arxiv.org/pdf/1312.6199.pdf. Accessed 5 Feb 2018.

Skinner, B. F. (1981). Selection by consequences. Science, 213, 501–504.

Somers, J. (2017). Is AI riding a one-trick pony? MIT Technology Review, September 29.

Spirtes, P., Glymour, C., & Scheines, R. (2000). Causation, prediction, and search (2nd ed.). The MIT Press.

Strevens, M. (2017). The whole story: Explanatory autonomy and convergent evolution. In D. Kaplan (Ed.), Explanation and integration in mind and brain science. Oxford University Press.

Strogatz, S. (2018). One giant step for a chess-playing machine: The stunning success of AlphaZero: A deep-learning algorithm, heralds a new age of insight — One that, for humans, may not last long. New York Times, December 26.

Sullivan, E. (2019). Understanding from machine learning models. British Journal for the Philosophy of Science, online. https://doi.org/10.1093/bjps/axz035. Accessed 21 Sept 2019.

Sweeney, P. (2017). Deep learning, alien knowledge and other UFOs. http://medium.com/inventing-intelligent-machines/machine-learning-alien-knowledge-and-other-ufos-1a44c66508d1. Accessed 18 Nov 2017.

Thompson, N., Greenwald, K., Lee, K., & Manso, G. (2020). The computational limits of deep learning. arxiv.org/pdf/2007.05558.pdf. Accessed 16 July 2020.

Tian, Y., et al. (2019). ELF OpenGo: An analysis and open reimplementation of AlphaZero. arxiv.org/pdf/1902.04522.pdf

Tishby, N., & Zaslavsky, N. (2015). Deep learning and the information bottleneck principle. arxiv.org/pdf/1503.02406.pdf. Accessed 5 Feb 2018.

Trout, J. D. (2002). Scientific explanation and the sense of understanding. Philosophy of Science, 69(2), 212–233.

Turing, A. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460.

Ullman, T., Spelke, E., Battaglia, P., & Tenenbaum, J. (2017). Mind games: Game engines as an architecture for intuitive physics. Trends in Cognitive Sciences, 21(9), 649–665.

Wachter-Boettcher, S. (2017). Technically wrong: Sexist apps, biased algorithms, and other threats of toxic tech. Norton.

Walsh, T. (2018). Machines that think: The future of artificial intelligence. Prometheus Books.

Weinberger, D. (2014). Too big to know: Rethinking knowledge. Basic Books.

Weinberger, D. (2017). Alien knowledge: When machines justify knowledge. Wired, April 18. Accessed 17 Mar 2018.

Wilson, T. (2004). Strangers to ourselves: Discovering the adaptive unconscious. Harvard University Press.

Winsberg, E. (2010). Science in the age of computer simulation. University of Chicago Press.

Woodward, J. (2003). Making things happen: A theory of causal explanation. Oxford University Press.

Zenil, H., et al. (2017). What are the main criticisms and limitations of deep learning? https://www.quora.com/What-are-the-main-criticsms-and-limitations-of-deep-learning. Accessed 5 Feb 2018.

Acknowledgements

I am honored to contribute to these 25th anniversary Jornadas. My thanks to Wenceslao J. Gonzalez and his staff for organizing the conference under difficult (Covid-19 pandemic) conditions. I am also grateful for helpful comments from my fellow participants, Roman Frigg and (especially) Donald and Marco Gillies. See their chapters in this volume.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Nickles, T. (2022). Whatever Happened to the Logic of Discovery? From Transparent Logic to Alien Reasoning. In: Gonzalez, W.J. (eds) Current Trends in Philosophy of Science. Synthese Library, vol 462. Springer, Cham. https://doi.org/10.1007/978-3-031-01315-7_5

DOI: https://doi.org/10.1007/978-3-031-01315-7_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-01314-0

Online ISBN: 978-3-031-01315-7

eBook Packages: Religion and Philosophy; Philosophy and Religion (R0)