Abstract

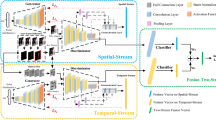

Predicting high-quality images that depend on past images and external events remains a challenge in computer vision. Prior approaches address this problem, but their architectures are complex, unstable, or difficult to train. This paper presents an action-conditioned network based on the Introspective Variational Autoencoder (IntroVAE) with a simple design that predicts high-quality samples. The proposed architecture combines features of Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs): its encoding and decoding layers self-evaluate the quality of predicted frames, so no extra discriminator network is needed. Experimental results on two data sets show that the proposed architecture can be applied to both small and large images, and that the predicted samples are comparable to those of state-of-the-art GAN-based networks.
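To illustrate how an encoder can judge sample quality without a separate discriminator, the following is a minimal sketch of the adversarial terms in the general IntroVAE formulation, where the KL divergence of the encoded latent acts as the "real versus generated" score. It is not the authors' implementation: the function names, the margin value, and the latent statistics are hypothetical, and the reconstruction term, loss weights, and action conditioning are omitted.

```python
import math


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) for a single latent vector."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def encoder_adversarial_loss(kl_real, kl_generated, margin):
    """Encoder term: keep KL low for real frames and push the KL of
    generated frames above `margin` (hinge), so the encoder separates them."""
    hinge = sum(max(0.0, margin - kl) for kl in kl_generated)
    return kl_real + hinge


def generator_adversarial_loss(kl_generated):
    """Generator term: make generated frames look real to the encoder,
    i.e. drive their KL towards zero."""
    return sum(kl_generated)


# Hypothetical latent statistics (assumed values, for illustration only).
kl_real = kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])
kl_fake = kl_to_standard_normal([2.0, -1.0], [0.0, 0.0])

enc_loss = encoder_adversarial_loss(kl_real, [kl_fake], margin=4.0)
gen_loss = generator_adversarial_loss([kl_fake])
print(kl_real, kl_fake, enc_loss, gen_loss)
```

The encoder and the generator pull the KL of generated frames in opposite directions, which is the adversarial game a GAN would normally play with an extra discriminator network.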

Notes

1. Other activation functions were also tested, specifically LeakyReLU and Tanh (for the last layer of the generator). However, the results did not improve, and the computational load increased significantly.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Valencia, D., Williams, H., MacDonald, B., Qiao, T. (2022). Action-Conditioned Frame Prediction Without Discriminator. In: Nicosia, G., et al. Machine Learning, Optimization, and Data Science. LOD 2021. Lecture Notes in Computer Science, vol 13163. Springer, Cham. https://doi.org/10.1007/978-3-030-95467-3_24

DOI: https://doi.org/10.1007/978-3-030-95467-3_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-95466-6

Online ISBN: 978-3-030-95467-3

eBook Packages: Computer Science, Computer Science (R0)