Abstract

The safety, proper maintenance, and renovation of tunnel structures have become a critical problem for urban management as tunnels age. Tunnel inspection and inventory are regulated by construction laws and must be carried out at regular intervals. Advances in digitalization and machine vision technologies enable the development of an automated, BIM-based system for collecting data from tunnel surfaces. This study introduces a vision-based tunnel inspection system and the related principles for measuring tunnel surfaces efficiently. In addition, the main components and the requirements for the subsystems are presented, and challenges in data acquisition and point cloud generation are explained based on findings from initial experiments.

1 Introduction

The inventory and monitoring of transport structures, such as tunnel and bridge constructions, are essential to improve the mobility in urban areas. Tunnels are mostly underground constructions which serve to pass under obstacles such as mountains or hills, waterways, or other traffic routes. In cities, they protect residents from road or rail traffic noise. Mobility systems and infrastructure and their consequences for the people living in a city are a key topic of the iCity project, to which this paper contributes with the development of a system for 3D tunnel inspection.

Tunnel inspection includes monitoring and mapping of the tunnel surfaces to generate 3D models and to detect anomalies, as well as localizing tunnel objects, e.g., technical equipment, for building information modeling (BIM) applications. This results in a significant total workload and makes it necessary to develop efficient, intelligent, and digital solutions. Traditionally, tunnel inspection relies on local visual inspection and the use of adapted devices, which usually require on-site installation and interaction with the surface of the tunnel. Manual measurements by operators are not only time-consuming and cumbersome but also require quite expensive equipment and many working hours. Moreover, the tunnel usually has to be closed for several hours or even days to reduce the risk to operators.

To improve the automation level and efficiency of inspection systems, advanced and intelligent technologies are necessary. The current approaches can be divided into two main groups, namely, laser-based and image-based techniques. Laser scanners are among the state-of-the-art sensors for 3D mapping and can be deployed on terrestrial stations or mobile mapping vehicles to accurately capture dense point cloud data of the tunnel surface (Chen et al. 2015; Wang et al. 2014). However, a mobile system with a typical laser scanner is very cost-intensive and even today costs well over $50,000 (O’Neill 2020).

With recent advances in multi-view image processing and high-speed image capturing techniques, a practical and inexpensive solution for automatic tunnel inspections with image-based systems has become feasible. This technique involves a set of industrial cameras installed in a specific ring to cover the entire area of the tunnel, as well as light sources to provide sufficient illumination for image capturing. However, there are distinct hardware components and data processing challenges and considerations that directly affect the accuracy of the final results. This study aims to address those challenges and to investigate optimal solutions to develop a cost-effective system for the automated and BIM-compatible inspection of tunnels.

2 Related Work

BIM technology is widely used to represent the digital, model-based process of planning, designing, constructing, operating, and managing infrastructure such as buildings, roads, bridges, and tunnels over the entire life cycle of a project (Ehrbar et al. 2019; Fargnoli et al. 2019). The 3D information and geodata of underground structures collected during tunnel inspection and maintenance can support BIM systems (Chen et al. 2019; Yin et al. 2020).

With the advances in photogrammetric and computer vision algorithms, the interest in developing automatic and inexpensive approaches for tunnel inspection is rapidly increasing. In an early study, Gavilán et al. (2013) developed a mobile inspection system that includes six linear cameras and six laser-based projectors for tunnel surveys at speeds of up to 30 km/h and with a depth accuracy of 0.5 mm. Similarly, Zhan et al. (2015) presented an inspection system that includes seven linear cameras and structured-light projectors and can operate at a speed of about 60 km/h. One challenge of tunnel inspection is to detect damage with mm to sub-mm accuracy. To this end, Stent et al. (2015) proposed a low-cost system to inspect 0.3 mm cracks using two consumer-grade digital cameras installed on a robotic rotating arm. One of the advanced mapping systems for GNSS-denied areas, proposed by Chapman et al. (2016), includes an array of 16 cameras and light sources to monitor roadway tunnels. They reported an average positioning error of about 0.34 m. For subway tunnel inspection, Huang et al. (2017) developed a small vision-based system including line-scan cameras and light sources. The inspection equipment is designed to achieve a resolution of 0.3 mm/pixel at a speed of 5 km/h. In another study, Attard et al. (2018) proposed an inspection system that includes one consumer-grade digital camera and light sources to capture high-resolution images of tunnel walls. This system achieves an average accuracy of 81.4% for change detection. However, it needs a robotic arm and special installations on the walls to move through the tunnel, which is not practical for road tunnels. To monitor defects such as cracks in roadway tunnels, Jiang et al. (2019) developed a high-speed system including line-scan cameras and near-infrared (NIR) illumination that can capture images at speeds of up to 100 km/h.

Following the existing approaches, an image-based inspection system for tunnel mapping consists of different hardware and software components, which should be selected and assembled based on the project requirements such as the speed of monitoring, the tunnel conditions, the total cost, the total size and weight of the system, the type of carrying vehicle, and the final deliverables.

3 Proposed Method

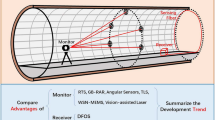

This study aims to examine the main parameters and challenges of a cost-effective mobile system for roadway tunnel inspection. The intended operating speed of the proposed system is about 60–65 km/h, which is suitable for traffic flows and fast monitoring of long tunnels. The main components are machine vision (MV) cameras, light sources, a synchronization unit, and a mobile platform, as shown in Fig. 13.1. The outputs are raw RGB images of the entire tunnel area, which can be further processed to generate colored 3D point clouds, damage detection results, and object recognition maps. The project should strive for a relative accuracy of about 2 cm for 3D models and a surface resolution of about 3–5 mm for images.

3.1 Camera Selection

The performance of the camera directly affects the accuracy of the final results of tunnel inspection. The main features of a camera are listed in Table 13.1. According to previous studies, two different types of cameras can be used for image capturing, i.e., digital cameras and machine vision (MV) cameras. While digital cameras are cheaper and can acquire high-resolution images, the scanning speed must remain low because their exposure time (ET) cannot be made short enough to avoid motion blur. MV cameras, in contrast, are available as line-scan and area-scan sensors. Line-scan cameras are the best option for high-speed processing or fast-moving applications. However, image acquisition with line-scan cameras generally requires continuous recording of the rotational and translational movements, e.g., by an inertial measurement unit. In addition, perspective distortion can be expected because of the limited field of view (FOV) of linear sensors (Attard et al. 2018; Teledyne Dalsa 2014). Line-scan cameras also usually need high-power illumination, unlike area-scan cameras (Attard et al. 2018; Huang et al. 2017). Area-scan cameras, on the other hand, have a standard interface and are easier to set up and align.

For area-scan cameras, a global shutter should be used instead of a rolling shutter to reduce motion blur as well as noise in the images. With a global shutter, the whole scene is frozen at a single point in time, and there is no motion blur. Another important camera parameter is the interface, such as USB2, USB3, or GigE. Among these interfaces, USB3 is the fastest (e.g., 400 MB/s) and uses the least computer processor power. Therefore, it is ideal for high-resolution and high-speed imaging (VisionCamera 2019).

3.2 Light Selection

Light sources play a major role in vision-based inspection systems by providing enough lighting to capture blur-free images. LED (light-emitting diode) technology is widely used for lighting in machine vision applications because it can provide high performance, stability, high intensity, as well as cost-efficiency.

LEDs can be operated in three modes, i.e., continuous, switch, and flash operation. In order to scan at a speed of up to 65 km/h, the light required by the camera should be provided for a very short time interval (e.g., about 10 microseconds) to ensure that there is no motion blur in the images. This is only possible using flash LEDs. Ideally, the selected LED should be able to produce strobe pulses with a pulse width that corresponds to the camera exposure time (Eq. 13.6). Other important features of an LED are the flash rate and the working distance. Recent LED technologies can provide flash strobe rates of more than 50 kHz. However, the normal working distances of LEDs are less than 1 m, which is too short for many tunnels. Therefore, the irradiance of the selected LED should be sufficient to provide powerful light at larger distances of several meters (e.g., 3–8 m working distances). The relation between the irradiance (E) and working distance (R) is given in Eq. (13.1).

where Eo is the irradiance of the LED at the distance of Ro, provided by the manufacturer.
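As a rough illustration of how quickly irradiance drops over tunnel-scale working distances, the following sketch assumes that Eq. (13.1) has the usual inverse-square form for a point-like source, E = Eo (Ro / R)^2. The function name and the sample values are hypothetical and are not taken from any LED data sheet.

```python
def irradiance_at(distance_m: float, e_ref: float, r_ref_m: float) -> float:
    """Irradiance at distance_m, assuming inverse-square falloff.

    e_ref is the irradiance specified at the reference distance r_ref_m
    (Eo and Ro in the text). The inverse-square form is an assumption and
    only approximates real LED modules with lenses or reflectors.
    """
    if distance_m <= 0 or r_ref_m <= 0:
        raise ValueError("distances must be positive")
    return e_ref * (r_ref_m / distance_m) ** 2


# Hypothetical example: 100 units specified at 1 m, evaluated at 3 m and 8 m.
for r in (3.0, 8.0):
    print(f"R = {r} m -> E = {irradiance_at(r, 100.0, 1.0):.2f}")
```

Under this assumption, moving from a 1 m to an 8 m working distance reduces the available irradiance by a factor of 64, which is why high-power LEDs are needed.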

3.3 System Design

One of the crucial parameters for the total costs of the final system is the number of cameras required for covering an entire ring of the tunnel. The number of cameras is also defined by the desired overlap of the images within a ring. Although the overlap between two consecutive images along the moving direction (i.e., the horizontal overlap) should be 80% or more for the image alignment and stereo matching algorithms, the overlap between two adjacent cameras (i.e., the vertical overlap) can be less than 50%, e.g., only 10% as illustrated in Fig. 13.2. Since most tunnels can be passed in two opposite directions, five cameras are sufficient in our case to scan each side of the tunnel during one pass (Fig. 13.2). In this case, the angle between the camera axes of adjacent cameras (α) is 45 degrees and the vertical overlap is determined by Eq. (13.2).

Half of the vertical overlap applies to each of the two neighboring images. FOVw is the field of view, corresponding to the sensor’s width w, given by Eq. (13.3).
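The two relations can be sketched numerically as follows. The field of view uses the standard pinhole relation FOVw = 2 arctan(w / (2f)), with sensor width w and focal length f in mm. The vertical overlap is assumed here to be the angular share (FOVw - α) / FOVw, which is a simplification that ignores the tunnel cross-section and may differ from the exact form of Eq. (13.2). The sensor width and focal length in the example are hypothetical.

```python
import math


def fov_deg(sensor_mm: float, focal_mm: float) -> float:
    """Field of view in degrees for one sensor dimension (pinhole model)."""
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_mm)))


def vertical_overlap_fraction(fov_w_deg: float, alpha_deg: float) -> float:
    """Angular overlap between adjacent cameras as a fraction of the FOV.

    Assumed form: (FOVw - alpha) / FOVw. A negative value means there is
    a gap between the two neighboring images.
    """
    return (fov_w_deg - alpha_deg) / fov_w_deg


# Hypothetical sensor width of 11.3 mm behind a 12 mm lens, alpha = 45 degrees.
fov_w = fov_deg(11.3, 12.0)
print(f"FOVw = {fov_w:.1f} deg, "
      f"overlap = {vertical_overlap_fraction(fov_w, 45.0):.1%}")
```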

The sensor size is given by w and h in mm. The parameter f is the camera’s focal length. The horizontal overlap between two consecutive images depends on the speed of the vehicle (V), the capturing rate (FPS), and the working distance (D) and is given by Eq. (13.4).
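A minimal sketch of this relation, assuming a pinhole camera whose sensor height h is aligned with the driving direction: the image footprint on the tunnel surface is then D·h / f, the distance driven between two frames is V / FPS, and the horizontal overlap is 1 - (V / FPS) / (D·h / f). This form is an assumption and may differ in detail from Eq. (13.4). The function names and example values are hypothetical.

```python
def horizontal_overlap(speed_kmh: float, fps: float, distance_m: float,
                       sensor_h_mm: float, focal_mm: float) -> float:
    """Overlap fraction between two consecutive images along the driving axis."""
    speed_ms = speed_kmh / 3.6                          # km/h -> m/s
    footprint_m = distance_m * sensor_h_mm / focal_mm   # image length on the wall
    baseline_m = speed_ms / fps                         # distance driven per frame
    return 1.0 - baseline_m / footprint_m


def min_fps_for_overlap(speed_kmh: float, distance_m: float, sensor_h_mm: float,
                        focal_mm: float, target_overlap: float) -> float:
    """Smallest capture rate that still reaches target_overlap (0 <= value < 1)."""
    footprint_m = distance_m * sensor_h_mm / focal_mm
    return (speed_kmh / 3.6) / ((1.0 - target_overlap) * footprint_m)


# Hypothetical setup: 65 km/h, 5 m working distance, 7.1 mm sensor height, 12 mm lens.
print(f"FPS needed for 80% overlap: "
      f"{min_fps_for_overlap(65.0, 5.0, 7.1, 12.0, 0.8):.1f}")
```

The sketch shows the trade-off directly: shorter working distances shrink the footprint, so the same overlap requires a higher capture rate.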

To calculate the required capturing rate for each camera (FPS), the bandwidth of the USB3 interface should be considered, as shown in Eq. (13.5).

where W and H are the sensor sizes in pixels and the BPP is bytes per pixel which is 1.5 for a 12-bit RGB image. Another important parameter for designing the proposed system is the exposure time of the camera which is related to the motion blur (B) as well as the LED’s pulse width. In our design, the allowed amount of motion blur in an image should not exceed 0.2 pixels. A blurring above this threshold can affect the 3D model and final results. Based on the speed of the vehicle (V), and the size of the pixel (PS), the required exposure time (ET) can be calculated according to Eq. (13.6).
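Both quantities can be estimated with a few lines of code. The sketch assumes that Eq. (13.5) bounds the capture rate by FPS = bandwidth / (W·H·BPP) and that Eq. (13.6) has the form ET = B·PS / V, with PS interpreted as the footprint of one pixel on the tunnel surface. Both forms, the function names, and the example sensor resolution are assumptions made for illustration.

```python
def max_fps(bandwidth_bytes_per_s: float, width_px: int, height_px: int,
            bytes_per_pixel: float) -> float:
    """Upper bound on the capture rate imposed by the camera interface."""
    return bandwidth_bytes_per_s / (width_px * height_px * bytes_per_pixel)


def exposure_time_s(blur_px: float, pixel_size_m: float, speed_kmh: float) -> float:
    """Longest exposure that keeps motion blur at or below blur_px pixels.

    pixel_size_m is assumed to be the size of one pixel projected onto
    the tunnel surface, not the physical pixel pitch of the sensor.
    """
    return blur_px * pixel_size_m / (speed_kmh / 3.6)


# Hypothetical 2448 x 2048 sensor on a 400 MB/s link, 12-bit data (BPP = 1.5).
print(f"max FPS: {max_fps(400e6, 2448, 2048, 1.5):.1f}")
# 0.2 px blur budget, 3 mm surface resolution, 65 km/h.
print(f"exposure: {exposure_time_s(0.2, 0.003, 65.0) * 1e6:.1f} microseconds")
```

Exposure times in the range of tens of microseconds are far too short for ambient light, which motivates the strobed high-power LEDs described in Sect. 3.2.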

3.4 Time Synchronization

In tunnel inspection using multiple cameras and multiple LEDs, a vital task is synchronizing the light system and the image acquisition system. The synchronization parameters are the trigger rate and the pulse width of the trigger signal, which are calculated for the primary camera. The trigger rate is equal to the required acquisition rate for each camera and is calculated by Eq. (13.5). The pulse width of the signal is chosen so that it corresponds to the required exposure time of the camera (see Eq. 13.6). As shown in Fig. 13.1, at the present project stage, a custom-built synchronization unit is used to connect the cameras and LEDs via their internal input and output pins. As shown in Fig. 13.3, a dark image is captured at a short exposure time (e.g., 50 microseconds) if the LED and camera are not properly synchronized.
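The two synchronization parameters can be collected and sanity-checked in code before a trigger unit is configured. The sketch below is hypothetical and does not describe the synchronization unit used in the project: `TriggerSignal` and `make_trigger` are illustrative names, and the checks only encode two plausible constraints, namely that the LED can flash at the trigger rate and that the pulse is shorter than the trigger period.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriggerSignal:
    rate_hz: float        # trigger rate, equal to the camera capture rate
    pulse_width_s: float  # pulse width, equal to the camera exposure time

    @property
    def period_s(self) -> float:
        return 1.0 / self.rate_hz

    @property
    def duty_cycle(self) -> float:
        return self.pulse_width_s * self.rate_hz


def make_trigger(fps: float, exposure_s: float,
                 led_max_rate_hz: float) -> TriggerSignal:
    """Build a trigger signal and reject physically inconsistent settings."""
    if fps <= 0 or exposure_s <= 0:
        raise ValueError("fps and exposure_s must be positive")
    if fps > led_max_rate_hz:
        raise ValueError("LED cannot flash at the requested trigger rate")
    if exposure_s >= 1.0 / fps:
        raise ValueError("pulse width must be shorter than the trigger period")
    return TriggerSignal(rate_hz=fps, pulse_width_s=exposure_s)


# Hypothetical values: 50 frames per second, 20 microsecond pulses, 50 kHz LED.
signal = make_trigger(50.0, 20e-6, 50e3)
print(f"period = {signal.period_s * 1e3:.1f} ms, duty cycle = {signal.duty_cycle:.4%}")
```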

3.5 Tunnel Conditions

The system is to be developed for roadway tunnels of different shapes, such as circular or rectangular tunnels. The working distance is 3–8 m, and the light level in the tunnel is low. Therefore, high-power LEDs are selected to provide sufficient illumination even in complete darkness. Moreover, interference from extraneous light is suppressed by the short exposure time of the camera (see Eq. 13.6). The system can be installed on a normal vehicle such as a kombi van (Volkswagen 2020), and the intended operating speed of the vehicle is about 60–65 km/h, which is suitable for traffic flows and fast monitoring of long tunnels with minimal motion blur in the final images.

3.6 Image Processing Challenges

The generation of point clouds from tunnel images poses challenges that are not found in many other close-range or UAV-based applications. Typical difficulties are poorly textured environments, low lighting, noisy images, and little vertical overlap between the images. In addition, a large number of images is captured in a long tunnel, and the limited computing capacity often prevents all images from being processed in one go. To address these issues, different scenarios are proposed in this study.

The first scenario is to coarsely estimate the external orientations (EOs) of the cameras in a local auxiliary coordinate system and use them as initial values for the image alignment algorithm. The EOs can be calculated based on the tunnel dimensions as well as the camera’s installation angles in relation to the auxiliary coordinate system, as illustrated in Fig. 13.4. Figure 13.5 shows the first experiment with a test block of 1600 images. The alignment algorithm benefits from the coarsely determined EOs, and the semi-global matching algorithm produces a more complete point cloud.
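One way such coarse initial values could be generated is sketched below. It is not the procedure used in the experiments: it assumes a straight tunnel, a constant vehicle speed, an auxiliary coordinate system with the x-axis along the tunnel, and cameras that share one mounting point and differ only by their installation angle about the x-axis. All names and example values are hypothetical.

```python
import math


def coarse_eos(n_frames: int, speed_kmh: float, fps: float,
               roll_angles_deg: list[float],
               mount_yz: tuple[float, float] = (0.0, 0.0)) -> list[dict]:
    """Coarse exterior orientations for every camera and frame.

    Returns one record per (frame, camera) with an approximate projection
    center and a unit viewing direction in the auxiliary system.
    """
    step_m = (speed_kmh / 3.6) / fps  # distance driven between two frames
    records = []
    for frame in range(n_frames):
        x = frame * step_m
        for cam, roll in enumerate(roll_angles_deg):
            theta = math.radians(roll)
            records.append({
                "frame": frame,
                "camera": cam,
                "position": (x, mount_yz[0], mount_yz[1]),
                # Viewing direction rotated about the tunnel (x) axis.
                "view_dir": (0.0, math.cos(theta), math.sin(theta)),
            })
    return records


# Five cameras spaced by 45 degrees, as in the ring described in Sect. 3.3.
eos = coarse_eos(n_frames=3, speed_kmh=65.0, fps=30.0,
                 roll_angles_deg=[0.0, 45.0, 90.0, 135.0, 180.0])
print(len(eos), eos[1]["view_dir"])
```

Values of this kind only need to be accurate enough to steer the image alignment toward the correct solution; the bundle adjustment refines them afterwards.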

The second scenario is to process the images of a long tunnel by dividing the image set into several overlapping image blocks, generating a point cloud for each block, and then fusing the point clouds using a point-based registration technique. The experimental result for this scenario is shown in Fig. 13.6. The mean error and standard deviation of the differences between the fused point cloud and the original point cloud are 0.07 m and 0.08 m, respectively. The merging of the point clouds of the three blocks worked well as long as the overlap between the blocks did not fall below 20% of the length of one of the blocks.
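The block division itself is simple to sketch. The following example splits a sequence of image indices into blocks that overlap by at least a given percentage of the block length; the subsequent point cloud generation and point-based registration are not shown. The function name, the block size, and the default of 20% are illustrative assumptions based on the threshold reported above.

```python
def split_into_blocks(n_images: int, block_size: int,
                      overlap_percent: int = 20) -> list[range]:
    """Split image indices 0..n_images-1 into overlapping blocks.

    Consecutive blocks share at least overlap_percent of block_size images.
    The last block may be shorter than block_size.
    """
    if block_size <= 0 or not 0 <= overlap_percent < 100:
        raise ValueError("invalid block size or overlap")
    if n_images <= 0:
        return []
    overlap = -(-block_size * overlap_percent // 100)  # integer ceiling
    step = block_size - overlap
    if step <= 0:
        raise ValueError("overlap leaves no room to advance")
    blocks = []
    start = 0
    while True:
        end = min(start + block_size, n_images)
        blocks.append(range(start, end))
        if end == n_images:
            break
        start += step
    return blocks


# Hypothetical split of a 1600-image sequence into blocks of 600 images.
for block in split_into_blocks(1600, 600):
    print(block.start, block.stop)
```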

4 Conclusion

This article discusses the most important components of a multi-camera system for tunnel inspection, including cameras, LEDs, and the synchronization unit. Each component should be selected based on the project requirements for ground pixel resolution, point cloud density and accuracy, the speed of scanning, the tunnel dimensions, and the total costs, so that the performance and automation of the final system are ensured. Therefore, different possible options for each component are discussed, and the best possible solution for designing and developing the inspection system is proposed, as shown in Table 13.2. Supported by initial experimental tests, image processing challenges are addressed in order to generate 3D models of the tunnel. In the future, we will report on the assembly of the system with Metaphase LEDs, Gardasoft controllers, and Grasshopper cameras and on our experiments with data recording in tunnels and data processing for object recognition and damage detection using deep learning algorithms.

References

Attard, L., Debono, C. J., Valentino, G., & Di Castro, M. (2018). Vision-based change detection for inspection of tunnel liners. Automation in Construction, 91, 142–154. https://doi.org/10.1016/j.autcon.2018.03.020

Chapman, M. A., Min, C., & Zhang, D. (2016). Continuous mapping of tunnel walls in a GNSS-denied environment. In International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences - ISPRS Archives (Vol. 41, pp. 481–485). https://doi.org/10.5194/isprsarchives-XLI-B3-481-2016

Chen, H. M., Ulianov, C., & Shaltout, R. (2015). 3D laser scanning technique for the inspection and monitoring of railway tunnels. In RailNewcastle conference 2015 (pp. 73–84). https://doi.org/10.21307/tp-2015-063

Chen, L., Lu, S., & Zhao, Q. (2019). Research on BIM-Based highway tunnel design, construction and maintenance management platform. In IOP Conference Series: Earth and Environmental Science (Vol. 218). https://doi.org/10.1088/1755-1315/218/1/012124

Ehrbar, H., Braun, M., Fentzlof, W., Franz, S., Rahm, T., Scheffer, M., & Weiner, T. (2019). Digital Design, Building and Operation of Underground Structures, BIM in Tunnelling. Cologne: Deutscher Ausschuss für unterirdisches Bauen e. V. (DAUB).

Fargnoli, M., Lleshaj, A., Lombardi, M., Sciarretta, N., & Di Gravio, G. (2019). A BIM-based PSS approach for the management of maintenance operations of building equipment. Buildings, 9(6). https://doi.org/10.3390/buildings9060139

Gavilán, M., Sánchez, F., Ramos, J. A., & Marcos, O. (2013). Mobile Inspection System for High-Resolution Assessment of Tunnels. In The 6th International Conference on Structural Health Monitoring of Intelligent Infrastructure. Hong Kong.

Huang, H., Sun, Y., Xue, Y., & Wang, F. (2017). Inspection equipment study for subway tunnel defects by grey-scale image processing. Advanced Engineering Informatics, 32, 188–201. https://doi.org/10.1016/j.aei.2017.03.003

Jiang, Y., Zhang, X., & Taniguchi, T. (2019). Quantitative condition inspection and assessment of tunnel lining. Automation in Construction, 102, 258–269. https://doi.org/10.1016/j.autcon.2019.03.001

O’Neill, B. (2020). Terrestrial laser scanners (TLS): guide and product selection. Retrieved from https://www.aniwaa.com/buyers-guide/3d-scanners/terrestrial-laser-scanners-long-range/

Stent, S. A. I., Girerd, C., Long, P. J. G., & Cipolla, R. (2015). A low-cost robotic system for the efficient visual inspection of tunnels. 32nd International Symposium on Automation and Robotics in Construction and Mining: Connected to the Future, Proceedings. https://doi.org/10.22260/isarc2015/0070

Teledyne Dalsa. (2014). Understanding Line Scan Camera Applications. Retrieved from https://www.inspect-online.com/file/track/7757/1

VisionCamera. (2019). HOW TO SELECT A MACHINE VISION CAMERA INTERFACE (USB3 / GIGE / 5GIGE / 10GIGE VISION)? Retrieved from https://www.vision-camera.nl/How-to-select-a-machine-vision-camera-interface-USB3-GigE-5GigE-10GigE-Vision

Volkswagen. (2020). The Transporter 6.1 Kombi. Retrieved from https://www.volkswagen-nutzfahrzeuge.de/de/modelle

Wang, W., Zhao, W., Huang, L., Vimarlund, V., & Wang, Z. (2014). Applications of terrestrial laser scanning for tunnels: a review. Journal of Traffic and Transportation Engineering (English Edition), 1(5), 325–337. https://doi.org/10.1016/S2095-7564(15)30279-8

Yin, X., Liu, H., Chen, Y., Wang, Y., & Al-Hussein, M. (2020). A BIM-based framework for operation and maintenance of utility tunnels. Tunnelling and Underground Space Technology, 97. https://doi.org/10.1016/j.tust.2019.103252

Zhan, D., Yu, L., Xiao, J., & Chen, T. (2015). Multi-camera and structured-light vision system (MSVS) for dynamic high-accuracy 3D measurements of railway tunnels. Sensors (Switzerland), 15(4), 8664–8684. https://doi.org/10.3390/s150408664

Acknowledgments

This research is funded by the Federal Ministry for Economic Affairs and Energy (BMWi) as part of the ZiM project ABOUT. The project partner is Viscan Solutions GmbH.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Alidoost, F., Austen, G., Hahn, M. (2022). A Multi-camera Mobile System for Tunnel Inspection. In: Coors, V., Pietruschka, D., Zeitler, B. (eds) iCity. Transformative Research for the Livable, Intelligent, and Sustainable City. Springer, Cham. https://doi.org/10.1007/978-3-030-92096-8_13

DOI: https://doi.org/10.1007/978-3-030-92096-8_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92095-1

Online ISBN: 978-3-030-92096-8

eBook Packages: Economics and Finance (R0)