Abstract

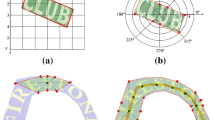

Scene text detection has drawn close attention from researchers. Although many methods have been proposed for horizontal and oriented text, they may not perform well on arbitrary-shaped text such as curved text. In particular, a confusion problem arises when text instances lie close together. In this paper, we propose a simple yet effective method for accurate detection of arbitrary-shaped nearby scene text. First, a One-to-Many Training Scheme (OMTS) is designed to eliminate this confusion and enable proposals to learn from more appropriate ground truths when text instances are nearby. Second, we propose a Proposal Feature Attention Module (PFAM) to exploit more effective features for each proposal, which allows better adaptation to arbitrary-shaped text instances. Finally, we propose a baseline that is based on Faster R-CNN and outputs the curve representation directly. Equipped with PFAM and OMTS, the detector achieves state-of-the-art or competitive performance on several challenging benchmarks.
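
The abstract does not specify how OMTS pairs proposals with ground truths, so the following is only a generic sketch of the one-to-many idea, not the authors' scheme. It assumes axis-aligned boxes, IoU-based matching, and the hypothetical names `box_iou` and `assign_one_to_many`; the threshold `iou_thresh` and the cap `k` are illustrative choices.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), axis-aligned (assumption)


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def assign_one_to_many(
    proposals: List[Box],
    gts: List[Box],
    iou_thresh: float = 0.5,  # illustrative value, not taken from the paper
    k: int = 2,               # illustrative cap on targets per proposal
) -> List[List[int]]:
    """For each proposal, return up to k ground-truth indices whose IoU
    with the proposal is at least iou_thresh, best match first."""
    assignments = []
    for p in proposals:
        scored = [(box_iou(p, g), j) for j, g in enumerate(gts)]
        kept = sorted((s for s in scored if s[0] >= iou_thresh), key=lambda s: -s[0])
        assignments.append([j for _, j in kept[:k]])
    return assignments


# One proposal overlapping two nearby ground truths and missing a distant one.
proposals = [(0.0, 0.0, 10.0, 10.0)]
gts = [(0.0, 0.0, 10.0, 10.0), (0.0, 1.0, 10.0, 11.0), (50.0, 50.0, 60.0, 60.0)]
print(assign_one_to_many(proposals, gts))  # [[0, 1]]
```

With `k=1` the same call reduces to a conventional one-to-one assignment, which is the setting where a proposal between two nearby instances must commit to a single target.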

Supported by the Open Research Project of the State Key Laboratory of Media Convergence and Communication, Communication University of China, China (No. SKLMCC2020KF004), the Beijing Municipal Science & Technology Commission (Z191100007119002), the Key Research Program of Frontier Sciences, CAS (Grant No. ZDBS-LY-7024), and the National Natural Science Foundation of China (No. 62006221).

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Guo, Y., Zhou, Y., Qin, X., Wang, W. (2021). Which and Where to Focus: A Simple yet Accurate Framework for Arbitrary-Shaped Nearby Text Detection in Scene Images. In: Farkaš, I., Masulli, P., Otte, S., Wermter, S. (eds) Artificial Neural Networks and Machine Learning – ICANN 2021. ICANN 2021. Lecture Notes in Computer Science, vol 12895. Springer, Cham. https://doi.org/10.1007/978-3-030-86383-8_22

DOI: https://doi.org/10.1007/978-3-030-86383-8_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-86382-1

Online ISBN: 978-3-030-86383-8

eBook Packages: Computer Science, Computer Science (R0)