Abstract

Road safety analysis can be used to understand what has been successful in the past, what needs to change in order to reduce severe road trauma going forward, and ultimately what is needed to achieve zero. This chapter covers some of the tools used to retrospectively evaluate the real-life benefits of road safety measures, as well as methods used to predict the combined effects of interventions in a road safety action plan and to estimate whether they are sufficient to achieve near-term and long-term targets. Also included is a brief overview of methods to develop boundary conditions on what constitutes a Safe System for different road users. In addition, the chapter lists some arguments for the need for high-quality mass and in-depth data to ensure confidence in the results and conclusions from road safety analysis. Finally, a few key messages are summarized.

Introduction: Why Is Road Safety Analysis Necessary?

Road safety analysis is an area of profound importance in Vision Zero planning and implementation. It has commonly been used to understand the real-life benefits of road safety measures, to guide future implementation of interventions, and to facilitate the development of action plans and strategies. In the context of Vision Zero, road safety analysis more specifically includes gaining detailed insights into crash and injury mechanisms, investigating boundary conditions for what constitutes a safe system, and building confidence in innovative solutions by setting up quality management systems and evaluation frameworks. Going forward, road safety analysis is essential to understand future trauma residuals and what it might take to ultimately eliminate fatalities and serious injuries. Road safety analysis is also an important ingredient in target management, as it can be used to inform what constitutes ambitious but achievable long-term and near-term targets, both in terms of trauma targets and targets for system transformation, namely Safety Performance Indicators (SPIs).

In summary, road safety analysis can be used to understand what has been successful in the past, what needs to change in order to reduce severe road trauma going forward, and ultimately what is needed to achieve zero.

Road safety analysis is made up of numerous elements: data on crashes and injuries, information on the system state such as road assets, vehicle and driver characteristics, statistical methods and models, in-depth investigations, and other analytical tools. As with all analytics, the quality of the outputs and the confidence in the results are products of the input data, the approach used when generating hypotheses, and the methods adopted when testing them. Over the past decades, road safety analysis has benefited from increased data quality and coverage as well as new and improved methods for evaluation, forecasting, and scenario development.

This chapter will cover some of the tools used to retrospectively evaluate the real-life benefits of road safety countermeasures, as well as methods used to predict the combined effects of interventions in a road safety action plan and to estimate whether they are sufficient to achieve near-term and long-term targets. Also included is a brief overview of methods to develop boundary conditions on what constitutes a Safe System for different road users. In addition, the chapter will list some arguments for the need for high-quality mass and in-depth data to ensure confidence in the results and conclusions from road safety analysis. Finally, a few key messages are summarized.

Retrospective Analysis

Real-Life Evaluation of Road Safety Countermeasures

With the Vision Zero approach it is imperative to constantly evaluate implemented countermeasures in real-life conditions, thus providing valuable feedback to the designers of the road transport system. The basic idea is to compare two different crash populations:

- The treated population, that is, one involving the countermeasure to be evaluated
- The untreated population, that is, one without the countermeasure

An example could be the evaluation of newly installed median barriers on a road. In its simplest form, the analysis would compare the number of crashes occurring on the rebuilt road with median barriers with the number of crashes on the same road before it was rebuilt. Such an approach is normally called a before-and-after study. In other words, the treated and untreated crash populations come from the same road in different time periods. However, such a simple approach would not handle possible confounders. For instance, it is conceivable that during the studied period there may be seasonal or even long-term variation in traffic volumes, or other changes in driving patterns due to weather, roadworks, or increased police enforcement, that would reasonably affect the overall crash rates on the analyzed road. To handle this issue, it is recommended to use the “before-and-after” approach with at least one so-called control group, that is, an untreated crash population from another road during the same time period. Several control groups can also be used, as done by Transport for London (2007).
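A before-and-after comparison with one control group can be sketched as a ratio of ratios. The following is a minimal illustration only: the function name and the crash counts are hypothetical and not taken from any study, and it assumes that the control road captures the general trend that would also have affected the treated road.

```python
def before_after_with_control(treated_before, treated_after,
                              control_before, control_after):
    """Estimate the relative crash reduction on the treated road.

    The change on the treated road is divided by the change on the
    control road, so that general trends (traffic volume, weather,
    enforcement) shared by both roads cancel out.
    """
    if min(treated_before, control_before, control_after) <= 0:
        raise ValueError("before counts and control counts must be positive")
    treated_ratio = treated_after / treated_before
    control_ratio = control_after / control_before
    return 1.0 - treated_ratio / control_ratio


# Hypothetical counts: crashes fell from 40 to 20 on the treated road,
# and from 100 to 80 on the control road over the same periods.
reduction = before_after_with_control(40, 20, 100, 80)
print(f"Estimated reduction: {reduction:.1%}")  # prints: Estimated reduction: 37.5%
```

Without the control group the naive estimate would be a 50% reduction; part of that change is attributed here to the general trend seen on the control road.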

Even more advanced approaches can be used, for example empirical Bayes (EB) methods, which also account for abnormal crash rates in short study periods by shrinking such estimates toward the mean, depending on the amount of data available. It should be noted, however, that EB methods require more advanced statistical tools. While explaining such tools goes beyond the aim of this chapter, further details can be found in Hauer (1997), Elvik (2013), and OECD/ITF (2018).
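The shrinkage idea can be illustrated with a minimal sketch. It assumes a simple Gamma-Poisson model, in which the crash rate of comparable sites has a known mean and variance; this is one textbook formulation chosen for illustration, not necessarily the one used in the references above, and all names and numbers are hypothetical.

```python
def empirical_bayes_rate(observed_crashes, periods, prior_mean, prior_variance):
    """Shrink an observed crash rate toward the mean of comparable sites.

    Assumes crashes per period are Poisson distributed and that the
    underlying rate varies between sites as a Gamma distribution with
    the given mean and variance. The weight on the prior mean decreases
    as more periods of data become available.
    """
    if periods <= 0 or prior_mean <= 0 or prior_variance <= 0:
        raise ValueError("periods, prior_mean and prior_variance must be positive")
    weight = 1.0 / (1.0 + periods * prior_variance / prior_mean)
    observed_rate = observed_crashes / periods
    return weight * prior_mean + (1.0 - weight) * observed_rate


# Hypothetical site: 12 crashes in 3 years (4 per year), while comparable
# sites average 2 crashes per year with a variance of 1.
estimate = empirical_bayes_rate(12, 3, prior_mean=2.0, prior_variance=1.0)
print(f"EB estimate: {estimate:.2f} crashes per year")
```

In this example the estimate falls between the observed rate of 4 and the reference mean of 2, which is the intended protection against an abnormally high count in a short study period.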

Regardless of study design, it is important to understand that it is possible to perform real-life evaluations with limited data, as long as the data have a sufficient degree of detail and are analyzed with robust methods. A few recommendations are outlined below.

The first critical step in evaluating a countermeasure is matching the treated and untreated crash populations. Ideally, these should be as similar as possible and differ only in the variable under study. However, this may not always be possible, and it may therefore be necessary to make assumptions or simplifications. With regard to optional vehicle safety technologies, for instance, it would be preferable to compare the same car models with and without the technology.

The second critical step is to obtain the exposure. Indirect methods are often used, that is, the exposure is derived from the actual crash data (i.e., induced exposure). With this approach, the key point is to identify at least one crash type or situation in which the countermeasure under analysis can be reasonably assumed (or known) not to be effective. Then, the relation between crashes with and without the countermeasure in a non-affected situation would be considered as the true exposure relation. For further reading, please see Evans (1998), Lie et al. (2006), or Strandroth et al. (2012). While sometimes it may be possible to use data based on real exposure (Teoh 2013; HLDI 2013), this may be difficult to obtain and could also include confounding factors. The most obvious advantage of indirect methods is that the analysis can be performed based on crash data only, without any need of other sources. Secondly, the issue of confounding factors may be easier to handle. To elaborate further, an example regarding the evaluation of optional autonomous emergency braking (AEB) on passenger cars is illustrated below.

As long as AEB is not standard equipment in all cars on the roads, it could be argued that drivers choosing AEB are probably more concerned about their safety in the first place, which could naturally lead to a lower crash involvement (i.e., selective recruitment). Further differences between the crash populations could also confound the results, for instance age, gender, and use of protective equipment. If crash rates are calculated based on real exposure (i.e., number of crashes divided by number of registered vehicles, or by vehicle mileage), it is essential to control for possible confounders, for instance driver age or seat belt use rate, as done in Teoh (2011). However, adopting an induced exposure approach would normally address this issue, as the result is given by the relative differences within the AEB and non-AEB crash populations. Even though a variable is known to affect the overall crash or injury risk (say driver age), the same variable can only confound the induced exposure results if it deviates from the overall sensitive/nonsensitive ratio. If this is found to be the case, the treated crash population can be stratified into different subgroups for further analysis. The induced exposure calculations can be adjusted for confounders, as suggested by Schlesselman (1982), for instance by calculating the weighted average of the individual odds ratios.
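The induced exposure calculation and its stratified adjustment can be sketched as follows. This is an illustration under stated assumptions: the Mantel-Haenszel weighting is used here as one common way of forming a weighted average of stratum odds ratios and is not necessarily the exact weighting intended above, and the strata and crash counts are hypothetical.

```python
def odds_ratio(treated_sensitive, treated_nonsensitive,
               untreated_sensitive, untreated_nonsensitive):
    """Odds ratio of sensitive to nonsensitive crashes, treated vs untreated.

    Nonsensitive crashes act as the induced exposure: they are assumed
    to be unaffected by the countermeasure.
    """
    return (treated_sensitive * untreated_nonsensitive) / (
        treated_nonsensitive * untreated_sensitive)


def mantel_haenszel_odds_ratio(strata):
    """Pooled odds ratio over strata (for example driver age groups).

    Each stratum is a tuple (treated_sensitive, treated_nonsensitive,
    untreated_sensitive, untreated_nonsensitive).
    """
    numerator = 0.0
    denominator = 0.0
    for ts, tn, us, un in strata:
        total = ts + tn + us + un
        numerator += ts * un / total
        denominator += tn * us / total
    return numerator / denominator


# Hypothetical crash counts for two driver age groups.
strata = [(30, 100, 120, 200), (10, 50, 40, 100)]
pooled = mantel_haenszel_odds_ratio(strata)
print(f"Pooled odds ratio: {pooled:.2f}, effectiveness: {1 - pooled:.0%}")
```

The estimated effectiveness is one minus the odds ratio. Comparing the stratum-specific odds ratios with the pooled value gives a first indication of whether the stratifying variable confounds the result.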

Nonetheless, it is important to stress that the induced exposure approach is also based on a number of assumptions and limitations. First of all, it should be clear that the basic idea with this method is to calculate the number of crashes that should be included in the data, if the countermeasure under analysis had no effect at all. This approach may be considered as calculating the “missing” crashes in the dataset. Therefore, it is evident that a certain reduction in police reported crashes, for instance, does not necessarily mean that no crashes had occurred at all, or that no slight injuries were sustained in a minor crash that was not reported to the police.
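The notion of “missing” crashes can be made concrete with a short sketch. The function name and the counts are hypothetical, and the calculation assumes that the nonsensitive crash type is truly unaffected by the countermeasure.

```python
def missing_crashes(treated_sensitive, treated_nonsensitive,
                    untreated_sensitive, untreated_nonsensitive):
    """Return (expected, missing) sensitive crashes in the treated group.

    The expected number is what would have been observed if the
    countermeasure had no effect, that is, if the treated group showed
    the same sensitive/nonsensitive ratio as the untreated group.
    """
    if untreated_nonsensitive <= 0:
        raise ValueError("untreated_nonsensitive must be positive")
    expected = treated_nonsensitive * untreated_sensitive / untreated_nonsensitive
    return expected, expected - treated_sensitive


# Hypothetical counts: 30 sensitive and 100 nonsensitive crashes with the
# countermeasure, 120 sensitive and 200 nonsensitive crashes without it.
expected, missing = missing_crashes(30, 100, 120, 200)
print(f"Expected: {expected:.0f}, observed: 30, missing: {missing:.0f}")
```

As noted above, the missing crashes are missing from the dataset only; some of them may still have occurred with a lower severity and gone unreported.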

An attempt to address this issue, that is, distinguishing between crash avoidance and reduced crash severity, has been presented in Rizzi et al. (2015). However, it should be noted that this approach is difficult to apply to police-reported crashes, as it requires injury data with good resolution (i.e., hospital records including full diagnoses), which may not be available in all regions of the world.

It is important to stress that the most critical assumption with the induced exposure approach is the choice of the nonsensitive crash type. While the main method for selecting nonsensitive crashes is a priori analysis of in-depth studies, as done in previous research (Sferco et al. 2001), the distribution of crash types within the analyzed data may also provide insights into the non-sensitivity of certain crash types. However, it is very important that such assumptions are based on an actual hypothesis, rather than “trial and error” in the analysis steps (Lie et al. 2006). A further reflection is that evaluations of safety countermeasures based on real-life crashes may involve several factors affecting each other, that is, they may not satisfy the principle “everything else is constant.” An example is the fitment of “safety packages” on cars, where a number of safety features such as low-speed AEB, high-speed AEB, Lane Keeping Assist, and Blind Spot Detection are offered together as optional fitments. It is therefore important to keep this issue in mind in order to differentiate between explanatory variables and confounding variables. If confounders are present as variables that differ between cases and controls, their influence might be picked up by the effect variable. When selecting possible confounders, it is important that they are based on a hypothesis, and not just invented. If included without any hypothesis, they may pick up a variation that is not real. In other words, it is important to distinguish between possible correlation and causation.

Risk Factors and Boundary Conditions

Road safety research has traditionally had a significant focus on identifying risk factors, which could be explained as factors correlating with increased crash or injury risk (Stigson 2009). Certainly, in some areas of road safety it is crucial to gain insight into risk factors. The development of driver support systems is one area where an understanding of driver distraction and impairment is important when selecting treatment strategies (Tivesten 2014). However, it has been found repeatedly that severe injuries and fatalities can be prevented without deep knowledge of the specific crash causation. Median barriers, speed reduction, airbags, and restraint systems are all interventions that act independently of driver-related errors. Although they do not prevent crashes, they are nonetheless effective in preventing injuries or mitigating injury outcomes.

In designing a safe transport system there is a need for a holistic approach, and current safety policies are therefore focusing more on defining safety criteria, or boundary conditions, rather than identifying risk factors (OECD 2008). The development of risk curves is the first step in creating that holistic understanding of what would constitute a safe system.

Injury Risk Curves

Injury risk curves are another essential part of the Vision Zero approach. As mentioned in other chapters, according to Vision Zero the road transport system should be adapted to the limitations of the road users, by anticipating and allowing for human error. The primary aim is not to eliminate crashes entirely but to align the crash severity with the potential to protect from bodily harm. In order to do that, detailed knowledge of the human tolerance to blunt force is needed. Different injury risk curves have been developed for passenger car occupants, pedestrians (see, e.g., Kullgren 2008; Gabauer and Gabler 2006; Rosen and Sander 2009; Niebuhr et al. 2016), and motorcyclists (Ding et al. 2019). Other studies have also identified age as a critical factor affecting the injury outcome for a given crash severity. For instance, with regard to car-pedestrian collisions, Kullgren and Stigson (2010) reported that at 40 km/h the risk of sustaining a MAIS 3+ injury is almost twice as high for elderly pedestrians (60+) as for pedestrians in general (Fig. 1).

Injury risk curves for pedestrians hit by cars. (Source: Kullgren and Stigson (2010))

Injury risk curves can be inherently affected by different types of measurement errors, especially if based on crash reconstructions. As pointed out by Kullgren and Stigson (2010), impact speeds in crash reconstructions can include measurement errors of the order of 20%, which greatly affects the injury risk functions, especially at higher impact speeds. As shown in Fig. 2, the injury risk function becomes flatter with increasing measurement error. This implies that injury risks are underestimated at higher impact speeds, which has significant practical implications for setting safe speed limits. As illustrated below, if the threshold for acceptable risk is set at 10% based on data including 25% measurement error, the corresponding risk based on the original data would be more than twice as high.

Estimated effect of measurement errors on injury risk curves. (Source: Kullgren and Stigson (2010))

It is important to point out that it is difficult to compensate for the influence of measurement errors, or poor data quality in general, by increasing the data size. On the contrary, data sources with greater precision, for example data based on Event Data Recorders (EDR), should be used whenever possible, even if the number of available cases is limited.

Model for Safe Traffic

A natural extension of risk curves, which outline boundary conditions for specific crash configurations and road users, is to define system boundary conditions. System boundary conditions could be described as a combination of system element characteristics, such as road infrastructure, vehicles, road use, and speed, that together provide a safe system. Linnskog (2007) suggested a model (Fig. 3) where the combination of safe roads, safe vehicles, safe road use, and safe speed would produce safe traffic. For the model to be useful in different road environments, a dynamic approach was suggested where, if one element failed, it would need to be compensated for by strengthening another. A typical example would be to adapt the speed limit to the function and safety quality of the road infrastructure. In this way, a safe system can be created not only by heavy infrastructure investments but also by a conscious decision on safe and appropriate speed in combination with infrastructure investment based on a road’s strategic movement function. It is also important to note that the model criteria need constant review in relation to vehicle fleet turnover and as more advanced vehicle safety technologies enter the market.

The model for safe traffic adopted by the Swedish Transport Administration. (Adapted from Linnskog (2007))

Linnskog (2007) suggested a model for safe traffic for passenger car occupants, with the criteria being a Euro NCAP 5-star car, an iRAP 4-star road, and a road user wearing a seatbelt, being sober, and complying with the speed limit. Stigson (2009) validated this model using in-depth analysis of fatalities and serious injuries and found it to be valid with a few exceptions, such as collisions with heavy goods vehicles. Stigson (2009) also developed the model further by suggesting that some of the road user requirements, such as seatbelt wearing, speed limit compliance, and unimpaired driving, should be guaranteed by the implementation of vehicle technology rather than being road user dependent.

In a similar manner, but with more of a future focus, a model for safe traffic in 2050 is being developed and validated in Victoria (Australia), with the purpose of understanding the infrastructure requirements for achieving zero road trauma by 2050, in line with the new national long-term target of zero by 2050 (Strandroth et al. 2019). Based on the in-depth investigation of fatal crashes, the implementation of road cross-sections as outlined in Fig. 4 is investigated, in combination with a 5-star vehicle of model year 2025 with safety technologies as outlined in the Euro NCAP roadmap (Euro NCAP 2017).

Concepts of cross-sections for different road types. (Source: Strandroth et al. (2019))

Even though this example is limited to passenger cars on midblock sections of high-speed rural roads, it illustrates the value of Safe System models. These models enable a back-casting approach where a desired future state can be compared with the current system state, resulting in a gap analysis useful for future planning. By doing this, one can identify additional programs and innovation needed to achieve zero and understand how to optimize the pathway to zero.

From a Vision Zero perspective it is therefore essential to develop and validate Safe System models for all road users in all situations, as they form the basis for Vision Zero planning and implementation.

In-Depth Analysis of Crashes and Injuries

In road safety, as for other areas of epidemiology, a commonly used approach is case studies – where in-depth investigations of uncommon events are used to gain deep insight. This is one of the key prerequisites for road safety analysis with Vision Zero. Basically, macro analyses based on mass data need to be complemented with in-depth knowledge on crashes. It is also important to acknowledge that the road transport system is far from static – it is an intrinsically ever-moving entity that needs to be constantly monitored and studied. Therefore, having up-to-date in-depth studies of crashes makes it possible to follow up the current performance of the road transport system, to identify new deficiencies, as well as to test new hypotheses on future countermeasures.

A concrete example concerning median barriers is presented below. Using police-reported crashes matched with road data, Carlsson (2009) reported an 80% reduction of fatalities on newly built 2+1 roads. Clearly, this is an important result that strongly supports the further implementation of 2+1 roads as soon as possible. However, on the path toward zero it is essential to truly understand the circumstances of the remaining 20% of fatalities that were not addressed by median barriers. This could be referred to as “getting the magnifying glass and zooming in on the leakage from a treatment,” which would be a very difficult task to perform using mass data. The more effective a treatment is found to be, the more important it becomes to understand the leakage. This is where detailed knowledge through case studies can support quick action by road authorities by detecting non-conformities and supporting adjustments of existing countermeasures or even the development of new countermeasures to address them. Theoretically, even one single case involving a new non-conformity could be enough to require action on the whole road transport system. Again, it becomes evident that data quantity can never replace quality.

In-depth studies are also often used to estimate potential benefits, especially for pre-production vehicle technologies. While the analysis of in-depth cases naturally has to deal with challenges regarding subjectivity and reliability, a number of studies have shown that it is possible to minimize this issue by setting up logical decision trees and performing redundant analyses. Anti-lock Brakes, Electronic Stability Control, Autonomous Emergency Braking, Lane Keeping Assist, Barrier treatments, and Audio Tactile Line Markings are all examples of vehicle safety systems and road safety treatments whose future benefits have been assessed using a case-by-case approach (Sternlund 2017; Rizzi et al. 2009; Sferco et al. 2001; Swedish Transport Administration 2012a; Doecke et al. 2016).

Analysis of Future Safety Gaps

When aiming for a society free from serious road traffic injuries, it has been common practice in many countries and organizations to set up time-limited and quantified targets for the reduction of fatalities and injuries (OECD 2008). In setting these targets, the EU and other organizations recognize the importance of monitoring and predicting the development toward the target as well as the efficiency of road safety policies and interventions (EU 2010). Predicting the future status of the road transport system is, however, important not only with respect to target monitoring. According to Tingvall et al. (2010), it also plays an important role in the process of operational planning and in the prioritization of future actions.

Typical questions that arise as organizations, cities, regions, jurisdictions, and countries target zero are: How close to zero will our current strategies take us? What crashes and injuries will remain in the future when all the treatments in our current toolbox are implemented, and what further innovations are needed to ultimately eliminate road trauma? These are some of the questions that this chapter seeks to answer in order to facilitate Vision Zero planning.

The Challenges with Using Retrospective Accident Data

The nature of the road transport system in many regions of the world has changed rapidly over the last decade, as safety improvements in road infrastructure, vehicle fleet, and speed management have changed the characteristics of the system components. Not only has the state of the transport system changed; the characteristics of the crashes have changed as well. For instance, Sweden has had a large reduction in car occupant fatalities since the beginning of the twenty-first century; however, the reduction is most evident in head-on crashes, in contrast to single-vehicle crashes. Strandroth (2015) has shown that this reduction was the result of systematic improvements, such as the installation of median barriers on roads with high traffic volume and/or vehicle improvements like the fitment of ESC and improved crashworthiness. Hence, as the road transport system continues to evolve, it is quite reasonable to believe that the crashes of the future will differ considerably from the crashes of today and the past. This is especially so in a future where cars can drive autonomously and the consequences of driver errors may be prevented, although other challenges connected to automated vehicles may arise (Lie 2014; Eugensson et al. 2013). Micro-mobility may present similar possibilities and challenges.

Often when benefit assessments are made, retrospective data are used to describe accident scenarios that the technology is assumed to address (eValue 2011; Kuehn et al. 2009; Fach and Ockel 2009). The benefit estimations of a technology that will be introduced on new cars in a couple of years will then be based on accident data that may be several years old. Strandroth (2015) showed that the maximum benefit of a vehicle technology can be delayed by 10 or even 20 years. Hence, there can be a large time gap between the point of maximum benefit and the period in which the accident data used in the benefit assessment were collected. This can make retrospective analysis of crash data invalid when trying to predict the future impact of new or existing safety measures.

Naturally, accident data will always be intrinsically retrospective. However, the validity of the crash data needs to be controlled by taking into account the evolution of the transport system when estimating the benefits of future technologies.

Methods to estimate the future benefits of road safety interventions based on the development of a combination of countermeasures can, according to the Transportation Research Board, be classified as statistical or structural (TRB 2013). TRB recognizes statistical methods as an essential engineering tool for “formulating an initial, preliminary understanding of the relationship between variables” (TRB 2013). As a complement to statistical analysis, structural analysis has been proposed as an approach to identify why crashes occur and to explain causal relationships in road safety. A structural model is described by Davis (2004) as a model that “consist of deterministic mechanisms that draw on background knowledge concerning how the driver–vehicle–road system behaves…First, the relevant mechanisms for a specific type of crash are identified. Then, they are used to quantify the causal effect of the treatment on each mechanism. Finally, the frequencies of the mechanisms are aggregated for the facility of interest.”

Methods for prediction with a structural approach have been introduced and used in, for example, Sweden and Australia. In Sweden, a model suggested by the Institute of Transport Economics in Norway was used to forecast the number of lives saved by different road safety interventions introduced in 2007 and beyond (Swedish Road Administration 2008). This was done to facilitate the decision on an interim road safety target in Sweden. The effect of the individual interventions was calculated as the exposure multiplied by the effectiveness. The number of lives saved from all interventions was then estimated by the total sum multiplied by a factor of 0.6 to adjust for double counting (Swedish Road Administration 2008).
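The calculation described for the Swedish forecast can be sketched in a few lines. The intervention values below are hypothetical and only illustrate the arithmetic; “exposure” is taken here to mean the number of fatalities that an intervention could address, which is an assumption about the underlying model.

```python
def lives_saved(interventions, double_counting_factor=0.6):
    """Sum exposure times effectiveness, then adjust for double counting.

    `interventions` is a list of (exposure, effectiveness) pairs, where
    exposure is assumed to be the number of fatalities addressed and
    effectiveness is the share of those fatalities prevented.
    """
    unadjusted = sum(exposure * effectiveness
                     for exposure, effectiveness in interventions)
    return double_counting_factor * unadjusted


# Hypothetical interventions: 100 fatalities addressed with 20%
# effectiveness and 50 fatalities addressed with 40% effectiveness.
print(f"{lives_saved([(100, 0.2), (50, 0.4)]):.0f} lives saved")
```

The single factor of 0.6 is a blunt adjustment: it applies the same discount regardless of how much the target populations of the individual interventions actually overlap.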

In South Australia, a model was developed for the South Australian Government by Anderson and Ponte (2013) which aimed to quantify the benefit from a number of safety improvements until 2020. The model took implementation rate and time into account and related every intervention to its relevant target population. In this study, the target population was defined as the group of fatalities prevented by a specific intervention. Other external factors such as traffic growth and changes in the vehicle fleet were also taken into consideration. A model developed by Vulcan and Corben (1998) was numerically implemented by Corben et al. (2009) in Western Australia and used the same approach. The overall benefit from all interventions (I1, I2, …, In) in the Australian model was calculated as 1-(1- I1) ∙ (1- I2) ∙ … ∙ (1- In). Hence, the interventions were treated as independent.
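The combined benefit under the independence assumption follows directly from the expression above. The sketch below uses hypothetical effect sizes and compares the result with a simple sum of the individual effects.

```python
import math


def combined_benefit(effects):
    """Combined effect 1 - (1 - I1)(1 - I2)...(1 - In).

    Each element of `effects` is the proportional reduction achieved by
    one intervention, and the interventions are treated as independent.
    """
    residual = math.prod(1.0 - effect for effect in effects)
    return 1.0 - residual


# Hypothetical effects of 20% and 10%.
effects = [0.2, 0.1]
print(f"Independent combination: {combined_benefit(effects):.2f}")
print(f"Simple sum:              {sum(effects):.2f}")
```

Each intervention acts only on the fatalities left over by the others, so the combined effect is always somewhat smaller than the simple sum and can never exceed 100%.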

Correlation, Independence, Overlapping Variables, Non-Linearity, and System Effects

Although the assumption of an independent relationship could sometimes be true and applied in retrospective evaluations, it has been shown in some cases to be invalid and therefore not appropriate to describe the future. Tingvall et al. (2010) identified at least two major challenges, which are linked to the dependent relationship between different SPIs and to the nonlinearity between an increase of an SPI and the final outcome. Regarding the relationship between SPIs, earlier studies have shown some possible alternatives that are all based on the fact that SPIs do not act alone, but are rather interacting components in a complex system.

In some cases it is clear that SPIs are correlated. This is the case with seat belt use and impaired drivers, since the probability of impaired drivers being unrestrained in fatal crashes has been found to be larger than for sober drivers (Tingvall et al. 2010). Also, Nilsson (2004) found correlations between alcohol, seat belt use, and speed limit compliance in studies with self-reported data. Another possible interaction between SPIs could occur where a combination of two or more SPIs is conditional, in the sense that the effect of one factor is dependent on, or enhanced by, another factor, for example, system effects.

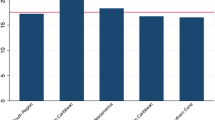

Strandroth et al. (2011) illustrated an example of system effects by showing that the injury reducing effect of more pedestrian-friendly car fronts depends on the speed limit where the pedestrians are struck by the car. In that study, hospital records were used to calculate the mean risk of impairing or fatal consequences. The results showed a significantly lower mean risk of fatal or impairing injuries for cars with a higher Euro NCAP pedestrian score. Interestingly, the risk difference in 30 km/h speed zones was 42%, while in 50 km/h speed zones the difference was 25%; and in 70 km/h no risk differences could be found (Fig. 5).

System effects illustrated by comparison of the mean risk of fatal or impairing injuries for cars with one-star and two-star Euro NCAP ratings at different speed limits. (Source: Strandroth et al. (2011))

Broughton et al. (2000) assumed an independent relationship when evaluating the past benefit of vehicle safety, interventions against drink-driving, and road engineering. The same study also based its forecast of the benefit of these interventions on the theory of independence. If the SPIs are treated as independent or simply additive without interaction, double counting becomes a risk if the populations addressed are in fact overlapping. However, if system effects are introduced with a combination of SPIs, there is a risk of underestimating the combined effect by just adding them. Elvik (2009) stated that many earlier studies have overestimated the combined effects of SPIs, and suggested a more conservative approach described as the method of dominant common residuals. In that method it is assumed that the introduction of one road safety measure makes another measure entirely ineffective.
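The difference between the independent combination and a more conservative one can be illustrated numerically. The sketch below assumes a formulation of the dominant common residuals method in which the common residual (the product of the individual residuals) is raised to the power of the residual of the most effective measure. This formula is not given in the text above and should be checked against Elvik (2009) before use; the effect sizes are hypothetical.

```python
import math


def common_residual(effects):
    """Share of casualties remaining if all measures act independently."""
    return math.prod(1.0 - effect for effect in effects)


def dominant_common_residual(effects):
    """More conservative residual (assumed formulation, see lead-in).

    The common residual is raised to the power of the residual of the
    most effective measure, which pulls the combined effect toward that
    of the dominant measure.
    """
    residuals = [1.0 - effect for effect in effects]
    return math.prod(residuals) ** min(residuals)


# Hypothetical measures reducing casualties by 40% and 30%.
effects = [0.4, 0.3]
independent = 1.0 - common_residual(effects)
conservative = 1.0 - dominant_common_residual(effects)
print(f"Independent: {independent:.3f}, dominant common residuals: {conservative:.3f}")
```

With these inputs the conservative estimate ends up only slightly above the effect of the most effective measure alone, whereas the independent combination is considerably higher.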

Another way of dealing with the combined effects is to simply sum the effects and then use a multiplying factor lower than 1 in order to compensate for the correlation (Swedish Road Administration 2008). Furthermore, even if a valid and reliable number of casualties could be foreseen, it is still just a number and is insufficient to describe the remaining problem qualitatively or to identify safety gaps.

The other challenge in predicting a final outcome from the combination of several improvements is the fact that there is not always a linear relationship between the development of an SPI in traffic and the final outcome. In-depth studies of fatal crashes combined with measurements on a whole population indicate that an increase of a safety factor among the whole population might not lead to an improvement in the final outcome. Tingvall et al. (2010) relate this to the fact that the improvement could address another part of the population than the one involved in severe crashes. Figure 6 shows an example where the increase in seat belt rate for all drivers does not increase the seat belt rate in fatal crashes.

Rate of seat belt wearing in traffic for all passenger car drivers vs. seat belt wearing for fatally injured drivers, from 1997 to 2007. (Source: Tingvall et al. (2010))

Another explanation for the nonlinearity could be the slow turnover of vehicles in a vehicle fleet, and that the distribution of vehicle mileage over vehicle age is not linear with the proportion of fatal and severe crashes. Figure 7 shows that when a cohort of cars has driven 80% of their lifetime mileage, they have only been involved in 50% of their fatal and severe crashes (STA 2012c). Hence, older cars are in general over-represented in severe crashes, and as new safety technologies penetrate the market it could take many years before the technologies reach the target population. Often this nonlinearity is ignored in benefit assessments.

Accumulated passenger car mileage and involvement in fatal and severe crashes over passenger car age. (Source: STA (2012c))

An Analytical Approach in Vision Zero Planning and Target Setting

To overcome issues with nonlinearity, double counting and invalid old crash data, Strandroth et al. (2015) suggested a new approach to understand the future impact of road safety interventions by combining knowledge from system improvements with in-depth crash data. Figure 8 gives a basic overview of the analytical approach in Vision Zero planning. Each step is described separately below; see Strandroth (2015a) for further reading.

Step 1: Outline Business as Usual System Improvements

The first three steps are about developing a baseline business as usual scenario which aims to illustrate a baseline development of fatalities and injuries. In this context, a baseline can be defined as the projection of today’s fatalities and current risk levels affected by already planned system improvements. This includes infrastructure treatments in the current delivery pipeline but also vehicle safety improvements due to vehicle fleet turnover. It could also be more general factors such as travel speed changes or changes in general deterrence levels.

To consider the future impact of the baseline safety improvements, they all need associated business rules around implementation timeline, target crash pools, and effectiveness. Business rules are essential to make the modeling repeatable and scientifically sound. They cover in detail which crashes would be prevented by certain treatments, who is involved, and in which situations. The business rules also need to capture every inclusion and exclusion criterion in the target crash pools, such as extreme violations or excessive speeding.
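As an illustration of how such business rules could be recorded in a repeatable form, the following minimal sketch stores the target crash pools, implementation year, and exclusion criteria of a treatment. The class, field names, crash pool labels, and flags are hypothetical assumptions, not the structure used in the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BusinessRule:
    """One treatment with its (assumed) business rules."""
    treatment: str
    target_crash_pools: frozenset
    implementation_year: int
    exclusions: frozenset = frozenset()

    def applies_to(self, crash_pool, crash_flags):
        """True if the crash is in a target pool and meets no exclusion criterion."""
        in_pool = crash_pool in self.target_crash_pools
        excluded = bool(self.exclusions & set(crash_flags))
        return in_pool and not excluded


# Hypothetical rule for roadside barriers on main national roads.
barrier_rule = BusinessRule(
    treatment="roadside barrier",
    target_crash_pools=frozenset({"run_off_road"}),
    implementation_year=2030,
    exclusions=frozenset({"extreme_violation", "excessive_speeding"}),
)

print(barrier_rule.applies_to("run_off_road", ["wet_road"]))            # True
print(barrier_rule.applies_to("run_off_road", ["excessive_speeding"]))  # False
print(barrier_rule.applies_to("head_on", []))                           # False
```

Writing the rules down as data rather than as ad hoc judgments is one way to keep the assessment transparent when it is repeated by another analyst.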

Crash data format and quality determine the method of modeling. In-depth crash data enables case-by-case analysis, which allows detailed understanding and engagement and deals with double counting when estimating future treatment effectiveness. However, unlike a statistical approach using mass data, the resource intensity of case-by-case analysis limits the number of crashes that can be modeled to hundreds rather than thousands. In reality, in-depth analysis of thousands of crashes is rarely needed to understand the systematic risks in a jurisdiction, which is why a random sample could be selected for this specific purpose. In some cases, however, such as when analyzing big data, a similar structural model with a statistical approach could be used, for example in the analysis of serious injuries, which are vast in number. For further reading see Strandroth et al. (2016).

Independent of the crash data format, information on the roads, vehicles, and people involved in the crashes must be linked to unit-records on the same level of detail as the crash data as well as future treatments with their associated business rules and the target years. The greater the quality of this meta-data, the more transparent and repeatable is the method.

Step 2: Baseline Development Through Crash and Injury Assessment

Crash and injury assessment by application of business rules to crash data differs between mass data and case-by-case data. In a case-by-case analysis, every crash needs to be carefully examined to understand whether the crash outcome would be the same in a future target year given future system improvements. Each fatality is assessed according to the business rules to decide whether it is likely to be prevented or not in a specific year. Firstly, the prevailing crash type associated with each fatality is considered against the target crash pools to identify relevant treatments and vehicle safety systems. Secondly, the effectiveness business rules are applied to see whether the crash circumstances are such that the fatality would be expected to be prevented or not. Finally, implementation time is taken into account to understand in what year the fatality is expected to be prevented (if relevant). If the fatality is considered to be prevented by any of the agreed road safety improvement measures, it can be removed from the residual.

As an example case, let us assume that a passenger car with model year (MY) 2007 was involved in a single vehicle loss-of-control scenario in 2018. The crash occurred on a main national road with median barriers but without roadside barriers. When leaving the lane on the right side of the road, the driver over-corrected, lost control, and rolled over, resulting in the driver being killed. The crash would sort into the target crash pools relevant to ESC, roadside ATLM, and roadside barriers. In this case we assume the circumstances do not exclude the crash from the effective envelope of ESC and barriers; however, ATLM was not assumed to be effective. The car was not equipped with ESC which, in this hypothetical example, became standard in this region of the world in 2012. Based on the five-year difference between 2007 and 2012, the crash would be expected to be prevented five years after the original crash in 2018, and thus prevented and removed from the residual in 2023. In this way, not only the age of the vehicle fleet is taken into account when projecting the benefit of fleet renewal but, more importantly, the age of each vehicle involved in fatal crashes. This process is then repeated if the crash belongs to more than one crash pool to understand what else might have prevented the fatality. In this hypothetical case, there are no specific projects planned on this road, but since it is a main national road it is expected to be fitted with barriers by 2030. In summary, ESC and the fitment of roadside barriers are expected to prevent the crash in 2023 and 2030, respectively. However, every crash is only removed once from the residual to avoid double counting of treatment benefits. Thus, this particular crash would be removed from the residual only once, in 2023.
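The arithmetic of this example can be written out as a small sketch. The function names are hypothetical, and the handling of vehicles whose model year is on or after the year the system became standard is an assumption added for completeness; the years are those of the hypothetical case above.

```python
def fleet_renewal_prevention_year(crash_year, model_year, standard_year):
    """Year in which fleet turnover is expected to prevent the crash.

    The crash is shifted forward by the gap between the vehicle's model year
    and the year the safety system became standard. Returns None when the
    model year is already on or after the standard year (assumption: fleet
    renewal then offers no further benefit for this system).
    """
    if model_year >= standard_year:
        return None
    return crash_year + (standard_year - model_year)


def removal_year(candidate_years):
    """Earliest prevention year, so that each crash is removed only once."""
    years = [y for y in candidate_years if y is not None]
    return min(years) if years else None


esc_year = fleet_renewal_prevention_year(crash_year=2018, model_year=2007,
                                         standard_year=2012)
barrier_year = 2030  # expected fitment of roadside barriers in the example

print(esc_year)                                # 2023
print(removal_year([esc_year, barrier_year]))  # 2023
```

Taking the minimum over all relevant treatments is what prevents the same fatality from being counted as a benefit of both ESC and barriers.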

After the initial application of system improvements, general improvements that are not necessarily associated with individual crash pools can be applied (e.g., travel speed changes, enforcement elasticities). One has to be careful, though, not to add general factors to the degree that they represent the majority of future benefits, as issues with double counting might arise. In some cases, general improvements could also be associated with specific crash pools to avoid double counting. This is the case, for example, with crashworthiness, which is a general improvement over time but specific to car occupant injuries. External factors such as increased risk exposure due to population growth or demographic changes could also be included at this stage.

Step 3: Residual Analysis

Following the establishment of a baseline, not only is it possible to estimate the level of future residual trauma but also to investigate the characteristics of this trauma. As previously mentioned, typical questions are: How close to zero will our current strategies take us? What are the characteristics of crashes and injuries remaining in the future when all the treatments in our current toolbox are implemented and what further innovations are needed to ultimately eliminate road trauma? Other questions might be: Are interim targets estimated to be achieved? What road users are favored in the delivery of safety improvements under the “business as usual” scenario? When and where will the majority of trauma reduction benefits from safer and more advanced vehicles be realized?

The investigation of future residuals can then guide the development of future treatments and interventions to close the gap between the baseline and future targets, both near-term and long-term. Of particular interest is to understand why future crashes are estimated to not be prevented. In this context, at least three basic reasons can be mentioned. First, residual due to implementation delays: the relevant interventions exist but are not implemented in time. Naturally, this residual diminishes over time. Second, residual outside the effective envelope: relevant treatments exist and are expected to be implemented in time, but the circumstances of the crash are such that the injury outcome is not avoided or mitigated sufficiently. Third, no relevant intervention: there is no intervention in the pipeline (or maybe none at all) to address the crash outcome.
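The three reasons can be expressed as a simple classification, sketched below. The representation of each relevant treatment as a pair of implementation year and an effective-envelope flag is a hypothetical simplification, as are all names; a crash counts as prevented if an effective treatment is in place no later than the target year.

```python
from enum import Enum


class ResidualReason(Enum):
    IMPLEMENTATION_DELAY = "implementation delay"
    OUTSIDE_EFFECTIVE_ENVELOPE = "outside effective envelope"
    NO_RELEVANT_INTERVENTION = "no relevant intervention"


def classify_residual(treatments, target_year):
    """Return why a crash remains in the residual, or None if it is prevented.

    treatments: list of (implementation_year, within_envelope) tuples for the
    interventions relevant to the crash (hypothetical representation).
    """
    if not treatments:
        return ResidualReason.NO_RELEVANT_INTERVENTION
    effective_years = [year for year, within in treatments if within]
    if not effective_years:
        return ResidualReason.OUTSIDE_EFFECTIVE_ENVELOPE
    if min(effective_years) <= target_year:
        return None  # prevented by the target year
    return ResidualReason.IMPLEMENTATION_DELAY


# Hypothetical crash with two effective treatments arriving in 2023 and 2030.
print(classify_residual([(2023, True), (2030, True)], target_year=2020))
# ResidualReason.IMPLEMENTATION_DELAY
print(classify_residual([(2023, True), (2030, True)], target_year=2025))
# None
```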

Step 4: Scenarios to Address Residual Trauma

As described in Steps 1–3, a logical reduction of future trauma based on the implementation of planned interventions can be used to make informed decisions on ambitious, achievable, and empirically derived interim targets. The natural next step is to recognize potential for additional improvements and trauma reductions by comparing the baseline with scenarios based on the implementation of additional countermeasures. Alternatively, the benefits of a more rapid implementation of treatments could be investigated, as in the example in Fig. 9, which illustrates the benefit of a more rapid uptake of vehicle safety technology. In road safety management and strategy development, it is seldom valuable to reflect on the single impact of one intervention. Instead, the combined benefits of several interventions are of interest when interim road safety targets are to be set. The third line in Fig. 9 illustrates the combined effect of accelerated implementation of vehicle safety and increased efforts in infrastructure improvements.
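A scenario comparison of this kind can be sketched as follows, assuming that each fatality in the crash data has already been assigned a removal year (or none). The removal years, the target years, and the uniform three-year acceleration are hypothetical values for illustration only; in practice the acceleration would apply only to the treatments concerned.

```python
def residual_by_year(removal_years, target_years):
    """Count fatalities still in the residual in each target year.

    removal_years: one entry per fatality, either the year it is expected
    to be removed from the residual or None if it is never prevented.
    """
    return {
        target: sum(1 for year in removal_years if year is None or year > target)
        for target in target_years
    }


def accelerate(removal_years, years_earlier):
    """Scenario: every prevented fatality is removed a fixed number of years earlier."""
    return [None if year is None else year - years_earlier for year in removal_years]


# Hypothetical baseline removal years for five fatalities.
baseline = [2023, 2027, 2030, 2035, None]
targets = [2025, 2030]

print(residual_by_year(baseline, targets))                 # {2025: 4, 2030: 2}
print(residual_by_year(accelerate(baseline, 3), targets))  # {2025: 3, 2030: 2}
```

In this made-up example the accelerated scenario helps the 2025 interim target but leaves the 2030 residual unchanged, which shows why scenarios are compared against each target year rather than in aggregate.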

The last, but not least important, step in this approach involves using the baseline modeling to develop Safety Performance Indicators and associated targets to enable monitoring of system transformation. For further reading please see STA (2012b).

Methodological Considerations

One relevant question is whether prediction models in general should aim for higher complexity, including as many variables as possible in order to reflect reality in the best possible way, or whether methods to describe the future should be kept simple to preserve transparency and repeatability. Of course, it could always be argued that the more variables that are included in a model, the closer the model will represent real life. However, as more and more variables are included, it could become harder to establish the causal relationship needed to understand the output of the model.

For instance, Broughton et al. (2000) state that even if statistical forecasting can be a powerful tool, it has some weaknesses, and often the modelers have no theory to guide their choice of model. Therefore, the current practice is to use a few alternative models and choose the one that fits the existing data best. With no theory to guide the choice of model, functions could be poorly formulated and problematic, for example by having correlated independent variables or inducing nonexistent correlations (Hauer 2005). The challenge relating to multivariate models is that they are either additive or multiplicative. However, it has been shown in Strandroth (2015a) and in previous research that SPIs are not only additive or multiplicative. When SPIs are simply added together, double counting becomes an issue, and a multiplicative approach ignores that SPIs can also be conditional (where the effect of one improvement is dependent on another). Hence, when dealing with combined interventions, a deterministic logic approach is preferable as it could be more transparent and able to tackle double counting and conditional improvements, even though it is hard to imagine any method that would completely eliminate these issues.

Summary and Key Messages

Road safety analysis is an essential element in Vision Zero planning practices as it is used to provide guidance on what has been successful in treating past trauma problems, how to treat current risks in the road transport system and how to design a future safe system. As with all analytics, road safety analysis is reliant on good quality data in order to provide valid and reliable guidance. However, more data is not always the solution, and data quantity should never be seen as a substitute for quality. On the contrary, small datasets can be very valuable if analyzed with robust methods. This is especially the case when sample sizes naturally decrease due to road safety interventions (i.e., close to zero). The closer to zero we get, the more important is the analysis of outliers and nonconformities. This type of quality management of the road transport system is only possible with in-depth data.

Defining future interventions and strategies in an accurate way requires in-depth knowledge of crashes and injuries, robust methods, and clear hypotheses. In order to design a Safe System, it is essential to understand the effective envelope of system interventions, that is, which crashes and injuries are prevented, which are not prevented, and why. From an analytical perspective this requires a clear hypothesis of cause and effect and not only correlation. When selecting possible confounders, it is important that they are based on a hypothesis and not just invented. If included without any hypothesis, they may pick up a variation that is not real. In other words, it is important to distinguish between possible correlation and causation.

Another aspect of understanding the benefits of future interventions is that the road system is constantly changing, affected by everyday improvements like the renewal of the vehicle fleet, hence making retrospective data unsuited to describe the problems ahead. Naturally, crash data will always be retrospective in nature. However, the validity of the crash data needs to be ensured by taking into account the evolution of the transport system when estimating benefits of future interventions.

Road safety analysis is also about providing an analytical framework for the vision to become tangible and implemented in the day-to-day operation of road safety stakeholders. Some basic analytical steps for Vision Zero target management are presented in this chapter as follows:

1. Outline a baseline scenario with “business-as-usual” safety improvements

2. Baseline development through crash and injury assessment

3. Analyze the residual to identify future safety gaps

4. Develop scenarios to address residual trauma, set ambitious but achievable trauma targets and define Safety Performance Indicators for system transformation and set their long term and interim targets

References

Anderson, R., & Ponte, G. (2013). Modelling potential benefits of interventions made under the SA road safety strategy, CASR report series.

Broughton, J., Allsop, R. E., Lynam, D. A., & McMahon, C. M. (2000). The numerical context for setting national casualty reduction targets, TRL report 382.

Carlsson. (2009). Evaluation of 2+1-roads with cable barrier final report. VTI report 636A.

Corben, B., Logan, D., Fanciulli, L., Farley, R., & Cameron, I. (2009). Strengthening road safety strategy development ‘Towards Zero’ 2008–2020 – Western Australia’s experience scientific research on road safety management SWOV workshop 16 and 17 November 2009. Safety Science, 10(48), 1085–1097.

Davis, G. (2004). Possible aggregation biases in road safety research and a mechanism approach to accident modeling. Accident Analysis and Prevention, 36(2004), 1119–1127.

Ding, C., Rizzi, M., Strandroth, J., Sander, U., & Lubbe, N. (2019). Motorcyclist injury risk as a function of real-life crash speed and other contributing factor. Accident Analysis and Prevention, 123(2019), 374–386.

Doecke, S., Grant, A., & Anderson, R. (2016). The real-world safety potential of connected vehicle technology. Traffic Injury Prevention, 16, 31–35.

Elvik, R. (2009). An exploratory analysis of models for estimating the combined effects of road safety measures. Accident Analysis and Prevention, 41(2009), 876–880.

EU. (2010). EU Press Release. http://europa.eu/rapid/press-release_MEMO-10-343_en.htm. Accessed 12 Nov 2014.

Eugensson, A., Brännström, M., Frasher, D., Rothoff, M., Solyom, S., & Robertsson, A. (2013). Environmental, safety, legal and societal implications of autonomous driving system. In Proceedings of the 23rd international technical conference on the enhanced safety of vehicles, Seoul, Korea.

Euro NCAP. (2017). 2025 Roadmap – In pursuit of Vision Zero. https://cdn.euroncap.com/media/30700/euroncap-roadmap-2025-v4.pdf. Accessed 9 Mar 2020.

eVALUE. (2011). Testing and evaluation methods for active vehicle safety. http://www.evalue-project.eu/index.php. Accessed 12 Nov 2014.

Evans, L. (1998). Antilock brake systems and risk of different types of crashes in traffic. In proceedings of the 16th ESV conference, Windsor, Ontario, Canada, paper number 98-S2-O-12.

Fach, M., & Ockel, D. (2009). Evaluation methods for the effectiveness of active safety systems with respect to real world accident analysis. In Proceedings of the 21st international technical conference on the enhanced safety of vehicles, Stuttgart, Germany.

Gabauer, D. J., & Gabler, H. C. (2006). Comparison of delta-v and occupant impact velocity crash severity metrics using event data recorders. In proceedings of the 50th annual meeting of the American Association for the Advancement of Automotive Medicine, Waikoloa.

Hauer, E. (2005). Fishing for safety information in murky waters. Journal of Transportation Engineering, 131(5), 340–344.

HLDI, Highway Loss Data Institute. (2013). Evaluation of motorcycle antilock braking systems, alone and in conjunction with combined control braking systems. HLDI Bulletin 2013, 30–10; Arlington, USA.

Kuehn, M., Hummel, T., & Bende, J. (2009). Benefit estimation of advanced driver assistance systems for cars derived from real-life accidents. In Proceedings of the 21st international technical conference on the enhanced safety of vehicles, Stuttgart, Germany.

Kullgren, A. (2008). Dose-response models and EDR data for assessment of injury risk and effectiveness of safety systems. In proceedings of the 2008 international IRCOBI conference, Bern, Switzerland.

Kullgren, A., & Stigson, H. (2010). Fotgängares risk i trafiken – Analys av tidigare forskningsrön [Pedestrians' risk in traffic – Analysis of previous research]. https://blogg.folksam.se/folksam-forskar/wp-content/uploads/sites/3/2011/01/Stigson-Kullgren-2010-Fotgängares-risk-i-trafiken.pdf. Accessed 9 Mar 2020.

Lie, A. (2014). Evaluations of vehicle safety systems today and tomorrow, Installation lecture for adjunct professorship at Chalmers University of Technology.

Lie, A., Tingvall, C., Krafft, M., & Kullgren, A. (2006). The effectiveness of electronic stability control (ESC) in reducing real-life crashes and injuries. Traffic Injury Prevention, 2006(7), 38–43.

Linnskog, P. (2007). Safe Road Traffic – Systematic quality assurance based on a model for safe road traffic and data from in-depth investigations of traffic accidents. Proceedings of EuroRAP AISBL 5th General Assembly Members’ Plenary Sessions.

Niebuhr, T., Junge, M., & Rosen, E. (2016). Pedestrian injury risk and the effect of age. Accident Analysis and Prevention, 2016(86), 121–128.

Nilsson, G. (2004). Traffic safety dimensions and the power model to describe the effect of speed on safety (Bulletin, 221). Lund: Lund University.

OECD, Organization for Economic Cooperation and Development. (2008). Toward zero. Ambitious road safety targets and the safe system approach. Paris: OECD Publishing.

Rizzi, M., Strandroth, J., & Tingvall, C. (2009). The effectiveness of antilock brake systems on motorcycles in reducing real-life crashes and injuries. Traffic Injury Prevention, 10(5), 479–487.

Rizzi, M., Kullgren, A., & Tingvall, C. (2015). The combined benefits of motorcycle antilock braking systems (ABS) in preventing crashes and reducing crash severity. Traffic Injury Prevention, 22, 1–7.

Rosen, E., & Sander, U. (2009). Pedestrian fatality risk as a function of car impact speed. Accident Analysis and Prevention, 41, 536–542.

Schlesselman, J. (1982). Case-control studies: Design, conduct, analysis. New York: Oxford University Press.

Sferco, R., Page, Y., Coz, J. Y., & Fay, A. (2001). Potential effectiveness of electronic stability programs (ESP) – What European field studies tell us. In Proceedings of the 17th ESV conference, Amsterdam, the Netherlands; paper number 2001-S2-O-327.

Sternlund, S. (2017). The safety potential of Lane Departure Warning systems – A descriptive real-world study of fatal lane departure passenger car crashes in Sweden. Traffic Injury Prevention, 18. https://doi.org/10.1080/15389588.2017.1313413.

Stigson, H. (2009). A safe road transport system – Factors influencing injury outcome for car occupants, Thesis for the degree of doctor in Philosophy, ISBN 978-91-7409-433-6, Karolinska Institutet, Stockholm, Sweden.

Strandroth, J. (2015a). Identifying potentials of combined road safety interventions: A method to evaluate future effects of integrated road and vehicle safety technologies. Thesis for the degree of doctor in Philosophy, Chalmers University of Technology, Göteborg, Sweden.

Strandroth, J. (2015b). Validation of a method to evaluate future impact of road safety interventions, a comparison between fatal passenger car crashes in Sweden 2000 and 2010. Accident; Analysis and Prevention, 76, 133–140.

Strandroth, J., Rizzi, M., Sternlund, S., Lie, A., & Tingvall, C. (2011). The correlation between pedestrian injury severity in real-life crashes and Euro NCAP pedestrian scores. Traffic Injury Prevention, 12(6), 604–613.

Strandroth, J., Rizzi, M., Olai, M., Lie, A., & Tingvall, C. (2012). The effects of studded tires on fatal crashes with passenger cars and the benefits of electronic stability Control (ESC) in Swedish Winter Driving. Accident Analysis and Prevention, 45, 50–60.

Strandroth, J., Nilsson, P., Sternlund, S., Rizzi, M., & Krafft, M. (2016). Characteristics of future crashes in Sweden – Identifying road safety challenges in 2020 and 2030. In proceedings of the 2016 international IRCOBI conference, Malaga, Spain.

Strandroth, J., Moon, W., & Corben, B. (2019). Zero 2050 in Victoria – A planning framework to achieve zero with a date. World Engineers Convention Australia 2019. 20–22 November 2019, Melbourne, Victoria.

Swedish Road Administration. (2008). Management by objectives for road safety work – Stakeholder collaboration towards new interim targets 2020, Publication no, 31.

Swedish Transport Administration. (2012a). Increased safety for motorcycle and moped riders Joint strategy version 2.0 for the years 2012–2020. STA publication, 194.

Swedish Transport Administration. (2012b). Review of interim targets and indicators for road safety in 2010–2020. STA publication, 162.

Swedish Transport Administration. (2012c). Road safety – Vision zero on the move, ISBN 978-91-7467-234-3.

Teoh, E. (2011). Effectiveness of antilock braking systems in reducing motorcycle fatal crash rates. Traffic Injury Prevention, 12(2), 169–173.

Teoh, E. (2013). Effects of antilock braking systems on motorcycle fatal crash rates: An update. Arlington: Insurance Institute for Highway Safety.

Tingvall, C., Stigson, H., Eriksson, L., Johansson, R., Krafft, M., & Lie, A. (2010). The properties of safety performance indicators in target setting, projections and safety design of the road transport system. Accident Analysis and Prevention, 42(2), 372–376.

Tivesten, E. (2014). Understanding and prioritizing crash contributing factors – Analyzing naturalistic driving data and self-reported crash data for car safety development. Thesis for the degree of Doctor in Philosophy, Chalmers University of Technology, Gothenburg, Sweden.

Transport Research Board. (2013). Theory, explanation, and prediction in road safety, transportation research circular, number E-C179.

Vulcan, A. P., & Corben, B. F. (1998). Prediction of Australian road fatalities for the year 2010. Presented to: Canberra: National Road Safety Summit.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this entry

Cite this entry

Rizzi, M., Strandroth, J. (2023). Road Safety Analysis. In: Edvardsson Björnberg, K., Hansson, S.O., Belin, MÅ., Tingvall, C. (eds) The Vision Zero Handbook. Springer, Cham. https://doi.org/10.1007/978-3-030-76505-7_33

DOI: https://doi.org/10.1007/978-3-030-76505-7_33

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-76504-0

Online ISBN: 978-3-030-76505-7

eBook Packages: Religion and Philosophy, Reference Module Humanities and Social Sciences, Reference Module Humanities