Abstract

We introduce a new database to promote visibility enhancement techniques intended for spectral image dehazing. SHIA (Spectral Hazy Image database for Assessment) is composed of two real indoor scenes, M1 and M2, with 10 levels of fog each and their corresponding fog-free (ground-truth) images, taken in the visible and the near-infrared ranges every 10 nm from 450 to 1000 nm. SHIA contains 1540 images with a size of \(1312\,\times \,1082\) pixels. All images are captured under the same illumination conditions. Three well-known image dehazing methods based on different approaches were adjusted and applied to the spectral foggy images. This study confirms once again a strong dependency between dehazing methods and fog densities. It urges the design of spectral-based image dehazing methods able to handle simultaneously the accurate estimation of the parameters of the visibility degradation model and the limitation of artifacts and post-dehazing noise. The database can be downloaded freely at http://chic.u-bourgogne.fr.

1 Introduction

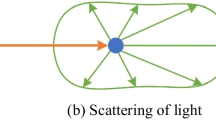

In computer vision applications, dehazing is applied to enhance the visibility of outdoor images by reducing the undesirable effects due to scattering and absorption caused by atmospheric particles.

Dehazing is needed for human activities and in many algorithms such as object recognition, object tracking, remote sensing and sometimes computational photography. Applications of interest in this scope include fully autonomous vehicles, which typically use computer vision for land or air navigation, monitored driving and outdoor security systems. In poor-visibility environments, such applications require dehazed images to perform properly.

Image dehazing is a transdisciplinary challenge, as it requires knowledge from different fields: meteorology to model the haze, optical physics to understand how light is affected by haze, and computer vision as well as image and signal processing to recover the parameters of the scene. Researchers have long been searching for an optimal method to remove the degradation caused by light scattering by aerosols. Many methods have been proposed and compared to each other. However, available methods still do not meet efficient recovery standards and show a varying performance depending on the density of haze [7].

Earlier approaches involve multiple inputs to break down the mathematically ill-posed problem. Narasimhan et al. [18] calculate the haze model parameters by considering the variation of the color of pixels under different weather conditions. Feng et al. [9] take advantage of the deep penetration of near-infrared wavelengths to unveil details that could be completely lost in the visible band. Other approaches employ depth data [13] or differently polarized images [20]. Later techniques mainly focus on single image dehazing, which is more challenging but more suitable for real-time and low-cost computer vision applications. Single image dehazing was promoted through the work of He et al. [11], the well-known Dark Channel Prior, which gained its popularity thanks to its simple and robust assumption based on a statistical characteristic of outdoor natural images. Numerous variants were released later: some propose an improvement in estimating one or more of the model's parameters, and others extend the approach to other fields of application [14]. This approach, like others such as the filtering-based method [21], explicitly estimates transmission and airlight. Other methods overlook the physical model and improve contrast through multi-scale fusion [3], variational [10] or histogram equalization approaches [27]. Recently, as in many research domains, several machine learning approaches for image dehazing have come to light [5, 15]. These models are trained on synthetic images built upon a model that is simplistic compared to reality [24]. Hence the importance of building a large number of real hazy images.

To evaluate various dehazing methods, some databases of hazy images are available. Tarel et al. [22, 23] used FRIDA and FRIDA2 as two dehazing evaluation databases dedicated to driving assistance applications. They are formed of synthetic images of urban road scenes with uniform and heterogeneous layers of fog. There also exist databases of real outdoor haze-free images, for each of which different weather conditions are simulated [28].

Given the significant research that has been conducted over the last decade, it turns out that synthetic images formed upon a simplified optical model do not faithfully simulate real foggy images [6]. Therefore, several databases of real images and real fog with ground-truth images have emerged. The real fog was produced using a fog machine. This was first used in our previous work presented in [8] and it was used later by Ancuti et al. [2, 4] to construct a good number of outdoor and indoor real hazy images covering a large variation of surfaces and textures. Lately, they introduced a similar database containing 33 pairs of dense haze images and their corresponding haze-free outdoor images [1].

The main contribution of this paper is SHIA, which is inspired by our previous color image database CHIC [8]. To the best of our knowledge, SHIA is the first database that presents, for a given fog-free image, a set of spectral images with various densities of real fog. We believe that such a database will promote visibility enhancement techniques for drone and remote sensing images. It will also be a useful tool to validate future methods of spectral image dehazing. Although it contains only two scenes, it stands as an example to consider and an invitation to combine efforts on a larger scale to increase the number of such complex databases.

After describing the material used and the acquisition process of the scenes, we provide a spectral dimension analysis of the data. Then, we evaluate three color dehazing methods that have been applied to single spectral images. Our experimental results underline a strong dependency between the performance of dehazing methods and the density of fog. Compared to color images, the difference between dehazing methods is minor, since the color shifting usually caused by dehazing methods is not present here. The difference is mainly due to the low intensity, especially induced by the physical-based methods, and the increase of noise after dehazing.

2 Data Recording

2.1 Used Material

The hyperspectral data was obtained using the Photonfocus MV1-D1280I-120-CL camera, based on the e2v EV76C661 CMOS image sensor with a resolution of 1280 \(\times \) 1024 pixels.

In order to acquire data in the visible and near-infrared (VNIR) ranges, two models of VariSpec Liquid Crystal Tunable Filters (LCTF) were used: VIS, a visible-wavelength filter with a range from 450 to 720 nm, and NIR, a near-infrared filter with a range from 730 to 1100 nm. Every 10 nm in the VIS range and in the NIR range, we captured a picture with a single integration time of 530 ms, which admits sufficient light to limit the noise without producing saturated pixels over channels. This also reduces the complexity of the preprocessing spectral calibration step (cf. Sect. 2.3).

In order to provide the depth of the captured scenes, which could be relevant data for assessing approaches, a Kinect device was used. The Kinect device can detect objects up to 10 m away, but it induces some inaccuracies beyond 5 m [16]. Therefore, the camera was placed 4.5 m from the furthest point at the center of the scene.

To generate fog, we used the FOGBURST 1500 fog machine, with a flow rate of 566 m\(^{3}\)/min and a spraying distance of 12 m, which emits a dense vapor that appears similar to fog. The particles of the ejected fog are water droplets whose radius is close to that of atmospheric fog droplets (1–10 \(\upmu \)m) [19].

2.2 Scenes

Scenes were set up in a closed rectangular room (length = 6.35 m, width = 6.29 m, height = 3.20 m, diagonal = 8.93 m) with a window (length = 5.54 m, height = 1.5 m), which is large enough to light up the room with daylight. The acquisition session was performed on a cloudy day to ensure stable illumination. The objects forming the scenes were placed in front of the window, next to which the sensors were placed. This layout guarantees a uniform diffusion of daylight over the objects of the scenes.

After the setup of each scene and before introducing fog, a depth image was first captured using the Kinect device. The Kinect was then replaced by the Photonfocus camera, which kept the same position throughout the capture of images of the same scene at various fog densities. The different densities of fog were generated by first spreading an opaque layer of fog, which was then evacuated progressively through the window. The same procedure was adopted for the acquisition of visible and near-infrared images.

Hence, the dataset consists of two scenes, M1 and M2. For technical reasons, the images of scene M1 were acquired over the visible range only (450–720 nm). The images of M2 were captured in both the visible and the NIR (730–1000 nm) ranges (Figs. 1 and 2), in two acquisition sets: in the first set the lamp in the middle of the scene is turned off, and in the second it is turned on. For each acquisition set, 10 levels of fog were generated in addition to the fog-free scene. As a result, there are 308 images for M1: 11 levels (10 levels of fog \(+\) the fog-free level), each with 28 spectral images taken every 10 nm from 450 to 720 nm. There are 1232 images for M2: following the same calculation as for M1, M2_VIS and M2_NIR contain 616 images each (308 for the lamp-on scene and 308 for the lamp-off scene).
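The image counts above can be checked with a few lines of arithmetic. The following is only an illustrative sketch; the variable names are ours and are not part of the database.

```python
# Band centres every 10 nm, endpoints inclusive, as stated in the text.
vis_bands = list(range(450, 721, 10))    # 450 to 720 nm
nir_bands = list(range(730, 1001, 10))   # 730 to 1000 nm

levels = 10 + 1  # 10 fog levels plus the fog-free reference

m1_images = levels * len(vis_bands)            # M1: visible range only
m2_vis_images = 2 * levels * len(vis_bands)    # lamp off + lamp on
m2_nir_images = 2 * levels * len(nir_bands)    # lamp off + lamp on
total_images = m1_images + m2_vis_images + m2_nir_images

print(len(vis_bands), len(nir_bands))
print(m1_images, m2_vis_images, m2_nir_images, total_images)
```

The total matches the 1540 images reported for SHIA.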

2.3 Data Processing

We performed a dark correction to remove the offset noise that appears all over the image, and a spectral calibration to account for the spectral sensitivities of the sensor and the filters used. The dark correction consists in taking several images in the dark with the same integration time. For each pixel, we calculate the median value over these images, which yields the dark image. We then subtract the dark image from the spectral images taken with the same integration time. Negative values are set to zero [17].
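The dark correction described above can be sketched as follows. This is a minimal illustration assuming the dark frames and the spectral image are available as NumPy arrays of the same size; the function name `dark_correct` and the toy values are hypothetical, not taken from the acquisition software.

```python
import numpy as np

def dark_correct(image, dark_frames):
    """Subtract the per-pixel median dark frame and set negatives to zero."""
    # Median over the stack of dark frames gives the dark image.
    dark_image = np.median(np.asarray(dark_frames, dtype=np.float64), axis=0)
    corrected = np.asarray(image, dtype=np.float64) - dark_image
    return np.clip(corrected, 0.0, None)

# Toy data (hypothetical values): three 2x2 dark frames and one raw band.
dark_frames = np.array([
    [[2, 3], [4, 5]],
    [[2, 9], [4, 5]],
    [[3, 3], [4, 6]],
])
raw = np.array([[10, 2], [4, 20]])
corrected = dark_correct(raw, dark_frames)
print(corrected)
```

Using the median rather than the mean makes the dark image robust to an occasional outlier in one of the dark frames, such as the value 9 in the toy data.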

For the spectral calibration, we considered the relative spectral responses of the camera and the filter provided in the user manuals. Each image captured at a given wavelength band with an integration time of 530 ms was divided by the peak value of the spectral response of the sensor and the corresponding filter.
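One possible reading of this calibration step is sketched below. It assumes that the combined response at a band is the product of the sensor's relative response and the filter's peak transmission at that band; this interpretation, the function name `calibrate_band` and the numerical values are assumptions for illustration, not the documented procedure.

```python
import numpy as np

def calibrate_band(image, sensor_response, filter_peak):
    """Divide a dark-corrected band by the combined relative response.

    Assumption: the combined response is the product of the sensor's
    relative response and the filter's peak transmission at this band.
    """
    gain = sensor_response * filter_peak
    if gain <= 0:
        raise ValueError("combined response must be positive")
    return np.asarray(image, dtype=np.float64) / gain

# Hypothetical response values; real ones come from the user manuals.
band = np.array([[0.10, 0.20], [0.05, 0.00]])
calibrated = calibrate_band(band, sensor_response=0.8, filter_peak=0.5)
print(calibrated)
```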

Fig. 3. M2_VIS Lamp off taken at 550 nm. Dehazed images processed by DCP, MSCNN and CLAHE methods, with the foggy images presented in the first column and the corresponding fog-free images in the last column. The first, second and third rows correspond to the low, medium and high levels of fog, respectively. Under each image, its corresponding scores are shown as follows: (PSNR, SSIM, MS-SSIM).

Fig. 4. M2_NIR Lamp on taken at 850 nm. Dehazed images processed by DCP, MSCNN and CLAHE methods, with the foggy images presented in the first column and the corresponding fog-free images in the last column. The first, second and third rows correspond to the low, medium and high levels of fog, respectively. Under each image, its corresponding scores are shown as follows: (PSNR, SSIM, MS-SSIM).

3 Spectral Dimension Analysis

In order to investigate the effective spectral dimension of the spectral images, we used the Principal Component Analysis (PCA) technique [12]. For this analysis, we computed the minimum number of dimensions required to preserve 95% and 99% of the total variance of the spectral images of a given scene. Table 1 shows the minimum number of dimensions computed on the scenes M2 Lamp on and M2 Lamp off, considering the visible and NIR components at the three levels of fog: low, medium and high. We can observe that, for M2 Lamp on, fog-free images and images with very light fog have an effective dimension of one or two to preserve 95% of the total variance, and of two to four to preserve 99%. Images with denser fog require almost the same number of dimensions as light-fog images in the visible range and more dimensions in the NIR range. This is very likely caused by sensor noise, which is accentuated in dark images. This can be observed from the number of dimensions required in the NIR range for M2 Lamp off (darker than M2 Lamp on), which is significantly high at the fog-free and low levels. Considering the particle size of the fog used to construct this database, the spectral properties at NIR wavelengths are very close to those at visible wavelengths. To take advantage of spectral particularities and obtain better visibility, images at longer infrared wavelengths will be required [6].
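The effective-dimension computation can be sketched as follows, assuming a spectral cube stored as a NumPy array of shape (height, width, bands). The function name `effective_dimension` and the toy cube are hypothetical; this is one standard way to count principal components, not necessarily the exact procedure used for Table 1.

```python
import numpy as np

def effective_dimension(cube, variance_kept):
    """Smallest number of principal components whose cumulative variance
    reaches `variance_kept` (a fraction in (0, 1]) for a (H, W, B) cube."""
    cube = np.asarray(cube, dtype=np.float64)
    pixels = cube.reshape(-1, cube.shape[-1])   # one spectrum per row
    centered = pixels - pixels.mean(axis=0)
    # Squared singular values are proportional to the PCA eigenvalues.
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variances = singular_values ** 2
    cumulative = np.cumsum(variances) / variances.sum()
    # Small tolerance guards against floating-point round-off.
    index = int(np.searchsorted(cumulative, variance_kept - 1e-12))
    return min(index + 1, cumulative.size)

# Toy cube (hypothetical): two orthogonal components carrying 90% and 10%
# of the variance, plus one empty band.
a = np.array([1.0, -1.0, 1.0, -1.0])
c = np.array([1.0, 1.0, -1.0, -1.0])
toy_cube = np.stack([3.0 * a, 1.0 * c, np.zeros(4)], axis=1).reshape(2, 2, 3)

dims_95 = effective_dimension(toy_cube, 0.95)
dims_99 = effective_dimension(toy_cube, 0.99)
print(dims_95, dims_99)
```

In the toy cube the first component alone explains 90% of the variance, so two components are needed to reach either threshold.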

4 Evaluation of Dehazing Techniques

The images of SHIA database have been used to evaluate three of the most representative categories of single image dehazing approaches: DCP [11], MSCNN [5], and CLAHE [27]. DCP and MSCNN are both physical-based methods. DCP relies on the assumption that, for a given pixel in a color image of a natural scene, one channel (red, green or blue) is usually very dark (it has a very low intensity). The atmospheric light tends to brighten these dark pixels. Thus, it is estimated over the darkest pixels in the scene. MSCNN introduces DehazeNet, a deep learning method for single image haze removal. DehazeNet is trained with thousands of hazy image patches, which are synthesized from haze-free images taken from the Internet. Since the parameters of the generating model are known, they are used for training. CLAHE, which is a contrast enhancement approach, consists of converting an RGB image into HSI color space. The intensity component of the image is processed by contrast limited adaptive histogram equalization. Hue and saturation remain unchanged.
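To illustrate how a dark-channel style method can operate on one spectral band, here is a minimal sketch in which the minimum over color channels is dropped and only the local spatial minimum is kept. It is not the implementation evaluated in this paper: the parameter values (`patch`, `omega`, `t_min`, `top_fraction`) are illustrative assumptions, the airlight is taken as a mean over the brightest dark-channel pixels as a simplification, and no transmission refinement is performed.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_minimum(image, patch):
    """Minimum over a patch x patch neighbourhood (patch odd, edge-padded)."""
    pad = patch // 2
    padded = np.pad(image, pad, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))

def dcp_single_band(hazy, patch=15, omega=0.95, t_min=0.1, top_fraction=0.001):
    """Dark-channel style dehazing of one band with values in [0, 1]."""
    hazy = np.asarray(hazy, dtype=np.float64)
    dark = local_minimum(hazy, patch)
    # Airlight: mean intensity at the brightest dark-channel pixels
    # (a simplification chosen for this sketch).
    count = max(1, int(top_fraction * dark.size))
    brightest = np.argsort(dark.ravel())[-count:]
    airlight = max(hazy.ravel()[brightest].mean(), 1e-6)
    # Transmission from the dark channel of the airlight-normalised band.
    transmission = 1.0 - omega * local_minimum(hazy / airlight, patch)
    transmission = np.clip(transmission, t_min, 1.0)
    # Invert the degradation model and keep the result in [0, 1].
    recovered = (hazy - airlight) / transmission + airlight
    return np.clip(recovered, 0.0, 1.0), transmission, airlight

# Synthetic band (hypothetical): random scene under uniform haze.
rng = np.random.default_rng(0)
scene = rng.uniform(0.0, 1.0, size=(32, 32))
hazy = scene * 0.6 + 0.9 * (1.0 - 0.6)
dehazed, transmission, airlight = dcp_single_band(hazy, patch=7)
print(dehazed.shape, float(transmission.min()), float(airlight))
```

The lower bound `t_min` prevents division by very small transmission values, which would otherwise amplify noise in dense fog.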

These methods have been adjusted to be applied to spectral images rather than color images. In other words, the parameters that are usually estimated across the three color bands were estimated from the single spectral image. For the sake of readability, we have selected three levels of fog, which are denoted as low, medium and high levels (Figs. 1 and 2). In this article, we only display the dehazed images of the scene M2_VIS Lamp off at 550 nm (Fig. 3) and the scene M2_NIR Lamp on at 850 nm (Fig. 4). The first row in these figures represents the foggy image at the low selected level of fog, in addition to the corresponding fog-free image and the dehazed images resulting from the three selected dehazing methods. Similarly, the second and the third rows represent the foggy image at the medium and high levels, respectively. We have calculated the scores of the classical metrics used to evaluate spectral images: PSNR, which measures the pixel-wise error between images; SSIM [25], which considers the neighborhood dependencies while measuring contrast and structure similarity; and MS-SSIM, which is a multiscale SSIM [26] and performs particularly well in assessing sharpness [7]. A higher quality is indicated by a higher PSNR and by SSIM and MS-SSIM values closer to 1. The corresponding values are written under the images in Figs. 3 and 4. The average values calculated over a few selected wavelengths in the VNIR range are given in Table 2.
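As an illustration of the simplest of these metrics, the sketch below computes PSNR between a fog-free reference band and a test band. It assumes both images are scaled to [0, 1]; the function name `psnr` and the toy images are ours.

```python
import numpy as np

def psnr(reference, test, peak=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    mse = np.mean((reference - test) ** 2)   # mean squared error
    if mse == 0:
        return float("inf")                  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))

# Toy example: a uniform error of 0.1 on a [0, 1] scale gives about 20 dB.
reference = np.zeros((4, 4))
test = np.full((4, 4), 0.1)
score = psnr(reference, test)
print(round(score, 2))
```

Because PSNR depends only on the mean squared error, a globally dimmer image and a noisy image with the same error energy receive the same score, which is one reason structural metrics are reported alongside it.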

Through the visual assessment of the dehazed images presented in Figs. 3 and 4, we can observe that all methods, regardless of their approach and hypotheses, perform better at low fog densities, in both the visible and the near-infrared range. CLAHE, which does not consider the physical model of image degradation, eliminates the fog well. However, it induces a significant amount of noise that increases with the density of fog. DCP, which is a physical-based approach, fails to accurately estimate the unknown parameters of the image degradation model, namely the airlight and the transmission of light [11]. This poor estimate produces dim dehazed images, especially at high densities of fog, where the dark channel hypothesis fails. This accords with the observation made on color images presented in our previous work [7]. MSCNN also performs an inversion of the physical model of visibility degradation. However, it estimates the unknown parameters better than DCP since it is trained on a large number of hazy images. This can be deduced from its dehazed images, which are not as dark as those of DCP.
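For reference, the visibility degradation model commonly used in the dehazing literature, which is not written out in this paper, can be stated as follows. The notation is an assumption chosen for this note: \(I\) is the observed foggy image, \(J\) the fog-free scene radiance, \(A\) the airlight, \(t\) the transmission, \(\beta\) the scattering coefficient and \(d\) the scene depth.

\[
I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta\, d(x)}
\]

Physical-based methods estimate \(A\) and \(t\) and then invert the model:

\[
J(x) = \frac{I(x) - A}{t(x)} + A
\]

Since \(I - A\) is divided by \(t\), an error in either estimate is amplified where \(t\) is small, that is, in dense fog. In particular, an underestimated \(t\) or an overestimated \(A\) pushes \(J\) toward lower values, which is consistent with the dim results observed for DCP.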

The metric values provided in Figs. 3 and 4 follow the same trends across fog densities as those observed for dehazed color images [7]. They show an increase in quality when the density of fog decreases. However, they underline a globally low performance of the dehazing methods. This means that haze removal is associated with secondary effects that restrain quality enhancement: the noise and artifacts induced in the image, and the dimness resulting from a wrong estimation of the visibility model parameters. These effects seem to have a more severe impact on image quality than the fog itself.

From Table 2, we can conclude that foggy images are quantitatively of better quality than the dehazed images, which suffer from noise, low intensity and structural artifacts; that the scores resulting from the different dehazing methods are very close to each other across wavelengths; and that the metric values demonstrate a correlated performance between MSCNN and CLAHE over wavelengths. Although DCP has relatively higher scores, this does not mean it is the best performing method. The dimness of its resulting images seems to minimize the effect of the artifacts.

5 Conclusions

We introduce a new database to promote visibility enhancement techniques intended for spectral image dehazing. For two indoor scenes, the SHIA database contains 1540 images taken at 10 levels of fog, ranging from a very light to a very opaque layer, with the corresponding fog-free images. The applied methods introduce the same effects as those induced in color images, such as structural artifacts and noise. This is underlined by pixelwise quality metrics, which score the dehazed images below the foggy ones. Accordingly, future work should focus on reducing these effects while considering the particularities of spectral foggy images, which need to be further investigated.

References

1. Ancuti, C.O., Ancuti, C., Sbert, M., Timofte, R.: Dense haze: a benchmark for image dehazing with dense-haze and haze-free images. arXiv preprint arXiv:1904.02904 (2019)

2. Ancuti, C.O., Ancuti, C., Timofte, R., De Vleeschouwer, C.: O-haze: a dehazing benchmark with real hazy and haze-free outdoor images. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 754–762 (2018)

3. Ancuti, C.O., Ancuti, C.: Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 22(8), 3271–3282 (2013)

4. Ancuti, C., Ancuti, C.O., Timofte, R., De Vleeschouwer, C.: I-HAZE: a dehazing benchmark with real hazy and haze-free indoor images. In: Blanc-Talon, J., Helbert, D., Philips, W., Popescu, D., Scheunders, P. (eds.) ACIVS 2018. LNCS, vol. 11182, pp. 620–631. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01449-0_52

5. Cai, B., Xu, X., Jia, K., Qing, C., Tao, D.: DehazeNet: an end-to-end system for single image haze removal. IEEE Trans. Image Process. 25(11), 5187–5198 (2016)

6. El Khoury, J.: Model and quality assessment of single image dehazing (2016). http://www.theses.fr/s98153

7. El Khoury, J., Le Moan, S., Thomas, J.-B., Mansouri, A.: Color and sharpness assessment of single image dehazing. Multimed. Tools Appl. 77(12), 15409–15430 (2017). https://doi.org/10.1007/s11042-017-5122-y

8. El Khoury, J., Thomas, J.-B., Mansouri, A.: A database with reference for image dehazing evaluation. J. Imaging Sci. Technol. 62(1), 10503-1 (2018)

9. Feng, C., Zhuo, S., Zhang, X., Shen, L., Süsstrunk, S.: Near-infrared guided color image dehazing. In: 2013 IEEE International Conference on Image Processing, pp. 2363–2367. IEEE (2013)

10. Galdran, A., Vazquez-Corral, J., Pardo, D., Bertalmio, M.: Enhanced variational image dehazing. SIAM J. Imaging Sci. 8(3), 1519–1546 (2015)

11. He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2010)

12. Hotelling, H.: Analysis of a complex of statistical variables into principal components. J. Educ. Psychol. 24(6), 417 (1933)

13. Kopf, J., et al.: Deep photo: model-based photograph enhancement and viewing. ACM Trans. Graph. (TOG) 27(5), 1–10 (2008)

14. Lee, S., Yun, S., Nam, J.-H., Won, C.S., Jung, S.-W.: A review on dark channel prior based image dehazing algorithms. EURASIP J. Image Video Process. 2016(1), 4 (2016). https://doi.org/10.1186/s13640-016-0104-y

15. Liu, R., Ma, L., Wang, Y., Zhang, L.: Learning converged propagations with deep prior ensemble for image enhancement. IEEE Trans. Image Process. 28(3), 1528–1543 (2018)

16. Mankoff, K.D., Russo, T.A.: The Kinect: a low-cost, high-resolution, short-range 3D camera. Earth Surf. Proc. Land. 38(9), 926–936 (2013)

17. Mansouri, A., Marzani, F.S., Gouton, P.: Development of a protocol for CCD calibration: application to a multispectral imaging system. Int. J. Robot. Autom. 20(2), 94–100 (2005)

18. Narasimhan, S.G., Nayar, S.K.: Chromatic framework for vision in bad weather. In: Proceedings IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2000 (Cat. No. PR00662), vol. 1, pp. 598–605. IEEE (2000)

19. Nayar, S.K., Narasimhan, S.G.: Vision in bad weather. In: Proceedings of the Seventh IEEE International Conference on Computer Vision, vol. 2, pp. 820–827. IEEE (1999)

20. Schechner, Y.Y., Narasimhan, S.G., Nayar, S.K.: Instant dehazing of images using polarization. In: CVPR, vol. 1, pp. 325–332 (2001)

21. Tarel, J.-P., Hautiere, N.: Fast visibility restoration from a single color or gray level image. In: 2009 IEEE 12th International Conference on Computer Vision, pp. 2201–2208. IEEE (2009)

22. Tarel, J.-P., Hautiere, N., Caraffa, L., Cord, A., Halmaoui, H., Gruyer, D.: Vision enhancement in homogeneous and heterogeneous fog. IEEE Intell. Transp. Syst. Mag. 4(2), 6–20 (2012)

23. Tarel, J.-P., Hautiere, N., Cord, A., Gruyer, D., Halmaoui, H.: Improved visibility of road scene images under heterogeneous fog. In: 2010 IEEE Intelligent Vehicles Symposium, pp. 478–485. IEEE (2010)

24. Valeriano, L.C., Thomas, J.-B., Benoit, A.: Deep learning for dehazing: comparison and analysis. In: 2018 Colour and Visual Computing Symposium (CVCS), pp. 1–6. IEEE (2018)

25. Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

26. Wang, Z., Simoncelli, E.P., Bovik, A.C.: Multiscale structural similarity for image quality assessment. In: The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, vol. 2, pp. 1398–1402. IEEE (2003)

27. Xu, Z., Liu, X., Ji, N.: Fog removal from color images using contrast limited adaptive histogram equalization. In: 2009 2nd International Congress on Image and Signal Processing, pp. 1–5. IEEE (2009)

28. Zhang, Y., Ding, L., Sharma, G.: HazeRD: an outdoor scene dataset and benchmark for single image dehazing. In: 2017 IEEE International Conference on Image Processing (ICIP), pp. 3205–3209. IEEE (2017)

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

El Khoury, J., Thomas, JB., Mansouri, A. (2020). A Spectral Hazy Image Database. In: El Moataz, A., Mammass, D., Mansouri, A., Nouboud, F. (eds) Image and Signal Processing. ICISP 2020. Lecture Notes in Computer Science, vol 12119. Springer, Cham. https://doi.org/10.1007/978-3-030-51935-3_5

DOI: https://doi.org/10.1007/978-3-030-51935-3_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-51934-6

Online ISBN: 978-3-030-51935-3

eBook Packages: Computer Science, Computer Science (R0)