Abstract

Low Rank Approximation (LRA) of a matrix is a hot research subject, fundamental for Matrix and Tensor Computations and Big Data Mining and Analysis. Computations with LRA can be performed at sublinear cost, that is, by using far fewer memory cells and arithmetic operations than an input matrix has entries. Although every sublinear cost algorithm for LRA fails to approximate the worst case inputs, we prove that our sublinear cost variations of a popular subspace sampling algorithm output accurate LRA of a large class of inputs.

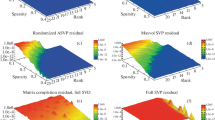

Namely, they do so with a high probability (whp) for a random input matrix that admits its LRA. In other papers we propose and analyze other sublinear cost algorithms for LRA and Linear Least Squares Regression. Our numerical tests are in good accordance with our formal results.

Notes

1. Here and hereafter “Gaussian matrices” stands for “Gaussian random matrices” (see Definition 1). “SRHT” and “SRFT” are the acronyms for “Subsampled Randomized Hadamard Transform” and “Subsampled Randomized Fourier Transform”. Rademacher matrices are the matrices filled with iid variables, each equal to 1 or \(-1\) with probability 1/2.

2. Subpermutation matrices are full-rank submatrices of permutation matrices.

3. \({{\,\mathrm{nrank}\,}}(W)\) denotes the numerical rank of W (see Appendix A.1).

4. We defined Algorithm 4 in Remark 4.

5. Additive white Gaussian noise is statistical noise having a probability density function (PDF) equal to that of the Gaussian (normal) distribution.

References

Björck, Å.: Numerical Methods in Matrix Computations. TAM, vol. 59. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-05089-8

Chen, Z., Dongarra, J.J.: Condition numbers of Gaussian random matrices. SIAM J. Matrix Anal. Appl. 27, 603–620 (2005)

Cichocki, A., Lee, N., Oseledets, I., Phan, A.-H., Zhao, Q., Mandic, D.P.: Tensor networks for dimensionality reduction and large-scale optimization: part 1 low-rank tensor decompositions. Found. Trends® Mach. Learn. 9(4–5), 249–429 (2016)

Davidson, K.R., Szarek, S.J.: Local operator theory, random matrices, and Banach spaces. In: Johnson, W.B., Lindenstrauss, J. (eds.) Handbook on Geometry of Banach Spaces, pp. 317–368. North Holland (2001)

Edelman, A.: Eigenvalues and condition numbers of random matrices. SIAM J. Matrix Anal. Appl. 9(4), 543–560 (1988)

Edelman, A., Sutton, B.D.: Tails of condition number distributions. SIAM J. Matrix Anal. Appl. 27(2), 547–560 (2005)

Golub, G.H., Van Loan, C.F.: Matrix Computations, fourth edition. The Johns Hopkins University Press, Baltimore (2013)

Goreinov, S., Oseledets, I., Savostyanov, D., Tyrtyshnikov, E., Zamarashkin, N.: How to find a good submatrix. In: Matrix Methods: Theory, Algorithms, Applications (dedicated to the Memory of Gene Golub, edited by V. Olshevsky and E. Tyrtyshnikov), pp. 247–256. World Scientific Publishing, New Jersey (2010)

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011)

Kishore Kumar, N., Schneider, J.: Literature survey on low rank approximation of matrices. Linear Multilinear Algebra 65(11), 2212–2244 (2017). arXiv:1606.06511v1 [math.NA] 21 June 2016

Luan, Q., Pan, V.Y.: CUR LRA at sublinear cost based on volume maximization. In: Slamanig, D. et al. (eds.) MACIS 2019, LNCS 11989, pp. xx–yy. Springer, Switzerland (2020). https://doi.org/10.1007/978-3-030-43120-49. arXiv:1907.10481 (2019)

Luan, Q., Pan, V.Y.: Randomized approximation of linear least squares regression at sublinear cost. arXiv:1906.03784, 10 June 2019

Mahoney, M.W.: Randomized algorithms for matrices and data. Found. Trends Mach. Learn. 3, 2 (2011)

Osinsky, A.: Rectangular maximum volume and projective volume search algorithms. arXiv:1809.02334, September 2018

Pan, C.-T.: On the existence and computation of rank-revealing LU factorizations. Linear Algebra Appl. 316, 199–222 (2000)

Pan, V.Y., Luan, Q.: Refinement of low rank approximation of a matrix at sublinear cost. arXiv:1906.04223, 10 June 2019

Pan, V.Y., Luan, Q., Svadlenka, J., Zhao, L.: Primitive and Cynical Low Rank Approximation, Preprocessing and Extensions. arXiv:1611.01391, 3 November 2016

Pan, V.Y., Luan, Q., Svadlenka, J., Zhao, L.: Superfast Accurate Low Rank Approximation. Preprint, arXiv:1710.07946, 22 October 2017

Pan, V.Y., Luan, Q., Svadlenka, J., Zhao, L.: CUR Low Rank Approximation at Sublinear Cost. arXiv:1906.04112, 10 June 2019

Pan, V.Y., Luan, Q., Svadlenka, J., Zhao, L.: Low rank approximation at sublinear cost by means of subspace sampling. arXiv:1906.04327, 10 June 2019

Pan, V.Y., Qian, G., Yan, X.: Random multipliers numerically stabilize Gaussian and block Gaussian elimination: proofs and an extension to low-rank approximation. Linear Algebra Appl. 481, 202–234 (2015)

Pan, V.Y., Zhao, L.: New studies of randomized augmentation and additive preprocessing. Linear Algebra Appl. 527, 256–305 (2017)

Pan, V.Y., Zhao, L.: Numerically safe Gaussian elimination with no pivoting. Linear Algebra Appl. 527, 349–383 (2017)

Sankar, A., Spielman, D., Teng, S.-H.: Smoothed analysis of the condition numbers and growth factors of matrices. SIAM J. Matrix Anal. Appl. 28(2), 446–476 (2006)

Tropp, J.A.: Improved analysis of subsampled randomized Hadamard transform. Adv. Adapt. Data Anal. 3(1–2), 115–126 (2011). (Special issue "Sparse Representation of Data and Images")

Tropp, J.A., Yurtsever, A., Udell, M., Cevher, V.: Practical sketching algorithms for low-rank matrix approximation. SIAM J. Matrix Anal. Appl. 38, 1454–1485 (2017)

Woolfe, F., Liberty, E., Rokhlin, V., Tygert, M.: A fast randomized algorithm for the approximation of matrices. Appl. Comput. Harmonic. Anal. 25, 335–366 (2008)

Acknowledgements

We were supported by NSF Grants CCF–1116736, CCF–1563942, CCF–1733834 and PSC CUNY Award 69813 00 48.

Appendix

A Background on Matrix Computations

A.1 Some Definitions

- An \(m\times n\) matrix M is orthogonal if \(M^*M=I_n\) or \(MM^*=I_m\).

- For \(M=(m_{i,j})_{i,j=1}^{m,n}\) and two sets \(\mathcal I\subseteq \{1,\dots ,m\}\) and \(\mathcal J\subseteq \{1,\dots ,n\}\), define the submatrices \(M_{\mathcal I,:}:=(m_{i,j})_{i\in \mathcal I; j=1,\dots , n}\), \(M_{:,\mathcal J}:=(m_{i,j})_{i=1,\dots , m;j\in \mathcal J}\), and \(M_{\mathcal I,\mathcal J}:=(m_{i,j})_{i\in \mathcal I;j\in \mathcal J}.\)

- \({{\,\mathrm{rank}\,}}(M)\) denotes the rank of a matrix M.

- \(\min _{|E|\le \epsilon |M|}{{\,\mathrm{rank}\,}}(M+E)\) is the \(\epsilon \)-\({{\,\mathrm{rank}\,}}(M)\); it is called the numerical rank, \({{\,\mathrm{nrank}\,}}(M)\), if \(\epsilon \) is small in context.

- Set \(\sigma _j(M)=0\) for \(j>r\) in the SVD of M to obtain \(M_{r}\), the rank-r truncation of M.

- \(\kappa (M)=||M||~||M^+||\) is the spectral condition number of M.
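The definitions above translate directly into a few lines of NumPy. The following is a minimal sketch, assuming the spectral norm throughout; the helper names `eps_rank`, `truncate`, and `cond` are hypothetical and not part of the paper. It relies on the standard fact that the smallest rank reachable by a perturbation of spectral norm at most \(\epsilon ||M||\) equals the number of singular values of M exceeding \(\epsilon \sigma _1(M)\).

```python
import numpy as np

def eps_rank(M, eps):
    """epsilon-rank of M in the spectral norm: count sigma_j(M) > eps * sigma_1(M)."""
    s = np.linalg.svd(M, compute_uv=False)
    return int(np.sum(s > eps * s[0]))

def truncate(M, r):
    """Rank-r truncation M_r: keep the r largest singular values, zero the rest."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r, :]

def cond(M):
    """Spectral condition number kappa(M) = ||M|| * ||M^+||."""
    return np.linalg.norm(M, 2) * np.linalg.norm(np.linalg.pinv(M), 2)

rng = np.random.default_rng(0)
# Illustrative data: a rank-3 matrix plus a tiny perturbation.
M = rng.standard_normal((8, 3)) @ rng.standard_normal((3, 6))
M_noisy = M + 1e-8 * rng.standard_normal(M.shape)

print(np.linalg.matrix_rank(M))                  # exact rank of M
print(eps_rank(M_noisy, 1e-6))                   # numerical rank of the perturbed matrix
print(np.linalg.norm(M_noisy - truncate(M_noisy, 3), 2))  # equals sigma_4(M_noisy)
print(cond(np.eye(4)))                           # condition number of an orthogonal matrix
```

The perturbed matrix has full rank in exact arithmetic, but its numerical rank stays at 3 as long as the tolerance is well above the noise level.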

A.2 Auxiliary Results

Next we recall some relevant auxiliary results (we omit the proofs of two well-known lemmas).

Lemma 2

[The norm of the pseudo inverse of a matrix product]. Suppose that \(A\in \mathbb R^{k\times r}\), \(B\in \mathbb R^{r\times l}\) and the matrices A and B have full rank \(r\le \min \{k,l\}\). Then \(|(AB)^+| \le |A^+|~|B^+|\).
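Lemma 2 can be sanity-checked numerically. The sketch below is an illustration only, assuming the spectral norm and Gaussian test factors (which have full rank whp); the sizes and the helper `pinv_norm` are hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(1)
k, r, l = 7, 3, 9                      # r <= min(k, l); illustrative sizes
A = rng.standard_normal((k, r))        # full column rank r whp
B = rng.standard_normal((r, l))        # full row rank r whp

def pinv_norm(X):
    """Spectral norm of the Moore-Penrose pseudo inverse of X."""
    return np.linalg.norm(np.linalg.pinv(X), 2)

lhs = pinv_norm(A @ B)
rhs = pinv_norm(A) * pinv_norm(B)
print(lhs, rhs, lhs <= rhs * (1 + 1e-10))
```

The inequality reflects the identity \((AB)^+=B^+A^+\), which holds under the full-rank assumptions of the lemma.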

Lemma 3

(The norm of the pseudo inverse of a perturbed matrix, [B15, Theorem 2.2.4]). If \({{\,\mathrm{rank}\,}}(M+E)={{\,\mathrm{rank}\,}}(M)=r\) and \(\eta =||M^+||~||E||<1\), then \(||(M+E)^+||\le \frac{1}{1-\eta }||M^+||.\)

Lemma 4

(The impact of a perturbation of a matrix on its singular values, [GL13, Corollary 8.6.2]). For \(m\ge n\) and a pair of \({m\times n}\) matrices M and \(M+E\) it holds that \(|\sigma _j(M+E)-\sigma _j(M)|\le ||E||\) for \(j=1,\dots ,n\).

Theorem 8

(The impact of a perturbation of a matrix on its top singular spaces, [GL13, Theorem 8.6.5]). Let \(g:=\sigma _{r}(M)-\sigma _{r+1}(M)>0~\mathrm{and}~||E||_F\le 0.2g.\) Then for the left and right singular spaces associated with the r largest singular values of the matrices M and \(M+E\), there exist orthogonal matrix bases \( B_{r,\mathrm{left}}(M)\), \(B_{r,\mathrm{right}}(M)\), \( B_{r,\mathrm{left}}(M+E)\), and \(B_{r,\mathrm{right}}(M+E)\) such that \(\max \{||B_{r,\mathrm{left}}(M+E)-B_{r,\mathrm{left}}(M)||_F,~||B_{r,\mathrm{right}}(M+E)-B_{r,\mathrm{right}}(M)||_F\}\le 4\frac{||E||_F}{g}.\)

For example, if \(\sigma _{r}(M)\ge 2\sigma _{r+1}(M)\), which implies that \(g\ge 0.5~\sigma _{r}(M)\), and if \(||E||_F\le 0.1~ \sigma _{r}(M)\), then the upper bound on the right-hand side is approximately \(8||E||_F/\sigma _r(M)\).
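The two perturbation lemmas of this subsection can be probed numerically. The sketch below assumes their standard forms in the spectral norm, namely \(||(M+E)^+||\le ||M^+||/(1-\eta )\) for the pseudo inverse and \(|\sigma _j(M+E)-\sigma _j(M)|\le ||E||\) for the singular values; the test matrices and sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
m, n = 10, 4
M = rng.standard_normal((m, n))            # full column rank whp, so r = n
E = 1e-2 * rng.standard_normal((m, n))     # small perturbation; M + E keeps rank n whp

def spec(X):
    """Spectral norm of X."""
    return np.linalg.norm(X, 2)

# Pseudo-inverse bound with eta = ||M^+|| * ||E|| < 1.
pinv_M = spec(np.linalg.pinv(M))
eta = pinv_M * spec(E)
pinv_bound_holds = eta < 1 and spec(np.linalg.pinv(M + E)) <= pinv_M / (1 - eta) + 1e-12

# Singular-value bound: each sigma_j moves by at most ||E||.
s_M = np.linalg.svd(M, compute_uv=False)
s_ME = np.linalg.svd(M + E, compute_uv=False)
sv_bound_holds = np.max(np.abs(s_ME - s_M)) <= spec(E) + 1e-12

print(eta, pinv_bound_holds, sv_bound_holds)
```

For a matrix of full column rank the first bound follows from the second, because \(\sigma _n(M+E)\ge \sigma _n(M)-||E||\).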

A.3 Gaussian and Factor-Gaussian Matrices of Low Rank and Low Numerical Rank

Lemma 5

[Orthogonal invariance of a Gaussian matrix]. Suppose that k, m, and n are three positive integers, \(k\le \min \{m,n\},\) \(G_{m,n}{\mathop {=}\limits ^{d}}\mathcal G^{m\times n}\), \(S\in \mathbb R^{k\times m}\), \(T\in \mathbb R^{n\times k}\), and S and T are orthogonal matrices. Then SG and GT are Gaussian matrices.
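Lemma 5 can be illustrated empirically. The sketch below is only a statistical check, not a proof: it assumes an orthogonal \(k\times m\) factor S (orthonormal rows) obtained from a QR factorization and drawn independently of G, and it compares sample moments of SG with those of a Gaussian matrix. All sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(3)
m, n, k = 50, 2000, 5

# Orthogonal k x m matrix S with orthonormal rows, independent of G.
Q, _ = np.linalg.qr(rng.standard_normal((m, k)))   # Q is m x k with Q^T Q = I_k
S = Q.T

G = rng.standard_normal((m, n))
SG = S @ G                                          # k x n product

print(np.allclose(S @ S.T, np.eye(k)))              # S S^* = I_k
print(SG.mean(), SG.var())                          # close to 0 and 1
print(np.round(SG @ SG.T / n, 2))                   # sample row covariance, close to I_k
```

The sample covariance of the rows of SG approaches \(SS^*=I_k\), which is the covariance of a \(k\times n\) Gaussian matrix.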

Definition 2

[Factor-Gaussian matrices]. Let \(r\le \min \{m,n\}\) and let \(\mathcal G_{r,B}^{m\times n}\), \(\mathcal G_{A,r}^{m\times n}\), and \(\mathcal G_{r,C}^{m\times n}\) denote the classes of matrices \(G_{m,r}B\), \(AG_{r,n}\), and \(G_{m,r} C G_{r,n}\), respectively, which we call left, right, and two-sided factor-Gaussian matrices of rank r, respectively, provided that \(G_{p,q}\) denotes a \(p\times q\) Gaussian matrix, \(A\in \mathbb R^{m\times r}\), \(B\in \mathbb R^{r\times n}\), and \(C\in \mathbb R^{r\times r}\), and A, B and C are well-conditioned matrices of full rank r.

Theorem 9

The class \(\mathcal G_{r,C}^{m\times n}\) of two-sided \(m\times n\) factor-Gaussian matrices \(G_{m,r} \varSigma G_{r,n}\) does not change if in its definition we replace the factor C by a well-conditioned diagonal matrix \(\varSigma =(\sigma _j)_{j=1}^r\) such that \(\sigma _1\ge \sigma _2\ge \dots \ge \sigma _r>0\).

Proof

Let \(C=U_C\varSigma _C V_C^*\) be SVD. Then \(A=G_{m,r}U_C{\mathop {=}\limits ^{d}}\mathcal G^{m\times r}\) and \(B=V_C^*G_{r,n}{\mathop {=}\limits ^{d}}\mathcal G^{r\times n}\) by virtue of Lemma 5, and so \(G_{m,r} C G_{r,n} =A\varSigma _C B\) for \(A{\mathop {=}\limits ^{d}}\mathcal G^{m\times r}\), \(B{\mathop {=}\limits ^{d}}\mathcal G^{r\times n}\), and A independent from B.
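A two-sided factor-Gaussian matrix is easy to sample, and the algebra in the proof of Theorem 9 can be replayed numerically. The sketch below is a hedged illustration: the function name `two_sided_factor_gaussian`, the sizes, and the particular well-conditioned factor C are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(4)
m, n, r = 40, 30, 4

def two_sided_factor_gaussian(m, n, C, rng):
    """Sample G_{m,r} C G_{r,n} for a given r x r factor C."""
    r = C.shape[0]
    return rng.standard_normal((m, r)) @ C @ rng.standard_normal((r, n))

# A well-conditioned factor C of full rank r (illustrative choice).
C = np.eye(r) + 0.1 * rng.standard_normal((r, r))
M = two_sided_factor_gaussian(m, n, C, rng)
print(np.linalg.matrix_rank(M))                  # equals r whp

# Proof of Theorem 9: absorb the orthogonal SVD factors of C into the Gaussian factors.
U_C, sigma, Vt_C = np.linalg.svd(C)
G_left = rng.standard_normal((m, r))
G_right = rng.standard_normal((r, n))
A = G_left @ U_C                                 # Gaussian by orthogonal invariance
B = Vt_C @ G_right                               # Gaussian by orthogonal invariance
same = np.allclose(G_left @ C @ G_right, A @ np.diag(sigma) @ B)
print(same, sigma)
```

The identity checked in `same` is purely algebraic; the probabilistic content of the theorem is that A and B are again independent Gaussian matrices.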

Definition 3

The relative norm of a perturbation of a Gaussian matrix is the ratio of the perturbation norm and the expected value of the norm of the matrix (estimated in Theorem 11).

We refer to all three matrix classes above as factor-Gaussian matrices of rank r, to their perturbations within a relative norm bound \(\epsilon \) as factor-Gaussian matrices of \(\epsilon \)-rank r, and to their perturbations within a small relative norm as factor-Gaussian matrices of numerical rank r to which we also refer as perturbations of factor-Gaussian matrices.

Clearly \(||(A\varSigma )^+||\le ||\varSigma ^{-1}||~||A^+||\) and \(||(\varSigma B)^+||\le ||\varSigma ^{-1}||~||B^+||\) for a two-sided factor-Gaussian matrix \(M=A\varSigma B\) of rank r of Definition 2, and so whp such a matrix is both left and right factor-Gaussian of rank r.

Theorem 10

Suppose that \(\lambda \) is a positive scalar, \(M_{k,l}\in \mathbb R^{k\times l}\) and G a \(k\times l\) Gaussian matrix for \(k-l \ge l+2 \ge 4\). Then, we have

Proof

Let \(M_{k,l}=U\varSigma V^*\) be the full SVD such that \(U\in \mathbb R^{k\times k}\), \(V\in \mathbb R^{l\times l}\), U and V are orthogonal matrices, \(\varSigma =(D~|~O_{l,k-l})^*\), and D is an \(l\times l\) diagonal matrix. Write \(W_{k,l}:=U^*(M_{k,l}+\lambda G)V\) and observe that \(U^*M_{k,l}V=\varSigma \) and \(U^*GV = \begin{bmatrix} G_1 \\ G_2 \end{bmatrix}\) is a \(k\times l\) Gaussian matrix by virtue of Lemma 5. Hence

\[W_{k,l}=\begin{bmatrix} D+\lambda G_1 \\ \lambda G_2 \end{bmatrix},\]

and so \(|W_{k,l}^+|\le \min \{|(D+ \lambda G_1)^+|,|(\lambda G_2)^+|\}.\) Recall that \(G_1 {\mathop {=}\limits ^{d}}\mathcal G^{l\times l}\) and \(G_2 {\mathop {=}\limits ^{d}}\mathcal G^{(k-l)\times l}\) are independent, and now Theorem 10 follows because \(|(M_{k,l}+\lambda G_{k,l})^+|= |W_{k,l}^+|\) and by virtue of claims (iii) and (iv) of Theorem 12.
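The block argument of this proof can be replayed numerically. The following sketch assumes the spectral norm, a rank-deficient test input, and illustrative values of k, l, and \(\lambda \); it checks that rotating by U and V preserves the norm of the pseudo inverse and that the stacked matrix is at least as well conditioned as either of its two blocks.

```python
import numpy as np

rng = np.random.default_rng(5)
k, l, lam = 12, 4, 0.5                 # satisfies k - l >= l + 2 >= 4

M = rng.standard_normal((k, 2)) @ rng.standard_normal((2, l))   # rank-deficient input
G = rng.standard_normal((k, l))

U, d, Vt = np.linalg.svd(M, full_matrices=True)  # U is k x k, Vt is l x l
W = U.T @ (M + lam * G) @ Vt.T
top, bottom = W[:l, :], W[l:, :]                 # D + lam * G_1 and lam * G_2

def pinv_norm(X):
    """||X^+|| = 1 / sigma_min(X), valid when X has full column rank."""
    return 1.0 / np.linalg.svd(X, compute_uv=False)[-1]

print(pinv_norm(M + lam * G), pinv_norm(W))      # equal up to rounding
print(pinv_norm(W) <= min(pinv_norm(top), pinv_norm(bottom)))
```

The second check holds because \(W^*W\) is the sum of the Gram matrices of the two blocks, so its smallest eigenvalue is at least that of either block.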

A.4 Norms of a Gaussian Matrix and Its Pseudo Inverse

\(\varGamma (x)= \int _0^{\infty }\exp (-t)t^{x-1}dt\) denotes the Gamma function.

Theorem 11

[Norms of a Gaussian matrix. See [DS01, Theorem II.7] and our Definition 1].

(i) Probability\(\{\nu _{\mathrm{sp},m,n}>t+\sqrt{m}+\sqrt{n}\}\le \exp (-t^2/2)\) for \(t\ge 0\), \(\mathbb E(\nu _{\mathrm{sp},m,n})\le \sqrt{m}+\sqrt{n}\).

(ii) \(\nu _{F,m,n}\) has the \(\chi \)-distribution with mn degrees of freedom, with \(\mathbb E(\nu _{F,m,n}^2)=mn\) and probability density \(\frac{2x^{mn-1}\mathrm{exp}(-x^2/2)}{2^{mn/2}\varGamma (mn/2)}.\)
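A small Monte Carlo experiment illustrates Theorem 11. The sketch below assumes that \(\nu _{\mathrm{sp},m,n}\) and \(\nu _{F,m,n}\) are the spectral and Frobenius norms of an \(m\times n\) Gaussian matrix, and it uses the standard fact that the squared Frobenius norm is a sum of mn squared standard normal variables, so its mean is mn. Sizes and the number of trials are illustrative.

```python
import numpy as np

rng = np.random.default_rng(6)
m, n, trials = 60, 40, 200

spec = np.empty(trials)
frob_sq = np.empty(trials)
for t in range(trials):
    G = rng.standard_normal((m, n))
    spec[t] = np.linalg.norm(G, 2)               # spectral norm
    frob_sq[t] = np.linalg.norm(G, 'fro') ** 2   # squared Frobenius norm

print(spec.mean(), np.sqrt(m) + np.sqrt(n))      # sample mean vs the bound of claim (i)
print(frob_sq.mean(), m * n)                     # sample mean vs mn
```

The sample mean of the spectral norm stays below \(\sqrt{m}+\sqrt{n}\), and the sample mean of the squared Frobenius norm concentrates near mn.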

Theorem 12

[Norms of the pseudo inverse of a Gaussian matrix (see Definition 1)].

(i) Probability \(\{\nu _{\mathrm{sp},m,n}^+\ge m/x^2\}<\frac{x^{m-n+1}}{\varGamma (m-n+2)}\) for \(m\ge n\ge 2\) and all positive x,

(ii) Probability \(\{\nu _{F,m,n}^+\ge t\sqrt{\frac{3n}{m-n+1}}\}\le t^{n-m}\) and Probability \(\{\nu _{\mathrm{sp},m,n}^+\ge t\frac{e\sqrt{m}}{m-n+1}\}\le t^{n-m}\) for all \(t\ge 1\) provided that \(m\ge 4\),

(iii) \(\mathbb E((\nu ^+_{F,m,n})^2)=\frac{n}{m-n-1}\) and \(\mathbb E(\nu ^+_{\mathrm{sp},m,n})\le \frac{e\sqrt{m}}{m-n}\) provided that \(m\ge n+2\ge 4\),

(iv) Probability \(\{\nu _{\mathrm{sp},n,n}^+\ge x\}\le \frac{2.35\sqrt{n}}{x}\) for \(n\ge 2\) and all positive x, and furthermore \(||(M_{n,n}+G_{n,n})^+||\) satisfies the same probabilistic bound for any \(n\times n\) matrix \(M_{n,n}\) and an \(n\times n\) Gaussian matrix \(G_{n,n}\).

Proof

See [CD05, Proof of Lemma 4.1] for claim (i), [HMT11, Proposition 10.4 and equations (10.3) and (10.4)] for claims (ii) and (iii), and [SST06, Theorem 3.3] for claim (iv).
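Claim (iii) of Theorem 12 lends itself to a Monte Carlo check. The sketch below assumes that \(\nu ^+_{\mathrm{sp},m,n}\) and \(\nu ^+_{F,m,n}\) are the spectral and Frobenius norms of the pseudo inverse of an \(m\times n\) Gaussian matrix; both are computed from the singular values. Sizes and the number of trials are illustrative.

```python
import numpy as np

rng = np.random.default_rng(7)
m, n, trials = 60, 40, 400             # satisfies m >= n + 2 >= 4

spec = np.empty(trials)
frob_sq = np.empty(trials)
for t in range(trials):
    s = np.linalg.svd(rng.standard_normal((m, n)), compute_uv=False)
    spec[t] = 1.0 / s[-1]              # ||G^+|| = 1 / sigma_min(G)
    frob_sq[t] = np.sum(1.0 / s**2)    # ||G^+||_F^2 = sum of sigma_j(G)^(-2)

print(frob_sq.mean(), n / (m - n - 1))               # sample mean vs n/(m-n-1)
print(spec.mean(), np.e * np.sqrt(m) / (m - n))      # sample mean vs e*sqrt(m)/(m-n)
```

Repeating the experiment with a smaller gap m - n shows how quickly both quantities grow as the matrix approaches a square shape.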

Theorem 12 implies reasonable probabilistic upper bounds on the norm \(\nu _{m,n}^+\) even where the integer \(|m-n|\) is close to 0; whp the upper bounds of Theorem 12 on the norm \(\nu ^+_{m,n}\) decrease very fast as the difference \(|m-n|\) grows from 1.

B Small Families of Hard Inputs for Sublinear Cost LRA

Any sublinear cost LRA algorithm fails on the following small families of LRA inputs.

Example 1

Let \(\varDelta _{i,j}\) denote an \(m\times n\) matrix of rank 1 filled with 0s except for its (i, j)th entry filled with 1. The mn such matrices \(\{\varDelta _{i,j}\}_{i,j=1}^{m,n}\) form a family of \(\delta \)-matrices. We also include the \(m\times n\) null matrix \(O_{m,n}\) filled with 0s into this family. Now any fixed sublinear cost algorithm does not access the (i, j)th entry of its input matrices for some pair of i and j. Therefore it outputs the same approximation of the matrices \(\varDelta _{i,j}\) and \(O_{m,n}\), with an undetected error of at least 1/2 for at least one of them. We arrive at the same conclusion by applying the same argument to the set of \(mn+1\) small-norm perturbations of the matrices of the above family and to the \(mn+1\) sums of the latter matrices with any fixed \(m\times n\) matrix of low rank. Finally, the same argument shows that a posteriori estimation of the output errors of an LRA algorithm applied to the same input families cannot run at sublinear cost.
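The argument of Example 1 can be made concrete with a toy algorithm. The sketch below is an assumption made for illustration: `sampled_lra` is a hypothetical stand-in for a fixed sublinear cost algorithm that reads only a fixed set of rows and columns and returns a cross approximation built from them. It is not an algorithm from the paper.

```python
import numpy as np

def sampled_lra(M, rows, cols):
    """Toy LRA that reads only the given rows and columns of M.

    Returns the cross approximation C * pinv(W) * R built from those entries.
    """
    C, R = M[:, cols], M[rows, :]
    W = M[np.ix_(rows, cols)]
    return C @ np.linalg.pinv(W) @ R

m, n = 100, 80
rows, cols = np.arange(5), np.arange(5)      # fixed access pattern: 5 rows, 5 columns

i, j = 50, 60                                # an entry that the algorithm never reads
Delta = np.zeros((m, n))
Delta[i, j] = 1.0
O = np.zeros((m, n))

out_delta = sampled_lra(Delta, rows, cols)
out_zero = sampled_lra(O, rows, cols)

# Identical outputs for two different inputs, so one of them is approximated badly.
print(np.array_equal(out_delta, out_zero))
err = max(np.linalg.norm(Delta - out_delta, 2), np.linalg.norm(O - out_zero, 2))
print(err)
```

Since the two inputs differ by a matrix of spectral norm 1 and the outputs coincide, the triangle inequality forces an error of at least 1/2 on one of the two inputs, whatever the common output is.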

The example actually covers randomized LRA algorithms as well. Indeed suppose that with a positive constant probability an LRA algorithm does not access K entries of an input matrix. Apply this algorithm to two matrices of low rank whose difference at all these K entries is equal to a large constant C. Then, clearly, with a positive constant probability the algorithm has errors at least C/2 at at least K/2 of these entries. The paper [LPa] shows, however, that accurate LRA of a matrix that admits sufficiently close LRA can be computed at sublinear cost in two successive Cross-Approximation (C-A) iterations (cf. [GOSTZ10]) provided that we avoid choosing a degenerate initial submatrix, which is precisely the problem with the matrix families of Example 1. Thus we readily compute close LRA if we recursively perform C-A iterations and avoid degeneracy at some C-A step.
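The contrast with random inputs can be illustrated by a short experiment. The sketch below is not the C-A algorithm of [LPa] or [GOSTZ10]; it is a simplified assumption-laden illustration in which a single cross approximation is built from a fixed \(r\times r\) leading block of a two-sided factor-Gaussian matrix with the identity as the middle factor. For such an input the block is nonsingular whp, so no degeneracy arises.

```python
import numpy as np

rng = np.random.default_rng(8)
m, n, r = 200, 150, 5
M = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))   # rank r whp

rows, cols = np.arange(r), np.arange(r)      # fixed r x r leading block
W = M[np.ix_(rows, cols)]                    # nonsingular whp for this input class
approx = M[:, cols] @ np.linalg.solve(W, M[rows, :])

rel_err = np.linalg.norm(M - approx, 2) / np.linalg.norm(M, 2)
entries_read = m * r + r * n - r * r         # entries of r columns and r rows
print(rel_err, entries_read, m * n)
```

Only the entries of r rows and r columns are read, far fewer than mn, and the relative error is at the level of rounding. On the matrices of Example 1 the same fixed block is zero, which is the degenerate case.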

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Pan, V.Y., Luan, Q., Svadlenka, J., Zhao, L. (2020). Sublinear Cost Low Rank Approximation via Subspace Sampling. In: Slamanig, D., Tsigaridas, E., Zafeirakopoulos, Z. (eds) Mathematical Aspects of Computer and Information Sciences. MACIS 2019. Lecture Notes in Computer Science, vol 11989. Springer, Cham. https://doi.org/10.1007/978-3-030-43120-4_9

DOI: https://doi.org/10.1007/978-3-030-43120-4_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-43119-8

Online ISBN: 978-3-030-43120-4

eBook Packages: Computer Science (R0)