Abstract

Vision-based semantic segmentation and obstacle detection are important perception tasks for autonomous driving. Vision-based semantic segmentation and obstacle detection are performed using separate frameworks resulting in increased computational complexity. Vision-based perception using deep learning reports state-of-the-art accuracy, but the performance is susceptible to variations in the environment. In this paper, we propose a radar and vision-based deep learning perception framework termed as the SO-Net to address the limitations of vision-based perception. The SO-Net also integrates the semantic segmentation and object detection within a single framework. The proposed SO-Net contains two input branches and two output branches. The SO-Net input branches correspond to vision and radar feature extraction branches. The output branches correspond to object detection and semantic segmentation branches. The performance of the proposed framework is validated on the Nuscenes public dataset. The results show that the SO-Net improves the accuracy of the vision-only-based perception tasks. The SO-Net also reports reduced computational complexity compared to separate semantic segmentation and object detection frameworks.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Vehicle detection and free space semantic segmentation are important perception tasks that has been researched extensively [8, 9]. Generally these tasks are independently explored and modeled using the monocular camera. By independently modeling these tasks, the resulting computational complexity is high. Additionally, the camera-based perception frameworks are affected by challenging environmental conditions.

In this paper, we propose to address both these issues using the SO-Net. Firstly, we address the environmental challenges by performing a deep fusion of radar and vision features. Secondly, for the high computational complexity, we formulate a joint multi-task deep learning framework which simultaneously performs semantic segmentation and object detection.

The main motivation for formulating a joint multi-task deep learning framework is to reduce the computational complexity, while achieving real-time performance. We propose to achieve computationally complexity by sharing the features extracted by deep learning layers for multiple tasks.

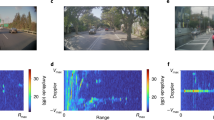

In sensor fusion methodologies, sensors with complementary features are fused together to enhance robustness. In case of radar and vision sensors, the features are complementary. The vision features are descriptive and provide delineation of objects, but are noisy in adverse conditions. Radar features are not affected by adverse conditions caused by illumination variation, rain, snow and fog [11, 12], but are sparse and do not provide delineation of objects. By fusing the radar and vision sensors, we can improve the robustness of perception. Examples of radar features in challenging scenes are shown in Fig. 1.

In this research, we propose the SO-Net, which is an extension of RV-Net [18]. The SO-Net is a perception network containing two feature extraction branches and two output branches. The two feature extraction branches contain separate branches for the camera-based images and the radar-based features. The output branches correspond to vehicle detection and free space semantic segmentation branches.

The main contribution of the paper is as follows:

-

A novel deep learning-based joint multi-task framework termed as the SO-Net using radar and vision.

The SO-Net is validated using the Nuscenes public dataset [2]. The experimental results show that the proposed framework effectively fuses the camera and radar features, while reporting reduced computational complexity for vehicle detection and free space semantic segmentation.

The remainder of the paper is structured as follows. The literature is reviewed in Sect. 2 and the SO-Net is presented in Sect. 3. The experimental results are presented in Sect. 4. Finally, the paper is concluded in Sect. 5.

2 Literature Review

To our understanding, no one has explored the possibility to use radar and camera pipeline for multi-task learning. Multi-task learning is the joint learning of multiple perception tasks. The main advantage of joint learning is the reduction of computational complexity. Recently, multi-task learning has received much attention [6, 13].

We perform radar-vision fusion within a multitask framework. Generally, radar-vision fusion for perception is performed as early-stage fusion [1, 4, 17], late-stage fusion methods [7, 19, 20] and feature-level fusion [3, 18]. In early-stage fusion, the radar features identify candidate regions for vision-based perception tasks [1, 5, 10, 14, 17]. In late stage fusion, independent vision and radar pipelines are utilized for the perception tasks. The results obtained by the independent pipelines are fused in the final step [7, 20].

Compared to the above two methods of fusion, the feature-level fusion is more suited for deep learning-based multi-task learning [3]. In feature-level fusion a single pipeline is adopted for perception. Recently, John et al. [18] proposed the RVNet to fuse radar and vision fusion for obstacle detection using feature level vision. The authors show that the feature-level fusion framework reports state-of-the-art performance with real-time computational complexity.

In this paper, we extend the RVNet to a multi-task learning framework, where the radar and vision features extracted in the input branches are shared in the output branches. By sharing the features, the proposed SO-Net performs free space segmentation and vehicle detection while reducing the computational complexity.

3 Algorithm

The SO-Net is a deep learning framework which performs sensor fusion of camera and radar features for semantic segmentation and vehicle detection. The SO-Net architecture, based on the RVNet, contains two input branches for feature extraction and output branches for vehicle detection and semantic segmentation.

The semantic segmentation framework can be utilized to detect the vehicles instead of the separate vehicle detection output branch. However, the semantic segmentation framework does not provide the instances of vehicles for tracking. The instances of vehicles are provided by the instance segmentation framework. However, the instance segmentation framework utilizes a bounding box-based obstacle detector in its initial step [16]. Thus in our work, we propose to utilize an obstacle detection branch and semantic segmentation branch. An overview of SO-Net modules are shown in Fig. 2.

3.1 SO-Net Architecture

Feature Extraction Branches. The SO-Net has two input feature extraction branches which extract the features from the front camera image I and the “sparse radar image” S. The “sparse radar image” is a 3-channel image of size \((224 \times 224)\), where each non-zero pixel in the S contains the depth, lateral velocity and longitudinal velocity radar features. The velocity features are compensated by the ego-motion of the vehicle. The two feature extraction branches extract image-specific and radar-specific features, respectively. Each randomly initialised input branch contains multiple encoding convolutional layers and pooling layers. These specific features maps are shared with the two output branches. The detailed architecture of the feature extraction branches are given in Fig. 3.

Vehicle Detection Output Branch. The radar and image feature maps are fused in the vehicle detection output branch. The vehicle detection branch is based on the tiny Yolo3 network. The fusion of the radar and image feature maps in the output branches are performed by concatenation, \(1\times 1\) 2D convolution and up-sampling. The vehicle detection output branch detects vehicles in two sub-branches. In the first sub-branch, small and medium vehicles are detected. In the second sub-branch, big vehicles are detected. For both the sub-branches, the YOLOv3 loss function [15] is used within the binary classification framework.

Free Space Semantic Segmentation Output Branch. The second output branch performs semantic segmentation for estimating the free space for the vehicle. The free space represents the drivable area on the road surface. In this work, we define everything other than the free space as the background.

For the semantic segmentation framework, an encoder-decoder architecture is utilized. The radar and vision features obtained in the encoding layers are shared and fused in the output branch using the skip connections. In the skip connections, the vision and radar features at the different encoding levels are transferred individually to the corresponding semantic segmentation output branch. The feature maps are effectively fused using concatenation layer. Details of the encoder-decoder architecture are found in Fig. 3. We use a sigmoid activation function at the output layer for the binary semantic segmentation.

The detailed architecture of the proposed SO-Net. Conv2D(m,n) represents 2D convolution with m filters with size \(n \times n\) and stride 1. Maxpooling 2D is performed with size (2,2). The zero-padding 2D pads as following (top, bottom), (left, right). The Yolo output conv (Conv2D(30,1)) and the output reshape are based on the YOLOv3 framework.

Training. The SO-Net is trained with the image, radar points and ground truth annotations from the Nuscenes dataset. For the semantic segmentation, we manually annotate the free space for the images in the Nuscenes dataset as the dataset doesn’t contain semantic information. The SO-Net is trained with an Adam optimizer with learning rate of 0.001.

3.2 SO-Net Variants

We propose different variations of the proposed SO-Net to understand how each input branch contributes to the learning.

Fusion for Vehicle Detection. The camera and radar features are utilized for the vehicle detection task alone, instead of the joint multi-task learning (Fig. 4(a)). The architecture is similar to the SO-Net in Fig. 3, with the omission of the segmentation branch.

Fusion for Semantic Segmentation. The camera and radar features are utilized for the semantic segmentation task alone, instead of the joint multi-task learning (Fig. 4(b)). The architecture is similar to the SO-Net in Fig. 3, with the omission of the vehicle detection branch.

Camera-Only for Vehicle Detection. The camera features “alone” are utilized for the vehicle detection task, instead of the sensor fusion for the joint multi-task learning (Fig. 4(c)). The architecture is similar to the SO-Net in Fig. 3, with the omission of the radar-input branch and segmentation branch.

Camera-Only for Semantic Segmentation. The camera features “alone” are utilized for the semantic segmentation task, instead of the sensor fusion for the joint multi-task learning (Fig. 4(d)). The architecture is similar to the SO-Net in Fig. 3, with the omission of the radar-input branch and vehicle detection branch.

4 Experimental Section

Dataset: The different algorithms are validated on the Nuscenes dataset with 308 training and 114 testing samples. The training data contain scenes from rainy weather and night-time. Example scenes from the dataset are shown in Fig. 1.

Algorithm Parameters: The proposed algorithm and its variants were trained with batch size 8 and epochs 20 using the early stopping strategy. The algorithms were implemented on Nvidia Geforce 1080 Ubuntu 18.04 machine using TensorFlow 2.0. The performance of the networks are reported using accuracy and computational time.

Error Measures: The performance of the vehicle detection for the networks are reported using the Average Precision (AP) with IOU (intersection over threshold) of 0.5. In case of the semantic segmentation, we report the per-pixel classification accuracy for free space segmentation.

Results. The performance of the different algorithms tabulated in Tables 1 and 2 show that the segmentation accuracy of the proposed SO-Net and vehicle detection accuracy of the fused vehicle detection framework are better than the variants. The computational time of all the algorithms are real-time in the order of 10–25 ms.

Discussion. The results tabulated in Tables 1 and 2 show that performance of the SO-Net is similar to the fusion network variants, with marginally inferior vehicle detection accuracy and superior semantic segmentation accuracy. However, the SO-Net reports improved computational complexity. The two fusion network variants, fusion with vehicle detection and fusion with semantic segmentation, report a combined computational time of (vehicle det-15 \(+\) semantic seg-20) 35 ms per frame. The proposed SO-Net reports a reduced computational time of 25 ms with similar performance as shown in Table 3 (Fig. 5).

In the case of comparison with the camera-only network variants, the SO-Net reports better accuracy for both vehicle detection and semantic segmentation tasks, with similar computational complexity. The two camera-only network variants report a combined computational time of (vehicle det-7 \(+\) semantic seg-15) 22 ms per frame, which is similar to the SO-Net as shown in Table 3.

The observed results show the effectiveness of sensor fusion for vehicle detection and semantic segmentation. The SO-Net and fusion network variants both report better accuracy than the camera-only network variants. In case of the computational complexity, we see that the multi-task learning based SO-net reports reduced the computational complexity compared to the individual fusion networks (Fig. 6).

5 Conclusion

A deep sensor fusion and joint learning framework termed as the SO-Net is proposed for the sensor fusion of camera-radar. The SO-Net is a multi-task learning framework, where the vehicle detection and free space segmentation is performed using a single network. The SO-Net contains two independent feature extraction branches, which extract radar and camera specific features. The multi-task learning is performed using two output branches. The proposed network is validated on the Nuscenes dataset and perform comparative analysis with variants. The results show that sensor fusion improves the vehicle detection and semantic segmentation accuracy, while reporting reduced computational time. In our future work, we will consider the fusion of additional sensors.

References

Bombini, L., Cerri, P., Medici, P., Aless, G.: Radar-vision fusion for vehicle detection. In: International Workshop on Intelligent Transportation, pp. 65–70 (2006)

Caesar, H., et al.: nuScenes: a multimodal dataset for autonomous driving. CoRR abs/1903.11027 (2019)

Chadwick, S., Maddern, W., Newman, P.: Distant vehicle detection using radar and vision. CoRR abs/1901.10951 (2019)

Fang, Y., Masaki, I., Horn, B.: Depth-based target segmentation for intelligent vehicles: fusion of radar and binocular stereo. IEEE Trans. Intell. Transp. Syst. 3(3), 196–202 (2002)

Gaisser, F., Jonker, P.P.: Road user detection with convolutional neural networks: an application to the autonomous shuttle WEpod. In: International Conference on Machine Vision Applications (MVA), pp. 101–104 (2017)

Sistu, G., Leang, I., Yogamani, S.: Real-time joint object detection and semantic segmentation network for automated driving (2018)

Garcia, F., Cerri, P., Broggi, A., de la Escalera, A., Armingol, J.M.: Data fusion for overtaking vehicle detection based on radar and optical flow. In: 2012 IEEE Intelligent Vehicles Symposium, pp. 494–499 (2012)

Jazayeri, A., Cai, H., Zheng, J.Y., Tuceryan, M.: Vehicle detection and tracking in car video based on motion model. IEEE Trans. Intell. Transp. Syst. 12(2), 583–595 (2011)

John, V., Karunakaran, N.M., Guo, C., Kidono, K., Mita, S.: Free space, visible and missing lane marker estimation using the PsiNet and extra trees regression. In: 24th International Conference on Pattern Recognition, pp. 189–194 (2018)

Kato, T., Ninomiya, Y., Masaki, I.: An obstacle detection method by fusion of radar and motion stereo. IEEE Trans. Intell. Transp. Syst. 3(3), 182–188 (2002)

Macaveiu, A., Campeanu, A., Nafornita, I.: Kalman-based tracker for multiple radar targets. In: 2014 10th International Conference on Communications (COMM), pp. 1–4 (2014)

Manjunath, A., Liu, Y., Henriques, B., Engstle, A.: Radar based object detection and tracking for autonomous driving. In: 2018 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), pp. 1–4 (2018)

Teichmann, M., Weber, M., Zoellner, M., Cipolla, R., Urtasun, R.: MultiNet: real-time joint semantic reasoning for autonomous driving (2018)

Milch, S., Behrens, M.: Pedestrian detection with radar and computer vision (2001)

Redmon, J., Farhadi, A.: YOLOv3: an incremental improvement (2018). http://arxiv.org/abs/1804.02767

Shao, L., Tian, Y., Bohg, J.: ClusterNet: instance segmentation in RGB-D images. arXiv (2018). https://arxiv.org/abs/1807.08894

Sugimoto, S., Tateda, H., Takahashi, H., Okutomi, M.: Obstacle detection using millimeter-wave radar and its visualization on image sequence. In: 2004 Proceedings of the 17th International Conference on Pattern Recognition. ICPR 2004, vol. 3, pp. 342–345 (2004)

John, V., Mita, S.: RVNet: deep sensor fusion of monocular camera and radar for image-based obstacle detection in challenging environments. In: Lee, C., Su, Z., Sugimoto, A. (eds.) PSIVT 2019. LNCS, vol. 11854, pp. 351–364. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-34879-3_27

Wang, X., Xu, L., Sun, H., Xin, J., Zheng, N.: On-road vehicle detection and tracking using MMW radar and monovision fusion. IEEE Trans. Intell. Transp. Syst. 17(7), 2075–2084 (2016)

Zhong, Z., Liu, S., Mathew, M., Dubey, A.: Camera radar fusion for increased reliability in ADAS applications. Electron. Imaging Auton. Veh. Mach. 1(4), 258–258 (2018)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

John, V., Nithilan, M.K., Mita, S., Tehrani, H., Sudheesh, R.S., Lalu, P.P. (2020). SO-Net: Joint Semantic Segmentation and Obstacle Detection Using Deep Fusion of Monocular Camera and Radar. In: Dabrowski, J., Rahman, A., Paul, M. (eds) Image and Video Technology. PSIVT 2019. Lecture Notes in Computer Science(), vol 11994. Springer, Cham. https://doi.org/10.1007/978-3-030-39770-8_11

Download citation

DOI: https://doi.org/10.1007/978-3-030-39770-8_11

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39769-2

Online ISBN: 978-3-030-39770-8

eBook Packages: Computer ScienceComputer Science (R0)