Abstract

Because convolutional neural networks can extract discriminative features, they are widely used in face recognition and significantly improve the performance in face recognition. In order to improve the accuracy of the face recognition, in addition to improving the structures of convolutional neural networks, many new loss functions have been proposed to enhance the distinguishing ability to extract features, such as SphereFace and ArcFace. Inspired by SphereFace and ArcFace, we propose a new loss function called MaaFace, in which the angular multiplier and the angular addition are introduced into the loss function simultaneously. We give a detailed derivation of MaaFace and conduct extensive experiments on different networks and different data sets. Experiments show that our proposed loss function can achieve an out performance than the latest face recognition loss functions in face recognition accuracy. Finally, we explain why MaaFace can achieve better performance through statistical analysis of the experimental data.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Convolutional neural networks are widely used in computer vision [1] and greatly promote the development of it. As face recognition is a representative classification task in computer vision, convolutional neural networks are widely used in it and improve the face recognition effect. There are four main factors affecting face recognition accuracy, including dataset, neural network architecture, initialization, loss function.

1.1 Dataset

A good dataset should be sufficient, complete and noise-free. A sufficient amount of data for training can reduce overfitting. Completeness requires the dataset covers various situations that may occur in practice, such as changes in illumination, pose, age, etc. Noise-free means that the data is labeled correctly, available and in the same form. MS-Celeb-1M [2], VGGFace2 [3], LFW [4], CFP [5], AgeDB [6] and MegaFace [7] are some datasets commonly used.

1.2 Neural Network Architecture

The neural network architecture not only affects the face recognition accuracy but also affects the time required for training and actual use. In general, the deeper the network, the better the effect of face recognition. However, it will need a larger training dataset to avoid overfitting, longer time to train and take more time in actual use. VGG network [8], Google Inception V1 network [9], ResNet [10] and Inception-ResNet [11] are some basic network structures commonly used.

1.3 Initialization

The method of neural network parameter initialization has a direct impact on the final recognition effect. Good parameter initialization can make the neural network more convergent and optimize the performance of the neural network. Commonly used initialization methods are Msra initialization [12], Xavier initialization [13], Random initialization [14] and so on.

1.4 Loss Function

The loss function can be roughly divided into two categories. One is the Euclidean margin-based loss measured by Euclidean distance, another is the Angular and Co-sine margin-based loss measured by angular distance and the cosine distance.

In [15], the softmax loss was introduced into the neural network of face recogni-tion. A lot of loss functions are variants of it. Based on softmax loss, Center loss [16] adds constraints on the deviation between features and their corresponding intra-class center, so that the feature space of the same person is compressed. Considering the distance between the most different individuals in intra-class and the distance between the nearest inter-class centers, Range loss [17] reduces the feature distance of the same person and enlarges the distance between different people. In addition to adding the constraints of intra-class and inter-class distances, Marginal loss [18] sets an empirical minimum threshold for intra-class distance and inter-class distance.

Different from the Euclidean margin-based loss function, SphereFace [19] sets the bias of the fully connected layer of the loss function to 0 and takes L2 normalization on the weights of the fully connected layer. In addition, SphereFace introduces an angular multiplier, which makes the classification boundary \( \cos (u\theta ) \). CosFace [20] normalizes the features and turns the training classification boundaries into \( \cos \theta - v \), converting the face classification to a measure based on the cosine distance, further enhancing the effect of face recognition. ArcFace [21] turns the training classification boundaries into \( \cos (\theta + v) \).

Although ArcFace is currently the best loss function in the field of face recognition, we found that when using ArcFace as the loss function, all the class feature centers of training dataset are approximately evenly distributed in the feature space, which is an over-fitting of the training dataset and limits the effect of face recognition.

SphereFace is mainly to compress the feature space of the intra-class, as ArcFace aims to make the feature distance of inter-class to be larger. Inspired by SphereFace and ArcFace, combine the advantages of them, we propose a new loss function called MaaFace, whose classification boundary is \( \cos (u\theta + v) \). Our main contributions are as follows:

-

(1)

Compared with ArcFace, besides the angular addition \( v \), we introduce the angular multiplier \( u \) into the loss function simultaneously. This can alleviate the over-fitting problem caused by the angular addition.

-

(2)

MaaFace advances the state-of-the-art performance over most of the benchmarks on popular face databases including LFW, CFP, AgeDB.

An overview of the rest of the paper is as follows: The derivation process of the MaaFace are introduced in Sect. 2. The experimental results are shown in Sect. 3. Finally, the conclusion is indicated in Sect. 4.

2 Proposed Approach

In this chapter, we introduce several variants of the softmax loss and present how to derive the expression of MaaFace in detail.

2.1 Softmax Loss

As one of the basic classification loss functions of the convolutional neural network, the softmax loss is as follows:

where \( m \) is the batch size. \( n \) is the number of face categories of the training dataset. \( x_{i} \in {\mathbf{R}}^{d} \) is the feature extracted from the \( i \)-th image, where \( d \) represents the dimension of the features. \( y_{i} \) is the real category of the \( i \)-th image. \( W \in {\mathbf{R}}^{d \times n} \) and \( b \in {\mathbf{R}}^{n} \) respectively refer to the weights and bias of the last fully connected layer of the convolutional neural network.

The classification boundary of softmax loss is \( (W_{1} - W_{2} )x + b_{1} - b_{2} = 0 \), as shown in Fig. 1. It does not explicitly write the intra-class distance and the inter-class distance as penalty terms into the formula. Although softmax loss is widely used in convolutional neural networks for face recognition, the performance is not satisfied.

2.2 SphereFace

Assume the angle between the vectors \( W_{j}^{T} \) and \( x_{i} \) is \( \theta_{j} \), let the absolute value of \( b_{j} \) be 0, we can get \( W_{j}^{T} x_{i} + b_{j} = | |W_{j} | |\,\, | |x_{i} | |\cos \theta_{j} \). It is noted that normalizing the weights of the fully connected layer can accelerate the convergence speed of the training [29]. Fixing \( ||W_{j} || = 1 \) by \( L2 \) normalization, Eq. 1 can be rewritten as:

An Angular multiplier \( u \) is introduced by SphereFace [19]:

where \( \theta_{{y_{i} }} \in [0,\pi /u] \).

The classification boundary of SphereFace is \( ||x||(\cos (u\theta_{1} ) - \cos \theta_{2} ) = 0 \). Making that the angle between the vectors of two inter-class feature centers is \( \theta \), we can get \( \theta_{1} = \frac{1}{u + 1}\theta \), as shown as Fig. 2(a). The introduction of the angular multiplier \( u \) in SphereFace has a very obvious geometric interpretation, which compresses the feature space of each class and improves the accuracy of face recognition.

To eliminate the constraint \( \theta_{{y_{i} }} \in [0,{\pi \mathord{\left/ {\vphantom {\pi u}} \right. \kern-0pt} u}] \), SphereFace replaces \( \cos (u\theta_{{y_{i} }} ) \) with \( \varphi (\theta_{{{\text{y}}_{i} }} ) \):

where \( \varphi (\theta_{{{\text{y}}_{i} }} ) = ( - 1)^{k} \cos (u\theta_{{{\text{y}}_{i} }} ) - 2k \), \( \theta_{{{\text{y}}_{i} }} \in [\frac{k\pi }{u},\frac{(k + 1)\pi }{u}] \), \( k \in [0,u - 1] \) and \( k \) is an integer. The parameter \( u \) is an integer greater than 0, controlling the degree of compression of each intra-class angle in the feature space. A dynamic super parameter \( \lambda \) is introduced to ensure training convergence, \( \varphi (\theta_{{{\text{y}}_{i} }} ) \) is rewritten as follows:

where \( \lambda \) is set to 1000 at the beginning and gradually reduced to 5 when training, so that the angular range of each intra-class is gradually compressed.

2.3 ArcFace

In [22], it is proved that the normalization of the output features can accelerate the training convergence. Fixing \( ||x_{i} || = 1 \) by \( L2 \) normalization and scales it to \( s \), Eq. 2 can be written as:

ArcFace improved the loss function by introducing an angular addition \( v \) [21]:

where \( \theta_{{{\text{y}}_{i} }} \in [0,\pi - v] \).

The classification boundary is \( ||x||(\cos (\theta_{1} + v) - \cos \theta_{2} ) = 0 \). Making the angular distance between two classes centers is \( \theta \), the maximum distance between the individual and its corresponding class center is \( \theta_{1} = \frac{\theta - v}{2} \).

The angular addition \( v \) of ArcFace specifies the minimum distance between the most similar individuals between inter-class, as SphereFace doesn’t explicitly write the minimum inter-class distance as penalty terms into the formula. The distance between the nearest inter-class features of SphereFace is smaller than ArcFace’s. Therefore, SphereFace is easier to confuse individuals between inter-classes and ArcFace gets a better effect, as shown in Fig. 2.

2.4 MaaFace

SphereFace loss function compresses the angular space of intra-class, making the intra-class features more compact. However, SphereFace does not optimize the distance of inter-class, which tends to cause the distance between the class centers to be too small and limits the effect of face recognition. ArcFace is the opposite of SphereFace, which is just mainly to optimize the distance between classes. When the distance between the class centers is close, ArcFace has a better control effect on the distance of the intra-class. However, as the distance between the class centers increases, this control effect becomes weaker and the distribution of features within the class becomes much looser, as shown in Fig. 3.

Increasing the angular addition \( v \) can compress the feature space of the intra-class. However, in the initial stage of increasing \( v \), the distance between inter-class centers tends to increase while the feature space of the intra-class remains almost unchanged. This will make the inter-class centers of the training dataset tend to be evenly distributed in the feature space, as shown in Fig. 4. At this time, the minimum distance between a class center and its nearest inter-class center is the same as others’, which does not meet the actual situation that the training data inter-class centers are randomly distributed in the feature space. This is overfitting of the training dataset. Then as \( v \) continues to increase, the feature space of the intra-class begins to be compressed while the inter-class centers remain evenly distributed.

Features distribution of Fashion-MNIST [25] using ArcFace as the loss function (\( v = 0. 5 \))

Inspired by SphereFace and ArcFace, we propose a new loss function called MaaFace by combing with the advantages of both of them. We introduce the angular multiplier \( u \) and the angular addition \( v \) into the loss function simultaneously. The loss function is:

Where \( u \) is an integer larger than 1 and \( v \in [0,\pi ] \).

The classification boundary becomes \( ||x||(\cos (u\theta_{1} + v) - \cos \theta_{2} ) = 0 \). Let the angular distance between the inter-class centers is \( \theta \), so that \( \theta_{1} = \frac{\theta - v}{u + 1} \). As shown in Fig. 5, MaaFace keeps a certain minimum angular distance between inter-class by introducing the angular addition \( v \). By introducing the angular multiplier \( u \), MaaFace compresses the feature space of the intra-class. Let \( v \) be a value that is not very large. When the distance between the inter-class centers increases, the angular multiplier can still keep the feature space of the intra-class compact. On the one hand, the angular multiplier can compress the feature space of the intra-class. On the other hand, the angular multiplier maintains the flexible distribution of the inter-class centers and reduces the over-fitting of the training dataset.

To eliminate the constraint \( \theta_{{{\text{y}}_{i} }} \in [0,\frac{\pi - v}{u}] \), we further replace \( \varphi (\theta_{{{\text{y}}_{i} }} ) = \cos (u\theta_{{y_{i} }} + v) \) with \( \varphi (\theta_{{{\text{y}}_{i} }} ) = ( - 1)^{k} \cos (u\theta_{{y_{i} }} + v) - 2k \), where \( u\theta_{{y_{i} }} + v \in [k\pi ,(k + 1)\pi ] \), \( k \in [0,u] \) and \( k \) is an integer, \( \theta_{{{\text{y}}_{i} }} \in [0,\pi ] \).

Considering the training convergence problem, referring to the training process of SphereFace, we introduce the hyper parameter \( \alpha \):

It is noted that \( \alpha \) gradually increased from 0 to 0.2 during training.

3 Experiments

In this section, we will describe our experiment from three aspects, including datasets, network structure, experimental results.

3.1 Dataset

3.1.1 Training Dataset

MS-Celeb-1M. MS-Celeb-1M contains approximately 10M images. It contains 100k celebrities and each person has 100 photos on average. In order to reduce noise and get a higher quality data set, the features of all images are firstly extracted in [23]. After that, the portion, whose distance between themselves and their intra-class feature center is greater than a given threshold, is removed. Finally, the image with the distance near the threshold is checked manually. The refined dataset is published available within a binary file by [21]. We use MS-Celeb-1M as the training dataset. The refinement dataset contains 85,164 people with a total of 3.8M images.

3.1.2 Testing Dataset

LFW: LFW is a dataset in the unconstrained natural scene where the photos vary greatly in orientation, expression, and lighting environment [4]. It contains 13,233 photos of 5,749 people. The database randomly selected 6000 pairs of faces to form face recognition image pairs, in which 3000 pairs of photos belong to the same person, and 3000 pairs of photos belong to different people.

CFP: The CFP is a 500-person dataset with 10 front images and 4 contour images per person [5]. CFP has two sub-dataset, namely frontal-frontal (FF) and frontal-profile (FP). The FF contains 3500 pairs of random image pairs belonging to the same person and 3500 pairs of random image pairs belonging to different people, in which the images of each pair are frontal images. The FP contains 3500 pairs of random image pairs belonging to the same person and 3500 pairs of random image pairs belonging to different people, in which each pair of images contains a front image and a profile image. We only use the most challenging subset CFP-FP as the vilification dataset.

AgeDB: The AgeDB dataset contains 12,240 images of 440 people, whose images vary greatly in poses, lighting, and age [6]. This dataset contains four sub-datasets for different age spans, namely 5 years, 10 years, 20 years, 30 years. Each sub-dataset contains 3,000 image pairs of the same person and 3000 image pairs of different people. Here, we only test on AgeDB-30, which is the most difficult one.

3.2 Network Structure

All models, implemented by MxNet proposed by [24] in this paper, are trained on four NVIDIA Titan X Pascal (12 GB) GPUs. Momentum is set at 0.9 while weight decay is set at \( 5e{ - 4} \). The batch size is 512. The learning rate is started at 0.1. As for ArcFace, following [21], the learning rate is divided by 10 at the 100k, 140k, 160k, 180k iterations. Total iteration step is set as 200k. As for MaaFace, we first train with hyper parameter \( \alpha = 0. 0 \) and the learning rate is divided by 10 at the 50k, 80k, 100k, 120k iterations. Then at the 130k iterations, the learning rate is reset as 0.1. At each iteration later, \( \alpha \) adds \( 5e{ - 6} \) until \( \alpha \) equals 0.2. The learning rate is divided by 10 at the 160k, 170k, 175k, 178k iterations. Total iteration step is set as 185k.

We use SE-LResNetE-IR as the base network and just replace loss function with ours. The dimension of the features extracted by SE-LResNetE-IR is 512. Before training, we use MTCNN [25] to perform face detection and alignment on images. The faces will then be cropped and resized to \( 1 1 2\times 1 1 2 \) pixels. Then, we normalize each pixel in RGB images by subtracting 127.5 and dividing it by 128.

In order to illustrate the universality of the loss function, we performed experiments on the models of 34, 50, and 100 layers respectively.

3.3 Analysis of Experimental Results

We firstly train on the 50 layers models with different angular multiplier \( u \) and different angular addition \( v \). The result is shown in Table 1. When \( u = 2 \) and \( v = 0. 3 \), we get the best performance in combination. So, we fix the angular multiplier \( u \) as 2 and the angular addition \( v \) as 0.3. In [21], ArcFace gets the best performance when the angular addition \( v \) as 0.5. We train the models with 34 layers, 50 layers, 100 layers respectively, and get the results as Table 2.

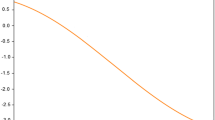

Although the result on LFW dataset of MaaFace-100 is a little smaller than that of ArcFace-100, the other results of MaaFace are better than ArcFace’s. It proves that introduced the angular multiplier \( u \) and the angular addition \( v \) into the loss function simultaneously can improve the performance of the face recognition. In order to explain why this can get a better result, we have statistics on the distribution of the shortest distance between feature centers of inter-class. As shown in Fig. 6, compared to ArcFace, the distribution of MaaFace is more random, which reduces over-fitting to some extent. Therefore, MaaFace can get better performance.

4 Conclusion

In this paper, we propose a new loss function, called MaaFace. Through extensive experiments, we can prove that the loss function can effectively improve the face recognition effect compared with the most advanced loss functions on the same convolutional neural network. Besides, we explain why MaaFace can get better performance through theoretical derivation and mathematical statistics.

References

Voulodimos, A., Doulamis, N., Doulamis, A., Protopapadakis, E.: Deep learning for computer vision: a brief review. In: Computational Intelligence and Neuroscience (2018)

Guo, Y., Zhang, L., Hu, Y., He, X., Gao, J.: MS-Celeb-1M: a dataset and benchmark for large-scale face recognition. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9907, pp. 87–102. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46487-9_6

Cao, Q., Shen, L., Xie, W., Parkhi, O.M., Zisserman, A.: VGGFace2: a dataset for recognising faces across pose and age. In: 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), pp. 67–74. IEEE (2018)

Huang, G.B., Mattar, M., Berg, T., Learned-Miller, E.: Labeled faces in the wild: a database for studying face recognition in unconstrained environments. In: Workshop on Faces in ‘Real-Life’ Images: Detection, Alignment, and Recognition (2008)

Sengupta, S., Chen, J.-C., Castillo, C., Patel, V.M., Chellappa, R., Jacobs, D.W.: Frontal to profile face verification in the wild. In: IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 1–9. IEEE (2016)

Moschoglou, S., Papaioannou, A., Sagonas, C., Deng, J., Kotsia, I., Zafeiriou, S.: AgeDB: the first manually collected, in-the-wild age database. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 51–59 (2017)

Kemelmacher-Shlizerman, I., Seitz, S.M., Miller, D., Brossard, E.: The MegaFace benchmark: 1 million faces for recognition at scale. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4873–4882 (2016)

Parkhi, O.M., Vedaldi, A., Zisserman, A.: Deep face recognition. In: BMVC, vol. 3, p. 6 (2015)

Szegedy, C., et al.: Going deeper with convolutions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–9 (2015)

He, K., Zhang, X., Ren, S., Sun, J.: Identity mappings in deep residual networks. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9908, pp. 630–645. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46493-0_38

Szegedy, C., Ioffe, S., Vanhoucke, V., Alemi, A.A.: Inception-v4, inception-ResNet and the impact of residual connections on learning. In: Thirty-First AAAI Conference on Artificial Intelligence (2017)

He, K., Zhang, X., Ren, S., Sun, J.: Delving deep into rectifiers: surpassing human-level performance on imagenet classification. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1026–1034 (2015)

Glorot, X., Bengio, Y.: Understanding the difficulty of training deep feedforward neural networks. In: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, pp. 249–256 (2010)

Thimm, G., Fiesler, E.: Neural network initialization. In: Mira, J., Sandoval, F. (eds.) IWANN 1995. LNCS, vol. 930, pp. 535–542. Springer, Heidelberg (1995). https://doi.org/10.1007/3-540-59497-3_220

Taigman, Y., Yang, M., Ranzato, M.A., Wolf, L.: DeepFace: closing the gap to human-level performance in face verification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1701–1708 (2014)

Wen, Y., Zhang, K., Li, Z., Qiao, Y.: A discriminative feature learning approach for deep face recognition. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9911, pp. 499–515. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46478-7_31

Zhang, X., Fang, Z., Wen, Y., Li, Z., Qiao, Y.: Range loss for deep face recognition with long-tailed training data. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 5409–5418 (2017)

Deng, J., Zhou, Y., Zafeiriou, S.: Marginal loss for deep face recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 60–68 (2017)

Liu, W., Wen, Y., Yu, Z., Li, M., Raj, B., Song, L.: SphereFace: deep hypersphere embedding for face recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 212–220 (2017)

Wang, H., et al.: CosFace: large margin cosine loss for deep face recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5265–5274 (2018)

Deng, J., Guo, J., Xue, N., Zafeiriou, S.: ArcFace: additive angular margin loss for deep face recognition (2018)

Wang, F., Xiang, X., Cheng, J., Yuille, A.L.: NormFace: L2 hypersphere embedding for face verification. In: Proceedings of the 25th ACM International Conference on Multimedia, pp. 1041–1049. ACM (2017)

Xiao, H., Rasul, K., Vollgraf, R: Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms (2017)

Chen, T., et al.: MXNet: a flexible and efficient machine learning library for heterogeneous distributed systems (2015)

Zhang, K., Zhang, Z., Li, Z., Qiao, Y.: Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Sig. Process. Lett. 23(10), 1499–1503 (2016)

Acknowledgements

The project sponsored by the National Key Research and Development Program (No. 2016YFB0502002).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, W., Jiao, J., Mo, Y., Jiao, J., Deng, Z. (2019). MaaFace: Multiplicative and Additive Angular Margin Loss for Deep Face Recognition. In: Zhao, Y., Barnes, N., Chen, B., Westermann, R., Kong, X., Lin, C. (eds) Image and Graphics. ICIG 2019. Lecture Notes in Computer Science(), vol 11903. Springer, Cham. https://doi.org/10.1007/978-3-030-34113-8_53

Download citation

DOI: https://doi.org/10.1007/978-3-030-34113-8_53

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34112-1

Online ISBN: 978-3-030-34113-8

eBook Packages: Computer ScienceComputer Science (R0)