Abstract

The emergence of new biotechnologies provides great promise for biodefense, especially for key objectives of biosurveillance and early warning, microbial forensics, risk and threat assessment, horizon scanning in biotechnology, and medical countermeasure (MCM) development, scale-up, and delivery. Understanding and leveraging the newly developed capabilities afforded by emerging biotechnologies require knowledge about cutting-edge research and its real or proposed application(s), the process through which biotechnologies advance, and the educational and research infrastructure that promotes multi-disciplinary science. Innovation in research and technology development is driven by sector-specific needs and the convergence of the physical, chemical, material, computer, engineering, and/or life sciences. Biotechnologies developed for other sectors could be applied to biodefense, especially if the individuals involved are able to innovate in concept design and development. Of all biodefense objectives, biosurveillance seems to have reaped the most benefit from emerging biotechnologies, specifically the integration and analysis of diverse clinical, biological, demographic, and other relevant data. More recently, scientists have begun applying synthetic biology, genomics, and microfluidics to the development of new products and platforms for MCMs. Unlike these objectives, investments in microbial forensics have been few, limiting its ability to harness biotechnology advances for collecting and analyzing data. Looking to the future, emerging biotechnologies can provide new opportunities for enhancing biodefense by addressing capability gaps.

Keywords

- Emerging biotechnologies

- Biodefense

- Convergence science

- Multi-disciplinary science

- Benefit

- Workforce

- Medical countermeasures

- Biosurveillance

- Infectious disease modeling

- Microbial forensics

13.1 Introduction

Biotechnologies provide critical capabilities in the defense against natural and man-made threats. In health, new scientific discoveries and platforms contribute to the development of vaccines and medicines against pathogens, such as influenza A virus or Bacillus anthracis. Researchers’ ability to understand the relationship between pathogens and their hosts provides the information needed to create targeted vaccines and medicines. Similarly, scientists have begun applying synthetic biology tools and concepts to vaccine development, hoping to reduce the time involved in creating a vaccine against an emerging infectious disease and to enable rapid response efforts. More recently, investments in additive bio-manufacturing have provided new opportunities to print human tissues and organs, which, in some laboratories, are being used to study the effects of pharmaceutical candidates before testing in animals or people. In addition, advances in knowledge gained from neuroscience and the behavioral sciences provide new opportunities to understand and identify clinical interventions to prevent or treat various psychological disorders, such as post-traumatic stress disorder after return from military conflict.

Beyond health efforts, governments are looking to the life sciences and biotechnology to assist in early warning of infectious disease events, forensics, decontamination of materials, and many other uses. Efforts to gain advanced warning of infectious disease events have leveraged new data analysis technologies (e.g., natural language processing, image analysis, and text analysis) to evaluate a variety of information, including environmental, ecological, genomic, physiological, and other relevant data. Efforts to promote longevity of materials and equipment have leveraged advances in synthetic biology. Efforts to enhance microbial forensics and attribution have leveraged advances in microbiology and improved data handling and sharing procedures between public health and law enforcement communities. Enabling this research and data sharing was a commitment by the U.S. government and international organizations to seek solutions for capability gaps in preventing, detecting, and responding to natural and/or man-made infectious disease events of international concern.

13.1.1 Biotechnology Market

The global market for biotechnology is in the tens of billions of dollars. Financial forecasts estimate the size of the global biotechnology market by 2021 to be between $2 billion and $6 billion, suggesting an annual growth rate of 14–39% [1,2,3]. The variability in estimates results from the pharmaceutical business model wherein a single pharmaceutical product can be worth several billions of dollars [4], but only about 1% of all compounds transition from pre-clinical research stages to licensure. This estimate does not include knowledge generated, products developed, and services provided by academia, amateur biologists, entrepreneurs, and governments, indicating that the total scope of biotechnology research and development may be significantly larger than the forecasts suggest. In fact, the Silicon Valley venture capital community has begun creating new models for promoting innovation among entrepreneurial individuals interested in leveraging biology to address a particular concern. In addition, amateur biologists (DIYBio) are raising tens to hundreds of thousands of dollars from crowdfunding websites, such as Kickstarter, enabling their increasingly sophisticated research activities.

The biotechnology enterprise contributes to the health, agriculture, energy, environmental, scientific, and defense sectors. The multidisciplinary nature of modern biotechnology drives advancement in the basic and applied sciences, enabling new approaches for addressing health care and public health needs, enhancing production of agricultural commodities, pushing scientific research and analysis, preserving biodiversity and ecological health, and protecting military personnel and materiel. Computational technologies have enhanced the study of complex biological processes and systems [5], including collection, integration, and analysis of large amounts of data on genomes [6,7,8], epigenomes (elements that are not encoded in DNA, but control expression of genes) [9, 10], proteomes (protein interactions) [11], metabolomes (biosynthetic pathways controlling an organism’s natural chemicals), microbiomes (all the commensal microbes on or in an individual) [12, 13], exposomes (all the changes that occur after an individual is exposed to a foreign particle) [14, 15], pharmacogenomes (genetic changes to a pharmacologic molecule) [16, 17], and other -omics data sets. Better understanding of biological systems has enabled the development of in silico models of animals to enable computer-based studies of medicines, protein structures, and DNA structures [18, 19]. Data analysis technologies have enabled the creation of new computational models for infectious disease surveillance and enhanced efforts to make better use of published data [20]. Engineering and design concepts have led to new approaches for modifying biological systems to enable production of industrial chemicals, illicit drugs, ethanol, precursor chemicals for cosmetics, and other molecules [21, 22]. Advances in material science have enabled 3D bioprinting of tissues and organs, which are being used to test drug efficacy before human trials [23,24,25].
These are some examples of how advances in biotechnology are enabled by convergence with other scientific disciplines to benefit society.

13.1.2 Drivers of Biotechnology

The major drivers of advances in biotechnology vary by technology and application. Strong health, agriculture, energy, and scientific applications drive continued advancement of biotechnologies. Human health and agriculture are two primary drivers for biotechnology. Better diagnostics, medicines, and tailored health care promote the development and use of new biotechnologies. Agricultural production has led to use of genetic engineering to create hardier, larger, and more pest-resistant crops [26, 27]; data analytics of different types of agriculturally-relevant data sets to improve crop management and reduce intra-crop variability [28, 29]; genomics and genome association studies in crops and livestock to identify the genetic basis of favorable and deleterious traits [30, 31]; and genome editing tools to modify crops and livestock [32, 33]. In addition, clean energy contributed to early development of synthetic biology approaches to produce organisms that can make or consume ethanol [34, 35]. Although non-biologically-based technologies have overtaken these synthetic organisms as better clean energy sources, synthetic biology has demonstrated utility in other sectors, such as the chemical, pharmaceutical, and cosmetic industries. These industries use biological systems for production of chemicals and of active ingredients in pharmaceuticals and other commercial products. Furthermore, scientific play and education (both formal and informal) contribute to the continued use and development of synthetic biology. Advancement in scientific knowledge and capability serves as a primary driver for the creation of new genetic-manipulation tools, data analysis software, and commercial kits, products, and services to make experimental procedures easier. Examples of drivers of genome editing and personalized health are described below.

Advances in genome editing are driven largely by human health and agriculture. The ability to make specific changes in a human cell’s genome to correct a disease, in an agriculturally-important animal to eliminate an undesirable trait, or in crops to enhance pest resistance provides a significant driver for improving the capability and precision of genome editing tools. In 2015, scientists used a non-CRISPR-based genome editing tool to modify healthy donor cells in the laboratory before injecting them into a 2-year-old girl who had leukemia [36]. The cells were edited to reduce the chance that they would be rejected by the girl’s body. After treatment with the modified cells, the girl was cured of leukemia. More recently, research groups in Sweden, China, and the U.S. reported successful editing of human embryos [37,38,39]; though controversial, the U.S. National Academies of Sciences, Engineering, and Medicine stated that editing human embryos may be warranted to correct a debilitating genetic disease [40]. Unlike in humans, modification of germ cells of animals, insects, and plants is much less controversial and occurs routinely, in some cases in the commercial sector. For example, Sangamo uses another genome editing tool (specifically, zinc finger nucleases) to produce hornless dairy cows, making milking less dangerous for farmers [41, 42]. The Bill and Melinda Gates Foundation is investing $35 million into CRISPR-enabled gene drives in mosquitoes as part of its initiatives to control the spread of malaria-causing plasmodia [43]. (Gene drives are DNA sequences that rapidly push a specific trait through a population.) Agriculture companies are editing crop seeds to increase resistance to pests.

The ability to tailor health care to individual people (i.e., personalized medicine) is a significant driver of advancement and application of -omics, systems biology, and similar types of research. Private health care providers, such as Inova Health Care, are beginning to collect genomic information of patients to improve research and patient care [44]. In 2015, the U.S. government launched its Precision Medicine Initiative to collect, integrate, and analyze one million individual genomes for enhancing the scientific foundation for patient-centered health care in the U.S. One year later, China announced its precision medicine initiative with an investment of $9.2 billion [45]. These efforts, like other similar efforts, also take into account other influencing factors, such as daily habits and patterns of family history.

Precision agriculture is much farther along than personalized medicine, in large part because the genomes of crops can be studied with high reliability and reproducibility. Unlike human genetic linkage studies, similar studies in plants have produced highly reliable information. This high degree of reliability enables better identification, prediction, and elimination of harmful disease in crops that have been studied. In addition to genetic information, precision agriculture programs integrate information about environmental, meteorological, and climate conditions in their analyses.

Empowerment of people to take control of their own health has pushed, and continues to push, advancement and application of personal genomics, mobile health technologies, and biomedical research in amateur community labs. Personal genomics is a relatively new commercial market with three main focus areas: (1) mobile health devices, such as health apps for smartphones and Fitbit trackers; (2) direct-to-consumer genome sequencing services, such as 23andMe; and (3) genealogy services that provide individuals with information about their ancestry based on their genomic sequences. Going further than personal genomics, members of the DIY Bio community are securing funds to conduct research on their own microbiome, genetic diseases that affect their families, and biotechnologies for self-augmentation or enhancement.

13.1.3 Advances in and Biosecurity Considerations of Biotechnology

Small and large (i.e., transformative) changes influence the advancement and creation of new tools, knowledge, and concepts in biology. Small changes generally occur as part of the normal cycle of fundamental and applied research wherein new discoveries lead to new scientific questions or knowledge. Larger changes tend to occur when new fields of science, disciplines, or conceptual frameworks are combined (often referred to as convergence in science). Recent examples include additive biomanufacturing, which combines technologies of 3D printing with development of “ink” made of living cells [24]; synthetic biology (i.e., combining DNA-based “circuits” to create an organism of known function), which brings engineering and computer science concepts to the field of biology; and systems biology, which involves advanced data analytics and visualization (often considered big data analytics) to study connections and networks within and between molecules, pathways, cells, physiological systems, and life forms. In addition, transformative changes could result from trial-and-error during the discovery process or through careful observation of biological systems. Examples of these changes include genetic engineering, which was enabled by the discovery of proteins that cut DNA internally (called endonucleases), and CRISPR-based genome editing, which emerged from research on immune responses in bacteria.

The convergence of scientific disciplines has elicited concerns among security experts about the potential for new technical capabilities to disrupt national security, health, and prosperity. These concerns often stem from surprise about the development of new technologies and knowledge gained that could be used to cause physical, social, economic, and/or political harms to a country. However, technologies rarely arise without warning. Scientific publications, patent applications, conferences, and online media and blogs shed light on near-term advances taking place within scientific fields and disciplines. Through these resources, security experts can learn about cutting-edge research and applications to address health, agriculture, energy, and other societal needs. Identifying larger changes that occur through the integration and/or leveraging of advances from distinct fields involves an evaluation of technical challenges and/or gaps limiting benefits to social needs, technical challenges that stall advancement within fields, and scientific advances in different fields. For example, 3D bioprinting came about as a result of increasing need for tissues and organs for research (e.g., in vitro testing of drug candidates) and clinical purposes (e.g., more effective tissue or organ transplantation), advances in stem cell research and tissue engineering, and advances in additive manufacturing [46]. Another example is neuroprosthetics, which has developed from the need to improve the lives of service members and other individuals who have lost limbs, increasing knowledge in neuroscience, and advances in material science involved in the development of prosthetic limbs [47, 48]. Still another example is the field of precision medicine, which is driven by the need for improved individualized health care and enabled by increasing collection of biological and health data and new computational analysis and data storage technologies [49].
The convergence of science and new technology development are often driven by beneficial purposes and social needs. Continued examination of these needs and scientific activities can prevent or limit the technology surprise that often elicits concern from the security community.

Another phenomenon associated with biotechnology that has raised national security concerns is the increasingly unconventional approach to the life sciences and their key components. A few examples have emerged during the past decade highlighting this phenomenon. One example is the emergence of synthetic biology as a new field of study. On its surface, synthetic biology is genetic engineering. It involves joining together specific DNA sequences to produce a certain biological outcome. But what distinguishes synthetic biology from traditional genetic engineering is the conceptual approach to designing, building, and testing biological systems. The concept of synthetic biology was created by engineers and computer scientists who asked whether biological systems could be assembled using specific parts to generate expected outcomes. The synthetic biology approach involves the identification of individual “parts”, which functionally are DNA (specifically, plasmids containing genes with desired functions), that, when placed in a particular order, could produce a desired outcome such as creating organisms that produce small molecules (e.g., synthetic artemisinin for malaria treatment [50], chemicals [51], opioids [52]), or creating bacteria in all the colors of the rainbow [53]. This design-based approach enables new applications in health, agriculture, industry, and national security.

More recently, technology-based companies and academic scientists have begun exploring the use of DNA as a storage medium for image, audio, and video data files. This use of DNA is driven by the increasing need for platforms that can store extremely large datasets. The concept of DNA-based storage emerged from the recognition that the human genome, which is 3 billion base pairs long, can store a lot of information stably and with relatively high fidelity for thousands of years. DNA-based storage leverages advances in DNA sequencing and synthesis technologies. The concept that image data can be stored in and retrieved from DNA was demonstrated in 2017, when scientists from Harvard University published their work on using CRISPR-based tools to encode image data of a galloping horse in bacteria [54, 55]. This effort leverages genome editing tools to demonstrate the feasibility of DNA-based storage of data. The use of DNA as a data storage medium also may present a new, potentially exploitable vulnerability. In 2017, scientists described their ability to hack computers via sequences they embedded into DNA [56]. The researchers claimed to encode “malicious software” in “DNA they purchased online,” which they used “to gain ‘full control’ over a computer that tried to process” or analyze the sequence data generated from physically sequencing the purchased DNA [56].
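
To make the idea of DNA as a storage medium concrete, the following minimal sketch maps each pair of bits in a file to one of the four bases and back again. The two-bits-per-base mapping and the function names are assumptions chosen for illustration; they are not the encoding used in the CRISPR-based study cited above, and practical schemes add error correction and avoid sequences that are hard to synthesize or sequence.

```python
# Illustrative mapping of two bits to one base (an assumption, not the
# scheme used in the cited work).
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode_bytes_to_dna(data: bytes) -> str:
    """Turn raw bytes into a string of bases, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode_dna_to_bytes(sequence: str) -> bytes:
    """Invert encode_bytes_to_dna; expects a length that is a multiple of 4."""
    bits = "".join(BASE_TO_BITS[base] for base in sequence)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


if __name__ == "__main__":
    sequence = encode_bytes_to_dna(b"Hi")
    print(sequence)                       # CAGACGGC
    print(decode_dna_to_bytes(sequence))  # b'Hi'
```

Under this simple mapping, each base carries two bits, so one byte occupies four bases; the sketch says nothing about the physical synthesis, storage, or sequencing steps on which real DNA-based storage depends.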

Despite concerns about how advances in biotechnology could be applied to harm national security, these advances can enhance efforts to prevent, prepare for, and respond to incidents involving chemical and biological threats. For example, next generation sequencers and data analysis software allow scientists to collect information about host response following exposures to pathogens or hazardous chemicals, the microbiome that supports normal growth and functioning of the human body, the organisms in the environment, and many other molecules associated with the normal state of the human body and potential risk factors of exposure to chemical or biological hazards. These data can inform surveillance efforts to help determine whether and when a natural or man-made incident has occurred, aiding both detection of potentially harmful incidents and forensic analysis. In addition, the data may inform the development of vaccines, drugs, and diagnostic tools against chemical and biological agents [called medical countermeasures (MCMs)] [57,58,59,60,61]. Another example is the use of synthetic genomics, which is the chemical synthesis of genomic DNA of organisms, as a platform method for rapid development of vaccines against epidemic pathogens. Synthetic biology is being used to create technology platforms for rapid MCM development against pathogens and for synthesis of pharmaceutical products. Similar technologies can be used to prevent, detect, and respond to incidents involving contamination or infection of animals and agriculturally-important plants. In addition, synthetic biology is being used to develop biological systems that “eat” nerve agents [62, 63], clean up contaminated materials [61], and exert biocontrol in the environment [64, 65].
These efforts are being aided by advances in genome editing, laboratory automation, high-throughput experimental procedures, bioinformatics, and other technologies that improve repeatability and reduce human error during experimentation.

13.2 U.S. Biodefense Needs

Before September 11, 2001, the field of biodefense was small, restricted to a select group of researchers at a few agencies around the world, and focused largely on the threat of biological weapons from state actors or terrorist organizations. The level and scope of biodefense changed completely after the terrorist events of 2001 when anthrax spores were sent in letters through the mail (i.e., Amerithrax) 1 month after terrorists flew planes into the World Trade Center towers. The U.S. government passed a series of laws and issued presidential directives in rapid succession to enhance its ability to anticipate, prevent, detect, prepare for, respond to, and recover from terrorist events. Several of these programs were further enhanced to prevent, detect, and respond to naturally-occurring infectious diseases and natural disasters. These changes were driven in large part by the emergence of West Nile virus in North America (1999), H5N1 influenza A virus in Asia (1997), severe acute respiratory syndrome coronavirus in Asia and North America (2003), Middle East respiratory syndrome coronavirus in the Middle East (2012), H1N1 influenza A virus in North America (2009), Ebola virus in Western Africa (2013–2016), Zika virus in the Americas (2016–2017), and the delayed federal government response to Hurricane Katrina in 2005. Today, biodefense efforts cover threat characterization, risk assessment, MCM research and development, protection of laboratory workers from accidental exposures, detection and surveillance of infectious disease, emergency response, forensics, and protection of public health, agriculture, and health care critical infrastructures. This section highlights biodefense activities that were initiated after 2001, subsequent policy changes associated with those activities, and their science and technology needs.

13.2.1 Medical Countermeasures

For the past 17 years, the U.S. government has been investing in research and development of MCMs, which include new vaccines, therapeutics, and diagnostic tools to identify, prevent, and treat infectious diseases. These investments involved several complementary activities, including basic research, advanced development, regulatory science, and development of product platforms. These efforts required coordination and communication between the U.S. National Institutes of Health (NIH), Food and Drug Administration (FDA), and newly created programs for advanced development such as the Biomedical Advanced Research and Development Authority (BARDA), which was established in 2006 by the Pandemic and All-Hazards Preparedness Act (Public Law 109-417). Together, these efforts support fundamental research that provides the scientific foundation for rational design and pre-clinical testing of vaccines and drugs, research to develop and/or validate the animal models and safety and efficacy data used to inform product approval by the FDA (often referred to as regulatory science research), and research that supports testing and evaluation of product efficacy in animal models. These efforts focus on developing and maintaining MCMs for use by civilians and first responders in an emergency.

Complementary activities are being supported in the Department of Defense (DoD) for protection of military forces [66]. Having vaccines and drugs against biological agents that may affect a sizable number of the military and/or be used on the battlefield is a priority for the DoD and has resulted in research, development, and manufacturing investments to support this defensive need. In 2006, DoD created the Transformational Medical Technologies Initiative (TMTI) to leverage scientific advances and technology innovation to counter genetically-engineered biological weapons [67, 68]. The TMTI program supported the development of several vaccine and drug candidates, including 2 products against hemorrhagic fever-causing viruses that reached the Investigational New Drug (IND) stage of the FDA approval process, preparation of 2 INDs for broad-spectrum antibiotics, 3 broad-spectrum antibiotics, and 20 additional candidates in early research and development. In addition, TMTI had begun examining FDA-approved antibiotics for activity against non-biothreat diseases [69]. In 2010, TMTI moved under the DoD Joint Program Executive Office for Chemical and Biological Defense (JPEO-CBD) [70]. In addition to this program, the Defense Threat Reduction Agency (DTRA) Joint Science and Technology Office for Chemical and Biological Defense (JSTO-CBD) supports research on MCMs against biological threats at DoD research facilities (i.e., under the Army’s Medical Research and Materiel Command [71]) and at nongovernmental research institutions [72]. The Joint Project Manager for Medical Countermeasure Systems, which is overseen by the DoD JPEO-CBD office, has issued calls for development of countermeasures against chemical, biological, radiological, and nuclear threats [73]. More recently, the DoD launched the Medical Countermeasures Advanced Development and Manufacturing capability, which is a joint effort between DoD and Nanotherapeutics Inc. that provides advanced development and manufacturing of biopharmaceuticals [74].

Although the operational considerations for the MCM needs of the Department of Health and Human Services (HHS) and DoD differ, the early research and development studies do not differ dramatically by product end-use. Therefore, the relevant HHS and DoD offices established the Integrated Portfolio, providing opportunities to co-manage investments in MCM research and development of common interest [72, 75].

These programs provide the structure within which new scientific research and technologies can be funded and leveraged for medical countermeasure development. Through a combination of funding programs and technology watch efforts, program managers support innovation in technology development and learn about existing biotechnologies that could be applied to MCM development. For example, the Defense Advanced Research Projects Agency (DARPA) has created several programs to generate the scientific knowledge needed to detect human infection from any pathogen, develop new technologies to conduct field diagnostics with little sample, and create platform methodologies for rapid development of vaccines against outbreak pathogens [76, 77]. Although the results of this research are expected to enhance military force protection against biological threats, the scientific discoveries and technologies may benefit development of MCMs for civilians.

Recognizing potential funding opportunities for MCM development, academic and industrial researchers have undertaken efforts to apply new knowledge and scientific approaches to MCM development. For example, in 2013, the J. Craig Venter Institute and Novartis described the rapid creation of influenza vaccines within a cell-free system, capitalizing on recent innovations in synthetic biology [78]. More recently, scientists have used synthetic genomics (i.e., chemically-synthesized genomes) to generate hard-to-obtain pathogens, such as severe acute respiratory syndrome coronavirus [79, 80], or to develop candidate vaccine vectors against biological agents, such as the recently recreated horsepox virus [81]. Still other scientists have conducted studies to develop research animals and conditions that reflect pathogen infection and onset of disease in humans [70, 82] or alternative approaches for studying product efficacy, such as the “human on a chip” [83]. Although promising, application of emerging biotechnologies to MCM development is in the early stages of research and development.

A primary difficulty in harnessing these advances is the up-front process and cost of FDA approval, particularly for products for which little or no commercial market exists. In response to this challenge, NIH and FDA have supported research towards enhancing regulatory science, a field of study designed to generate the foundational science needed to evaluate and validate scientific studies on product safety and efficacy [84,85,86]. This field emerged from the significant difficulties that the FDA encountered in assessing data submitted as part of the FDA approval process. Leveraging of emerging biotechnologies for MCMs depends on the functionality and capabilities of these technologies, the availability of information to evaluate safety and efficacy of products in a timely manner, and the existence of a steady market that helps to off-set product development costs.

13.2.2 Biosurveillance

Early detection and warning of infectious disease events has been recognized as an important part of biodefense capabilities since the mid-1990s. The concept emerged from the need to prevent large-scale, man-made outbreaks, a concern that arose in the 1990s after the discovery of the Former Soviet Union’s massive covert offensive biological weapons program [87] and the release of a vaccine strain of Bacillus anthracis by the Japanese apocalyptic cult Aum Shinrikyo [88]. The first biosurveillance platform, ProMED-mail, was developed in 1994 and enables open, internet-based sharing of media reports, official reports, online information, local observations, and other similar sources. Users monitor these reports to identify incidents involving emerging or unusual infectious disease outbreaks or acute exposure to toxins affecting human health. Today, this platform includes 60,000 members from every continent and receives philanthropic funding and donations [89].

Shortly thereafter (1997), Health Canada, in partnership with the World Health Organization, established the Global Public Health Intelligence Network (GPHIN), which is a curated system that involves human analysis of media reports that are automatically collected [90]. The platform uses text analysis to detect potential infectious disease outbreaks, contamination of food and water, bioterrorism and exposure to chemicals, natural disasters, and safety incidents involving products, drugs, medical devices, and agents. Through human analysis of these data, the platform provides users with actionable information about public health threats. Today, GPHIN is part of the WHO’s epidemic intelligence system, which seeks to enable global alert and response to potential outbreaks before they become public health emergencies of international concern. The WHO’s system focuses on a few infectious diseases and detects food and water contamination and chemical events [91].

More recently developed systems collect and integrate data and inform users about potential infectious disease events that could cause large-scale epidemics or that are of questionable or suspicious origin. Although these systems include data from clinical and public health sources, they also include data from various other sources such as media reports of unusual illnesses or events, availability of medications in pharmacies, and ecological data on sick animals. Some systems build on the concepts of syndromic surveillance, which is the monitoring of infectious diseases through surrogate markers (e.g., antibiotic sales), and sentinel surveillance (e.g., illness of animals from a zoonotic pathogen in specific locations). Furthermore, several of these systems leverage computational platforms that enable the collection, integration, and analysis of data of various types, from various sources, and with various degrees of uncertainty. Still others, which no longer exist, relied on crowdsourcing to verify reports of possible illness.

Between 2004 and 2006, four systems were developed: Georgetown University’s Argus, the European Union Joint Research Centre’s MediSys, the Japanese National Institute of Informatics’ BioCaster, and Harvard University’s HealthMap. MediSys and BioCaster were fully automated and able to analyze information in multiple languages. MediSys seeks to enable early detection of suspected bioterrorism activities [92]. This system uses a computational tool that scans articles for specific keywords and newsworthy information. BioCaster uses text mining technologies to scan RSS newsfeeds from local and national news in Japan to detect keywords related to infectious disease incidents [93]. This capability systematically indexes relevant information from the collected articles, increasing the rapidity with which users can access information. Argus, which no longer exists, and HealthMap were/are moderated by people. Argus was established to detect the emergence, illnesses, and person-to-person transmission of avian H5N1 influenza A virus [94]. Argus used a variety of data analysis technologies and human analysis to examine and monitor media reports on potential health security incidents. HealthMap was created to collect, integrate, and analyze a variety of data that may indicate a disease outbreak and enable ongoing monitoring and surveillance of infectious disease threats. Users can access and visualize data of interest by applying filters of data sources [95]. A retrospective analysis of the efficacy of HealthMap at detecting the 2014–2016 Western African Ebolavirus outbreak found that the system had identified cases of viral hemorrhagic fever a week before the WHO declared the outbreak [96]. Whether this system can be used to provide public health officials with predictive, actionable information is unclear, especially because the viral hemorrhagic fever cases identified by the platform do not appear to have led to outbreak response. 
An evaluation of these systems concluded that their efficacies are influenced by involvement of human moderation, sources of data, languages of the collected information, the regions in which incidents occur, and the types of cases collected [97].
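The keyword scanning and indexing described for the automated systems can be illustrated with a minimal sketch. It is not the implementation used by MediSys or BioCaster; the keyword list, the article identifiers, and the function name `scan_articles` are hypothetical, and real systems rely on far larger, multilingual term sets and more sophisticated text mining.

```python
import re
from collections import defaultdict

# Hypothetical watch list (an assumption for illustration only).
KEYWORDS = {"anthrax", "cholera", "hemorrhagic fever", "outbreak"}

def scan_articles(articles, keywords=KEYWORDS):
    """Return {keyword: [article ids]} for each keyword found in the articles."""
    index = defaultdict(list)
    for article_id, text in articles.items():
        lowered = text.lower()
        for keyword in sorted(keywords):
            # Match at the start of a word; plural endings such as
            # "outbreaks" still match because no trailing boundary is required.
            if re.search(r"\b" + re.escape(keyword), lowered):
                index[keyword].append(article_id)
    return dict(index)

# Invented example headlines, not real media reports.
articles = {
    "a1": "Officials report a cholera outbreak after flooding.",
    "a2": "Local team wins the cup.",
    "a3": "Suspected hemorrhagic fever cases under investigation.",
}
index = scan_articles(articles)
```

The resulting index maps each matched term to the articles that mention it, which is the kind of lookup that lets an analyst retrieve relevant reports quickly. Human moderation, as used in Argus and HealthMap, would sit on top of such a filter to judge which hits are credible.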

The U.S. government provided financial support for the development of Argus and HealthMap. In addition, the U.S. government established several systems to obtain early warning of potential infectious disease outbreaks, allocating about $32 billion between 2001 and 2012 [98]. DoD supported the Electronic Surveillance System for the Early Notification of Community-based Epidemics (ESSENCE), which was a system of systems integrating reportable disease surveillance, sentinel surveillance, syndromic surveillance, and clinical identification [99]. This system sought to detect natural and/or man-made outbreaks through the identification of abnormal trends in the data. ESSENCE involved cooperation between the Walter Reed Army Institute of Research, the Centers for Disease Control and Prevention (CDC), the Emergency Medical Associates of New Jersey Research Foundation, the New York City Department of Health and Mental Hygiene, and Harvard Medical School and Harvard Pilgrim Health Care. In 2003, the CDC created BioSense to enable early detection and assessment of potential illnesses that may be related to bioterrorism incidents [100]. Through this system, the CDC sought to build the capabilities of state and local public health departments to conduct syndromic surveillance. Today, the program is called the National Syndromic Surveillance Program and enables the collection, evaluation, sharing, and storage of health information via a cloud-based system [101]. The CDC also created the Early Warning Infectious Disease Surveillance system, which sought to enhance detection and reporting of transboundary infectious disease outbreaks with Canada and Mexico [102]. In 2007, the Department of Homeland Security (DHS) established the National Biosurveillance Integration Center (NBIC) after passage of the Implementing Recommendations of the 9/11 Commission Act [103].
The NBIC integrates and analyzes animal, plant, human, and environmental health data to improve early warning of potential biological incidents and gain situational awareness [104]. Although NBIC was created from a need to detect potential bioterrorism incidents, the Center now includes natural events that may cause significant disruption to the U.S. [105]. In 2012, the DoD invested in the Biosurveillance Ecosystem, which is a cloud-based platform that enables users to plug in data-specific applications to detect anomalies in human and animal infectious disease events [106]. In addition, the platform enables collaboration among users and incorporates advanced data analytics, such as machine learning of health and non-health data. The concept for this platform emerged from the 2009 H1N1 influenza outbreak, which caught many by surprise [107].
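The idea of detecting outbreaks through abnormal trends in syndromic data can be sketched with a simple moving-baseline rule. This is an illustrative assumption, not the algorithm used by ESSENCE, BioSense, or the Biosurveillance Ecosystem; the function name, window length, threshold, and daily counts below are all invented for the example.

```python
from statistics import mean, stdev

def flag_anomalies(counts, window=7, threshold=3.0):
    """Return indices of days whose count exceeds the trailing baseline.

    A day is flagged when its count is greater than the mean of the
    previous `window` days plus `threshold` standard deviations.
    """
    flagged = []
    for day in range(window, len(counts)):
        baseline = counts[day - window:day]
        mu = mean(baseline)
        # Floor the spread at 1.0 so a nearly flat baseline does not
        # turn ordinary day-to-day noise into alerts.
        sd = max(stdev(baseline), 1.0)
        if counts[day] > mu + threshold * sd:
            flagged.append(day)
    return flagged

# Invented daily counts of visits for one syndrome (not real data).
daily_visits = [12, 11, 13, 12, 10, 12, 11, 12, 13, 40, 12]
alerts = flag_anomalies(daily_visits)
```

In this toy series only the day with 40 visits is flagged. Operational systems must also handle weekly and seasonal patterns, reporting delays, and the effect of a spike on later baselines, none of which this sketch addresses.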

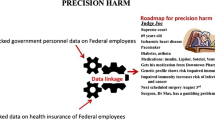

Building on these efforts and recognizing the benefits of identifying potentially-devastating infectious events, President Obama issued the National Strategy for Biosurveillance in 2012 [108]. This Strategy had three primary objectives: (1) to “integrate and enhance national biosurveillance efforts” to provide decision-makers with timely information in a crisis; (2) to increase the network of national expertise and capabilities for rapid detection and understanding (i.e., situational awareness) of emerging crises; and (3) to implement new approaches for considering and addressing uncertainty in the decision-making process. Included as core functions are environmental scanning; integration of human, animal, and plant health information; and assessment and forecasting of potential consequences. One month prior to the Strategy’s release, the U.S. government established an interagency working group on science and technology for biosurveillance, which was chaired by the White House Office of Science and Technology Policy and DoD. This working group was tasked with developing the research and development priorities for achieving the core functions of the National Strategy for Biosurveillance. The working group discussion culminated in the release of the National Biosurveillance Science and Technology Roadmap in 2013, which defined the key priorities for detecting unusual or above-baseline events; anticipating risk, including assessment of the potential consequences of incidents; identifying and characterizing threats to human health; and integrating, analyzing, and sharing information with key decision-makers, responders, and healthcare providers. The roadmap highlights current national activities in each of these areas and identifies capability needs to achieve the described priorities.
The priorities encompassed the production of baseline data of natural or man-made human, animal, plant, and environmental health events; assessment of emergence and disease dynamics; development of rapid detection and diagnosis technologies and systems; and improvements in sharing, integration, and communication of biosurveillance data. This strategy and roadmap provided a framework for enhancing U.S. capabilities for early warning of natural and/or man-made biological events, which could be leveraged for detection and early warning against chemical, radiological, nuclear, and environmental incidents.

13.2.3 Infectious Disease Characterization and Modeling

Following the investments for biosurveillance, NIH, DHS, U.S. Agency for International Development (USAID), and DoD initiated programs to enhance understanding of pathogens of national security and public health concern, host-pathogen interactions, and disease dynamics through modeling the emergence and spread of infectious disease. Furthermore, these agencies embraced newer biotechnologies, computational and data science, and convergence of chemical, biological, materials, and other physical sciences to provide innovative solutions for preventing, detecting, and responding to biological threats.

In the aftermath of September and October 2001, NIH convened a Blue Ribbon Panel on Bioterrorism and its Implications for Biomedical Research to define biodefense research priorities for pathogens and toxins that could present the highest risk to national security and public health (NIAID Category A Priority Pathogens [109]). To support early research, build scientific expertise, and provide ready capability in a bioterrorism or public health emergency, NIH invested in Regional and National Biocontainment Laboratories and in research and training consortia through their newly established Regional Centers of Excellence for Biodefense and Emerging Infectious Diseases [110, 111]. NIH priorities primarily focused on developing specialized knowledge and skill for studying biological agents on the Category A and B Priority Pathogen lists [112], which initially did not require the use of emerging biotechnologies. However, more recent research efforts have begun exploring synthetic biology, systems biology and data science, microfluidic technologies, tissues-on-a-chip, and other methodologies that offer new capabilities for studying host-pathogen responses and developing, testing, and validating diagnostic tools and MCMs. For example, NIH supported a Modeling Immunity for Biodefense program in which immunologists, computational biologists, modelers, and microbiologists worked together to model the immunological responses to infection and vaccination [113]. Other examples include the applications of next generation sequencing to characterize newly emerging pathogens using microfluidic technologies [114] and lab-on-a-chip systems for monitoring pathogens in various samples, including environmental samples [115]. In addition, research programs supporting metagenomic studies for examining host-pathogen responses are leveraged to understand biological threat agents better [116].

In 2004, NIH created a collaborative network of researchers to develop new computational, statistical, and mathematical models for studying pathogen dynamics, building technical expertise through education and training, and enabling communication with policy-makers and international partners [117]. This program, called Models of Infectious Disease Agent Study (MIDAS), supports research on the emergence and spread of infectious disease, surveillance, effectiveness of intervention strategies, host-pathogen interactions, and environmental and societal factors involved in emergence and spread of infectious diseases. Although not designed to inform public health control measures directly, the models can inform high-level strategy for detecting and controlling infectious disease outbreaks. For example, some of the influenza models [118] informed the development of the U.S. National Strategy for Pandemic Influenza in 2006. In addition, modeling approaches can provide insight about public health strategies that would help control pandemics [119].
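To make concrete how a mathematical model can compare intervention strategies, the following is a minimal sketch of the textbook susceptible-infected-recovered (SIR) compartmental model. It is not one of the MIDAS models; the parameter values, population size, and function names are assumptions chosen only for illustration. With $N = S + I + R$, the model is

$$\frac{dS}{dt} = -\beta \frac{SI}{N}, \qquad \frac{dI}{dt} = \beta \frac{SI}{N} - \gamma I, \qquad \frac{dR}{dt} = \gamma I.$$

```python
def simulate_sir(beta, gamma, s0, i0, r0=0.0, days=160, dt=0.1):
    """Integrate the SIR equations with a fixed-step Euler scheme.

    beta  : transmission rate per day (assumed value)
    gamma : recovery rate per day (assumed value)
    Returns a list of (S, I, R) tuples, one per time step.
    """
    s, i, r = float(s0), float(i0), float(r0)
    n = s + i + r
    trajectory = [(s, i, r)]
    for _ in range(int(days / dt)):
        new_infections = beta * s * i / n * dt
        recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - recoveries
        r += recoveries
        trajectory.append((s, i, r))
    return trajectory

def peak_infected(trajectory):
    """Largest number of simultaneously infected people in the run."""
    return max(i for _, i, _ in trajectory)

# Hypothetical scenarios: the "intervention" run lowers the transmission rate.
baseline = simulate_sir(beta=0.5, gamma=0.2, s0=9990, i0=10)
intervention = simulate_sir(beta=0.3, gamma=0.2, s0=9990, i0=10)
```

Comparing `peak_infected(baseline)` with `peak_infected(intervention)` shows the qualitative effect of reducing transmission on the epidemic peak. Models used to inform strategy add far more structure, such as age groups, geography, and stochastic effects.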

NIH created a second program, the Research and Policy for Infectious Disease Dynamics (RAPIDD) program, which lasted from 2008 to 2016. The program received funds from the Department of Homeland Security to support infectious disease modeling efforts and was administered through the Fogarty International Center [120]. The RAPIDD program supported international teams of researchers to develop community-based and/or environmental models and to generate and/or collect the necessary data to study infectious disease dynamics. Some models integrated clinical data with data on local population migration and on hospital location and capacity. Other models focused on the existence of specific pathogens (e.g., viruses that cause hemorrhagic fevers) in wild animals throughout the world, the migration patterns of the animals, and the interactions between the animals and people. Some of these models included data on disease vectors, such as mosquitoes, to study the spread and potential risk of vector-borne diseases. In addition to these modeling efforts, the RAPIDD program included opportunities to translate these models to decision-makers responsible for public health emergencies of international consequence. In 2015, one research group (Drs. David Hayman of Massey University in New Zealand and James Wood of Cambridge University in the United Kingdom) partnered with Ambassador Bonnie Jenkins (former Ambassador for Cooperative Threat Reduction of the U.S. Department of State) and Gryphon Scientific (Dr. Kavita Berger, the author of this chapter) to explore approaches for improving communication between global health security stakeholders and the research community. This discussion occurred within the context of the 2014–2016 Ebolavirus disease outbreak and the Global Health Security Agenda (launched in 2014), and leveraged Drs. Hayman and Wood’s expertise and research on the existence of viral hemorrhagic fevers.

In the early-2010s, the U.S. and U.K. entered into a collaboration to support research for combatting zoonoses (i.e., infectious diseases that infect both humans and animals) in low-income countries [121]. The U.S. program was part of the Ecology of Infectious Disease program, which involved joint funding from NIH and the U.S. National Science Foundation [122]. Funding in the U.K. was provided by the Biotechnology and Biological Sciences Research Council and Economic and Social Research Council [121]. This collaborative effort lasted until November 2017 [123]. The knowledge gained from the research can inform infectious disease surveillance efforts.

In 2009, the USAID Emerging Pandemic Threats (EPT) program funded teams of scientists, One Health experts (including veterinarians), and epidemiologists to build international capacity to prevent, detect, and respond to infectious disease incidents in animals and people [124]. Leading up to the creation of the EPT program were several outbreaks (e.g., severe acute respiratory syndrome in 2003, H5N1 influenza from 1997 to present day, and H1N1 influenza in 2009) that emerged unexpectedly, resulting in international concern. This program supports multi-sector teams to implement projects to predict, prevent, identify, and respond to infectious disease threats. The Predict project involves capacity building for strengthening detection and monitoring of, characterizing the drivers for, and improving predictive models for disease transmission between wildlife and people. The Prevent project involves assessment of the risks associated with disease transmission and strategies to mitigate those risks. The Identify project involves building laboratory capacity, including training personnel, to diagnose infectious diseases in animals and people. The Respond project involves facilitating networks of public health, veterinary medicine, and environmental health to improve transdisciplinary training and response efforts. In addition to these projects, the EPT program funds CDC to promote the detection and monitoring of zoonotic diseases, including providing support for the Field Epidemiology Training Program.

DoD’s DTRA leverages emerging biotechnologies to develop innovative MCMs (see previous section), detect biological threats, protect against exposure to chemical and biological agents, and support decontamination [125]. For example, the DoD supports research involving synthetic biology to develop decontamination products, develop and test vaccine and drug candidates, and improve product development pathways. The agency supports innovative projects for the development of bio-based sensors for chemical and biological agents and for integration and analysis of data via its Global Biosurveillance Portal. In addition, the DoD coordinates internally and with HHS to leverage investments in science and technology.

DHS supported University-based Centers of Excellence to develop the approaches, technologies, and knowledge needed to enhance U.S. homeland security. Texas A&M University and Kansas State University hosted the Center of Excellence for Zoonotic and Animal Disease Defense, which conducted research to prevent foreign animal and zoonotic disease threats [126]. This Center supported research to develop new vaccine candidates and platforms for animal pathogens, promote educational and training programs, and inform decision-makers about prevention and control of animal and zoonotic diseases. The University of Minnesota leads the Food Protection and Defense Institute, which conducts research to address security vulnerabilities in the food supply [127]. This center supports research on risk analysis and modeling of incidents involving the food system. Purdue University and Rutgers University hosted the Center of Visualization and Data Analytics [128]. This center supported research for integrating and analyzing large datasets involved in detecting security threats. All three centers are in emeritus status; none of the current Centers of Excellence focus on biological threats.

13.2.4 Microbial Forensics

The field of microbial forensics emerged in 2001 when the Federal Bureau of Investigation (FBI) had to develop new sampling, storage, testing, and analysis methods for identifying the source and determining attribution of the Bacillus anthracis-laced letters. Microbial forensics and attribution were included in Presidential-level strategy for biodefense in the early part of the 21st century, specifically in Homeland Security Presidential Directive-10, Biodefense for the 21st Century. A significant investment towards microbial forensics was the establishment of the National Bioforensic Analysis Center (NBFAC), which is part of the National Biodefense Analysis and Countermeasures Center [129]. NBFAC has achieved certification to ISO standards, enabling it to provide laboratory support for federal law enforcement investigations that involve biological agents [130]. NBFAC works closely with the FBI and relevant U.S. government agencies on specific cases that warrant sample analysis for agent identification, sample signatures, and attribution. NBFAC also conducts forensic research to address capability gaps and enhance its support for biocriminal and bioterrorism investigations. In addition, the National Institute of Justice of the Department of Justice has provided funding since 2011 for research on application of microbiome research to forensic data collection and analysis [131]. This funding program leverages recent research on the microbiome, which receives significant funding from the NIH. In 2014, the National Research Council (NRC) published a report on scientific needs for microbial forensics [132]. Drawing on the recent advances in cloud computing, data collection, and data analytics, the NRC report primarily focused on the potential for bioinformatics, whole genome sequencing, and systems biology analyses to enhance microbial forensic capabilities internationally.

More broadly, the National Science Foundation and Department of Justice have sponsored seminars and research on forensic science. For example, the National Institute of Justice provided over 140 grants since 2004 to support research and development of forensic science, some of which involves analysis of biological materials [133]. In 2014, the National Science Foundation established a forensic science program that supports research and training in seven directorates: Biological Sciences, Computer and Information Science and Engineering, Education and Human Resources, Engineering, Geosciences, Mathematical and Physical Sciences, and Social, Behavioral, and Economic Sciences [134]. Through this program, NSF established a Center for Advanced Research in Forensic Science, which is a consortium of nine universities that have received planning grants in various topics [135]. The University of North Texas and Texas A&M University received planning grants in microbial forensics and California State University received a planning grant in chemistry, biology, and biomechanical engineering. The Center for Advanced Research in Forensic Science enables cooperation between universities, industry, and government to support fundamental research in forensic science and to develop scientists with the knowledge and skills to leverage scientific advances for application to forensic science. In addition to these efforts, the Department of Justice and National Institute of Standards and Technology established the National Commission on Forensic Science to promote scientific progress and improve scientific validity in forensic science [136]. However, this Commission lasted only 4 years, from 2013 to 2017.

13.3 Examples of Creative Application of Emerging Biotechnologies

Although much of the biodefense investment remains focused on microbiological and virological research, U.S. agencies and scientists have leveraged emerging biotechnologies to meet other defensive needs. Multidisciplinary scientific efforts have been used to address military medical needs, global health, and forensic analysis of chemical weapons attacks. This section briefly describes efforts that promoted creativity and innovation to meet practical needs. The approaches used in these and other examples could be applied to leveraging emerging biotechnologies and converging sciences to address current and future biodefense and health security needs.

13.3.1 Neuroprosthetics

Advances in neuroscience and robotics enabled the development of brain-controlled prosthetics, a field that DARPA pioneered [137,138,139]. In 2006, DARPA established the Revolutionizing Prosthetics program to restore normal upper limb function to individuals who have lost that capability [140]. At the time, the state of the science for upper-limb prosthetics was much less advanced than that for lower-body prosthetics [141]. To enhance upper-limb prosthetics, DARPA funded engineers and neuroscientists to work together to design and develop prosthetic limbs that can be controlled by the user’s thoughts. Through this multidisciplinary effort, engineers developed artificial limbs that had a range of motion like that of a natural limb, and neuroscientists developed user control interfaces that enable the transfer of signals generated by thought to the actual movement and feeling of limbs [140]. The initial prototypes were tested in the laboratory before being tested on amputees. As a result, the DARPA program was able to develop neuroprosthetics that restored fine motor function in the amputees [142, 143].

13.3.2 Predicting Infectious Disease Events

Advances in data science and availability of environmental, geographic, and population density and migration data have ushered in a new age for detecting infectious disease events. This approach draws on studies correlating infection, transmission, and emergence of infectious diseases in the human population with temperature, humidity, urbanization and city expansion, night lights, local migration, and other environmental and human factors. These data, along with satellite imagery, can be used to identify conditions conducive to pathogen outbreaks. In addition, geolocation data embedded in videos, pictures, and text files can be used to tag the location and time of infection events. In 2009, scientists described the use of satellite imagery to link climate events (e.g., flooding) with outbreaks of waterborne disease (e.g., cholera, typhoid, hepatitis, and leptospirosis) [144]. They leveraged published research correlating outbreaks of these diseases with societal changes caused by flooding, including overcrowding and lack of sanitation and clean water. The scientists argued that coupling knowledge about human factors with factors observable by satellite imagery also could be used to predict the movement of vectors and the emergence and spread of vector-borne diseases. Another research group examined the correlation between malaria incidence and climatic data (i.e., rainfall estimates, vegetation levels, and surface temperature of the land) collected through satellites [145]. More recently, researchers described the use of satellite imagery to monitor nighttime lights to examine the pattern of measles cases in Western Africa [146]. These scientists used nighttime lights as a surrogate for population density, which affects pathogen transmission, to predict outbreaks of measles.
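The kind of correlation analysis mentioned above can be sketched with a Pearson correlation coefficient computed between a climate variable and case counts. The numbers below are invented toy values, not data from the cited studies, and the function name `pearson` is an assumption for this example; a real analysis would also consider time lags, confounders, and spatial structure.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Toy monthly values (illustrative only, not real observations).
rainfall_mm = [10, 20, 30, 40, 50]
reported_cases = [12, 25, 31, 48, 55]
r = pearson(rainfall_mm, reported_cases)
```

A coefficient close to 1 in such an analysis would indicate that higher rainfall tends to coincide with more reported cases, but correlation alone does not establish that one causes the other.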

13.3.3 Syrian Chemical Weapons Attack

In 2013, hundreds of men, women, and children were killed in Ghouta, Syria with missiles carrying chemical weapons. In the immediate aftermath of the attacks, Human Rights Watch, working with scientists, examined images and videos posted on social media sites to analyze the types and amounts of weapon used in the attack, which led the organization to attribute the attacks to the Syrian government [147]. They leveraged Facebook and other social media sites to examine the content in image and video files that were being posted by attack victims and Human Rights Watch staff in country. The scientists, some of whom had expertise in chemistry, chemical weapons, and arms control, were able to use the posted images to identify: (a) the signs of disease caused by chemical weapons; (b) the location, timing, and duration of the attacks; and (c) the type of weapon used to deliver the agent.

These examples illustrate different ways by which technology can be used to address societal needs. Similar innovations, technologies, and applications can be leveraged to address biodefense needs ranging from threat assessment through recovery after a significant natural or man-made event.

13.4 Underlying Factors of Leveraging Biotechnologies for Biodefense

During the Obama Administration, the White House Office of Science and Technology Policy, National Science and Technology Council, and National Security Staff issued several reports and Executive-level policies encouraging the advancement and application of science and technology (S&T) to address national security objectives, including biodefense objectives. In 2013, the White House released the National Biosurveillance Science and Technology Roadmap, which described current capabilities and S&T gaps and needs to support implementation of the National Strategy for Biosurveillance [148]. In 2014, the White House released a report on Strengthening Forensic Science, which highlighted capabilities and implementation challenges for accreditation and certification of forensic science and workforce development of medical and legal personnel [149]. In 2016, the White House issued three reports highlighting S&T needs for disaster preparedness, reduction, and resilience, including The National Preparedness Science and Technology Task Force Report: Identifying Science and Technology Opportunities for National Preparedness [150], The Implementation Roadmap for the Critical Infrastructure Security and Resilience Research and Development Plan [151], and The Homeland Biodefense Science and Technology Capability Review [152]. The Task Force Report highlighted preparedness initiatives that could benefit from advances in S&T. The Implementation Roadmap enumerated the research and development activities that enhance security and resilience of critical infrastructure. The biodefense capability review highlighted the S&T needs for countering biological threats affecting human and animal health.

The Homeland Biodefense Science and Technology Capability Review described the results of an interagency process to identify science and technology needs to meet critical biodefense objectives in threat awareness, surveillance and detection, and recovery [152]. The report highlighted the critical needs for: (a) enhancing U.S. risk assessment methodologies; (b) conducting threat characterization research (including assessing the consequences of a deliberate attack on pets and wildlife); (c) examining transmission through food and water; (d) studying avian influenza; (e) linking biosurveillance data to improve decision-making in a biological incident; (f) improving sampling, detection, and surveillance of environmental samples after an attack; (g) improving forensic analysis of a biological incident; (h) enhancing research and laboratory capacity during a crisis to inform modeling of biological attacks; (i) improving MCM shelf-life, production, and storage; (j) enhancing workforce development of responders to biological attacks; and (k) developing innovative decontamination methods. This list of science and technology needs for biodefense highlights the general need for workforce development, research support, and information-sharing with policy-makers. Some of these needs were addressed by the 2018 National Biodefense Strategy, which calls for research and development in a variety of areas important for preventing, detecting, and responding to natural and man-made biological threats [153].

Common to these documents is the recognized need for a proficient workforce, data sharing and communication with other scientists and decision-makers, and research and development to increase knowledge and develop new technologies and methodologies. However, a significant challenge seems to be the translation of operational or strategic need to specific research requirements that can be used to generate information and develop new products. Shortly after 2001, the U.S. government, philanthropic organizations, and universities supported research, education, and communication efforts. In the early 2010s, interest in continuing these efforts waned as time progressed without the occurrence of a major biological attack. However, during the past few years, particularly since the 2014–2016 Western African Ebolavirus and 2016 Zika virus outbreaks, concern about catastrophic natural and man-made threats has been met with renewed interest in funding research on risk assessment, prediction of future infectious disease threats, trends in biotechnology (specifically, horizon scanning; see Footnote 1), and decontamination. This increased interest in research has not necessarily been met with increased investment in education and training. Although a few formal, degree-granting programs in biodefense have existed, the only known programs that exist today are the Biohazardous Threat Agents and Emerging Infectious Diseases program at Georgetown University [154] and the Masters in Biodefense at George Mason University [155]. Furthermore, the number of universities that provide laboratory biosecurity training to graduate students in the life sciences has decreased during the past decade, but in their place programs through educational service providers, such as CITI [156], have emerged.
In addition, the American Biological Safety Association provides laboratory biosafety and biosecurity training to biosafety professionals [157]; the International Federation of Biosafety Associations has a certification program for biorisk management (i.e., methods for analyzing and managing accidental and deliberate biological risks) [158]; three organizations host leadership programs in biosecurity or health security [159,160,161]; and the U.S. government supports training activities. Despite these efforts, most scientists are not taught about biosecurity or biodefense issues (the exceptions are researchers working with Biological Select Agents and Toxins or in synthetic biology). This lack of training results in scientists who may not be able to evaluate the broader impact of their research, whether that impact is increased risk of research activities or enhanced benefits to meet national security needs. Without such knowledge, scientists and engineers may not be able to identify and transition knowledge or technologies that can address outstanding biodefense needs, or to identify potential risks associated with their research. This difficulty is more pronounced with pathogen research than with research that does not involve pathogens (e.g., creating synthetic organisms to develop platforms for synthetic antimicrobial products). More generally, translating biodefense needs into research projects appears to be more challenging than translating needs to improve human health, agriculture, or environmental health.

Overcoming these challenges requires a system in which decision-makers are able to convey their needs to funding organizations, which in turn need to convey their needs to the scientific and engineering communities. To be successful, this system requires creativity at all stages to identify solutions that address particular needs and leverage the capabilities generated by advances in physics, chemistry, engineering, material science, computer science, social and behavioral sciences, and the life sciences. Promoting creativity is harder than it appears, often requiring researchers and decision-makers to think differently than they were taught. For example, many scientists, especially life scientists, approach research from a discovery science standpoint, i.e., they develop and test hypotheses to generate knowledge, create new products (e.g., high-throughput drug discovery and pre-clinical vaccine development), and/or develop new technologies to improve research efforts or as product platforms. On the other end of the spectrum are engineers, who often conduct design-based research, which involves identifying the specific product needs before developing the methodological approach to achieve the desired output (e.g., synthetic organisms designed to produce industrial chemicals). Both approaches enable innovation within fields and sometimes across fields, but they also may contribute to conceptual barriers to identifying solutions from high-level need. This challenge may be exacerbated for decision-makers whose area of expertise is not research and who, consequently, may have trouble defining product specifications from the strategic or operational needs.

One potential solution to this issue is to provide students and junior and senior scientists and engineers with more opportunities to learn about different approaches to knowledge and product development. This level of awareness-raising can be achieved through training or fellowship opportunities, conferences and symposia, collaborative grants, and/or challenge competitions. In addition, these workforce development opportunities could be enhanced further by including biodefense topics and needs in training curricula and other engagement venues. The goal of these efforts would be to impart the necessary knowledge to the scientific and engineering communities about the critical needs in biodefense that emerging biotechnologies may be able to address. Through these training and career development opportunities, scientists and engineers may gain the necessary knowledge about the problems that need addressing, previously attempted approaches, and the willingness to transition new technologies. Furthermore, they may be better positioned to identify which information and technologies may be most effective for addressing a given biodefense need.

In addition to developing a science and engineering workforce that understands the biodefense objectives and needs, educating decision-makers about advances in emerging biotechnology may enhance current capabilities or add new ones not previously imagined. Although some decision-makers are examining cutting-edge biotechnologies to harness their capabilities for biodefense purposes, many are largely unaware of the benefits afforded by these technologies. In fact, several security experts and decision-makers view emerging biotechnology more as a risk than a benefit and consider the realization of risky outcomes to be more plausible than the realization of benefit. This attitude prevents decision-makers and security experts alike from seeing the potential of biology and biotechnology for addressing outstanding capability gaps. Educational efforts directed towards raising awareness of current and near-future technology trends and their application to other fields may help decision-makers understand which technologies can be leveraged, which require further investment, and which have associated legal, ethical, and social barriers limiting their application.

These efforts could be enhanced through directed engagement and dialogue among scientists, engineers, and decision-makers, especially if one result is joint brainstorming of potential technology solutions for biodefense science and technology needs. This ideation process could help drive creativity in identifying potential technologies and information that could be harnessed, even if outside the expertise of the scientists involved in the process. Furthermore, this process could have broader benefit by helping to develop research agendas that may generate wider interest among the scientific community. It also could assist with the translation of strategic or operational biodefense needs into more defined research and development programs, particularly ones that harness the capabilities of emerging biotechnologies.

13.5 Conclusions

The emergence of new biotechnologies provides great promise for biodefense, especially for key objectives of biosurveillance and early warning, microbial forensics, risk and threat assessment, horizon scanning in biotechnology, and MCM development, scale-up, and delivery. Understanding and leveraging newly developed capabilities afforded by emerging biotechnologies require knowledge about cutting-edge research and its real or proposed application(s) to analogous needs, the process through which biotechnologies advance, and the educational and research infrastructure that promotes multi-disciplinary science. When combined with knowledge about biodefense gaps that could be addressed through science and technology, creative scientists, engineers, and decision-makers can design research agendas or concepts that are based on the identified gaps and S&T needs and that leverage emerging biotechnologies. Innovation in research and technology development is driven by sector-specific needs and the convergence of the physical, chemical, material, computer, engineering, and life sciences. Biotechnologies developed for other sectors could be applied to biodefense, especially if the individuals involved are able to innovate in concept design and development. Of all biodefense objectives, biosurveillance seems to have reaped the most benefit from emerging biotechnologies, specifically the integration and analysis of diverse clinical, biological, demographic, and other relevant data. More recently, scientists have begun applying synthetic biology, genomics, and microfluidics to the development of new products and platforms for MCMs. Unlike these initiatives, investments in microbial forensics have been few, limiting its ability to leverage biotechnology advances for collecting and analyzing data. Looking to the future, emerging biotechnologies can provide new opportunities for enhancing biodefense by addressing capability gaps.

Notes

1. Horizon scanning is the gathering and analysis of data to forecast trends in society, including technology trends.

References

Global Market Insights. Genome editing market. 2016.

Market Research Service. Genome editing: Genome Engineering Markets. Research and Markets. 2017.

Research and Markets. Genome editing: technologies and global markets. 2016.

PM Live Top Pharma List. Top 50 pharmaceutical products by global sales.

Bowick GC, Barrett ADT. Comparative pathogenesis and systems biology for biodefense virus vaccine development. J Biomed Biotechnol. 2010; Article ID 236528.

Burke W, Psaty BM. Personalized medicine in the era of genomics. JAMA. 2007;298(14):1682–4.

Ginsburg GS, Willard HF. Genomic and personalized medicine, vol. 1. San Diego: Academic; 2008.

Hesse H, Höfgen R. Application of genomics in agriculture. In: Hawkesford MJ, Buchner P, editors. Molecular analysis of plant adaptation to the environment. New York: Springer; 2001. p. 61–79.

Menachery VD, Schäfer A, Burnum-Johnson KE, Mitchell HD, Eisfeld AJ, Walters KB, et al. MERS-CoV and H5N1 influenza virus antagonize antigen presentation by altering the epigenetic landscape. Proc Natl Acad Sci. 2018;201706928.

Schäfer A, Baric RS. Epigenetic landscape during coronavirus infection. Pathogens. 2017;6(1):8.

Kruse U, Bantscheff M, Drewes G, Hopf C. Chemical and pathway proteomics: powerful tools for oncology drug discovery and personalized health care. Mol Cell Proteomics. 2008;7(10):1887–901.

Bundy JG, Davey MP, Viant MR. Environmental metabolomics: a critical review and future perspectives. Metabolomics. 2009;5(1):3.

Spratlin JL, Serkova NJ, Eckhardt SG. Clinical applications of metabolomics in oncology: a review. Clin Cancer Res. 2009;15(2):431–40.

Dennis KK, Auerbach SS, Balshaw DM, Cui Y, Fallin MD, Smith MT, et al. The importance of the biological impact of exposure to the concept of the exposome. Environ Health Perspect. 2016;124(10):1504.

Rappaport SM. Implications of the exposome for exposure science. J Expo Sci Environ Epidemiol. 2011;21(1):5.

Evans WE, Relling MV. Pharmacogenomics: translating functional genomics into rational therapeutics. Science. 1999;286(5439):487–91.

Phillips KA, Veenstra DL, Oren E, Lee JK, Sadee W. Potential role of pharmacogenomics in reducing adverse drug reactions: a systematic review. JAMA. 2001;286(18):2270–9.

Raunio H. In silico toxicology–non-testing methods. Front Pharmacol. 2011;2:33.

Viceconti M, Clapworthy G, Jan SVS. The virtual physiological human—a European initiative for in silico human modelling. J Physiol Sci. 2008;58(7):441–6.

Hay SI, George DB, Moyes CL, Brownstein JS. Big data opportunities for global infectious disease surveillance. PLoS Med. 2013;10(4):e1001413.

Khalil AS, Collins JJ. Synthetic biology: applications come of age. Nat Rev Genet. 2010;11(5):367.

National Research Council. Positioning synthetic biology to meet the challenges of the 21st century: summary report of a six academies symposium series. Washington, DC: National Academies Press; 2013.

Chang R, Nam J, Sun W. Direct cell writing of 3D microorgan for in vitro pharmacokinetic model. Tissue Eng Part C Methods. 2008;14(2):157–66.

Murphy SV, Atala A. 3D bioprinting of tissues and organs. Nat Biotechnol. 2014;32(8):773.

Nguyen DG, Funk J, Robbins JB, Crogan-Grundy C, Presnell SC, Singer T, Roth AB. Bioprinted 3D primary liver tissues allow assessment of organ-level response to clinical drug induced toxicity in vitro. PLoS One. 2016;11(7):e0158674.

Moeller L, Wang K. Engineering with precision: tools for the new generation of transgenic crops. AIBS Bull. 2008;58(5):391–401.

Voytas DF, Gao C. Precision genome engineering and agriculture: opportunities and regulatory challenges. PLoS Biol. 2014;12(6):e1001877.

Davis G, Casady WW, Massey RE. Precision agriculture: an introduction. Extension Publications (MU); 1998.

Schmaltz R. What is precision agriculture? AgFunder News. 2017. https://agfundernews.com/what-is-precision-agriculture.html

Huang X, Han B. Natural variations and genome-wide association studies in crop plants. Annu Rev Plant Biol. 2014;65:531–51.

Sharma A, Lee JS, Dang CG, Sudrajad P, Kim HC, Yeon SH, et al. Stories and challenges of genome wide association studies in livestock—a review. Asian Australas J Anim Sci. 2015;28(10):1371.

Georges F, Ray H. Genome editing of crops: a renewed opportunity for food security. GM Crops Food. 2017;8(1):1–12.

Laible G, Wei J, Wagner S. Improving livestock for agriculture–technological progress from random transgenesis to precision genome editing heralds a new era. Biotechnol J. 2015;10(1):109–20.

Adesina O, Anzai IA, Avalos JL, Barstow B. Embracing biological solutions to the sustainable energy challenge. Chem. 2017;2(1):20–51.

Ferry MS, Hasty J, Cookson NA. Synthetic biology approaches to biofuel production. Biofuels. 2012;3:9–12.

Reardon S. Leukaemia success heralds wave of gene-editing therapies. Nature. 2015;527(7577):146–7. https://doi.org/10.1038/nature.2015.18737

Bowler J. A Swedish scientist is using CRISPR to genetically modify healthy human embryos. Science Alert. 2016.

Ma H, Marti-Gutierrez N, Park SW, Wu J, Lee Y, Suzuki K, et al. Correction of a pathogenic gene mutation in human embryos. Nature. 2017;548(7668):413–9. https://doi.org/10.1038/nature23305

Cyranoski D, Ledford H. Genome-edited baby claim provokes international outcry. Nature. 2018;563(7733):607–8.

Kaiser J. A yellow light for embryo editing. Science. 2017;355(6326):675. https://doi.org/10.1126/science.355.6326.675-b

Carlson DF, Lancto CA, Zang B, Kim ES, Walton M, Oldeschulte D, et al. Production of hornless dairy cattle from genome-edited cell lines. Nat Biotechnol. 2016;34(5):479–81. https://doi.org/10.1038/nbt.3560

Panko B. Gene-edited cattle produce no horns. Science. 2016. https://doi.org/10.1126/science.aaf9982

Regalado A. Bill Gates doubles his bet on wiping out mosquitoes with gene editing. MIT Technology Review. 2016.

Inova Healthcare. Transforming healthcare with groundbreaking genomics research. http://www.inova.org/itmi/home. Accessed 4 Oct 2017.

Cyranoski D. China embraces precision medicine on a massive scale. Nature. 2016;529(7584):9–10.

Jang J. 3D bioprinting and in vitro cardiovascular tissue modeling. Bioengineering. 2017;4(3):71.

Aravamudhan S, Bellamkonda RV. Toward a convergence of regenerative medicine, rehabilitation, and neuroprosthetics. J Neurotrauma. 2011;28(11):2329–47.

Thakor NV. Neuroprosthetics: past, present and future. In: Jensen W, et al., editors. Replace, repair, restore, relieve–bridging clinical and engineering solutions in neurorehabilitation. Cham: Springer; 2014. p. 15–21.