Abstract

Peter J. Bickel has made far-reaching and wide-ranging contributions to many areas of statistics. This short article highlights his marvelous contributions to high-dimensional statistical inference and machine learning, which range from novel methodological developments and deep theoretical analysis to their applications. The focus is on reviewing and commenting on six of his recent papers in four areas, but only three of them are reproduced here owing to space limitations.

7.1 Contributions of Peter Bickel to Statistical Learning

7.1.1 Introduction

Advances in information technology have made data collection and dissemination much easier over the last decade. High dimensionality and large data sets characterize many contemporary statistical problems, from genomics and neural science to finance and economics, which present statistics and machine learning with both opportunities and challenges. These relatively new areas of statistical science encompass most of its research frontiers, and Peter Bickel is certainly a strong leader in them.

In response to the challenge of data complexity, new methods and greedy algorithms started to flourish in the 1990s, but their theoretical properties were not well understood. Among those are the boosting algorithms and the estimation of intrinsic dimensionality. In 2005, Peter Bickel and his coauthors gave a deep theoretical foundation for boosting algorithms (Freund and Schapire 1997; Bickel et al. 2005) and novel methods for the estimation of intrinsic dimensionality (Levina and Bickel 2005). Another example is the use of the LASSO (Tibshirani 1996) for high-dimensional variable selection. Recognizing the bias of the LASSO estimate, Fan and Li (2001) advocated a family of folded-concave penalties, including SCAD, to ameliorate the problem, and critically analyzed the theoretical properties of the resulting estimators, including the LASSO. See also Fan and Lv (2011) for further analysis. Candes and Tao (2007) introduced the Dantzig selector. Zou and Li (2008) related the family of folded-concave penalties to the adaptive LASSO (Zou 2006). It was Bickel et al. (2009) who critically analyzed the risk properties of the LASSO and the Dantzig selector, which has significantly helped the statistics and machine learning communities to better understand various variable selection procedures.

The covariance matrix features prominently in many statistical problems, from network and graphical models to statistical inference and portfolio management. Yet estimating large covariance matrices is intrinsically challenging, and a key issue is how to reduce the number of parameters in a large covariance matrix. In economics and finance, motivated by the arbitrage pricing theory, Fan et al. (2008) proposed to use the factor model to estimate the covariance matrix and its inverse. Yet the impact of dimensionality is still very large. Bickel and Levina (2008a,b) and Rothman et al. (2008) proposed the use of sparsity, either on the covariance matrix or on the precision matrix, to reduce the dimensionality. The penalized likelihood method used in Rothman et al. (2008) fits in the generic framework of Fan and Li (2001) and Fan and Lv (2011), and the theory developed therein is applicable. Yet Rothman et al. (2008) were able to utilize the specific structure of the covariance matrix and the Gaussian distribution to get much deeper results. Recognizing the intensive computation required by the penalized maximum likelihood method, Bickel and Levina (2008a,b) proposed a simple threshold estimator that achieves the same theoretical properties.

The papers will be reviewed in chronological order. They have had a high impact on the subsequent development of statistics, applied mathematics, computer science, information theory, and signal processing. Despite the young age of these papers, a Google Scholar search reveals that the six papers have around 900 citations. Their impact on the broader scientific community is evident.

7.1.2 Intrinsic Dimensionality

A general consensus is that high-dimensional data admit a lower-dimensional structure. The complexity of the data structure is characterized by the intrinsic dimensionality of the data, which is critical for manifold learning methods such as locally linear embedding, Isomap, and Laplacian and Hessian eigenmaps (Roweis and Saul 2000; Tenenbaum et al. 2000; Brand 2002; Donoho and Grimes 2003). These nonlinear dimensionality reduction methods go beyond traditional methods such as principal component analysis (PCA), which deals only with linear projections, and multidimensional scaling, which focuses on pairwise distances.

The techniques for estimating the intrinsic dimensionality before Levina and Bickel (2005) fall roughly into two groups: eigenvalue methods and geometric methods. The former are based on the number of eigenvalues greater than a given threshold. They fail on nonlinear manifolds. While localization enhances the applicability of PCA, local methods depend strongly on the choice of local regions and thresholds (Verveer and Duin 1995). The latter exploit the geometry of the data. A popular metric is the correlation dimension from fractal analysis. Yet there are a couple of parameters to be tuned.

The main contributions of Levina and Bickel (2005) are twofold: the paper derives the maximum likelihood estimate (MLE) from a statistical perspective and gives its statistical properties. The MLE here is really the local MLE in the terminology of Fan and Gijbels (1996). Before this seminal work, there were virtually no formal statistical properties for the estimation of intrinsic dimensionality. The methods were often too heuristic and the framework was not statistical.

The idea in Levina and Bickel (2005) is creative and statistical. Let \(\mathbf{X}_1, \ldots, \mathbf{X}_n\) be a random sample in \(\mathbb{R}^p\). They represent an embedding of an m-dimensional sample via \(\mathbf{X}_i = g(\mathbf{Y}_i)\), with unknown dimensionality m and unknown function g, in which \(\mathbf{Y}_i\) has a smooth density f in \(\mathbb{R}^m\). Because the embedding g is nonlinear, only local data can be used to determine m. Let R be small, tending to zero asymptotically. Given a point \(\mathbf{x}\) in \(\mathbb{R}^p\), the local information is summarized by the number of observations falling in the ball \(\{\mathbf{z} : \|\mathbf{z} - \mathbf{x}\| \leq t\}\), which is denoted by \(N_{\mathbf{x}}(t)\), for \(0 \leq t \leq R\). In other words, the local information around \(\mathbf{x}\) with radius R is characterized by the process

\[ \{N_{\mathbf{x}}(t) : 0 \leq t \leq R\}. \tag{7.1} \]

Clearly, \(N_{\mathbf{x}}(t)\) follows a binomial distribution with n trials and probability of success

\[ P(\|\mathbf{X}_i - \mathbf{x}\| \leq t) \approx f(\mathbf{x}) V(m) t^{m} \quad \text{as } t \to 0, \tag{7.2} \]

where \(V(m) = \pi^{m/2}[\Gamma(m/2 + 1)]^{-1}\) is the volume of the unit ball in \(\mathbb{R}^m\). Recall the approximation of the binomial distribution by the Poisson distribution. The process \(\{N_{\mathbf{x}}(t) : 0 \leq t \leq R\}\) is approximately a Poisson process with rate \(\lambda(t)\), which is n times the derivative of (7.2), or more precisely

\[ \lambda(t) = n f(\mathbf{x}) V(m)\, m\, t^{m-1}. \tag{7.3} \]

The parameters θ = log f(x) and m can be estimated by the maximum likelihood using the local observation (7.1).

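Assume that \(\{N_{\mathbf{x}}(t), 0 \leq t \leq R\}\) is an inhomogeneous Poisson process with rate \(\lambda(t)\). Then the log-likelihood of observing the process is given by

\[ L(m, \theta) = \int_0^R \log \lambda(t)\, dN_{\mathbf{x}}(t) - \int_0^R \lambda(t)\, dt. \tag{7.4} \]

This can be understood by breaking the data \(\{N_{\mathbf{x}}(t), 0 \leq t \leq R\}\) into the data

\[ N(\Delta), N(2\Delta), \ldots, N(T\Delta), \qquad \Delta = R/T, \tag{7.5} \]

with a large T and noticing that the increments of the data above follow independent Poisson distributions with mean \(\lambda(j\Delta)\Delta\) for the j-th increment (the dependence on \(\mathbf{x}\) is suppressed for brevity of notation). Therefore, using the Poisson formula, the likelihood of data (7.5) is

\[ \prod_{j=1}^{T} \exp(-\lambda(j\Delta)\Delta)\, \frac{[\lambda(j\Delta)\Delta]^{dN(j\Delta)}}{(dN(j\Delta))!}, \]

where \(dN(j\Delta) = N(j\Delta) - N(j\Delta - \Delta)\). Taking the logarithm and ignoring terms independent of the parameters, the log-likelihood of the observed data in (7.5) is

\[ \sum_{j=1}^{T} [\log \lambda(j\Delta)]\, dN(j\Delta) - \sum_{j=1}^{T} \lambda(j\Delta)\Delta. \]

Taking the limit as \(\Delta \to 0\), we obtain (7.4).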

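By taking the derivatives with respect to the parameters m and θ in (7.4) and setting them to zero, it is easy to obtain that

\[ \hat{m}_R(\mathbf{x}) = \Big\{ \log R - N_{\mathbf{x}}(R)^{-1} \int_0^R (\log t)\, dN_{\mathbf{x}}(t) \Big\}^{-1}. \]

Let \(T_k(\mathbf{x})\) be the distance from \(\mathbf{x}\) to its k-th nearest point. Then,

\[ \hat{m}_R(\mathbf{x}) = \Big\{ \frac{1}{N_{\mathbf{x}}(R)} \sum_{j=1}^{N_{\mathbf{x}}(R)} \log \frac{R}{T_j(\mathbf{x})} \Big\}^{-1}. \]

Now, instead of fixing the distance R, fix the number of points k; namely, take \(R = T_k(\mathbf{x})\) for a given k. Then \(N_{\mathbf{x}}(R) = k\) by definition and the estimator becomes

\[ \hat{m}_k(\mathbf{x}) = \Big\{ \frac{1}{k} \sum_{j=1}^{k} \log \frac{T_k(\mathbf{x})}{T_j(\mathbf{x})} \Big\}^{-1}. \tag{7.8} \]

Levina and Bickel (2005) realized that the parameter m is global whereas the estimate \(\hat{m}_k(\mathbf{x})\) is local, depending on the location \(\mathbf{x}\). They averaged the n estimates at the observed data points and obtained

\[ \hat{m}_k = \frac{1}{n} \sum_{i=1}^{n} \hat{m}_k(\mathbf{X}_i). \tag{7.9} \]

To reduce the sensitivity to the choice of the parameter k, they proposed to use

\[ \hat{m} = \frac{1}{k_2 - k_1 + 1} \sum_{k=k_1}^{k_2} \hat{m}_k \tag{7.10} \]

for given choices of \(k_1\) and \(k_2\).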
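The nearest-neighbour estimator described above can be sketched in a few lines. The following is a minimal illustration rather than the authors' implementation: it assumes the local estimate is the inverse of the average of \(\log\{T_k(\mathbf{x})/T_j(\mathbf{x})\}\) over \(j < k\), uses the \((k-2)\) normalization discussed later in this section, and averages over data points and over \(k_1 \le k \le k_2\). The function names and the test surface `embedded_sheet` are hypothetical, and the brute-force neighbour search is only suitable for small samples.

```python
import math
import random


def knn_distances(points, i, k):
    """Distances from points[i] to its k nearest neighbours, in increasing order."""
    x = points[i]
    dists = sorted(math.dist(x, z) for j, z in enumerate(points) if j != i)
    return dists[:k]


def local_mle(t, k):
    """Local dimension estimate from sorted neighbour distances t[0] <= ... <= t[k-1].

    Uses the (k - 2) normalization; assumes no duplicate points (t[0] > 0).
    """
    log_ratios = sum(math.log(t[k - 1] / t[j]) for j in range(k - 1))
    return (k - 2) / log_ratios


def averaged_mle(points, k1, k2):
    """Average the local estimates over all data points and over k = k1, ..., k2."""
    total = 0.0
    for i in range(len(points)):
        t = knn_distances(points, i, k2)
        total += sum(local_mle(t, k) for k in range(k1, k2 + 1))
    return total / (len(points) * (k2 - k1 + 1))


def embedded_sheet(n, seed=0):
    """Hypothetical test data: a 2-dimensional sheet mapped smoothly into R^5."""
    rng = random.Random(seed)
    sample = []
    for _ in range(n):
        u, v = rng.random(), rng.random()
        sample.append((u, v, math.sin(u), math.cos(v), u * v))
    return sample


if __name__ == "__main__":
    # The intrinsic dimension of the sheet is 2, although the data live in R^5.
    print(averaged_mle(embedded_sheet(300), k1=10, k2=20))
```

The neighbour distances are computed once per point, up to \(k_2\), and reused for every k in the range, which is why the average over k adds little cost.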

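The above discussion reveals that the parameter m is estimated in a semiparametric model in which \(f(\mathbf{x})\) is fully nonparametric. Levina and Bickel (2005) estimate the global parameter m by averaging. Averaging reduces variances, but not biases. Therefore, it requires k to be small. However, when p is large, even with a small k, \(T_k(\mathbf{x})\) can be large and so can the bias. For semiparametric models, the work of Severini and Wong (1992) shows that the profile likelihood can have a better bias property. Inspired by that, an alternative version of the estimator is to use the global likelihood, which adds up the local likelihood (7.4) at each data point \(\mathbf{X}_i\), i.e.

\[ L(m, \theta_1, \ldots, \theta_n) = \sum_{i=1}^{n} \Big\{ \int_0^R \log \lambda_i(t)\, dN_{\mathbf{X}_i}(t) - \int_0^R \lambda_i(t)\, dt \Big\}, \tag{7.11} \]

where \(\lambda_i(t) = n \exp(\theta_i) V(m)\, m\, t^{m-1}\) and \(\theta_i = \log f(\mathbf{X}_i)\). Following the same derivations as in Levina and Bickel (2005), we obtain the maximum profile likelihood estimator

\[ \hat{m}_R = \Big\{ \frac{\sum_{i=1}^{n} \sum_{j=1}^{N_{\mathbf{X}_i}(R)} \log \{R / T_j(\mathbf{X}_i)\}}{\sum_{i=1}^{n} N_{\mathbf{X}_i}(R)} \Big\}^{-1}. \tag{7.12} \]

In its nearest-neighbour form,

\[ \hat{m}_k = \Big\{ \frac{1}{n(k-2)} \sum_{i=1}^{n} \sum_{j=1}^{k-1} \log \frac{T_k(\mathbf{X}_i)}{T_j(\mathbf{X}_i)} \Big\}^{-1}. \tag{7.13} \]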

The reason for divisor (k − 2) instead of k is given in the next paragraph. It will be interesting to compare the performance of the method (7.13) with (7.9).
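To make the suggested comparison concrete, here is a hedged sketch of the two ways of combining local information. It assumes the pooled (profile-likelihood) form inverts the grand average of \(\log\{T_k(\mathbf{X}_i)/T_j(\mathbf{X}_i)\}\) with divisor \(n(k-2)\), while the averaged form inverts at each point first and then averages. All names are hypothetical and the data are simulated for illustration only.

```python
import math
import random


def log_ratio_sums(points, k):
    """For each point, the sum over j < k of log(T_k / T_j) of its neighbour distances."""
    sums = []
    for i, x in enumerate(points):
        t = sorted(math.dist(x, z) for j, z in enumerate(points) if j != i)[:k]
        sums.append(sum(math.log(t[k - 1] / t[j]) for j in range(k - 1)))
    return sums


def pooled_estimate(points, k):
    """Profile-likelihood style estimate: pool the log-ratio sums, then invert once."""
    sums = log_ratio_sums(points, k)
    return len(points) * (k - 2) / sum(sums)


def averaged_estimate(points, k):
    """Averaged local estimates: invert at each point, then average."""
    sums = log_ratio_sums(points, k)
    return sum((k - 2) / s for s in sums) / len(points)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical data: uniform points on a 2-dimensional square sitting inside R^4.
    square = [(rng.random(), rng.random(), 0.0, 0.0) for _ in range(300)]
    print("pooled:  ", pooled_estimate(square, 10))
    print("averaged:", averaged_estimate(square, 10))
```

With the same divisor, the pooled value can never exceed the averaged one, because a harmonic-type mean is bounded by the arithmetic mean; how the two compare in bias and variance is the empirical question raised in the text.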

Levina and Bickel (2005) derived the asymptotic bias and variance of estimator (7.8). They advocated the normalization of (7.8) by (k − 2) rather than k. With this normalization, they derived that, to the first order,

\[ E\,\hat{m}_k(\mathbf{x}) = m, \qquad \operatorname{var}\{\hat{m}_k(\mathbf{x})\} = \frac{m^2}{k-3}. \]

The paper has had a huge impact on manifold learning, with a wide range of applications from pattern analysis and object classification to machine learning and statistics. It has been cited nearly 200 times within 6 years of publication.

7.1.3 Generalized Boosting

Boosting is an iterative algorithm that uses a sequence of weak classifiers, each performing slightly better than a random guess, to build a stronger learner (Schapire 1990; Freund 1990) that can achieve the Bayes error rate. One of the most successful boosting algorithms is AdaBoost by Freund and Schapire (1997). The algorithm is powerful but appeared heuristic at the time. It was Breiman (1998) who noted that the AdaBoost classifier can be viewed as a greedy algorithm for empirical loss minimization. This connects the algorithm firmly to a statistical foundation and enables us to better understand its theoretical properties.

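Let \(\{(\mathbf{X}_i, Y_i)\}_{i=1}^{n}\) be an i.i.d. sample where \(Y_i \in \{-1, 1\}\). Let \(\mathcal{H}\) be a set of weak learners. Breiman (1998) observed that the AdaBoost classifier is \(\mathrm{sgn}(F(\mathbf{X}))\), where F is found by a greedy algorithm minimizing

\[ n^{-1} \sum_{i=1}^{n} \exp\{-Y_i F(\mathbf{X}_i)\} \]

over the class of functions

\[ \mathcal{F}_{\infty} = \Big\{ \sum_{j=1}^{k} \lambda_j h_j : \lambda_j \in \mathbb{R},\ h_j \in \mathcal{H},\ k = 1, 2, \ldots \Big\}. \]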

The work of Bickel et al. (2005) generalizes AdaBoost in two important directions: a more general class of convex loss functions and a more flexible class of algorithms. This enables them to study the convergence of the algorithms and classifiers in a unified framework. Let us state the population version of their algorithms to simplify the notation. The goal is to find \(F \in \mathcal{F}_{\infty}\) to minimize \(w(F) = EW(YF)\) for a convex loss \(W(\cdot)\). They proposed two relaxed Gauss-Southwell algorithms, which are basically coordinatewise optimization algorithms in high-dimensional space. Given the current value \(F_m\) and coordinate h, one intends to minimize \(W(F_m + \lambda h)\) over \(\lambda \in \mathbb{R}\). The first algorithm is as follows: for given \(\alpha \in (0, 1]\) and \(F_0\), find inductively \(F_1, F_2, \ldots\) by \(F_{m+1} = F_m + \lambda_m h_m\), \(\lambda_m \in \mathbb{R}\), \(h_m \in \mathcal{H}\) such that

\[ W(F_{m+1}) \leq \alpha \min_{\lambda \in \mathbb{R},\, h \in \mathcal{H}} W(F_m + \lambda h) + (1 - \alpha) W(F_m). \tag{7.16} \]

In particular, when \(\lambda_m\) and \(h_m\) minimize \(W(F_m + \lambda h)\), then (7.16) is obviously satisfied. The generalization covers the possibility that the minimum of \(W(F_m + \lambda h)\) is not attained or is attained at multiple points. The algorithm is very general in the sense that it does not even specify a way to find \(\lambda_m\) and \(h_m\), but a necessary condition of (7.16) is that

\[ W(F_{m+1}) \leq W(F_m). \]

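In other words, the target value does not increase at any iteration. The second algorithm is the same as the first one but requires, for a given constant \(\gamma > 0\),

\[ W(F_{m+1}) + \gamma \lambda_m^2 \leq \alpha \min_{\lambda \in \mathbb{R},\, h \in \mathcal{H}} \{ W(F_m + \lambda h) + \gamma \lambda^2 \} + (1 - \alpha) W(F_m). \]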

Under such a broad class of algorithms, Bickel et al. (2005) demonstrated unambiguously and convincingly that the generalized boosting algorithm converges to the Bayes classifier. They further demonstrated that the generalized boosting algorithms are consistent when the sample versions are used. In addition, they were able to derive the algorithmic speed of convergence, minimax rates of convergence of the generalized boosting estimator to the Bayes classifier, and the minimax rates of the Bayes classification regret. The results are deep and useful. The work puts boosting algorithms in a formal statistical framework and provides insightful understanding of their fundamental properties.
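The flavour of the first relaxed algorithm can be illustrated on a sample. The sketch below is not the authors' procedure: it assumes the acceptance rule that the new risk is at most \(\alpha\) times the best achievable one-step risk plus \((1-\alpha)\) times the current risk, takes the exponential loss \(W(v) = e^{-v}\), uses one-dimensional stumps as hypothetical weak learners, and approximates the minimum over \(\lambda\) on a grid. It deliberately accepts the first admissible step rather than the best one, to emphasize that the rule does not prescribe how \(\lambda_m\) and \(h_m\) are found.

```python
import math
import random


def risk(margins):
    """Empirical version of w(F) = E W(YF) for the convex loss W(v) = exp(-v)."""
    return sum(math.exp(-v) for v in margins) / len(margins)


def relaxed_gauss_southwell(xs, ys, alpha=0.5, steps=10):
    """Greedy coordinatewise descent that accepts any step meeting the relaxed bound.

    Weak learners are stumps h_c(x) = 1 if x > c else -1 (a hypothetical choice),
    and the minimum over lambda is approximated on a grid (also an assumption).
    """
    stump_outputs = [[1.0 if x > c else -1.0 for x in xs] for c in sorted(set(xs))]
    lambdas = [0.1 * j for j in range(-20, 21)]  # the grid includes lambda = 0
    F = [0.0] * len(xs)  # F_0 = 0, stored through its values at the sample points
    risks = [risk([y * f for y, f in zip(ys, F)])]
    for _ in range(steps):
        candidates = []
        for out in stump_outputs:
            for lam in lambdas:
                value = risk([y * (f + lam * o) for y, f, o in zip(ys, F, out)])
                candidates.append((value, lam, out))
        best = min(value for value, _, _ in candidates)
        # Same as alpha * best + (1 - alpha) * current, written to stay >= best.
        bound = best + (1.0 - alpha) * (risks[-1] - best)
        # Relaxation: take the first candidate within the bound, not necessarily the best.
        value, lam, out = next(c for c in candidates if c[0] <= bound)
        F = [f + lam * o for f, o in zip(F, out)]
        risks.append(value)
    return F, risks


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [rng.random() for _ in range(30)]
    ys = [1.0 if x > 0.5 else -1.0 for x in xs]
    F, risks = relaxed_gauss_southwell(xs, ys)
    print([round(r, 4) for r in risks])  # a non-increasing sequence of risks
```

Setting `alpha=1.0` forces every step to attain the grid minimum, which recovers an ordinary greedy coordinate search; smaller values allow cheaper, less exact steps while still guaranteeing that the risk does not increase.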

7.1.3.1 Regularization of Covariance Matrices

It is well known that the sample covariance matrix has unexpected features when p and n are of the same order (Marčenko and Pastur 1967; Johnstone 2001). Regularization is needed in order to obtain the desired statistical properties. Peter Bickel pioneered the work on the estimation of large covariance matrices and led the development of the field through three seminal papers in 2008. Before Bickel's work, the theoretical work was very limited, often confining the dimensionality to be finite [with the exception of Fan et al. (2008)], which does not reflect the nature of high dimensionality. It is Bickel's work that allows the dimensionality to grow much faster than the sample size.

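To regularize the covariance matrices, one needs to impose some sparsity conditions. The methods used to exploit sparsity are thresholding and the penalized quasi-likelihood approach. The former is frequently applied to situations in which the sparsity is imposed on elements that are directly estimable. For example, when the p × p covariance matrix Σ is sparse, a natural estimator is the following thresholding estimator

\[ T_t(\hat{\Sigma}) = \big( \hat{\sigma}_{ij}\, I(|\hat{\sigma}_{ij}| \geq t) \big) \]

for a thresholding parameter t, where \(\hat{\Sigma} = (\hat{\sigma}_{ij})\) is the sample covariance matrix. Bickel and Levina (2008b) considered the class of matrices

\[ \Big\{ \Sigma : \sigma_{ii} \leq M,\ \sum_{j=1}^{p} |\sigma_{ij}|^{q} \leq c_p \ \text{for all } i \Big\} \]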

for \(0 \leq q < 1\). In particular, when q = 0, \(c_p\) is the maximum number of nonvanishing elements in each row. They showed that when the data follow the Gaussian distribution and \(t_n = M'(n^{-1} \log p)^{1/2}\) for a sufficiently large constant M′,

\[ \|T_{t_n}(\hat{\Sigma}) - \Sigma\| = O_P\big( c_p (n^{-1} \log p)^{(1-q)/2} \big) \]

and

\[ p^{-1} \|T_{t_n}(\hat{\Sigma}) - \Sigma\|_F^2 = O_P\big( c_p (n^{-1} \log p)^{1 - q/2} \big) \]

uniformly over the class of matrices defined above, where \(\|\mathbf{A}\|^{2} = \lambda_{\max}(\mathbf{A}^{T}\mathbf{A})\) defines the operator norm of a matrix \(\mathbf{A}\) and \(\|\mathbf{A}\|_{F}^{2} = \sum_{i,j} a_{ij}^{2}\) defines the Frobenius norm. Similar results were derived when the distributions are sub-Gaussian or have finite moments, or when \(t_n\) is chosen by cross-validation, the analysis of which is technically very challenging and novel. This work, along with Bickel and Levina (2008a) and El Karoui (2008), gives the first results of this kind, allowing p ≫ n as long as \(c_p\) does not grow too fast.
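As a small illustration of hard thresholding, the sketch below computes a sample covariance matrix and zeroes every entry whose absolute value falls below a level of order \(\{(\log p)/n\}^{1/2}\). It is a toy example in plain Python: the constant in front of the threshold (here 2) is an arbitrary assumption, whereas in practice it would be tuned, for example by cross-validation, and all function names are hypothetical.

```python
import math
import random


def sample_covariance(rows):
    """Sample covariance matrix (divisor n) of a list of observations."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    centered = [[r[j] - means[j] for j in range(p)] for r in rows]
    return [
        [sum(c[i] * c[j] for c in centered) / n for j in range(p)]
        for i in range(p)
    ]


def hard_threshold(cov, t):
    """Keep entries with absolute value at least t and set the others to zero."""
    return [[v if abs(v) >= t else 0.0 for v in row] for row in cov]


def threshold_level(n, p, constant=2.0):
    """t_n = M' * sqrt(log(p) / n); the constant M' = 2 is an arbitrary illustration."""
    return constant * math.sqrt(math.log(p) / n)


if __name__ == "__main__":
    rng = random.Random(0)
    n, p = 50, 20
    # Simulated data with true covariance equal to the identity matrix.
    data = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
    estimate = hard_threshold(sample_covariance(data), threshold_level(n, p))
    kept = sum(1 for i in range(p) for j in range(p) if i != j and estimate[i][j] != 0.0)
    print("nonzero off-diagonal entries kept:", kept)
```

The operation is entrywise and costs no more than forming the sample covariance matrix itself, which is the computational appeal of thresholding relative to penalized likelihood.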

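When the covariance matrix admits a banded structure whose off-diagonal elements decay quickly:

\[ \max_{j} \sum_{i : |i - j| > k} |\sigma_{ij}| \leq C k^{-\alpha} \quad \text{for all } k > 0, \tag{7.22} \]

as arises frequently in time-series applications, including the covariance matrix of a weakly dependent stationary time series, Bickel and Levina (2008a) proposed banding, or more generally tapering, to take advantage of the prior sparsity structure. Let

\[ B_k(\hat{\Sigma}) = \big( \hat{\sigma}_{ij}\, I(|i - j| \leq k) \big) \]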

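be the banded sample covariance matrix. They showed that by taking \(k_n \asymp (n^{-1} \log p)^{-1/(2(\alpha + 1))}\),

\[ \|B_{k_n}(\hat{\Sigma}) - \Sigma\| = O_P\big( (n^{-1} \log p)^{\alpha/(2(\alpha + 1))} \big) \]

uniformly in the class of matrices (7.22) with the additional restrictions that

\[ 0 < \varepsilon \leq \lambda_{\min}(\Sigma) \leq \lambda_{\max}(\Sigma) \leq \varepsilon^{-1}. \]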

This again shows that a large sparse covariance matrix can be estimated well even when p ≥ n. The results are related to the estimation of spectral density (Fan and Gijbels 1996), but also allow non-stationary covariance matrices.
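Banding is equally simple to express in code. The sketch below keeps the entries within k of the diagonal and computes a bandwidth of the order stated above. The multiplicative constant in the bandwidth is an assumption (set to 1 here), and the geometrically decaying matrix in the demo merely stands in for a sample covariance matrix.

```python
import math


def band(cov, k):
    """Banding operator: keep entry (i, j) when |i - j| <= k, zero it otherwise."""
    return [
        [v if abs(i - j) <= k else 0.0 for j, v in enumerate(row)]
        for i, row in enumerate(cov)
    ]


def bandwidth(n, p, alpha, constant=1.0):
    """k_n of order (log(p) / n) ** (-1 / (2 * (alpha + 1))); the constant is an assumption."""
    return int(constant * (math.log(p) / n) ** (-1.0 / (2.0 * (alpha + 1.0))))


if __name__ == "__main__":
    # Hypothetical matrix with entries 0.5 ** |i - j|, decaying away from the diagonal.
    p = 6
    sigma = [[0.5 ** abs(i - j) for j in range(p)] for i in range(p)]
    k = bandwidth(n=100, p=50, alpha=1.0)
    for row in band(sigma, k):
        print(row)
```

Unlike thresholding, banding depends on the ordering of the variables, so it is natural for time series or other data with a meaningful index.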

When the precision matrix \(\Omega = \Sigma^{-1}\) is sparse, there is no easy way to apply a thresholding rule. Hence, Rothman et al. (2008) appealed to the penalized likelihood method. Let \(\ell_n(\theta)\) be the quasi-likelihood function based on a sample of size n, and suppose that θ is known to be sparse. Then the penalized likelihood admits the form

\[ \ell_n(\theta) - n \sum_{j} p_{\lambda}(|\theta_j|). \]

Fan and Li (2001) advocated the use of a folded-concave penalty \(p_{\lambda}\) to obtain a better bias property and laid down a general theory. In particular, when the data \(\mathbf{X}_1, \ldots, \mathbf{X}_n\) are i.i.d. from N(0, Σ), the penalized likelihood reduces to

\[ \frac{n}{2} \log |\Omega| - \frac{n}{2} \mathrm{tr}(\hat{\Sigma}\Omega) - n \sum_{i,j} p_{\lambda}(|\omega_{ij}|), \]

where the matrix Ω is assumed to be sparse and is of primary interest. Rothman et al. (2008) utilized the fact that the diagonal elements are non-vanishing and should not be penalized. They proposed the penalized likelihood estimator \(\hat{{\Omega }}_{\lambda }\), which maximizes

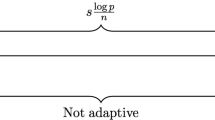

They showed that when \(\lambda \asymp [(\log p)/n]^{1/2}\),

\[ \|\hat{\Omega}_{\lambda} - \Omega\|_F^2 = O_P\big( (p + s)(\log p)/n \big), \tag{7.27} \]

where s is the number of nonvanishing off-diagonal elements. Note that there are p + 2s nonvanishing elements in Ω, and (7.27) reveals that each nonvanishing element is estimated, on average, with rate \((n^{-1} \log p)^{1/2}\).
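For readers who want to experiment, the following sketch evaluates an \(\ell_1\)-penalized Gaussian log-likelihood for a candidate precision matrix. It assumes the objective \(\log|\Omega| - \mathrm{tr}(\hat{\Sigma}\Omega) - \lambda\sum_{i\neq j}|\omega_{ij}|\), with the diagonal left unpenalized as described above; it only evaluates the criterion and does not implement an optimizer. The helper names are hypothetical, and the log-determinant is obtained from a hand-written Cholesky factorization so that the block needs only the standard library.

```python
import math


def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][m] * low[j][m] for m in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                low[i][j] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return low


def penalized_log_likelihood(omega, sample_cov, lam):
    """log det(omega) - tr(sample_cov @ omega) - lam * sum of |off-diagonal entries|."""
    p = len(omega)
    low = cholesky(omega)
    log_det = 2.0 * sum(math.log(low[i][i]) for i in range(p))
    trace = sum(sample_cov[i][j] * omega[j][i] for i in range(p) for j in range(p))
    penalty = lam * sum(abs(omega[i][j]) for i in range(p) for j in range(p) if i != j)
    return log_det - trace - penalty


if __name__ == "__main__":
    sample_cov = [[1.0, 0.3], [0.3, 1.0]]
    sparse = [[1.0, 0.0], [0.0, 1.0]]
    dense = [[1.1, -0.33], [-0.33, 1.1]]  # roughly the inverse of sample_cov
    for lam in (0.0, 0.5):
        print(lam, penalized_log_likelihood(sparse, sample_cov, lam),
              penalized_log_likelihood(dense, sample_cov, lam))
```

The Cholesky step fails exactly when the candidate is not positive definite, which mirrors how the likelihood term restricts the estimate to positive definite matrices.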

Note that thresholding and banding are very simple and easy to use. However, the resulting estimators are not guaranteed to be positive semi-definite. Penalized likelihood can be used to enforce positive definiteness in the optimization. It can also be applied to estimate sparse covariance matrices and sparse Cholesky decompositions; see Lam and Fan (2009).

The above three papers give us a comprehensive overview of the estimability of large covariance matrices. They have inspired much follow-up work, including Levina et al. (2008), Lam and Fan (2009), Rothman et al. (2009), Cai et al. (2010), Cai and Liu (2011), and Cai and Zhou (2012), among others. In particular, the work inspired Fan et al. (2011) to propose an approximate factor model, allowing the idiosyncratic errors among financial assets to have a sparse covariance matrix, which significantly widens the scope and applicability of the strict factor model in finance. It also helps to resolve the aforementioned semi-definiteness issue that arises from thresholding.

7.1.4 Variable Selection

Peter Bickel's contributions to high-dimensional regression are highlighted by his paper with Ritov and Tsybakov (Bickel et al. 2009) on the analysis of the risk properties of the LASSO and the Dantzig selector. This is done in the least-squares setting, either for nonparametric regression via basis approximations (approximate linear model) or for the linear model itself. The analysis is based on the following important observations in Bickel et al. (2009).

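Recall that the LASSO estimator \(\hat{\beta}_{L}\) minimizes

\[ (2n)^{-1} \|\mathbf{Y} - \mathbf{X}\beta\|^2 + \lambda \|\beta\|_1. \tag{7.28} \]

A necessary condition is that 0 belongs to the subdifferential of the function (7.28) at the minimizer, which implies that

\[ \|n^{-1} \mathbf{X}^{T}(\mathbf{Y} - \mathbf{X}\hat{\beta}_{L})\|_{\infty} \leq \lambda. \tag{7.29} \]

The Dantzig selector (Candes and Tao 2007) is defined by

\[ \hat{\beta}_{D} = \arg\min \big\{ \|\beta\|_1 : \|n^{-1} \mathbf{X}^{T}(\mathbf{Y} - \mathbf{X}\beta)\|_{\infty} \leq \lambda \big\}. \]

Thus, \(\hat{\beta}_{D}\) satisfies (7.29) and, by definition, has an \(L_1\)-norm no larger than that of the LASSO. They also show that for both the Lasso and the Dantzig estimator, the estimation error δ satisfies

\[ \|\delta_{J^c}\|_1 \leq c\, \|\delta_{J}\|_1 \]

with probability close to 1, where J is the subset of non-vanishing true regression coefficients and c is a constant (c = 1 for the Dantzig selector and c = 3 for the Lasso). This leads them to define restricted eigenvalue assumptions.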
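The subgradient condition for the LASSO discussed above can be checked numerically. The sketch below assumes the objective \((2n)^{-1}\|\mathbf{Y}-\mathbf{X}\beta\|^2 + \lambda\|\beta\|_1\), fits it by cyclic coordinate descent (a standard solver, not one taken from the paper), and then verifies that the sup-norm of \(n^{-1}\mathbf{X}^{T}(\mathbf{Y}-\mathbf{X}\hat{\beta})\) does not exceed \(\lambda\), so that the fitted LASSO coefficient is feasible for the Dantzig constraint. Function names and the simulated design are hypothetical.

```python
import math
import random


def soft_threshold(z, lam):
    """Soft-thresholding operator sign(z) * max(|z| - lam, 0)."""
    return math.copysign(max(abs(z) - lam, 0.0), z)


def lasso_coordinate_descent(X, y, lam, sweeps=200):
    """Minimize (2n)^{-1} ||y - X b||^2 + lam ||b||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    resid = list(y)  # residual y - X beta for the current beta
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(sweeps):
        for j in range(p):
            grad = sum(X[i][j] * resid[i] for i in range(n)) / n
            new = soft_threshold(grad + col_sq[j] * beta[j], lam) / col_sq[j]
            step = new - beta[j]
            if step != 0.0:
                for i in range(n):
                    resid[i] -= step * X[i][j]
                beta[j] = new
    return beta


def max_abs_score(X, y, beta):
    """Sup-norm of n^{-1} X^T (y - X beta)."""
    n, p = len(X), len(X[0])
    resid = [y[i] - sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
    return max(abs(sum(X[i][j] * resid[i] for i in range(n)) / n) for j in range(p))


if __name__ == "__main__":
    rng = random.Random(0)
    n, p, lam = 60, 10, 0.1
    X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
    truth = [2.0, -1.5] + [0.0] * (p - 2)  # sparse coefficient vector
    y = [sum(x * b for x, b in zip(row, truth)) + 0.5 * rng.gauss(0.0, 1.0) for row in X]
    beta = lasso_coordinate_descent(X, y, lam)
    print("estimate:", [round(b, 3) for b in beta])
    print("sup-norm of score:", max_abs_score(X, y, beta), "lambda:", lam)
```

At convergence the score equals \(\pm\lambda\) on the selected coordinates and is smaller in absolute value elsewhere, which is why the check is made with a small numerical tolerance.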

For the linear model, Bickel et al. (2009) established the convergence rates of

\[ \|\hat{\beta}_{D} - \beta\|_{p} \ (1 \leq p \leq 2) \quad \text{and} \quad \|\mathbf{X}(\hat{\beta}_{D} - \beta)\|_{2}. \]

The former concerns the convergence rate of the estimator and the latter the prediction risk of the estimator. They also established the rate of convergence for the Lasso estimator. Both estimators admit the same rate of convergence under the same conditions. Similar results hold when the method is applied to nonparametric regression. This leads Bickel et al. (2009) to conclude that the Dantzig selector and the Lasso estimator are approximately equivalent.

The contributions of the paper are multi-fold. First, it provides a good understanding of the performance of the newly invented Dantzig estimator and its relation to the Lasso estimator. Second, it introduces new technical tools for the analysis of penalized least-squares estimators. Third, it derives various new results, including oracle inequalities, for the Lasso and the Dantzig selector in both the linear model and the nonparametric regression model. The work has had a strong impact on the recent development of high-dimensional statistical learning. Within 3 years of its publication, it has been cited around 300 times.

References

Bickel PJ, Levina E (2008a) Regularized estimation of large covariance matrices. Ann Stat 36:199–227

Bickel PJ, Levina E (2008b) Covariance regularization by thresholding. Ann Stat 36:2577–2604

Bickel PJ, Ritov Y, Zakai A (2005) Some theory for generalized boosting algorithms. J Mach Learn Res 7:705–732

Bickel PJ, Ritov Y, Tsybakov A (2009) Simultaneous analysis of Lasso and Dantzig selector. Ann Stat 37:1705–1732

Brand M (2002) Charting a manifold. In: Advances in NIPS, vol 14. MIT Press, Cambridge, MA

Breiman L (1998) Arcing classifiers (with discussion). Ann Stat 26:801–849

Cai T, Liu W (2011) Adaptive thresholding for sparse covariance matrix estimation. J Am Stat Assoc 106:672–684

Cai T, Zhou H (2012) Minimax estimation of large covariance matrices under ℓ 1 norm (with discussion). Stat Sin, to appear

Cai T, Zhang C-H, Zhou H (2010) Optimal rates of convergence for covariance matrix estimation. Ann Stat 38:2118–2144

Candes E, Tao T (2007) The Dantzig selector: statistical estimation when p is much larger than n (with discussion). Ann Stat 35:2313–2404

Donoho DL, Grimes C (2003) Hessian eigenmaps: new locally linear embedding techniques for high-dimensional data. Technical Report TR 2003-08, Department of Statistics, Stanford University

El Karoui N (2008) Operator norm consistent estimation of large-dimensional sparse covariance matrices. Ann Stat 36:2717–2756

Fan J, Gijbels I (1996) Local polynomial modelling and its applications. Chapman and Hall, London

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96:1348–1360

Fan J, Lv J (2011) Non-concave penalized likelihood with NP-dimensionality. IEEE Trans Inf Theory 57:5467–5484

Fan J, Fan Y, Lv J (2008) Large dimensional covariance matrix estimation via a factor model. J Econ 147:186–197

Fan J, Liao Y, Mincheva M (2011) High dimensional covariance matrix estimation in approximate factor models. Ann Stat 39:3320–3356

Freund Y (1990) Boosting a weak learning algorithm by majority. In: Proceedings of the third annual workshop on computational learning theory. Morgan Kaufmann, San Mateo

Freund Y, Schapire RE (1997) A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55:119–139

Johnstone IM (2001) On the distribution of the largest eigenvalue in principal components analysis. Ann Stat 29:295–327

Lam C, Fan J (2009) Sparsistency and rates of convergence in large covariance matrices estimation. Ann Stat 37:4254–4278

Levina E, Bickel PJ (2005) Maximum likelihood estimation of intrinsic dimension. In: Saul LK, Weiss Y, Bottou L (eds) Advances in NIPS, vol 17. MIT Press, Cambridge, MA

Levina E, Rothman AJ, Zhu J (2008) Sparse estimation of large covariance matrices via a nested lasso penalty. Ann Appl Stat 2:245–263

Marčenko VA, Pastur LA (1967) Distributions of eigenvalues of some sets of random matrices. Math USSR-Sb 1:507–536

Rothman AJ, Bickel PJ, Levina E, Zhu J (2008) Sparse permutation invariant covariance estimation. Electron J Stat 2:494–515

Rothman AJ, Levina E, Zhu J (2009) Generalized thresholding of large covariance matrices. J Am Stat Assoc 104:177–186

Roweis ST, Saul LK (2000) Nonlinear dimensionality reduction by locally linear embedding. Science 290:2323–2326

Schapire R (1990) Strength of weak learnability. Mach Learn 5:197–227

Severini TA, Wong WH (1992) Generalized profile likelihood and conditional parametric models. Ann Stat 20:1768–1802

Tenenbaum JB, de Silva V, Langford JC (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290:2319–2323

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc B 58:267–288

Verveer P, Duin R (1995) An evaluation of intrinsic dimensionality estimators. IEEE Trans PAMI 17:81–86

Zou H (2006) The adaptive Lasso and its oracle properties. J Am Stat Assoc 101:1418–1429

Zou H, Li R (2008) One-step sparse estimates in nonconcave penalized likelihood models (with discussion). Ann Stat 36:1509–1533

Copyright information

© 2014 Springer Science+Business Media New York

About this chapter

Cite this chapter

Fan, J. (2014). High-Dimensional Statistics. In: Fan, J., Ritov, Y., Wu, C.F.J. (eds) Selected Works of Peter J. Bickel. Selected Works in Probability and Statistics, vol 13. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-5544-8_7

DOI: https://doi.org/10.1007/978-1-4614-5544-8_7

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-5543-1

Online ISBN: 978-1-4614-5544-8

eBook Packages: Mathematics and Statistics (R0)