Abstract

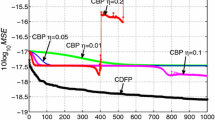

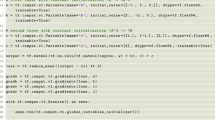

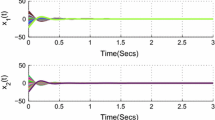

In this paper, we establish deterministic convergence of Wirtinger-gradient methods for a class of complex-valued neural networks on the premise that only a limited number of training samples is available. This differs from probabilistic convergence results, which assume that a large number of training samples are available. Weak and strong convergence results for Wirtinger-gradient methods are proved, indicating, respectively, that the Wirtinger gradient of the error function goes to zero and that the weight sequence converges to a fixed value. An upper bound on the learning rate is also provided to guarantee the deterministic convergence of Wirtinger-gradient methods. Simulations are provided to support the theoretical findings.
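To make the weak-convergence statement concrete, the following is a minimal sketch of Wirtinger-gradient descent on a small, fixed training set. It is not the network class or the learning-rate bound analysed in the paper: as a simplifying assumption it uses a single linear complex-valued neuron with the error function E(w) = Σ_j |d_j − x_jᵀw|², for which the Wirtinger gradient with respect to the conjugate weights is −Xᴴe, and for which any learning rate below 2/λ_max(XᴴX) guarantees a non-increasing error. The names `wirtinger_gradient`, `train`, `X`, `d` and `eta` are hypothetical and chosen only for this illustration.

```python
import numpy as np

def wirtinger_gradient(w, X, d):
    """Wirtinger gradient dE/d(conj(w)) of E(w) = sum_j |d_j - x_j^T w|^2.

    Assumption: a linear complex-valued neuron, used only as a toy model.
    """
    e = d - X @ w                 # complex residuals, one per sample
    return -X.conj().T @ e        # equals -X^H e

def train(X, d, eta, n_iter=500):
    """Batch Wirtinger-gradient descent; records the error and gradient norm."""
    w = np.zeros(X.shape[1], dtype=complex)
    errors, grad_norms = [], []
    for _ in range(n_iter):
        g = wirtinger_gradient(w, X, d)
        errors.append(float(np.sum(np.abs(d - X @ w) ** 2)))
        grad_norms.append(float(np.linalg.norm(g)))
        w = w - eta * g           # step against the conjugate gradient
    return w, errors, grad_norms

# A deliberately small training set: 6 samples, 3 complex weights.
rng = np.random.default_rng(0)
X = rng.standard_normal((6, 3)) + 1j * rng.standard_normal((6, 3))
d = rng.standard_normal(6) + 1j * rng.standard_normal(6)

# For this quadratic toy problem the residual obeys e_new = (I - eta X X^H) e,
# so any 0 < eta < 2 / lambda_max(X^H X) gives a non-increasing error.
lam_max = np.linalg.eigvalsh(X.conj().T @ X).max()
eta = 1.0 / lam_max

w, errors, grad_norms = train(X, d, eta)
print("first / last error:        ", errors[0], errors[-1])
print("first / last gradient norm:", grad_norms[0], grad_norms[-1])
```

In this toy setting the recorded error never increases and the gradient norm shrinks from one iteration to the next, which mirrors the deterministic behaviour described above without relying on a large number of samples. The actual bound for nonlinear complex-valued networks is derived in the paper itself and is not reproduced here.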

Additional information

This work was supported by the National Natural Science Foundation of China (Nos. 61301202, 61101228) and by the Research Fund for the Doctoral Program of Higher Education of China (No. 20122304120028).

About this article

Cite this article

Xu, D., Dong, J. & Zhang, H. Deterministic Convergence of Wirtinger-Gradient Methods for Complex-Valued Neural Networks. Neural Process Lett 45, 445–456 (2017). https://doi.org/10.1007/s11063-016-9535-9

DOI: https://doi.org/10.1007/s11063-016-9535-9