Abstract

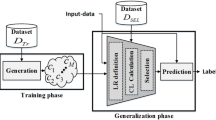

In this paper, we propose a new approach for the dynamic selection of ensembles of classifiers. Building on the concept of multistage organizations, whose main objective is to define a multi-layer fusion function adapted to each recognition problem, we propose the dynamic multistage organization (DMO), which defines the best multistage structure for each test sample. By extending Dos Santos et al.’s approach, we propose two implementations of DMO, namely DSAm and DSAc. The former uses a set of dynamic selection functions to generalize a DMO structure, whereas the latter uses contextual information, represented by the output profiles computed from the validation dataset, to conduct this task. The experimental evaluation, on both small and large datasets, showed that DSAc dominated DSAm on most problems, and that the use of contextual information can achieve better performance than other existing methods. In addition, the performance of DSAc can be further improved through incremental learning. However, the most important observation, supported by additional experiments, is that dynamic selection is generally preferable to static approaches when the recognition problem presents a high level of uncertainty.
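To make the idea of selecting an ensemble from output profiles more concrete, here is a minimal sketch of output-profile-based dynamic ensemble selection, loosely in the spirit of DSAc. It is not the authors' implementation. It assumes that an output profile is simply the vector of class labels predicted by the base classifiers, that profiles are compared with a Hamming distance, that candidate ensembles are given as tuples of classifier indices, and that each ensemble combines its members by majority vote. The names `majority_vote`, `select_and_classify` and the parameter `k` are hypothetical.

```python
import numpy as np


def majority_vote(labels):
    """Plurality vote over non-negative integer labels; ties go to the smallest label."""
    return int(np.bincount(labels).argmax())


def select_and_classify(test_profile, val_profiles, val_labels, ensembles, k=3):
    """Hypothetical output-profile-based dynamic ensemble selection.

    test_profile : (n_classifiers,) int array, labels the base classifiers
                   assign to the test sample (its output profile)
    val_profiles : (n_val, n_classifiers) int array, output profiles of
                   the validation samples
    val_labels   : (n_val,) int array, true labels of the validation samples
    ensembles    : list of tuples of base-classifier indices (candidates)
    k            : number of nearest validation profiles to consider
    """
    # Hamming distance between output profiles (an assumption of this sketch).
    dists = np.mean(val_profiles != test_profile, axis=1)
    neighbors = np.argsort(dists, kind="stable")[:k]

    best, best_acc = None, -1.0
    for ens in ensembles:
        cols = list(ens)
        # Majority-vote prediction of this ensemble on each neighbor.
        preds = np.array([majority_vote(val_profiles[i, cols]) for i in neighbors])
        acc = np.mean(preds == val_labels[neighbors])
        if acc > best_acc:  # strict '>' keeps the earlier ensemble on ties
            best, best_acc = ens, acc

    # Classify the test sample with the selected ensemble only.
    return best, majority_vote(test_profile[list(best)])


# Toy usage: 3 base classifiers, 4 validation samples, 2 classes.
val_profiles = np.array([[0, 0, 1], [0, 1, 1], [1, 1, 0], [1, 0, 0]])
val_labels = np.array([0, 1, 1, 0])
ensembles = [(0, 1), (1, 2), (0, 1, 2)]
test_profile = np.array([0, 1, 1])

print(select_and_classify(test_profile, val_profiles, val_labels, ensembles, k=2))
# -> ((1, 2), 1)
```

Searching for neighbors in output-profile space rather than in feature space means the selected ensemble is judged on validation samples for which the base classifiers behaved similarly; this is our reading of why output profiles can serve as contextual information, and the distance and scoring choices above are illustrative only.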

References

Brown G, Wyatt J, Harris R, Yao X (2005) Diversity creation methods: a survey and categorization. Inf Fusion 6(1):5–20

Dos Santos EM, Sabourin R, Maupin P (2006) Single and multi-objective genetic algorithms for the selection of ensemble of classifiers. In: Proceedings of international joint conference on neural networks, Vancouver, Canada, 2006, pp 3070–3077

Shipp CA, Kuncheva LI (2002) Relationships between combination methods and measures of diversity in combining classifiers. Inf Fusion 3(2):135–148

Kuncheva LI, Whitaker CJ, Shipp C, Duin R (2003) Limits on the majority vote accuracy in classifier fusion. Pattern Anal Appl 6(1):22–31

Ruta D, Gabrys B (2002) A theoretical analysis of the limits of majority voting errors for multiple classifier systems. Pattern Anal Appl 5:333–350

Ruta D, Gabrys B (2005) Classifier selection for majority voting. Inf Fusion 6(1):63–81

Dos Santos EM, Sabourin R, Maupin P (2008) A dynamic overproduce-and-choose strategy for the selection of classifier ensembles. Pattern Recognit 41:2993–3009

Woods K, Kegelmeyer WP Jr, Bowyer K (1997) Combination of multiple classifiers using local accuracy estimates. IEEE Trans Pattern Anal Mach Intell 19(4):405–410

Giacinto G, Roli F (2001) Dynamic classifier selection based on multiple classifier behaviour. Pattern Recognit 34:1879–1881

Zhu X, Wu X, Yang Y (2004) Dynamic classifier selection for effective mining from noisy data streams. In: Proceedings of the 4th IEEE international conference on data mining, IEEE Computer Society, Washington, DC, USA, 2004, pp 305–312

Soares RGF, Santana A, Canuto AMP, de Souto MCP (2006) Using accuracy and diversity to select classifiers to build ensembles. In: Proceedings of the 2006 international joint conference on neural networks, Vancouver, Canada, 2006, pp 1310–1316

Ko AH, Sabourin R, Britto J (2008) From dynamic classifier selection to dynamic ensemble selection. Pattern Recognit 41(5):1718–1731

Kuncheva LI (2000) Cluster-and-selection model for classifier combination. In: Proceedings of international conference on knowledge based intelligent engineering systems and allied technologies, Brighton, UK, 2000, pp 185–188

Singh S, Singh M (2005) A dynamic classifier selection and combination approach to image region labelling. Signal Process Image Commun 20(3):219–231

Hansen LK, Liisberg C, Salamon P (1997) The error-reject tradeoff. Open Syst Inf Dyn 4(2):159–184

Kuncheva LI, Bezdek JC, Duin RPW (2001) Decision templates for multiple classifier fusion: an experimental comparison. Pattern Recognit 34:299–314

Serpico SB, Bruzzone L, Roli F (1996) An experimental comparison of neural and statistical non-parametric algorithms for supervised classification of remote-sensing images. Pattern Recognit Lett 17(13):1331–1341

Park Y, Sklansky J (1990) Automated design of linear tree classifiers. Pattern Recognit 23(12):1393–1412

Oliveira LES, Sabourin R, Bortolozzi F, Suen CY (2002) Automatic recognition of handwritten numeral strings: a recognition and verification strategy. IEEE Trans Pattern Anal Mach Intell 24(11):1438–1454

Ho TK (1998) The random subspace method for constructing decision forests. IEEE Trans Pattern Anal Mach Intell 20(8):832–844

Rheaume F, Jousselme A-L, Grenier D, Bosse E, Valin P (2002) New initial basic probability assignments for multiple classifiers. In: Kadar I (ed) Society of photo-optical instrumentation engineers (SPIE) conference series, vol 4729, pp 319–328

Milgram J, Cheriet M, Sabourin R (2006) “One against one” or “One against all”: which one is better for handwriting recognition with SVMs? In: Proceedings of 10th international workshop on frontiers in handwriting recognition, La Baule, France, 2006

Radtke P (2006) Classification systems optimization with multi-objective evolutionary algorithms, Ph.D. thesis, École de Technologie Supérieure (ETS), Montreal, Canada

Milgram J (2007) Contribution à l’intégration des machines à vecteurs de support au sein de systèmes de reconnaissance de formes: application à la lecture automatique de l’écriture manuscrite (in French), Ph.D. thesis, École de Technologie Supérieure (ETS), Montreal, Canada

Cui B, Ooi BC, Su J, Tan K-L (2003) Contorting high dimensional data for efficient main memory knn processing. In: Proceedings of the 2003 ACM SIGMOD international conference on management of data, San Diego, USA, 2003, pp 479–490

Acknowledgments

The authors acknowledge CAPES (Brazil) and NSERC (Canada) for their financial support.

Cite this article

Cavalin, P.R., Sabourin, R. & Suen, C.Y. Dynamic selection approaches for multiple classifier systems. Neural Comput & Applic 22, 673–688 (2013). https://doi.org/10.1007/s00521-011-0737-9