Abstract

Control Yourself is a natural user interaction system consisting of a camera, a depth sensor, a processor, and a display. The user’s image is separated from the background of the camera’s output and rendered in the application in real time, so the display shows a video of the person inside the application. The software also recognizes various types of movement, such as gestures, changes of position, movement within the frame, and multiplayer interaction. The system uses the recognized gestures and movements to create and transform GUI elements and to position the image or mesh of the user with the background removed. Users can manipulate virtual objects and various features of the program with gestures and movements while seeing themselves as if in a mirror, surrounded by augmented reality. This approach lets users interact with software through natural movements and intuitive gestures.

Keywords

- Augmented reality

- Mixed reality

- Control yourself

- Kinect

- Depth camera

- Computer vision

- Interactive games

- Project-based learning

1 Introduction

When gamers start a new video game adventure, they may feel that they are not part of the game. Play typically happens through a hardware-based interface and a computer screen, which creates an atmosphere that tells players they are outside the game rather than in it. In most video games the player controls an avatar that does not look like the player, so players cannot immerse themselves in the game as intuitively as they act in real life. Control Yourself lets players experience being inside the game and feel like its main character.

Control Yourself is a Kinect-based, gesture-controlled, mixed-reality exercise game. The idea of the game is to see yourself inside it. We designed it so that players control the game with their own movements and also see themselves in the game as their avatar. The name “Control Yourself” immediately conveys the game’s concept. Figure 1 shows a screenshot of the game in action.

The program takes the depth image provided by the Kinect, locates a person in the image, and then maps the depth image to a color image. The color image of the player is rendered into the game as it is played, so the game shows a live video of the cropped person inside the game world.
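The cropping step can be sketched in a few lines. The sketch below assumes a Kinect v1-style depth frame in which each 16-bit value stores the depth in millimetres in its upper 13 bits and a player index (0 meaning no player) in its lower 3 bits, and it assumes the color image has already been aligned pixel-for-pixel with the depth frame. Images are plain nested lists so the example runs without extra libraries; `unpack` and `crop_player` are hypothetical names, not part of the Kinect SDK or the Unity Kinect Wrapper, and this is not the game’s actual code.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b)

# Assumed Kinect v1-style packing: depth_mm << 3 | player_index
PLAYER_INDEX_BITS = 3
PLAYER_INDEX_MASK = (1 << PLAYER_INDEX_BITS) - 1  # 0b111


def unpack(value: int) -> Tuple[int, int]:
    """Split one packed depth value into (depth_mm, player_index)."""
    return value >> PLAYER_INDEX_BITS, value & PLAYER_INDEX_MASK


def crop_player(packed_depth: Sequence[Sequence[int]],
                color: Sequence[Sequence[Pixel]],
                background: Pixel = (0, 0, 0)) -> List[List[Pixel]]:
    """Keep color pixels that belong to any tracked player; replace the rest.

    Assumes `color` is already aligned with the depth frame (same size and
    pixel grid); parallax between the two cameras is not handled here.
    """
    cropped: List[List[Pixel]] = []
    for depth_row, color_row in zip(packed_depth, color):
        row: List[Pixel] = []
        for value, pixel in zip(depth_row, color_row):
            _, player = unpack(value)
            row.append(pixel if player > 0 else background)
        cropped.append(row)
    return cropped


if __name__ == "__main__":
    depth = [[0, (1500 << 3) | 1],   # right pixel: 1.5 m, player 1
             [2000 << 3, 0]]         # left pixel: 2.0 m, no player
    color = [[(10, 10, 10), (200, 150, 100)],
             [(50, 50, 50), (60, 60, 60)]]
    print(crop_player(depth, color))
    # [[(0, 0, 0), (200, 150, 100)], [(0, 0, 0), (0, 0, 0)]]
```

Only pixels tagged with a nonzero player index survive; everything else becomes the background color, which the game would then draw over its own scene.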

After cropping the person’s image, the program finds the user’s skeleton and analyses the user’s gestures. Control Yourself recognizes the following gestures: jump, sit (hide), stand up, punch, and uppercut. Players see themselves in the game standing on a skateboard and moving forward. Gestures in the game work the same way they do in real life.
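One simple way to recognize these gestures is to compare joint positions across consecutive skeleton frames against fixed thresholds. The sketch below is a hypothetical rule-based detector, not the classifier used in Control Yourself: the joint set, the coordinate convention (metres, y up, z pointing away from the sensor), and every threshold value are assumptions that would need tuning on real data.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in metres; y up, z away from sensor


@dataclass
class Skeleton:
    hip_center: Vec3
    right_shoulder: Vec3
    right_hand: Vec3


# Hypothetical thresholds; real values would have to be tuned.
JUMP_RISE = 0.10        # hip this far above standing height -> jump (m)
SIT_DROP = 0.25         # hip this far below standing height -> sit (m)
PUNCH_SPEED = 2.0       # hand speed toward the sensor (m/s)
UPPERCUT_SPEED = 2.0    # upward hand speed (m/s)


class GestureDetector:
    def __init__(self, standing_hip_y: float):
        self.standing_hip_y = standing_hip_y  # calibrated while the player stands
        self.sitting = False
        self.airborne = False

    def update(self, prev: Skeleton, curr: Skeleton, dt: float) -> Optional[str]:
        """Return the gesture recognized in this frame, or None."""
        hip_offset = curr.hip_center[1] - self.standing_hip_y

        # Posture gestures are stateful so each one fires only once.
        if self.sitting:
            if hip_offset > -SIT_DROP / 2:
                self.sitting = False
                return "stand_up"
            return None
        if hip_offset < -SIT_DROP:
            self.sitting = True
            return "sit"
        if hip_offset > JUMP_RISE:
            if not self.airborne:
                self.airborne = True
                return "jump"
            return None
        self.airborne = False

        # Arm gestures: estimate right-hand velocity from the last two frames.
        vy = (curr.right_hand[1] - prev.right_hand[1]) / dt
        vz = (curr.right_hand[2] - prev.right_hand[2]) / dt
        if vy > UPPERCUT_SPEED and curr.right_hand[1] > curr.right_shoulder[1]:
            return "uppercut"
        if -vz > PUNCH_SPEED:
            return "punch"
        return None
```

Sitting is tracked as a state so that “stand up” is reported only after a “sit”, and a jump is reported once per jump rather than on every airborne frame.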

The player rides a cart, encountering obstacles and overcoming them with these gestures. Control Yourself keeps track of game points, crops the avatar every frame, recognizes gestures, and renders the game world simultaneously. The game also has an innovative main menu and pause menu controlled by gestures: the player points with a hand to choose an option and claps to confirm it (Fig. 2). Requiring two distinct gestures in the menu helps prevent unintentional selection (the “Midas Touch” problem).
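The point-then-clap menu can be modeled as a small state machine. In the hypothetical sketch below, the pointing hand’s height (assumed to be already mapped to the range [0, 1)) highlights an option, and a clap, detected as both hands coming closer than a threshold, confirms it. The class name, method names, and distance thresholds are illustrative assumptions, not the game’s actual code.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

CLAP_CLOSE = 0.10   # hands closer than this (m) count as a clap (hypothetical)
CLAP_OPEN = 0.25    # hands must separate beyond this before the next clap


class GestureMenu:
    """Point to highlight an option, clap to confirm it (two-step selection)."""

    def __init__(self, options: List[str]):
        self.options = options
        self.highlighted: Optional[int] = None
        self.hands_apart = True

    def point(self, hand_screen_y: float) -> None:
        """hand_screen_y: pointing-hand height mapped to [0, 1), 0 = top of menu."""
        if 0.0 <= hand_screen_y < 1.0:
            self.highlighted = int(hand_screen_y * len(self.options))
        else:
            self.highlighted = None

    def hands(self, left: Vec3, right: Vec3) -> Optional[str]:
        """Return the confirmed option when a new clap is detected, else None."""
        gap = math.dist(left, right)
        if self.hands_apart and gap < CLAP_CLOSE:
            self.hands_apart = False          # clap edge: fire once
            if self.highlighted is not None:
                return self.options[self.highlighted]
        elif gap > CLAP_OPEN:
            self.hands_apart = True           # re-arm after hands separate
        return None
```

Because hovering only highlights and never confirms, a hand drifting across the menu cannot trigger an option on its own. The clap is edge-triggered with two thresholds, so the hands must separate again before a second confirmation is accepted.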

2 Development Methodology

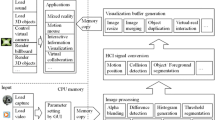

Control Yourself was written in C# and JavaScript using Unity3D and the Kinect SDK. Various other tools were used to develop the content and control systems of the game, including Blender, iTween, SketchUp, and MonoDevelop (Fig. 3).

The program uses the Unity Kinect Wrapper to bring the Kinect SDK into the Unity game engine. The program takes the depth image provided by the Kinect, locates a person in the image, and then maps the depth image to a color image (Fig. 4 left). The mapping is not linear because of parallax caused by the physical distance between the color camera and the depth camera (Fig. 4 right). Consequently, in every frame the program dynamically decides how far to translate the color image to compensate for the parallax. The player’s image is then rendered in the game and updated in real time.
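Why the correction depends on distance follows from the pinhole camera model: for two parallel cameras separated by a baseline b, a point at depth Z appears shifted between the two images by roughly d = f * b / Z pixels, where f is the focal length in pixels. The shift is inversely proportional to depth, so a nearby player needs a larger correction than a distant one. The sketch below computes one horizontal shift per frame from the player’s median depth and applies it to an image row. It is an illustration of the idea, not the program’s implementation: the focal length and baseline are placeholders rather than calibrated Kinect values, and the sign of the shift depends on which side of the depth camera the color camera sits.

```python
import statistics
from typing import List, Sequence

# Illustrative placeholders, not calibrated Kinect values.
FOCAL_LENGTH_PX = 525.0   # hypothetical color-camera focal length (pixels)
BASELINE_M = 0.025        # hypothetical horizontal offset between the cameras (m)


def parallax_shift_px(depth_m: float,
                      focal_px: float = FOCAL_LENGTH_PX,
                      baseline_m: float = BASELINE_M) -> int:
    """Disparity d = f * b / Z, rounded to whole pixels."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return round(focal_px * baseline_m / depth_m)


def frame_shift_px(player_depths_m: Sequence[float]) -> int:
    """One shift per frame from the player's median depth, so a few noisy
    depth pixels do not move the whole image."""
    return parallax_shift_px(statistics.median(player_depths_m))


def translate_row(row: List[int], shift: int, fill: int = 0) -> List[int]:
    """Shift one image row right by `shift` pixels (left if negative)."""
    n = len(row)
    if shift >= 0:
        return [fill] * min(shift, n) + row[:max(n - shift, 0)]
    shift = -shift
    return row[shift:] + [fill] * min(shift, n)


if __name__ == "__main__":
    for z in (0.5, 1.0, 2.0, 4.0):
        print(z, parallax_shift_px(z))
```

Using a single shift for the whole player is a simplification; it is accurate for the parts of the body near the median depth and less so for an arm reaching toward the sensor.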

The game’s 3D graphical assets required integration with the Unity framework (Fig. 5 right). Blender (Fig. 5 left) was used to model 3D assets or to modify existing models from SketchUp. Since the game follows a rail, the animation uses iTween to define the path that the train cart follows.
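iTween moves an object along a path defined by waypoints, smoothing it into a curve. As an illustration of how a cart can follow such a rail, the sketch below interpolates the waypoints with a uniform Catmull-Rom spline and maps overall progress u in [0, 1] to a position. This is a stand-in written for this example, not iTween’s source code, and `catmull_rom` and `point_on_rail` are hypothetical names.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def catmull_rom(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Uniform Catmull-Rom point between p1 (t=0) and p2 (t=1)."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * ((2 * b) + (-a + c) * t + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def point_on_rail(waypoints: Sequence[Point], u: float) -> Point:
    """Position of the cart at progress u in [0, 1] along the whole rail.

    End waypoints are duplicated so the curve passes through every waypoint.
    """
    pts = [waypoints[0], *waypoints, waypoints[-1]]
    segments = len(waypoints) - 1
    u = min(max(u, 0.0), 1.0)                 # clamp progress
    i = min(int(u * segments), segments - 1)  # which segment
    t = u * segments - i                      # progress within that segment
    return catmull_rom(pts[i], pts[i + 1], pts[i + 2], pts[i + 3], t)
```

Duplicating the first and last waypoints makes the curve start and end exactly at the rail’s endpoints. Progress is split evenly across segments, so the cart’s speed varies if the waypoints are unevenly spaced.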

3 Mixed-Reality Development as Project-Based Learning

Control Yourself began as an undergraduate course project in a Computer Vision and Human-Computer Interaction seminar. Over 18 months, the project evolved and expanded into the areas of game development, graphic design, and software engineering methodology [2]. The project offered the opportunity to explore larger-scale development than is typically possible in a single semester.

The project was showcased and presented at the university’s research day and at the regional New England Undergraduate Computing Symposium, where it won an award for best game design [3]. Dozens of people have tried Control Yourself and provided feedback, which was incorporated into the game through an iterative development process. The enthusiasm and interest of people observing and playing the game have also had a positive impact, helping to showcase interesting developments in computer science and to recruit diverse populations to introductory computer science courses [1].

4 Discussion

The scientific contribution of this work includes novel approaches to Natural User Interfaces (NUI). With Control Yourself technology, users do not need to be familiar with traditional computer interfaces. Using gestures, with themselves as the avatar, gives users a completely natural way to interact with software. This technology can be incorporated not only into games but into a variety of other activities.

A text editor could combine our techniques with speech recognition to provide a natural way of interacting with a document. A user should be able to start using the software immediately, performing gestures and observing the software’s responses without prior knowledge of software in general or of the particular application.

Educational programs could demonstrate gestures and then ask users to perform them while watching themselves on the screen. Such a program could teach children words and their corresponding movements. Another application of showing and guessing gestures is the game of charades: the program would give a player a word, the player would try to act it out, and online users would watch the video of the player and try to guess the word.

This technology could also help people with disabilities or those undergoing rehabilitation. For example, a program could draw an enhanced-reality world around users and provide useful tips about objects in the room and how to use them.

Control Yourself is the subject of a provisional patent application titled “Methods, apparatuses and systems for a mixed reality”.

5 Conclusion

Control Yourself introduces an innovative way for people to interact with software, encouraging an intuitive and natural approach to human-computer interaction. In keeping with the tenets of Control Yourself, this approach should not require any previous experience. The technology has a wide range of potential applications, including games, educational software for children of all ages as well as adults, exercise software, and others.

Control Yourself currently consists of a main menu and a few levels in which players see themselves. Future work includes adding more levels, improving the quality of the person cropping, recognizing additional gestures, and improving the game’s graphics. Developing the game provided many opportunities to overcome challenges and to learn important concepts in mixed-reality human-computer interaction.

References

[1] Magee, J.J., Han, L.: Integrating a science perspective into an introductory computer science course. In: Proceedings of the 3rd IEEE Integrated STEM Education Conference (ISEC), Princeton, March 2013

[2] Zhizhimontova, E.: Control Yourself - Kinect Game. Undergraduate honors thesis, Math and Computer Science Department, Clark University, Worcester (2014)

[3] Zhizhimontova, E.: Control Yourself - Kinect Game. In: The New England Undergraduate Computing Symposium (NEUCS), Boston, MA, April 2014

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Zhizhimontova, E., Magee, J. (2015). Control Yourself: A Mixed-Reality Natural User Interface. In: Stephanidis, C. (eds) HCI International 2015 - Posters’ Extended Abstracts. HCI 2015. Communications in Computer and Information Science, vol 528. Springer, Cham. https://doi.org/10.1007/978-3-319-21380-4_42

DOI: https://doi.org/10.1007/978-3-319-21380-4_42

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21379-8

Online ISBN: 978-3-319-21380-4

eBook Packages: Computer Science, Computer Science (R0)