Abstract

We present a numerical scheme for the approximation of Hamilton-Jacobi-Isaacs equations related to optimal control problems and differential games. In the first case, the Hamiltonian is convex with respect to the gradient of the solution, whereas the second case corresponds to a nonconvex (minmax) operator. We introduce a scheme based on the combination of a semi-Lagrangian time discretization with a high-order finite volume spatial reconstruction. The high-order character of the scheme provides an efficient way to obtain accurate approximations on coarse grids. We assess the performance of the scheme on a set of problems arising in minimum time optimal control and pursuit-evasion games.

This research was supported by the following grants: AFOSR Grant no. FA9550-10-1-0029, ITN-Marie Curie Grant no. 264735-SADCO, and the FWF-START project Sparse Approximation and Optimization in High Dimensions.

Keywords

- Hamilton-Jacobi-Isaacs equations

- High-order schemes

- Semi-Lagrangian schemes

- Finite volume methods

- Optimal control

- Differential games

1 Introduction

The numerical approximation of Hamilton-Jacobi-Isaacs (henceforth HJI) equations appears as a crucial step in many fields of applications, including optimal control, image processing, fluid dynamics, robotics and geophysics. In general, these equations do not have regular solutions even if the data and the coefficients are regular, and therefore many efforts have been devoted to the development and the analysis of approximation schemes for such problems. The convergence of the schemes is understood in the sense of viscosity solutions; it is well known (see e.g. [5, 20]) that viscosity solutions are typically Lipschitz continuous, and therefore the main difficulty is to have a good resolution around the singularities, and a good accuracy in the parts of the domain where the solution is regular.

The theory of approximation schemes for viscosity solutions has been developed starting from the huge literature existing for the numerical solution of conservation laws in one dimension, exploiting the relation between entropy solutions and viscosity solutions. More precisely, the viscosity solution can be written as the space integral of the corresponding entropy solution, and this relation can also be applied to the construction of numerical schemes, by simply integrating in space the schemes for conservation laws. At the very beginning, these techniques were successfully applied to the study of the class of monotone schemes; in this framework, the rate of convergence is limited to first order. Some of these schemes, like finite differences for instance, are used over structured grids and are strictly related to the above mentioned methods for conservation laws. Other approximation schemes, like the Finite Volume Method and semi-Lagrangian schemes, can easily work on unstructured grids and are based on different ideas, e.g. on the Hopf-Lax representation formula. In all these cases, the role of monotonicity is important to guarantee the convergence to the viscosity solution, and a general result for monotone schemes applied to second order fully nonlinear equations has been proved by Barles and Souganidis in [6]. Although a complete list of the contributions to numerical methods for HJI equations goes beyond the scope of this paper, let us quote the application of Godunov/central schemes [1, 2], antidissipative and SuperBee/UltraBee [10, 11], MUSCL [26], and WENO schemes [12, 29].

A natural way to overcome the limitations of monotone schemes is the application of high-order approximations. For a given accuracy, these methods can achieve acceptable error levels on coarser grids, with a considerably reduced number of nodes in comparison with low-order, monotone schemes. This can be a crucial point when the dimension of the problem is high or when complex computations are required at every grid node; both situations naturally arise in the context of HJI equations stemming from optimal control and differential games. In this paper we propose the coupling of a semi-Lagrangian (SL) time discretization with a finite volume reconstruction in space. High-order SL schemes for HJI equations were first considered for a semi-discretization in time in [18], and for the fully discrete scheme in [19]. A convergence analysis based on the condition \(\varDelta x=O(\varDelta t^2)\) is carried out in [21]. The adaptation of the theory to weighted ENO reconstructions is presented in [14], along with a number of numerical tests comparing the various high-order versions of the scheme. Other numerical tests, mostly in higher dimension and concerned with applications to front propagation and optimal control, are presented in [13]. Let us mention that a first convergence result for a class of monotone Finite Volume schemes has been proved in [27].

The paper is organized as follows.

In Sect. 2, we illustrate our ideas with a setting related to minimum time optimal control and differential games, leading to a stationary HJI equation. In Sect. 3, we deal with a high-order approximation scheme based on a coupling between a semi-Lagrangian discretization in time and a Finite Volume spatial reconstruction. Finally, in Sect. 4 we present some numerical experiments assessing the performance and accuracy of the proposed scheme.

2 HJI Equations Arising in Optimal Control and Differential Games

As we mentioned in the introduction, HJI equations often arise in optimal control and differential games; whenever a feedback controller is sought, the application of the Dynamic Programming Principle (DPP) leads to HJI equations, which can be time-dependent or stationary. Among a wide class of problems, in this section we illustrate our ideas by means of minimum time optimal control and pursuit-evasion games.

Let us start by considering system dynamics of the form

$$\dot{y}(t)=f(y(t),\alpha (t))\,,\quad y(0)=x\,, \tag{1}$$

where \(y\in \mathbb {R}^n\) is the state, \(\alpha :[0,+\infty ) \rightarrow A\) is the control and \(f:\mathbb {R}^n \times A\rightarrow \mathbb {R}^n\) is the controlled vector field. To get a unique trajectory for every initial condition and a given control function, we will always assume that \(f\) is continuous with respect to both variables, and Lipschitz continuous with respect to the state space (uniformly in \(\alpha \)). Moreover, we will assume that the controls are measurable functions of time so that we can apply the Carathéodory theorem for the Cauchy problem (1).

In the minimum time optimal control problem, we want to minimize the time of arrival to a given target \(\mathcal {T}\). The cost will be given by

By the application of the DPP one can prove that the minimum time function

satisfies the Bellman equation

in the domain where \(T\) is finite (the so-called reachable set). Introducing the change of variable

where \(\mu \) is a free positive parameter to be suitably chosen, one can characterize \(T\) as the unique viscosity solution of the following Dirichlet problem

Another interesting example comes from the DPP approximation of the Hamilton-Jacobi-Isaacs equations related to pursuit-evasion games (see [5] for more details). Player-\(a\) (the pursuer) wants to catch player-\(b\) (the evader) who is escaping, and the controlled dynamics for each player are known. To simplify the notations, we will denote by \(y(t)=(y_P(t),y_E(t))\) the state of the system, where \(y_P(t)\) and \(y_E(t)\) are the positions at time \(t\) of the pursuer and of the evader, both belonging to \(\mathbb {R}^n\), and by \(f:\mathbb {R}^{2n}\times A\times B\rightarrow \mathbb {R}^{2n}\) the dynamics of the system (clearly, here the dynamics depend on the controls for both players). The payoff is defined as the time of capture but, in order to have a fair game, we need to restrict the strategies of the players to the so-called non-anticipating strategies (i.e. they cannot exploit the knowledge of the future strategy of the opponent). These strategies will be denoted respectively by \(\alpha \) and \(\beta \). Given the strategies \(\alpha (\cdot )\) and \(\beta (\cdot )\) for the first and the second player, we can define the corresponding time of capture as

If there is no capture for those strategies we set \(t_x(\alpha [\beta ],\beta )=+\infty \). Then we can define the lower time of capture as

and again \(T\) can be infinite if there is no way to catch the evader from the initial position of the system \(x\). In order to get a fixed point problem and to deal with finite values, it is useful to scale time by the change of variable (3), which corresponds to the payoff

The rescaled minimal time will be given by

Assuming \(v\) to be continuous, the application of the DPP leads to the following characterization of the value function

Note that the equation is complemented by the natural homogeneous boundary condition on the target \(T(x)=v(x)=0\). If \(v(\,\cdot \,)\) is continuous, then \(v\) is a viscosity solution in \(\mathbb {R}^n\setminus \mathcal {T}\) of the Dirichlet problem (5).
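The rescaling (3) used in both problems maps possibly infinite arrival or capture times to bounded values. A minimal Python sketch follows, assuming the transform takes the common form \(v=1-e^{-\mu T}\); the exact normalization of (3) and the function names `kruzkov` and `inverse_kruzkov` are assumptions made here for illustration.

```python
import numpy as np

def kruzkov(T, mu=1.0):
    """Map times T in [0, +inf] to v in [0, 1]; T = +inf is sent to v = 1."""
    T = np.asarray(T, dtype=float)
    return 1.0 - np.exp(-mu * T)

def inverse_kruzkov(v, mu=1.0):
    """Recover T from v; v = 1 (no arrival or capture) is sent back to +inf."""
    v = np.asarray(v, dtype=float)
    with np.errstate(divide="ignore"):  # log(0) = -inf is the intended result
        return -np.log(1.0 - v) / mu
```

With this form, the target (where \(T=0\)) corresponds to \(v=0\), and points from which the target cannot be reached correspond to \(v=1\).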

3 Semi-Lagrangian Schemes for HJI Equations

In this section we introduce the main building blocks for the construction of semi-Lagrangian/finite volume schemes for HJI equations of the form (4)–(5). The general procedure is decomposed into a time discretization step and a space discretization step. In the time discretization step, the system dynamics (1) are approximated by a suitable integration rule, and the DPP is applied to the discrete-time version. In the space discretization step, the resulting HJI equation is then approximated over a finite set of elements. The same procedure holds for all the problems presented in the previous section; however, for the sake of simplicity, this section is illustrated by means of the minimum time problem and its associated HJB Eq. (4) (which is also a particular case of (5) for a single-player setting).

Time Discretization

For the implementation of a time discretization procedure, we follow the ideas presented in [18]. The first step towards the construction of a high-order scheme for the equations (4)–(5) is to consider a discrete-time approximation of the system dynamics (1) of the form

$$y_{n+1}=y_n+h\,\varPhi (y_n,A_n,h)\,,\quad y_0=x\,,$$

where \(h>0\) corresponds to a time discretization parameter, \(\varPhi =\varPhi (y_n,A_n,h)\) is the Henrici function of a one-step approximation of the dynamical system, and \(A_n\) stands for a multidimensional control defined according to the order \(q\) of the numerical integrator,

Particular cases of the aforementioned setting are

- (i) Explicit Euler’s method: \(\varPhi (y_n,A_n,h)=f(y_n,a_n^0)\), with \(A_n=a_n^0\).

- (ii) Midpoint rule: \(\varPhi (y_n,A_n,h)=f(y_n+hf(y_n,a_n^0)/2,a_n^1)\), with \(A_n=(a_n^0,a_n^1)\).

- (iii) Fourth-order Runge-Kutta scheme:

$$\begin{aligned} \varPhi (y_n,A_n,h)&=\frac{1}{6}(K_0+2K_1+2K_2+K_3)\,,\quad A_n=(a_n^0,a_n^1,a_n^2,a_n^3)\,,\\ K_0=f(y_n,a_n^0)\,,K_1&=f(y_n+h\frac{K_0}{2},a_n^1)\,,K_2=f(y_n+h\frac{K_1}{2},a_n^2)\,,K_3=f(y_n+h K_2,a_n^3). \end{aligned}$$
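The three Henrici functions listed above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the function names, the calling convention `f(y, a)` and the representation of \(A_n\) as a tuple of stage controls are assumptions.

```python
import numpy as np

def phi_euler(f, y, A, h):
    """Explicit Euler: a single stage control A = (a0,)."""
    (a0,) = A
    return f(y, a0)

def phi_midpoint(f, y, A, h):
    """Midpoint rule: two stage controls A = (a0, a1)."""
    a0, a1 = A
    return f(y + 0.5 * h * f(y, a0), a1)

def phi_rk4(f, y, A, h):
    """Fourth-order Runge-Kutta: four stage controls A = (a0, a1, a2, a3)."""
    a0, a1, a2, a3 = A
    K0 = f(y, a0)
    K1 = f(y + 0.5 * h * K0, a1)
    K2 = f(y + 0.5 * h * K1, a2)
    K3 = f(y + h * K2, a3)
    return (K0 + 2.0 * K1 + 2.0 * K2 + K3) / 6.0

def step(phi, f, y, A, h):
    """One step of the discrete dynamics: y_{n+1} = y_n + h * Phi(y_n, A_n, h)."""
    return y + h * phi(f, y, A, h)

# Usage on the (hypothetical) scalar system y' = a * y with constant control a = -1.
f = lambda y, a: a * y
y1_rk4 = step(phi_rk4, f, 1.0, (-1.0,) * 4, 0.1)  # close to exp(-0.1)
```

Note that the number of stage controls, and hence the dimension of the minimization in the scheme, grows with the number of stages of the integrator.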

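The application of the DPP for the discrete-time dynamics leads to an approximation of Eq. (4) of the form

$$v_h(x)=\min _{A_n}\left\{ \beta \,v_h\big (x+h\varPhi (x,A_n,h)\big )\right\} +1-\beta \,, \tag{7}$$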

where \(\beta =e^{-h}\) appears as a consequence of the application of the Kruzkov transform (3) to the discrete version of the minimum time solution (in this case with \(\mu =1\)).
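One way to trace the origin of \(\beta \) is sketched below, under the assumption that the transform (3) reads \(v=1-e^{-T}\) for \(\mu =1\). The symbol \(T_h\), denoting the discrete-time minimum time, is introduced here only for illustration. The discrete DPP states that the optimal time from \(x\) equals one step of length \(h\) plus the optimal time from the best arrival point, and applying the transform to both sides gives:

$$\begin{aligned} T_h(x)&=\min _{A_n}T_h\big (x+h\varPhi (x,A_n,h)\big )+h\,,\\ v_h(x)=1-e^{-T_h(x)}&=1-e^{-h}\max _{A_n}e^{-T_h(x+h\varPhi (x,A_n,h))}\\ &=1-\beta \max _{A_n}\big (1-v_h(x+h\varPhi (x,A_n,h))\big )\\ &=\beta \min _{A_n}v_h\big (x+h\varPhi (x,A_n,h)\big )+1-\beta \,. \end{aligned}$$

Since \(\beta <1\), the right-hand side is a contraction in the sup norm with respect to \(v_h\), which is what motivates fixed point iterations for the fully discrete problem.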

3.1 Space Discretization

Note that, despite having introduced an approximation in time for Eq. (4), the resulting semi-discrete version (7) is still continuously defined over the state space. In order to implement a fully-discrete computational scheme, it is necessary to realize this expression in a bounded domain with a finite set of elements. Classical schemes for HJI equations of this form are based upon finite difference discretizations, where the domain \(\varOmega \subset \mathbb {R}^n\) over which the solution is sought, is discretized into a set of grid points, and the approximation is understood in a pointwise sense. A natural problem in this setting arises from the fact that the r.h.s. of Eq. (7) requires the evaluation of \(v_h\) at the arrival points \(x+h\varPhi (x,A_n,h)\), which are not necessarily part of the grid. In the low-order version of the SL scheme, this evaluation is performed via piecewise linear interpolation from the grid values, whereas in this work we focus on a high-order definition of such an operation. We follow an approach based on a Finite Volume approximation of the problem. For a given mesh parameter \(k\), and a set of central nodes \(\{x_i\}_{i=1}^N\), the domain is discretized into a set of cells \(\varOmega _i=[x_i-k/2,x_i+k/2]\). Instead of considering pointwise nodal values of \(v_h\), the solution will be represented by a set of cell-averaged values \(V:=\{v_i\}_{i=1}^N\) defined as

It is straightforward to see that the exact expression for the averaged values of the solution of (7) is given by

A first approximation is introduced when the integral in (8) is replaced by a suitable Gaussian quadrature rule

where \(x_i\) and \(w_i\) are Gauss points and weights inside the \(i-\)th cell, respectively. This expression requires the evaluation of the exact \(v_h\) at a set of arrival points, which is not available. Analogously to the grid-based schemes, we approximate this evaluation with an interpolation operator \(I=I[V]\) defined upon the set of cell averages, i.e.

where \(I:\mathbb {R}^N\rightarrow S_k\) corresponds to a WENO (weighted essentially non-oscillatory) interpolation routine performed over the averaged dataset \(V\). The WENO reconstruction procedure and related numerical schemes date back to the work of [25], in the context of numerical methods for conservation laws, as a way of circumventing Godunov’s barrier theorem by considering reconstruction procedures that are nonlinear in the data for the implementation of high-order accurate schemes. As has been shown in [21], the use of a WENO interpolation procedure can be considered as a building block in high-order, semi-Lagrangian schemes for time-dependent HJB equations, whereas here we introduce an application to static HJI equations. We now briefly describe the main ideas for a 1D WENO reconstruction.
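The quadrature step for the cell averages can be sketched as follows. This is a minimal illustration assuming a Gauss-Legendre rule mapped to the cell \([x_i-k/2,\,x_i+k/2]\); the function name `cell_average` and the default of two Gauss points are choices made here, not taken from the paper.

```python
import numpy as np

def cell_average(g, x_center, k, n_gauss=2):
    """Approximate (1/k) * integral of g over [x_center - k/2, x_center + k/2]."""
    xi, w = np.polynomial.legendre.leggauss(n_gauss)  # nodes and weights on [-1, 1]
    x = x_center + 0.5 * k * xi                       # map the nodes into the cell
    # (1/k) * (k/2) * sum(w * g) = 0.5 * sum(w * g)
    return 0.5 * np.sum(w * g(x))

# Usage: the average of x**2 over [0, 1] is 1/3 (a two-point rule is exact up to degree 3).
avg = cell_average(lambda x: x**2, 0.5, 1.0)
```

In the scheme, `g` would be the map \(x\mapsto \min _{A_n}\beta \,I[V](x+h\varPhi (x,A_n,h))+1-\beta \), so every Gauss point requires one minimization over the controls.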

From a set of cell values \(V\) and a polynomial degree \(r\), the WENO reconstruction procedure yields a set of polynomials \(P=\{p_i(x)\}_{i=1}^N\) of degree \(r\), holding standard interpolation properties

and an essentially non-oscillatory condition [24]; in general, such an interpolant is built by considering a set of stencils per cell, and weighting them according to a smoothness indicator. Several variations of this procedure can be found in the literature; for illustration purposes, we restrict ourselves to the reconstruction procedure presented in [4], in its 1D version and with reconstruction degree 2. In this case, given a set of averaged values \(V\), the reconstruction procedure seeks, for every cell, a local quadratic expansion as a linear combination of Legendre polynomials rescaled in local coordinates \(\xi \in [-1/2,\,1/2]\), expressed in the form

with

We assign the subscript “0” to the cell where we compute the coefficients, other values indicating location and direction with respect to \(v_0\) (note that the notation is coherent with the fact that the first coefficient in the expansion, \(v_0\), satisfies \(v_0=v_{i}\), i.e., it is the centered value). Next, for this particular problem we define three stencils

and in every stencil we compute a polynomial of the form

Imposing the conservation condition (10), the coefficients are given by

For every polynomial we calculate a smoothness indicator defined as

where \(r\) is the polynomial reconstruction degree (in our case \(r=2\)), which in our case yields

This leads to the following WENO weights:

where \(\epsilon \) is a parameter introduced in order to avoid division by zero; usually \(\epsilon =10^{-12}\). The scheme is rather insensitive to the parameter \(r\) appearing in the weights (not to be confused with the reconstruction degree), which we set to \(r=5\). The parameter \(\lambda \) is usually computed in an optimal way to increase the accuracy of the reconstruction at certain points; we opt for a centered approach instead, thus \(\lambda ^{(1)}=\lambda ^{(3)}=1\), while \(\lambda ^{(2)}=100\). Finally, the 1D reconstructed polynomial at the \(i\)-th cell is given by the combination of the three stencil polynomials, each multiplied by its WENO weight.
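The reconstruction steps of this subsection can be sketched in Python as follows. Several ingredients are assumptions made for illustration: the stencils are taken as left, central and right triples of cells; the rescaled Legendre basis is \(1,\ \xi ,\ \xi ^2-1/12\); the stencil coefficients are obtained by matching cell averages; the smoothness indicator is the sum of the integrals of the squared derivatives over the reference cell, which for a quadratic is \(a^2+\tfrac{13}{3}b^2\); and the weights are \(\lambda /(\sigma +\epsilon )^r\), normalized to sum to one. These choices follow common Legendre-basis WENO formulations and may differ in detail from [4]; the function names are hypothetical.

```python
import numpy as np

def weno2_cell(v, i, eps=1e-12, r=5, lam=(1.0, 100.0, 1.0)):
    """Coefficients (v0, a, b) of the WENO quadratic in cell i (needs 2 <= i <= len(v) - 3).

    The polynomial is p(xi) = v0 + a * xi + b * (xi**2 - 1/12), xi in [-1/2, 1/2].
    """
    vm2, vm1, v0, vp1, vp2 = v[i - 2:i + 3]
    # Slopes from matching cell averages on the left, central and right stencils.
    slopes = np.array([
        0.5 * vm2 - 2.0 * vm1 + 1.5 * v0,
        0.5 * (vp1 - vm1),
        -1.5 * v0 + 2.0 * vp1 - 0.5 * vp2,
    ])
    # Curvatures from the same three stencils.
    curvs = np.array([
        0.5 * (vm2 - 2.0 * vm1 + v0),
        0.5 * (vm1 - 2.0 * v0 + vp1),
        0.5 * (v0 - 2.0 * vp1 + vp2),
    ])
    sigma = slopes**2 + (13.0 / 3.0) * curvs**2   # smoothness indicators
    w = np.asarray(lam) / (sigma + eps)**r        # unnormalized nonlinear weights
    w = w / w.sum()
    return v0, w @ slopes, w @ curvs

def weno2_eval(coeffs, xi):
    """Evaluate the reconstructed polynomial at the local coordinate xi."""
    v0, a, b = coeffs
    return v0 + a * xi + b * (xi**2 - 1.0 / 12.0)

# Usage: cell averages of x**2 on unit cells centered at 0, 1, ..., 6.
averages = np.arange(7.0)**2 + 1.0 / 12.0
value = weno2_eval(weno2_cell(averages, 3), 0.25)  # x = 3.25, so x**2 = 10.5625
```

For smooth data all three stencils agree and the reconstruction is exact on quadratics; near a kink the stencil crossing it receives a large smoothness indicator and is almost switched off.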

Having defined all the building blocks for a fully-discrete, high-order approximation of Eq. (4), we need to solve the following nonlinear system

Note that we added a boundary condition related to the external part of the computational domain which is not the target, computationally equivalent to setting a high value which is neglected in the minimization procedure for the interior elements. The computational domain must be chosen consistently with this condition in order to generate a consistent result. With respect to the solution of the nonlinear system (11), the approach which we follow is motivated by the standard approach undertaken in the low-order setting, which is to solve the system by some variation of a fixed point iteration

which, in the low-order monotone scheme, is well-justified since \(T\) is a contraction mapping. A key point is the fact that the corresponding linear interpolation operator is monotone, which is lost in the high-order scheme. However, it is still possible to develop a convergence theory for interpolation operators that are not monotone but have additional properties such as the WENO operator. In [21], a convergence framework has been developed for time-dependent HJB equations, and recently in [8], convergence results have been obtained for the stationary case. One of the advantages of this setting is the vast amount of available literature dealing with acceleration techniques for HJI iterative solvers (we refer the reader to [3] and references therein for a recent update on such methods).
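For orientation, the low-order monotone counterpart of this iteration, for which the contraction argument applies, can be sketched in 1D. Everything specific in the block is an illustrative assumption and not the high-order scheme of this paper: the test problem (dynamics \(\dot{y}=a\) with \(a\in \{-1,1\}\) on \((-1,1)\), target at the two end points), the explicit Euler step, the piecewise linear interpolation through `numpy.interp`, and the function name.

```python
import numpy as np

def solve_min_time_1d(n_nodes=101, cfl=0.8, tol=1e-10, max_iter=20000):
    """Value iteration v <- min_a beta * I[v](x + h a) + 1 - beta with linear interpolation."""
    x = np.linspace(-1.0, 1.0, n_nodes)
    k = x[1] - x[0]
    h = cfl * k                      # time step tied to the mesh size
    beta = np.exp(-h)
    controls = (-1.0, 1.0)
    v = np.ones(n_nodes)             # high initial value in the interior
    v[0] = v[-1] = 0.0               # homogeneous condition on the target
    for it in range(max_iter):
        candidates = [beta * np.interp(x + h * a, x, v) for a in controls]
        v_new = np.minimum.reduce(candidates) + 1.0 - beta
        v_new[0] = v_new[-1] = 0.0
        if np.max(np.abs(v_new - v)) < tol:
            return x, v_new, it + 1
        v = v_new
    return x, v, max_iter

x, v, n_iter = solve_min_time_1d()
exact = 1.0 - np.exp(-(1.0 - np.abs(x)))  # transformed distance to the boundary
error = np.max(np.abs(v - exact))
```

Replacing `np.interp` by a high-order reconstruction removes the monotonicity that guarantees the contraction property here, which is why the convergence of the high-order iteration requires a separate analysis.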

4 Numerical Examples

We now present two numerical examples assessing the performance of the proposed scheme. We recall that, although we will present examples dealing with minimum time optimal control and pursuit-evasion games, the presented ideas can be applied in a straightforward manner to infinite/finite horizon optimal control, reachability analysis and differential games.

A Two-Dimensional Minimum Time Problem

We begin by considering a two-dimensional minimum time problem. In this first example, system dynamics are given by

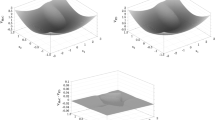

the domain is \(\varOmega =]-1,\,1[^2\), the target is \(\mathcal {T}=\partial \varOmega \), \(h=0.8k\) and \(A=\{(1,0),(0,1),(-1,0),(0,-1)\}\) is the set of 4 directions pointing to the facets of the square. The exact solution for this problem is the distance function to the boundary of the square. As the characteristic curves for this problem correspond to straight lines moving towards the boundary, integration in time can be achieved exactly with a solver of any order. We therefore consider an Euler discretization in time, and a two-dimensional WENO reconstruction of degree 2 in space. The multidimensional WENO reconstruction is based on a product of unidimensional reconstructions along every direction (we refer the reader to [4] for the specific version used in this test). Convergence rates and errors are shown in Table 1. Note that the second order of the space interpolation is achieved for the \(\Vert \cdot \Vert _1\) norm, while a lower order is observed for the \(\Vert \cdot \Vert _{\infty }\) norm. This is expected, since the solution is not differentiable across its kinks. Note that if the error computation is performed over a restricted zone excluding non-differentiable points, as in Table 2, a higher order of accuracy and convergence is achieved for both norms, as is generally expected for WENO-based schemes. However, this might not be the case for every high-order scheme. As an example, in Fig. 1, it can be seen that if a generic quadratic interpolation is used to build a similar scheme, spurious oscillations arise in non-differentiable areas, degrading the high-order accuracy of the scheme. This justifies the use of WENO reconstruction operators in space, as they are designed to detect and penalize highly oscillatory stencils.
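Convergence rates of the kind reported in such tables are commonly estimated from the errors on successive meshes as \(\log (e_j/e_{j+1})/\log (k_j/k_{j+1})\). A minimal sketch follows; the function name is hypothetical and the numbers in the usage example are synthetic, not taken from Table 1.

```python
import numpy as np

def observed_orders(mesh_sizes, errors):
    """Observed convergence orders between consecutive meshes."""
    k = np.asarray(mesh_sizes, dtype=float)
    e = np.asarray(errors, dtype=float)
    return np.log(e[:-1] / e[1:]) / np.log(k[:-1] / k[1:])

# Synthetic errors behaving like C * k**2, so both observed orders are close to 2.
orders = observed_orders([0.1, 0.05, 0.025], [1.0e-2, 2.5e-3, 6.25e-4])
```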

A Reduced-Coordinate Pursuit-Evasion Game

In a second example, we consider a 1D pursuit-evasion game with dynamics given by

where \(v_P\) and \(v_E\) denote the velocity of the pursuer and the evader respectively; \(a\in [0,1]\) and \(b\in [-1,1]\) are control variables. By defining the reduced coordinate \(x=x_E-x_P\), the game is written as

If we consider the target set \(\mathcal {T}=B(0,R)\), the exact solution is given by

We implement our SL/FV scheme with a fourth-order RK scheme in time and a WENO reconstruction in space of degree 2; results are shown in Fig. 2. A natural advantage of high-order methods is the level of accuracy that can be reached with a reduced number of elements, which is particularly relevant when fixed point iterations of the form (12) involving min or minmax operators are considered. However, as has been previously discussed, for high-order schemes the fixed point operator is no longer a contraction, and convergence has to be understood in a different sense. In [8], the concept of \(\epsilon \)-monotonicity has been introduced in order to characterize the convergence behavior of such high-order schemes. Figure 2 illustrates this situation: for different values of \(h\) and \(k\), convergence of the fixed point iteration is achieved in an oscillatory way, and the oscillations decrease when \(h=h(k)\) is reduced.

Fig. 2. SL/FV scheme for a 1D differential game. Left: exact and approximated solution for 100 elements. Right: oscillatory but convergent behavior of the fixed point iteration (12), for different values of \(h\).
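A single update of the minmax fixed point map at one point can be sketched as follows. The block rests on assumptions that are not confirmed by the text: the player dynamics are taken as \(\dot{x}_P=v_Pa\), \(\dot{x}_E=v_Eb\), so that the reduced dynamics read \(\dot{x}=v_Eb-v_Pa\); an explicit Euler step replaces the fourth-order RK scheme; the control sets are sampled on finite grids; and the update takes the lower-value form, with the pursuer minimizing inside and the evader maximizing outside. The function name and the callable `v_interp` (standing for the reconstruction \(I[V]\)) are hypothetical.

```python
import numpy as np

def isaacs_update(v_interp, x, h, controls_a, controls_b, v_P, v_E):
    """Return max_b min_a beta * v_interp(x + h * (v_E * b - v_P * a)) + 1 - beta."""
    beta = np.exp(-h)
    outer = -np.inf
    for b in controls_b:
        # The pursuer answers the evader's control b with its best control a.
        inner = min(v_interp(x + h * (v_E * b - v_P * a)) for a in controls_a)
        outer = max(outer, inner)
    return beta * outer + 1.0 - beta

# Usage with a placeholder value function v(y) = |y|, used only to exercise the update.
new_value = isaacs_update(abs, 1.0, 0.1, (0.0, 0.5, 1.0), (-1.0, 0.0, 1.0), v_P=2.0, v_E=1.0)
```

Compared with the single-player case, each update costs one evaluation of the interpolant per pair of controls, which is one reason why reaching a given accuracy with fewer elements is attractive for games.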

5 Concluding Remarks

We have introduced a semi-Lagrangian/finite volume scheme for the approximation of HJI equations. The main building blocks are a high-order approximation of the system dynamics, combined with high-order accuracy in space via a WENO interpolation operator. The resulting fully-discrete scheme is then solved by means of a fixed point iteration. High-order accuracy is observed in smooth regions, and the convergence of the fixed point iteration is achieved as long as non-oscillatory interpolation operators are used in the space discretization. Further developments in the directions of this paper will include the implementation of high-dimensional interpolation routines, as well as the construction of adaptive schemes with an ad-hoc refinement criterion.

References

[1] Abgrall, R.: Numerical discretization of the first-order Hamilton-Jacobi equation on triangular meshes. Comm. Pure Appl. Math. 49, 1339–1373 (1996)

[2] Abgrall, R.: Numerical discretization of boundary conditions for first order Hamilton-Jacobi equations. SIAM J. Numer. Anal. 41, 2233–2261 (2003)

[3] Alla, A., Falcone, M., Kalise, D.: An efficient policy iteration algorithm for dynamic programming equations. ArXiv preprint: 1308.2087 (2013)

[4] Balsara, D., Dumbser, M., Munz, C.-D., Rumpf, T.: Efficient, high accuracy ADER-WENO schemes for hydrodynamics and divergence-free magnetohydrodynamics. J. Comput. Phys. 228, 2480–2516 (2009)

[5] Bardi, M., Capuzzo-Dolcetta, I.: Optimal Control and Viscosity Solutions of Hamilton-Jacobi-Bellman Equations. Birkhäuser, Boston (1997)

[6] Barles, G., Souganidis, P.E.: Convergence of approximation schemes for fully nonlinear second order equations. Asymptotic Anal. 4, 271–283 (1991)

[7] Bauer, F., Grüne, L., Semmler, W.: Adaptive spline interpolation for Hamilton-Jacobi-Bellman equations. Appl. Numer. Math. 56, 1196–1210 (2006)

[8] Bokanowski, O., Falcone, M., Ferretti, R., Grüne, L., Kalise, D., Zidani, H.: Value iteration convergence of \(\epsilon \)-monotone schemes for stationary Hamilton-Jacobi equations. Preprint 33 pp. (2014)

[9] Bokanowski, O., Garcke, J., Griebel, M., Klompmaker, I.: An adaptive sparse grid semi-Lagrangian scheme for first order Hamilton-Jacobi Bellman equations. J. Sci. Comput. 55, 575–605 (2013)

[10] Bokanowski, O., Zidani, H.: Anti-dissipative schemes for advection and application to Hamilton-Jacobi-Bellman equations. J. Sci. Comput. 30, 1–33 (2007)

[11] Bryson, S., Kurganov, A., Levy, D., Petrova, G.: Semi-discrete central-upwind schemes with reduced dissipation for Hamilton-Jacobi equations. IMA J. Numer. Anal. 25, 113–138 (2005)

[12] Bryson, S., Levy, D.: Mapped WENO and weighted power ENO reconstructions in semi-discrete central schemes for Hamilton-Jacobi equations. Appl. Numer. Math. 56, 1211–1224 (2006)

[13] Carlini, E., Falcone, M., Ferretti, R.: An efficient algorithm for Hamilton-Jacobi equations in high dimension. Comput. Vis. Sci. 7, 15–29 (2004)

[14] Carlini, E., Ferretti, R., Russo, G.: A weighted essentially nonoscillatory, large time-step scheme for Hamilton-Jacobi equations. SIAM J. Sci. Comput. 27, 1071–1091 (2005)

[15] Crandall, M.G., Lions, P.L.: Two approximations of solutions of Hamilton-Jacobi equations. Math. Comp. 43, 1–19 (1984)

[16] Crandall, M.G., Majda, A.: Monotone difference approximations for scalar conservation laws. Math. Comp. 34, 1–21 (1980)

[17] Falcone, M.: Numerical methods for differential games via PDEs. Int. Game Theory Rev. 8, 231–272 (2006)

[18] Falcone, M., Ferretti, R.: Discrete time high-order schemes for viscosity solutions of Hamilton-Jacobi-Bellman equations. Numer. Math. 67, 315–344 (1994)

[19] Falcone, M., Ferretti, R.: Semi-Lagrangian schemes for Hamilton-Jacobi equations, discrete representation formulae and Godunov methods. J. Comp. Phys. 175, 559–575 (2002)

[20] Falcone, M., Ferretti, R.: Semi-Lagrangian Schemes for Linear and Hamilton-Jacobi Equations. SIAM, Philadelphia (2014)

[21] Ferretti, R.: Convergence of semi-Lagrangian approximations to convex Hamilton-Jacobi equations under (very) large Courant numbers. SIAM J. Numer. Anal. 40, 2240–2253 (2003)

[22] Harten, A., Osher, S.: Uniformly high-order accurate nonoscillatory schemes. I. SIAM J. Numer. Anal. 24, 279–309 (1987)

[23] Harten, A., Osher, S., Engquist, B., Chakravarthy, S.R.: Some results on uniformly high-order accurate essentially nonoscillatory schemes. Appl. Numer. Math. 2, 347–377 (1986)

[24] Harten, A., Osher, S., Engquist, B., Chakravarthy, S.R.: Uniformly high order accurate essentially non-oscillatory schemes. III. J. Comput. Phys. 71, 231–303 (1987)

[25] Liu, X., Osher, S.: Weighted essentially non-oscillatory schemes. J. Comput. Phys. 115, 200–212 (1994)

[26] Lions, P.L., Souganidis, P.E.: Convergence of MUSCL and filtered schemes for scalar conservation laws and Hamilton-Jacobi equations. Numer. Math. 69, 441–470 (1995)

[27] Kossioris, G., Makridakis, C., Souganidis, P.E.: Finite volume schemes for Hamilton-Jacobi equations. Numer. Math. 83, 427–442 (1999)

[28] Osher, S.: Convergence of generalized MUSCL schemes. SIAM J. Numer. Anal. 22, 947–961 (1985)

[29] Zhang, Y.-T., Shu, C.-W.: High-order WENO schemes for Hamilton-Jacobi equations on triangular meshes. SIAM J. Sci. Comput. 24, 1005–1030 (2002)

Copyright information

© 2014 IFIP International Federation for Information Processing

About this paper

Cite this paper

Falcone, M., Kalise, D. (2014). A High-Order Semi-Lagrangian/Finite Volume Scheme for Hamilton-Jacobi-Isaacs Equations. In: Pötzsche, C., Heuberger, C., Kaltenbacher, B., Rendl, F. (eds) System Modeling and Optimization. CSMO 2013. IFIP Advances in Information and Communication Technology, vol 443. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-45504-3_10

DOI: https://doi.org/10.1007/978-3-662-45504-3_10

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-45503-6

Online ISBN: 978-3-662-45504-3

eBook Packages: Computer Science, Computer Science (R0)