Abstract

We propose quantitative regular expressions (QREs) as a high-level programming abstraction for specifying complex numerical queries over data streams in a modular way. Our language allows the arbitrary nesting of orthogonal sets of combinators: (a) generalized versions of choice, concatenation, and Kleene-iteration from regular expressions, (b) streaming (serial) composition, and (c) numerical operators such as min, max, sum, difference, and averaging. Instead of requiring the programmer to figure out the low-level details of what state needs to be maintained and how to update it while processing each data item, the regular constructs facilitate a global view of the entire data stream splitting it into different cases and multiple chunks. The key technical challenge in defining our language is the design of typing rules that can be enforced efficiently and which strike a balance between expressiveness and theoretical guarantees for well-typed programs. We describe how to compile each QRE into an efficient streaming algorithm. The time and space complexity is dependent on the complexity of the data structure for representing terms over the basic numerical operators. In particular, we show that when the set of numerical operations is sum, difference, minimum, maximum, and average, the compiled algorithm uses constant space and processes each symbol in the data stream in constant time outputting the cost of the stream processed so far. Finally, we prove that the expressiveness of QREs coincides with the streaming composition of regular functions, that is, MSO-definable string-to-term transformations, leading to a potentially robust foundation for understanding their expressiveness and the complexity of analysis problems.

This research was partially supported by the NSF Expeditions Award CCF 1138996.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

In a diverse range of applications such as financial tickers, data feeds from sensors, network traffic monitoring, and click-streams of web usage, the core computational problem is to map a stream of data items to a numerical value. Prior research on stream processing has focused on designing space-efficient algorithms for specific functions such as computing the average or the median of a sequence of values, and integrating stream processing in traditional data management software such as relational databases and spreadsheets. Our goal is orthogonal, namely, to provide high-level programming abstractions for the modular specification of complex queries over data streams, with a mix of numerical operators such as sum, difference, min, max, and average, supported by automatic compilation into an efficient low-level stream processing implementation.

To motivate our work, suppose the input data stream consists of transactions at a bank ATM, and consider how the following query f can be expressed in a natural, modular, and high-level manner: “On average, how much money does Alice deposit into her account during a month?” We can express this query naturally as a composition of two queries: the query \(f_{1}\) that maps the input stream to a stream consisting of only the transactions corresponding to the deposits by Alice, and the query \(f_{2}\) that computes the average of the sum of deposits during each month. This form of filtering, and cascaded composition of stream processors, is common in many existing stream processing languages (such as ActiveSheets [35]) and systems (such as Apache Storm), and our proposal includes a streaming composition operator to express it:  .

.

Now let us turn our attention to expressing the numerical computation \(f_{2}\). An intuitive decomposition for specifying \(f_{2}\) is to break up the input stream into a sequence of substreams, each corresponding to the transactions during a single month. We can a write a function \(f_{3}\) that maps a sequence of transactions during a month to its cumulative sum. The desired function \(f_{2}\) then splits its input stream into substreams, each matching the input pattern of \(f_{3}\), applies \(f_{3}\) to each substream, and combines the results by averaging. In our proposed calculus, \(f_{2}\) is written as  . This is exactly the “quantitative” generalization of the Kleene-* operation from regular expressions—a declarative language for specifying patterns in strings that is widely used in practical applications and has a strong theoretical foundation. However, we are not aware of any existing language for specifying quantitative properties of sequences that allows such a regular iteration over chunks of inputs.

. This is exactly the “quantitative” generalization of the Kleene-* operation from regular expressions—a declarative language for specifying patterns in strings that is widely used in practical applications and has a strong theoretical foundation. However, we are not aware of any existing language for specifying quantitative properties of sequences that allows such a regular iteration over chunks of inputs.

As in regular expressions, iterators in our language can be nested, and one can use different aggregation operators at different levels. For example, to specify the modified query, “On an average, during a month, what is the maximum money that Alice deposits during a single day?” in the program for the original query f, we can essentially replace the computation \(f_{3}\) for processing month-substreams by  , where the function \(f_{4}\) maps a sequence of transactions during a single day to its sum. Analogous to the iteration, the generalization of the concatenation operation

, where the function \(f_{4}\) maps a sequence of transactions during a single day to its sum. Analogous to the iteration, the generalization of the concatenation operation  , splits the input stream in two parts, applies f to the first part, g to the second part, and combines the results using the arithmetic combinator op. If we want to process streams in which no individual withdrawal exceeds a threshold value in a special manner, our program can be

, splits the input stream in two parts, applies f to the first part, g to the second part, and combines the results using the arithmetic combinator op. If we want to process streams in which no individual withdrawal exceeds a threshold value in a special manner, our program can be  , where the program f is written to process streams where all withdrawals are below the threshold and g handles streams with some withdrawal above the threshold. These core regular constructs,

, where the program f is written to process streams where all withdrawals are below the threshold and g handles streams with some withdrawal above the threshold. These core regular constructs,  ,

,  and

and  , are natural as they are the analogs of the fundamental programming constructs of conditionals, sequential composition, and iteration, but also allow the programmer a global view of the entire stream.

, are natural as they are the analogs of the fundamental programming constructs of conditionals, sequential composition, and iteration, but also allow the programmer a global view of the entire stream.

We formalize this idea of regular programming by designing the language of quantitative regular expressions for processing data streams (Sect. 2). Each quantitative regular expression (QRE) maps a (regular) subset of data streams to cost values. The language constructs themselves are agnostic to the cost types and combinators for combining cost values. The design of the language is influenced by two competing goals: on one hand, we want as much expressiveness as possible, and on the other hand, we want to ensure that every QRE can be automatically compiled into a streaming algorithm with provably small space and time complexity bounds. For the former, the same way as deterministic finite automata and regular languages serve as the measuring yardstick for design choices in formalizing regular expressions, the most suitable class of functions is the recently introduced notion of regular functions [7]. Unlike the better known and well-studied formalism of weighted automata, whose definition is inherently limited to costs with two operations that form a commutative semiring [21], this class is parametrized by an arbitrary set of cost types and operations. It has both a machine-based characterization using streaming string-to-term transducers [7] and a logic-based characterization using MSO-definable string-to-term transformations [16]. While this class is not closed under streaming composition, in Sect. 4, we show that QREs define exactly streaming compositions of regular functions. This expressiveness result justifies the various design choices we made in defining QREs.

In Sect. 3, we show how to compile a QRE into an efficient streaming algorithm. The implementation consists of a set of interacting machines, one for each sub-expression. To process operators such as  and

and  , the algorithm needs to figure out how to split the input stream. Since the splitting cannot be deterministically computed in a streaming manner, the algorithm needs to keep track of all potential splits. The typing rules are used to ensure that the number of splits under consideration are proportional to the size of the expression rather than the length of the input stream. While the compilation procedure is generic with respect to the set of cost types and operators, the exact time and space complexity of the algorithm is parametrized by the complexity of the data structure for representing cost terms. For example, every term that can be constructed using numerical constants, a single variable x, and operations of \(\min \) and \(+\), is equivalent to a term in the canonical form \(\min (x + a, b)\), and can therefore be summarized by two numerical values allowing constant-time updates. When we have both numerical values and sequences of such values, if we know that the only aggregate operator over sequences is averaging, then the sequence can be summarized by the sum of all values and the length (such a succinct representation is not possible if, for instance, we allow mapping of a sequence of values to its median). In particular, we show that when a QRE is constructed using the operations of sum, difference, minimum, maximum, and average, the compiled streaming algorithm has time complexity that is linear in the length of the data stream (that is, constant time for processing each symbol) and constant space complexity (in terms of the size of the QRE itself, both complexities are polynomial). This generalizes the class of queries for which efficient streaming algorithms have been reported in the existing literature.

, the algorithm needs to figure out how to split the input stream. Since the splitting cannot be deterministically computed in a streaming manner, the algorithm needs to keep track of all potential splits. The typing rules are used to ensure that the number of splits under consideration are proportional to the size of the expression rather than the length of the input stream. While the compilation procedure is generic with respect to the set of cost types and operators, the exact time and space complexity of the algorithm is parametrized by the complexity of the data structure for representing cost terms. For example, every term that can be constructed using numerical constants, a single variable x, and operations of \(\min \) and \(+\), is equivalent to a term in the canonical form \(\min (x + a, b)\), and can therefore be summarized by two numerical values allowing constant-time updates. When we have both numerical values and sequences of such values, if we know that the only aggregate operator over sequences is averaging, then the sequence can be summarized by the sum of all values and the length (such a succinct representation is not possible if, for instance, we allow mapping of a sequence of values to its median). In particular, we show that when a QRE is constructed using the operations of sum, difference, minimum, maximum, and average, the compiled streaming algorithm has time complexity that is linear in the length of the data stream (that is, constant time for processing each symbol) and constant space complexity (in terms of the size of the QRE itself, both complexities are polynomial). This generalizes the class of queries for which efficient streaming algorithms have been reported in the existing literature.

2 Quantitative Regular Expressions

2.1 Preliminaries

The Data and Cost Types. We first fix a set \(\mathcal {T}= \{ T_{1}, T_{2}, \ldots \}\) of types, each of which is non-empty. Typical examples might include the sets \({\mathbb {R}}\), \({\mathbb {Z}}\) and \({\mathbb {N}}\) of real numbers, integers and natural numbers respectively, the set  of boolean values, and the set \({\mathbb {M}}\) of multisets of real numbers.

of boolean values, and the set \({\mathbb {M}}\) of multisets of real numbers.

Another example is \(D_{{bank}} = \mathbb {R} \cup \left\{ {end_{d} ,end_{m} } \right\} \), indicating the transactions of a customer with a bank. The symbol \(end_{d}\) indicates the end of a working day, \(end_{m}\) indicates the passage of a calendar month, and each real number \(x \in {\mathbb {R}}\) indicates the deposit (withdrawal if negative) of x dollars into the account.

Data Predicates. For each \(D \in \mathcal {T}\), let \(\varPhi _{D}\) be a non-empty collection of predicates over D. In the case of \(D_{bank}\), an example choice is \(\varPhi _{{D_{{bank}} }} = \left\{ {d = end_{d} ,d = end_{m} ,d \in \mathbb {R},d \ge 0,d < 0, \ldots } \right\} \). We require the following:

-

1.

\(\varPhi _{D}\) be closed under Boolean connectives: for each \(\varphi _{1}, \varphi _{2} \in \varPhi _{D}\), \(\varphi _{1} \vee \varphi _{2}, \varphi _{1} \wedge \varphi _{2}, \lnot \varphi _{1} \in \varPhi _{D}\),

-

2.

whether a data value d satisfies a predicate \(\varphi \) is decidable, and

-

3.

there is a quantity \(time_{{\varphi {\text {-}}sat}} \) such that the satisfiability of each predicate \(\varphi \) is mechanically decidable in time \(\le time_{{\varphi {\text {-}}sat}} \). Satisfiability of predicates would typically be determined by an SMT solver [20].

We refer to a set of predicates satisfying these properties as an effective boolean algebra.

Data Streams, Data Languages, and Symbolic Regular Expressions. For each \(D \in \mathcal {T}\), a data stream is simply an element \(w \in D^{*}\), and a data language L is a subset of \(D^{*}\).

Symbolic regular expressions provide a way to identify data languages L. Our definitions here are mostly standard, except for the additional requirement of unambiguous parseability, which is for uniformity with our later definitions of function combinators. They are symbolic because the basic regular expressions are predicates over D. Through the rest of this paper, the unqualified phrase “regular expression” will refer to a “symbolic” regular expression.

Consider two non-empty languages \(L_{1}, L_{2} \subseteq D^{*}\). They are unambiguously concatenable if for each stream \(w \in L_{1} L_{2}\), there is a unique pair of streams \(w_1 \in L_1\) and \(w_2 \in L_2\) such that \(w = w_1 w_2\). The language L is unambiguously iterable if L is non-empty and for each stream \(w \in L^{*}\), there is a unique sequence of streams \(w_1, w_2, \ldots , w_k \in L\) such that \(w = w_1 w_2 \cdots w_k\).

We write \(\mathbf [\![ r\mathbf ]\!] \subseteq D^{*}\) for the language defined by the symbolic regular expression r. Symbolic unambiguous regular expressions are inductively defined as follows:

-

1.

\(\epsilon \) is a regular expression, and identifies the language \(\mathbf [\![ \epsilon \mathbf ]\!] = \{ \epsilon \}\).

-

2.

For each predicate \(\varphi \in \varPhi \), \(\varphi \) is a regular expression, and \(\mathbf [\![ \varphi \mathbf ]\!] = \{ d \in D \mid \varphi (d) \text { holds} \}\).

-

3.

For each pair of regular expressions \(r_1\), \(r_2\), if \(\mathbf [\![ r_1\mathbf ]\!] \) and \(\mathbf [\![ r_2\mathbf ]\!] \) are disjoint, then \(r_1 +r_2\) is a regular expression and \(\mathbf [\![ r_1 +r_2\mathbf ]\!] = \mathbf [\![ r_1\mathbf ]\!] \mathbin {\cup }\mathbf [\![ r_2\mathbf ]\!] \).

-

4.

For each pair of regular expressions \(r_1\), \(r_2\), if \(\mathbf [\![ r_1\mathbf ]\!] \) and \(\mathbf [\![ r_2\mathbf ]\!] \) are unambiguously concatenable, then \(r_1 r_2\) is a regular expression which identifies the language \(\mathbf [\![ r_1 r_2\mathbf ]\!] = \mathbf [\![ r_1\mathbf ]\!] \mathbf [\![ r_2\mathbf ]\!] \).

-

5.

When r is a regular expression such that \(\mathbf [\![ r\mathbf ]\!] \) is unambiguously iterable, then \(r^{*}\) is also a regular expression, and identifies the language \(\mathbf [\![ r^{*}\mathbf ]\!] = \mathbf [\![ r\mathbf ]\!] ^{*}\).

Example 1

In the bank transaction example, the languages \(\mathbf [\![ {\mathbb {R}}^{*} end_{d}\mathbf ]\!] \) and \(D_{bank}^{*}\) are unambiguously concatenable: the only way to split a string which matches \({\mathbb {R}}^{*} end_{d} \cdot D_{bank}^{*}\) is immediately after the first occurrence of \(end_{d}\). On the other hand, observe that \({\mathbb {R}}^{*}\) and \({\mathbb {R}}^{*} end_{d}\) are not unambiguously concatenable: the string \(2, end_{d} \in \mathbf [\![ {\mathbb {R}}^{*}\mathbf ]\!] \cdot \mathbf [\![ {\mathbb {R}}^{*} end_{d}\mathbf ]\!] \) can be split as \(2, end_{d} = (\epsilon ) (2, end_{d})\), where \(\epsilon \in \mathbf [\![ {\mathbb {R}}^{*}\mathbf ]\!] \) and \(2, end_{d} \in \mathbf [\![ {\mathbb {R}}^{*} end_{d}\mathbf ]\!] \), and can also be split as \(2, end_{d} = (2) (end_{d})\), where \(2 \in \mathbf [\![ {\mathbb {R}}^{*}\mathbf ]\!] \) and \(end_{d} \in \mathbf [\![ {\mathbb {R}}^{*} end_{d}\mathbf ]\!] \).

Similarly, the language \(\mathbf [\![ {\mathbb {R}}^{*} end_{d}\mathbf ]\!] \) is unambiguously iterable: the only viable split for any matching string is immediately after each occurrence of \(end_{d}\). The language \(\mathbf [\![ {\mathbb {R}}\mathbf ]\!] \) is also unambiguously iterable, but observe that \(\mathbf [\![ {\mathbb {R}}^{*}\mathbf ]\!] \) is not.

Given a regular expression \(r_1\) and \(r_2\), let \(min{\text {-}}terms\) be the set of distinct character classes formed by predicates from \(\varPhi \) [18]. The first three claims below can be proved by straightforward extensions to the traditional regular expression-to-NFA translation algorithm [12, 13, 33]. The final claim is proved in [34].

Theorem 1

Given unambiguous regular expressions \(r_1\) and \(r_2\), the following problems can be decided in time \(poly\left( {\left| {r_{1} } \right| ,\left| {r_{2} } \right| ,\left| {min{\text {-}}terms} \right| } \right) time_{\varphi {\text {-}} sat}\):

-

1.

Are \(\mathbf [\![ r_1\mathbf ]\!] \) and \(\mathbf [\![ r_2\mathbf ]\!] \) disjoint?

-

2.

Are \(\mathbf [\![ r_1\mathbf ]\!] \) and \(\mathbf [\![ r_2\mathbf ]\!] \) unambiguously concatenable?

-

3.

Is \(\mathbf [\![ r_1\mathbf ]\!] \) unambiguously iterable?

-

4.

Are \(r_1\) and \(r_2\) equivalent, i.e. is \(\mathbf [\![ r_1\mathbf ]\!] = \mathbf [\![ r_2\mathbf ]\!] \)?

Example 2

Consider again the case of the customer and the bank. We picked \(D_{bank} = {\mathbb {R}}\mathbin {\cup }\{ {end}_{d}, {end}_{m} \}\) and \(\varPhi _{D_{bank}} = \{ d = end_{d}, d = end_{m}, d \in {\mathbb {R}}, d \ge 0, d < 0 \}\). The transactions of a single day may then be described by the regular expression \(r_{day} = (d \in {\mathbb {R}})^{*} \cdot (d = end_{d})\), the transactions of a week, by the regular expression \(r_{week} = r_{day}^7\). Months which involve only deposits are given by the regular expression \(((d \ge 0) +(d = end_{d}))^{*} \cdot (d = end_{m})\).

An important restriction for the predicates is that they are only allowed to examine individual data values. The language \(w \in {\mathbb {R}}^{*}\) of monotonically increasing sequences can therefore not be expressed by a symbolic regular expression. Without this restriction, all problems listed in Theorem 1 are undecidable [17].

Cost Terms. Let \(\mathcal {G}=\{op_1,op_2,\ldots \}\) be a collection of operations over values of various types. Each operation is a function of the form \(op: T_1 \times T_2 \times \cdots \times T_k \rightarrow T\).

Example 3

Over the space of types \(\mathcal {T}_s = \{ {\mathbb {Z}}, {\mathbb {B}} \}\), a simple choice of operators is \(\mathcal {G}_s = \{ +, \min , \max , x < 7 \}\) where \(+, \min , \max : {\mathbb {Z}}\times {\mathbb {Z}}\rightarrow {\mathbb {Z}}\), are the usual operations of addition, minimum and maximum respectively, and the unary operator \(x < 7 : {\mathbb {Z}}\rightarrow {\mathbb {B}}\) determines whether the input argument is less than 7.

Over the space of types \(\mathcal {T}_m = \{ {\mathbb {R}}, {\mathbb {M}} \}\) where \({\mathbb {M}}\) is the set of multisets of real numbers, the chosen operations might be \({\mathcal {G}}_{m} = \{\mathtt{{ins}},\mathtt{{avg}},\mathtt{{mdn}}\}\), where \({\text {\texttt {ins}}}: {\mathbb {M}}\times {\mathbb {R}}\rightarrow {\mathbb {M}}\) is insertion into sets, defined as \(\mathtt{{ins}}(A, x) = A \mathbin {\cup }\{ x \}\), and \(\mathtt{{avg}}, \mathtt{{mdn}} : {\mathbb {M}}\rightarrow {\mathbb {R}}\) return the average and median of a multiset of numbers respectively.

Let \(X = \{ x_1, x_2, \ldots \}\) be a sequence of parameters, and each parameter \(x_i\) be associated with a type \(T_i \in \mathcal {T}\). We use x : T when we want to emphasize that the type of x is T. Parameters in X can be combined using cost operations from \(\mathcal {G}\) to construct cost terms:

We will only consider those terms \(\tau \) that are well-typed and single-use, i.e. where each parameter x occurs at most once in \(\tau \). For example, \(\min (x, y)\) is a well-typed single-use term when both parameters are of type \({\mathbb {R}}\). On the other hand, the term \(\min (x, x + y)\) is not single-use. Each term is associated with the set \(Param\left( \tau \right) = \left\{ {x_{{t1}} ,x_{{t2}} , \ldots ,x_{{tk}} } \right\} \) of parameters appearing in its description, and naturally encodes a function \(\mathbf [\![ \tau \mathbf ]\!] : T_1 \times T_2 \times \cdots \times T_k \rightarrow T\) from parameter valuations \(\overline{v}\) to costs \(\mathbf [\![ \tau \mathbf ]\!] (\overline{v})\), where \(T_i\) is the type of the parameter \(x_i\).

2.2 Quantitative Regular Expressions and Function Combinators

Informally, for some data and cost domains \(D, C \in \mathcal {T}\), QREs map data streams \(w \in D^{*}\) to terms f(w) over the cost domain C. Formally, given the data domain \(D \in \mathcal {T}\), cost domain \(C \in \mathcal {T}\), and the list of parameters \(X = \langle x_1, x_2, \ldots , x_k \rangle \), each QRE f identifies a function \(\mathbf [\![ f\mathbf ]\!] : D^{*} \rightarrow (T_1 \times T_2 \times \cdots \times T_k \rightarrow C)_\bot \).Footnote 1 Observe that whether \(\mathbf [\![ f\mathbf ]\!] (w, \overline{v})\) is defined depends only on the data stream w, and not on the parameter valuation \(\overline{v}\).Footnote 2

In addition to the data domain D, cost domain C, and the parameters of interest \(X = \langle x_1, x_2, \ldots \rangle \), each QRE f is therefore also associated with a regular expression r, describing the subset of data streams over which it is defined: \(\mathbf [\![ r\mathbf ]\!] = \{ w \in D^{*} \mid \mathbf [\![ f\mathbf ]\!] (w) \ne \bot \}\). We will represent this by saying that f is of the form \({\text {QRE}}(r, X, C)\), or even more succinctly as \(f : {\text {QRE}}(r, X, C)\).

We will now inductively define quantitative regular expressions. A summary of the syntax can be found in Fig. 1.

Basic Functions. If \(\varphi \in \varPhi _D\) is a predicate over data values \(d \in D\), and \(\lambda : D \rightarrow C\) is an operation in \(\mathcal {G}\), then  defined as follows:

defined as follows:

For each data domain D and parameter x of type C,  is a

is a  . If the input stream w is empty, then it produces the output \(v_x\), where \(v_x\) is the assignment to x in the parameter valuation \(\overline{v}\):

. If the input stream w is empty, then it produces the output \(v_x\), where \(v_x\) is the assignment to x in the parameter valuation \(\overline{v}\):

Example 4

In the bank transaction example, if we wish to count the number of transactions made by the customer in a month, we would be interested in the functions  and

and  , where 0 and 1 are the functions returning the constant values 0 and 1 respectively.

, where 0 and 1 are the functions returning the constant values 0 and 1 respectively.

Cost Operations. Consider two competing banks, which given the transaction history w of the customer, yield interest amounts of \(f_1(w)\) and \(f_2(w)\) respectively. The customer wishes to maximize the interest received: given the binary operation \(\max : {\mathbb {R}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) from \(\mathcal {G}\), we are therefore interested in the QRE \(\max (f_1, f_2)\).

More generally, pick an operator \(op : T_1 \times T_2 \times \cdots \times T_k \rightarrow C\) and expressions \(f_1 : {\text {QRE}}(r_1, X_1, T_1)\), \(f_2 : {\text {QRE}}(r_2, X_2, T_2)\), ..., \(f_k : {\text {QRE}}(r_k, X_k, T_k)\). If the domains are equal, \(\mathbf [\![ r_1\mathbf ]\!] = \mathbf [\![ r_2\mathbf ]\!] = \cdots = \mathbf [\![ r_k\mathbf ]\!] \), and the parameter lists are pairwise-disjoint, \(X_i \mathbin {\cap }X_j = \emptyset \), for all \(i \ne j\), then \(op(f_1, f_2, \ldots , f_k)\) is an expression of the form \({\text {QRE}}(r_1, \bigcup _i X_i, C)\).

Given an input stream \(w \in D^{*}\), if \(\mathbf [\![ f_i\mathbf ]\!] (w)\) is defined for each i, then:

Otherwise, \(\mathbf [\![ op(f_1, f_2, \ldots , f_k)\mathbf ]\!] (w, \overline{v}) = \bot \).

Substitution. Informally, the expression  substitutes the result of applying g to the input stream into the parameter x while evaluating f.

substitutes the result of applying g to the input stream into the parameter x while evaluating f.

Example 5

In the bank transaction example, the bank may determine interest rates by a complicated formula rate of the form \({\text {QRE}}(D_{{bank}}^{*}, \emptyset , {\mathbb {R}})\), which is possibly a function of the entire transaction history of the customer. Given the monthly interest rate \(p \in {\mathbb {R}}\), we can define a formula earning of the form \({\text {QRE}}(D_{{bank}}^{*}, \{ p \}, {\mathbb {R}})\) which returns the total earnings of the customer’s account. The QREs rate and earnings thus encode functions \(\mathbf [\![ rate\mathbf ]\!] : D_{bank}^{*} \rightarrow {\mathbb {R}}\) and \(\mathbf [\![ earnings\mathbf ]\!] : D_{bank}^{*} \times {\mathbb {R}}\rightarrow {\mathbb {R}}\) respectively. The QRE  plugs the result of the rate computation into the earning computation, and maps the transaction history to the total interest earned.

plugs the result of the rate computation into the earning computation, and maps the transaction history to the total interest earned.

Formally, let f and g be of the form \({\text {QRE}}(r_f, X_f, T_f)\) and \({\text {QRE}}(r_g, X_g, T_g)\) respectively and with equal domains \(\mathbf [\![ r_f\mathbf ]\!] = \mathbf [\![ r_g\mathbf ]\!] \), let \(x \in X_f\) be of type \(T_g\), and let \((X_f \setminus \{ x \}) \mathbin {\cap }X_g = \emptyset \). Then,  is of the form \({\text {QRE}}(r_f, (X_f \setminus \{ x \}) \mathbin {\cup }X_g, T_f)\). If \(\mathbf [\![ f\mathbf ]\!] (w)\) and \(\mathbf [\![ g\mathbf ]\!] (w)\) are both defined, then:

is of the form \({\text {QRE}}(r_f, (X_f \setminus \{ x \}) \mathbin {\cup }X_g, T_f)\). If \(\mathbf [\![ f\mathbf ]\!] (w)\) and \(\mathbf [\![ g\mathbf ]\!] (w)\) are both defined, then:

where \(\overline{v}[x / t]\) replaces the value of x in \(\overline{v}\) with t. Otherwise,  is undefined.

is undefined.

Conditional Choice. If f and g are QREs with disjoint domains then  is a QRE defined as follows:

is a QRE defined as follows:

where \(int_{f} = \mathbf [\![ f\mathbf ]\!] (w, \overline{v})\) and \(int_{g} = \mathbf [\![ g\mathbf ]\!] (w, \overline{v})\) respectively.

Example 6

The expression  examines a single customer transaction with the bank, and maps it to true if it was a deposit, and false otherwise.

examines a single customer transaction with the bank, and maps it to true if it was a deposit, and false otherwise.

The bank rewards program may reserve a higher reward rate for each transaction made in a “deposit-only” month, and a lower reward rate for other months. Say we have expressions hiReward and loReward which compute the total customer rewards in qualifying and non-qualifying months respectively. The expression  then maps the transactions of an arbitrary month to the total reward earned.

then maps the transactions of an arbitrary month to the total reward earned.

Observe that the choice of isPositive depends only on the next customer transaction. On the other hand, the choice in reward depends on the entire list of transactions made in that month, and resolving this choice requires a global view of the data stream.

Concatenation. In the bank transaction example, say we have the transactions of two consecutive months, \(w \in r_{month}^2\), and that the QRE count returns the number of transactions made in a single month.

To express the minimum number of transactions for both months, a natural description is  , where the combinator

, where the combinator  splits the input string w into two parts \(w_{1}\) and \(w_{2}\), applies count to each part, and combines the results using the min operator. Similarly, the expression

splits the input string w into two parts \(w_{1}\) and \(w_{2}\), applies count to each part, and combines the results using the min operator. Similarly, the expression  would total the number of transactions made in both months. One reasonable choice for function concatenation is therefore to have a k-ary combinator

would total the number of transactions made in both months. One reasonable choice for function concatenation is therefore to have a k-ary combinator  , for each k-ary operator \({op} \in G\).

, for each k-ary operator \({op} \in G\).

We instead choose to have a pair of uniform binary, operator-agnostic combinators split:  and

and  . They split the given input stream into two parts, applying f to the prefix and g to the suffix, and use parameter x to pass the result from f to g in the case of

. They split the given input stream into two parts, applying f to the prefix and g to the suffix, and use parameter x to pass the result from f to g in the case of  and vice-versa in the case of

and vice-versa in the case of  (see Fig. 2). Therefore the sub-expressions f and g themselves determine how the intermediate results are combined. The

(see Fig. 2). Therefore the sub-expressions f and g themselves determine how the intermediate results are combined. The  combinators are just a special case of this more general construct (see Example 7). We now formalize this intuition.

combinators are just a special case of this more general construct (see Example 7). We now formalize this intuition.

Let \(f : {\text {QRE}}(r_f, X_f, T_f)\) and \(g : {\text {QRE}}(r_g, X_g, T_g)\) be a pair of QREs whose domains \(\mathbf [\![ r_f\mathbf ]\!] \) and \(\mathbf [\![ r_g\mathbf ]\!] \) are unambiguously concatenable. Let \(x : T_f\) be a parameter in \(X_g\) and let \(X_f \mathbin {\cap }(X_g \setminus \{ x \}) = \emptyset \). Then  is a QRE of the form \({\text {QRE}}(r_f \cdot r_g, X, T_g)\) where \(X = X_f \mathbin {\cup }(X_g \setminus \{ x \})\). Given an input stream w, if there exist sub-streams \(w_f\), \(w_g\) such that \(w = w_f w_g\) and such that both \(\mathbf [\![ f\mathbf ]\!] (w_f)\) and \(\mathbf [\![ g\mathbf ]\!] (w_g)\) are defined, then:

is a QRE of the form \({\text {QRE}}(r_f \cdot r_g, X, T_g)\) where \(X = X_f \mathbin {\cup }(X_g \setminus \{ x \})\). Given an input stream w, if there exist sub-streams \(w_f\), \(w_g\) such that \(w = w_f w_g\) and such that both \(\mathbf [\![ f\mathbf ]\!] (w_f)\) and \(\mathbf [\![ g\mathbf ]\!] (w_g)\) are defined, then:

Otherwise,  .

.

Example 7

We promised earlier that for each cost domain operator op, the  combinator was a special case of the more general

combinator was a special case of the more general  combinator just defined. We illustrate this in the bank transaction example by constructing a QRE for

combinator just defined. We illustrate this in the bank transaction example by constructing a QRE for  , where count is the expression counting the number of transactions in a single month.

, where count is the expression counting the number of transactions in a single month.

The desired function may be expressed by the QRE  , where the QRE \({minCount}(p) = \min (p, {count})\).Footnote 3 The

, where the QRE \({minCount}(p) = \min (p, {count})\).Footnote 3 The  combinator splits the input data string into a prefix and a suffix, and propagates the output of count on the prefix into the parameter p of the QRE minCount on the suffix.

combinator splits the input data string into a prefix and a suffix, and propagates the output of count on the prefix into the parameter p of the QRE minCount on the suffix.

In general, given appropriate QREs f and g, and a binary operator op, the expression  can be expressed as

can be expressed as  , where

, where  and p is a new temporary parameter.

and p is a new temporary parameter.

The definition of the left version of the split combinator,  is similar to the definition of

is similar to the definition of  , with just the direction of the f-g information flow reversed, and is given in the appendix.

, with just the direction of the f-g information flow reversed, and is given in the appendix.

Iteration. Our next combinator is iteration, the analog of Kleene-* from regular expressions. The definition of the combinator is similar to that of  , to permit more general forms of iteration than just those offered a hypothetical

, to permit more general forms of iteration than just those offered a hypothetical  operator.

operator.

Consider an expression \(f : {\text {QRE}}(r, \{ x \}, T)\), such that \(\mathbf [\![ r\mathbf ]\!] \) is unambiguously iterable, and x : T. Then  is an expression of the form \({\text {QRE}}(r^{*}, \{ x \}, T)\). The expression

is an expression of the form \({\text {QRE}}(r^{*}, \{ x \}, T)\). The expression  divides the input stream w into a sequence of substreams, \(w_1\), \(w_2\), ..., \(w_k\), such that \(\mathbf [\![ f\mathbf ]\!] (w_i)\) is defined for each i.

divides the input stream w into a sequence of substreams, \(w_1\), \(w_2\), ..., \(w_k\), such that \(\mathbf [\![ f\mathbf ]\!] (w_i)\) is defined for each i.  is defined if this split exists and is unique. In that case, for each \(i \in \{ 0, 1, 2, \ldots , k \}\), define \({int}_i\) as follows:

is defined if this split exists and is unique. In that case, for each \(i \in \{ 0, 1, 2, \ldots , k \}\), define \({int}_i\) as follows:

-

1.

\({int}_0 = v_x\), and

-

2.

for each \(i \ge 1\), \({int}_i = \mathbf [\![ f\mathbf ]\!] (w_i, \{ x \mapsto {int}_{i - 1} \})\).

Finally,  .

.

Example 8

In the bank transaction example, consider the query currBal which maps the transaction history w of the customer to her current account balance. We can write  , where the expression

, where the expression  records the change in account balance as a result of a single transaction.

records the change in account balance as a result of a single transaction.

To desugar currBal to use the parametrized version of the iteration combinator, we adopt a similar approach as in Example 7. We first write the expression \({tx}^\prime = {plus}(p, {tx})\). Observe that \({tx}^\prime \) is a QRE dependent on a single parameter p, the previous account balance, and returns the new balance. Then, we can write:  .

.

A similar approach works to desugar  , for any associative cost operator op with the identity element 0. We first write

, for any associative cost operator op with the identity element 0. We first write  , for some new parameter p of type \(T_f\). Then, observe that

, for some new parameter p of type \(T_f\). Then, observe that  .

.

Remark 1

We have presented here a simplified version of the iteration combinator, which is sufficient for most examples. The full combinator, used in the proof of expressive completeness, allows f to depend on multiple parameters (instead of just a single parameter as above), and multiple values are computed in each substream \(w_i\). This full combinator and the symmetric left-iteration combinator are defined in the full paper.

Streaming Composition. In the bank transaction example, suppose we wish to compute the minimum balance over the entire account history. Recall that the stream w is a sequence of transactions, each data value indicating the deposit / withdrawal of some money from the account. The expression currBal from Example 8 maps the transaction history of the customer to her current balance. It is also simple to express the function  which returns the minimum of a stream of real numbers. We can then express the minimum account balance query using the streaming composition operator:

which returns the minimum of a stream of real numbers. We can then express the minimum account balance query using the streaming composition operator:  .

.

Informally, the streaming composition  applies the expression currBal to each prefix

\(w_1, w_2, \ldots , w_i\) of the input data stream \(w = w_1, w_2, \ldots \), thus producing the account balance after each transaction. It then produces an intermediate data stream \(w^\prime \) by concatenating the results of currBal. This intermediate stream \(w^\prime \) is supplied to the function minValue, which then produces the historical minimum account balance.

applies the expression currBal to each prefix

\(w_1, w_2, \ldots , w_i\) of the input data stream \(w = w_1, w_2, \ldots \), thus producing the account balance after each transaction. It then produces an intermediate data stream \(w^\prime \) by concatenating the results of currBal. This intermediate stream \(w^\prime \) is supplied to the function minValue, which then produces the historical minimum account balance.

Formally, let f and g be of the form \({\text {QRE}}(r, \emptyset , T_f)\) and \({\text {QRE}}(T_f^{*}, X, T_g)\) respectively. Note that f produces results independent of any parameter, and that g is defined over all intermediate data streams \(w^\prime \in T_f^{*}\). Then  . Given the input stream \(w = w_1, w_2, \ldots , w_k\) and parameter valuation \(\overline{v}\), for each i such that \(1 \le i \le k\), define \({int}_i\) as follows:

. Given the input stream \(w = w_1, w_2, \ldots , w_k\) and parameter valuation \(\overline{v}\), for each i such that \(1 \le i \le k\), define \({int}_i\) as follows:

-

1.

If \(\mathbf [\![ f\mathbf ]\!] (w_1, w_2, \ldots , w_i, \overline{v}_\emptyset ) \ne \bot \), then \({int}_i = \mathbf [\![ f\mathbf ]\!] (w_1, w_2, \ldots , w_i, \overline{v}_\emptyset )\), where \(\overline{v}_\emptyset \) is the empty parameter valuation.

-

2.

Otherwise, \({int}_i = \epsilon \).

Then  is given by:

is given by:

2.3 Examples

Analyzing a Regional Rail System. Consider the rail network of a small city. There are two lines, the airport line, al, between the city and the airport, and the suburban line, sl, between the city and the suburbs. The managers of the network want to determine the average time for a traveler in the suburbs to get to the airport.

The data is a sequence of event tuples (line, station, time), where \({line} \in \{ {al}, {sl} \}\) indicates the railway line on which the event occurs, \({station} \in \{ {air}, {city}, {suburb} \}\) indicates the station at which the train is stopped, and \({time} \in {\mathbb {R}}\) indicates the event time.

We first write the expression \(t_{{sc}}\) which calculates the time needed to travel from the suburbs to the city. Let \(\varphi _{{ss}}(d) = (d.{line} = {sl} \wedge d.{station} = {suburb})\) and \(\varphi _{{sc}}(d) = (d.{line} = {sl} \wedge d.{station} = {city})\) be the predicates indicating that the suburban line train is at the suburban station and at the city station respectively. Consider the expression:

that maps event streams of the form \(\lnot \varphi _{{ss}}^{*} \cdot \varphi _{{ss}} \cdot \lnot \varphi _{{sc}}^{*} \cdot \varphi _{{sc}}\) to the time at which the train stops at the city station. The expression \(t_{{sc-pickup}}\) that maps event streams to the pickup time from the suburban station can be similarly expressed:

Our goal is to express the commute time from the suburbs to the city. This is done by the expression:  .

.

The QRE \(t_{{ca}}\) that expresses the commute time from the city to the airport can be similarly constructed. We want to talk about the total travel time from the suburbs to the airport:  . Our ultimate goal is the average travel time from the suburbs to the airport. The following QRE solves our problem:

. Our ultimate goal is the average travel time from the suburbs to the airport. The following QRE solves our problem:  .

.

Rolling Data Telephone Plans. Now consider a simple telephone plan, where the customer pays 50 dollars each month for a 5 GB monthly download limit. If the customer uses more than this amount, she pays 30 dollars extra, but otherwise, the unused quota is added to her next month’s limit, up to a maximum of 20 GB.

The data domain \(D_{{tel}} = {\mathbb {R}}\mathbin {\cup }\{ {end}_m \}\), where \(d \in {\mathbb {R}}\) indicates a download of d gigabytes and \({end}_m\) indicates the end of the billing cycle and payment of the telephone bill. Given the entire browsing history of the customer, we wish to compute the download limit for the current month.

First, the QRE  takes a sequence of downloads and returns the total data downloaded. The browsing history of a single month is given by the regular expression \(r_m = {\mathbb {R}}^{*} \cdot {end}_m\), and the QRE

takes a sequence of downloads and returns the total data downloaded. The browsing history of a single month is given by the regular expression \(r_m = {\mathbb {R}}^{*} \cdot {end}_m\), and the QRE  maps data streams \(w \in \mathbf [\![ r_m\mathbf ]\!] \) to the total quantity of data downloaded.

maps data streams \(w \in \mathbf [\![ r_m\mathbf ]\!] \) to the total quantity of data downloaded.

The following expression gives the unused allowance available at the end of the month, in terms of initLim, the download limit at the beginning of the month:  , and the QRE \({rollover}({initLim}) = \min (\max ({unused}({initLim}), 0), 20)\) gives the amount to be added to the next month’s limit.

, and the QRE \({rollover}({initLim}) = \min (\max ({unused}({initLim}), 0), 20)\) gives the amount to be added to the next month’s limit.

The QRE \({nextQuota}({initLim}) = {rollover}({initLim}) + 5\) provides the download limit for the next month in terms of initLim and the current month’s browsing history. Finally, the QRE  maps the entire browsing history of the customer to the download limit of the current month.

maps the entire browsing history of the customer to the download limit of the current month.

Aggregating Weather Reports. Our final example deals with a stream of weather reports. Let the data domain \(D_{{wth}} = {\mathbb {R}}\mathbin {\cup }\{ {autEqx}, {sprEqx}, {newYear}, \ldots \}\), where the symbols autEqx and sprEqx represent the autumn and spring equinoxes. Here a number \(d \in {\mathbb {R}}\) indicates a temperature reading of \(d^{\circ }\mathrm{{C}}\). We wish to compute the average winter-time temperature reading. For this query, let winter be defined as the time between an autumn equinox and the subsequent spring equinox.

The regular expression \(r_{{summ}} = {\mathbb {R}}^{*} \cdot {autEqx}\) captures a sequence of temperature readings made before the start of winter, and the expression  maps the summer readings to the empty set. The QRE

maps the summer readings to the empty set. The QRE  collects a sequence of temperature readings into a set and so we can write QRE

collects a sequence of temperature readings into a set and so we can write QRE  .

.

The QRE  constructs the set of winter-time temperatures seen in a data stream. The average winter-time temperature over all years is then given by:

constructs the set of winter-time temperatures seen in a data stream. The average winter-time temperature over all years is then given by:  .

.

3 Compiling QREs into Streaming Evaluation Algorithms

In this section, we show how to compile a QRE f into a streaming algorithm \(M_f\) that computes \(\mathbf [\![ f\mathbf ]\!] (w, \overline{v})\). Recall that the partial application \(\mathbf [\![ f\mathbf ]\!] (w) : T_1 \times T_2 \times \cdots \times T_k \rightarrow T\) is a term which maps parameter valuations \(\overline{v}\) to cost values c. Therefore, the complexity of expression evaluation depends on the complexity of performing operations on terms. This in turn depends on the choice of cost types \(\mathcal {T}\), cost operations \(\mathcal {G}\), and on the number of parameters appearing in f. Let \(time_{{\varphi {\text {-}}eval}} \left( f \right) \) be the maximum time needed to evaluate predicates appearing in f on data values, and let \(time_{\tau }\) (resp. \(mem_{\tau }\)) be the maximum time (resp. memory) needed to perform an operation \({op} \in \mathcal {G}\) on terms \(\tau _{1}, \tau _{2}, \ldots , \tau _{k}\). Then we have:

Theorem 2

Every QRE f can be compiled into a streaming algorithm \(M_f\) which processes each data item in time \( poly(|f|)time_{\tau } time_{{\varphi {\text {-}}eval}} (f) \) and which consumes \(poly\left( {| f |} \right) mem_{\tau }\) memory.

Since the size of all intermediate terms produced, \(| \tau |\), is bounded by \( \left| w \right| poly(|f|)\), this is also an upper bound on \(time_{\tau }\) and \(mem_{\tau }\). However, depending on the cost types \(\mathcal {T}\) and the cost operators \(\mathcal {G}\), they might be smaller. For instance, in the case of real-valued terms over \(\mathcal {G}\{ *, + ,\min ,\max ,{\text {ins}},{\text {avg}}\} \), the term simplification procedure of Subsect. 3.2 guarantees that \(time_{\tau } = poly(|f|) \) and \(mem_{\tau } = O(|f|)\).

Theorem 3

-

1.

For every choice of cost types \(\mathcal {T}\), and cost operations, \(\mathcal {G}\), \(time_{\tau } = |w|poly(|f|) \) and \(mem_{\tau } = |w|poly(|f|) \).

-

2.

If \(\mathcal {T}={\mathbb {R}, \mathbb {M}} \), where \(\mathbb {M}\) is the set of multisets of real numbers, and \(\mathcal {G}\{ *, + ,\min ,\max ,\texttt {ins},\texttt {avg}\}\), then \(time_{\tau } = poly(|f|)\) and \(mem_{\tau } = poly(|f|)\), independent of the length of the data stream stream |w|.

3.1 Overview

We construct, by structural induction on the QRE f, an evaluator

\(M_f\) that computes the function \(\mathbf [\![ f\mathbf ]\!] \). Let us first assume that each element \({w_i \in d}\) of the data stream is annotated with its index i, so the input to the streaming evaluator is a sequence of pairs \((i, w_i)\). Now consider the evaluator  when processing the stream w, as shown in Fig. 3.

when processing the stream w, as shown in Fig. 3.  forwards each element of the stream to both sub-evaluators \(M_f\) and \(M_g\). After reading the prefix \(w_{{pre}}\), \(M_f\) reports that \(\mathbf [\![ f\mathbf ]\!] (w_{{pre}})\) is defined, and returns the term produced. We are therefore now interested in evaluating g over the suffix beginning at the current position of the stream. Each evaluator therefore also accepts input signals of the form \(({\textsc {start}}, i)\), which indicates positions of the input stream from which to start processing the function. While evaluating

forwards each element of the stream to both sub-evaluators \(M_f\) and \(M_g\). After reading the prefix \(w_{{pre}}\), \(M_f\) reports that \(\mathbf [\![ f\mathbf ]\!] (w_{{pre}})\) is defined, and returns the term produced. We are therefore now interested in evaluating g over the suffix beginning at the current position of the stream. Each evaluator therefore also accepts input signals of the form \(({\textsc {start}}, i)\), which indicates positions of the input stream from which to start processing the function. While evaluating  , there may be multiple prefixes, such as \(w_{{pre}}\) and \(w_{{pre}}^\prime \), for which \(\mathbf [\![ f\mathbf ]\!] \) is defined. \(M_g\) gets a start signal after each of these prefixes is read, and may therefore be simultaneously evaluating g over multiple suffixes of the data stream. We refer to the suffix beginning at each start signal as a thread of evaluation.

, there may be multiple prefixes, such as \(w_{{pre}}\) and \(w_{{pre}}^\prime \), for which \(\mathbf [\![ f\mathbf ]\!] \) is defined. \(M_g\) gets a start signal after each of these prefixes is read, and may therefore be simultaneously evaluating g over multiple suffixes of the data stream. We refer to the suffix beginning at each start signal as a thread of evaluation.

After reading the data stream, \(M_{g}\) may report a result, i.e. that \(\mathbf [\![ g\mathbf ]\!] \) is defined on some thread. Recall the semantics of the split operator:  is now defined on the entire data stream, and the result is

is now defined on the entire data stream, and the result is  , where \(\tau _f\) and \(\tau _g\) are respectively the results of evaluating \(\mathbf [\![ f\mathbf ]\!] \) and \(\mathbf [\![ g\mathbf ]\!] \) on the appropriate substrings. The compound evaluator therefore needs to “remember” the result \(\tau _f\) reported by \(M_f\) after processing \(w_{{pre}}\)—a key part of the complexity analysis involves bounding the amount of auxiliary state that needs to be maintained. Next, since \(M_g\) may be processing threads simultaneously, it needs to uniquely identify the thread which is currently returning a result. Result signals are therefore triples of the form \(({\textsc {result}}, i, \tau )\), indicating that the thread beginning at index i is currently returning the result \(\tau \). On receiving the result \(\tau _g\) from \(M_g\) at the end of the input stream, the compound evaluator reconciles this with the result \(\tau _f\) earlier obtained from \(T_f\), and itself emits the result

, where \(\tau _f\) and \(\tau _g\) are respectively the results of evaluating \(\mathbf [\![ f\mathbf ]\!] \) and \(\mathbf [\![ g\mathbf ]\!] \) on the appropriate substrings. The compound evaluator therefore needs to “remember” the result \(\tau _f\) reported by \(M_f\) after processing \(w_{{pre}}\)—a key part of the complexity analysis involves bounding the amount of auxiliary state that needs to be maintained. Next, since \(M_g\) may be processing threads simultaneously, it needs to uniquely identify the thread which is currently returning a result. Result signals are therefore triples of the form \(({\textsc {result}}, i, \tau )\), indicating that the thread beginning at index i is currently returning the result \(\tau \). On receiving the result \(\tau _g\) from \(M_g\) at the end of the input stream, the compound evaluator reconciles this with the result \(\tau _f\) earlier obtained from \(T_f\), and itself emits the result  . The response time of each evaluator therefore depends on the time \(time_{\tau }\) required to perform basic operations over terms.

. The response time of each evaluator therefore depends on the time \(time_{\tau }\) required to perform basic operations over terms.

Finally, let us consider the auxiliary state that  needs to maintain during processing. It maintains a table of results \(Th_{g}\) reported by \(M_{f}\) (the subscript g indicates that the thread is currently being processed by \(M_{g}\)). Each time \(M_{f}\) reports a result \(({\textsc {result}}, i, \tau _{f})\), it adds the triple \((i, j, \tau _{f})\) to \(Th_{g}\): this indicates that the thread of

needs to maintain during processing. It maintains a table of results \(Th_{g}\) reported by \(M_{f}\) (the subscript g indicates that the thread is currently being processed by \(M_{g}\)). Each time \(M_{f}\) reports a result \(({\textsc {result}}, i, \tau _{f})\), it adds the triple \((i, j, \tau _{f})\) to \(Th_{g}\): this indicates that the thread of  beginning at index i received the result \(\tau _f\) from \(M_f\) at index j.

beginning at index i received the result \(\tau _f\) from \(M_f\) at index j.  then sends a start signal to \(M_g\). If left unoptimized, the number of entries in \(Th_{g}\) is therefore the number of start signals sent to \(M_g\), which is, in the worst case, \(O(|w |)\).

then sends a start signal to \(M_g\). If left unoptimized, the number of entries in \(Th_{g}\) is therefore the number of start signals sent to \(M_g\), which is, in the worst case, \(O(|w |)\).

The evaluator \(M_g\) therefore emits kill signals of the form \(({\textsc {kill}}, i)\), indicating that the thread beginning at index i will not be producing any more results, and that parent evaluators may recycle auxiliary state as necessary. Note that a kill signal \(({\textsc {kill}}, i)\) is a strong prediction about the fate of a thread: it is the assertion that for all future inputs, \(\mathbf [\![ g\mathbf ]\!] \) is undefined for the suffix of the data stream beginning at index i. Because of the unambiguity requirements on QREs, it follows that each function evaluator \(M_f\) will always have at most \(O(|f|)\) active threads. Such claims can be immediately lifted to upper bounds on the response times and memory consumption of the evaluator, \(poly(|f|) time_{\tau }\) and \(poly(|f|) mem_{\tau }\).

In summary, the evaluator accepts two types of signals: start signals of the form \(({\textsc {start}}, i)\) and data signals of the form \(({\textsc {symbol}}, i, d)\). It emits two types of signals as output: result signals of the form \(({\textsc {result}}, i, \tau )\) and kill signals of the form \(({\textsc {kill}}, i)\). The input fed to the evaluator satisfies the guarantee that no two threads ever simultaneously produce a result (input validity). In return, the evaluator is guaranteed to report results correctly, and eagerly kill threads.

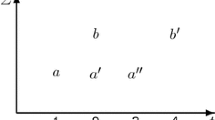

Processing  over an input data stream w. There are two prefixes \(w_{{pre}} = w_1 w_2 \cdots w_i\) and \(w_{{pre}}^\prime = w_1 w_2 \cdots w_j\) of w for which \(\mathbf [\![ f\mathbf ]\!] \) is defined. \(\mathbf [\![ g\mathbf ]\!] \) is defined for the suffix \(w_{{suff}} = w_{i + 1} w_{i + 2} \cdots w_n\).

over an input data stream w. There are two prefixes \(w_{{pre}} = w_1 w_2 \cdots w_i\) and \(w_{{pre}}^\prime = w_1 w_2 \cdots w_j\) of w for which \(\mathbf [\![ f\mathbf ]\!] \) is defined. \(\mathbf [\![ g\mathbf ]\!] \) is defined for the suffix \(w_{{suff}} = w_{i + 1} w_{i + 2} \cdots w_n\).

The input-output requirements of function evaluators are formally stated in the full paper. The construction is similar to the streaming evaluation algorithm for DReX [6]. The major differences are the following:

-

1.

QREs map data streams to terms, while DReX maps input strings to output strings. Even if the top-level QRE is parameter-free, sub-expressions might still involve terms, and intermediate results involve storing terms. We thus pay attention to the computational costs of manipulating terms: this includes the time \(time_{\tau }\) to perform basic operations on them, and the memory \(mem_{\tau }\) needed to store terms.

-

2.

The

combinator, as defined in this paper, is conceptually different from both the iteration and the chained sum combinators of DReX.

combinator, as defined in this paper, is conceptually different from both the iteration and the chained sum combinators of DReX.

3.2 Succinct Representation of Terms

Recall that the evaluation time is parametrized by \(mem_{\tau }\) and \(time_{\tau }\), the maximum memory and time required to perform operations on terms. It is therefore important to be able to succinctly represent terms. To prove the second part of Theorem 3, we now present a simplification procedure for terms over \({\mathcal {G}} = \{ *, + ,min,max,{\texttt {ins}},{\texttt {avg}}\} \) so that \(mem_{\tau }\) and \(time_{\tau }\) are both bounded by poly(|f|), independent of the length of the input stream. The representation we develop must support the following operations:

-

1.

Construct the term \({op}(\tau _1, \tau _2, \ldots , \tau _k)\), for each operator \({op} \in \mathcal {G}\), and appropriately typed terms \(\tau _1\), \(\tau _2\), ..., \(\tau _k\),

-

2.

given terms \(\tau _1\) and \(\tau _2\), and an appropriately typed \(x \in Param{\tau _{1}}\), construct the term

, and

, and -

3.

given a term \(\tau \) and a parameter valuation \(\overline{v}\), compute \(\mathbf [\![ \tau \mathbf ]\!] (\overline{v})\).

Intuitively, the simplification procedure simpl compiles an arbitrary copyless input term \(\tau \) into an equivalent term \(simpl(\tau )\) of bounded size. Consider, for example, a “large” term such as \(\tau (x) = \min (\min (x, 3) + 2, 9)\). By routine algebraic laws such as distributivity, we have \(\tau (x) = \min (\min (x + 2, 3 + 2), 9) = \min (x + 2, 5, 9) = \min (x + 2, 5)\).

Proposition 4

For each input \(\tau \), simpl runs in time \(O(|\tau |^2)\) and returns an equivalent term of bounded size: \(simpl(\tau )\) is equivalent to \(\tau \), and \( |simpl(\tau )| = O(|Param(\tau )|)\).

We apply simpl to every intermediate term produced by the streaming algorithm. It follows that \(mem_{\tau } = O(|X_f|) = O(|f|)\), where \(X_f\) is the set of parameters appearing in the description of f, and \( time_{\tau } = O(poly(|X_{f} |)) = O(poly(|f|)) \), and this establishes Theorem 3.

A first attempt at designing simpl might be to repeatedly apply algebraic laws until terms reach a normal form. For example, the term \(\min (x + 2, 5) + \min (y + 8, 7)\) could be mechanically simplified into the equivalent term \(\min (12, x + 9, y + 13, x + y + 10)\). Notice however, that this simplification potentially involves a constant for each subset of parameters, thus producing terms of size \(O(2^{|f|})\) (but still independent of the stream length |w|).

The simplification routine instead only propagates constants and does not attempt to completely reduce the term to a normal form. The term \(\min (x + 2, 5) + \min (y + 8, 7)\) would therefore be left unchanged.

Consider the set of elements \(A = \{ 3, 4, x, y + 3 \}\). The only operation over multisets is insertion and average. A is therefore represented by the pair \((x + y + 10, 4)\), indicating the sum of the elements in A and the number of elements in A respectively. The average \({\texttt {avg}}(A)\) would be represented by the term \((x + y + 10) / 4 = x / 4 + y / 4 + 2.5\). The simplified term may therefore also contain division terms of the form x / n and is drawn from the following grammar: The procedure \(simpl(\tau )\) works as follows:

-

1.

If \(\tau \) is a constant c or a parameter x, then return \(\tau \).

-

2.

Otherwise, if \(\tau = {op}(\tau _1, \tau _2)\), then compute \(\tau _{1}^{\prime } = simpl(\tau _{1} )\) and \(\tau _{2}^{\prime } = simpl(\tau _{2} )\). Output \(prop{\text {-}}const(op(\tau _{1}^{\prime }, \tau _{2}^{\prime }))\).

The procedure \(prop{\text {-}}const(\tau )\) performs constant propagation, and is essentially a large case-analysis with various pre-applied algebraic simplification laws. For example,

The full case-analysis is described in the full paper.

The key observation is that such constant propagation is sufficient to guarantee small terms: if all non-trivial sub-terms contain at least one parameter, and each parameter appears at most once in each term, then there are at most a bounded number of constants in the simplified term, and thus a bounded number of leaves in the term-tree, and the term is therefore itself of a bounded size. Proposition 4 follows.

3.3 Why the Unambiguity and Single-Use Restrictions?

We first consider the unambiguity rules we used while defining QREs. While defining \({op}(f_1, f_2)\), we require the sub-expressions to have equal domains. Otherwise, the compound expression is only defined for data streams in the intersection of \(Dom(f_{1})\) and \(Dom(f_{2})\). Efficiently determining whether strings match regular expressions with intersection is an open problem (see [32] for the state of the art).

Now consider  without the unambiguous concatenability requirement. For a stream w with two splits \(w = w_1 w_2 = w_1^\prime w_2^\prime \), such that all of \(\mathbf [\![ f\mathbf ]\!] (w_1)\), \(\mathbf [\![ f\mathbf ]\!] (w_1^\prime )\), \(\mathbf [\![ g\mathbf ]\!] (w_2)\) and \(\mathbf [\![ g\mathbf ]\!] (w_2^\prime )\) are defined. Since we are defining functions and not relations, the natural choice is to leave

without the unambiguous concatenability requirement. For a stream w with two splits \(w = w_1 w_2 = w_1^\prime w_2^\prime \), such that all of \(\mathbf [\![ f\mathbf ]\!] (w_1)\), \(\mathbf [\![ f\mathbf ]\!] (w_1^\prime )\), \(\mathbf [\![ g\mathbf ]\!] (w_2)\) and \(\mathbf [\![ g\mathbf ]\!] (w_2^\prime )\) are defined. Since we are defining functions and not relations, the natural choice is to leave  undefined for w. In the compound evaluator, we can no longer assume input validity, as two threads of \(T_g\) will report a result after reading w. Furthermore, the compound evaluator has to perform non-trivial bookkeeping and not report any result after reading w. Requiring unambiguous concatenability is a convenient way to avoid these issues.

undefined for w. In the compound evaluator, we can no longer assume input validity, as two threads of \(T_g\) will report a result after reading w. Furthermore, the compound evaluator has to perform non-trivial bookkeeping and not report any result after reading w. Requiring unambiguous concatenability is a convenient way to avoid these issues.

We conjecture that non-regular functions are expressible if the single-use restrictions are relaxed. Furthermore, lifting the single-use restrictions makes the term simplification procedure of Subsect. 3.2 more complicated. Consider the “copyful” term \({\tau } = \min ((x + y), (x + y) + z)\). Observe that terms are now best represented as DAGs, and consider applying constant propagation to the expression \(\tau + 3\): this results in the term \(\min (x + (y + 3), (x + y) + z + 3)\), thus causing the shared node \(x + y\) of the term DAG to be split. It is not clear that the constant propagation procedure \(prop{\text {-}}const\) does not cause a large blow-up in the size of the term being represented, because of reduced sharing.

4 The Expressiveness of Quantitative Regular Expressions

We now study the expressive power of QREs. The recently introduced formalism of regular functions [7] is parametrized by an arbitrary set of cost types and operations over cost values. Regular functions can be equivalently expressed both by the operational model of streaming string-to-term transducers (SSTTs), and as logical formulas mapping strings to terms in monadic second-order (MSO) logic. In this section, we show that QREs are expressively equivalent to the streaming composition of regular functions. This mirrors similar results from classical language theory, where regular languages can be alternately expressed by finite automata, by regular expressions and as formulas in MSO.

The bank gives the customer a \(\$10\) reward for each month in which no withdrawal is made. The current account balance is computed by the SSTT \({\mathcal {S}}_{{bal}}\). The machine maintains a single register bal, the state \(q_0\) indicates that no withdrawal has been made in the current month, and the machine moves to \(q_1\) if at least one withdrawal has been made.

See Fig. 4 for an example of an SSTT. Informally, an SSTT maintains a finite state control, and a finite set of typed registers. Each register holds a term \(\tau \) of the appropriate type, and register contents are updated during each transition. The main restrictions are: (a) transitions depend only on the current state, and not on the contents of the registers, (b) register updates are copyless: the update \(x := x + y\) is allowed, but not the update \(x := y + y\), and (c) at each point, the term held by each register is itself single-use.

We first describe the translation from QREs to the streaming composition of SSTTs. The construction proceeds in two steps: we first rewrite the given QRE as a streaming composition of composition-free QREs (Subsect. 4.1), and then we translate each composition-free QRE into an equivalent SSTT (Subsect. 4.2). In Subsect. 4.3, we describe the proof of expressive completeness: each SSTT can also be expressed by an equivalent QRE. For SSTTs with multiple registers, data may flow between the registers in complicated ways. The main part of the SSTT-to-QRE translation procedure is analysis of these data flows, and the imposition of a partial order among data flows so that an inductive construction may be performed. Definitions and omitted proofs may be found in the full paper.

4.1 A Normal Form for QREs

Our main goal is to convert each QRE into the streaming composition of SSTTs. We first rewrite the given QRE as the streaming composition of several QREs, each of which is itself composition-free (Theorem 5). We use the term \(\text {QRE}_{\not \gg }\) to highlight that the QRE does not include occurrences of the streaming composition operator. In Subsect. 4.2 we translate each of these intermediate \(\text {QRE}_{\not \gg }\)-s into a single SSTT.

Theorem 5

For each QRE e, there exists a k and \(\text {QRE}_{\not \gg }\)-s \(f_1, f_2, \ldots , f_k\), such that e is equivalent to  .

.

The proof is by induction on the structure of e. The claim clearly holds if e is a basic expression or itself of the form  . We now handle each of the other cases in turn.

. We now handle each of the other cases in turn.

Consider the expression  . The idea is to produce, after reading each element \(w_i\) of the input stream w, the pair \({int}_i = (\mathbf [\![ f\mathbf ]\!] (w_1, w_2, \ldots , w_i), w_i)\). We can now apply g to the first element and h to the second element of each pair in the stream of intermediate values \({int} = {int}_1, {int}_2, \ldots \), and use the cost operator op to produce the final result.

. The idea is to produce, after reading each element \(w_i\) of the input stream w, the pair \({int}_i = (\mathbf [\![ f\mathbf ]\!] (w_1, w_2, \ldots , w_i), w_i)\). We can now apply g to the first element and h to the second element of each pair in the stream of intermediate values \({int} = {int}_1, {int}_2, \ldots \), and use the cost operator op to produce the final result.

We are therefore interested in the operator  , which produces the output \((t_1, t_2) \in T_1 \times T_2\) for each pair of input values \(t_1 \in T_2\) and \(t_2 \in T_2\). Observe that g cannot be directly applied to elements of the intermediate data stream, because they are pairs, and only the first element of this pair is of interest to g. Instead, we write the expression

, which produces the output \((t_1, t_2) \in T_1 \times T_2\) for each pair of input values \(t_1 \in T_2\) and \(t_2 \in T_2\). Observe that g cannot be directly applied to elements of the intermediate data stream, because they are pairs, and only the first element of this pair is of interest to g. Instead, we write the expression  , which is identical to g except that each data predicate \(\varphi (d)\) is replaced with \(\varphi (d.{first})\), and each atomic function \(\lambda (d)\) is replaced with \(\lambda (d.{first})\). Similarly,

, which is identical to g except that each data predicate \(\varphi (d)\) is replaced with \(\varphi (d.{first})\), and each atomic function \(\lambda (d)\) is replaced with \(\lambda (d.{first})\). Similarly,  is identical to h except that it looks at the second element in each input data pair.

is identical to h except that it looks at the second element in each input data pair.

Now, observe that the original expression  is equivalent to the expression

is equivalent to the expression  , where the expression last simply outputs the last element of the input stream:

, where the expression last simply outputs the last element of the input stream:  . The remaining cases are presented in the full paper.

. The remaining cases are presented in the full paper.

4.2 From QREs to SSTTs

Theorem 6

Let f be a \(\text {QRE}_{\not \gg }\) over a data domain D. There exists an SSTT \({\mathcal {S}}_f\) such that \(\mathbf [\![ f\mathbf ]\!] = \mathbf [\![ {\mathcal {S}}_f\mathbf ]\!] \).

The proof proceeds by structural induction on f. The challenging cases are those of  and

and  . Consider the case

. Consider the case  . Just as in Fig. 3, it is not possible to determine, before seeing the entire stream w, where to split the stream into \(w = w_{{pre}} w_{{suff}}\) such that both \(\mathbf [\![ g\mathbf ]\!] (w_{{pre}})\) and \(\mathbf [\![ g\mathbf ]\!] (w_{{suff}})\) are defined. However, it is known that SSTTs are closed under regular lookahead, a powerful primitive operation by which the automaton can make a transition not just based on the next input symbol, but based on a regular property of the entire (as yet unseen) suffix. The detailed proof of Theorem 6, including a formal definition of regular lookahead, may be found in the full paper.

. Just as in Fig. 3, it is not possible to determine, before seeing the entire stream w, where to split the stream into \(w = w_{{pre}} w_{{suff}}\) such that both \(\mathbf [\![ g\mathbf ]\!] (w_{{pre}})\) and \(\mathbf [\![ g\mathbf ]\!] (w_{{suff}})\) are defined. However, it is known that SSTTs are closed under regular lookahead, a powerful primitive operation by which the automaton can make a transition not just based on the next input symbol, but based on a regular property of the entire (as yet unseen) suffix. The detailed proof of Theorem 6, including a formal definition of regular lookahead, may be found in the full paper.

4.3 From SSTTs to QREs

Theorem 7

Let \({\mathcal {S}}\) be an SSTT. There exists a \(\text {QRE}_{\not \gg }\) f such that \(\mathbf [\![ {\mathcal {S}}\mathbf ]\!] = \mathbf [\![ f\mathbf ]\!] \).

Proof Outline. The proof follows the idea in [33] for constructing a regular expression from a given DFA. Suppose the DFA has n states \(\{ q_1, q_2, \ldots , q_n \}\). Let \(R_{i,j}^{k}\) denote the set of input strings w that take the DFA from state \(q_i\) to state \(q_j\) without going through any intermediate state numbered higher than k (the origin and target states i and j can be of index greater than k).

The regular expressions corresponding to the sets \(R_{i, j}^k\) can be defined inductively. The base case when \(k=0\) corresponds to a single transition. For the inductive definition we have \(R_{i, j}^k = R_{i, k}^{k - 1} (R_{k, k}^{k-1})^{*} R_{k, j}^{k - 1} \mathbin {\cup }R_{i, j}^{k - 1}\). Given the accepting states are \(F=\{f_1,f_2,\ldots ,f_\ell \}\) and the initial state is 1 then the regular expression \(R_{1,f_1}^n + R_{1,f_2}^n + \ldots + R_{1,f_\ell }^n\) recognizes the same language as the given DFA.

We note that this construction works with unambiguous regular expressions. That is, whenever R and \(R^\prime \) above are combined using \(\cup \) their domain is disjoint, when they are concatenated they are unambiguously concatenable, and when R is iterated it is unambiguously iterable.

Generalizing the Idea to SSTTs. Let \(\mathcal {R}\) be the set of regular expressions used in the construction above. To extend this idea to SSTTs we would like to create for every \(R \in \mathcal {R}\) and every variable x of a given SSTT \({\mathcal {S}}\) a quantitative regular expression \(f_{[x, R]}\) such that for \(w \in R\) the value of variable x when processing of w by \({\mathcal {S}}\) terminates is given by \(f_{[x, R]}(w)\). If the SSTT has m variables \(\{ x_1, \ldots , x_m \}\) we can work with vectors of QREs \(\mathbf {V}_R = (f_{[1, R]}, \ldots , f_{[m, R]})\) where \(f_{[i, R]}\) abbreviates \(f_{[x_i, R]}\). In order to use the same inductive steps for building these vectors of QREs, given \(\mathbf {V}_{R}\) and \(\mathbf {V}_{R^\prime }\) we need to be able to calculate \(\mathbf {V}_{R \cdot R^\prime }\), \(\mathbf {V}_{R^{*}}\) and \(\mathbf {V}_{R \mathbin {\cup }R^\prime }\) whenever R and \(R^\prime \) are combined in a respective manner in the construction above. The major difficulty is calculating \(\mathbf {V}_{R^{*}}\). This is since variables may flow to one another in complicated forms. For instance, on a transition where variable x is updated to \(\max (x, y)\) and variable y is updated to \(z + 1\), the QRE for iterating this transition, zero, one or two times looks very different. Fortunately, there is a finite number of such variations, i.e. we can define terms that should capture each variation so that repeating the loop more than m times will yield a previously encountered term. The situation, though, is more complicated since there may be several loops on the same state, and in each such loop the variables flow may be different, and we will need to account for all loops consisting of arbitrary long concatenations of these paths. Thus, we will consider regular expressions of the form \(R{[i, j, k, \theta ]}\) where \(\theta \) describes the flow of variables as defined below, and we will need to find some partial order on such regular expressions so that we can always compute \(\mathbf {V}_{R^{\prime \prime }}\) using previously computed \(\mathbf {V}_{R}\) and \(\mathbf {V}_{R^\prime }\), and whenever these are combined they do not violate the unambiguity requirements.

Variable Flows. The variable-flow (in short flow) of a stream \({w \in R_{i, j}^k}\) is a function \({\theta :[1..m] \rightarrow [1..m]}\) such that \(\theta (i) = j\) if \(x_j\) depends on \(x_i\). By the copyless restriction there is at most one variable \(x_j\) which may depend on \(x_i\) and we assume without loss of generality that every variable flows into some other variable. We use \(\varTheta \) to denote the set of all flows. For \(\theta _1, \theta _2 \in \varTheta \) we use \(\theta _1 \cdot \theta _2\) (or simply \(\theta _1 \theta _2\)) to denote the flow function \(\theta (i) = \theta _2(\theta _1(i))\). Note that if \(w = w_1 w_2\) and \(w_1, w_2\) have flows \(\theta _1, \theta _2\) respectively, then w has flow \(\theta _1 \theta _2\). We use \(\theta ^0\) to denote the identity flow, \(\theta ^1\) to denote \(\theta \) and for \(k > 1\) we use \(\theta ^k\) to denote \(\theta \cdot \theta ^{k - 1}\). We say that a flow \(\theta \) is idempotent if \(\theta ^k = \theta \) for every \(k \in {\mathbb {N}}\). We use \(\varTheta _I\) to denote the set of all idempotent flows. We say that a flow \(\theta \) is normal if \(\theta (i) = j\) implies \(i \ge j\) (i.e. the variables flow only upwards). Given an SSTT \({\mathcal {S}}\) with set of states Q we can transform it to an SSTT \({\mathcal {S}}_N\) where all updates on the edges are normal by letting the states of \({\mathcal {S}}_N\) be \(Q \times P_n\) where \(P_n\) are all the permutations of [1..n]. The SSTT \({\mathcal {S}}_N\) remembers in the state the permutation needed to convert the flow to that of the original \({\mathcal {S}}\). Let \(\varTheta _N\) be the set of normal flows. It can be shown that concatenations of normal flows is a normal flow. Thus, we consider henceforth only normal flows.

For \(\theta \in \varTheta _N\) and \(i, j, k \in [1..n]\), let \(R{[i, j, k, \theta ]}\) denote the set of all streams w such that when \({\mathcal {S}}\) processes w starting in state i, it reaches state j without passing through any state indexed greater than k and the overall flow is \(\theta \). We use \(\mathcal {R}^\varTheta \) to denote the set of all such regular expressions. We are now ready to define the vectors \(\mathbf {V}_R\) corresponding to \(R \in \mathcal {R}^\varTheta \) for R’s that appear in the inductive construction.

5 Related Work

Data Management Systems. Traditional database management systems focus on efficient processing of queries over static data. When data is updated frequently, and queries need to be answered incrementally with each update, ideally without reprocessing the entire data set (due to its large size), the resulting data management problem of continuous queries has been studied in the database literature (see [9] for a survey, [1] for an example system, and [8] for CQL, an extension of the standard relational query language SQL for continuous queries). The literature on continuous queries assumes a more general data model compared to ours: there can be multiple data streams that encode relational data and queries involve, in addition to aggregation statistics, classical relational operators such as join. Solutions involve maintaining a window of the stream in memory and answer queries only approximately. A recent project on continuous queries is ActiveSheets [35] that integrates stream processing in spreadsheets and provides many high-level features aimed at helping end-users. In recent years, there is also increased focus on evaluating queries over data streams in a distributed manner, and systems such as Apache Storm (see http://storm.apache.org) and Twitter Heron [27] facilitate the design of distributed algorithms for query evaluation. There is also extensive literature on querying XML data using languages such as XPath and XQuery and their extensions [15, 26, 28].