Abstract

Most of applied statistics involves regression analysis of data. In practice, it is important to specify a regression model that has minimal assumptions which are not violated by data, to ensure that statistical inferences from the model are informative and not misleading. This paper presents a stand-alone and menu-driven software package, Bayesian Regression: Nonparametric and Parametric Models, constructed from MATLAB Compiler. Currently, this package gives the user a choice from 83 Bayesian models for data analysis. They include 47 Bayesian nonparametric (BNP) infinite-mixture regression models; 5 BNP infinite-mixture models for density estimation; and 31 normal random effects models (HLMs), including normal linear models. Each of the 78 regression models handles either a continuous, binary, or ordinal dependent variable, and can handle multi-level (grouped) data. All 83 Bayesian models can handle the analysis of weighted observations (e.g., for meta-analysis), and the analysis of left-censored, right-censored, and/or interval-censored data. Each BNP infinite-mixture model has a mixture distribution assigned one of various BNP prior distributions, including priors defined by either the Dirichlet process, Pitman-Yor process (including the normalized stable process), beta (two-parameter) process, normalized inverse-Gaussian process, geometric weights prior, dependent Dirichlet process, or the dependent infinite-probits prior. The software user can mouse-click to select a Bayesian model and perform data analysis via Markov chain Monte Carlo (MCMC) sampling. After the sampling completes, the software automatically opens text output that reports MCMC-based estimates of the model’s posterior distribution and model predictive fit to the data. Additional text and/or graphical output can be generated by mouse-clicking other menu options. This includes output of MCMC convergence analyses, and estimates of the model’s posterior predictive distribution, for selected functionals and values of covariates. The software is illustrated through the BNP regression analysis of real data.

Similar content being viewed by others

Introduction

Regression modeling is ubiquitous in empirical areas of scientific research, because most research questions can be asked in terms of how a dependent variable changes as a function of one or more covariates (predictors). Applications of regression modeling involve either prediction analysis (e.g., Dension et al., 2002; Hastie et al. 2009), categorical data analysis (e.g., Agresti, 2002), causal analysis (e.g., Imbens, 2004; Imbens & Lemieux, 2008; Stuart, 2010), meta-analysis (e.g., Cooper, et al. 2009), survival analysis of censored data (e.g., Klein & Moeschberger, 2010), spatial data analysis (e.g., Gelfand, et al., 2010), time-series analysis (e.g., Prado & West, 2010), item response theory (IRT) analysis (e.g., van der Linden, 2015), and/or other types of regression analyses.

These applications often involve either the normal random-effects (multi-level) linear regression model (e.g., hierarchical linear model; HLM). This general model assumes that the mean of the dependent variable changes linearly as a function of each covariate; the distribution of the regression errors follows a zero-mean symmetric continuous (e.g., normal) distribution; and the random regression coefficients are normally distributed over pre-defined groups, according to a normal (random-effects) mixture distribution. Under the ordinary linear model, this mixture distribution has variance zero. For a discrete dependent variable, all of the previous assumptions apply for the underlying (continuous-valued) latent dependent variable. For example, a logit model (probit model, resp.) for a binary-valued (0 or 1) dependent variable implies a linear model for the underlying latent dependent variable, with error distribution assumed to follow a logistic distribution with mean 0 and scale 1 (normal distribution with mean 0 and variance 1, resp.) (e.g., Dension et al., 2002).

If data violate any of these linear model assumptions, then the estimates of regression coefficient parameters can be misleading. As a result, much research has devoted to the development of more flexible, Bayesian nonparametric (BNP) regression models. Each of these models can provide a more robust, reliable, and rich approach to statistical inference, especially in common settings where the normal linear model assumptions are violated. Excellent reviews of BNP models are given elsewhere (e.g., Walker, et al., 1999; Ghosh & Ramamoorthi, 2003; Müller & Quintana, 2004; Hjort, et al., 2010; Mitra & Müller, 2015).

A BNP model is a highly-flexible model for data, defined by an infinite (or a very large finite) number of parameters, with parameter space assigned a prior distribution with large supports (Müller and Quintana 2004). Typical BNP models have an infinite-dimensional, functional parameter, such as a distribution function. According to Bayes’ theorem, a set of data updates the prior to a posterior distribution, which conveys the plausible values of the model parameters given the data and the chosen prior. Typically in practice, Markov chain Monte Carlo (MCMC) sampling methods (e.g., Brooks et al., 2011) are used to estimate the posterior distribution (and chosen functionals) of the model parameters.

Among the many BNP models that are available, the most popular models in practice are infinite-mixture models, each having mixture distribution assigned a (BNP) prior distribution on the entire space of probability measures (distribution functions). BNP infinite-mixture models are popular in practice, because they can provide a flexible and robust regression analysis of data, and provide posterior-based clustering of subjects into distinct homogeneous groups, where each subject cluster group is defined by a common value of the (mixed) random model parameter(s). A standard BNP model is defined by the Dirichlet process (infinite-) mixture (DPM) model (Lo 1984), with mixture distribution assigned a Dirichlet process (DP) (Ferguson 1973) prior distribution on the space of probability measures. Also, often in practice, a BNP model is specified as an infinite-mixture of normal distributions. This is motivated by the well-known fact that any smooth probability density (distribution) of any shape and location can be approximated arbitrarily-well by a mixture of normal distributions, provided that the mixture has a suitable number of mixture components, mixture weights, and component parameters (mean and variance).

A flexible BNP infinite-mixture model need not be a DPM model, but may instead have a mixture distribution that is assigned another BNP prior, defined either by a more general stick-breaking process (Ishwaran and James 2001; Pitman 1996), such as the Pitman-Yor (or Poisson-Dirichlet) process (Pitman 1996; Pitman and Yor 1997), the normalized stable process (Kingman 1975), the beta two-parameter process (Ishwaran and Zarepour 2000); or a process with more restrictive, geometric mixture weights (Fuentes-García, et al., 2009, 2010); or defined by the normalized inverse-Gaussian process (Lijoi et al. 2005), a general type of normalized random measure (Regazzini et al. 2003).

A more general BNP infinite-mixture model can be constructed by assigning its mixture distribution a covariate-dependent BNP prior. Such a BNP mixture model allows the entire dependent variable distribution to change flexibly as a function of covariate(s). The Dependent Dirichlet process (DDP; MacEachern, 1999, 2000, 2001) is a seminal covariate-dependent BNP prior. On the other hand, the infinite-probits prior is defined by a dependent normalized random measure, constructed by an infinite number of covariate-dependent mixture weights, with weights specified by an ordinal probits regression with prior distribution assigned to the regression coefficient and error variance parameters (Karabatsos and Walker 2012a).

The applicability of BNP models, for data analysis, depends on the availability of user-friendly software. This is because BNP models typically admit complex representations, which may not be immediately accessible to non-experts or beginners in BNP. Currently there are a few nice command-driven R software packages for BNP mixture modeling. The DPpackage (Jara et al. 2011) of R (the R Development Core Team, 2015) includes many BNP models, mostly DPM models, that provide either flexible regression or density estimation for data analysis. The package also provides BNP models having parameters assigned a flexible mixture of finite Pólya Trees BNP prior (Hanson 2006). The bspmma R package (Burr 2012) provides DPM normal-mixture models for meta-analysis. Newer packages have recently arrived to the scene. They include the BNPdensity R package (Barrios et al. 2015), which provides flexible mixture models for nonparametric density estimation via more general normalized random measures, with mixture distribution assigned a BNP prior defined by either a normalized stable, inverse-Gaussian, and generalized gamma process. They also include the PReMiuM R package (Liverani et al. 2015) for flexible regression modeling via DPM mixtures and clustering.

The existing packages for BNP modeling, while impressive, still suggest room for improvements, as summarized by the following points.

-

1.

While the existing BNP packages provide many DPM models, they do not provide a BNP infinite-mixture model with mixture distribution assigned any one of the other important BNP priors mentioned earlier. Priors include those defined by the Pitman-Yor, normalized stable, beta, normalized inverse-Gaussian process; or defined by a geometric weights or infinite-probits prior. As exceptions, the DPpackage provides a Pitman-Yor process mixture of regressions model for interval-censored data (Jara et al. 2010); whereas the BNPdensity package provides models defined by more general normalized random measures, but only for density estimation and not for regression.

-

2.

The bspmma R package (Burr 2012), for meta-analysis, is limited to DPM models that do not incorporate covariate dependence (Burr and Doss 2005).

-

3.

The DPpackage handles interval-censored data, but does not handle left- or right-censored data.

-

4.

While both BNP packages use MCMC sampling algorithms to estimate the posterior distribution of the user-chosen model, each package does not provide options for MCMC convergence analysis (e.g., Flegal & Jones, 2011). A BNP package that provides its own menu options for MCMC convergence analysis would be, for the user, faster and more convenient, and would not require learning a new package (e.g., CODA R package; Plummer et al., 2006) to conduct MCMC convergence analyses.

-

5.

Both BNP packages do not provide many options to investigate how the posterior predictive distribution (and chosen functionals) of the dependent variable, varies as a function of one or more covariates.

-

6.

Generally speaking, command-driven software can be unfriendly, confusing, and time-consuming to beginners and to experts. This includes well-known packages for parametric Bayesian analysis including BUGS and OpenBUGS (Thomas 1994; Lunn et al. 2009), JAGS (2015), STAN (2015), NIMBLE (2015), and BIPS (2015).

In this paper, we introduce a stand-alone and user-friendly software package for BNP modeling, which the author constructed using MATLAB Compiler (Natick, MA). This package, named: Bayesian Regression: Nonparametric and Parametric Models (Karabatsos 2016), provides BNP data analysis in a fully menu-driven software environment that resembles SPSS (I.B.M., 2015).

The software allows the user to mouse-click menu options:

-

1.

To inspect, describe, and explore the variables of the data set, via basic descriptive statistics (e.g., means, standard deviations, quantiles/percentiles) and graphs (e.g., scatter plots, box plots, normal Q-Q plots, kernel density plots, etc.);

-

2.

To pre-process the data of the dependent variable and/or the covariate(s) before including the variable(s) into the BNP regression model for data analysis. Examples of data pre-processing include constructing new dummy indicator (0 or 1) variables and/or two-way interaction variables from the covariates (variables), along with other options to transform variables; and performing a nearest-neighbor hot-deck imputation (Andridge and Little 2010) of missing data values in the variables (e.g., covariate(s)).

-

3.

To use list and input dialogs to select, in the following order: the Bayesian model for data analysis; the dependent variable; covariate(s) (if a regression model was selected); parameters of the prior distribution of the model; the (level-2 and possibly level-3) grouping variables (for a multilevel model, if selected); the observation weights variable (if necessary; e.g., to set up a meta-analysis); and the variables describing the nature of the censored dependent variable observations (if necessary; e.g., to set up a survival analysis). The observations can either be left-censored, right-censored, interval-censored, or uncensored. Also, if so desired, the user can easily use point-and-click to quickly highlight and select a large list of covariates for the model, whereas command-driven software requires the user to carefully type (or copy and paste) and correctly-verify the long list of the covariates.

After the user makes these selections, the Bayesian Regression software immediately presents a graphic of the user-chosen Bayesian model in the middle of the computer screen, along with all of the variables that were selected for this model (e.g., dependent variables, covariate(s); see #3 above). The explicit presentation of the model is important because BNP models typically admit complex representations. In contrast, the command-driven packages do not provide immediate on-screen presentations of the BNP model selected by the user.

Then the software user can click a button to run the MCMC sampling algorithm for the menu-selected Bayesian model. The user clicks this button after entering a number of MCMC sampling iterations. Immediately after all the MCMC sampling iterations have completed, the software automatically opens a text output file that summarizes the basic results of the data analysis (derived from the generated MCMC samples). Results include point-estimates of the (marginal) posterior distributions of the model’s parameters, and summaries of the model’s predictive fit to the data. Then, the user can click other menu options to produce graphical output of the results. They include density plots, box plots, scatter plots, trace plots, and various plots of (marginal) posterior distributions of model parameters and fit statistics. For each available BNP infinite-mixture model, the software implements standard slice sampling MCMC methods (Kalli et al. 2011) that are suitable for making inferences of the posterior distribution (and chosen functionals) of model parameters.

Next, after a few mouse-clicks of appropriate menu options, the user can perform a detailed MCMC convergence analysis. This analysis evaluates whether a sufficiently-large number of MCMC samples (sampling iterations of the MCMC algorithm) has been generated, in order to warrant the conclusion that these samples have converged to samples from the posterior distribution (and chosen functionals) of the model parameters. More details about how to use the software to perform MCMC convergence analysis is provided in “ANOVA-linear DDP model” and 5.2.

The software also provides menu options to investigate how the posterior predictive distribution (and functionals) of the dependent variable changes as a function of covariates. Functionals of the posterior predictive distribution include: the mean, median, and quantiles to provide a quantile regression analysis; the variance functional to prove a variance regression analysis; the probability density function (p.d.f.) and the cumulative distribution function (c.d.f.) to provide a density regression analysis; and the survival function, hazard function, and the cumulative hazard function, for survival analysis. The software also provides posterior predictive inferences for BNP infinite-mixture models that do not incorporate covariates and only focus on density estimation.

Currently, the Bayesian Regression software provides the user a choice from 83 Bayesian models for data analysis. Models include 47 BNP infinite-mixture regression models, 31 normal linear models for comparative purposes, and 5 BNP infinite normal mixture models for density estimation. Most of the infinite-mixture models are defined by normal mixtures.

The 47 BNP infinite-mixture regression models can each handle a dependent variable that is either continuous-valued, binary-valued (0 or 1), or ordinal valued (c=0,1,…,m), using either a probit or logit version of this model for a discrete dependent variable; with mixture distribution assigned a prior distribution defined either by the Dirichlet process, Pitman-Yor process (including the normalized stable process), beta (2-parameter) process, geometric weights prior, normalized inverse-Gaussian process, or an infinite-probits regression prior; and with mixing done on either the intercept parameter, or on the intercept and slope coefficient parameters, and possibly on the error variance parameter. Specifically, the regression models with mixture distribution assigned a Dirichlet process prior are equivalent to ANOVA/linear DDP models, defined by an infinite-mixture of normal distributions, with a covariate-dependent mixture distribution defined by independent weights (DeIorio et al. 2004; Müller et al. 2005). Similarly, the models with mixture distribution, instead, assigned a different BNP prior distribution (process) mentioned above, implies a covariate-dependent version of that process. See “ANOVA-linear DDP model” for more details. Also, some of the infinite-mixture regression models, with covariate-dependent mixture distribution assigned a infinite-probits prior, have spike-and-slab priors assigned to the coefficients of this BNP prior, based on stochastic search variable selection (SSVS) (George & McCulloch, 1993, 1997). In addition, the 5 BNP infinite normal mixture models, for density estimation, include those with mixture distribution assigned a BNP prior distribution that is defined by either one of the 5 BNP process mentioned above (excluding infinite-probits).

Finally, the 31 Bayesian linear models of the Bayesian Regression software include ordinary linear models, 2-level, and 3-level normal random-effects (or HLM) models, for a continuous dependent variable; probit and logit versions of these linear models for either a binary (0 or 1) or ordinal (c=0,1,…,m) dependent variable; and with mixture distribution specified for the intercept parameter, or for the intercept and slope coefficient parameters.

The outline for the rest of the paper is as follows. “Overview of Bayesian inference” reviews the Bayesian inference framework. Appendix A reviews the basic probability theory notation and concepts that we use. In “Key BNP regression models”, we define two key BNP infinite-mixture regression models, each with mixture distribution assigned a BNP prior distribution on the space of probability measures. The other 50 BNP infinite-mixture models of the Bayesian Regression software are extensions of these two key models, and in that section we give an overview of the various BNP priors mentioned earlier. In that section we also describe the Bayesian normal linear model, and a Bayesian normal random-effects linear model (HLM). “Using the Bayesian regression software” gives step-by-step software instructions on how to perform data analysis using a menu-chosen, Bayesian model. “Real data example” illustrates the Bayesian Regression software through the analysis of a real data set, using each of the two key BNP models, and a Bayesian linear model. Appendix B provides a list of exercises that the software user can work through in order to practice BNP modeling on several example data sets, available from the software. These data-analysis exercises address applied problems in prediction analysis, categorical data analysis, causal analysis, meta-analysis, survival analysis of censored data, spatial data analysis, time-series analysis, and item response theory analysis. The last section ends with conclusions.

Overview of Bayesian inference

In a given research setting where it is of interest to apply a regression data analysis, a sample data set is of the form \(\mathcal {D}_{n}=\{(y_{i}, \mathbf {x}_{i})\}_{i=1}^{n}\). Here, n is the sample size of the observations, respectively indexed by i=1,…,n, where y i is the ith observation of the dependent variable Y i , corresponding to an observed vector of p observed covariatesFootnote 1 \(\mathbf {x}_{i}=(1,x_{i1},{\ldots } ,x_{ip})^{\intercal }\). A constant (1) Term is included in x for future notational convenience.

A regression model assumes a specific form for the probability density (or p.m.f.) function f(y | x;ζ), conditionally on covariates x and model parameters denoted by a vector, ζ∈Ω ζ , where Ω ζ ={ζ} is the parameter space. For any given model parameter value ζ∈Ω ζ , the density f(y i | x i ;ζ) is the likelihood of y i given x i , and \(L(\mathcal {D}_{n}\,;\, \boldsymbol {\zeta })={\prod }_{i=1}^{n}f(y_{i}\,|\,\mathbf {x}_{i}; \boldsymbol {\zeta })\) is the likelihood of the full data set \(\mathcal {D}_{n} \) under the model. A Bayesian regression model is completed by the specification of a prior distribution (c.d.f.) π(ζ) over the parameter space Ω ζ , and π(ζ) gives the corresponding probability density of a given parameter ζ∈Ω ζ .

According to Bayes’ theorem, after observing the data \(\mathcal {D}_{n}=\{(y_{i},\mathbf {x}_{i})\}_{i=1}^{n}\), the plausible values of the model parameter ζ is given by the posterior distribution. This distribution defines the posterior probability density of a given parameter ζ∈Ω ζ by:

Conditionally on a chosen value of the covariates \(\mathbf {x} =(1,x_{1},{\ldots } ,x_{p})^{\intercal }\), the posterior predictive density of a future observation y n+1, and the corresponding posterior predictive c.d.f. (F(y | x)), mean (expectation, \(\mathbb {E}\)), variance (\( \mathbb {V}\)), median, uth quantile (Q(u | x), for some chosen u∈[0,1], with Q(.5 | x) the conditional median), survival function (S), hazard function (H), and cumulative hazard function (Λ), is given respectively by:

Depending on the choice of posterior predictive functional from (2a–2h), a Bayesian regression analysis can provide inferences in terms of how the mean (2c), variance (2d), quantile (2e) (for a given choice u∈[0,1]), p.d.f. (2a), c.d.f. (2b), survival function (2f), hazard function (2g), or cumulative hazard function (2h), of the dependent variable Y, varies as a function of the covariates x. While the mean functional \(\mathbb {E}_{n}(Y\,|\,\mathbf {x})\) is conventional for applied regression, the choice of functional \(\mathbb {V}_{n}(Y\,|\,\mathbf {x})\) pertains to variance regression; the choice of function Q n (u | x) pertains to quantile regression; the choice of p.d.f. f n (y | x) or c.d.f. F n (y | x) pertains to Bayesian density (distribution) regression; and the choice of survival S n (y | x) or a hazard function (H n (y | x) or Λ n (y | x)) pertains to survival analysis.

In practice, the predictions of the dependent variable Y (for a chosen functional from (2a–2h)), can be easily viewed (in a graph or table) as a function of a subset of only one or two covariates. Therefore, for practice we need to consider predictive methods that involve such a small subset of covariates. To this end, let \(\mathbf {x}_{\mathcal {S}}\) be a focal subset of the covariates (x 1,…,x p ), with \(\mathbf {x}_{\mathcal {S}}\) also including the constant (1) term. Throughout, the term “focal subset of the covariates” is a short phrase that refers to covariates that are of interest in a given posterior predictive analysis. Also, let \(\mathbf {x}_{\mathcal {C}}\) be the non-focal, complement set of q (unselected) covariates. Then \(\mathbf {x}_{\mathcal {S}}\cap \mathbf {x}_{\mathcal {C}}\neq \emptyset \) and \(\mathbf {x=x}_{\mathcal {S}}\cup \mathbf {x}_{ \mathcal {C}}\).

It is possible to study how the predictions of a dependent variable Y vary as a function of the focal covariates \(\mathbf {x}_{\mathcal {S}}\), using one of four automatic methods. The first two methods are conventional. They include the grand-mean centering method, which assumes that the non-focal covariates \(\mathbf {x}_{\mathcal {C}}\) is defined by the mean in the data \(\mathcal {D}_{n}\), with \(\mathbf {x}_{\mathcal {C}}:=\frac {1}{n} {\sum }_{i=1}^{n}\mathbf {x}_{\mathcal {C}i}\); and the zero-centering method, which assumes that the non-focal covariates are given by \(\mathbf {x}_{\mathcal {C}}:=\mathbf {0}_{q}\) where 0 q is a vector of q zeros. Both methods coincide if the observed covariates \( \{\mathbf {x}_{i}=(1,x_{i1},{\ldots } ,x_{ip})^{\intercal }\}_{i=1}^{n}\) in the data \(\mathcal {D}_{n}\) have average \((1,0,{\ldots } ,0)^{\intercal }\). This is the case if the covariate data \(\{x_{ik}\}_{i=1}^{n}\) have already been centered to have mean zero, for k=1,…,p.

The partial dependence method (Friedman 2001, Section 8.2) is the third method for studying how the predictions of a dependent variable Y varies as a function of the focal covariates \(\mathbf {x}_{\mathcal {S}}\). In this method, the predictions of Y, conditionally on each value of the focal covariates \(\mathbf {x}_{\mathcal {S}}\), are averaged over data (\(\mathcal {D}_{n}\)) observations \(\{\mathbf {x}_{\mathcal {C} i}\}_{i=1}^{n}\) (and effects) of the non-focal covariates \(\mathbf {x}_{\mathcal {C}}\). Specifically, in terms of the posterior predictive functionals (2a–2h), the averaged prediction of Y, conditionally on a value of the covariates \(\mathbf {x}_{\mathcal {S}}\), is given respectively by:

The equations above give, respectively, the (partial dependence) posterior predictive density, c.d.f., mean, variance, quantile (at u∈[0,1]), survival function, hazard function, and cumulative hazard function, of Y, conditionally on a value x S of the focal covariates. As a side note pertaining to causal analysis, suppose that the focal covariates include a covariate, denoted T, along with a constant (1) term, so that x S =(1,t). Also suppose that the covariate T is a binary-valued (0,1) indicator of treatment receipt, versus non-treatment receipt. Then the estimate of a chosen (partial-dependence) posterior predictive functional of Y under treatment (T=1) from (3a–3h), minus that posterior predictive functional under control (T=0), provides an estimate of the causal average treatment effect (CATE). This is true provided that the assumptions of unconfoundedness and overlap hold (Imbens, 2004).

The partial-dependence method can be computationally-demanding, as a function of sample size (n), the dimensionality of x S , the number of x S values considered when investigating how Y varies as a function of x S , and the number of MCMC sampling iterations performed for the estimation of the posterior distribution (density (1)) of the model parameters. In contrast, the clustered partial dependence method, the fourth method, is less computationally-demanding. This method is based on forming K-means cluster centroids, \(\{\mathbf {x}_{\mathcal {C}t}\}_{t=1}^{K}\), of the data observations \(\{\mathbf {x}_{\mathcal {C}i}\}_{i=1}^{n}\) of the non-focal covariates \(\mathbf {x}_{\mathcal {C}}\), with \(K=\text {floor}(\sqrt {n/2})\) clusters as a rule-of-thumb. Then the posterior predictions of Y, conditionally on chosen value of the covariate subset \(\mathbf {x}_{\mathcal {S }}\), is given by any one of the chosen posterior functionals (3a–3h) of interest, after replacing \(\frac {1}{n}{\sum }_{i=1}^{n}\) with \( \frac {1}{K}{\sum }_{t=1}^{K}\), and \(\mathbf {x}_{\mathcal {C}i}\) with \( \mathbf {x}_{\mathcal {C}t}\).

The predictive fit of a Bayesian regression model, to a set of data, \( \mathcal {D}_{n}=\{(y_{i},\mathbf {x}_{i})\}_{i=1}^{n}\), can be assessed on the basis of the posterior predictive expectation (2c) and variance (2d). First, the standardized residual fit statistics of the model are defined by:

An observation y i can be judged as an outlier under the model, when its absolute standardized residual | r i | exceeds 2 or 3. The proportion of variance explained in the dependent variable Y, by a Bayesian model, is measured by the R-squared statistic:

Also, suppose that it is of interest to compare M regression models, in terms of predictive fit to the given data set \(\mathcal {D}_{n}\). Models are indexed by \(\underline {m}=1,{\ldots } ,{M}\), respectively. For each model \(\underline {m}\), a global measure of predictive fit is given by the mean-squared predictive error criterion:

(Laud and Ibrahim 1995; Gelfand and Ghosh 1998). The first term in Eq. 6 measures model goodness-of-fit to the data \(\mathcal {D}_{n}\), and the second term is a model complexity penalty. Among a set of M regression models compared, the model with the best predictive fit for the data \(\mathcal {D}_{n}\) is identified as the one that has the smallest value of \(D(\underline {m})\).

MCMC methods

In practice, a typical Bayesian model does not admit a closed-form solution for its posterior distribution (density function of the form Eq. 1). However, the posterior distribution, along with any function of the posterior distribution of interest, can be estimated through the use of Monte Carlo methods. In practice, they usually involve Markov chain Monte Carlo (MCMC) methods (e.g., Brooks et al., 2011). Such a method aims to construct a discrete-time Harris ergodic Markov chain \(\{\boldsymbol {\zeta }^{(s)}\}_{s=1}^{S}\) with stationary (posterior) distribution \({\Pi } (\boldsymbol {\zeta }\,|\,\mathcal {D }_{n})\), and ergodicity is ensured by a proper (integrable) prior density function π(ζ) (Robert and Casella 2004, Section 10.4.3). A realization ζ (s) from the Markov chain can be generated by first specifying partitions (blocks) ζ b (b=1,…,B) of the model’s parameter ζ, and then simulating a sample from each of the full conditional posterior distributions \({\Pi } (\boldsymbol {\zeta }_{b}\,|\, \mathcal {D}_{n}, \boldsymbol {\zeta }_{c},c\neq b)\), in turn for b=1,…,B. Then, as \(S\rightarrow \infty \), the Markov (MCMC) chain \(\{ \boldsymbol {\zeta }^{(s)}\}_{s=1}^{S}\) converges to samples from the posterior distribution \({\Pi } (\boldsymbol {\zeta }\,|\,\mathcal {D}_{n})\). Therefore, in practice, the goal is to construct an MCMC chain (samples) \(\{ \boldsymbol {\zeta }^{(s)}\}_{s=1}^{S}\) for a sufficiently-large finite S.

MCMC convergence analyses can be performed in order to check whether a sufficiently-large number (S) of sampling iterations has been run, to warrant the conclusion that the resulting samples (\(\{\boldsymbol {\zeta }^{(s)}\}_{s=1}^{S}\)) have converged (practically) to samples from the model’s posterior distribution. Such an analysis may focus only on the model parameters of interest for data analysis, if so desired. MCMC convergence can be investigated in two steps (Geyer 2011). One step is to inspect, for each of these model parameters, the univariate trace plot of parameter samples over the MCMC sampling iterations. This is done to evaluate MCMC mixing, i.e., the degree to which MCMC parameter samples explores the parameter’s support in the model’s posterior distribution. Good mixing is suggested by a univariate trace plot that appears stable and “hairy” over MCMC iterations.Footnote 2 The other step is to conduct, for each model parameter of interest, a batch means (or subsampling) analysis of the MCMC samples, in order to calculate 95 % Monte Carlo Confidence Intervals (95 % MCCIs) of posterior point-estimates of interest (such as marginal posterior means, variances, quantiles, etc., of the parameter) (Flegal and Jones 2011). For a given (marginal) posterior point-estimate of a parameter, the 95 % MCCI half-width size reflects the imprecision of the estimate due to Monte Carlo sampling error. The half-width becomes smaller as number of MCMC sampling iterations grows. In all, MCMC convergence is confirmed by adequate MCMC mixing and practically-small 95 % MCCIs half-widths (e.g., .10 or .01) for the (marginal) posterior point-estimates of parameters (and chosen functionals) of interest. If adequate convergence cannot be confirmed after an MCMC sampling run, then additional MCMC sampling iterations can be run until convergence is obtained for the (updated) total set of MCMC samples.

For each BNP infinite-mixture model, the Bayesian Regression software estimates the posterior distribution (and functionals) of the model on the basis of a general slice-sampling MCMC method, which can handle the infinite-dimensional model parameters (Kalli et al. 2011). This slice-sampling method does so by introducing latent variables into the likelihood function of the infinite-mixture model, such that, conditionally on these variables, the model is finite-dimensional and hence tractable by a computer. Marginalizing over the distribution of these latent variables recovers the original likelihood function of the infinite-mixture model.

We now describe the MCMC sampling methods that the software uses to sample from the full conditional posterior distributions of the parameters, for each model that the software provides. For each DPM model, the full conditional posterior distribution of the unknown precision parameter (α) is sampled from a beta mixture of two gamma distributions (Escobar and West 1995). For each BNP infinite-mixture model based on a DP, Pitman-Yor process (including the the normalized stable process), or beta process prior, the full conditional posterior distribution of the mixture weight parameters are sampled from appropriate beta distributions (Kalli et al. 2011). Also, for the parameters of each of the 31 linear models, and for the linear parameters of each of the BNP infinite-mixture models, the software implements (direct) MCMC Gibbs sampling of standard full conditional posterior distributions, derived from the standard theories of the Bayesian normal linear, probit, and logit models, as appropriate (Evans, 1965; Lindley & Smith, 1972; Gilks et al., 1993; Albert & Chib, 1993; Bernardo & Smith, 1994; Denison et al., 2002; Cepeda & Gamerman, 2001; O’Hagan & Forster, 2004; Holmes & Held, 2006; George & McCulloch, 1997; e.g., see Karabatsos & Walker, 2012a, 2012b). When the full conditional posterior distribution of the model parameter(s) is non-standard, the software implements a rejection sampling algorithm. Specifically, it implements an adaptive random-walk Metropolis-Hastings (ARWMH) algorithm (Atchadé and Rosenthal 2005) with normal proposal distribution, to sample from the full conditional posterior distribution(s) of the mixture weight parameter of a BNP geometric weights infinite-mixture model; the mixture weight parameter of a BNP normalized inverse-Gaussian process mixture model, using the equivalent stick-breaking representation of this process (Favaro et al. 2012). Also, for BNP infinite-mixture models and normal random-effects models that assign a uniform prior distribution to the variance parameter for random intercepts (or means), the software implements the slice sampling (rejection) algorithm with stepping-out procedure (Neal 2003), in order to sample from the full conditional posterior distribution of this parameter. Finally, for computational speed considerations, we use the ARWMH algorithm instead of Gibbs sampling, in order to sample from the full conditional posterior distributions for the random coefficient parameters (the intercepts u 0h ; and possibly the u k h ,k=0,1,…,p, as appropriate, for groups h=1,…,H) in a normal random-effects (or random intercepts) HLM; and for the random coefficients (β j ) or random intercept parameters (β 0j ) in a BNP infinite-mixture regression model, as appropriate (Karabatsos & Walker, 2012a, b).

The given data set (\(\mathcal {D}_{n}\)) may consist of censored dependent variable observations (either left-, right-, and/or interval-censored). If the software user indicates the censored dependent variable observations (see “Using the Bayesian regression software”, Step 6), then the software adds a Gibbs sampling step to the MCMC algorithm, that draws from the full-conditional posterior predictive distributions (density function (2a)) to provide multiple MCMC-based imputations of these missing censored observations (Gelfand et al., 1992; Karabatsos & Walker, 2012a).

Finally, the software implements Rao-Blackwellization (RB) methods (Gelfand and Mukhopadhyay 1995) to compute estimates of the linear posterior predictive functionals from (2a–2h) and (3a–3h). In contrast, the quantile functional Q n (u | x) is estimated from order statistics of MCMC samples from the posterior predictive distribution of Y given x. The 95 % posterior credible interval of the quantile functional Q(u | x) can be viewed in a PP-plot (Wilk and Gnanadesikan 1968) of the 95 % posterior interval of the c.d.f. F(u | x), using available software menu options. The hazard functional H n (y | x) and the cumulative hazard functional Λ n (y | x) are derived from RB estimates of the linear functionals f n (y | x) and F n (y | x). The same is true for the partial-dependence functionals \(Q_{n}(u\,|\,\mathbf {x}_{\mathcal {S}})\), \( H_{n}(y\,|\,\mathbf {x}_{\mathcal {S}})\), and \({\Lambda }_{n}(y\,|\,\mathbf {x}_{\mathcal {S}})\).

Key BNP regression models

A BNP infinite-mixture regression model has the general form:

given a covariate (x) dependent, discrete mixing distribution G x ; kernel (component) densities f(y | x;ψ,𝜃 j (x)) with component indices j=1,2,…, respectively; with fixed parameters ψ; and with component parameters 𝜃 j (x) having sample space Θ; and given mixing weights \((\omega _{j}(\mathbf {x}))_{j=1}^{\infty }\) that sum to 1 at every \(\mathbf {x}\in \mathcal {X}\), with \(\mathcal {X}\) the covariate space.

In the infinite-mixture model (7), the covariate-dependent mixing distribution is a random probability measure that has the general form,Footnote 3

and is therefore an example of a species sampling model (Pitman 1995).

The mixture model (7) is completed by the specification of a prior distribution π(ζ) on the space Ω ζ ={ζ} of the infinite-dimensional model parameter, given by:

The BNP infinite-mixture regression model (7)–(8), completed by the specification of a prior distribution π(ζ), is very general and encompasses, as special cases: fixed- and random-effects linear and generalized linear models (McCullagh and Nelder 1989; Verbeke and Molenbergs 2000; Molenberghs and Verbeke 2005), finite-mixture latent-class and hierarchical mixtures-of-experts regression models (McLachlan and Peel 2000; Jordan and Jacobs 1994), and infinite-mixtures of Gaussian process regressions (Rasmussen et al. 2002).

In the general BNP model (7)–(8), assigned prior π(ζ), the kernel densities f(y | x;ψ,𝜃 j (x)) may be specified as covariate independent, with: f(y | x;ψ,𝜃 j (x)):=f(y | ψ,𝜃 j ); and may not contain fixed parameters ψ, in which case ψ is null. Also for the model, covariate dependence is not necessarily specified for the mixing distribution, so that G x :=G. No covariate dependence is specified for the mixing distribution if and only if both the component parameters and the mixture weights are covariate independent, with 𝜃 j (x):=𝜃 j and ω j (x):=ω j . The mixing distribution G x is covariate dependent if the component parameters 𝜃 j (x) or the mixture weights ω j (x) are specified as covariate dependent.

Under the assumption of no covariate dependence in the mixing distribution, with G x :=G, the Dirichlet process (Ferguson 1973) provides a standard and classical choice of BNP prior distribution on the space of probability measures \(\mathcal {G}_{\Theta }\) ={G}Θ on the sample space Θ. The Dirichlet process is denoted \(\mathcal {DP}(\alpha ,G_{0})\) with precision parameter α and baseline distribution (measure) G 0. We denote \(G\sim \mathcal {DP} (\alpha ,G_{0})\) when the random probability measure G is assigned a \(\mathcal {DP}(\alpha ,G_{0})\) prior distribution on \(\mathcal {G}_{\Theta }\). Under the \(\mathcal {DP}(\alpha ,F_{0})\) prior, the (prior) mean and variance of G are given respectively by Ferguson (1973):

For the \(\mathcal {DP}(\alpha ,G_{0})\) prior, Eq. 10a shows that the baseline distribution G 0 represents the prior mean (expectation) of G, and the prior variance of G is inversely proportional to the precision parameter α, as shown in Eq. 10b. The variance of G is increased (decreased, resp.) as α becomes smaller (larger, resp.). In practice, a standard choice of baseline distribution G 0(⋅) is provided by the normal N(μ,σ 2) distribution. The \(\mathcal {DP}(\alpha ,G_{0})\) can also be characterized in terms of a Dirichlet (Di) distribution. That is, if \(G\sim \mathcal {DP} (\alpha ,G_{0})\), then:

for every choice of k≥1 (exhaustive) partitions B 1,…,B k of the sample space, Θ.

The \(\mathcal {DP}(\alpha ,G_{0})\) can also be characterized as a particular “stick-breaking” stochastic process (Sethuraman 1994; Sethuraman and Tiwari 1982). A random probability measure (G) that is drawn from the \(\mathcal {DP}(\alpha , G_{0}) \) prior, with \(G\sim \mathcal {DP}(\alpha ,G_{0})\), is constructed by first taking independently and identically distributed (i.i.d.) samples of (υ,𝜃) from the following beta (Be) and baseline (G 0) distributions:

and then using the samples \((\upsilon _{j},\boldsymbol {\theta }_{j})_{j=1}^{\infty }\) to construct the random probability measure by:

Above, the ω j s are mixture weights, particularly, stick-breaking weights constructed by:

and they sum to 1 (i.e., \({\sum }_{j=1}^{\infty }\omega _{j}=1\)).

More in words, a random probability measure, G, drawn from a \(\mathcal {DP} (\alpha ,G_{0})\) prior distribution on \(\mathcal {G}_{\Theta }\) ={G}Θ, can be represented as infinite-mixtures of degenerate probability measures (distributions). Such a random distribution is discrete with probability 1, which is obvious because the degenerate probability measure (δ 𝜃 j (⋅)) is discrete. The locations 𝜃 j of the point masses are a sample from G 0. The random weights ω j are obtained from a stick-breaking procedure, described as follows. First, imagine a stick of length 1. As shown in Eq. 12d, at stage j=1 a piece is broken from this stick, and then the value of the first weight ω 1 is set equal to the length of that piece, with ω 1=υ 1. Then at stage j=2, a piece is broken from a stick of length 1−ω 1, and then the value of the second weight ω 2=υ 2(1−υ 1) is set equal to the length of that piece. This procedure is repeated for j=1,2,3,4,…, where at any given stage j, a piece is broken from a stick of length \(1-{\sum }_{l=1}^{j-1}\omega _{j}\), and then the value of the weight ω j is set equal to the length of that piece, with \(\omega _{j}=\upsilon _{j}{\prod }_{l=1}^{j-1}(1-\upsilon _{l})\). The entire procedure results in weights \((\omega _{j})_{j=1}^{\infty }\) that sum to 1 (almost surely).

The stick-breaking construction (12a–12d) immediately suggests generalizations of the \(\mathcal {DP}(\alpha ,G_{0})\), especially by means of increasing the flexibility of the prior (12a) for the random parameters \((\upsilon _{j})_{j=1}^{\infty }\) that construct the stick-breaking mixture weights (12d). One broad generalization is given by a general stick-breaking process (Ishwaran and James 2001), denoted \(\mathcal {SB}(\mathbf {a},\mathbf {b},G_{0})\) with positive parameters a=(a 1,a 2,…) and b=(b 1,b 2,…), which gives a prior on \(\mathcal {G}_{\Theta }\) ={G}Θ. This process replaces the i.i.d. beta distribution assumption in Eq. 12a, with the more general assumption of independent beta (Be) distributions, with

In turn, there are many interesting special cases of the \(\mathcal {SB}(\mathbf {a},\mathbf {b},G_{0})\) process prior, including:

-

1.

The Pitman-Yor (Poisson-Dirichlet) process, denoted \(\mathcal {PY}(a,b,G_{0})\), which assumes a j =1−a and b j =b+j a, for j=1,2,…, in Eq. 13, with 0≤a<1 and b>−a (Perman et al. 1992; Pitman and Yor 1997).

-

2.

The beta two-parameter process, which assumes a j =a and b j =b in Eq. 13 (Ishwaran and Zarepour 2000).

-

3.

The normalized stable process (Kingman 1975), which is equivalent to the \(\mathcal {PY}(a,0,G_{0})\) process, with 0≤a<1 and b=0.

-

4.

The Dirichlet process \(\mathcal {DP}(\alpha ,G_{0})\), which assumes a j =1 and b j =α in Eq. 13, and with is equivalent to the \(\mathcal {PY}(0,\alpha ,G_{0})\) process.

-

5.

The geometric weights prior, denoted \(\mathcal {GW}(a,b,G_{0})\), which assumes in Eq. 13 the equality restriction υ=υ j for j=1,2,…, leading to mixture weights (12d) that can be re-written as \(\omega _{j}=\upsilon \left (1-\upsilon \right )^{j-1}\), for j=1,2,… (Fuentes-García et al. 2009, 2010). These mixture weights may be assigned a beta prior distribution, with υ∼Be(a,b).

Another generalization of the \(\mathcal {DP}(\alpha ,G_{0})\) is given by the mixture of Dirichlet process (MDP), defined by the stick-breaking construction (12a–12d), after sampling from prior distributions \(\alpha \sim {\Pi } \left (\alpha \right ) \) and \(\boldsymbol { \vartheta }\sim {\Pi } \left (\boldsymbol {\vartheta }\right ) \) for the precision and baseline parameters (Antoniak 1974).

A BNP prior distribution on \(\mathcal {G}_{\Theta }\) ={G}Θ, defined by a Normalized Random Measure (NRM) process, assumes that a discrete random probability measure G, given by Eq. 12c, is constructed by mixture weights that have the form

The I 1,I 2,I 3,… are the jump sizes of a non-Gaussian Lévy process whose sum is almost surely finite (see e.g. James et al., 2009), and are therefore stationary independent increments (Bertoin 1998). The \(\mathcal {DP}(\alpha ,G_{0})\) is a special NRM process which makes the gamma (Ga) distribution assumption \({\sum }_{j=1}^{\infty }I_{j}\sim \text {Ga} (\alpha ,1)\) (Ferguson 1973, pp. 218– 219).

An important NRM is given by the normalized inverse-Gaussian \(\mathcal {NIG} (c,G_{0})\) process (Lijoi et al. 2005), which can be characterized as a stick-breaking process (Favaro et al. 2012), defined by the stick-breaking construction (12a–12d), after relaxing the i.i.d. assumption (12a), by allowing for dependence among the υ j distributions, with:

The random variables (15a) follow normalized generalized inverse-Gaussian (GIG) distributions, with p.d.f. given by equation (4) in Favaro et al. (2012), and Eqs. 15b–15c refer to GIG and inverse-gamma (IG) distributions.

Stick-breaking process priors can be characterized in terms of the clustering behavior that it induces in the posterior predictive distribution of 𝜃. Let \(\{\boldsymbol {\theta }_{c}^{\ast }:c=1,{\ldots } ,k_{n}\leq n\}\) be the k n ≤n unique values (clusters) among the n observations of a data set. Let \({\Upsilon }_{n}=\{\mathcal {C}_{1}, {\ldots } ,\mathcal {C}_{c},{\ldots } ,\mathcal {C}_{k_{n}}\}\) be the random partition of the integers {1,…,n}. Each cluster is defined by \( \mathcal {C}_{c}=\{i:\boldsymbol {\theta }_{i}= \boldsymbol {\theta }_{c}^{\ast }\}\subset \{1,{\ldots } ,n\},\) and has size \(n_{c}=|\mathcal {C}_{c}|\), with cluster frequency counts n n =(n 1,…,n c ,…,n k n ) and \({\sum }_{c=1}^{k_{n}}n_{c}=n\).

When G is assigned a Pitman-Yor \(\mathcal {PY}(a,b,G_{0})\) process prior, the posterior predictive probability of a new observation 𝜃 n+1 is defined by:

That is, 𝜃 n+1 forms a new cluster with probability (b+a k n )/(b+n), and otherwise with probability (n c −a)/(b+n), 𝜃 n+1 is allocated to old cluster \(\mathcal {C}_{c}\), for c=1,…,k n . Recall that the normalized stable process (Kingman 1975) is equivalent to the \(\mathcal {PY}(a,0,G_{0})\) process with 0≤a<1 and b=0; and the \(\mathcal {DP}(\alpha ,G_{0})\) is the \(\mathcal {PY}(0,b,G_{0})\) process with a=0 and b=α.

Under the \(\mathcal {NIG}(c,G_{0})\) process prior, the posterior predictive distribution is defined by the probability function,

with:

where Γ(⋅ ; ⋅) is the incomplete gamma function (Lijoi et al. 2005, p. 1283). Finally, exchangeable partition models (e.g., Hartigan, 1990; Barry & Hartigan, 1993; Quintana & Iglesias, 2003) also give rise to random clustering structures of a form (17a–17c), and therefore coincide with the family of Gibbs-type priors, which include the \(\mathcal {PY}(a,b,G_{0})\) and \(\mathcal {NIG} (c,G_{0})\) processes and their special cases. More detailed discussions on the clustering behavior induced by various BNP priors are given by DeBlasi et al. (2015).

So far, we have described only BNP priors for the mixture distribution (8) of the general BNP regression model (7), while assuming no covariate dependence in the mixing distribution, with G x :=G. We now consider dependent BNP processes. A seminal example is given by the Dependent Dirichlet process (\(\mathcal {DDP}(\alpha _{\mathbf { x}},G_{0\mathbf {x}})\)) (MacEachern, 1999, 2000, 2001), which models a covariate (x) dependent process G x , by allowing either the baseline distribution G 0x , the stick-breaking mixture weights ω j (x), and/or the precision parameter α x to depend on covariates x. In general terms, a random dependent probability measure \(G_{\mathbf {x}}\,|\,\alpha _{\mathbf {x}},G_{0 \mathbf {x}}\sim \mathcal {DDP} (\alpha _{\mathbf {x}},G_{0\mathbf {x}})\) can be represented by Sethuraman’s (1994) stick-breaking construction, as:

Next, we describe an important BNP regression model, with a dependent mixture distribution G x assigned a specific \( \mathcal {DDP}(\alpha _{\mathbf {x}},G_{0\mathbf {x}})\) prior.

ANOVA-linear DDP model

Assume that the data \(\mathcal {D}_{n}=\{(y_{i},\mathbf {x}_{i})\}_{i=1}^{n}\) can be stratified into N h groups, indexed by h=1,…,N h , respectively. For each of group h, let y i(h) be the ith dependent observation of group h, and let \(\mathbf {y}_{h}=(y_{i(h)})_{i(h)=1}^{n_{h}} \) be the column vector of n h dependent observations, corresponding to an observed design matrix \(\mathbf {X}_{h}=(\mathbf {x}_{1(h)}^{\mathbf {\intercal }}, \ldots , \mathbf {x}_{i(h)}^{\mathbf { \intercal }},{\ldots } ,\mathbf {x}_{n_{h}}^{\mathbf {\intercal }})\) of n h rows of covariate vectors \(\mathbf {x}_{i(h)}^{\mathbf {\intercal }}\) respectively. Possibly, each of the N h groups of observations has only one observation (i.e., n h =1), in which case N h =n.

The ANOVA-linear DDP model (DeIorio et al. 2004; Müller et al. 2005) is defined as:

where N(μ,T) and N(μ | 0,r 0 I p+1) each refers to a multivariate normal distribution, and IW refers to the inverted-Wishart distribution. Therefore, all the model parameters are assigned prior distributions, which together, define the joint prior p.d.f. for ζ∈Ω ζ by:

with beta (be), multivariate normal (n), inverse-gamma (ig), inverted-Wishart (iw), and gamma (ga) p.d.f.s together defining the prior distributions in the ANOVA-linear DDP model (19a–19h). As shown, this model is based on a mixing distribution G(β) assigned a \(\mathcal {DP}(\alpha ,G_{0})\) prior, with precision parameter α and multivariate normal baseline distribution, G 0(⋅):=N(⋅ | μ,T). Prior distributions are assigned to (α,μ,T) in order to allow for posterior inferences to be robust to different choices of the \(\mathcal {DP}(\alpha ,G_{0})\) prior parameters.

The ANOVA-linear DDP model (19a–19h) is equivalent to the BNP regression model (7), with normal kernel densities n (y i | μ j ,σ 2) and mixing distribution G x (μ) (8) assigned a \(\mathcal {DDP}(\alpha ,G_{0 \mathbf {x}})\) prior, where:

with β j | μ,T∼N(μ,T) and σ 2∼ IG (a 0/2,a 0/2) (i.e., G 0(⋅)=N (β |μ,T)IG(σ 2 |a 0/2,a 0/2)), and with the ω j stick-breaking weights (19c) (DeIorio et al. 2004).

A menu option in the Bayesian Regression software labels the ANOVA-linear DDP model (19a–19h) as the “Dirichlet process mixture of homoscedastic linear regressions model” (for Step 8 of a data analysis; see next section). The software allows the user to analyze data using any one of many variations of the model (19a–19h). Variations of this DDP model include: “mixture of linear regressions” models, as labeled by a menu option of the software, with mixing distribution G(β,σ 2) for the coefficients and the error variance parameters; “mixture of random intercepts” models, with mixture distribution G(β 0) for only the intercept parameter β 0, and with independent normal priors for the slope coefficient parameters \((\beta _{k})_{k=1}^{p}\); mixture models having mixture distribution G assigned either a Pitman-Yor \(\mathcal {PY}(a,b, G_{0})\) (including the normalized stable process prior), beta process, geometric weights, or normalized inverse-Gaussian process \( \mathcal {NIG}(c,G_{0})\) prior, each implying, respectively, a dependent BNP prior for a covariate-dependent mixing distribution (20) (using similar arguments made for the DDP model by De Iorio et al., 2004); and mixed-logit or mixed-probit regression models for a binary (0 or 1) or ordinal (c=0,1,…,m) dependent variable. Also, suppose that the ANOVA-linear DDP model (19a–19h) is applied to time-lagged dependent variable data (which can be set up using a menu option in the software; see “Installing the software”, Step 3, and “Modify data set menu options”). Then this model is defined by an infinite-mixture of autoregressions, with mixture distribution assigned a time-dependent DDP (Lucca et al. 2012). The Help menu of the software provides a full list of models that are available from the software.

Infinite-probits mixture linear model

As mentioned, typical BNP infinite-mixture models assume that the mixture weights have the stick-breaking form (12d). However, a BNP model may have weights with a different form. The infinite-probits model is a Bayesian nonparametric regression model (7)–(8), with prior π(ζ), and with mixture distribution (8) defined by a dependent normalized random measure (Karabatsos and Walker 2012a).

For data, \(\mathcal {D}_{n}=\{(y_{i},\mathbf {x}_{i})\}_{i=1}^{n}\), a Bayesian infinite-probits mixture model can be defined by:

with \({\Phi } \left (\cdot \right ) \) the normal N(0,1) c.d.f., and model parameters \(\boldsymbol {\zeta }= ((\mu _{j})_{j=1}^{\infty },\sigma _{\mu }^{2},\boldsymbol {\beta },\sigma ^{2},\boldsymbol {\beta }_{\omega }, \sigma _{\omega })\) assigned a prior π(ζ) with p.d.f.:

where J p denotes a p×1 vector of 1s, and u (σ μ | 0,b) refers to the p.d.f. of the uniform distribution with minimum 0 and maximum b.

The Bayesian Regression software labels the BNP model (21a–21j) as the “Infinite homoscedastic probits regression model,” in a menu option (in Step 8 of a data analysis; see next section). This model is defined by a highly-flexible robust linear model, an infinite mixture of linear regressions (21b), with random intercept parameters μ j modeled by infinite covariate-dependent mixture weights (21c). The model (21a–21j) has been extended and applied to prediction analysis (Karabatsos and Walker 2012a), meta-analysis (Karabatsos et al. 2015), (test) item-response analysis (Karabatsos and Walker 2015b), and causal analysis (Karabatsos and Walker 2015a).

The covariate-dependent mixture weights ω j (x) in Eq. 21c, defining the mixture distribution (8), are modeled by a probits regression for ordered categories j=…,−2,−1,0,1,2,…, with latent location parameter \(\mathbf {x}^{\intercal }\boldsymbol {\beta }_{\omega }\), and with latent standard deviation σ ω that controls the level of modality of the conditional p.d.f. f(y | x;ζ) of the dependent variable Y. Specifically, as \(\sigma _{\omega }\rightarrow 0\), the conditional p.d.f. f(y | x;ζ) becomes more unimodal. As σ ω gets larger, f(y | x;ζ) becomes more multimodal (see Karabatsos & Walker, 2012a).

The Bayesian Regression software allows the user to analyze data using any one of several versions of the infinite-probits regression model (21). Versions include models where the kernel densities are instead specified by covariate independent normal densities n\((y\,|\,\mu _{j},{\sigma _{j}^{2}})\), and the mixture weights are modeled by:

include models where either the individual regression coefficients β in the kernels, or the individual regression coefficients (β ω ,λ ω ) in the mixture weights (23) are assigned spike-and-slab priors using the SSVS method (George & McCulloch, 1993, 1997), to enable automatic variable (covariate) selection inferences from the posterior distribution; and include mixed-probit regression models for binary (0 or 1) or ordinal (c=0,1,…,m) dependent variables, each with inverse-link function c.d.f. modeled by a covariate-dependent, infinite-mixture of normal densities (given by Eq. 21b, but instead for the continuous underlying latent dependent variable; see Karabatsos & Walker, 2015a).

Some linear models

We briefly review two basic Bayesian normal linear models from standard textbooks (e.g., O’Hagan & Forster, 2004; Denison et al., 2002).

First, the Bayesian normal linear model, assigned a (conjugate) normal inverse-gamma prior distribution to the coefficients and error variance parameters, (β,σ 2), is defined by:

An extension of the model (24a–24e) is provided by the Bayesian 2-level normal random-effects model (HLM). Again, let the data \(\mathcal {D}_{n}=\{(y_{i},\mathbf {x}_{i})\}_{i=1}^{n}\) be stratified into N h groups, indexed by h=1,…,N h . Also, for each group h, let y i(h) be the ith dependent observation, and let \(\mathbf {y}_{h}=(y_{i(h)})_{i(h)=1}^{n_{h}}\) be the column vector of n h dependent observations, corresponding to an observed design matrix \(\mathbf {X}_{h}=(\mathbf {x}_{1(h)}^{\mathbf {\intercal }},{\ldots } ,\mathbf {x}_{i(h)}^{\mathbf { \intercal }},{\ldots } ,\mathbf {x}_{n_{h}}^{\mathbf {\intercal }})\) of n h rows of covariate vectors \(\mathbf {x}_{i(h)}^{\mathbf {\intercal }}\) respectively. Then a Bayesian 2-level model (HLM) can be represented by:

This model (25a–25h), as shown in (25f), assumes that the random coefficients u h (for h=1,…,N h ) are normally distributed over the N h groups.

Both linear models above, and the different versions of these models mentioned in “Introduction”, are provided by the Bayesian Regression software. See the Help menu for more details.

Using the Bayesian regression software

Installing the software

The Bayesian Regression software is a stand-alone package for a 64-bit Windows computer.Footnote 4 To install the software on your computer, take the following steps:

-

1.

Go the Bayesian Regression software web page: http://www.uic.edu/~georgek/HomePage/BayesSoftware.html. Then click the link on that page to download the Bayesian Regression software installation file, named BayesInstaller_web64bit.exe (or BayesInstaller_web32bit.exe).

-

2.

Install the software by clicking the file BayesInstaller_webXXbit.exe. This will include a web-based installation of MATLAB Compiler Runtime, if necessary. As you install, select the option “Add a shortcut to the desktop,” for convenience. (To install, be connected to the internet, and temporarily disable any firewall or proxy settings on your computer).

Then start the software by clicking the icon BayesRegXXbit.exe.

The next subsection provides step-by-step instructions on how to use the Bayesian Regression software to perform a Bayesian analysis of your data set. The software provides several example data files, described under the Help menu. You can create them by clicking the File menu option: “Create Bayes Data Examples file folder.” Click the File menu option again to import and open an example data set from this folder. The next subsection illustrates the software through the analysis of the example data set PIRLS100.csv.

The Bayesian Regression software, using your menu-selected Bayesian model, outputs the data analysis results into space- and comma-delimited text files with time-stamped names, which can be viewed in free NotePad++. The comma-delimited output files include the posterior samples (.MC1), model fit residual (*.RES), and the model specification (*.MODEL) files. The software also outputs the results into graph (figure *.fig) files, which can then be saved into a EPS (*.eps), bitmap (*.bmp), enhanced metafile (*.emf), JPEG image (*.jpg), or portable document (*.pdf) file format. Optionally you may graph or analyze a delimited text output file after importing it into spreadsheet software (e.g., OpenOffice) or into the R software using the command line: ImportedData = read.csv(file.choose()).

Running the software (12 steps for data analysis)

You can run the software for data analysis using any one of many Bayesian models of your choice. A data analysis involves running the following 12 basic steps (required or optional).

In short, the 12 steps are as follows:

-

(1)

Import or open the data file (Required);

-

(2)

Compute basic descriptive statistics and plots of your data (Optional);

-

(3)

Modify the data set (e.g., create variables) to set up your data analysis model (Optional);

-

(4)

Specify a new Bayesian model for data analysis (Required);

-

(5)

Specify observation weights (Optional);

-

(6)

Specify the censored observations (Optional);

-

(7)

Set up the MCMC sampling algorithm model posterior estimation (Required);

-

(8)

Click the Run Posterior Analysis button (Required);

-

(9)

Click the Posterior Summaries button to output data analysis results (Required);

-

(10)

Check MCMC convergence (Required);

-

(11)

Click the Posterior Predictive button to run model predictive analyses (Optional);

-

(12)

Click the Clear button to finish your data analysis project.

Then you may run a different data analysis. Otherwise, you may then Exit the software and return to the same data analysis project later, after re-opening the software.

Below, we give more details on the 12 steps of data analysis.

-

1.

(Required) Use the File menu to Import or open the data file for analysis. Specifically, the data file that you import must be a comma-delimited file, with name having the .csv extension. (Or, you may click the File menu option to open an existing (comma-delimited) data (*.DAT) file). In the data file, the variable names are located in the first row, with numeric data (i.e., non-text data) in all the other rows. For each row, the number of variable names must equal the number of commas minus 1. The software allows for missing data values, each coded as NaN or as an empty blank. After you select the data file to import, the software converts it into a comma-delimited data (*.DAT) file. Figure 1 shows the interface of the Bayesian Regression software. It presents the PIRLS100.DAT data set at the bottom of the interface, after the PIRLS100.csv file has been imported.

-

2.

(Optional) Use the Describe/Plot Data Set menu option(s) to compute basic descriptive statistics and plots of the data variables. Statistics and plots include the sample mean, standard deviation, quantiles, frequency tables, cross-tabulations, correlations, covariances, univariate or bivariate histograms,Footnote 5 stem-and-leaf plots, univariate or bivariate kernel density estimates,Footnote 6 quantile-quantile (Q-Q) plots, two- or three-dimensional scatter plots, scatter plot matrices, (meta-analysis) funnel plots (Egger et al. 1997), box plots, and plots of kernel regression estimates with automatic bandwidth selection.Footnote 7

-

3.

(Optional) Use the Modify Data Set menu option(s)to set up a data analysis. The menu options allow you to construct new variables, handle missing data, and/or to perform other modifications of the data set. Then the new and/or modified variables can be included in the Bayesian model that you select in Step 4. Figure 1 presents the PIRLS100.DAT data at the bottom of the software interface, and shows the data of the variables MALE, AGE, CLSIZE, ELL, TEXP4, EDLEVEL, ENROL, and SCHSAFE in the last 8 data columns, respectively, after taking z-score transformations and adding “Z:” to each variable name. Such transformations are done with the menu option: Modify Data Set > Simple variable transformations > Z score. “Modify data set menu options” provides more details about the available Modify Data Set menu options.

-

4.

(Required) Click the Specify New Model button to select a Bayesian model for data analysis, and then for the model select: the dependent variable; the covariate(s) (predictor(s)) (if selected a regression model); the level-2 (and possibly level-3) grouping variables (if a multi-level model); and the model’s prior distribution parameters. Figure 2 shows the software interface, after selecting the Infinite homoscedastic probits regression model, along with the dependent variable, covariates, and prior parameters.

-

5.

(Optional) To weight each data observation (row) differently under your selected model, click the Observation Weights button to select a variable containing the weights (must be finite, positive, and non-missing). (This button is not available for a binary or ordinal regression model). By default, the observation weights are 1. For example, observation weights are used for meta-analysis of data where each dependent variable observation y i represents a study-reported effect size (e.g., a standardized mean difference in scores between a treatment group and a control group, or a correlation coefficient estimate). Each reported effect size y i has sampling variance \(\widehat { \sigma }_{i}^{2}\), and observation weight \(1/\widehat {\sigma }_{i}^{2}\) that is proportional to the sample size for y i . Details about the various effect size measures, and their sampling variance formulas, are found in meta-analysis textbooks (e.g., Cooper et al., 2009). “Modify data set menu options” mentions a Modify Data Set menu option that computes various effect size measures and corresponding variances.

-

6.

(Optional) Click the Censor Indicators of Y button, if the dependent variable consists of censored observations (not available for a binary or ordinal regression model). Censored observations often appear in survival data, where the dependent variable Y represents the (e.g., log) survival time of a patient. Formally, an observation, y i , is censored when it is only known to take on a value from a known interval [Y L B i ,Y U B i ]; is interval-censored when \(-\infty <Y_{LBi}<Y_{UBi}<\infty \); is right censored when \(-\infty <Y_{LBi}<Y_{UBi}\equiv \infty \); and left censored when \(-\infty \equiv Y_{LBi}<Y_{UBi}<\infty \) (e.g., Klein & Moeschberger, 2010). After clicking the Censor Indicators of Y button, select the two variables that describe the (fixed) censoring lower-bounds (LB) and upper-bounds (UB) of the dependent variable observations. Name these variables LB and UB. Then for each interval-censored observation y i , its L B i and U B i values must be finite, with L B i <U B i , y i ≤U B i , and y i ≥L B i . For each right-censored observation y i , its L B i value must be finite, with y i ≥L B i , and set U B i =−9999. For each left-censored observation y i , its U B i value must be finite, with y i ≤U B i , and set L B i =−9999. For each uncensored observation y i , set L B i =−9999 and U B i =−9999.

-

7.

(Required) Enter: the total number (S) of MC Samples, i.e., MCMC sampling iterations (indexed by s=1,…,S, respectively);the number (s 0≥1) of the initial Burn-In period samples; and the Thin number k, to retain every k th sampling iterate of the S total MCMC samples. The MCMC samples are used to estimate the posterior distribution (and functionals) of the parameters of your selected Bayesian model. Entering a thin value k>1 represents an effort to have the MCMC samples be (pseudo-) independent samples from the posterior distribution. The burn-in number (s 0) is your estimate of the number of initial MCMC samples that are biased by the software’s starting model parameter values used to initiate the MCMC chain at iteration s=0.

-

8.

(Required) Click the Run Posterior Analysis button to run the MCMC sampling algorithm, using the selected MC Samples, Burn-In, and Thin numbers. A wait-bar will then appear and display the progress of the MCMC sampling iterations. After the MCMC sampling algorithm finishes running, the software will create an external: (a) model (*.MODEL) text file that describes the selected model and data set; (b) Monte Carlo (*.MC1) samples file which contains the generated MCMC samples; (c) residual (*.RES) file that contains the model’s residual fit statistics; and (d) an opened, text output file of summaries of (marginal) posterior point-estimates of model parameters and other quantities, such as model predictive data-fit statistics. The results are calculated from the generated MCMC samples aside from any burn-in or thinned-out samples. Model fit statistics are calculated from all MCMC samples instead. The software puts all output files in the same subdirectory that has the data (*.DAT) file.

-

9.

(Required) Click the Posterior Summaries button to select menu options for additional data analysis output, such as: text output of posterior quantile estimates of model parameters and 95 % Monte Carlo Confidence Intervals (see Step 10); trace plots of MCMC samples; 2-dimensional plots and 3-dimensional plots of (kernel) density estimates, univariate and bivariate histograms, distribution function, quantile function, survival function, and hazard functions, box plots, Love plots, Q-Q plots, and Wright maps, of the (marginal) posterior distribution(s) of the model parameters; posterior correlations and covariances of model parameters; and plots and tables of the model’s standardized fit residuals. The software creates all text output files in the same subdirectory that contains the data (*.DAT) file. You may save any graphical output in the same directory.

-

10.

(Required) Click the Posterior Summaries button for menu options to check the MCMC convergence of parameter point-estimates, for every model parameter of interest for data analysis. Verify: (1) that the univariate trace plots present good mixing of the generated MCMC samples of each parameter; and (2) that the generated MCMC samples of that parameter provide sufficiently-small half-widths of the 95 % Monte Carlo Confidence Intervals (95 % MCCIs) for parameter posterior point-estimates of interest (e.g., marginal posterior mean, standard deviation, quantiles, etc.). If for your model parameters of interest, either the trace plots do not support adequate mixing (i.e., plots are not stable and “hairy”), or the 95 % MCCI half-widths are not sufficiently small for practical purposes, then the MCMC samples of these parameters have not converged to samples from the model’s posterior distribution. In this case you need to generate additional MCMC samples, by clicking the Run Posterior Analysis button again. Then re-check for MCMC convergence by evaluating the updated trace plots and the 95 % MCCI half-widths. This process may be repeated until MCMC convergence is reached.

-

11.

(Optional) Click the Posterior Predictive button Footnote 8 to generate model’s predictions of the dependent variable Y, conditionally on selected values of one or more (focal) covariates (predictors). See “Overview of Bayesian inference” for more details. Then select the posterior predictive functionals of Y of interest. Choices of functionals include the mean, variance, quantiles (to provide a quantile regression analysis), probability density function (p.d.f.), cumulative distribution function (c.d.f.), survival function, hazard function, the cumulative hazard function, and the probability that Y≥0. Then select one or more focal covariate(s) (to define \(\mathbf {x}_{\mathcal {S}}\)), and then enter their values, in order to study how predictions of Y varies as a function of these covariate values. For example, if you chose the variable Z:CLSIZE as a focal covariate, then you may enter values like −1.1, .02, 3.1, so that you can make predictions of Y conditionally on these covariate values. Or you may base predictions on an equally-spaced grid of covariate (Z:CLSIZE) values, like −3,−2.5,−2,…2,2.5,3, by entering −3:.5:3. If your data set observations are weighted (optional Step #5), then specify a weight value for the Y predictions. Next, if your selected focal covariates do not constitute all model covariates, then select among options to handle the remaining (non-focal) covariates. Options include the grand-mean centering method, the zero-centering method, the partial dependence method, and the clustered partial dependence method. After you made all the selections, the software will provide estimates of your selected posterior predictive functionals of Y, conditionally on your selected covariate values, in graphical and text output files, including comma-delimited files. (The software generates graphs only if you selected 1 or 2 focal covariates; and generates no output if you specify more than 300 distinct values of the focal covariate(s)). All analysis output is generated in the same subdirectory that contains the data (*.DAT) file. You may save any graphical output in the same directory.

-

12.

(Required) After completing the Bayesian data analysis, you may click the Clear button. Then you may start a different data analysis with another Bayesian model, or exit the software. Later, you may return to and continue from a previous Bayesian data analysis (involving the same model and data set) by using menu options to generate new MCMC samples and/or new data analysis output. To return to the previous analysis, go to the File menu to open the (relevant) data file (if necessary), and then click the Open Model button to open the old model (*.MODEL) file. Then, the software will load this model file along with the associated MCMC samples (*.MC1) file and residual (*.RES) files. (Returning to a previous Bayesian regression analysis is convenient if you have already stored the data, model, MC samples, and residual files all in the same file subdirectory). Then after clicking the Run Posterior Analysis button, the newly generated MCMC samples will append the existing MCMC samples (*.MC1) file and update the residual (*.RES) file.

Finally, the software provides a Fast Ridge Regression menu option that performs a Bayesian data analysis using the ridge (linear) regression model (Hoerl and Kennard 1970), with parameters estimated by a fast marginal maximum likelihood algorithm (Karabatsos 2014). This menu option can provide a fast analysis of an ultra-large data set, involving either a very large sample size and/or number of covariates (e.g., several thousands). At this point we will not elaborate on this method because it is currently the subject of ongoing research.

Modify data set menu options

Some Modify Data Set menu options allow you to construct new variables from your data. These new variables may be included as either covariates and/or the dependent variable for your Bayesian data analysis model. Methods for constructing new variables include: simple transformations of variables (e.g., z-score, log, sum of variables); transforming a variable into an effect size dependent variable for meta-analysis (Borenstein 2009; Fleiss and Berlin 2009; Rosenthal 1994); the creation of lagged dependent variables as covariates for a Bayesian autoregression time-series analysis (e.g., Prado & West, 2010); dummy/binary coding of variables; the construction of new covariates from other variables (covariates), via transformations including: polynomials, two-way interactions between variables, univariate or multivariate thin-plate splines (Green and Silverman 1993) or cubic splines (e.g., Denison et al., 2002); spatial-weight covariates (Stroud et al., 2001; or thin-plate splines; Nychka, 2000) from spatial data (e.g., from latitude and longitude variables) for spatial data analysis.

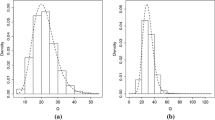

Now we briefly discuss Modify Data Set menu options that can help set up a causal analysis of data from a non-randomized (or randomized) study. First, a propensity score variable, included as a covariate in a regression model; or as a dummy-coded covariate that stratifies each subject into one of 10 (or more) ordered groups of propensity scores; or as observations weights (entered as the inverse of the propensity scores); can help reduce selection bias in the estimation of the causal effect (slope coefficient) of a treatment-receipt (versus non-treatment/control) indicator covariate on a dependent variable of interest (Rosenbaum & Rubin, 1983a, 1984; Imbens, 2004; Lunceford & Davidian, 2004; Schafer & Kang, 2008; Hansen, 2008). As an alternative to using propensity scores, we may consider a regression discontinuity design analysis (Thistlewaite and Campbell 1960; Cook 2008). This would involve specifying a regression model, with dependent (outcome) variable of interest regressed on covariates that include an assignment variable, and a treatment assignment variable that indicates (0 or 1) whether or not the assignment variable exceeds a meaningful threshold. Then under mild conditions (Hahn et al. 2001; Lee and Lemieux 2010), the slope coefficient estimate for the treatment variable is a causal effect estimate of the treatment (versus non-treatment) on the dependent variable. For either the propensity score or regression discontinuity design approach, which can be set up using appropriate Modify Data Set menu options, causal effects can be expressed in terms of treatment versus non-treatment comparisons of general posterior predictive functionals of the dependent variable (e.g., Karabatsos & Walker, 2015a).