Abstract

Semantic norms for properties produced by native speakers are valuable tools for researchers interested in the structure of semantic memory and in category-specific semantic deficits in individuals following brain damage. The aims of this study were threefold. First, we sought to extend existing semantic norms by adopting an empirical approach to category (Exp. 1) and concept (Exp. 2) selection, in order to obtain a more representative set of semantic memory features. Second, we extensively outlined a new set of semantic production norms collected from Italian native speakers for 120 artifactual and natural basic-level concepts, using numerous measures and statistics following a feature-listing task (Exp. 3b). Finally, we aimed to create a new publicly accessible database, since only a few existing databases are publicly available online.

Similar content being viewed by others

Semantic memory is our knowledge about the world. It is the system that stores and organizes the representation and processing of semantic knowledge (Tulving, 1972)—that is, internal representations about words, things, and their properties that allow us to understand and interact with the world around us and with the objects present in it. This knowledge largely derives from daily observation of living and nonliving things, from interacting with them, and from witnessing others’ interaction with them from birth on, although it can also be acquired culturally or by talking and reading about concepts. Importantly, semantic knowledge plays a central role in both online and offline processing of the environment: Online, it guides perception, categorization, and inferences, and offline, it reconstructs memories, underlies the meanings of linguistic expressions, and provides the representations manipulated by thought (Barsalou, Simmons, Barbey, & Wilson, 2003).

Semantic features are assumed to be the building blocks of semantic knowledge by a variety of theories (e.g., Collins & Quillian, 1969; Jackendoff, 1990, 2002; Minsky, 1975; Norman & Rumelhart, 1975; Saffran & Sholl, 1999; Smith & Medin, 1981) and by computational models of conceptual representation (e.g., Caramazza & Shelton, 1998; Farah & McClelland, 1991; Humphreys & Forde, 2001). Furthermore, some of these computational models of semantic representations, in which concepts are described as distributed patterns of activation across units representing semantic features, have proposed that at least a subset of these features are modality-specific (e.g., Cree & McRae, 2003; Plaut, 2002; Rogers et al., 2004; Vigliocco, Vinson, Lewis, & Garrett, 2004)—that is, grounded in sensory–motor processing—as has been advocated by the embodied-cognition view on mental representation (see, e.g., Barsalou, 1999, 2008; Grush, 2004) and confirmed by neurophysiological studies (Martin & Chao, 2001; Vigliocco et al., 2006).

Some models have used semantic feature norms obtained from naive participants to construct empirically derived conceptual representations that can be used to test theories about semantic representation and computation (e.g., Cree, McNorgan, & McRae, 2006; Cree & McRae, 2003; McRae, de Sa, & Seidenberg, 1997; Garrard, Lambon Ralph, Hodges, & Patterson, 2001; Moss, Tyler, & Devlin, 2002; Rogers & McClelland, 2004). In this empirical approach to the study of semantic knowledge, participants are presented with a set of concept names and are asked to produce the features that they feel best describe each of the concepts. From the data obtained, it is then possible to calculate numerous measures and distributional statistics at both the feature and concept levels, such as estimates of semantic similarity/distance between concepts, or measures of how features of various knowledge types are distributed across concepts. Several other measures can also be used to increase the information obtained from the norms themselves, such as collecting them either by means of participants’ explicit ratings, as in the case of familiarity or typicality ratings, or using language corpora, as in the case of word frequency or the linguistic variables associated with a concept’s name.

These measures and statistics allow researchers to construct stimuli for further experiments while controlling for nuisance variables; to model human behavior in computational simulation models of semantic knowledge; and to test theories about semantic memory and its deficits. Many studies have, in fact, used feature representations deriving from feature production norms to account for empirical phenomena, such as semantic priming (Cree, McRae, & McNorgan, 1999; McRae et al., 1997; Vigliocco et al., 2004), feature verification (Ashcraft, 1978, McRae, Cree, Westmacott, & de Sa, 1999; Solomon & Barsalou, 2001), categorization (Hampton, 1979; Smith, Shoben, & Rips, 1974), and category learning (Kruschke, 1992), among others (for a more detailed discussion of the aims and limits of feature norms, see McRae, Cree, Seidenberg, & McNorgan, 2005; for a discussion of theories of semantic memory organization that use feature representations to account for category-specific semantic deficits, see Cree & McRae, 2003; Zannino, Perri, Pasqualetti, Caltagirone, & Carlesimo, 2006).

The present study

The principal aims of the present study were the following. First, we aimed to extend existing sets of semantic feature production norms. Second, we aimed to make the categories and concepts included in the database more representative of our participants’ semantic memory by adopting empirical methods to choose categories (Exp. 1) and concepts (Exp. 2), while in Experiment 3, a large sample of Italian native speakers were asked to list the features of each of the resultant concepts (a property generation task). In most normative studies, researchers have typically designated categories and their exemplars a priori on the basis of intuition or by selecting them from previously published databases. In this way, participants are made to list characteristics for concepts that may not be most representative of the structure of their conceptual knowledge. Indeed, some authors (e.g., Bueno & Megherbi, 2009; Van Overschelde, Rawson, & Dunlosky, 2004) have questioned the appropriateness of earlier databases (especially the norms of Battig & Montague, 1969) and have highlighted the fact that semantic norms rapidly become obsolete, due to the speed of language (and cultural) changes. More importantly, to the best of our knowledge, no previous study has actually controlled, or even measured, the representativeness of the categories or concepts used in creating norms.

Third, we described the measures and statistics included in our norms and briefly discussed their application in cognitive neuroscience and neuropsychology, in line with the idea of creating a freely accessible public database for researchers interested in testing theories about semantic memory. Semantic norms are useful and powerful tools for the research community, and although several normative databases currently exist, only a few research groups have made their norms freely available (De Deyne et al., 2008; Garrard et al., 2001; McRae et al., 2005; Vinson & Vigliocco, 2008); to the best of our knowledge, only one set of semantic norms in Italian is freely available (Kremer & Baroni, 2011).

Before proceeding to the description of the norms, we will describe the terminology and notation that will be used throughout the article. The notion of an exemplar denotes a concept (i.e., member of a category), such as cane/dog, coltello/knife, and so forth, and will be printed in italics, while a <category > refers to a group of exemplars, such as <animali/animals>, <utensili da cucina/kitchenware>, and so forth, and will be printed in italics between triangular brackets. Features that participants listed in response to an exemplar, such as “verde/green,” “alto/high,” and “ha ago/has needle” in response to abete/fir, will be indicated in the text with an italic typeface between quotation marks. Note that throughout we present the Italian word first, followed by a slash and the English translation.

We will also refer to Excel files that can be found in the Supplementary Materials. Four files are provided: C-C_Pairs.xls contains concept production measures; Concepts.xls contains information about individual concepts derived from the feature-listing task; C-F_Pairs.xls contains information about concept–feature pairs derived from the feature-listing task; and Distance_Matrix.xls contains the semantic distance matrices. We will refer to the variables in the files by indicating their names between square brackets (e.g., [Prod_Fr]).

Experiment 1

Experiment 1 was preliminary to the rest of the normative data collection and was designed to empirically determine the representative categories of semantic memory. Existing norms in the literature are typically based on concepts or categories that either are selected from previously published databases (De Deyne et al., 2008; Garrard et al., 2001; Izura, Hernández-Muñoz, & Ellis, 2005; Kremer & Baroni, 2011; McRae et al., 2005; Ruts et al., 2004; van Dantzig, Cowell, Zeelenberg, & Pecher, 2011; Zannino et al., 2006) or are chosen a priori by researchers (Lynott & Connell, 2009; McRae et al., 2005; Vinson & Vigliocco, 2008). In this manner, participants describe categories or concepts that are only supposed to be representative of semantic memory, without controlling for their actual representativeness, or importance, in the semantic organization. In our study, we chose to use an empirical approach to construct our norms. Specifically, in order to determine which semantic categories were more strongly represented in our participants’ semantic memory, we asked participants to generate a number of concrete concepts, without constraints.

Method

Participants

A group of 435 native Italian speakers (105 male, 330 female) participated in our study. Their ages ranged from 18 to 35 years (M = 22.7, SD = 3.3), and their mean years of education were 13.7 (SD = 2.1). All participants were naïve as to the purpose of the study, and most of them were undergraduate psychology students from the University of Chieti.

Materials and procedure

Context is essential for basic-level concept representations, since this level of abstraction is suitable for using, thinking about, or naming an object in most situations in which the object occurs. In situations in which context is not specified (e.g., in laboratory conditions), participants generally assume that the object is in a typical context (Rosch, 1978). Thus context, defined as the place in which participants perform a task, could influence the frequency and order of production of concepts in a free concept-listing task, and participants may likely take advantage of environmental clues when producing concepts. We therefore asked participants to freely produce words that referred to concrete concepts in one of three different environmental conditions—namely, a classroom, an online context, and a laboratory—in order to balance the context influences.

In all environmental conditions, we asked participants to list a minimum of 20 words that referred to concrete entities. In the classroom context, data collection took place in two large classrooms. All participants (N = 310 undergraduate psychology students from the University of Chieti; 51 male, 259 female; mean age ± SD = 21.7 ± 2.6 years) were present at the same time. The participants received a piece of paper with written instructions at the top, followed by 60 empty lines. They were instructed to write one word for each line, starting from the top of the sheet. In the online context, we created a form (Word document) similar to the one used in the classroom context. These forms were then e-mailed to 150 native Italian speakers. Sixty-one participants (23 male, 38 female; mean age ± SD = 25.8 ± 4.0 years) returned the completed forms. Finally, in the laboratory context, we collected the forms in our laboratory. Sixty-four participants (31 male, 33 female; mean age ± SD = 24.8 ± 3.1 years) were asked to list concepts out loud while standing in a dark, unfurnished room. The experimenter took note of the concepts produced.

It is important to specify that while we chose to use the pencil-and-paper (classroom) condition because it has been the most used procedure in previous works, the other two environmental conditions were chosen to try to cope with the influence of context in prompting participants’ responses. In particular, the controlled laboratory context was chosen because it should reduce the influence of environmental cues. In addition, since data collection is not feasible in every possible context, we chose to use the online context. Indeed, this condition, even if uncontrolled, should represent several contexts in which we daily live. In fact, the Internet is accessible from a vast variety of locations by means of smartphones, tablets, laptops, and similar devices. However, as we will discuss below, the analysis that we employed allowed us to cope with possible contextual influences.

Transcription and labeling

The data consisted of a number of concepts listed by each participant, separated for context. First, we removed the abstract concepts that were occasionally listed (e.g., amore/love or giustizia/justice; less than 1 % of the total recorded data), and plurals were converted into their respective singular forms. Moreover, synonyms referring to the same concept were recorded with the same label (e.g., gatto/cat and micio/kitty were both recorded as gatto/cat). Next, we added information about the order of production of each word for each participant. Participants listed a different number of concepts, so we calculated a normalized order of production by converting each order-of-production value into a centile. We computed the centile order of production C i,m for the concept i and participant m as C i,m = [1 – (O i,m – 1) / N m ], where O i,m represents the order of production of the concept i for participant m, and N m represents the total number of concepts produced by that participant. For example, a concept listed as third by a participant who produced 16 concepts would take a centile order value of [1 – (3 – 1) / 16] = .875. Therefore, the centile order value assumed a value of 1 for a concept listed first by a participant and approached 0 for a concept listed last.

Finally, we classified concepts into their respective semantic categories, irrespective of their level of abstraction. In other words, words referring to superordinate, subordinate, and basic-level concepts, such as pianta/plant, rosa/rose, and fiore/flower, respectively, were all classified as belonging to the <piante/plants> category. Concepts were classified into 33 different categories with the same abstraction level. Two native Italian speakers (authors M.M. and E.A.) independently classified all of the concepts, and a third native speaker judged disagreements. We also assessed the intercoder reliability in concept classification by asking a fourth native Italian speaker to classify the entire set of included concepts using the same set of categories that we used. Agreement between our labeling and that of the secondary rater was high, with a Cohen’s κ value of .71 (N = 1,007, p < .0001). (We will use the term intercoder reliability or agreement when using the procedure outlined above).

Results and discussion

From a total of 11,357 concepts, we collected 1,007 distinct concepts belonging to 33 semantic categories. The average number of words produced per participant was 26.08 (SD = 7.28, range = 10 to 57). The reliability of the collected data, in terms of the categories to which concepts belonged, was evaluated by computing split-half correlations corrected with the Spearman–Brown formula after randomly dividing participants into two subgroups of equal size. This reliability index was calculated on 10,000 different randomizations of the participants, and the resultant median reliability index was very high (.994; range = .977 to .999) (unless explicitly specified, this holds true for all of the reliability estimations reported throughout this article, and we will refer to reliability when using the procedure outlined above).

We then calculated the mean dominance value of each category for each context as the proportion of participants who produced at least one concept belonging to that category. For example, 33 out of 64 participants listed one or more <articoli di cancelleria/stationery> exemplars in the laboratory context, so this category took a mean dominance value of ≈ 52 % (i.e., 33/64). We also calculated the mean centile order value of each category for each context. Specifically, we averaged the centile order values across all of the concepts produced in that context that belonged to that category. For example, if three <veicoli/vehicles> concepts were listed in the classroom context, with corresponding centile order values of .80, .63, and .37, the <veicoli/vehicles> category would assume a mean centile order value of .60. Note that by computing the average across participants of the mean centile values across the concepts listed by each participant, the main result of the subsequent cluster analysis would not change (i.e., with both of the averaging methods, the same categories were included in the “more representative” cluster in all three environmental conditions; see below). Note that both of these measures range from 0 to 1, with 1 corresponding to a category that was always listed as first (centile order) or that was produced by all of the participants (dominance measure).

Subsequently, in order to choose the most representative categories of semantic memory, we conducted a K-means cluster analysis for each environmental condition. K-means clustering is a data analysis technique that seeks to segregate category sets into a predefined number of K subsets of the greatest possible distinction. This analysis classified each semantic category into one of two a priori clusters (“more representative” or “less representative”) on the basis of the mean dominance and the mean centile order values of that category. We assumed that both dominance and mean centile order values reflect the representativeness of a given concept in semantic memory.

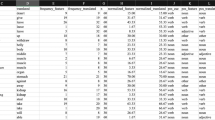

We repeated this procedure 100 times using different random seeds to calculate cluster centroids, since K-means cluster analysis is sensitive to starting conditions (Berkhin, 2006). From the 100 solutions, the best clustering solution was selected by calculating the ratio between the between- and within-cluster variances. On the basis of these results, we then assigned each category a value of 1 if it was included in the cluster with both higher dominance and centile order values—that is, the more representative (MR) cluster—and a value of 0 if it entered in the other (less representative, LR) cluster. This procedure was repeated for each of the three context conditions. Consequently, by summing this binary measure for the three context conditions, each category assumed a comprehensive value that ranged from 3 (if that category was included in the MR cluster for all three of the contexts) to 0 (if that category was included in the LR cluster for all three of the contexts) (see Table 1). Figure 1 presents the results of the cluster analysis.

Mean dominance and centile order values for the 33 categories of Experiment 1, as a function of the number of context conditions in which that category was more representative (MR). Each concept is plotted in the Dominance × Centile Order space (with both of these measures averaged over the three contexts), and the size and color of the corresponding points reflect the number of context conditions in which that category entered the MR cluster

Twelve categories were not included in the MR cluster in any of the three K-means cluster analyses, and thus assumed a comprehensive inclusion value of 0. Interestingly, several of the most commonly used categories in the literature (e.g., in Battig & Montague, 1969; Bueno & Megherbi, 2009; Garrard et al., 2001; McRae et al., 2005; Van Overschelde et al., 2004; Zannino et al., 2006) were among these LR categories—namely <frutta/fruit>, <strumenti musicali/musical instruments>, <armi/weapons>, and <vegetali/vegetables> —since their concepts were listed on average by only a minority of our participants (20.1 %, 11.1 %, 8.5 %, and 6.2 %, respectively).

Thirteen categories, instead, were constantly produced by a high percentage of participants, irrespective of context condition, and were included in the MR cluster in all three K-means cluster analyses. Accordingly, when a category emerged in the MR cluster in only one environmental condition (thus assuming a comprehensive value of 1), we did not include it among the selected categories for subsequent experiments, because in this case it was not possible to exclude possible influences of environmental cues in prompting the participants’ responses. We thus assumed that the categories with a comprehensive value of 3 primarily represented semantic memory, at least in our sample. Again, it is noteworthy that participants produced a number of concepts belonging to semantic categories that are neglected in existing norms, such as <articoli di cancelleria/stationery>, <costruzioni abitative/housing buildings>, and <passatempi/hobbies>, as well as more “classical” categories such as <vestiti/clothes> and <veicoli/vehicles>. Finally, we chose to exclude one of the categories, <apparecchiatura informatica/informatics>, from Experiment 2 because it was composed of a low number of exemplars (five), even though it was one of the more representative categories (see Table 1).

One of the most interesting results is that the majority of categories (26 out of 33, or 78.8 %) belong to the artifact domain, and this imbalance holds true even when we take into consideration the concepts produced instead of the categories. In fact, 84 % (9,539 out of 11,357) of the total number of concepts collected belong to the artifact domain, as well as 70.7 % (712 out of 1,007) of the distinct concepts (i.e., without considering concepts listed by more than one participant). Thus, our results suggest an asymmetrical distribution of natural and artifactual concepts in semantic memory, which is reflected in the 12 categories that we chose to use for our normative study (in fact, 75 % were artifact categories).

Experiment 2

The results from Experiment 1 indicated nine artifact (<articoli di cancelleria/stationery>, <costruzioni abitative/housing buildings>, <veicoli/vehicles>, <mobili/furniture>, <cibi/food>, <suppellettili/furnishings and fittings>, <utensili da cucina/kitchenware>, <vestiti/clothes>, and <passatempi/hobbies>) and three natural (<animali/animals>, <parti del corpo/body parts>, and <piante/plants>) categories as representative of our participants’ semantic memory. Experiment 2 was designed to investigate the distribution of concepts within each of these categories in order to select the categories’ most representative exemplars to be used in the feature-listing task (Exp. 3b). According to the prototype theory (Rosch, 1973), knowledge is clustered into semantic categories, and some members are better exemplars of a category than others. This process, called categorization, is a basic mental process in cognition, and without it the world would appear chaotic (Bueno & Megherbi, 2009).

Several tasks have been used to investigate the organization of categories, such as the category verification task, in which participants have to decide whether or not a stimulus belongs to a given category (Casey, 1992; McFarland, Kellas, Klueger, & Juola, 1974), or the exemplar generation task, in which participants are asked to list as many members of a given category as possible (Bueno & Megherbi, 2009; Ruts et al., 2004; Storms, 2001; Storms, De Boeck, & Ruts, 2000; Storms, De Boeck, Van Mechelen, & Ruts, 1996). In Experiment 2, we adopted an exemplar generation task and identified four alternative measures to describe the strength of the relationship between a given category and its exemplars: exemplar generation dominance, mean rank order, first occurrence, and exemplar availability.

Method

Participants

A group of 62 undergraduate psychology students from the University of Chieti (23 male, 39 female) participated in Experiment 2. Their ages ranged from 18 to 34 years (M = 25.6, SD = 3.4), and the mean years of education were 15.5 (SD = 2.4). All participants were naïve as to the purpose of the experiment.

Materials and collection procedure

We used the 12 semantic categories selected from Experiment 1 as stimuli (see Table 1). Participants were given a 13-page, A5-format booklet with written instructions on the first page and a different category, followed by eight empty lines, at the top of each of the next 12 pages. The instructions on the first page told participants to list eight different exemplars for each of the 12 given semantic categories. They were also asked to write the exemplars in the order that they thought of them. The order of category presentation within the booklet was determined randomly for each participant so as to reduce order effects. Data collection took place in a classroom where all of the participants were present at the same time.

Recording procedure

Responses for the 12 categories from the 62 booklets were manually entered into an Excel spreadsheet that included their ranking order in each category for every participant. We collected 5,761 responses, because some participants failed to give eight responses per category. About 1 % of the responses were excluded due to illegible handwriting. We also eliminated legible responses that were clearly category nonmembers, as well as synonyms and superordinate concepts (i.e., we discarded responses such as foresta/wood in response to the <piante/plants> category). Concepts at the subordinate level were replaced by corresponding basic-level concepts (i.e., if a participant listed pitbull for the <animali/animals> category, we recorded the entry as cane/dog) only if three independent native Italian speakers (M.M., E.A., and a naïve speaker) reached unanimous consensus. We then added information about the abstraction level of each recorded concept. The intercoder reliability was very high, with a Cohen’s κ value of .89 (N = 824; p < .0001).

Results and discussion

The data set was composed of 5,444 words or, without taking into account those repeated across participants, 824 distinct concepts belonging to the 12 semantic categories. The average number of concepts per category was 7.32 (SD = 1.01), ranging from 6.68 (SD = 1.62), for the <suppellettili/furnishing and fittings> category, to 7.73 (SD = 1.07), for the <vestiti/clothes> category. The estimated reliability of the data was very high (.966, range = .954–.975).

We calculated the dominance value of each concept as the proportion of the participants (out of 62) who produced a determinate concept in response to a given category label and the mean output position of each concept for the corresponding category (mean rank order). Together, these measures reflect the accessibility of a concept for a given category. In previous research, the order of production has been neglected by studies of semantic memory organization. In fact, only Ruts et al. (2004) found a significant correlation between generation frequency and the mean rank position of the exemplars.

Successively, we computed the first-occurrence value—that is, the proportion of participants who produced a given concept as their first response (Bueno & Megherbi, 2009; Van Overschelde et al., 2004). This was computed by dividing the number of participants who produced a given concept as the first response for a given category by the total number of participants. Finally, we calculated the availability of each concept in response to the category label (Izura et al., 2005), a measure that represents the readiness with which a concept is produced as a member of a given category, by taking into account its position in a given category for each participant, its production frequency within a category, the lowest position in which it was produced across participants, and the total number of participants who responded with it. The formula (adapted from Lopez-Chavez & Strassburger-Frias, 1987) is

where z i is the accessibility of the concept i for a given category, p is the position of that concept in that category, n is the lowest position occupied by that concept for that category, f pi is the number of participants who produced the concept i at the position p in that category, and N is the total number of participants. We included all of the aforementioned variables for all concepts with a dominance value higher than .1 (i.e., concepts produced by at least 10 % of our participants) in the C-C_Pairs.xls file. As expected, all four of the measures described above were significantly correlated, reflecting the strength of the relationship between an exemplar and its corresponding category. In particular, exemplar dominance, availability, and first occurrence were all strongly and positively correlated (rs = .95, .76, and .90 for dominance vs. availability, dominance vs. first occurrence, and availability vs. first occurrence, respectively; N = 824; ps < .0001), whereas the mean rank order of production correlated weakly and negatively with the other three measures (rs = –.22, –.29, and –.28 for dominance, availability, and first occurrence, respectively; N = 824; ps < .0001).

In order to select the best exemplars for the construction of our norms, we chose to retain only those concepts produced by at least seven participants (i.e., those with a dominance value higher than .1). This cutoff criterion resulted in the inclusion of at least 12 exemplars per category (range 12–19; M = 15.75, SD = 2.05). In order to select the same number of exemplars for each category, we then selected the 12 concepts per category with the highest availability values (these concepts have a value of 1 in the [Final_Dataset] variable in the C-C_Pairs.xls file) for Experiments 3a and 3b. Moreover, we chose to exclude the <cibi/food> and <passatempi/hobbies> categories from Experiments 3a and 3b because these categories included a high number of exemplars that could be included in other semantic categories or that belonged to both the natural and artifact domains.

Experiment 3a

Concept familiarity represents the degree to which people have heard, read, or otherwise been exposed to a given concept in their lives, and it has been proposed that semantic knowledge about any given concept is reflected, at least in part, by the degree of familiarity with that concept (Marques, 2007; see also Funnell, 1995; Glass & Meany, 1978). For example, Glass and Meany showed that knowledge of some unfamiliar concepts (e.g., mite) is restricted to the information about category membership (e.g., “is an insect”). Although familiarity is most often considered a nuisance variable, several studies have highlighted its influence as a statistical variable in tasks requiring word processing (e.g., Gernsbacher, 1984) as, for example, in feature verification tasks (McRae et al., 1999; McRae et al., 1997).

Note that the concept of familiarity is not independent from typicality, and therefore it is not easy to determine the exact contributions of these two variables in experimental results (see McCloskey, 1980, for a detailed discussion). An exemplar’s typicality, also known as goodness of example, representativeness, and prototypicality, reflects how representative an exemplar is of a category (Hampton, 2007) and has been explained by both prototype models (Hampton, 1979; Rosch & Mervis, 1975) and exemplar models (Heit & Barsalou, 1996; Storms et al., 2000). Typicality is usually measured by asking participants to rate, on a numerical scale, the degree to which a category item is a representative member of a category. The impact of typicality is evident in categorization tasks: The more typical an exemplar of a category is, the more quickly it is verified as being a member of that category (e.g., Larochelle & Pineau, 1994; Rosch, 1975). Several studies have shown that typicality is an influential variable that explains a wide range of behavioral findings, including priming effects (Rosch, 1975), categorization probability (e.g., Hampton, Dubois, & Yeh, 2006), inductive inference (e.g., Osherson, Smith, Wilkie, López, & Shafir, 1990), memory interference effects (Keller & Kellas, 1978), and semantic substitutability (Rosch, 1977), as well as performance in production (Hampton & Gardiner, 1983) and naming (Dell’Acqua, Lotto, & Job, 2000) tasks.

Method

Participants

A group of 120 native Italian speakers who had not participated in the previous experiments participated in this experiment. All of the participants gave informed consent before beginning the experimental session. They were equally divided into two groups: the first (mean age 26.4 years, range 21–56) performed a familiarity rating task, and the second (mean age 26.4 years, range 18–51) a typicality rating task.

Materials and collection procedure

Familiarity with a concept (i.e., “How familiar are you with the thing the word refers to?”) was rated using a Likert-type 10-point rating scale, with 1 corresponding to not familiar at all and 10 to extremely familiar. The typicality of a concept for the corresponding category was also rated using a Likert-type rating scale, ranging from 1 (extremely atypical exemplar) to 10 (extremely typical exemplar). In both tasks, every participant provided ratings for five of the ten categories (60 concepts), so that each concept was rated by 30 different participants. The order of concepts was randomized for each category, and the assignment of categories to participants was pseudorandomized, with the restriction that each participant received either one or two categories among the three that belonged to the natural domain: <animali/animals>, <piante/plants>, and <parti del corpo/body parts> .

Results and discussion

In the Concepts.xls file, we provide the mean familiarity and typicality ratings (the variables [Familiarity] and [Typicality]) for each concept. As expected, a feature’s rated familiarity and typicality were highly correlated (r = .77; N = 120; p < .0001). The estimated reliability of the ratings of both familiarity and typicality were quite high (.93 and .88, respectively). The reliability of the familiarity ratings was also assessed by correlating the values collected from our participants with those reported by Della Rosa, Catricalà, Vigliocco, and Cappa (2010), for the 28 words found in both sets of norms, and with those of McRae et al. (2005), with which we held 61 concepts in common. The significant correlations (rs = .86 and .69, respectively; ps < .001) further indicated that concept familiarity values were highly reliable.

Experiment 3b

Although it is widely accepted that concepts cannot be defined by a list of singly necessary and jointly sufficient features, semantic features provide a vast amount of information about the representation of semantic concepts (Cree & McRae, 2003; Garrard et al., 2001; McRae et al., 2005), especially regarding their coherence (Sloman, Love, & Ahn, 1998), similarity (Markman & Gentner, 1993; Markman & Wisniewski, 1997), and cognitive economy in hierarchical taxonomies (Collins & Quillian, 1969; Smith et al., 1974).

Experiment 3b was designed to provide normative data for 120 concrete concepts from the ten different concept classes resulting from Experiments 1 and 2. We collected normative data by asking native Italian speakers to perform a feature generation task similar to the one that has been used for other norms (De Deyne et al., 2008; Kremer & Baroni, 2011; McRae et al., 2005; Vinson & Vigliocco, 2008; Zannino et al., 2006).

Method

Participants

A group of 417 volunteers (126 male, 291 female), principally undergraduate psychology students at the University of Chieti, participated in Experiment 3b. Their ages ranged from 18 to 35 years (M = 24.6, SD = 3.6), and the mean years of education were 13.6 (SD = 1.6). All of the participants were native Italian speakers and were naïve as to the purpose of the study.

Materials and collection procedure

The stimuli came from the ten artifact and natural categories, with the 12 exemplars in each retained from Experiments 1 and 2, for a total of 120 words. The words were randomized and subdivided into norming forms, each of which contained five concepts belonging to five different categories. This subdivision was done for a number of reasons: to keep participants from producing features for multiple semantically similar concepts or exploiting explicit comparisons among concepts belonging to the same category; to avoid order effects; and to leave as much time as needed to complete the feature-listing task. After completing the first feature-listing task, participants were free to complete a second feature-listing task with the remaining five categories. Thus, each participant listed the features for either five or ten concepts (215 and 202 participants, respectively), and each concept were listed by a minimum of 25 participants (and at most 28; the mean number of participants per concept was 25.78).

Forms were distributed during psychology classes at the University of Chieti and collected in our laboratory. The participants received a form with written instructions at the top and five concepts, followed by ten blank lines on which to list features. The instructions avoided excessive constraints, and participants were asked to define and describe each word on the list by using features. The participants were asked to enter one descriptive word or phrase per line and to try to list at least six features for each target word. An example was provided.

Transcription and labeling

The data consisted of a large quantity of features for each concept listed by participants. We interpreted, organized, and labeled the feature set as follows: Participants often produced nonelementary pieces of information, such as adjective–noun or adjective–adjective composite attributes (e.g., “ha quattro gambe/has four legs” and “è gialla e nera/is yellow and black” for tigre/tiger), disjunctive features (e.g., “singolo o matrimoniale/single or double” for letto/bed), or compound phrases (e.g., “usato per trasportare persone/used to transport people” for autobus/bus). In these cases, we broke nonelementary attributes down into elementary pieces of information, assuming that the participant had provided two pieces of information. In less obvious cases (e.g., when talking about a tiger, “è giallo e nero/is yellow and black” has a different meaning than “è giallo/is yellow” and “è nero/is black”), three native Italian speakers (M.M., E.A., and a naïve speaker) judged whether the multiple terms taken together had a different meaning than the terms considered separately. Terms were divided only if the three speakers reached unanimous consensus. Quantifiers relative to the occurrence frequency of a given feature in a given concept were always dropped from the participants’ responses—assuming, in line with McRae et al. (1997), that the information provided by these quantifiers would be indexed by production frequency. Finally, to ensure coherent recording of synonymous features, both within and across concepts, synonymous features were recorded under the same label (e.g., “spazioso/spacious” and “ampio/roomy” were both recorded as “spazioso/spacious”) only when the three aforementioned raters reached unanimous agreement. Instead, to distinctly label features that differed in meaning but were defined by the same word, we followed the criterion used by McRae et al. (2005). We interpreted responses conservatively, without modifying feature names, to avoid potential ambiguity (see McRae et al., 2005). We also used a number of key words and phrases to organize and code the features in order to indicate their feature type; some examples are “un carnivoro/a carnivore” (taxonomic feature); “richiede un biglietto/requires a ticket,” “si trova in casa/found in houses,” and “produce latte/produces milk” (encyclopedic features); “fatto di plastica/made of plastic” (sensorial feature); or “usato per scrivere/used to write” and “usato dai fumatori/used by smokers” (functional features).

After modifying the participants’ responses, we added information regarding the order of production and entered the data in an exemplar-by-feature intensity matrix, with each cell corresponding to the production frequency of a feature for a given concept. This value represented the number of participants who listed that feature for that concept, ranging from 0 to 25–28, with the upper limit depending on the concept. Idiosyncratic features—those whose production frequency did not reach a value of 4 in at least one concept in the set—were discarded. We chose an inclusion cutoff that was slightly different in magnitude from that used with McRae et al.’s (2005) norms, but the cutoffs were similar in terms of relative dominance. In fact, McRae et al. (2005) chose an inclusion cutoff of five of 30 participants who listed each concept (see also Zannino et al., 2006), corresponding to a relative dominance of 16.7 %. We, on the other hand, used a cutoff corresponding to an average relative dominance of 15.5 %.

The included features were then classified into feature types according to the kinds of information that they represented. In the main classification, all features were classified into basic knowledge types in line with McRae et al.’s (2005) criteria: A feature was defined as sensorial if it represented information regarding visual (visual–color, visual–form and surface, or visual–motion) or other (sound, tactile, smell, or taste) primary sensory systems, and as functional if it referred to functional/motor information regarding the ways in which people interact with the object. In particular, the functional label was applied to features that represented information regarding what an entity is used for by people, the agent who typically interacts with an exemplar, or the action or motor movement associated with the interaction with an entity (see Cree & McRae, 2003, for a detailed discussion; see also Zannino et al., 2006). Thus, in line with McRae et al.’s (2005) procedure, we did not consider a feature as functional if it referred to “what an item does”, but only if it referred to “what it is used for”. In this manner, we teased apart two types of information that Farah and McClelland’s (1991) original definition considered comparable. Moreover, a feature was classified as encyclopedic if it described some other type of knowledge or associative relationship. Finally, we added the taxonomic feature type to McRae et al.’s (2005) classifications, because participants often listed features regarding the superordinate category of a concept or subordinate and coordinate concepts as well.

All features were independently labeled by two native Italian speakers (M.M. and E.A.), and disagreements were mediated by a third colleague. The agreement between our labeling and that of the secondary rater was high, with a Cohen’s κ value of .94 (N = 730; p < .001).

Measures and statistics

The following sections describe the measures that we collected for the norms, their past application, and the statistics calculated from them. Note that, where possible, we also calculated (and included in our files) the same distributional measures excluding all taxonomic features, according to the assumption that this type of knowledge provides a somewhat different type of information (mainly referring to the superordinate category of a concept) as compared to other knowledge types (see McRae et al., 2005). However, for ease of reading, we will not discuss the measures calculated here without also considering taxonomic features.

Overall database characteristics

Overall, we collected data for 23,696 features or, excluding those repeated across participants, 4,481 distinct features. Each participant listed features for 7.66 concepts, on average (SD = 2.62), while the average total number of features obtained for a concept was 197.47 (SD = 22.51). By applying our production frequency cutoff for feature inclusion, the total set was reduced to 730 unique features, yielding 1,715 concept–feature pairs. Finally, the average number of distinct features per concept ([n_Feat] in the Concepts.xls file) was 14.29 (SD = 3.24). In particular, the latter index provides information about the semantic richness of the conceptual representation—that is, the amount of information that a concept can evoke—and its importance in the semantic system has been proven by various studies (for a review of this topic, see Pexman, Hargreaves, Siakaluk, Bodner, & Pope, 2008)

Concept lexical measures

Concept-level measures for the 120 concepts are presented in the Concepts.xls file. Column names and a short explanation are presented in Appendix C. The first four columns contain the names of each concept in Italian and English, as well as the corresponding category and domain. Next, we included the number of letters for each Italian and English word and the number of syllables for the Italian word denoting each concept. The last lexical measures were word frequency—taken from the COLFIS corpus (Corpus e Lessico di Frequenza dell’Italiano Scritto, the “Corpus and Frequency Lexicon of Written Italian”; Bertinetto et al., 2005), a three-million word corpus of contemporary written Italian—summed over a word’s singular and plural uses, and the natural logarithm of the frequency. The more frequently a word is read, heard, written, or spoken, the more often the conceptual representation of the corresponding object is computed. Thus, the frequency with which a word is encountered likely influences how easily the meaning of a word can be computed (Cree & McRae, 2003), as well as how quickly and easily that word can be recognized and produced (Duncan, 1977; Forster & Chambers, 1973; McRae, Jared, & Seidenberg, 1990). Moreover, this index correlates with both concept familiarity and word length: Lower-frequency words tend to be less familiar and longer (Graves, Grabowski, Mehta, & Gordon, 2007).

Exemplar generation measures

We included the measures reflecting the relationship between concepts and categories, computed as described above (see the Results and Discussion section of Exp. 2), in the Concepts.xls file. These four variables—[Exempl_M_Rank], Exempl_Dominance], Exempl_1ºOccur], and [Exempl_Availability]—refer to the internal structure of semantic categories and measure different aspects of categorization processes. Since the most widely studied semantic property in categorization research is typicality (Koivisto & Laine, 1999; McFarland, Duncan, & Kellas, 1978; Mervis & Rosch, 1981; Smith et al., 1974), we assessed the relationship between the four exemplar generation measures and typicality ratings. Correlational analyses showed that typicality ratings were significantly correlated with the exemplar generation measures (rs = .56, –.55, and .52 for the exemplar dominance, mean rank, and first-occurrence measures, respectively; N = 120; ps < .0001), and especially with the exemplar availability measure (r = .62; see Fig. 2). Moreover, since other variables, such as concept familiarity or word frequency, have also proven to influence the ease of exemplar production for a given category (e.g., Hernández-Muñoz, Izura, & Ellis, 2006), we also assessed the correlation between the four exemplar generation measures and familiarity ratings. We found significant correlation indexes (rs = .52, –.47, and .45 for the exemplar dominance, mean rank, and first-occurrence measures, respectively; N = 120; ps < .0001), and, again, especially with the exemplar availability measure (r = .54; see Fig. 2).

Feature dominance

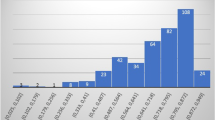

Feature production frequency, also called dominance (especially when calculated as the proportion of the total sample of participants), represents the number of participants who listed a given feature for a given entity in a feature-listing task. This index refers to the salience or importance of a feature for a given concept (Smith & Medin, 1981) and reflects the accessibility of that feature when a concept is computed from its name. In the C-F_Pairs.xls file, we have included the raw production frequency of each concept–feature pair [Prod_Fr], indicating the number of participants who listed that feature for that concept. On the basis of the number of participants presented with each concept ([n_Subj]), we calculated dominance ([Domin]) as the ratio between [Prod_Fr] and [n_Subj]. The dominance distributions for concept–feature pairs are shown in Fig. 3, which shows that a plurality of features (420, corresponding to nearly 25 %) were listed for a given concept by four participants (i.e., the production frequency cutoff that we used to include concept–feature pairs in our norms, corresponding to an average relative dominance value of 15.5 %).

Distributions of feature accessibility (green bars), dominance (blue bars), and normalized order of production (red bars) for the total set of 1,715 concept–feature pairs in Experiment 3b

We included the corresponding measures calculated for each concept, with [sum_Prod_Fr] indicating the summed frequency of production of all the features listed for a given concept, and [M_Prod_Fr] indicating the mean production frequency of the features listed for that concept (calculated by dividing [sum_Prod_Fr] by [n_Feat], the number of features listed for that concept) in the Concepts.xls file. Moreover, we calculated a [Domin] variable for every concept that indicates the average of the dominance values of all of the features listed for a given concept and that was calculated as the ratio between [M_Prod_Fr] and [n_Subj].

Feature accessibility

The order of production can also be used in the computation of the accessibility of a feature for a given concept, as well as as an index of the generation of an exemplar in a given category. Thus, feature accessibility can also be reflected in the order of generation of that feature. For example, the features “fatto di legno/made of wood,” for tavolo/table, and “lunga/long,” for sciarpa/scarf, both have the same dominance, but “fatto di legno/made of wood” was listed sooner than “lunga/long,” indicating that it was more readily accessible in semantic memory when the corresponding concept cue was presented. Therefore, the accessibility of a feature in response to a concept’s name might be independent of that feature’s absolute production frequency and might be related to a feature’s salience, defined in relation to other features.

In C-F_Pairs.xls, we included the variable [M_Prod_Ord], representing the mean order of production of each feature for a given concept. Because participants listed a different number of features, we also calculated a normalized order of production ([M_Prod_Cent]) by converting each order of production value in centiles, as explained above (see Exp. 1, Transcription and Labeling section). Thus, the [M_Prod_Cent] index assumes a maximum value of 1 for a feature listed first for a concept by a participant, and it approaches 0 for a feature listed last (see Fig. 3 for the distribution of this index across concept–feature pairs). To confirm that the order in which a feature was listed reflected the accessibility of that feature for a given concept, we performed a correlation analysis between the normalized order of production and the dominance of each feature. The Pearson’s correlation coefficient (r = .27; N = 1,715; p < .0001) was significant, confirming that features with high dominance were listed sooner, and features with low dominance were generated later.

We also calculated a new measure to reflect the accessibility of a feature in response to a concept’s name, by taking into account both the order and the frequency of production of a given feature. Assuming that a feature with an ideal accessibility would be generated first by all participants (i.e., it would have both a normalized order of production and a dominance of 1), we measured the accessibility value a ij of a feature by calculating its Euclidean distance from the point (1, 1) in the Dominance × Order of Production space. We calculated this measure ([Access] in the C-F_Pairs.xls file) for each feature j in a concept i as follows:

where x ij and y ij indicate, respectively, the dominance and the normalized order of production of a given feature j in a given concept i.

Accessibility values range from 0 to 1, with 1 indicating ideal accessibility (see Fig. 3 for the distribution of the accessibility value across concept–feature pairs). With this measure, it is possible to find two features with the same accessibility but different dominance values: For example, both the feature “annusa/sniffs” for naso/nose and the feature “usato per riscaldare/used to warm up” for cappotto/coat show the same accessibility value of .64, with the latter listed sooner but by fewer participants, as compared to the former.

Feature distinctiveness

An important property of feature-based semantic representations is that features differ in terms of how informative they are about the entity they describe—that is, some features provide more information than others in identifying a concept, by distinguishing it in general or among other similar concepts in the same category. For example, “miagola/meows” is a highly distinctive feature for gatto/cat, whereas “ha quattro gambe/has four legs” is very nondistinctive, because many things (not only cats) share this attribute. Thus, distinguishing features provide necessary or sufficient information for identifying an entity and are critical when performing tasks that require discriminating a specific concept among similar concepts, such as word–picture matching and naming from definitions or pictures.

We defined a concept’s feature as distinguishing if it was listed for only one concept in the norms (variable [Disting] in the C-F_Pairs.xls file). On the basis of this information, we included the number of distinguishing features per concept ([n_Disting_Feat]) and, by dividing this measure by the number of features listed for that concept, we also calculated the corresponding percentage of distinguishing features ([%_Disting_Feat]) in the Concepts.xls file. As can be seen in Fig. 4, the majority of features (481 out of 730, corresponding to 65.9 %) were listed for only one of the concepts, and were accordingly classified as distinguishing. Furthermore, the number of features dramatically decreased as a power function of the number of concepts for which they were listed.

Although the measures above are useful for understanding which attributes best discriminate a given concept among others, the amount of information provided by a feature about a concept’s identity falls along a continuum, and thus is not perfectly represented by a dichotomic variable. For example, “ha gambe/has legs” and “ha una coda/has a tail” are not very distinguishing features at all, whereas “ha una criniera/has a mane” is quite distinguishing, since it is shared by only two concepts in our norms, leone/lion and cavallo/horse, and only the highly distinguishing feature “usato per trainare carrozze/used to pull coaches” permits one to correctly identify the corresponding entity as a horse. Therefore, we calculated the distinctiveness, also known as informativeness, a measure that allows for a continuum representation of the amount of distinguishing information provided by a given feature for a concept. Distinctiveness values range from highly shared to highly distinguishing (Cree & McRae, 2003; Devlin, Gonnerman, Andersen, & Seidenberg, 1998; Garrard et al., 2001; McRae et al., 2005; Zannino et al., 2006). In line with Devlin et al. and McRae et al. (2005), but contrary to Garrard et al. and Zannino et al., we computed the distinctiveness of a feature (the variable [Distinctiveness] in C-F_Pairs.xls) as the inverse of the number of concepts in which the feature appears in the entire norms (i.e., as 1/[n_Concepts]), without limiting the contrast set of concepts to those within a category. In this manner, distinctiveness was defined as a continuous but nonlinear measure, since the difference in distinctiveness for a feature occurring in one versus two concepts is larger than that for a feature occurring in two versus three concepts, so that the distinguishing nature of a feature is represented by a binary measure, whereas feature distinctiveness is a continuous index. Importantly, features that appear in only one concept have distinguishing and distinctiveness values that are equal (i.e., 1). Next, for every concept, we calculated the mean distinctiveness of all of the features that composed it (the variable [M_Distinctiveness] in the Concepts.xls file).

Finally, we computed another continuous measure regarding a feature’s informativeness, the so-called cue validity (Bourne & Restle, 1959; Rosch & Mervis, 1975). This is the conditional probability of a concept, given a feature, and represents the importance of a property, or how easily it “pops up” when one thinks of a concept (Smith & Medin, 1981). We included in the C-F_Pairs.xls file the corresponding variable [Cue_Val], calculated as the production frequency of a given feature for a given concept ([Prod_Fr]) divided by the sum of the production frequencies of that feature for all concepts in which it appears in the norms ([Cum_Prod_Fr]). Again, we calculated the mean cue validity of each feature for every concept (the variable [M_Cue_Val] in the Concepts.xls file). As expected, the cue validity and distinctiveness were highly correlated (r = .99; N = 1,715; p < .0001), since the former is a measure analogous to distinctiveness, except that it additionally takes into account an estimate of feature salience. Therefore, if a feature is truly distinguishing, and thus is listed for only one concept in the norms (as “miagola/meows” for gatto/cat), it will have a maximum value (i.e., 1) for both cue validity and distinctiveness. On the contrary, if a feature is not very distinguishing, being shared by many concepts, it will have a very low score (i.e., approaching to 0) for both cue validity and distinctiveness.

Feature relevance

Previous authors have proposed a feature-based model of semantic memory containing a new measure, semantic relevance, considered the factor that “best captures the importance of a feature for the meaning of a concept” (Sartori & Lombardi, 2004, p. 440). In other words, high-relevance features are those that mainly contribute to the “core” meaning of a concept and are crucial both in identifying that concept and in discriminating it from other, similar concepts (Sartori, Gnoato, Mariani, Prioni, & Lombardi, 2007; Sartori & Lombardi, 2004).

Semantic relevance can be construed as a nonlinear combination of a feature’s dominance and distinctiveness (Sartori et al., 2007; Sartori, Lombardi, & Mattiuzzi, 2005), since it is computed as the result of two components: a local component, comparable to dominance, reflecting the importance of a semantic feature for a given concept, and a global component, comparable to distinctiveness, reflecting the importance of the same semantic feature for the whole set of concepts. For example, the semantic feature “sente/hears” is of very high relevance for the concept orecchio/ear, since all participants listed it when describing that concept, and at the same time, none of them used the same feature to define other concepts. In other words, the feature “sente/hears” for the concept orecchio/ear has a maximum score in both dominance and distinctiveness measures—that is, it has both high local importance and high global importance. In contrast, the feature “grande/big” is of low relevance for the concept orecchio/ear, because very few participants used it to describe that concept, and it was listed at the same time for many concepts. In this case, the feature “grande/big” has both low dominance and distinctiveness values. It is worth noting that relevance is different from distinctiveness. Distinctiveness is a concept-independent measure, and therefore the distinctiveness score of a feature is the same for all concepts (i.e., regardless of the specific concept in which that feature appears). Instead, relevance may be considered a concept-dependent measure, because a given feature can take different relevance scores across different concepts (i.e., depending on the concept in which that feature appears). For example, the feature “fatto di vetro/made of glass” has a low relevance score for the concepts centrotavola/centerpiece and teglia/baking pan, but it has a high relevance score for the concept bicchiere/glass.

We calculated the semantic relevance of each concept–feature pair as described in Sartori et al. (2007) (the variable [Relevance] in the C-F_Pairs.xls file). Briefly, we computed the relevance value k ij of a feature j in a concept i as follows:

where x ij indicates the dominance of a feature j in a concept i and represents the local component of k ij ; I indicates the total number of concepts in our norms (i.e., 120); I j indicates the number of concepts in which the feature j appears; and ln(I/I j ) represents the global component of k ij . Note that we used dominance instead of raw production frequency (used by Sartori, Lombardi, & Mattiuzzi, 2005) in computing relevance because the concepts in our norms were presented to a variable number of participants. We also included the measure [M_Relevance], computed as the mean relevance of each concept’s features in the Concepts.xls file.

Moreover, for each concept–feature pair, we computed a new measure that shares the same underlying principle as semantic relevance—that is, semantic significance (the variable [Significance] in the C-F_Pairs.xls file). As for relevance, this measure is composed of a local and a global component, but in this case, the local component of semantic significance corresponds to the accessibility of a feature, as described above. In this way, the importance of a given semantic feature for its corresponding concept is reflected by the accessibility. This measure accounts for both the dominance and the priority with which that feature emerged in the participants’ semantic conceptual representations. We calculated the significance score s ij of a feature j in a concept i, as in the case of relevance, but modified the local component of s ij by replacing the feature’s dominance—x ij in the Eq. 3—with the accessibility of that feature, a ij . We have included the corresponding variable [M_Significance], computed as the mean significance of each concept’s features, in the Concepts.xls file. Relevance and significance values are strongly correlated (r = .90; N = 1,715; p < .001)

Feature correlation

Features are considered correlated if they tend to appear together in the semantic representation of a concept. For example, the features “ha ruote/has wheels” and “usato per muoversi/used to move” are highly correlated, because typically, something that is used to move probably also has wheels. Thus, these two features tend to co-occur in the representation of that concept. Correlations among features represent a central factor in various models of semantic organization (Malt & Smith, 1984; Rosch, 1978; Rosch, Mervis, Gray, Johnson, & Boyes-Braem, 1976). Rosch (1978) claimed that clumps of correlated features form the basis of basic-level conceptual representations (e.g., mucca/cow or cavallo/horse), a level at which feature correlations best fit together within categories and can be used to distinguish among concepts (Rosch et al., 1976).

Feature correlations are also central in connectionist models of featural representations of word meaning (McRae et al., 1997) and are believed to play an important role in the organization of semantic memory. Feature correlations influence the early computation of word meaning in online tasks, in contrast to slow, offline tasks, which require high-level reasoning processes (i.e., similarity and typicality ratings). To test these hypotheses, McRae et al. (1997) compared performance on offline concept similarity rating and typicality judgment tasks with performance for online speeded priming semantic and feature verification tasks. They found that feature correlations predicted performance only in online tasks. Other studies replicated this finding in a feature–concept priming paradigm: Strongly intercorrelated features primed lexical decisions to target concepts significantly more than weakly intercorrelated features did (Taylor, Moss, Randall, & Tyler, 2004).

We calculated several variables based on the correlational structure of feature representations. First, we constructed a Feature × Concept intensity matrix, with 730 rows (one for each feature) and 120 columns (one for each concept) in which each cell corresponded to the production frequency of a given feature for the corresponding concept (see the Fr_Production sheet in the C-F_Matrix.xls file). In line with McRae et al. (2005; McRae et al., 1997), we chose to exclude from the correlational analyses features that occurred in only one or two concepts in the norms, in order to avoid spurious correlations. In fact, including very distinguishing features would have caused an artificial increase in the number of perfectly correlated feature pairs. We then performed correlational analyses on the resulting 161 × 120 Feature × Concept matrix by computing the Pearson product–moment correlation coefficient for each resulting pairwise combination of feature vectors. A feature pair was considered correlated if the features were associated by |r| ≥ .234, indicative of a significant correlation at the p ≤ .01 level, and thus shared at least 5.5 % of their variance (i.e., with r 2 ≥ .055). Finally, in line with McRae et al. (2005), we calculated a number of concept-dependent measures indicating the degree to which a concept’s features were intercorrelated (see Zannino et al., 2006, for a comparison of concept-dependent and concept-independent measures of feature correlation).

We included the variable [n_Correl], which indicates, for a given feature, the number of features occurring in the same concept (and in at least two further concepts) with which that feature is significantly correlated, in the C-F_Pairs.xls file. Then we calculated the percentage of significant correlation [%_Correl] based on [n_poss_Correl] for each feature, which in turn indicates the number of possible pairwise combinations for a feature (i.e., for each feature, the number of other features occurring in the same concept, excluding those listed for only one or two concepts). In the same manner, we included [n_Correl_Pairs], indicating the number of significantly correlated feature pairs per concept, and [%_Correl_Pairs], indicating the percentage of correlated feature pairs per concept, and calculated by dividing [n_Correl_Pairs] by [n_poss_Correl_Pairs], which in turn indicates the number of possible pairwise combinations of features that appeared in that concept and were listed for three or more concepts, in the Concepts.xls file.

Second, we included in the C-F_Pairs.xls file a measure of the intercorrelational strength of a given feature for its corresponding concept, [Intercorr_Str], which indicates the degree to which a feature is correlated with the other features that occur in the same concept and is computed by summing the proportions of shared variance (r 2) between that feature and each of the other features occurring in the same concept with which it is significantly correlated (McRae et al., 2005). Because this measure is no longer a percentage (being actually a sum of percentages), we also calculated [M_Intercorr_Str] by computing the ratio between [Intercorr_Str] and [n_Correl]. Similarly, in the Concepts.xls file we included [Intercorr_Density], a measure of the amount of intercorrelation between features occurring in a given concept, calculated by summing the proportions of shared variance across all significantly correlated feature pairs of that concept. Again, to have a somewhat standardized measure, we also included the measure [M_Intercorr_Density], which is calculated by dividing [Intercorr_Density] by [n_Correl_Pairs].

Feature type distribution

As is described above (see the Recording Procedure section of Exp. 2), we first classified each feature into four principal types of knowledge, according to the kind of information that it represented (i.e., sensorial, functional, encyclopedic, or taxonomic). This gave us a measure of the importance of the type of knowledge for each concept (see Cree & McRae, 2003, for a detailed discussion). This information about each feature’s main knowledge type was included in the C-F_Pairs.xls file as [Main_Feat_Type]. We also included [Det_Feat_Type], representing a more detailed classification in which each feature was classified into one of the nine knowledge types defined by Cree and McRae. On the basis of the main classification in knowledge types, we also computed four variables representing the number of features of each of the four principal knowledge types that were listed for each concept. These measures were included in the Concepts.xls file and were called [n_Sens_Feat], [n_Funct_Feat], [n_Encyc_Feat], and [n_Taxon_Feat]. We also computed the corresponding percentages ([%_Sens_Feat], [%_Funct_Feat], [%_Encyc_Feat], and [%_Taxon_Feat]) by dividing each of the four aforementioned measures by [n_Feat]—that is, the total number of features listed for each concept (see Fig. 5a).

The supposed difference between living and nonliving things in the distribution of features representing different types of knowledge depends on the precise definition of sensorial and functional information. In fact, different definitions have revealed discrepancies in the literature (Caramazza & Shelton, 1998; Farah & McClelland, 1991; Garrard et al., 2001; McRae & Cree, 2002; Vinson & Vigliocco, 2008; Zannino et al., 2006). As far as functional features are concerned, in contrast with Farah and McClelland and in line with Garrard et al., we chose to use a quite restrictive classification (see also McRae et al., 2005, and Zannino et al., 2006). In this manner, however, it was unlikely that a living concept could assume a functional feature, and this may have artificially caused the difference in the distributions of sensorial and functional features across semantic domains. For this reason, we performed an additional classification of all features according to the sensorial and functional definitions proposed by Garrard et al. These authors defined features that can be appreciated in a sensorial modality as sensorial, and those that describe an action, activity, or use of a given item as functional. We included this feature-based information in the C-F_Pairs.xls file in the variable [Feat_Type(Garrard)]. We also included the number and the percentage of features of each of the four principal types of knowledge listed for each concept (according to Garrard et al.’s classification), in the Concepts.xls file (see Fig. 5b).

Concept distance

Semantic representations can be characterized as points in a multidimensional space in which dimensions are defined by features. In these spatial representational models, the similarity between a pair of concepts is defined as the proximity between the pair of corresponding points: The closer that two concepts are, the more similar their semantic representations. Different measures of semantic similarity have been used to predict graded semantic effects (i.e., effects that reflect the degree of similarity between concepts, rather than simply a related/unrelated dichotomy), such as performance in a category-learning task (Kruschke, 1992), category verification latencies (Smith et al., 1974), semantic priming effects (Cree et al., 1999; Vigliocco et al., 2004), and semantic interference effects in object naming (Vigliocco, Vinson, Damian, & Levelt, 2002).

In psychological research, semantic similarity has typically been measured subjectively by asking participants to rate the pairwise similarity of a set of concepts. However, when the stimulus set is quite large, rated pairwise similarities are not feasible, due to the extremely large number of stimulus pairs. In this case, semantic distance measures can be computed from an Exemplar × Feature matrix either by correlating the exemplars’ feature vectors or by computing Euclidean or cosine distances between the exemplars’ feature vectors, as well as in sorting tasks, direct and triad ratings, conditional probability ratings, and proximity ranks in generation tasks (see Borg & Groenen, 2005, for a review).

We chose to compute the different concept distance measures directly from our feature norms, starting from the raw Feature × Concept matrix. The first semantic distance measure that we defined was the cosine distance between two concepts—that is, the complement of the cosine of the angle between two feature vector representations. The Cos_Domin sheet in the Distance_Matrix.xls file is a 120 × 120 matrix of cosine distances between each pairwise combination of concepts in our norms. This matrix was calculated from the 730 × 120 Feature × Concept dominance matrix, in which each cell corresponds to the dominance of a given feature for its corresponding concept. We then calculated the cosine distances d Cos(m, n) as the complement of the dot product between two concept vectors, divided by the product of their length, as follows:

where x mj and x nj represent dominance values of the feature j for the concepts m and n, respectively, and J indicates the number of features. Cosine distance values range from 0 (identical concepts: minimum distance, total similarity) to 1 (concept vectors are orthogonal: maximum distance, no similarity) and provide a symmetric and nonlinear measure of proximity that reflects the similarity between active features in a given pair of concepts, emphasizing shared features.

We also calculated two further familiar and commonly used geometric measures—that is, Euclidean and city block distances—that are well-known Minkowski metrics (see the Euc_Domin and City_Domin sheets in the Distance_Matrix.xls file). The Euclidean distance d Euc(m, n) was calculated as

whereas the city block distance d City(m, n), which emphasizes distinctive features (e.g., Dry & Storms, 2009), was calculated as

For both Euclidean and city block distances, highly similar concept pairs assume values approaching zero. We also included three other distance matrixes (the sheets Cos_Binary, Euc_Binary, and City_Binary) in the Distance_Matrix.xls file that were similar to the three just described, except that they were computed from the 730 × 120 Feature × Concept applicability matrix, in which each cell assumes a value of 1 if a given feature is listed for the corresponding concept, or otherwise the cell assumes a value of 0.

Next, for each distance measure, we computed the mean distance between a given concept’s feature vector and the feature vectors for every other concept in the norms ([M_Cos_Domin], [M_Euc_Domin], [M_City_Domin], [M_Cos_Binary], [M_Euc_Binary], and [M_City_Binary] in the Concepts.xls file). In particular, the [M_Cos_Binary] measure represents the density of a concept’s distant neighborhood (see Mirman & Magnuson, 2008, for the opposite effects of near and distant neighbors on word processing), since it is strongly correlated with the total number of a concept’s neighbor (the variable [n_Neighb] in the Concepts.xls file)—that is, the number of concepts that share at least one semantic feature with that concept (r = .90; N = 120; p < .0001). We then calculated the variable [M_Cos_Near_Neighb] for each concept as the mean cosine proximity of its four closest semantic neighbors. This measure represents the degree of a concept’s potential confusability (Cree & McRae, 2003) and reflects the influence of its near semantic neighborhood.

Conclusion

In our study, we have described norms for a large set of concrete concepts in Italian, together with descriptive statistics and participants’ ratings. Importantly, our norms differ from existing norms in that the concepts and categories were empirically selected, while those in past literature have been based on published databases or chosen a priori by the experimenters. Our method of selecting categories and concepts identified two properties missing from existing norms: Some categories neglected in the literature were present in the semantic memory of our participants, and a larger presence of artifacts than of natural concepts emerged.

Semantic norms provide valuable information about the representations of categories and concepts and have served as the basis to account for several empirical phenomena. In line with this, we have added new measures to better clarify feature representations in semantic memory—that is, featural accessibility and significance. These indexes are measures of the informativeness of the features that best capture the importance of a property for a given concept. In summary, we believe that these norms may be a useful tool in several areas of research. In particular, we propose that they can be used to verify models and theories about the organization of semantic knowledge and about conceptual representation.

References

Ashcraft, M. H. (1978). Property dominance and typicality effects in property statement verification. Journal of Verbal Learning and Verbal Behavior, 17, 155–164. doi:10.1016/S0022-5371(78)90119-6

Barsalou, L. W. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22, 577–660. doi:10.1017/S0140525X99002149

Barsalou, L. W. (2008). Grounded cognition. Annual Review of Psychology, 59, 617–645. doi:10.1146/annurev.psych.59.103006.093639

Barsalou, L. W., Simmons, W. K., Barbey, A. K., & Wilson, C. D. (2003). Grounding conceptual knowledge in modality-specific systems. Trends in Cognitive Sciences, 7, 84–91. doi:10.1016/S1364-6613(02)00029-3

Battig, W. F., & Montague, W. E. (1969). Category norms for verbal items in 56 categories: A replication and extension of the Connecticut category norms. Journal of Experimental Psychology, 80(3, Pt. 2), 1–46. doi:10.1037/h0027577

Berkhin, P. (2006). Survey of clustering data mining techniques. In J. Kogan, C. K. Nicholas, & M. Teboulle (Eds.), Grouping multidimensional data: Recent advances in clustering (pp. 25–71). New York: Springer. doi:10.1007/3-540-28349-8_2