Abstract

A previous study (Schneider, Daneman, Murphy, & Kwong See, 2000) found that older listeners’ decreased ability to recognize individual words in a noisy auditory background was responsible for most, if not all, of the comprehension difficulties older adults experience when listening to a lecture in a background of unintelligible babble. The present study investigated whether the use of a more intelligible distracter (a competing lecture) might reveal an increased susceptibility to distraction in older adults. The results from Experiments 1 and 2 showed that both normal-hearing and hearing-impaired older adults performed poorer than younger adults when everyone was tested in identical listening situations. However, when the listening situation was individually adjusted to compensate for age-related differences in the ability to recognize individual words in noise, age-related difference in comprehension disappeared. Experiment 3 compared the masking effects of a single-talker competing lecture to a babble of 12 voices directly after adjusting for word recognition. The results showed that the competing lecture interfered more than did the babble for both younger and older listeners. Interestingly, an increase in the level of noise had a deleterious effect on listening when the distractor was babble but had no effect when it was a competing lecture. These findings indicated that the speech comprehension difficulties of healthy older adults in noisy backgrounds primarily reflect age-related declines in the ability to recognize individual words.

Similar content being viewed by others

Introduction

Conducting a conversation in everyday situations often is quite challenging both perceptually and cognitively. For example, imagine that you have arranged to have lunch with your nephew who has been out of the country for 3 years. Both of you are interested in catching up on what each other has been doing. When you arrive at the restaurant just shortly before noon, there is music playing in the background, and the waiter seats you at a table with a good view but one that is near the air conditioner. Hence, the background noise level makes it somewhat difficult to hear one another. Unfortunately, just shortly after you are seated, the regular noonday patrons begin to arrive, and soon the multiple conversations that ensue create a babble of voices. Finally, to make matters worse, a member of the company you work for, who doesn’t know you, is seated at the table next to you and begins to talk with his lunch partner about impending layoffs at your firm. As a result, you and your nephew have to raise your voices to be heard, and both of you find it increasingly difficult to follow, comprehend, and digest what the other is saying. As a result, you miss much of what your nephew said, and your lack of understanding makes the conversation both difficult and uncomfortable.

The preceding scenario illustrates how competing sound sources—the air conditioner, a babble of voices, and a single person talking—can interfere with communication and social interaction in our everyday life, and this may be especially exacerbated for an older person. All three of these sound sources present a perceptual challenge to the extent that the energy in them overlaps that of the target voice both temporally and spectrally. This kind of interference, in which the competing sound sources simultaneously activate overlapping regions along the basilar membrane of the inner ear, thereby overwhelming the signal, often is referred to as energetic masking. Competing sound sources, in addition to producing energetic masking, also can interfere with comprehending the target speech at levels beyond the periphery. For example, the babble of background voices, in addition to energetically masking the speech target, could elicit phonemic activity. This competing activation could make it more difficult to access the meaning of the words in the target speech. Because this interference is occurring after basilar membrane processing of the auditory input, it is classified as informational interference or informational masking (Durlach et al., 2003). In contrast, because a steady-state noise is unlikely to elicit any lexical activity, its effects on performance are considered to be primarily energetic.

Informational masking can also occur when the speech target is being processed at higher-level linguistic and cognitive levels. For example, the overheard conversation at the other table could compete with your nephew’s voice for your attention. Hence, any sound that competes with the speech signal for a listener’s attention could interfere with the processing of the speech signal because of the demands it places on working memory resources. All simultaneously presented competing sounds will energetically mask the speech signal. In addition, any competing sound that elicits linguistic activity, or diverts attention from the target speech, is likely to produce informational masking. We investigated the extent to which two types of informational maskers—multi-talker babble versus a single competing talker—interfere with a listener’s ability to comprehend spoken language and whether there any age differences with respect to the degree of interference produced by these two types of maskers.

These two types of maskers (babble vs. competing talker), in addition to energetically masking the speech target, are likely to produce different amounts of informational masking. Because individual words are not, in general, recognizable in multi-talker babble, the degree of informational interference is likely to be considerably less than that produced when the masker is single-talker intelligible speech. In the latter case, the competing signal is much more likely to compete with the target speech for the listener’s attention, and initiate higher-order, post-lexical processing of the competing speech. For instance, when the language of the competing talker is understood by the listener, it produces a greater degree of interference with speech recognition, comprehension, and memory than when the competing speech is in a language foreign to a listener (Tun, O’Kane, & Wingfield, 2002; Rhebergen., Versfeld,, & Wouter, 2005; Van Engen & Bradlow, 2007; Van Engen, 2010; Brouwer, Van Engen, Calandruccio, & Bradlow, 2012). It also is the case that competing speech in the same language is a less effective masker when it is heavily accented than when it is not (Calandruccio, Dhar, & Bradlow, 2010). Because competing speech in the listener’s native language is more likely to compete for her or his attention than a multi-talker babble, and because it is likely to elicit competing activity at higher levels of linguistic and cognitive processing, it is a more potent informational masker than a babble of unintelligible voices.

Another factor that is likely to increase the potency of a competing speech masker is the acoustic similarity between the target voice and the competing voice. For example, Humes, Lee, and Coughlin (2006) have shown that informational masking is greater when the competing talker is of the same gender as the target talker. In addition, a number of studies have shown that informational masking effects are maximal when the speech target and the competing speech appear to originate from the same point in space (Freyman, Helfer, McCall, & Clifton, 1999; Freyman, Balakrishnan, & Helfer, 2001). Li, Daneman, Qi, & Schneider (2004) investigated the degree to which spatially separating a target sentence from two competing sentences provided a release from masking and found that younger and older adults reaped the same benefit from spatial separation. To investigate whether there were age differences when the acoustic similarity between masker and target sentences was increased, Agus, Akeroyd, Gatehouse, and Warden (2009) had both target and masker sentences spoken by the same person. These investigators found that younger and older adults benefited to the same degree when the informational content of the competing sentence was reduced by replacing the competing sentence with an amplitude modulated noise having the same amplitude envelope as the sentence, but being unintelligible. Hence these two studies suggest that when sentence level material is used, the amount of informational masking is the same for younger and older adults (for a review of informational masking of speech by speech using sentence level material, see Schneider, Li, & Daneman, 2007; Schneider, Pichora-Fuller, & Daneman, 2010).

While there have been many studies of how various types of maskers affect the recognition and comprehension of single words and short sentences (Humes, Kidd, & Lentz, 2013; Schneider, Pichora-Fuller, & Daneman, 2010), relatively little is known about the effects of babble and competing speech on the comprehension of the kinds of complex and extended spoken discourse that are encountered in everyday situations. To ensure greater ecological validity, in this study the target speech consisted of lengthy lectures (8-13 minutes in length) on familiar topics (e.g., careers, food, speech making), and the masker was either the same kind of lengthy lecture, or 12-talker babble. To maximize the degree of informational masking in this study, both the target lecture and competing lecture were in the same voice and were presented over the same earphone.

Schneider et al. (2000) investigated whether there were age-related differences in comprehending lengthy lectures in a background of 12-talker babble and whether these differences reflected age-related declines in hearing or cognition. They asked normal-hearing younger and older listeners and hearing-impaired older listeners to answer questions concerning the content of lectures (8-13 minutes in length) either in quiet or a background of 12-talker babble. Some of the questions were about the specific details of the lecture, and some were more integrative and general. To determine the effect of hearing capacity on performance, in one experiment the signal level and babble level were individually adjusted to ensure that each participant was equally likely to hear individual words without the help of context (hereafter referred to as recognition of the individual words being spoken), whereas in another experiment no adjustment was made, so both the target lecture and babble distractor were presented at the same levels to all listeners. The results showed that when target levels and signal-to-noise ratios (SNRs) were individually adjusted in both younger and older adults to make it equally difficult for all individuals to recognize the individual words, good-hearing younger and older adults, as well as hearing-impaired older adults, were equally adept at answering questions when the lectures were presented either in quiet or in a background babble. However, when no adjustments were made, neither older group could recall as many details of the lectures as their younger counterparts did. The study suggested that older adults can perform as well as younger adults in listening comprehension tasks even when the target listening materials are relatively complex, as long as they can hear the individual words comprising the lecture as well as can their younger counterparts. However, when older listeners’ ability to recognize individual words is impaired for any reason, their ability to comprehend speech will be compromised.

In Schneider et al. (2000), the masker was unintelligible 12-talker babble, in which individual words were largely unrecognizable, and both the spectrum of the babble masker and its amplitude envelope differed substantially from that of the lecturer’s voice. This naturally raised the question as to whether age differences would emerge if a more meaningful and acoustically similar distractor was used as a masker. Older adults could be more distracted by complex and meaningful competing stimuli than are younger adults because of age-related declines in inhibitory control. A number of studies have shown that aging is associated with a reduced ability to ignore irrelevant information that is presented visually (Hasher & Zacks, 1988; Lustig, Hasher, & Zacks, 2007) or aurally (Bell, Buchner, & Mund, 2008). In a typical reading-with-distraction experiment (Carlson, Hasher, Zacks, & Connelly, 1995; Connelly, Hasher, & Zacks, 1991; Darowski, Helder, Zacks, Hasher, & Hambrick, 2008; Dywan & Murphy, 1996; Kim, Hasher, & Zacks, 2007), younger and older participants are required to read short prose passages out loud, while distracting words are interspersed into the text with a distinct font style (upright font) different from the target words (italic font). The participants’ task is to attend only to the target texts and ignore the distractor. It is usually found that older adults’ reading speed is disproportionately slowed down by the distracting words than is that of their younger counterparts compared with baseline (reading with no distracting words), and older adults are less likely to correctly recall or identify aspects of the target passage.

However, few of the studies that have claimed that older adults are more susceptible to informationally complex distractors took age-related sensory decline into consideration. Indeed, previous studies have indicated that age-related declines in spoken word recognition are responsible for most—if not all—of older adults’ difficulty in hearing in noise (Avivi-Reich, Daneman, & Schneider, 2014; Divenyi & Haupt, 1997a, 1997b; Helfer & Wilber, 1990; Humes & Christopherson, 1991; Humes & Roberts, 1990; Jerger, Jerger, & Pirozzolo, 1991; McCoy et al., 2005; Murphy, Daneman, & Schneider, 2006; Murphy, McDowd, & Wilcox, 1999; van Rooij, Plomp, & Orlebeke, 1989). Therefore, in the present study we used the same procedure as in Schneider et al. (2000) to adjust individually the listening situation (by manipulating the target level and the SNR), so that both younger and older adults were equally likely to recognize the individual words being spoken. We hypothesize that if comprehension in older adults is primarily limited by their inability to recognize the individual words being spoken, age difference should be minimized if not eliminated in the present task when age-related differences in word recognition are no longer a factor, even though the distractor in the present study is acoustically similar to the target and semantically meaningful. However, it has been argued that older adults are less able to inhibit the processing of irrelevant but meaningful information (e.g., the inhibitory deficit hypothesis; Hasher & Zacks, 1988). Consequently, adjusting for differences in speech recognition may not be sufficient to eliminate age differences in comprehending lectures when there is a competing meaningful distractor.

Experiments 1 and 2 adopted the same paradigm and lecture listening materials as in Schneider et al. (2000), except that instead of 12-talker babble, another lecture, spoken by the same person, was used as the masker, with both lectures presented monaurally to the right ear. We used the same voice for both the target and distracting lectures to maximize the amount of informational masking. The greater the similarity in voices, the more difficult it should be to inhibit the processing of irrelevant information. Hence, the use of the same voice for both target and masker should enhance the effects of any inhibitory deficit in older normal-hearing and hearing-impaired adults.

The difference between the two experiments was that in Experiment 1, all participants were tested in identical listening situations (same signal level and same SNR for everyone), whereas in Experiment 2 the signal level and SNR were individually adjusted to equate for individual differences in word recognition, so that every participant was equally capable of recognizing the individual words being spoken. The logic motivating Experiments 1 and 2 is as follows. If age differences in listening comprehension are largely attributable to the greater difficulty that older listeners have in hearing individual words in noise, we might expect large age differences in comprehending lectures when there is an informationally complex competing background (another lecture in the same voice) as in Experiment 1. However, these should be eliminated or reduced in Experiment 2 when we experimentally equate all individuals with respect to spoken word recognition. This would replicate the results of Schneider et al. (2000) where the competing sound source was unintelligible babble. If, on the other hand, group differences persisted after experimentally adjusting for individual and group differences in spoken word recognition in Experiment 2, we would have to conclude that age-related changes in spoken word recognition cannot account for all of the age-related differences in comprehending spoken language when there is competing speech in the background. Experiment 3 directly compared the distracting effects of single-talker competing speech and 12-talker babble after adjusting for individual differences in word recognition. This experiment allowed us to calibrate the relative amount of interferences produced by these two maskers, again after equating for word recognition. We expected that a competing lecture would have a more deleterious effect on comprehension than competing babble when presented at comparable SNRs.

Experiment 1

In Experiment 1, normal-hearing younger and older adults and hearing-impaired older adults listened to target lectures presented with a meaningful competing lecture played in the background.Footnote 1 Both the target and distractor were played monaurally to the right ear over a headphone. Immediately following each lecture, participants answered detail and integrative questions concerning the target lecture. The levels of the target and competing lectures were set to 62 and 69 dB SPL, respectively, for all listeners. Based on Schneider et al.’s (2000) study with babble as a masker, we would expect to find age-related differences in comprehension performance when there is no control for age-related difference in spoken word recognition.

Method

Participants

The participants in Experiment 1 consisted of 18 normal-hearing younger adults (6 males), 18 older adults (5 males) with normal hearing for their age, and 18 older adults (11 males) with mild-to-moderate hearing loss. Older normal-hearing participants were required to have a pure tone threshold of 25 dB HL or lower from 0.25 to 3 kHz, with balanced hearing in the two ears (≤15-dB difference between ears at these frequencies). Older adults with audiometric thresholds in this range are usually considered to have normal hearing for their age (ISO 7029-2000) and are referred to in this paper as normal-hearing older adults. In contrast, none of the participants in the hearing impaired group met the audiometric hearing criteria stated above and had hearing thresholds ≥ 30 dB HL at one or more frequencies between 0.25 and 3 kHz. The ages and years of education of all participants appear in Table 1, along with those of the participants in Experiments 2 and 3. A three-group (Young, Normal-Hearing Old, and Hearing-Impaired Old) by three-experiment (1, 2, and 3) between-subjects ANOVA failed to reveal any effect of Group (F[2,132] < 1), Experiment (F[2, 132] < 1), or Group X Experiment interaction (F[3,132] < 1) on Years of Education. Hence there is no indication that Years of Education differed across Groups or Experiments. However, a two-group (Normal-Hearing Old, and Hearing-Impaired Old) by three-experiment (1, 2, and 3) between-subjects ANOVA on the participants’ age found a significant effect of group (F[1.83] = 25.27, p < 0.001, ηp 2 = 0.233) but no signficant effect of Experiment (F[2,83] < 1) or Experiment X Group interaction (F[1,83] = 2.98, p = 0.088, ηp 2 = 0.033). Hence, the participants in the older hearing-impaired group were significantly older than those in the older normal-hearing group.

The younger adults were students from the University of Toronto Mississauga. They participated in the study to fulfill a course requirement or were paid $10/hour compensation. All the older adults were volunteers from the local community, and each of them was paid $10/hour for participating. Only participants who reported that they were in good health and had no history of serious pathology (e.g., stroke, head injury, neurological disease, seizures) were included in the study, and they were all fluent English speakers.Footnote 2

Auditory measures

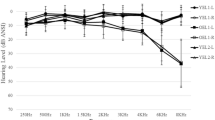

All of the younger and normal hearing older participants in Experiment 1 had pure-tone, air-conduction thresholds ≤25 dB HL between 0.25 and 3 kHz (ANSI S3.6-1996) in both ears, with the interaural difference ≤15 dB between 0.25 and 3 kHz, and the older participants had normal hearing for their age (International Organization for Standardization [ISO] 7029-2000, ISO 2000). In contrast, none of the participant in the hearing impaired groups met the audiometric hearing criteria stated above. All had hearing thresholds ≥30 dB HL in at least one frequency level between 0.25 and 3 kHz, with HLs ≤60 dB for frequencies ≤2 kHz, and ≤70 dB at 3 kHz. None of these individuals wore hearing aids or had a history of hearing disorders or hearing diseases. The audiometric hearing thresholds of the three different groups, averaged across both ears, are presented in Fig. 1.

Cognitive measures

Vocabulary

Participants were given the Mill Hill Vocabulary Scale (Raven, 1965) to assess their vocabulary knowledge. In this 20-item synonym test, participants matched each test item with its closest synonym from a list of six alternatives (maximum score = 20). The average scores on this test are presented in Table 1, along with the scores of the individuals in the other two experiments.

Reading comprehension skill

All participants were administered the Nelson-Denny test of reading comprehension (Brown, Bennett, & Hanna, 1981). Participants had to read 8 short passages, and answer 36 multiple-choice questions on them. They were given 20 minutes to complete the test. Participants were given a reading comprehension score based on the number of questions answered correctly (maximum score = 36). One older hearing-impaired participant in Experiment 2 did not complete this test because this person had to leave early for an appointment. The scores of the different groups of participants on this reading comprehension test are also presented in Table 1.

Working memory span

The Reading Span test (Daneman & Carpenter, 1980) was used to assess participants’ working memory capacity. In this task, participants were presented with progressively longer sets of unrelated sentences on a computer screen. Set size ranged from 2 to 5 sentences, with 5 sets at each size for a total of 70 sentences, and the sentences were presented on the screen one by one. Set sizes were presented in ascending order. The participant’s task was to read each sentence out loud, and memorize the last word of each sentence for immediate recall at the end of the set. The total number of correctly recalled last words was used as the participant’s working memory span (maximum score = 70). One older hearing-impaired participant in Experiment 2 did not complete this test because of difficulty in reading the sentences, and another older normal-hearing participant in Experiment 3 also did not complete the test because she was not feeling well. The average scores of the different groups of participants on this working memory test are presented in Table 1, along with those of Experiments 2 and 3.

To evaluate whether there were any differences among the samples in the three different experiments, separate three-group (Young, Normal-Hearing Old, and Hearing-Impaired Old) by three-experiment (1, 2, and 3) between-subjects ANOVAs were conducted on each of these variables. There were no significant effects of Experiment, nor significant Experiment X Group interactions for Nelson-Denny scores (Experiment: F[2, 131] < 1; Group X Experiment: F[3, 131] = 1.12, p = 0.345, ηp 2 = 0.025); Working Memory Scores (Experiment: F[2, 130] < 1; Group X Experiment: F[3, 130] = 1.92, p = 0.130, ηp 2 = 0.042); or Mill Hill Vocabulary (Experiment: F[2,132] = 1.21, p = 0.303, ηp 2 = 0.018; Group X Experiment: F[3,132] < 1). However, there were significant Group Main Effects for Nelson-Denny scores (F[2, 131] = 17.79, p < 0.001, ηp 2 = 0.214); Working-Memory Scores (F[2, 130] = 16.067, p < 0.001, ηp 2 = 0.198); and Mill-Hill scores (F[2, 132] = 9.05, p < 0.001, ηp 2 = 0.121). Significant differences among the three groups for these three cognitive tests (LSD post hoc tests were used) are indicated in Table 1. Hence, there is no evidence that there were any differences across Experiments with respect to these three cognitive measures nor any evidence of Group by Experiment interactions. Normal-hearing younger participants had significantly higher reading span and working memory scores than did older normal-hearing participants, who, in turn, had significantly higher scores than older, hearing-impaired participants. By way of contrast, both older groups had equivalent vocabulary scores that were higher than those of the younger group.

Listening comprehension task

Materials and apparatus

We used the same six lectures as in Schneider et al. (2000). The lectures were chosen from Efficient Reading (Brown, 1984) and were recorded by a male actor at a sampling rate of 24,414 Hz. The six lecture topics were (a) “Your voice gives you away”; (b) “Of happiness and despair we have no measure”; (c) “Mark Twain's speech making strategy”; (d) “Feeding the mind”; (e) “Ten tips to help you write better”; and (f) “Which career is the right one for you.” The lectures were 8 to 17 minutes in length (mean: 13.2 minutes) and were read at a speaking rate of approximately 120 words/min.

Digital recordings of these lectures were converted to voltages using the Tucker Davis (DD1) digital-to-analog converter. We used programmable attenuators (Tucker Davis PA4) to set the target lecture level and the SNR between target lecture and competing lecture. After passing through the attenuators, target and competing lectures (or babble) were added together and presented to the participants over the right side of a calibrated set of Sennheiser HD265 headphones within a double-walled sound-attenuating chamber whose background noise was <20 dB SPL. Earphones were calibrated using Brüel & Kjær Head and Torso simulator (Type 4218 C) and Portable PULSE system (Type 3560 C).

Procedure

Stimuli were presented monaurally over the right headphone with the competing lecture beginning 5 s after the target lecture. Immediately following each lecture, participants were asked to answer 10 multiple-choice questions (4 alternatives per question) about it. The questions were presented in written form. Five were detail questions concerning a specific item of information that was mentioned explicitly once during the lecture. For example, one of the detail questions from "Which career is the right one for you" was "The job of bookkeeper is classed as (a) a social job; (b) a realistic job; (c) a conventional job; or (d) an investigative job." Five were integrative questions which required the listener to identify or infer the overall gist of the lecture. For example, an integrative question concerning the same lecture was "The selection is given chiefly to help listeners (a) know the satisfactions of certain types of jobs; (b) know the key characteristics of success; (c) choose a career; or (d) know what interest patterns to note."

In this experiment, the three shortest lectures (mean length = 10.8 minutes) were used as the target lectures, whereas the three longest lectures (mean length = 15.6 minutes) were used as the competing lectures. For each participant, two target lectures were selected from the three shortest lectures and the two competing lectures from the three longest lectures. The nine possible combinations of target and competiting lectures were counterbalanced across the 18 participants in each age group. The target lecture was played to the right ear at 62 dB SPL for all participants; the competing lecture was presented to the right ear as well but 5 s after the target lecture had started, and at a level of 69 dB SPL, for a SNR = −7 dB for everyone. Participants were instructed to pay attention to the lecture which started first and to ignore the lecture that started second. They were warned that the two lectures were spoken by the same person.

After answering questions concerning the second target lecture, participants were asked to answer five detailed questions about the competing lecture, even though the participants had been asked to ignore it in the first place. Because the competing lecture was always longer than the target, it was cut off when the target lecture was finished. Therefore, we only counted the detail questions that could possibly be answered based on the cutoff point (the maximum number of detail questions that were scored ranged from 3-5 out of 5, depending on the particular combination of target and competing lectures). Peformance on this surprise test measured the degree of intrusion of material from the to-be-ignored lecture.

Statistical analyses

Because assumptions concerning homogeneity of variance can be violated when the dependent variable is a percentage, we conducted Levene’s test of the homgeneity assumption in all three experiments. The assumption of homogeneity of variance was rejected (α = 0.05) in only 1 of the 19 cases where it could be tested across the three experiments. Because we would expect 1 rejection out of 20 tests by chance alone, we assumed the homogeneity assumption was met in all of the ANOVAs performed when the dependent variable was percent correct.

With respect to the ANOVAs on the integrative and detail questions about the target stories in Experiments 1, 2, and 3, we also tested the null hypothesis that the observed covariance matrices of the dependent variables were equal across groups. This null hypothesis was not rejected in any of the 3 experiments.

Finally, in Experiment 2, where there were 3 masker levels, thereby permitting tests of sphericity for F-tests involving masker level, there was no evidence of a violation of sphericity for the interaction between Masker Level and Question Type (p = 0.706) but a marginally significant (p = 0.052) violation of sphericity for the main effect of masker level. However, applying a Greenhouse-Geisser correction to tests involving Masker Level as a factor had a negligible effect on significance levels. Therefore, we assumed that the dependent variable (percent correct responses) satisfied the assumptions for an analysis of variance within the general linear model, and that no corrections for violations of sphericity were required.

Results and discussion

Figure 2 plots the average number of correctly answered detail (left) and integrative (right) questions for normal-hearing younger, normal-hearing older, and hearing-impaired older adults. As Fig. 2 shows, detail questions were answered correctly more often than integrative questions. Also, normal-hearing younger adults answered more detail and integrative questions correctly than did normal-hearing older adults and hearing-impaired older adults. In turn, normal-hearing older adults answered more detail and integrative questions than did hearing-impaired older adults. Thus, it appears that when a meaningful distractor is present, listening comprehension in older adults was poorer than in younger adults, and particularly so if older adults had poor hearing.

Average percentage of detail and integrative questions answered correctly by younger adults, older adults with normal hearing, and hearing-impaired older adults in the presence of a competing lecture in Experiment 1. Listening conditions were identical for all three groups of participants. Standard error bars are shown. The dotted line indicates chance level performance

These results were confirmed by a mixed-design ANOVA (3 Groups × 2 Question Types), with Group as the between-subjects factor, and Question Type as the within-subject factor. The ANOVA showed main effects of Group (young NH > old NH > old HI, respectively), F (2, 51) = 20.37, p < 0.001, ηp 2 = 0.444, and of Question Type (detail > integrative), F (1, 51) = 54.25, p < 0.001, ηp 2 = 0.515, but no significant interaction, F (2, 51) = 2.82, p = 0.069, ηp 2 = 0.099. Hence, the extent of the difference in performance on detail and integrative questions was essentially the same for young normal-hearing, old normal-hearing, and old hearing-impaired individuals. Post-hoc LSD tests indicated that the young normal-hearing participants answered more questions correctly than either old, normal-hearing (p = 0.017) or old hearing-impaired (p < 0.001) participants, and the old normal-hearing participants answered more questions correctly than did old hearing-impaired participants (p < 0.001).

To check whether performance was limited by a floor effect, we compared the performance on detail and integrative questions for each group to chance level (25 %). The t-tests indicated that each groups’ performance for both question types was significantly above chance (all ps < 0.02, one-tailed) except for the hearing-impaired older group when answering the integrative questions (t [17] = −0.176, p = 0.431, one-tailed). Hence, performance on the integrative questions may have been limited by a floor effect.

It should be noted that in Experiment 1 there are both bottom-up, acoustic-level cues and top-down, knowledge-driven cues that are available to support stream segregation. At the acoustic level, the target lecture is presented at a level that is 7 dB lower than that of the competing lecture. This level difference is a bottom-up acoustic cue that can be used to focus attention on the target lecture. Because the target lecture starts 5 s before the competing lecture, the listener is given advanced information as to the nature of the target topic which can be used to maintain attention to the target stream. Because the three groups differ in both age and hearing status, it is difficult to pinpoint the contribution of age-related deficiencies in hearing versus potential age-related differences in the ability to use top-down cues to maintain attention on, and efficient processing of, the target lecture.

The poorer performance of the hearing-impaired older adults compared to the normal-hearing older adults is most likely due to their hearing impairment. However, because the hearing-impaired older adults were significantly older, and had lower vocabulary and reading comprehension scores than their normal-hearing counterparts, it is possible that some portion of the poorer listening comprehension in the hearing-impaired group could be due to their poor cognitive performance on these two measures, which may reflect the age difference between the two older groups. The poorer performance of older adults compared to younger adults could reflect poorer hearing in the older group or age-related declines in the cognitive functions supporting spoken language comprehension, or both of these factors. The relative contributions of hearing loss versus cognitive decline will be addressed in Experiment 2.

To investigate whether there were group differences in susceptibility to intrusions from the competing lecture, we examined the degree to which participants were able to answer detail questions about the competing lecture they heard last. The percentage of questions correctly answered were 48 %, 30 %, and 41 % for normal-hearing younger, normal-hearing older, and hearing-impaired older participants, respectively. A between-subjects ANOVA performed on these three groups failed to reveal any significant differences among them with respect to the percentage of questions about the competing lecture that were correctly answered (F [2, 51] = 2.09, p = 0.135, ηp 2 = 0.076). Because a one-tailed t-test indicated that the percentage of questions correctly answered concerning the competing lectures was significantly above chance level for the participants in this experiment (t [53] = 3.962, p < 0.001), we concluded that at least some information from the competing lecture was being processed and stored in memory and that the amount of information recalled did not differ significantly with the age or hearing status of the participants.

Experiment 2

The results of Experiment 1 suggested that older adults, especially those with poor hearing, were at a disadvantage relative to younger adults at understanding lectures presented in meaningful competing speech when all individuals were tested under comparable listening conditions. The next question was whether the age difference would disappear if we individually adjusted the target and masker levels, so that each participant was equally likely to recognize the individual words being spoken. Adjusting the listening situation to ensure equivalent levels of lexical access when individual words were masked by a babble of voices should compensate, at least in part, for age-related hearing declines that may be inhibiting lexical access by interfering with phonetic and phonemic encoding of sounds. If these age-related declines in hearing are primarily responsible for the poorer performance of the two older groups in Experiment 1, then age-related differences in spoken-language comprehension should be substantially reduced or eliminated in Experiment 2.

Experiment 2 was similar to Experiment 1 with two differences. First, each participant listened to three lectures instead of two. One of the lectures was presented in quiet, another was presented in the presence of a low level of competing speech, and the third was presented in the presence of a high level of competing speech. Second, in Experiment 2 the listening situation was adjusted to compensate for individual differences in the listener’s ability to recognize words in multi-talker babble (Schneider et al., 2000) by presenting the target lecture at a level that was 45 dB above each individual’s babble threshold, and adjusting the level of the competing lecture to compensate for an individual’s ability to recognize words masked by a babble of voices. The predictions were as follows. If the ability to recognize the individual words being spoken was the primary reason for the poorer performance of older adults in Experiment 1, then we would expect comparable performance from all three groups when the target lecture was masked by a competing lecture. However, if the older adults’ poorer performance in Experiment 1 was due primarily to an inhibitory deficit, or any other age-related declines in the linguistic and cognitive functions supporting spoken-language comprehension when the target lecture was masked by a competing lecture, then adjusting the listening situation to ensure equal levels of word recognition should not substantially affect age differences in spoken language comprehension. When the target lecture is presented in quiet at the same sensation level to younger and normal-hearing older adults, we would expect the recognition of the individual words being spoken to be comparable and near perfect for both groups. Hence, we would not expect performance differences between the younger and older normal-hearing groups. However, because word recognition in quiet is affected by hearing loss even when the target speech material is adjusted for babble thresholds (Pichora-Fuller, Schneider, & Daneman, 1995), we might expect to find the older hearing-impaired group to comprehend less than the younger and older normal-hearing groups. The finding that equating the three groups of listeners with respect to word recognition led to minimal differences in spoken language comprehension among these three groups strongly suggests that the age-related differences in comprehension observed in Experiment 1 are primarily a result of the age-related differences in hearing.

Method

Participants

The participants in Experiment 2 consisted of 18 normal-hearing younger adults (5 males), 18 normal hearing older adults (5 males), and 18 older adults (5 males) with mild-to-moderate hearing loss. Their ages and years of education appear in Table 1. They were recruited from the same pool of participants as the volunteers in Experiment 1, and were subject to the same inclusion criteria as the volunteers in Experiment 1. Their audiometric thresholds are displayed in Fig. 1.

Additional auditory measures

Babble threshold test

In Experiments 2, an adaptive two-interval forced-choice procedure was used to determine detection thresholds for the 12-talker babble stimuli. In this procedure, a 1.5-s babble segment was played in one of two randomly chosen intervals. The two intervals began 1.5 s after the listener pressed the start button, and were separated by a 1.5-s silent period. A green and a red light on the response box were matched to the two intervals, and the listener’s task was to identify the interval containing the babble segment by pressing the corresponding green or red button. Immediate feedback was provided. A three-down, one-up adaptive staircase procedure (Levitt, 1971) was used to determine the babble threshold for each person’s right ear. Table 1 presents the average right-ear babble thresholds for each of the three groups of participants in both this experiment and in Experiment 3. A three-group (Young, Normal-Hearing Old, and Hearing-Impaired Old) by two-experiment (2, and 3) between-subjects ANOVA failed to reveal any Group X Experiment interaction (F[1,81] < 1). The effect of Group was highly significant (F[2,81] = 66.12, p < 0.001, ηp 2 = 0.621). The effect of Experiment did reach significance in this instance (F[1,81] = 4.74, p = 0.032, ηp 2 = 0.055). Hence there is some evidence that the participants in Experiment 3 had lower right-ear Babble thresholds than those in Experiment 2. Post-hoc LSD tests indicated that young, normal-hearing participants had significantly lower babble thresholds than older-normal hearing participants, who, in turn, had significantly lower babble thresholds than older, hearing-impaired participants.

R-SPIN test

In the revised Speech Perception in Noise test (R-SPIN, Bilger, Nuetzel, Rabinowitz, & Rzeczkowski, 1984), the listener is asked to repeat the final word in a sentence presented in a background of babble and indicate whether the final word was predictable from the context. Sentences are presented in lists of 50, with 25 of them containing final words that are predictable from the context (e.g., “The boat sailed along the coast”) and 25 containing final words that have low predictability (e.g., “Peter knows about the raft”), but only the responses for the low-context sentences were used for determining the R-SPIN thresholds. Sentences and babble were presented to the right ear. The average SPL of the sentences was set 45 dB higher than the individual listener’s right-ear babble threshold, and at least two lists were employed to bracket the 50 % point on the psychometric function relating percent correct identification to SNR for low-context, sentence-final words. The SNR corresponding to the 50 % point (the low-context R-SPIN threshold) was then determined through linear interpolation. The R-SPIN thresholds also are presented in Table 1, along with those of Experiment 3. A three-group (Young, Normal-Hearing Old, and Hearing-Impaired Old) by two-experiment (2 and 3) between-subjects ANOVA failed to reveal any effect of Experiment (F[2, 132] < 1), or Group X Experiment interaction (F[3,132] < 1). However, the Group main effect was highly significant (F[2, 81] = 44.65, p < 0.001, ηp 2 = 0.524). Post-hoc tests indicate that R-SPIN thresholds are equivalent for both normal-hearing groups who, in turn, have significantly lower R-SPIN thresholds than the older, hearing-impaired group.

Cognitive measures

The same three cognitive measures (vocabulary, reading comprehension, and working memory) were collected on participants in this study and appear in Table 1.

Listening comprehension task

Materials and apparatus

The lectures presented in this experiment were the same ones used in Experiment 1. In this experiment, one target lecture was presented in quiet, and the other two were presented in a low or high level of a competing lecture. In all conditions, the target lecture was presented to the right ear at 45 dB above the individual’s right-ear babble threshold.Footnote 3 The competing lecture was presented to the right ear as well, but 5 s after the target lecture had started. In the low level competing lecture condition, the SNR was set equal to the value of the listener's low-context R-SPIN threshold minus 6 dB, and in the high level competing lecture condition, the SNR was set equal to the value of the listener’s low-context R-SPIN threshold minus 12 dB.Footnote 4 For example, if a participant had a babble threshold of 21 dB SPL and a R-SPIN threshold of 3 dB, the target lecture was presented at a level of 66 dB SPL (45 + 21), and the competing lecture in the low-level competing lecture condition to 69 dB SPL to produce an SNR of −3 dB (3-6). In the high competing lecture condition, the competing lecture would be set to 75 dB SPL for this individual, resulting in an SNR of −9 dB. Another participant with better hearing, whose babble threshold was 16 dB SPL, and whose R-SPIN threshold was −2 dB, would have the target presented at 61 dB, and the masker presented at 69 in the low-level competing lecture condition for an SNR of −8 dB. The high-level competing lecture condition for this individual with better hearing would be set to 75 dB SPL to produce an SNR of −14 dB. Note that the individual with the poorer hearing has the target level presented at a higher level than the person with good hearing and at a more favorable SNR. The higher target level for the individual with poorer hearing compensates for that individual’s higher threshold for recognizing the individual words being spoken. The more favorable SNR for the individual with poorer hearing equates the two individuals with respect to their ability to recognize the individual words in the target lecture.

As in Experiment 1, participants were instructed to pay attention to the lecture which started first and to ignore the second lecture. If the level of the target lecture was more than 4 dB lower than that of the competing lecture, the participants also were told to attend to the softer lecture, with the opposite instructions being given if the target lecture was 4 dB higher than the competing lecture.Footnote 5 A counterbalancing procedure was used to ensure that an equal number of participants heard each of the three target lectures in each of the three conditions: quiet, low level competing lecture, and high level competing lecture. Order of presentation of the three conditions was counterbalanced across participants.

Procedure

Participants were given the same instructions as in Experiment 1, using the same equipment and sound-attenuating chamber.

As in Experiment 1, after answering questions concerning the last target lecture heard by a participant when it was being masked by a competing target lecture, the participant was asked to answer five detailed questions about the competing lecture, even though the participant had been asked to ignore it in the first place.

Power calculations

The results from Experiment 1 indicated that when the three groups were not equated with respect to word recognition, there were statistically significant and substantial differences in performance across the three groups. Our rationale for conducting Experiment 2 was to investigate the extent to which these groups differences were reduced or eliminated when we did equate all individuals, irrespective of the group to which they belonged, for word recognition. In an F-test, the power to reject the null hypothesis of no differences among groups when the research hypothesis is true is determined by the value of the noncentrality parameter of a noncentral F distribution (higher values yield more power). We determined (see Appendix) the expected power of an F test for group differences, assuming a 75 %, 80 %, or 90 % reduction in the estimate of the noncentrality parameter found in Experiment 1. The power to detect a group effect given a 75 % reduction was 0.8. This means that if the noncentrality parameter in Experiment 2 were to be 25 % of its corresponding value in Experiment 1, the probability of rejecting the null hypothesis would be 0.80. Hence, we would have an 80 % chance of rejecting the null hypothesis that there were no group differences if equating for word recognition reduced the noncentrality parameter to 25 % of its estimated value when we did not equate for word recognition. The powers associated with 80 and 90 % reductions were 0.7 and 0.4, respectively. See the Appendix for further details.

Results and discussion

Figure 3 plots the average number of correctly answered detail (left) and integrative (right) questions as a function of the level of the competing lecture (quiet, low, high) for the normal hearing younger, normal hearing older, and hearing-impaired older participants. As in Experiment 1, this figure indicates that the percentage of correctly answered questions was greater for detail than for integrative questions. It also suggests that for detail, but not for integrative questions, more questions were answered correctly when the target lecture was presented without a competing lecture. There was an indication that in a quiet background the hearing-impaired group answered fewer detail questions correctly than did the other two normal-hearing groups. Finally, there does not appear to be any differences in performance across listener groups (young NH, old NH, old HI) in the presence of a competing lecture. A mixed-design ANOVA (2 Question Types × 3 Competitor Levels × 3 Groups, with Question Type and Competitor Level as the within-subject factors, and Group as the between-subjects factor) did not find any evidence that performance was affected by Group (F [2, 51] = 2.86, p = 0.066, ηp 2 = 0.101), and there were no significant interactions between Group and the other factors (Competitor Level X Group, F[4,102] = 1.03, p = 0.67, ηp 2 = 0.023; Question Type X Group, F[2, 51] < 1, ηp 2 = 0.020; Competitor Level X Question Type X Group, F[4,102] < 1, ηp 2 = 0.037). The lack of any significant effect of Group suggests that the higher-level post-lexical processes involved in spoken-language comprehension are capable of sustaining the same level of performance in each of the three groups when adjustments are made to equate all listeners with respect to word recognition, and that the group differences in comprehension found in Experiment 1 were primarily due to age-related changes in hearing.

Average percentage of detail and integrative questions answered correctly by younger adults, older adults with normal hearing, and hearing-impaired older adults in quiet and in two levels of a competing lecture in Experiment 2. Both the signal level and the levels of the background lectures (when present) were adjusted for individual differences in word recognition (see text). Standard error bars are shown. The dotted line indicates chance level performance

The percentage of detail questions answered correctly was higher than the percentage of integrative question answered correctly, (F [1, 51] = 99.90, p < 0.001, ηp 2 = 0.662). There was also a significant main effect of Competitor Level (F [2, 102] = 7.330, p = 0.001, ηp 2 = 0.126), which was qualified by a significant interaction between Question Type and Competitor Level (F [2, 102] = 22.876, p < 0.001, ηp 2 = 0.310).

Because there was a significant interaction between Question type and Competitor Level, we carried out separate mixed-design ANOVAs for detail and integrative questions, with Competitor Level as a within-subject variable, and Group as a between-subjects variable. For detail questions there was a significant main effect of Competitor Level with the percentage of correctly answered detail questions being greater in the absence of a competing lecture than in the presence of one (F [2, 102] = 22.336, p < 0.001, ηp 2 = 0.305) but no Group effect (F [2, 51] < 1, ηp 2 = 0.027), and no interaction between Competitor Level and Group (F [4, 102] = 1.16, p = 0.33, ηp 2 = 0.043). Follow-up pairwise comparisons with respect to Competitor Level indicated that more detail questions were correctly answered in the quiet condition than in the presence of either a low-level or high-level competitor (F [1,53] = 39.77, p < 0.001, and F [1,53] = 27.85, p < 0.001, respectively), with no significant difference between the low- and high-level conditions (F [1,53] < 1).

For integrative questions, neither the main effect of Competitor Level, nor Group, nor the interaction between Competitor Level and Group was significant (F [2,102] < 1, ηp 2 = 0.011, F [2, 51] = 2.90, p = 0.064, ηp 2 = 0.102, and F [4,102] < 1, ηp 2 = 0.007,for the Competitor Level main effect, Group main effect, and Competitor Level × Group interaction effect, respectively). Hence, there is no evidence to support the hypothesis that the ability to integrate information gleaned from the lecture is affected by the background level of the lecture or that it differs among the group of participants. It is possible that the lack of a group effect for the integrative questions may be due to the fact that declines in performance in the older groups were limited by a floor effect since these groups were performing close to chance level. Another possible reason for this result is that correct recognition of detail information is not necessarily required in order to get the overall ‘gist’ of the passage. Hence, integrative questions may simply be less susceptible to the presence of competing sound sources.

To investigate whether there were group differences in susceptibility to intrusions from the competing lecture, an ANOVA was performed on the data for the surprise test with Group as a between-subjects factor. The percentage of correctly answered detail questions from the competing lecture (46.55 %, 32.65 %, and 43.75 % for young normal-hearing, old normal-hearing, and old hearing-impaired participants, respectively) did not differ significantly from one another (F [2, 33] < 1, ηp 2 = 0.042), which indicated that all groups of participants were equally likely to remember the same number of details for the distracting lectures. Moreover, a one-tailed t-test indicated that the participants in this Experiment were processing and storing information from the competing lecture above chance levels (t [35] = 3.211, p = 0.003). Hence the evidence supports the hypothesis that the participants in Experiment 2 were processing and storing at least some information from the competing lecture, and that the amount of information recalled from the competing lecture did not differ with respect to age and hearing status.

Experiment 3

Experiment 3 compared two types of maskers—12-talker babble and single-talker competing speech—in a within-subject design, and examined the degree to which age differences between younger and older normal-hearing adults might be affected by the informational complexity of the masker. More specifically, the lectures in Experiments 1 and 2 were used as the target speech, and the masker was either 12-talker babble (Schneider et al., 2000) or similar lectures spoken by the same person.

Note that a babble of voices is acoustically more dissimilar to the target lecture than a competing lecture. Note also that a competing lecture is more likely to initiate competing activity in the syntactic, semantic, and linguistic processes supporting spoken-language comprehension. Both of these factors make the competing lecture more of an informational masker than a babble of voices.Footnote 6 However, it is not clear as to which masker (babble or a competing lecture) provides a greater degree of energetic masking.

Multi-talker babble is a broadband distractor. Previous research shows that a larger number of interfering talkers (e.g., 8 compared to 2 talkers) reduces target speech intelligibility due to the gradually increased spectral-temporal saturation (Boulenger, Hoen, Ferragne, Pellegrino, & Meunier, 2010; Brungart, Simpson, Ericson, & Scott, 2001; Hoen et al., 2007). Single-talker competing speech, on the other hand, may only mask limited frequencies of target speech at one given time, and the pauses between clauses or sentences could provide listeners with the opportunity to listen in the gaps (Peters, Moore, & Baer, 1998). Hence, even a same voice masker (which would have the same long-term spectrum as the target voice) could produce a lesser amount of energetic masking because the peaks and troughs in the amplitude of the masking voice would not necessarily occur at the same point of time as those of the target voice. For example, a spoken word in the target stream could occur during a pause in the masker voice, producing very little energetic masking at that point of time. Therefore, from an energetic masking point of view, multi-talker babble might produce more masking than single-talker competing speech.

However, single-talker competing speech may cause more informational masking than babble mainly for two reasons. Firstly, the competing speech is acoustically more similar to the target speech than is babble due to the prosodic and fine structure similarities, not to mention that the target and distracting lectures share the same voice. Considering that acoustic similarity is one of the most important determining factors for ease of stream segregation and level of informational masking (Agus, Akeroyd, Gatehouse, et al., 2009; Drullman & Bronkhorst, 2004; Durlach et al., 2003; Ezzatian, Li, Pichora-Fuller, & Schneider, 2012), we would expect the competing speech to produce more informational masking than 12-talker babble. Second, the semantic content in the competing speech is capable of activating the same semantic and linguistic process as the target speech; thus, it may interfere with comprehension of the target speech at more central levels (Boulenger et al., 2010; Rossi-Katz & Arehart, 2009). Taken together, it is reasonable to predict that single-talker speech may be more distracting than 12-talker babble.

The purpose of Experiment 3 was to examine the effect of competitor type, competitor level, and age on informational masking. A similar methodology to that of Experiment 2 was adopted here. Normal hearing younger listeners and normal hearing older listeners were presented with four lectures in a 2 (Competitor Type: babble vs. competing lecture) × 2 (Competitor Level: low vs. high) within-subject design. As in Experiment 2, the level of the target lecture was set to 45 dB above each listener’s babble threshold, with the SNR in the low Competitor Level condition set at each individual’s R-SPIN threshold −6 dB, whereas the SNR in the high Competitor Level condition was set at each individual’s R-SPIN threshold −12 dB.

Method

Participants

The participants in Experiment 3 consisted of 16 normal-hearing younger adults (5 males) and 16 normal hearing older adults (5 males). Their ages and years of education appear in Table 1. They were recruited from the same pool of participants as the volunteers in Experiments 1 and 2 and were subject to the same inclusion criteria as the volunteers in those experiments. Their audiometric thresholds are displayed in Fig. 1.

Auditory measures

Both babble threshold and low-context R-SPIN thresholds of each of the participants were determined following the procedures described in the “Method” Section of Experiment 2 and appear in Table 1.

Cognitive measures

The same three cognitive measures (vocabulary, reading comprehension, and working memory) were collected on participants in this study and appear in Table 1.

Materials and apparatus

In Experiment 3, the four shorter lectures were used as targets, and the two longer ones were used as competitors. Each participant listened to four lectures monaurally over the right headphone: two were presented with babble as a masker, and the other two were presented with a competing lecture as the masker. Also, two of the lectures were presented in the low distractor level condition, and the other two in the high distractor level condition, so the experiment was a 2 (distractor type: babble vs. lecture) × 2 (distractor level: high vs. low) within-subject design. The target lecture was presented to the right ear 45 dB above the level of each individual’s right-ear babble threshold. The distractor (babble or competing lecture) was presented to the right ear as well, but it started 5 s after the target lecture had started. In the low distractor level condition the SNR was set to −6 dB lower than the listener's low-context SPIN threshold, and in the high distractor level condition it was set −12 dB lower. Order of presentation of the four conditions was counterbalanced across participants. The four target lectures and two masking lectures appeared equally often in each of the four conditions.

Procedure

Participants were given the same instructions as in Experiment 1, using the same equipment and sound-attenuating chamber.

After answering questions concerning the last target lecture heard by a participant when it was being masked by a competing target lecture, the participant was asked to answer five detailed questions about the competing lecture, even though the participant had been asked to ignore it in the first place. In addition, in Experiment 3 only, after each lecture, participants also rated how difficult it was to understand the lecture on a scale from 1 to 7 (with 7 being the most difficult).

Results and discussion

Figure 4 plots the percentage of correctly answered detail and integrative questions as a function of Age (younger vs. older), Competitor Type (lecture vs. babble) and the level at which the competitor was presented (low vs. high). As in Experiments 1 and 2, more detail than integrative questions were answered correctly in all conditions. However, the difference in performance between detail and integrative questions appears to be larger when the masker was babble than when it was a competing lecture. Consistent with Experiment 2, the level of the competing lecture does not appear to affect performance for detail questions. However, performance on detail questions appears to decrease with an increase in masker level when the masker is babble. Finally, for integrative questions, the level of the masker does not appear to have any systematic effect on performance.

Average percentage of detail and integrative questions answered correctly by younger and older adults in Experiment 3 as a function of competitor type (babble vs. lecture) and the level at which the competitor was presented (high vs. low). Both the signal level and the levels of the background lectures (when present) were adjusted for individual differences in word recognition (see text). Standard error bars are shown. The dotted line indicates chance level performance

This pattern of results was confirmed by a 2 (Question Type: detail vs. integrative) × 2 (Competitor Type: babble vs. lecture) × 2 (Age: younger vs. older) × 2 (Competitor Level: low vs. high) mixed-design ANOVA with Question Type, Competitor Type, and Competitor Level as within-subject factors, and age as a between-subjects factor. Neither the main effect of Age (F[1,30] =1.03, p = 0.318, ηp 2 = 0.033) nor any of the interactions of Age with the other factors were found to be significant (ps> 0.15, for all interactions between Age and the other factors, ηp 2 < 0.063 for all interactions between Age and the other factors). The main effect of Competitor Level was not significant (F [1,30] = 1.02, p = 0.321, ηp 2 = 0.033), whereas the main effect of Competitor Type was (F [1,30] = 24.84, p < 0.001, ηp 2 = 0.453), but these two main effects were qualified by an interaction between Competitor Type and Competitor Level (F [1,30] = 4.26, p = 0.048, ηp 2 = 0.124). The main effect of Question Type was significant (F [1,30] = 70.55, p < 0.001, ηp 2 = 0.702) as was the interaction between Competitor Type and Question Type (F [1,30] = 13.21, p = 0.001, ηp 2 = 0.306). Finally, the three-way interaction between Competitor Type, Competitor Level, and Question Type was marginally significant (F [1,30] = 4.10, p = 0.052, ηp 2 = 0.120). No other significant interactions were observed.

Because there was no main effect of age, nor any interactions involving age, we collapsed over age. Figure 5 plots the percentage of correctly answered detail and integrative questions for the two levels of babble and competing lecture. This figure shows that when the distractor was babble, the percentage of correctly answered detail questions decreased with an increase in the level of the babble distractor. The figure also shows that fewer detail questions were answered correctly when the distractor was a competing lecture than when the distractor was babble. Moreover, the level of the competing lecture did not appear to affect performance. A repeated measures ANOVA on detail questions confirmed that there was a significant main effect of Competitor Type (F [1, 31] = 36.62, p < 0.001, ηp 2 = 0.542), and a significant interaction between Competitor Type and Competitor level (F [1, 31] = 6.70, p = 0.015, ηp 2 = 0.178). The main effect of Competitor Level was not significant (F [1,31] = 2.131, p = 0.155, ηp 2 = 0.064). Hence, for detail questions, the level of the distractor affects performance only when the competitor is babble.

Percentage of detail and integrative questions correctly answered (collapsed across age groups) for two different levels of either a babble or lecture competitor when target and competitor levels were adjusted to compensate for individual differences in word recognition in Experiment 3. Standard error bars are shown. The dotted line indicates chance level performance

The most likely reason for the interaction between level and masker type is that the particular SNRs employed in this experiment affected the similarity, and hence the informational interference between the target and competing lectures. In the low distractor level condition, the SNR was set at 6 dB lower than the individual’s SPIN threshold, and in the high distractor level condition it was set at 12 dB lower. Because the average SPIN thresholds for younger and older adults in Experiment 3 were 3.69 dB and 4.19 dB respectively, in the low distractor level condition the average SNR at which the target lecture was presented was approximately −6 + 4 = −2 dB, whereas in the high distractor level condition it was approximately −12 + 4 = −8 dB. However, performance did not differ across these two SNRS, producing a plateau in the function relating performance to SNR.

Freyman et al. (1999) also reported a similar plateau in the function relating performance to SNR for SNRs between −10 and −4 when a sentence target spoken by a female was masked by another sentence spoken by a different female whenever the target and masker were perceived to originate from the same source, as was the case in our experiments. However, when the sentence target was masked by speech spectrum noise, no such plateau was observed. In that case, decreasing the SNR always resulted in a decrease in performance. Such an effect also was noted by Egan, Carterette, and Thwing (1954).

What could be the reason for such a plateau when speech is masked by speech but not when it is masked either by steady-state noise or babble? From an energetic masking point of view, we would expect comprehension to suffer when the SNR is decreased, as was the case when the masker was babble. However, for single-talker competing speech, the increase in energetic masking that occurs when the SNR is reduced from −2 dB to −8 dB may have been counterbalanced by a decrease in the amount of informational masking associated with a change from −2 dB to −8 dB. From an informational masking point of view, the acoustic similarity between the target and the competing lectures would be greater for an SNR of −2 than it would be at an SNR of −8 dB. Hence, an increase in dissimilarity between the target and masker may have counterbalanced a decrease in SNR.

No such interaction between Competitor Type and Competitor Level was observed for integrative questions. When only integrative questions were considered, a repeated measures ANOVA did not reveal main effects of Competitor Level (F [1,31] < 1, ηp 2 = 0.001), Competitor Type (F [1,31] = 2.49, p = 0.125, ηp 2 = 0.074), nor any interaction between the two (F [1,31] < 1, ηp 2 = 0.000). Hence, in Experiment 3, neither Competitor Type nor Competitor Level significantly affected performance on integrative questions. However, the lack of effects for integrative questions may be due, in part, to the fact that performance for integrative questions was quite close to chance levels.

In Experiment 3, participants also rated each target lecture with respect to how difficult it was to understand. Figure 6 shows how subjective ratings of difficulty were affected by competitor type (babble vs. competing lecture), competitor level (low vs. high), and age (young normal hearing versus old normal hearing). Figure 6 suggests that it is more difficult to understand a lecture in the presence of a competing lecture than in the presence of babble. There also is some indication that perceived difficulty increases with competitor level for babble maskers, with the opposite appearing to occur when the competitor is a lecture. A two Age × 2 Competitor Type × 2 Competitor Level ANOVA revealed a significant main effect of Competitor Type (F [1, 30] = 182.45, p < 0.001, ηp 2 = 0.859) and a significant interaction between Competitor Type and Competitor Level (F [1, 30] = 6.10, p = 0.019, ηp 2 = 0.169). The main effect of Age, as well as any interaction between Age and the other factors failed to reach significance (all ηp 2 < 0.030). Hence, the age of the listeners did not affect how difficult it was to understand the target lectures when the SNRs were adjusted to compensate for age-related declines in speech recognition.

Perceived difficulty in listening to the target lecture in the presence of either a lecture or babble competitor for normal-hearing younger and older participants at two different levels of the competitor when target and competitor levels were adjusted to compensate for individual differences in word recognition in Experiment 3. Standard error bars are shown

To investigate whether there was an age difference in susceptibility to intrusions from the competing lecture, we examined performance on the surprise test. Younger and older adults had exactly the same percentage of correct responses for distractor details (48.33 %), which indicated that both age groups were equally likely to remember the details of the distracting lectures. Moreover, a one-tailed t-test indicated that the participants in this experiment were processing and storing information from the competing lecture above chance levels (t [31] = 5.042, p < 0.001). Hence, the evidence supports the hypothesis that the participants in Experiment 3 were processing and storing at least some information from the competing lecture, and that the amount of information recalled from the competing lecture did not differ with respect to age.

The results of Experiment 3 demonstrated the following three points: (1) single-talker competing speech was more distracting than 12-talker babble; (2) older and younger adults were equally distracted by maskers in a situation in which the listening situation was individually adjusted for age differences in word recognition, and (3) increasing the distractor level negatively affected speech comprehension when the masker was babble but had no effect when the masker was a competing lecture in the same voice.

Contribution of cognitive abilities to speech comprehension in experiments 1-3

Because the comprehension, interpretation, and recall of information presented in these target lectures is likely to place demands on a number of linguistic and cognitive processes, we also obtained measures of vocabulary knowledge, reading comprehension, and working memory in all but three of the participants in these experiments. To evaluate the relative contributions of these three measures, for each individual, we averaged the percentage of both detail and integrative questions answered across all conditions in which the target lecture was presented in the presence of a competing lecture. We then centered these averaged scores within each combination of experiment (Experiments 1, 2, and 3) and group (young normal hearing, old normal hearing, old hearing impaired) to remove any Group × Experiment differences in performance. We then collapsed over old normal-hearing and old hearing-impaired individuals to form two groups (old and young) and centered each of the three measures within each of the two groups to control for level differences between the two groups. The contribution of these three measures to speech comprehension when a competing lecture was present was investigated in the following model

where y is the centered speech comprehension score, MH is the centered Mill Hill score, ND is the centered Nelson Denny score, and WM is the centered working memory score. This model was able to account for 18.1 % of the variance in the centered speech comprehension scores. To see whether there were age differences with respect to the contribution of these three variables, we tested the joint null hypothesis that a Young = a Old ; b Young = b Old ; and c Young = c Old . This null hypothesis could not be rejected (F [3,131] = 2.15, p = 0.097). We collapsed the data over Age to arrive at a model in which

To determine which variables contributed significantly to speech comprehension, we tested the null hypothesis that a = c = 0. This null hypothesis could not be rejected (F [2, 134] < 1). These results suggest that the contributions of these three factors to performance does not differ with Age and that the only measure that is significantly (but weakly) correlated with performance is reading comprehension (r = 0.37). The fact that reading comprehension is the best predictor of speech comprehension is not surprising given that the reading comprehension test taps many of the same comprehension skills that are essential for understanding the spoken lectures and answering the comprehension questions.

General discussion

In three experiments, we examined the manner and extent to which a meaningful and acoustically similar lecture might interfere with comprehension of a target lecture and also evaluated whether or not there were any age differences with respect to the degree of interference produced by the competing lecture. The results showed that older adults did experience more difficulties than younger adults in speech comprehension under identical listening situations (Experiment 1). However, after adjusting the listening situation to compensate for each listener’s ability to recognize individual words (Experiments 2), age differences in listening comprehension were no longer evident, even for an older hearing-impaired group.

It is especially interesting that the participants in the older hearing-impaired group performed as well as the participants in the other two groups when all participants were equated for spoken-word recognition. The hearing-impaired older adults not only had poorer hearing than the normal-hearing older adults (greater degree of hearing loss, higher babble, and R-SPIN thresholds, they also had significantly lower working memory and reading comprehension scores than normal-hearing older adults (Fig. 1; Table 1). Although equating individuals in both groups for recognition of individual words might be expected to compensate for the poorer hearing of the hearing-impaired older adults, we would not necessarily expect it to compensate for their poorer working memory and reading comprehension abilities. Yet when all three groups were equated for the recognition of the individual words being spoken, group differences in the ability to answer questions concerning the target lectures disappeared. This suggests that the primary factor that is responsible for group differences with respect to comprehending and remembering the information contained in these lectures is the ability of individuals to recognized the individual words when the lectures are masked either by a competing lecture in the same voice or by multi-talker babble. Hence, adjusting the level of the target lecture, and the SNR at which it is presented, is sufficient to eliminate comprehension differences between younger normal-hearing and older hearing-impaired individuals when listening to a lecture masked by another lecture despite: 1) 16-dB difference in babble thresholds; 2) 7.5-dB difference in R-SPIN thresholds; 3) 55-year-age difference, 4) 17 % decline in working memory capacity, and 5) 32 % decline in reading comprehension. With respect to this issue, it is important to note that the older adults’ vocabulary knowledge exceeds that of the younger adults by 12 %. Hence, once both younger and older adults are equated for word recognition, the superior vocabulary knowledge may allow them to offset age-related declines in working memory and reading comprehension (Schneider, Avivi-Reich, & Daneman, 2016).

Experiment 3 directly compared the disruptiveness of babble versus that of a competing lecture in the same voice, again when the level of the distractor was adjusted for individual differences in babble thresholds and in speech recognition thresholds. The results of Experiment 3 showed: 1) that normal-hearing older adults performed as well as their younger counterparts; 2) that a competing lecture was more disruptive than babble for all listeners; and 3) that higher levels of the babble distractor were more disruptive than lower levels, but this was not true for competing lectures.

Source of age differences in spoken language comprehension