Abstract

Tools afford specialized actions that are tied closely to object identity. Although there is mounting evidence that functional objects, such as tools, capture visuospatial attention relative to non-tool competitors, this leaves open the question of which part of a tool drives attentional capture. We used a modified version of the Posner cueing task to determine whether attention is oriented towards the head versus the handle of realistic images of common elongated tools. We compared cueing effects for tools with control stimuli that consisted of images of fruit and vegetables of comparable elongation to the tools. Critically, our displays controlled for lower-level influences on attention that can arise from global shape asymmetries in the image cues. Observers were faster to detect low-contrast targets positioned near the head end versus the handle of tools. As expected, no lateralized performance bias was observed for the control stimuli. In a follow-up experiment, we confirmed that the bias towards tool heads was not due to inhibition of return as a result of early attentional orienting towards tool handles. Finally, we confirmed that real-world exemplars of the tools in the cueing studies were associated more strongly with specific grasping patterns than the elongated fruits and vegetables. Together, our results demonstrate that affordance effects on attentional capture are driven by the head end of a tool. Prioritizing the head end of a tool is adaptive because it ensures that the most relevant region of the object takes priority in selecting an effective motor plan.

Introduction

Objects in our everyday environment vary in the extent to which they offer functional interaction. The properties of an object that convey relevance for action have been referred to classically as “action affordances” (Gibson, 1979). Tools are a unique class of objects, because they are artifacts whose function is tied closely to the identity of the object, and the actions they typically afford consist of highly specific motor routines (Creem & Proffitt, 2001a; Guillery, Mouraux, & Thonnard, 2013; Tucker & Ellis, 1998). For example, for an able-bodied observer, a nearby hammer affords pounding and it is wielded with a characteristic up-and-down arm motion. This strong functional specificity differentiates tools from other classes of objects, many of which are familiar and graspable, but whose identity is not typically associated with a specific function. For example, although a carrot could be grasped at its end to pound something, natural objects, such as vegetables, do not have a specific function and are not associated with a typical motor routine.

In line with the strong functional specificity of tools, a well-known finding is that viewing a tool automatically facilitates motor responses. For example, viewing a tool can speed responses that are compatible with the object’s function, even when the shape of the object is irrelevant to the observer’s task (Tucker & Ellis, 1998, 2001). These affordance effects are thought to arise due to the automatic activation of brain regions that integrate visual and motor information, and are sometimes called “visuomotor responses” (Gallivan, McLean, & Culham, 2011; Handy, Grafton, Shroff, Ketay, & Gazzaniga, 2003). For example, compared with non-manipulable objects, such as faces, houses, and animals (Chao & Martin, 2000; Gallivan, McLean, Valyear, & Culham, 2013; Handy et al., 2003; Proverbio, Adorni, & D'Aniello, 2011), and basic shapes (Creem-Regehr & Lee, 2005), tools elicit neural responses in posterior parietal cortex (PPC) and ventral premotor cortex (PMv) of the left hemisphere—areas understood to be involved in processing visual inputs for planning and performing actions (Lewis, 2006).

Given the apparent overlap between dorsal systems involved in planning and executing action, and those of spatial attention (Craighero, Fadiga, Rizzolatti, & Umilta, 1999; Rizzolatti, Riggio, Dascola, & Umilta, 1987), and eye-movements (Kustov & Robinson, 1996; Sheliga, Riggio, Craighero, & Rizzolatti, 1995), an important prediction is that automatically preparing a grasp should bias the allocation of attention between competing visual representations. According to a classic object competition model (Desimone & Duncan, 1995; Duncan, Humphreys, & Ward, 1997), sensory inputs compete for neural representation within visual and motor systems that represent object properties and their implications for action. Competition is integrated across the sensorimotor network and the “winner” is selected for further processing and response planning. To the extent that higher-order attributes, such as action affordances, are sufficient to influence the selection of competing visual inputs (Humphreys et al., 2013), this should enhance visual processing at the attended location (Adamo & Ferber, 2009; Handy et al., 2003).

In line with this prediction, there is mounting evidence that not only preparing a genuine motor response towards an object (Bekkering & Neggers, 2002; Humphreys & Riddoch, 2001; Kitadono & Humphreys, 2007; Symes, Ottoboni, Tucker, Ellis, & Tessari, 2010; Wykowska, Schubo, & Hommel, 2009) but also looking at objects that imply action (Handy et al., 2003; Humphreys et al., 2013) has a pronounced influence on attentional orienting. For example, patients with unilateral neglect are faster and more able to locate target objects within the neglected field when search is based on affordance-related cues (e.g., “find a target for which you would make a twisting action”) versus color or name cues (Humphreys & Riddoch, 2001). Neurologically healthy observers are also faster to respond to images of graspable versus non-graspable objects, particularly when the objects appear to lie within reach (Garrido-Vasquez & Schubo, 2014). The bias in attention towards tools is paralleled by strengthened neural responses in fronto-parietal brain regions. For example, using a variant of the classic Posner cueing paradigm (Posner, Snyder, & Davidson, 1980), Handy et al. (2003) presented observers with two line drawings of objects (which served as cues) simultaneously in either hemifield. The drawings depicted either tools or non-tool objects. The observer’s task was to detect a target grating that was later superimposed upon one of the two objects. The authors compared brain responses using EEG, and later fMRI, on trials in which targets were superimposed on the competing tool versus non-tool (non-graspable) cues. Event-related potential (ERP) data showed that attention (as reflected by increased P1 amplitude) was drawn to tools, particularly when the tool was located in the right (versus left) and lower (versus upper) visual field. 
The ERP results were paralleled by stronger fMRI responses for right-sided tool displays within left dorsal premotor (PMd), and inferior parietal cortex, consistent with the overall pattern of left-lateralization of tool-specific fMRI responses (Lewis, 2006). Similarly, using EEG, Humphreys et al. (2010) found that when participants made decisions about images of objects that were gripped congruently versus incongruently, there was a “desynchronization of the mu rhythm” (an early neural signature of action preparation) over motor cortex in the left hemisphere ~100-200 ms after display onset. Increased attentional allocation towards, and early dorsal coding, of tools was later demonstrated by Proverbio et al. (2011) using source localization of high-density ERP signals: early neural responses (within 250 ms of stimulus onset) that were greater for colored images of tools versus non-tools were recorded in somatosensory and premotor areas, followed by a later tool-specific component (in the 550-600 ms time window) over centroparietal cortex, particularly in the left hemisphere.

Together, these results support the argument that tools bias attention and activate dorsal motor networks involved in action programming, but they leave open the question of which part of a tool drives attentional capture. Tools, such as those used in the studies described above, are characteristically elongated objects with two distinct ends: the handle and the head. The handle is the part that is usually grasped to use the tool, while the head specifies the identity of the tool and is the part that is normally used to interact with or modify other objects or surfaces. Although the results of a number of studies have been interpreted to suggest that tool handles drive affordance effects on behavior more generally (Anderson, Yamagishi, & Karavia, 2002; Barrett, Davis, & Needham, 2007; Cho & Proctor, 2010; Masson, Bub, & Breuer, 2011; Phillips & Ward, 2002; Riddoch, Edwards, Humphreys, West, & Heafield, 1998; Tucker & Ellis, 1998; Vainio, 2009; Yang & Beilock, 2011; Yoon & Humphreys, 2007), the effects can be inconsistent (Cho & Proctor, 2011) and task-specific (Pellicano, Iani, Borghi, Rubichi, & Nicoletti, 2010; Vainio, Ellis, & Tucker, 2007). Moreover, few, if any, studies have tested this question using single-element displays with behavioral tasks sensitive to early attentional orienting.

In the one study that has addressed this question to date, Matheson, Newman, Satel, and McMullen (2014) tested whether detection of a target probe was superior at the handle versus the head end of images of everyday graspable objects (tools) using a modified version of the Posner cueing task (Posner et al., 1980). Performance for the tools was contrasted with control images consisting of large animals. They found that detection accuracy was superior for targets that appeared near the handle versus the head end of the tools, and that this effect was paralleled by an enhancement in the early P1 ERP component for targets cued by the handle. There was no behavioral or EEG evidence for a lateralized bias towards the head or tail of the animals (although see Matheson, White, & McMullen, 2013). Surprisingly, and contrary to the findings of the previous ERP studies described above (Handy et al., 2003; Humphreys et al., 2010; Proverbio et al., 2011), the authors concluded that the P1 component probably reflects early attentional orienting that precedes the activation of dorsal sensorimotor responses, and that the effect was best explained by lower-level stimulus attributes rather than action affordance (Matheson et al., 2014). However, in line with the possibility that the tool handles cued attention due to lower-level shape cues, other studies have found that basic shapes that are not strongly associated with grasping actions but whose global shape conveys a sense of directionality can have a strong influence on attentional orienting (Anderson et al., 2002; Sigurdardottir, Michalak, & Sheinberg, 2014). It is also the case that, compared with a tool head, the handle could serve as a more reliable spatial cue for the position of the upcoming target (Gould, Wolfgang, & Smith, 2007).

We used a modified Posner cueing paradigm, modelled on that of Matheson et al. (2014), to examine whether attention is drawn to the handle versus the head end of everyday elongated tools when lower-level biases on attention are minimized. In the cueing experiments, participants were asked to detect a target dot that was positioned near either the handle or the head end of a single, centrally presented, high-resolution color photo of a tool (the cue), which was presented for 800 ms (Experiment 1) or 200 ms (Experiment 2). Importantly, to determine the extent to which lateralized effects of attentional capture by the tool images were attributable to action affordances implied by the image, we compared performance for the tools with natural control stimuli (fruits and vegetables) that are presumably not strongly associated with a specific function or action routine. Critically, we positioned square boxes at either end of the cue, which served as markers to indicate the possible position of the upcoming target. The markers therefore minimized the potential influence on attentional capture of lower-level stimulus characteristics, such as directionality and spatial uncertainty, that could arise in the context of elongated or asymmetric stimuli (Matheson et al., 2013, 2014). In Experiment 3, we sought to confirm whether or not real-world exemplars of the tools (which were depicted as color photographs in the cueing experiments) evoked more consistent grasping responses than the vegetable and fruit control stimuli. To maximize the relevance of our findings to real-world contexts, all of the images were scaled to match the size of the real-world objects they depicted.

Experiment 1

Method

Participants

Twenty-two undergraduate students (17 females, mean age = 21.95, SD = 2.9) from the University of Nevada, Reno, participated in the experiment for course credit or $10 in cash. All participants were right-handed (Oldfield, 1971) and reported normal or corrected-to-normal vision. Participants provided written informed consent prior to the experiment, and all procedures reported here and in the following studies were approved by the University of Nevada, Reno Social, Behavioral, and Educational Institutional Review Board. Data from two participants were excluded from the analysis due to an inability to maintain their gaze on the central fixation point, resulting in a loss of >50 % of the trials (see Procedure).

Stimuli and apparatus

The stimuli were twelve high-resolution color images of graspable objects. The images served as cues to the location of an upcoming target, which could appear at either the left or right end of the cue. Six of the images depicted tool artifacts, and six depicted natural objects (fruits and vegetables) (Figure 1). Importantly, our control stimuli were realistic color images of objects that are encountered and grasped frequently in everyday life, and were matched closely in size to the tools. The vegetables/fruits provide a strong test of the influence of the strength of action affordance on attentional capture, because although they are graspable they do not have particularly strong action associations or functions. In comparison, stimuli such as large animals and houses differ in size by orders of magnitude from tools, leaving open the possibility that they could be processed or represented differently to graspable objects for reasons other than affordance, even when matched for retinal size (Konkle & Oliva, 2012a, 2012b). Our stimuli, when viewed in profile, all had a left-right asymmetry in vertical extent, and each fruit/vegetable matched one of the tools in being greater in width (vertical extent) either at the head end/“tip,” versus the handle end/“stem” (Figure 1, right panel). We used a Canon Rebel T2i DSLR camera with constant F-stop and shutter speed to photograph the real objects. Image size was adjusted using Adobe Photoshop and the resulting stimuli were matched in size to the real-world objects they depicted. In degrees (°) of visual angle, the vertical (V) and horizontal (H) extent of the vegetables/fruits (V: M = 4.52°; SD = 2.06; H: M = 18.12°; SD = 1.95) matched that of the tools (V: M = 4.08°; SD = 1.81; H: M = 18.95°; SD = 3.22) (V: t(10) = 0.362, p = 0.79; H: t(10) = −0.492, p = 0.63, two-tailed). The images were cropped (1920 × 1080 pixels) and superimposed on a white background (RGB: 255, 255, 255).
The images were displayed at the center of a 27” ASUS (VG278HE) LCD monitor (120-Hz refresh rate) with a screen resolution of 1920 × 1080 pixels, controlled by an Intel Core i7-4770 3.4 GHz computer (16 GB RAM), supported by a dedicated NVIDIA Quadro K4000 video card. The target was a gray dot (RGB: 128, 128, 128) of 50 mm in diameter (0.45° VA). The target was presented such that its edge appeared 100 mm from the nearest edge of the image (0.91° VA). Two black squares (100 × 100 mm), marking the potential position of the upcoming target, were centered vertically at each end of the cue. The fixation point was a black cross (10 × 10 mm, 1.01° VA) presented at the center of the screen. Throughout all trials, the participant’s head was stabilized using a chin rest fixed at 60 cm from the monitor. Participants’ gaze was monitored on all trials using a remote infrared eye-tracker (RED, SMI, Germany) with 60-Hz sampling rate, ~0.03° spatial resolution, and 0.4° accuracy. At the beginning of the experiment the eye tracker was calibrated using a nine-point calibration procedure. Participants used a standard wired QWERTY computer keyboard to make a button-press response to indicate target location. Stimulus presentation and timing, and recording of responses, were controlled using MATLAB (Mathworks, USA) and Psychtoolbox (Brainard, 1997).

Figure 1. The cues were six high-resolution images of common elongated tool artifacts (left panel), and six control images depicting natural stimuli (vegetables and fruits; right panel). All images were presented in color and were matched in size to the real-world objects they depicted. The non-tool control stimuli provide a strong test of the strength of action affordances on attention, because they are familiar and graspable, and they are closely matched to the tools for size, elongation, and left/right shape asymmetry, yet they are not associated strongly with a specific function or action routine.
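For readers who wish to relate on-screen sizes to degrees of visual angle, the standard conversion can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the only value taken from the text is the 60-cm viewing distance, and the formula assumes a flat stimulus centered on the line of sight. Values computed this way depend on the exact viewing geometry and need not reproduce the reported angles exactly.

```python
import math

# Viewing distance stated in the text (chin rest at 60 cm).
VIEWING_DISTANCE_MM = 600.0


def visual_angle_deg(size_mm: float, distance_mm: float = VIEWING_DISTANCE_MM) -> float:
    """Visual angle (degrees) subtended by a stimulus of the given size,
    assuming it is centered on the line of sight (hypothetical helper)."""
    return math.degrees(2.0 * math.atan(size_mm / (2.0 * distance_mm)))


def size_for_angle_mm(angle_deg: float, distance_mm: float = VIEWING_DISTANCE_MM) -> float:
    """Inverse conversion: physical size (mm) that subtends the given angle."""
    return 2.0 * distance_mm * math.tan(math.radians(angle_deg) / 2.0)
```

For example, `visual_angle_deg(10)` gives the angle subtended by a 10-mm stimulus at 60 cm, and `size_for_angle_mm` inverts the conversion.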

Procedure

On each trial, an image of a tool or non-tool (cue) was presented, oriented to the left or right (Figure 2). We measured observers’ response time and accuracy to detect a target dot that appeared briefly near the handle/stem or head end/tip of the cue. At the beginning of the study, participants were instructed to maintain fixation at the center of the screen throughout the length of the study and to not pay attention to the cue and only anticipate the target. The cue did not appear until fixation had been maintained upon the central fixation point for 2,000 ms. The cue display consisted of an object image facing left or right, flanked at either end by a square in which the target could appear. The cue display was presented centrally for 800 ms, before target onset (50 ms). The cue remained on-screen for 400 ms, after which time all stimuli offset and participants had a further 6,000 ms to enter a response. If a response was not entered, the response window remained on-screen for 6,000 ms. The intertrial interval was 2,000 ms. Participants completed all trials with the head secured in a chin rest, which ensured constant viewing angle and facilitated monitoring of fixation using the eye tracker. Any trials in which gaze deviated outside an area of 2.5° VA in diameter around the fixation point during the 800-ms cue period were removed from further analysis (747 of 3,840 trials).
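The fixation-based exclusion rule described above can be sketched as a simple filter. This is a minimal sketch, not the authors' analysis code: it assumes that gaze samples recorded during the cue period are available as (x, y) offsets from the fixation point in degrees of visual angle, and the trial dictionary layout and function names are hypothetical. The 2.5° window diameter is taken from the text.

```python
import math
from typing import Dict, Iterable, List, Tuple

# Window diameter from the text; samples must stay within half this distance.
FIXATION_WINDOW_DIAMETER_DEG = 2.5


def fixation_maintained(
    gaze_samples: Iterable[Tuple[float, float]],
    diameter_deg: float = FIXATION_WINDOW_DIAMETER_DEG,
) -> bool:
    """True if every cue-period gaze sample lies inside the circular window.

    Samples are assumed to be (x, y) offsets from fixation in degrees.
    """
    radius = diameter_deg / 2.0
    return all(math.hypot(x, y) <= radius for x, y in gaze_samples)


def keep_valid_trials(trials: List[Dict]) -> List[Dict]:
    """Drop trials in which gaze left the fixation window during the cue.

    Each trial is assumed to carry its cue-period samples under the
    (hypothetical) key "gaze".
    """
    return [trial for trial in trials if fixation_maintained(trial["gaze"])]
```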

Trial sequence and displays used in a modified version of the Posner cueing task. Participants fixated a central crosshair for 2,000 ms prior to the onset of the cue (800 ms). The cue display consisted of a single, centrally positioned image of a tool or non-tool, flanked at either end by a black square that indicated the possible position of the upcoming target. A target (gray dot) appeared briefly (50 ms) within one of the squares positioned at either the head or handle of the tool, or at the tip or stem of the non-tool image. The inset (upper right) shows a target appearing at the head of a tool cue (upper), and the tip of the non-tool cue (lower). The cue remained on-screen for 400 ms, followed by a blank screen that remained for a further 6,000 ms, or until a response was entered. The inter-trial interval was 2,000 ms. For illustration purposes the target is shown above as a dark gray dot, but in the experiment the target was low-contrast.

On half of the trials, the tool/non-tool stimuli were presented with the handle/stem facing left, and on the remaining trials the handle/stem faced right. As a result of varying stimulus orientation, the target was cued by the handle/head end of a tool, or the tip/stem end of the non-tool fruit/vegetable. For each cue Orientation (left vs. right), the target appeared near the handle/stem on half of the trials, and near the head end/tip on the remaining trials. The experiment consisted of 4 blocks of 48 trials each (12 stimulus exemplars [6 tools vs. 6 non-tools; Table 1] × 2 orientations [left vs. right] × 2 target positions [head/tip vs. handle/stem]), for a total of 192 trials. The order of conditions was randomized within each block. The experiment took approximately 30 minutes to complete. RT and accuracy data were analyzed using repeated measures (RM) analysis of variance (ANOVA) and follow-up paired samples t tests, where appropriate.
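The fully crossed design described above (12 exemplars × 2 orientations × 2 target positions, repeated over 4 blocks) can be illustrated with a short sketch. The experiment itself was run in MATLAB with Psychtoolbox; the Python below is only an illustration of the design arithmetic, and the exemplar labels, dictionary keys, and seeding scheme are hypothetical.

```python
import itertools
import random
from typing import Dict, List

# Placeholder exemplar labels; the actual objects are those shown in Figure 1.
EXEMPLARS = [("tool", i) for i in range(6)] + [("non-tool", i) for i in range(6)]
ORIENTATIONS = ["left", "right"]              # direction the handle/stem faces
TARGET_POSITIONS = ["head/tip", "handle/stem"]
N_BLOCKS = 4


def build_block(rng: random.Random) -> List[Dict]:
    """One block: every exemplar x orientation x target position, shuffled."""
    block = [
        {"category": category, "exemplar": index,
         "orientation": orientation, "target": target}
        for (category, index), orientation, target
        in itertools.product(EXEMPLARS, ORIENTATIONS, TARGET_POSITIONS)
    ]
    rng.shuffle(block)  # order of conditions randomized within each block
    return block


def build_session(seed: int = 0) -> List[List[Dict]]:
    """A full session of N_BLOCKS independently shuffled blocks."""
    rng = random.Random(seed)
    return [build_block(rng) for _ in range(N_BLOCKS)]
```

Each block built this way contains 48 trials, so a session of 4 blocks contains 192 trials, matching the counts given in the text.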

Participants were instructed to make a speeded decision as to whether the target dot appeared on the left or right side of the display. Participants were explicitly informed that the central image cue was not predictive of the location of the upcoming target and that it should be ignored for optimal task performance. Participants were instructed to respond as quickly as possible, while maintaining highest possible accuracy (Sigurdardottir, Michalak, & Sheinberg, 2014).

Results

Data were analyzed from 20 subjects. In the analysis of reaction times (RTs), only correct trials were included, and trials in which RT was >3 SD from the mean were excluded from further analysis (0.005 %). In the ANOVA on accuracy, and for Experiment 2, we used all trials in the analysis (including those >3 SDs from the mean). The RT and accuracy data were analyzed using a three-way RM ANOVA, with the factors of Object Category (tools vs. non-tool controls), Target Location (head/tip vs. handle/stem) and Orientation (handle/stem left vs. right). In the analysis of RTs, although there was a marginal main effect of Target Location in which RTs were slightly faster towards the head/tip end of the stimuli versus the handle/stem (F(1, 19) = 3.55, p = 0.075, ηp2 = 0.158), there was a significant two-way interaction between Target Location and Object Category (F(1, 19) = 6.29, p = 0.021, ηp2 = 0.249). Figure 3A shows the mean RT to detect targets located at the head/tip versus handle/stem end, separately for the tool and non-tool control stimuli. Observers were faster to detect targets located at the head end compared with the handle of the tools (head end: M = 506 ms, SD = 17.89, handle: M = 519 ms, SD = 22.36; t(19) = −3.358, p = 0.003, r = −0.31), whereas there was no difference at either end of the control stimuli (tip: M = 509 ms, SD = 26, stem: M = 508 ms, SD = 20; t < 1). There were no other significant main effects or interactions in the RT data (all p > 0.120). For illustrative purposes, Figure 4 shows the mean RT to detect targets at the head/tip versus the handle/stem separately for each of the stimulus exemplars. Qualitative inspection of the RT data shows that performance was biased consistently in the direction of the head end for each of the tool stimuli, even for stimuli with markedly different global shapes. In contrast, detection RTs for targets positioned near the tip versus the stem of the non-tool control stimuli were mixed in that they did not favor either end.
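The RT preprocessing and the follow-up comparison reported above can be sketched in a few lines. This is a hedged illustration rather than the authors' pipeline: the text does not state whether the 3-SD criterion was applied per participant, per condition, or over the pooled data, so the sketch simply trims whatever list of RTs it is given, and the function names are hypothetical. The paired t statistic is computed from per-subject condition means (e.g., head vs. handle), giving n − 1 degrees of freedom.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def trim_rts(rts: Sequence[float], n_sd: float = 3.0) -> List[float]:
    """Keep RTs within n_sd sample standard deviations of the mean.

    The grouping over which the mean and SD are computed is an assumption;
    pass in the RTs for whichever unit (participant, condition) applies.
    """
    mean = statistics.mean(rts)
    sd = statistics.stdev(rts)
    return [rt for rt in rts if abs(rt - mean) <= n_sd * sd]


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom for a - b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

With one mean RT per subject at the head end and one at the handle, `paired_t(head_means, handle_means)` returns a negative t when responses are faster at the head end, which is the direction of the effect reported for the tools.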

Target detection performance plotted separately as a function of Object Category (tools versus non-tools) and Target Location (head / tip vs. handle/stem). (A) Reaction time (RT) to detect targets was faster when the target was positioned near the head versus the handle of the tool images. There was no difference in detection RT for the tip vs. stem of the non-tool stimuli. (B) There was no significant difference in target detection accuracy between the head versus handle of the tools, or the tip versus stem of the non-tools, although qualitatively, accuracy was higher for targets positioned near the tool head. Error bars represent ±1 standard error of the mean.

For illustrative purposes, RT is plotted for tool (left panel) and non-tool (right panel) stimuli for each Target Location, separately for each stimulus exemplar. Qualitatively, RTs were consistently faster at the head versus the handle of the tools, irrespective of the global shape of the object. No consistent pattern was observed for the non-tool control fruit/vegetable stimuli.

Overall, detection accuracy was high in all conditions (M = 99.6 % correct, SD = 0.009), indicating that subjects were able to comply with the instructions to maintain high accuracy levels (Matheson et al., 2014; Sigurdardottir, Michalak, & Sheinberg, 2014). For comparative purposes and to demonstrate that the effects of RT reported above were not due to speed-accuracy tradeoffs, Figure 3B displays the mean % of correct target detections at the head/tip versus handle/stem end, separately for the tool and non-tool stimuli. Qualitatively, the pattern of detection accuracy matched that of the RT analysis, with a trend for superior performance at the head end of the tools (vs. the handle), but no lateralized performance difference for the non-tool control stimuli. A three-way RM ANOVA revealed a significant main effect of Orientation (F(1, 19) = 11.24, p = 0.003, ηp2 = 0.372), indicating that detection accuracy was higher when the handle/stem was oriented leftward (and the head/tip oriented rightward) (M = 99.3 %, SD = 0.89 %) versus the handle/stem oriented rightward (M = 99.9 %, SD = 0.44 %). There were no other statistically significant main effects or interactions in the accuracy data (all p > 0.31).

Experiment 2

In Experiment 1, we found that targets preceded by an 800-ms cue depicting a tool were detected more rapidly when they appeared near the head end of the tool versus the handle. No lateralized detection performance bias was observed for targets positioned at either end of the control fruit/vegetable stimuli. Together, these results suggest that there is a bias in covert attention towards the head end of a tool—the region that specifies the action affordance implied by the image. It is possible, however, that attention could have been deployed to the handle relatively early following cue onset and shifted subsequently to the opposite end of the shape (Egly, Driver, & Rafal, 1994). The results reported above could therefore reflect slower responding to targets at the previously attended handle location consistent with a pattern of inhibition of return (IOR) (Posner & Cohen, 1984), rather than a facilitatory effect reflecting automatic initial orienting towards the tool head. It is the case that centrally presented cues, such as arrows, can elicit IOR, although often at longer cue-target SOA delays than those studied here (i.e., 900 ms: Weger, Abrams, Law, & Pratt, 2008). To rule out this alternative explanation, we examined cueing effects for tools (and control stimuli) with a brief cue-target SOA of 200 ms. If attention is drawn automatically to tool handles early after cue onset (followed by subsequent inhibition-of-return to the cued location), then detection performance should be superior for targets appearing near the handle (versus the tool head) at a shorter 200 ms cue-target SOA. 
Alternatively, if the results of Experiment 1 reflect a lateralized bias in attention that was initially in the direction of the head end of the tool, then at the shorter 200 ms cue-target SOA we might expect to observe either an early facilitatory effect on RTs to the head end of the tool, or no difference in detection performance at the head versus the handle, consistent with previous studies showing that the time course of reflexive attentional orienting to the periphery following meaningful central cues can take longer to develop (Fischer, Castel, Dodd, & Pratt, 2003; Van der Stigchel, Mills, & Dodd, 2010) than for peripheral cues.

Participants

Twenty undergraduate students (14 females, mean age = 21.6, SD = 3.1) from the University of Nevada, Reno, participated in Experiment 2 for course credit. All participants were right-handed (Oldfield, 1971) and reported normal or corrected-to-normal vision. Data from all 20 participants were analyzed.

Stimuli, apparatus, and procedure

The stimuli, apparatus, and procedure for Experiment 2 were identical to Experiment 1, except that the cue was presented for 200 ms before the onset of the target. As in Experiment 1, an eye tracker was used to ensure that our participants maintained fixation before trial onset and throughout the cue period. Trials in which gaze deviated outside 2.5° VA in diameter around the fixation point were removed from further analysis (359 of 3,840 trials). As in Experiment 1, participants each performed 4 blocks of trials, and with 48 trials per block, there were a total of 192 trials. Participants were again informed that the central image cue was not predictive of the location of the upcoming target and that it should be ignored for optimal task performance.

Results

For Experiment 2, mean (SD) target detection RT in each Object Category, Target Location, and Cue Orientation are shown in Table 1. The RT and accuracy data were analyzed using RM ANOVA with the factors of Object Category (tools vs. non-tool controls), Target Location (head/tip vs. handle/stem) and Orientation (handle/stem left vs. right). For the analysis of reaction times (RTs), only correct trials were included, and trials in which RT was >3 SD from the mean were excluded from further analysis (0.003 %). There were no significant main effects or interactions in the analysis of RTs (Table 1). Detection accuracy was again high (M = 99.7 %, SD = 0.89 %), and there were no significant main effects or interactions in accuracy.

In summary, in Experiment 2 we found no lateralized bias in the allocation of attention towards the handle (or the head end) of a tool image following the brief (200 ms) cue-target SOA. Similarly, no lateralized bias in attention was observed in target detection for the vegetable/fruit cues. Together with the results of Experiment 1, our results indicate that affordance effects on attentional capture reflect an initial attentional bias toward tool heads, not tool handles. This attentional effect is measurable behaviorally at 800 ms, but not at very brief (e.g., 200 ms) cue-target SOAs, consistent with previous studies that have found that learned, arbitrary orienting responses elicited by centrally presented cues emerge relatively more slowly (Fischer et al., 2003; Van der Stigchel et al., 2010). The results of Experiment 2 further suggest that the box markers we positioned at either end of the cues were effective in minimizing rapid, reflexive attentional orienting as a result of lower-level shape cues (Anderson et al., 2002; Sigurdardottir, Michalak, & Sheinberg, 2014).

Experiment 3

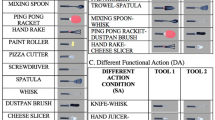

In Experiment 1, we observed a lateralized attentional bias towards tool heads (versus handles) but no bias toward the stem vs. tip end of vegetables and fruits. This result is consistent with the notion that tool heads capture attention because they carry important information about affordance for action. We postulated that a lateralized bias should not be observed for other graspable objects like fruits and vegetables, because they do not have strong action affordances. Although fruits/vegetables are not associated with stereotypical actions or motor routines, they are more similar to tools in terms of frequency of use, real-world size, and elongation than stimuli such as large animals and inanimate objects, which have been used as controls in previous studies (Handy et al., 2003; Matheson et al., 2013, 2014). Nevertheless, it is important to provide empirical evidence that tools are indeed more strongly associated with specific action routines than elongated fruits and vegetables. In Experiment 3, we asked a set of observers to grasp real-world exemplars of each of the stimuli depicted as image cues in Experiments 1 and 2 and photographed each subject’s grasp. We then collected similarity ratings on the grasps from a different set of participants.

Participants

A total of 22 right-handed observers participated in Experiment 3 for course credit. Twelve of these observers (who had previously completed Experiment 2; 8 females, mean age = 21.4, SD = 2.9) performed the Grasping Task and were photographed while performing a typical grasp with each of the objects. The remaining 10 participants, who were new to the study (6 females, mean age = 23.3, SD = 3.2), performed the Rating Task, in which they judged the overall similarity of the grasps that had been performed with each object.

Stimuli and apparatus

In the Grasping Task, we used the six real-world tools and the six vegetables and fruits that were depicted as photographs in the cueing studies (Figure 1). An additional two objects (pear, plastic fork) served as practice items for the purpose of explaining the task to participants. The stimuli were presented on a table covered with a black cloth. Participants were photographed during naturalistic grasping using an iPhone 6s camera (12 megapixel). For the Rating Task, the images for each object were displayed on a 27” ASUS (VG278HE) LCD monitor.

Procedure

In the Grasping Task, the experimenter placed each object on the table, directly in front of the participant. Participants were instructed to grasp each item as if to perform the most typical action with the object. Participants initially performed a grasp with each of the practice stimuli (fork, pear) before continuing with the experimental items. The 12 objects were presented to observers one at a time in random order. The objects were placed on the table with the handle/stem pointing towards the participant’s dominant hand. The experimenter photographed each subject’s hand after the object had been grasped (Figure 5). The Grasping Task took ~15 minutes to complete.

“Typical grasps” for the tool and non-tool objects. In the Grasping Task, participants were instructed to grasp each item “as if to perform the most typical action with the object.” Photos are shown separately for each tool (left panel) and fruit/vegetable (right panel). Each row illustrates grasps performed by a single subject (S1-12).

In the Rating Task, the 12 grasp images for each object (one image per subject in the Grasping Task) were displayed to participants in a 4 × 3 matrix on a computer monitor, separately for each exemplar. The stimuli were displayed in random order. Participants were told that the images depicted photos of twelve individuals grasping familiar objects and were asked to rate the overall similarity of the grasps for each object. Responses were made by entering a number from 1 (“not similar at all”) to 10 (“very similar”) on a computer keyboard. The Rating Task took ~10 minutes to complete.

Results

Photos from the Grasping Task are displayed in Figure 5, separately for each object (columns) and participant (rows). Inspection of the photos indicates, qualitatively, that the grasping actions were more similar across subjects for the tools than the non-tool exemplars. Indeed, several of the participants grasped the vegetables naturally with the left hand, as if preparing “to peel” with the dominant hand (i.e., Subject 4, eggplant and pepper).

For the Rating Task, mean (SE) similarity ratings from a separate set of observers are displayed in Figure 6. For illustrative purposes, the mean similarity ratings are displayed separately for each exemplar, as well as overall for each class of stimuli (tools vs. non-tools). Overall, there was a striking consistency in the mean ratings for the tools (which were generally perceived as being very similar) and for the fruits/vegetables (which were perceived as being less similar). A paired-samples t test confirmed that grasps for the tools were significantly more similar than those for the fruit/vegetables (t(9) = 14.949, p < 0.001, Cohen’s d = 2.839).

Mean similarity ratings for the tool (dark gray) and non-tool (light gray) stimuli in the Rating Task. Ratings were collected from 10 individuals who had not participated in the cueing experiments. Mean similarity ratings are shown separately for each item (left), as well as averaged across the tool and non-tool sets (right). Tool grasps were rated as being significantly more similar than those for the fruits/vegetables.
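The paired comparison of similarity ratings described above can be illustrated with a minimal sketch. It assumes each rater contributes one mean rating for tools and one for fruits/vegetables; the ratings below are invented for illustration and are not the study's data, and the function name `paired_t` is hypothetical. The effect size computed here is d_z (mean difference divided by the standard deviation of the differences), which is only one of several conventions for Cohen's d and may not be the variant used in the reported analysis.

```python
import math
from statistics import mean, stdev

def paired_t(a, b):
    """Paired-samples t statistic, degrees of freedom, and d_z for two matched lists."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long lists with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]   # per-rater difference scores
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)                  # sample SD of the differences (n - 1)
    t = mean_diff / (sd_diff / math.sqrt(n))
    d_z = mean_diff / sd_diff               # one common effect-size convention
    return t, n - 1, d_z

# Hypothetical per-rater mean similarity ratings (1-10 scale), 10 raters.
tool_ratings = [8.5, 9.0, 7.5, 8.0, 9.5, 8.0, 7.0, 9.0, 8.5, 8.0]
food_ratings = [4.0, 5.5, 3.5, 4.5, 5.0, 4.0, 3.0, 5.5, 4.5, 3.5]

t, df, d_z = paired_t(tool_ratings, food_ratings)
print(f"t({df}) = {t:.3f}, d_z = {d_z:.3f}")
```

With 10 raters the test has 9 degrees of freedom, matching the form of the statistic reported in the Results; the numerical values printed by this sketch depend entirely on the invented ratings.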

Discussion

We used a sensitive attentional task to determine whether the biases in visuospatial attention that have been reported for elongated tools are attributable to lateralized capture by the end that is grasped (the handle) or the end that uniquely identifies the object (the head). Detection performance was compared for tools that have a strong action association versus fruit/vegetable control stimuli that are not associated with a specific function or motor routine. Critically, we used cue displays that minimized potential influences of global shape and spatial uncertainty on attention in a modified version of the Posner cueing task (Green & Woldorff, 2012). To the extent that one end of a tool is prioritized for attention, this should lead to increased visual sensitivity, as reflected by better target detection performance, at the attended location. In Experiment 1 we found a lateralized bias in detection performance for the tool cues: low-contrast targets were detected more rapidly when they appeared near the head versus the handle of the tool. In Experiment 2 we reduced the cue-target SOA from 800 ms (Experiment 1) to 200 ms and confirmed that the attentional advantage near the tool heads observed in Experiment 1 was not due to inhibition of return resulting from an early attentional bias toward tool handles. Indeed, at the shorter cue-target SOA we did not find any evidence of lateralized attentional bias for the tools or the control stimuli, consistent with the expected (slower) time course of reflexive attentional orienting to the periphery following meaningful central cues (Fischer et al., 2003; Van der Stigchel et al., 2010). Importantly, the attentional bias to tool heads observed in Experiment 1 was automatic because the image cues were orthogonal to the observers’ task and they held no predictive information about target location. Moreover, we found no systematic attentional bias in favor of either end of the fruit/vegetable control stimuli.
In Experiment 3, we compared grasping patterns for the real-world versions of our tool and non-tool stimuli and confirmed that the tools were associated with more specific grasping actions than the fruits and vegetables. These results suggest that it is the association of tools with specific actions, rather than simply their size or familiarity (Handy et al., 2003; Matheson et al., 2013), that drives lateralized effects on attentional capture. Together, our findings help to resolve the apparent discrepancy between studies that have documented high-level effects of action affordance on attention (Garrido-Vasquez & Schubo, 2014; Handy et al., 2003; Humphreys et al., 2013; Roberts & Humphreys, 2011) and recent arguments that these attentional effects are not attributable to affordances (Matheson et al., 2014). Specifically, when lower-level influences of the stimulus are controlled in the context of our version of the Posner cueing task, lateralized biases on attention are observed, but in this case attention is drawn rapidly to the head end of tools, not the handle.
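The lateralized bias discussed above amounts to a difference in mean detection time between targets at the two ends of a cue. A minimal sketch of that computation follows, assuming trials are stored as (cue class, target end, response time in ms) tuples. The trial values, the labels, and the function name `lateralized_bias` are all hypothetical and chosen only to illustrate the pattern of a head advantage for tools and no bias for the control stimuli; they are not data from the experiments.

```python
from statistics import mean

def lateralized_bias(trials, cue_class, end_a, end_b):
    """Mean RT at end_b minus mean RT at end_a, in ms (positive = faster at end_a)."""
    rt_a = [rt for cls, end, rt in trials if cls == cue_class and end == end_a]
    rt_b = [rt for cls, end, rt in trials if cls == cue_class and end == end_b]
    if not rt_a or not rt_b:
        raise ValueError("need at least one trial at each end")
    return mean(rt_b) - mean(rt_a)

# Hypothetical trials: (cue class, target end, response time in ms).
trials = [
    ("tool", "head", 410), ("tool", "head", 420), ("tool", "head", 400),
    ("tool", "handle", 440), ("tool", "handle", 450), ("tool", "handle", 430),
    ("food", "tip", 425), ("food", "tip", 430), ("food", "tip", 420),
    ("food", "stem", 426), ("food", "stem", 424), ("food", "stem", 425),
]

tool_bias = lateralized_bias(trials, "tool", "head", "handle")
food_bias = lateralized_bias(trials, "food", "tip", "stem")
print(f"tool head advantage: {tool_bias} ms; fruit/vegetable tip advantage: {food_bias} ms")
```

In a real analysis the difference would be computed per observer and then tested across observers, rather than pooled over trials as in this sketch.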

Our findings build on and extend the results of recent work by Matheson and colleagues (2014) who argued that facilitation of motor responses to tools reflects low-level attentional biases elicited by tool handles, rather than the automatic activation of motor schemas. The authors used a modified version of the Posner cueing task to compare detection performance for targets cued by images of manipulable objects (e.g., mug, frying pan, knife, axe) versus large animals (e.g., bear, cheetah, deer, elephant), which presumably we do not interact with manually and therefore are not associated with action affordances. The cues had no effect on reaction times in their study, but detection accuracy was higher for targets located near the handle rather than the head end of the tools. No lateralized performance bias was observed for the control stimuli, although qualitatively accuracy was also superior for targets cued by the animal heads (which were more pointy/directional) versus the tail, consistent with a previous study by the same authors using a matching stimulus set (Matheson et al., 2013). The behavioral effect of the tool cues on accuracy was matched by an enhanced P1 ERP component for targets cued by the handle (versus the head) of the tools, but again no neural difference was observed for the head versus tail of the animal cues. The authors concluded that attention is drawn automatically to tool handles. Critically, although these behavioral results could be viewed as being consistent with an affordance explanation (because lateralized effects were observed for the tools but not the control stimuli), the authors argued that “because P1 is an early index of visual attention, it likely precedes activation of sensorimotor simulation in the motor or premotor cortices” (p. 22) and that the effect was explained by lower-level stimulus attributes rather than action affordance (Matheson et al., 2014). 
However, this interpretation of the P1 response seems inconsistent with that of several previous ERP studies reporting high-level tool-based effects on attention (Handy et al., 2003; Humphreys et al., 2010; Proverbio et al., 2011).

We resolve the apparent discrepancy between the findings of Matheson et al. (2014) and mounting evidence from other studies showing affordance-related effects on attentional orienting. We demonstrate that when low-level attentional biases elicited by tool handles are controlled in the context of a sensitive Posner cueing task, a lateralized bias in target detection performance is observed for tools but not for non-tool control stimuli (as in Matheson et al., 2014). However, under these conditions the direction of the cueing effect is reversed: attention is directed towards the tool head rather than the handle. These results suggest that the directional effect for the tools reported by Matheson et al. (2014) may have reflected stimulus-driven attentional capture due to the global shape of their tool stimuli, which were “pointier” towards the handle (Sigurdardottir et al., 2014), and probably also pointier than the control (animal) stimuli. Indeed, previous studies have shown that global shape cues can have a powerful influence on visuospatial attention, which is typically drawn towards the pointier region of asymmetrical nonsense shapes (Sigurdardottir et al., 2014) and familiar objects (Anderson et al., 2002). Therefore, when lateralized imbalances in stimulus directionality and spatial uncertainty are minimized in the Posner cueing task (Green & Woldorff, 2012), attention is drawn rapidly and reliably to the head end of tools, irrespective of the global shape of the image.

We argue that attentional orienting towards the head end of the tools in our study is unlikely to be due to lower-level attributes of the stimuli. We did not observe a lateralized asymmetry in target sensitivity on randomly interleaved trials depicting images of fruits and vegetables that were matched to the tools in size and shape asymmetry. Similarly, there was no lateralized bias towards either end of the tools (or the control stimuli) when the cue-target SOA was reduced to 200 ms in Experiment 2, arguing against a rapid, reflexive effect on attentional orienting as a result of lower-level shape cues (Sigurdardottir, Michalak, & Sheinberg, 2014). Finally, almost all of our tools were pointier at the handle than the head end, and if our results reflected an influence of global shape, this would predict the opposite pattern: enhanced target detection near the handle. In line with this idea, qualitative inspection of the pattern of response times for each of the tool exemplars used here revealed a strikingly consistent pattern of increased target sensitivity at the head end. In all, our data demonstrate that attention can be influenced by higher-level affordance cues and that the region of an elongated tool that drives these effects on attentional capture is the head, not the handle. Our data for the control stimuli in both the cueing and rating tasks provide a strong test of the claim that action affordances bias attention and rule out alternative explanations based on discrepancies between the real-world size, or familiarity, of “graspable” versus “non-graspable” stimulus classes.

Importantly, our image cues were matched in size and color to the real-world objects they represented. We argue that using realistically sized images of tools should maximize action-related influences on attentional capture, because the dimensions of the image are maximally consistent with the egocentric coordinates for action that would be computed if the stimulus were real and indeed could be grasped (Goodale, 2014). Using schematized or greyscale images of stimuli that are distinctly smaller in size than the real-world objects that they depict (Cho & Proctor, 2011; Matheson et al., 2013, 2014; Phillips & Ward, 2002) may increase the likelihood of observing low-level image-based, rather than affordance-based, influences on attentional orienting. Although it has been argued that we automatically access real-world size information when we recognize an image of an object (Konkle & Oliva, 2012a), which has implications for the activation of neural representations within ventral visual cortex (Konkle & Oliva, 2012b), dorsal stream motor networks are more likely to be sensitive to the dimensions of a stimulus that relate to action, and one important metric is physical size (Goodale, Westwood, & Milner, 2004; Haffenden, Schiff, & Goodale, 2001; Riddoch et al., 2011).

Nevertheless, although richer, more realistic stimuli bear a closer resemblance to the kinds of objects we encounter in everyday life, they can differ on many dimensions and it can be difficult to identify, and therefore control for, the dimensions that influence performance. With respect to color, it is possible that observers were less inclined to attend to one end of our control stimuli, because color was more predictive of object identity compared to that of the man-made artifacts (Humphrey, Goodale, Jakobson, & Servos, 1994). Although we cannot rule out a potential influence of color, if attention was more evenly distributed across the fruit/vegetable stimuli due to color cues to identity, then detection performance should have been equally good at the tip and the stem. Contrary to this prediction, Figure 4 shows that detection performance was biased towards one end of the non-tool exemplars, but unlike the tools the direction of this bias varied from one stimulus to the next in a way that is not clearly predicted by color differences (or global shape). Color cues also are unable to explain the consistent bias we observed towards the head end of the tool stimuli. Finally, it is important to emphasize that in our study the object cues were irrelevant to the observers’ task and they held no predictive information about the location of the target.

Our finding of attentional bias toward the head end of tools seems surprising in some respects because behavioral studies have often shown that manual responses are influenced by the orientation of the handle of graspable objects (Phillips & Ward, 2002; Tucker & Ellis, 1998; Vainio et al., 2007). For example, observers are faster to make a button-press response to an image of a tool when the handle of the stimulus is oriented towards (versus away from) the responding hand (Tucker & Ellis, 1998). Similarly, judgments about actions with an object have been shown to be impaired when an object’s handle is not presented in an appropriate orientation for a grasp (Yoon & Humphreys, 2007). Neuropsychological evidence also suggests that tool handles can influence attention and action (Humphreys & Riddoch, 2001). For example, in patients with anarchic hand syndrome (a neurological condition typically resulting from damage to supplementary motor area (SMA) circuits thought to be responsible for controlling complex voluntary movements; Della Sala, Marchetti, & Spinnler, 1991), the dominant limb responds to visually presented objects in an uninhibited and uncontrolled manner, often acting automatically and at cross-purposes to the other hand. Riddoch et al. (1998) described a patient with anarchic hand syndrome who initiated incorrect and unintentional grasping responses with the dominant right hand but only under conditions where the handle of the object (a cup) was oriented towards the affected hand. The effect was diminished when the stimulus was an unfamiliar “non-object” and when the cup was inverted and presumably no longer in an orientation conducive to its function. Evidence from developmental psychology also suggests that familiarity with how to grasp a tool, rather than its functional properties, is critical in shaping object-directed actions.
Whereas infants aged 12- to 18-months are flexible in the way that they grasp novel tools, they tend to grasp familiar tools by the handle, even when this is detrimental to solving a manual task with the object (Barrett et al., 2007). These provocative results suggest that infants learn about how a tool should be grasped before they understand how the tool is used. Given the results of Matheson et al. (2014), it is possible that in some tasks, global shape cues may be responsible for the observed behavioral effects.

However, there are a number of reasons why the head end of a tool could be critical in driving what appear to be handle orientation effects on attention and behavior. First, although tool actions are initiated by grasping the handle, elongated objects can be grasped in different ways, and not all of these grasps are effective. For example, a handle may be grasped in one way to pick up a tool to move it to a different position, but in a different way to use it according to its specific affordance (Lederman & Klatzky, 1990). Importantly, in most cases it is the shape (Kourtzi & Kanwisher, 2001) and material properties (Cant, Arnott, & Goodale, 2009; Cant & Goodale, 2007) of the head end of a tool (not the handle) that provide specific information about the identity of the object, and this has critical implications for its perceived usefulness. In line with this idea, previous studies have shown that we need to know the identity of a tool before it can be grasped effectively (Creem & Proffitt, 2001b; Guillery et al., 2013; Jarry et al., 2013; Masson et al., 2011). Behavioral priming studies provide particularly strong evidence in favor of the idea that tool heads are critical in driving affordance effects on motor planning. Initiating a tool-specific grasp is faster after having briefly previewed the same (versus a different) tool, a result often termed a “priming effect for action.” Critically, several studies have shown robust action priming effects even when the handles of the various prime stimuli are identical (Squires, Macdonald, Culham, & Snow, 2015; Valyear, Chapman, Gallivan, Mark, & Culham, 2011), indicating that the shape of the tool’s head end is sufficient to prime tool-specific motor routines.
Finally, when observers look at images of common tools their initial saccades, which are known to be tightly linked to attention (Orquin & Mueller Loose, 2013), have been shown to be directed towards the head end, not the handle of the object (van der Linden, Mathot, & Vitu, 2015). It also is possible that the directional bias towards tool heads reflects observers’ predictions about the region of the object that could have the greatest future motion (Kourtzi, Krekelberg, & van Wezel, 2008; Sigurdardottir, Michalak, & Sheinberg, 2014).

Conclusions

Our study resolves important discrepancies between studies that have claimed that tools influence visuospatial attention by virtue of high-level action affordances versus lower-level stimulus-based attributes. We show that when low-level biases are controlled, competition between visual inputs is biased towards the region of a tool that indicates uniquely its identity and function. Given that attention can reflect the need to perform purposive actions with the most relevant object and that action constraints can influence the earliest stages of object selection (Humphreys et al., 2013), the available evidence suggests that attention is drawn rapidly to the head of a tool to facilitate the activation of highly specific motor routines that are tied to tool identity. If selection is based on information derived primarily from the head end of a tool, then why would a tool’s orientation so often influence performance in manual tasks? Given that cueing attention to one part of an object can facilitate discrimination in another part of the same object (Duncan, Ward, & Shapiro, 1994; Egly, Driver, & Rafal, 1994), and programming an action towards one part of an object can lead to enhanced selection of the whole object, even when it has distinct parts (Linnell, Humphreys, McIntyre, Laitinen, & Wing, 2005), tool-specific motor routines may be modulated by the size, position, and orientation of the tool as a whole relative to the effectors. Given that humans are limited in the number of actions that can be performed at a given moment (Allport, 1987), it would seem adaptive not only for the most behaviorally relevant stimulus to control action at one time, but also for the most behaviorally relevant part of an affordance-related object to take priority in the selection of an effective motor plan.

References

Adamo, M., & Ferber, S. (2009). A picture says more than a thousand words: Behavioural and ERP evidence for attentional enhancements due to action affordances. Neuropsychologia, 47(6), 1600–1608. doi:10.1016/j.neuropsychologia.2008.07.009

Allport, D. A. (1987). Selection for action: Some behavioural and neurophysiological considerations of attention and action. In H. Heuer & D. F. Saunders (Eds.), Perspectives on perception and action (pp. 395–419). Hillsdale, NJ: Erlbaum.

Anderson, S. J., Yamagishi, N., & Karavia, V. (2002). Attentional processes link perception and action. Proceedings of the Royal Society B: Biological Sciences, 269(1497), 1225–1232. doi:10.1098/rspb.2002.1998

Barrett, T. M., Davis, E. F., & Needham, A. (2007). Learning about tools in infancy. Developmental Psychology, 43(2), 352–368. doi:10.1037/0012-1649.43.2.352

Bekkering, H., & Neggers, S. F. (2002). Visual search is modulated by action intentions. Psychological Science, 13(4), 370–374.

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–436.

Cant, J. S., Arnott, S. R., & Goodale, M. A. (2009). fMR-adaptation reveals separate processing regions for the perception of form and texture in the human ventral stream. Experimental Brain Research, 192(3), 391–405. doi:10.1007/s00221-008-1573-8

Cant, J. S., & Goodale, M. A. (2007). Attention to form or surface properties modulates different regions of human occipitotemporal cortex. Cerebral Cortex, 17(3), 713–731. doi:10.1093/cercor/bhk022

Chao, L. L., & Martin, A. (2000). Representation of manipulable man-made objects in the dorsal stream. NeuroImage, 12(4), 478–484. doi:10.1006/nimg.2000.0635

Cho, D. T., & Proctor, R. W. (2010). The object-based Simon effect: Grasping affordance or relative location of the graspable part? Journal of Experimental Psychology: Human Perception and Performance, 36(4), 853–861. doi:10.1037/a0019328

Cho, D. T., & Proctor, R. W. (2011). Correspondence effects for objects with opposing left and right protrusions. Journal of Experimental Psychology: Human Perception and Performance, 37(3), 737–749. doi:10.1037/a0021934

Craighero, L., Fadiga, L., Rizzolatti, G., & Umilta, C. (1999). Action for perception: A motor-visual attentional effect. Journal of Experimental Psychology: Human Perception and Performance, 25(6), 1673–1692.

Creem-Regehr, S. H., & Lee, J. N. (2005). Neural representations of graspable objects: Are tools special? Cognitive Brain Research, 22(3), 457–469. doi:10.1016/j.cogbrainres.2004.10.006

Creem, S. H., & Proffitt, D. R. (2001a). Defining the cortical visual systems: "What", "where", and "how". Acta Psychologica, 107(1-3), 43–68.

Creem, S. H., & Proffitt, D. R. (2001b). Grasping objects by their handles: A necessary interaction between cognition and action. Journal of Experimental Psychology: Human Perception and Performance, 27(1), 218–228.

Della Sala, S., Marchetti, C., & Spinnler, H. (1991). Right-sided anarchic (alien) hand: A longitudinal study. Neuropsychologia, 29(11), 1113–1127.

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18, 193–222.

Duncan, J., Humphreys, G., & Ward, R. (1997). Competitive brain activity in visual attention. Current Opinion in Neurobiology, 7(2), 255–261.

Duncan, J., Ward, R., & Shapiro, K. (1994). Direct measurement of attentional dwell time in human vision. Nature, 369(6478), 313–315. doi:10.1038/369313a0

Egly, R., Driver, J., & Rafal, R. D. (1994). Shifting visual attention between objects and locations: Evidence from normal and parietal lesion subjects. Journal of Experimental Psychology: General, 123(2), 161–177.

Fischer, M. H., Castel, A. D., Dodd, M. D., & Pratt, J. (2003). Perceiving numbers causes spatial shifts of attention. Nature Neuroscience, 6, 555–556. doi:10.1038/nn1066

Gallivan, J. P., McLean, A., & Culham, J. C. (2011). Neuroimaging reveals enhanced activation in a reach-selective brain area for objects located within participants' typical hand workspaces. Neuropsychologia, 49(13), 3710–3721. doi:10.1016/j.neuropsychologia.2011.09.027

Gallivan, J. P., McLean, D. A., Valyear, K. F., & Culham, J. C. (2013). Decoding the neural mechanisms of human tool use. eLife, 2, e00425. doi:10.7554/eLife.00425

Garrido-Vasquez, P., & Schubo, A. (2014). Modulation of visual attention by object affordance. Frontiers in Psychology, 5, 59. doi:10.3389/fpsyg.2014.00059

Gibson, J. J. (1979). The ecological approach to visual perception. Boston, MA: Houghton Mifflin.

Goodale, M. A. (2014). How (and why) the visual control of action differs from visual perception. Proceedings of the Royal Society B: Biological Sciences, 281(1785), 20140337. doi:10.1098/rspb.2014.0337

Goodale, M. A., Westwood, D. A., & Milner, A. D. (2004). Two distinct modes of control for object-directed action. Progress in Brain Research, 144, 131–144.

Gould, I. C., Wolfgang, B. J., & Smith, P. L. (2007). Spatial uncertainty explains exogenous and endogenous attentional cuing effects in visual signal detection. Journal of Vision, 7(13), 4.1–17. doi:10.1167/7.13.4

Green, J. J., & Woldorff, M. G. (2012). Arrow-elicited cueing effects at short intervals: Rapid attentional orienting or cue-target stimulus conflict? Cognition, 122(1), 96–101. doi:10.1016/j.cognition.2011.08.018

Guillery, E., Mouraux, A., & Thonnard, J. L. (2013). Cognitive-motor interference while grasping, lifting and holding objects. PloS One, 8(11), e80125. doi:10.1371/journal.pone.0080125

Haffenden, A. M., Schiff, K. C., & Goodale, M. A. (2001). The dissociation between perception and action in the Ebbinghaus illusion: Nonillusory effects of pictorial cues on grasp. Current Biology, 11(3), 177–181.

Handy, T. C., Grafton, S. T., Shroff, N. M., Ketay, S., & Gazzaniga, M. S. (2003). Graspable objects grab attention when the potential for action is recognized. Nature Neuroscience, 6(4), 421–427. doi:10.1038/nn1031

Humphrey, G. K., Goodale, M. A., Jakobson, L. S., & Servos, P. (1994). The role of surface information in object recognition: Studies of a visual form agnosic and normal subjects. Perception, 23(12), 1457–1481.

Humphreys, G. W., Kumar, S., Yoon, E. Y., Wulff, M., Roberts, K. L., & Riddoch, M. J. (2013). Attending to the possibilities of action. Philosophical Transactions of the Royal Society, B: Biological, 368(1628), 20130059. doi:10.1098/rstb.2013.0059

Humphreys, G. W., & Riddoch, M. J. (2001). Knowing what you need but not what you want: Affordances and action-defined templates in neglect. Behavioural Neurology, 13(1-2), 75–87.

Humphreys, G. W., Yoon, E. Y., Kumar, S., Lestou, V., Kitadono, K., Roberts, K. L., & Riddoch, M. J. (2010). The interaction of attention and action: From seeing action to acting on perception. British Journal of Psychology, 101(Pt 2), 185–206. doi:10.1348/000712609x458927

Jarry, C., Osiurak, F., Delafuys, D., Chauvire, V., Etcharry-Bouyx, F., & Le Gall, D. (2013). Apraxia of tool use: More evidence for the technical reasoning hypothesis. Cortex, 49(9), 2322–2333. doi:10.1016/j.cortex.2013.02.011

Kitadono, K., & Humphreys, G. W. (2007). Interactions between perception and action programming: Evidence from visual extinction and optic ataxia. Cognitive Neuropsychology, 24(7), 731–754. doi:10.1080/02643290701734721

Konkle, T., & Oliva, A. (2012a). A familiar-size Stroop effect: Real-world size is an automatic property of object representation. Journal of Experimental Psychology: Human Perception and Performance, 38(3), 561–569. doi:10.1037/a0028294

Konkle, T., & Oliva, A. (2012b). A real-world size organization of object responses in occipitotemporal cortex. Neuron, 74(6), 1114–1124. doi:10.1016/j.neuron.2012.04.036

Kourtzi, Z., & Kanwisher, N. (2001). Representation of perceived object shape by the human lateral occipital complex. Science, 293(5534), 1506–1509. doi:10.1126/science.1061133

Kourtzi, Z., Krekelberg, B., & van Wezel, R. J. (2008). Linking form and motion in the primate brain. Trends in Cognitive Sciences, 12(6), 230–236. doi:10.1016/j.tics.2008.02.013

Kustov, A. A., & Robinson, D. L. (1996). Shared neural control of attentional shifts and eye movements. Nature, 384(6604), 74–77. doi:10.1038/384074a0

Lederman, S. J., & Klatzky, R. L. (1990). Haptic classification of common objects: Knowledge-driven exploration. Cognitive Psychology, 22(4), 421–459.

Lewis, J. W. (2006). Cortical networks related to human use of tools. The Neuroscientist, 12(3), 211–231. doi:10.1177/1073858406288327

Linnell, K. J., Humphreys, G. W., McIntyre, D. B., Laitinen, S., & Wing, A. M. (2005). Action modulates object-based selection. Vision Research, 45(17), 2268–2286. doi:10.1016/j.visres.2005.02.015

Masson, M. E., Bub, D. N., & Breuer, A. T. (2011). Priming of reach and grasp actions by handled objects. Journal of Experimental Psychology: Human Perception and Performance, 37(5), 1470–1484. doi:10.1037/a0023509

Matheson, H. E., Newman, A. J., Satel, J., & McMullen, P. (2014). Handles of manipulable objects attract covert visual attention: ERP evidence. Brain and Cognition, 86, 17–23. doi:10.1016/j.bandc.2014.01.013

Matheson, H. E., White, N. C., & McMullen, P. A. (2013). A test of the embodied simulation theory of object perception: Potentiation of responses to artifacts and animals. Psychological Research, 78(4), 465–482. doi:10.1007/s00426-013-0502-z

Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9(1), 97–113.

Orquin, J. L., & Mueller Loose, S. (2013). Attention and choice: A review on eye movements in decision making. Acta Psychologica, 144(1), 190–206. doi:10.1016/j.actpsy.2013.06.003

Pellicano, A., Iani, C., Borghi, A. M., Rubichi, S., & Nicoletti, R. (2010). Simon-like and functional affordance effects with tools: The effects of object perceptual discrimination and object action state. Quarterly Journal of Experimental Psychology, 63(11), 2190–2201. doi:10.1080/17470218.2010.486903

Phillips, J. C., & Ward, R. (2002). SR correspondence effects of irrelevant visual affordance: Time course and specificity. Independence and Integration of Perception and Action, 4, 540.

Posner, M. I., Snyder, C. R., & Davidson, B. J. (1980). Attention and the detection of signals. Journal of Experimental Psychology: General, 109(2), 160–174.

Posner, M. I., & Cohen, Y. (1984). Components of visual orienting. In H. Bouma & D. G. Bouwhuis (Eds.), Attention and performance X: Control of language processes (pp. 531–556). Hove, UK: Lawrence Erlbaum Associates Ltd.

Proverbio, A. M., Adorni, R., & D'Aniello, G. E. (2011). 250 ms to code for action affordance during observation of manipulable objects. Neuropsychologia, 49(9), 2711–2717. doi:10.1016/j.neuropsychologia.2011.05.019

Riddoch, M. J., Edwards, M. G., Humphreys, G. W., West, R., & Heafield, T. (1998). Visual affordances direct action: Neuropsychological evidence from manual interference. Cognitive Neuropsychology, 15(6-8), 645–683. doi:10.1080/026432998381041

Riddoch, M. J., Pippard, B., Booth, L., Rickell, J., Summers, J., Brownson, A., & Humphreys, G. W. (2011). Effects of action relations on the configural coding between objects. Journal of Experimental Psychology: Human Perception and Performance, 37(2), 580–587. doi:10.1037/a0020745

Rizzolatti, G., Riggio, L., Dascola, I., & Umilta, C. (1987). Reorienting attention across the horizontal and vertical meridians: Evidence in favor of a premotor theory of attention. Neuropsychologia, 25(1a), 31–40.

Roberts, K. L., & Humphreys, G. W. (2011). Action-related objects influence the distribution of visuospatial attention. Quarterly Journal of Experimental Psychology, 64, 669–688. doi:10.1080/17470218.2010.520086

Sheliga, B. M., Riggio, L., Craighero, L., & Rizzolatti, G. (1995). Spatial attention-determined modifications in saccade trajectories. Neuroreport, 6(3), 585–588.

Sigurdardottir, H. M., Michalak, S. M., & Sheinberg, D. L. (2014). Shape beyond recognition: Form-derived directionality and its effects on visual attention and motion perception. Journal of Experimental Psychology: General, 143(1), 434–454. doi:10.1037/a0032353

Squires, S. D., Macdonald, S. N., Culham, J. C., & Snow, J. C. (2015). Priming tool actions: Are real objects more effective primes than pictures? Experimental Brain Research. doi:10.1007/s00221-015-4518-z

Symes, E., Ottoboni, G., Tucker, M., Ellis, R., & Tessari, A. (2010). When motor attention improves selective attention: The dissociating role of saliency. Quarterly Journal of Experimental Psychology, 63(7), 1387–1397. doi:10.1080/17470210903380806

Tucker, M., & Ellis, R. (1998). On the relations between seen objects and components of potential actions. Journal of Experimental Psychology: Human Perception and Performance, 24(3), 830–846.

Tucker, M., & Ellis, R. (2001). The potentiation of grasp types during visual object categorization. Visual Cognition, 8, 769–800.

Vainio, L. (2009). Interrupted object-based updating of reach program leads to a negative compatibility effect. Journal of Motor Behavior, 41(4), 305–316. doi:10.3200/jmbr.41.4.305-316

Vainio, L., Ellis, R., & Tucker, M. (2007). The role of visual attention in action priming. Quarterly Journal of Experimental Psychology, 60(2), 241–261. doi:10.1080/17470210600625149

Valyear, K. F., Chapman, C. S., Gallivan, J. P., Mark, R. S., & Culham, J. C. (2011). To use or to move: Goal-set modulates priming when grasping real tools. Experimental Brain Research, 212(1), 125–142. doi:10.1007/s00221-011-2705-0

Van der Linden, L., Mathot, S., & Vitu, F. (2015). The role of object affordances and center of gravity in eye movements toward isolated daily-life objects. Journal of Vision, 15(5), 8. doi:10.1167/15.5.8

Van der Stigchel, S., Mills, M., & Dodd, M. D. (2010). Shift and deviate: Saccades reveal that shifts of covert attention evoked by trained spatial stimuli are obligatory. Attention, Perception, & Psychophysics, 72(5), 1244–1250.

Weger, U. W., Abrams, R. A., Law, M. B., & Pratt, J. (2008). Attending to objects: Endogenous cues can produce inhibition of return. Visual Cognition, 16(5), 659–674.

Wykowska, A., Schubo, A., & Hommel, B. (2009). How you move is what you see: Action planning biases selection in visual search. Journal of Experimental Psychology: Human Perception and Performance, 35(6), 1755–1769. doi:10.1037/a0016798

Yang, S. J., & Beilock, S. L. (2011). Seeing and doing: Ability to act moderates orientation effects in object perception. Quarterly Journal of Experimental Psychology, 64(4), 639–648. doi:10.1080/17470218.2011.558627

Yoon, E. Y., & Humphreys, G. W. (2007). Dissociative effects of viewpoint and semantic priming on action and semantic decisions: Evidence for dual routes to action from vision. Quarterly Journal of Experimental Psychology, 60(4), 601–623. doi:10.1080/17470210600701007

Acknowledgments

Research reported in this publication was supported by a grant to J.C. Snow from the National Eye Institute of the National Institutes of Health under Award Number R01EY026701. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The research was also supported by the National Institute of General Medical Sciences of the National Institutes of Health under grant number P20 GM103650. We thank Kim Kirkeby and Andrew Delloro for their help in developing the stimuli and collecting the data.

Additional information

The original version of this article was revised: In the original article the first name of the second author was misspelled. The incorrect name “Jaqueline C. Snow” should have appeared as “Jacqueline C. Snow”.

An erratum to this article is available at http://dx.doi.org/10.3758/s13414-016-1239-8.

Cite this article

Skiba, R.M., Snow, J.C. Attentional capture for tool images is driven by the head end of the tool, not the handle. Atten Percept Psychophys 78, 2500–2514 (2016). https://doi.org/10.3758/s13414-016-1179-3

DOI: https://doi.org/10.3758/s13414-016-1179-3