Abstract

Background

Errors in reported height and weight raise concerns about body mass index (BMI) and obesity estimates obtained from self or proxy reports. Researchers have corrected BMI using linear statistical models, primarily with adult samples. We compared the accuracy of BMI correction in children for models that included child or parent reports versus both reports, and models that separately predicted height and weight compared to a single model for BMI.

Methods

Height and weight from child reports, parent reports, and objective measurements for 475 children participating in the Military Teenagers’ Environment, Exercise and Nutrition Study were analyzed. Two approaches were evaluated: (1) separate linear correction models for height and weight versus (2) a single linear correction model for BMI. Each approach considered models for height, weight, or BMI with child reports, parent reports, or both reports, respectively, as predictors, stratified by gender. Prediction accuracy was computed using leave-one-out validation. Models were compared using root mean squared error for BMI, and sensitivity and specificity for overweight and obesity indicators.

Results

Models that included both reports provided the best fit relative to a model using either set of reports, with adjusted R2 of height, weight, and BMI models ranging from 67.1 to 87.6 % in males, and 69.2 to 88.3 % in females. Estimates of BMI from separate models for height and weight had the least prediction error, relative to those derived from a single model for BMI or from uncorrected (child or parent) reports. Cross-validated Root Mean Squared Error (RMSEs) preferred a model that included only parent reports among males and females, compared to models with only child reports or both reports. When assessing sensitivity (true positive) for obesity and overweight/obesity, the results varied by gender and outcomes. Specificity (true negative) was similarly high for all models.

Conclusion

Objective measurements are more accurate than self- or proxy-reports of BMI. In situations where objective measurement is infeasible, an approach that combines collecting a validation sub-sample including multiple reports of children’s height and weight, with estimation of BMI correction models maybe a cost-effective and practical solution. Correction models generate BMI estimates that are closer to objective measurements than reports.

Similar content being viewed by others

Background

Obesity is “one of the most serious public health challenges of the 21st century” [1, 2]. It has more than doubled among children during the past 30 years [3, 4]. High rates of obesity in children are particularly concerning because of the potential for long-term health consequences, such as increased risk of heart disease, type 2 diabetes, high blood pressure and other chronic conditions [5]. Thus, ongoing monitoring of overweight and obesity is important. Body mass index (BMI, weight [kg]/height [m2]) has become the most common indicator to assess obesity because it is an inexpensive and noninvasive surrogate measure of body heaviness, shown to exhibit high correlations with body fat and future health risks [6–9].

The three standard ways to collect height and weight data are: 1) self-report, 2) proxy-report (often by a parent), and 3) in-person objective measurement. While objective measurements conducted by trained personnel are superior to self- or proxy-reports, they are expensive to collect, especially in large or dispersed samples [9]. In an environment of budget cuts and declining response rates [10], many national surveys including the Behavioral Risk Factor Surveillance System [11], the National Health Interview Survey [12], and the National Survey of Children’s Health [13] primarily rely on parental reports of children’s height and weight. Yet reported height and weight are shown to include non-trivial amounts of measurement error. For example, BMI was 0.7 kg/m2 higher when based on parent-reported values vs measured height and weight [14]. A review of the literature found that sensitivity of reported BMI for screening for overweight ranged from 55 to 76 %, and that overweight prevalence was −0.4 to −17.7 % lower when BMI was based on self-reported versus directly measured data for adolescents [15].

Concern about measurement error has fostered an emerging literature on how less costly data collection efforts can be utilized in BMI studies. One cost effective approach is the use of BMI “correction models” [16–22]. A “correction model” can be estimated with a dataset that includes both reports and measurements. The estimated model coefficients can then be applied in other studies which have collected reported data only to produce corrected BMI estimates. Past efforts have largely focused on self-reports in adult samples. The quality of BMI correction models has not been fully explored with other sub-populations (e.g., children), where the nature of reporting errors might differ and where proxy reports are more common. Further, most studies have ignored the issue of out-of-sample prediction and validity shrinkage [23], and do not provide a good understanding of predictive performance of such models.

The response error mechanism underlying misreporting of height and weight can be random or systematic. While some respondents may not know their actual height or weight, others may intentionally misreport to fit social norms. An additional complication specific to BMI is that the error mechanism may differ for height and weight. A review paper summarizing multiple studies found that self-reported height in adolescents was both underestimated and overestimated with reporting biases ranging from −1.1 to 2.4 cm, while reported weight was usually underestimated [15]. Results from earlier studies also suggest that girls tend to underestimate their weight more so than boys [15]. Thus, separate correction models for height and weight stratified by gender [24] may be superior to a single model for BMI.

An additional issue with surveys of children is that they typically ask for parental reports of height and weight, in lieu of children’s self-reports. The magnitude of response biases has been found to differ for self- versus proxy-reports in adults [25]. One recent study in Quebec actually collected child- and parent-reports for children between 8 and 12 years of age, and found that the two were comparable. Both child and parent reports underestimated the children’s weight by 1 kg, height by less than 1 cm, and BMI by less than 0.25 kg/m2, on average [26]. Given the lack of multiple studies comparing child and parent reports, it is not well understood whether collecting two sets of reports of height and weight has added value.

The Military Teenagers’ Environment, Exercise and Nutrition Study (M-TEENS) provides a unique opportunity to explore these issues. A subsample of M-TEENS respondents completed objective measurements, in addition to providing child- and parent-reports of child’s height and weight. This is the only national sample in the U.S. that we know of that includes child and parent reports of height and weight, in addition to measurements, for youth (12–13 year olds). As a result, it provides a unique chance to conduct a validation study. The aim of this study is to propose a simple, linear BMI correction model which does not require many additional predictors, and can be estimated with small validation samples. We also tested two hypotheses – (i) a model that predicts BMI using both parent and child reports will be more accurate than a model that includes only one set of reports; and (ii) separate correction of height and weight to derive BMI will improve prediction accuracy compared to a model that directly predicts BMI because the reporting errors for height and weight may differ. We explored both hypotheses using measures of predictive performance.

Methods

Design and sample

M-TEENS was designed to assess how the food and physical activity environment in schools and neighborhoods may influence the diet, physical activity, and BMI of children ages 12–13. The study surveyed children and their parents/guardians (hereafter, parents) from families of enlisted Army personnel located at 12 Army installations across the four U.S. Census regions. Using the Army’s records, M-TEENS staff contacted families who were located at these installations for at least 18 months and who likely had a 12–13-year-old (as of March 31, 2013) in the household. The Defense Manpower Data Center (DMDC) provided participants’ contact information. We attempted to contact a larger than necessary sample for several reasons. First, military families move frequently due to periodic re-assignment, so that their contact information is not always updated in a timely manner. Second, information on members’ active duty status does not reflect recent separations from the military. Lastly, response rates in military samples are considerably lower than in civilian samples [27, 28].

Of 8,545 enlisted personnel that were initially emailed and/or mailed recruitment letters, 2,106 completed an eligibility screener. Families were eligible to participate if they met three conditions. First, the service member did not intend to leave the military within the coming year. Second, the 12- or 13-year-old resided with the enlisted parent at least half-time. Finally, the 12- or 13-year old child was enrolled in a public or Department of Defense Education Activity school. Of those screened, 1,794 (85 %) households were eligible and 1,188 (66 %) provided consent.

Between Spring 2013 and Winter 2013/2014, 1,022 surveys were completed online. During data collection, study personnel visited each installation for three days to collect objective measurements for those with a survey completed, with families participating on a walk-in basis. Of the 1,022 survey respondents with at least a parent or child survey response, 521 completed an objective measurement. The sample size of children with self-reports, parent-reports, and measurements of height and weight is 475. The limited window for objective measurements lowered the number of measurements, while living on-installation increased the chances of completing the measurements. This study was approved by the Institutional Review Boards at RAND, University of Southern California, and the Army Human Research Protection Office. Online consent and assent was obtained from parents and children, respectively. All sampled, enlisted persons were sent instructions to their Department of Defense email address with unique login credential and a unique link to the consent form generated specifically for the sampled person.

Measures

Objective measurements

Height and weight were measured by trained personnel at each installation. Height was measured using a Seca 213 stadiometer, rounded to the nearest 0.1 cm. Weight was measured using a Tanita UM-041 F digital scale, recorded to the nearest 0.1 kg. All measurements were taken at least twice; a third measurement was taken if the two measurements differed by a pre-determined amount (>0.5 cm for height, >0.2 kg for weight). The average of the two closest measurements was used. About 90 % of measurements were conducted within one month of the survey.

Reported height and weight

The child (parent) survey included the following questions: “how tall are you (is your child) without shoes on?” and “how much do you (does your child) weigh without shoes on?” The reporting units are inches and feet for height and pounds for weight. Child- and parent-reported BMI were computed as the ratio of child- and parent-reported weight (kg) and height-squared (m2), respectively.

BMI, obesity

BMI was computed as the ratio of measured or reported weight [kg] to height[m]-squared. The child’s age and gender were used to calculate BMI percentile using the 2000 Centers for Disease Control BMI-for-age growth charts [29]. We constructed indicators of obesity and overweight/obesity, where obesity is defined as BMI in the 95th percentile or higher and overweight is defined as BMI between the 85 and 95th percentiles.

Participant characteristics

The parent survey asked about the child’s race/ethnicity. Gender was obtained from child survey; birthdate came from DMDC records. Age in months is the difference between the measurement date and the birthdate and rounded to the nearest month.

Analysis

We summarized the distributions of reported and measured outcomes for the overall sample, and by gender for the analysis sample (n = 475). To identify biases in reporting, we tested for significant differences and correlations between objective and reported values of height, weight and BMI using a paired t-Test and Fisher’s Z-Test, respectively. Similarly, we tested for agreement between reports and measurements of binary variables such as obesity or overweight/obesity using McNemar’s test.

A linear regression model with measured height, weight, or BMI as the dependent variable and reported values of the same variable as the key independent variable(s) was used for correction. Three models were considered per outcome – Model 1 included parent report only, Model 2 included child report only, and Model 3 included both child and parent reports, estimated separately for boys and girls. The reported variables were centered before they were entered as linear and quadratic terms. Age (in months) and race-ethnicity indicators were included in all models [21]. The quality of models was assessed using adjusted-R 2 and Akaike Information Criterion (AIC) [30]. The AIC is proportional to the log likelihood penalized by the number of model parameters, and used as a measure of the relative quality of statistical models.

Typically, regression coefficients from a BMI correction model estimated with a validation sample are applied to another dataset which only includes reported height and weight. As Ivanescu et al. [23] have underscored, the true test of a model’s predictive capacity can be assessed when the model is tested on an independent dataset that was not used to develop the model; cross-validation approaches provide an objective assessment of out-of-sample prediction accuracy, accounting for both the bias and variance of model predictions. Thus, we provide estimates of prediction error that are obtained from leave-one-out cross validation [31]. In particular, leave-one-out cross validation is well suited to smaller sample sizes (e.g. M-TEENS) [23].

Corrected BMI was computed from predicted height and weight (‘indirect’ approach), or directly predicted using reported BMI (‘direct’ approach). We computed RMSE associated with the continuous outcome (BMI). We also assessed classification error using sensitivity and specificity for the binary indicators of obesity and overweight/obesity. Sensitivity (true positive) indicates a model’s ability to correctly classify an individual as overweight or obese while specificity (true negative) indicates its ability to correctly classify an individual as non-obese or non-overweight/non-obese [32]. Further, we compared the corrected estimates to uncorrected reports to understand whether correction improves upon use of self- or parent-reports. To account for sampling error, RMSE values within 0.05 units of each other and sensitivity and specificity estimates within 3 % of each other are treated as similar and not discussed. All analyses were performed in SAS software, Version 9.4 of the SAS System for Windows.

Results

Sample characteristics

In the analysis sample of 475 with validation data, 46 % were female, 43 % non-Hispanic white, 21 % non-Hispanic black, and 24 % Hispanic/Latino (Table 1, column 1). The average age was 157 months (13.1 years). The average measured height and, weight were 159 cm and 53 kg, respectively. The average BMI was 20.8 kg/m2. The percent obese and percent overweight or obese, derived from measured BMI, were 10.7 and 28.0 %, respectively.

Reporting biases

On average, child-reported and parent-reported heights were 0.9 cm lower (P <.001) and 1.4 cm lower (P <.001), respectively, than measured height (Table 1). Child-reported and parent-reported weights were 1.9 kg lower (P <.001) and 2.3 kg lower (P <.001) than measured weight. Also, child-reported and parent-reported BMI were both 0.5 units lower than measured BMI (P <.001). The estimates of obesity and overweight/obesity derived from child reports were 2.1 and 4.2 percentage points (hereafter, pp) lower, respectively, than the measured values. The child-reported estimates of the binary indicators were less accurate, on average, compared to parent-reports (which were 1.2 and 2.5 pp lower, respectively). Additional information about the distribution of bias is provided in the Appendix.

Among males, child- and parent-reports of height were 1.4 cm (P <0.01) and 1.6 cm (P <0.001) lower than the measured value, respectively. Child- and parent-reported weights were 1.7 kg lower (P <.001) and 2.1 kg lower than measured weight (P <.001). Also, child-reported BMI was 0.3 kg/m2 lower while parent-reported BMI was 0.4 kg/m2 lower than measured BMI (P <.01). Obesity was underestimated by 2.7 pp using child-reports. Obesity was underestimated by 2.3 pp, while overweight/obesity was over-estimated by 1.9 pp using parent-reports. Among females, parent-reported height was 1.2 cm lower than measured height (P <.001). Child-reported and parent-reported weight were 2.3 kg lower (P <.001) and 2.7 kg lower (P <.001), respectively, than measured weight. Also, child-reported BMI was 0.8 kg/m2 lower (P <.001) and parent-reported BMI was 0.7 kg/m2 lower (P <.001) than measured BMI. Obesity and overweight/obesity were underestimated by 1.4 and 9.2 pp, respectively, using child reports. While overweight/obesity was underestimated by 7.2 pp using parent reports, obesity alone was not underestimated. The magnitude of bias was greater when using self-reports than parent-reports among females.

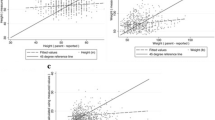

Association of reported and measured outcomes

In Table 2, we explored the correlations among parent reports, child reports, and measurements of weight, height, and BMI. The correlations between reported and measured weight were higher (r = 0.93) than that between reported and measured height (r = 0.79–0.82) and BMI (r = 0.87). The patterns of association for males and females were similar, with slightly higher correlation between measured and reported height and BMI among females. All correlations were statistically significant (P <0.001).

Quality of BMI correction models

In Table 3, the adjusted- R 2 statistics were higher for models with weight as dependent variable (85.6–88.3 %) compared to models of height (67.1–77.4 %) and BMI (72.7–83.1 %), which is consistent with the pattern of observed correlations (Table 2). Across all outcomes (height, weight, BMI), the model with child and parent reports (Model 3) had the highest adjusted- R 2 (higher is better) for males and females. The AIC statistic for Model 3 was consistently smaller (smaller AIC is better) compared to models with only either report, with a reduction of 10 units or more which indicates significant improvement [33]. Model equations are provided in the Appendix.

One difference between males and females is worth noting. Models with only the child report have superior fit (higher adjusted- R 2, smaller AIC) compared to models with only the parent report for height, weight and BMI among males. However, models with only the parent report are superior to models with only the child report for weight and BMI among females.

Accuracy of corrected BMI and obesity estimates

When comparing corrected estimates to raw reports, we found that cross-validated RMSEs of ‘indirect’ corrected estimates were always smaller than those of raw reports (Tables 4 and 5). As shown in Table 6, corrected means of height, weight and BMI were closer to those obtained from objective measurements, compared to child- or parent-reported means. Also, the corrected obesity and overweight/obesity prevalence estimates (Table 6) were less biased than estimates derived from raw reports (Table 1), suggesting that correction improves upon raw reports.

When comparing the ‘indirect’ and ‘direct’ correction approaches, we found that the cross-validated RMSEs from the ‘indirect’ approach were smaller than those of the ‘direct’ approach (Tables 4 and 5). While the three models for the ‘indirect’ approach had similar RMSEs for male BMI, model 3 had the smallest RMSE while ‘indirect’ model 1 had the smallest RMSE for female BMI. Generally, sensitivity of estimates from the ‘indirect approach’ was higher than those from the ‘direct approach’.

When comparing sensitivity across indirect models, results varied by gender and outcome. Indirect model 1 had the highest sensitivity for male obesity (83.9 %), and substantially higher than that of uncorrected reports (71 %). For the indicator of male overweight/obesity, uncorrected estimates from parent reports and corrected estimates from indirect models 1 and 2 had similar sensitivity (84.6 and 83.1 %, respectively). Among females, indirect model 1 (90 %) had higher sensitivity than uncorrected reports for obesity. For the indicator of overweight/obesity in females, the sensitivity of raw child and parent reports was especially low at 61.8 and 63.2 %, respectively. In comparison, indirect model 2 had the highest sensitivity (76.5 %) for female overweight/obesity.

When comparing specificity across the six models (using child report, parent report, or dual reports) under ‘indirect’ or ‘direct’ approach, we found the accuracy to be similar across all models. When comparing specificity for indicators of obesity and overweight/obesity, accuracy was slightly lower for overweight/obesity.

Discussion

This paper aimed to assess the performance of linear correction models for BMI in child samples. Three linear models were considered – using only parent report, using only child report, using both reports. The robustness of our correction was evaluated with cross-validation. Our findings highlight that reported height and weight are unreliable, regardless of gender of child, and emphasize the importance of objective measurement. Yet objective measurements may not be feasible for many studies. When choosing between parent- and child-reports of children’s height and weight, parent-reports may be less biased, on average. However, there appears to be a non-negligible amount of bias in uncorrected parent-reports in the right tail (indicators of male obesity, female overweight/obesity), which may be of interest to researchers and practitioners. Thus, BMI correction, shown to work well in adults, may be helpful for studies of children that collect only reports.

Two important, consistent findings emerge from the cross-validated metrics of prediction performance of our correction models estimated with a sample of 12–13-year-olds. First, corrected estimates of height, weight and BMI have less error than uncorrected estimates (Table 6). Second, an ‘indirect’ approach has better performance than a ‘direct’ approach – together, these findings provide support for linear BMI correction using separate models for height and weight. The quality of our models is similar to that of correction equations published by two recent studies conducted with 17–18 year olds in France (R2 ranging from 67 to 87 %) [24], and with 8th and 11th graders enrolled in Texas schools [34].

However, the choice of which ‘indirect’ model to use – i.e. only parent-report, only child-report, or both reports is less clear. When assessing model fit, we found that there may be additional value in collecting multiple reports of height and weight. However, cross-validated RMSEs of BMI indicated that a model that includes only parent reports is most efficient for males and females, compared to a model with only child reports or a model with both reports. This difference may be explained by the fact that while AIC and leave-one-out cross validation are asymptotically equivalent, AIC can under-penalize complex models for smaller sample sizes [35]. The results for specificity, which is the proportion of those without ‘disease’ accurately classified, were generally high across all models. When assessing sensitivity for obesity and overweight/obesity, the corrected estimate did better than the raw report for three of four binary indicators. However, the best model varied by gender and outcome, and included only parent reports or only child reports. There may be considerable variation in model performance across other child characteristics (e.g. race/ethnicity, age or BMI categories) also, which were not assessed in this study.

Based on these findings, we provide guidance for future studies collecting height and weight of children. Evidence of bias in parent and child reports suggests that objective measurement is best. For researchers whose study budgets do not allow BMI measurement for all subjects, an option is to collect child reports, parent reports and objective measurements on a sub-sample, which can be used to conduct corrections for reported BMI in the sample without measurements. However, different BMI correction models may perform best for different outcomes (as suggested by our results) or subgroups of children so that a large validation sample may be necessary to identify the optimal model for each sub-group, which may be very costly. Our results in Tables 4 and 5 indicate that a model with multiple (parent and child) reports was best, or a close second, for 9 out of 10 metrics of prediction performance. Thus, a model with multiple reports may offer a multi-purpose and practical solution for BMI correction. When a validation sample cannot be obtained as part of the study design, the benefit of using correction equations developed for another dataset versus use of raw reports should be weighed.

Prior studies conducted with youth samples have typically focused on the magnitude of response biases and predictors of reporting bias rather than on BMI correction. Our descriptive analyses provided similar findings as those in the literature with some exceptions. On average, underreporting was greater for weight than height; child and parent reports of weight were less accurate for females than males [15]. Also, under-reporting of height and weight led to slight underestimation of BMI, and lower prevalence of obesity, compared to measurements. This last finding differs from Shields et al. [14] who found that estimates of obesity from parental reports and measurements were similar among 9–11 year olds. Our sample of children is slightly older, however. We also found that the sensitivity of parent-reported overweight/obese in males (84.6 %) and obese in females (85 %) in our sample was considerably higher than the sensitivity of child reported estimates (59 to 75 % for overweight/obese and 70 to 74 % for obese) from nationally representative data [15].

The few papers that have considered BMI correction in children have several limitations that we have been able to address. Two papers proposed correction models using the ‘direct’ approach [24, 36], without exploring differences in reporting errors in height and weight. Another study did not include parent-reported height and weight [34]. A well-established set of correction models for height and weight [16] extensively used in the economics of obesity literature (e.g., [37–39]) was also applied to a sample of 14–22 year olds in the National Longitudinal Survey of Youth without careful consideration of out-of-sample predictive performance. None of these applications considered the value of parent reports, although self-reported height and weight among children aged younger than 14 years have low accuracy [40–43]. Also, most have ignored the issue of validity shrinkage [23].

Our results suggest an important opportunity for improving BMI predictions when objective assessments are not available for the entire sample of children surveyed by collecting self and proxy reports of the child’s height and weight. While the sample size of our validation study did not permit stratified comparisons by child characteristics (e.g. age group, race-ethnicity or weight group), future research is needed to explore these issues.

Limitations

Our study had several limitations. First, the sample size of the validation study was smaller than those of other correction studies, so that our statistical power was limited. It is important to replicate our results for less common outcomes (e.g. obesity) with larger sample sizes. Second, our study included 12–13 year olds with parents in the military, so that our sample may have different reporting biases than children of other ages and in the civilian population. Third, while all children who completed an M-TEENS survey were eligible for measurement, our validation sample included only those who attended on-site measurement visits. Children who were measured differed from those who were not with respect to whether they lived on base, which is to be expected given that measurements were done on-base. Reported height and weight were not significantly different for these groups, however, so that this may not be a source of systematic bias. Finally, the sensitivity of corrected estimates might be improved (100 % is the maximum) by using a measurement-error model that can account for reporting errors in surveys, or classification or regression approaches that directly model binary outcomes.

Conclusions

Objective measurements of height and weight are always preferable over self- or proxy-reports. However, correction models generate estimates that are closer to objective measures than reports. Therefore, in situations where BMI measurement for all subjects is infeasible, an approach that combines collecting a validation sub-sample and multiple reports of adolescents’ height and weight with estimation of BMI correction models may offer a cost-effective and reasonable solution.

References

Ebbeling CB, Pawlak DB, Ludwig DS. Childhood obesity: public-health crisis, common sense cure. Lancet. 2002;360(9331):473–82.

World Health Organization. Global strategy on diet, physical activity, and health: childhood overweight and obesity. Available from: http://www.who.int/dietphysicalactivity/childhood/en/.

National Center for Health Statistics. Health, United States, 2011: with special features on socioeconomic status and health. Hyattsville: U.S. Department of Health and Human Services; 2012.

Ogden CL, Carroll MD, Kit BK, Flegal KM. Prevalence of childhood and adult obesity in the United States, 2011–2012. JAMA. 2014;311(8):806–14. doi:10.1001/jama.2014.732.

Kumanyika SK, Obarzanek E, Stettler N, Bell R, Field AE, Fortmann SP, Franklin BA, Gillman MW, Lewis CE, Poston WC, Stevens J, Hong Y. Population-based prevention of obesity: the need for comprehensive promotion of healthful eating, physical activity, and energy balance: a scientific statement from American Heart Association Council on Epidemiology and Prevention, Interdisciplinary Committee for Prevention (formerly the expert panel on population and prevention science). Circulation. 2008;118(4):428–64. doi:10.1161/CIRCULATIONAHA.108.189702.

Barlow SE, Dietz WH. Obesity evaluation and treatment: expert Committee recommendations. The Maternal and Child Health Bureau, Health Resources and Services Administration and the Department of Health and Human Services. Pediatrics. 1998;102(3):E29.

Himes JH, Dietz WH. Guidelines for overweight in adolescent preventive services: recommendations from an expert committee. The Expert Committee on Clinical Guidelines for Overweight in Adolescent Preventive Services. Am J Clin Nutr. 1994;59(2):307–16.

Krebs NF, Himes JH, Jacobson D, Nicklas TA, Guilday P, Styne D. Assessment of child and adolescent overweight and obesity. Pediatrics. 2007;120 Suppl 4:S193–228. doi:10.1542/peds.2007-2329D.

Himes JH. Challenges of accurately measuring and using BMI and other indicators of obesity in children. Pediatrics. 2009;124 Suppl 1:S3–22. doi:10.1542/peds.2008-3586D.

Groves R. Nonresponse rates and nonresponse bias in household surveys. Public Opin Q. 2006;70(5):646–75.

Centers for Disease Control and Prevention (CDC). Behavioral risk factor surveillance system survey data. Atlanta: U.S. Department of Health and Human Services; 2014.

National Center for Health Statistics. Data file documentation, National Health Interview Survey. Hyattsville: National Center for Health Statistics, Centers for Disease Control and Prevention; 2013.

Data Resource Center on Child and Adolescent Health. National Survey of Children’s Health 2011/2012, Child and Adolescent Health Measurement Initiative. Available from: http://childhealthdata.org/.

Shields M, Connor Gorber S, Janssen I, Tremblay MS. Obesity estimates for children based on parent-reported versus direct measures. Health Rep. 2011;22(3):47–58.

Sherry B, Jefferds ME, Grummer-Strawn LM. Accuracy of adolescent self-report of height and weight in assessing overweight status: a literature review. Arch Pediatr Adolesc Med. 2007;161(12):1154–61. doi:10.1001/archpedi.161.12.1154.

Cawley J. The impact of obesity on wages. J Hum Resour. 2004;39(2):451–74. doi:10.2307/3559022.

Dutton DJ, McLaren L. The usefulness of “corrected” body mass index vs. self-reported body mass index: comparing the population distributions, sensitivity, specificity, and predictive utility of three correction equations using Canadian population-based data. BMC Public Health. 2014;14:430. doi:10.1186/1471-2458-14-430. PubMed PMID: 24885210; PubMed Central PMCID: PMC4108015.

Jain RB. Regression models to predict corrected weight, height and obesity prevalence from self-reported data: data from BRFSS 1999–2007. Int J Obes. 2010;34(11):1655–64. doi:10.1038/ijo.2010.80.

May AM, Barnes DR, Forouhi NG, Luben R, Khaw KT, Wareham NJ, Peeters PH, Sharp SJ. Prediction of measured weight from self-reported weight was not improved after stratification by body mass index. Obesity. 2013;21(1):E137–42. doi:10.1002/oby.20141.

Nyholm M, Gullberg B, Merlo J, Lundqvist-Persson C, Rastam L, Lindblad U. The validity of obesity based on self-reported weight and height: implications for population studies. Obesity. 2007;15(1):197–208. doi:10.1038/oby.2007.536.

Rowland ML. Self-reported weight and height. Am J Clin Nutr. 1990;52(6):1125–33.

Spencer EA, Appleby PN, Davey GK, Key TJ. Validity of self-reported height and weight in 4808 EPIC-Oxford participants. Public Health Nutr. 2002;5(4):561–5. doi:10.1079/PHN2001322.

Ivanescu AE, Li P, George B, Brown AW, Keith SW, Raju D, Allison DB. The importance of prediction model validation and assessment in obesity and nutrition research. Int J Obes. 2015. doi:10.1038/ijo.2015.214.

Legleye S, Beck F, Spilka S, Chau N. Correction of body-mass index using body-shape perception and socioeconomic status in adolescent self-report surveys. PLoS One. 2014;9(5):e96768. doi:10.1371/journal.pone.0096768. PubMed PMID: 24844229, PubMed Central PMCID: PMC4028195.

Reither EN, Utz RL. A procedure to correct proxy-reported weight in the National Health Interview Survey, 1976–2002. Popul Health Metrics. 2009;7(2). doi: 10.1186/1478-7954-7-2.

Brault M-C, Turcotte O, Aimé A, Côté M, Bégin C. Body mass index accuracy in preadolescents: can we trust self-report or should we seek parent report? J Pediatr. 2015;167(2):366–71. http://dx.doi.org/10.1016/j.jpeds.2015.04.043.

Tanielian T, Karney B, Chandra A, Meadows S. The deployment life study. Methodological overview and baseline sample description. Santa Monica: RAND Corporation; 2014.

Chandra A, Lara-Cinisomo S, Jaycox LH, Tanielian T, Burns RM, Ruder T, Han B. Children on the homefront: the experience of children from military families. Pediatrics. 2010;125(1):16–25. doi:10.1542/peds.2009-1180.

Kuczmarski RJ, Ogden CL, Grummer-Strawn LM, Flegal KM, Guo SS, Wei R, Mei Z, Curtin LR, Roche AF, Johnson CL. CDC growth charts: United States. Adv Data. 2000;314:1–27.

Kutner MH, Nachtsheim C, Neter J, Li W. Applied linear statistical models. 5th edition Boston: McGraw-Hill Irwin; 2004.

Hastie T, Tibshirani R, Friedman J. The elements of statistical learning: data mining, inference, and prediction. Second Edition. New York, USA: Springer Series in Statistics, Springer; 2009. p. 139–81.

Lapidus J. Sensitivity and specificity. In: Boslaugh S, editor. Encyclopedia of epidemiology. Thousand Oaks: SAGE Publications; 2008. p. 947–50.

Burnham KP, Anderson DR. Multimodel inference: understanding AIC and BIC in model selection. Sociol Methods Res. 2004;33(2):261–304. doi:10.1177/0049124104268644.

Pérez A, Gabriel KP, Nehme EK, Mandell DJ, Hoelscher DM. Measuring the bias, precision, accuracy, and validity of self-reported height and weight in assessing overweight and obesity status among adolescents using a surveillance system. Int J Behav Nutr Phys Act. 2015;12 Suppl 1:S2. doi:10.1186/1479-5868-12-S1-S2.

Hurvich CM, Tsai C-L. Regression and time series model selection in small samples. Biometrika. 1989;76(2):297–307.

Brettschneider AK, Schaffrath Rosario A, Wiegand S, Kollock M, Ellert U. Development and validation of correction formulas for self-reported height and weight to estimate bmi in adolescents. Results from the kiggs study. Obes Facts. 2015;8(1):30–42.

Cawley J, Danziger S. Morbid obesity and the transition from welfare to work. J Policy Anal Manage. 2005;24(4):727–43.

Gregory C, Ruhm C. Where does the wage penalty bite? In: Grossman M, Mocan N, editors. Economic aspects of obesity. Chicago: University of Chicago Press; 2011.

Majumder MA. Does obesity matter for wages? Evidence from the United States. Econ Pap. 2013;32(2):200–1.

Beck J, Schaefer CA, Nace H, Steffen AD, Nigg C, Brink L, et al. Accuracy of self-reported height and weight in children aged 6 to 11 years. Prev Chronic Dis. 2012. doi:10.5888/pcd9.120021.

Himes JH, Faricy A. Validity and reliability of self-reported stature and weight of us adolescents. Am J Hum Biol. 2001;13(2):255–60. doi:10.1002/1520-6300(200102/03)13:2<255::AID-AJHB1036>3.0.CO;2-E.

Seghers J, Claessens AL. Bias in self-reported height and weight in preadolescents. J Pediatr. 2010;157(6):911–6. doi:10.1016/j.jpeds.2010.06.038.

Jansen W, van de Looij-Jansen PM, Ferreira I, de Wilde EJ, Brug J. Differences in measured and self-reported height and weight in dutch adolescents. Ann Nutr Metab. 2006;50(4):339–46.

Acknowledgements

We thank the U.S. Army for its support of the M-TEENS as well as the military families for their participation. We thank Victoria Shier and Elizabeth Wong for excellent research assistance, and Lane Burgette for valuable statistics consultation.

Funding

This research was supported by National Institute of Child Health and Human Development (grant no. R01HD067536).

Availability of data and material

The datasets analyzed during the current study available from the corresponding author on reasonable request.

Authors’ contributions

AD, MBG-D and NN worked on study conception and design. MBG-D and AH set up the analysis plan, and AH implemented the analysis. All authors reviewed the results, and provided feedback on results. MBG-D, AH and AD compiled the literature review. MBG-D took the lead in writing; AD, NN and AH provided critical comments and feedback. All authors read and approved the final manuscript.

Competing interests

We have read and understood BMC policy on declaration of interests, and declare that we have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

All research was reviewed and approved by the Institutional Review Boards at RAND, University of Southern California, and the Army Human Research Protection Office. Online consent and assent was obtained from parents and children, respectively. First, parents consented online; then, children provided assent online.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Ghosh-Dastidar, M.(., Haas, A.C., Nicosia, N. et al. Accuracy of BMI correction using multiple reports in children. BMC Obes 3, 37 (2016). https://doi.org/10.1186/s40608-016-0117-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s40608-016-0117-1