Abstract

Qualification of aerospace components is a long and costly process involving material properties, material specifications, manufacturing process, and design among others. Reducing qualification time and cost while maintaining safety offers a large economic advantage and enables faster response to the market demands. In 2012, DARPA established the Open Manufacturing program, a project to develop an integrated computational materials engineering (ICME) framework aimed at rapid qualification. Rapid qualification requires the integration of several technologies: materials, process, design, models, monitoring and control, non-destructive evaluation (NDE), testing, among others. A probabilistic design approach is adopted in the rapid qualification process to enable the integration of these technologies into a single risk-based function to optimize the design process. This approach directs the efforts to those areas that play the most important roles, potentially reducing specimen testing that will be required to develop material databases and design limits. New tests also will be required to validate and verify the ICME framework and develop a better understanding of the processing-microstructure-property relation and associated variability of the processing conditions. The probabilistic design approach is demonstrated for the rapid qualification of an actual aircraft engine component constructed via the powder-bed additive manufacturing process. This paper summarizes the probabilistic rapid qualification design approach and its application to this novel manufacturing process with the goal of reducing the overall qualification process time by 40 % and qualification process cost by 20 %.

Background

The current certification process is described in The FAA and Industry Guide for Product Certification [1]. It outlines a structured process in which requirements are defined and compliance is demonstrated. The vision of this document is a “timely and efficient product type design and production approvals; and a timely identification and resolution of the certification basis, potential safety issues, and business practice requirements.” The certification basis identifies the applicable standards to which compliance must be demonstrated and the means of compliance through which it will be demonstrated. Of interest for the additive manufacturing process are safety issues that may arise due to unique designs permitted by this technology as well as the use of new materials that may be tailored to take advantage of the process. Also of interest are the novelty of the process and the possibilities of the process yielding yet unknown defects that are extremely rare and could cause catastrophic failures. The terms certification and qualification will be used interchangeably. The discussion in this paper is intended to apply equally to both certification and qualification with the difference being that certification means demonstrating compliance to civil aviation requirements; whereas qualification means demonstrating compliance to military requirements. Furthermore, qualification may also mean compliance to a set of requirements for a single process or design intent, and may apply to a component or a manufacturing process.

The traditional certification approach tends to be a sequential and iterative process, especially in the areas of material development and manufacturing process development. Figure 1a shows the sequential nature of the product development phase: the material properties are generated first, and as the component is being designed, one may find that the material property requirements have changed. For example, damage tolerance may turn out to be more important than fatigue during the course of the part design process. As a simpler illustration, in the traditional materials development process, one may need to run several trials to determine the solution and aging temperatures and times that optimize the yield strength of the material. In the case of a powder-bed laser melting additive manufacturing process, one would need to run several trials varying the laser power, laser speed, and hatch spacing to determine the conditions that give the best quality metrics, for example the lowest porosity. Many experiments and tests may be needed to determine which condition yields the required material properties, and this sequential, iterative nature makes the traditional certification/qualification process costly and lengthy.

In the proposed rapid qualification framework, shown in Fig. 1b, the product development phase is not sequential but concurrent; manufacturing, materials, and design are at the same level. One is able to numerically simulate the material process, predict the strength, and evaluate the design for compliance; if the design does not meet the requirements, one can numerically make design changes or modify the material processing to provide the strength needed to meet them. In the traditional approach, the analyst may have a general idea of which knobs to turn, but only an analytical or computational tool can indicate how far to turn them to reach the desired results. To that effect, a suite of integrated computational materials engineering (ICME) models is being developed and implemented to build the knowledge needed to drive the desired changes. However, models alone cannot be relied upon to achieve this rapid qualification approach. Targeted testing must be performed to develop a deep understanding of the model capabilities and to frame their range of applicability. Targeted testing and in-process sensing must also inform the models so that they can be calibrated, validated, and verified. The models must be framed in a probabilistic setting to ascertain the sensitivity of the predictions to the parameters of interest and to understand the expected variability that results from the random nature of the inputs. At the same time, in situ process monitoring is being implemented to provide evidence, in the form of In-Process Quality Assurance™ (IPQA®), that the process is under control for the purpose of certification. It is this suite of activities and the knowledge generated that makes rapid qualification possible. The goal is to reduce the qualification process time by 40 % and costs by 20 %.
We intend to achieve the time savings by exercising the process and material models to predict the best processing conditions to minimize defects in the build and to predict the best heat treatment cycle to obtain the highest material strength. This will substantially reduce the exploratory set of experiments needed to define processing conditions and to optimize the heat treatment cycle. The cost savings will be the result of the substantial reduction in material testing needed to optimize the above processes.

This paper describes the components needed to implement a certification process that takes advantage of computational models, and it is organized as follows. We begin by describing the probabilistic methodology, which wraps around the computational models to help evaluate the sensitivity of the input variables and determine the statistical limits of the outputs. This is followed by a brief description of the powder-bed laser additive manufacturing process and of the experimental efforts needed to understand that process and to support the calibration and verification of the computational models. The computational models developed to simulate the various stages of the manufacturing process are presented next. In that section, we also describe the pair of monitoring techniques that were used to determine key characteristics of the process. These were used to calibrate the computational models and to collect data that can be used, in conjunction with NDE methodologies, to determine whether the process is in control. The validation and verification of the computational models are discussed towards the end, and the paper concludes with a summary.

Rapid qualification framework

In order to enable rapid qualification, a holistic risk-based probabilistic framework is proposed. The models are utilized to identify the optimum process window. The applicable bandwidth of process controls and material property variations is estimated using uncertainty quantification. Manufacturing risks are mitigated by incorporating process monitoring and IPQA®; the real-time assessment of build quality utilizes the range defined by models and uncertainty quantification to immediately inform the user of the manufacturing process status.

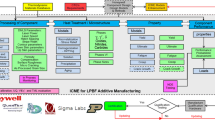

A high-level schematic of the probabilistic framework is shown in Fig. 2, where ICME is coupled with design models and methods. The inputs to the framework are requirements and critical-to-quality (CTQ) metrics, which are redefined as risk-based acceptance criteria to be consistent with a probabilistic framework. The preferred output of the framework is validation of design by analysis.

One of the most important features of this framework is its generic approach, which allows for interchangeability of the components and additive manufacturing processes, as well as the addition of new requirements and technology updates. The component definition is not limited to simple geometrical considerations of the design intent; it also defines the manufacturing process that would achieve the location-specific microstructure and properties needed to meet design requirements. The new component definition facilitates rapid procurement of qualified hardware needed for validation and certification testing.

The probabilistic framework (Fig. 2) consists of two feedback loops: a digital optimization loop (dotted, inner loop) and a physical validation loop (everything outside the inner loop). The framework is executed by first performing a digital optimization of the design/material based on the available process-microstructure-property models. If the digital optimization does not yield a component/design with acceptable risk for validation, then additional testing/data will be required to mitigate risk. In this case, the physical validation loop would be executed. One of the benefits of having a probabilistic framework is that it enables the determination and optimization of the testing required for validation. For example, a desirability function can be defined to prioritize test requirements based on needs, time, and costs.
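
The idea of a desirability function for prioritizing tests can be illustrated with a minimal sketch. It assumes a weighted geometric mean of linear desirabilities, which is one common construction and not necessarily the one used in the program. The candidate tests, their scores, the weights, and the function names are all hypothetical.

```python
from math import prod

def linear_desirability(value, worst, best):
    """Map value to [0, 1]: 0 at 'worst', 1 at 'best', clipped outside that range."""
    score = (value - worst) / (best - worst)
    return min(1.0, max(0.0, score))

def overall_desirability(scores, weights):
    """Weighted geometric mean of the individual desirabilities."""
    total = sum(weights)
    return prod(s ** (w / total) for s, w in zip(scores, weights))

# Hypothetical candidate tests: (name, need as risk reduction 0..1, weeks, cost in k$)
candidates = [
    ("tensile coupons", 0.8, 4, 20),
    ("fatigue bars", 0.9, 12, 80),
    ("CT scan of witness part", 0.4, 1, 5),
]

def rank_tests(candidates, weights=(2.0, 1.0, 1.0)):
    """Return (desirability, name) pairs, most desirable first.

    The weights (need, time, cost) are illustrative assumptions.
    """
    ranked = []
    for name, need, weeks, cost in candidates:
        scores = (
            linear_desirability(need, worst=0.0, best=1.0),
            linear_desirability(weeks, worst=16, best=0),   # shorter is better
            linear_desirability(cost, worst=100, best=0),   # cheaper is better
        )
        ranked.append((overall_desirability(scores, weights), name))
    return sorted(ranked, reverse=True)

for score, name in rank_tests(candidates):
    print(f"{name}: {score:.2f}")
```

Because the geometric mean goes to zero when any single desirability is zero, a test that is unacceptable on one criterion is ranked last regardless of its other scores. That property is a design choice of this sketch.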

Another important benefit of a probabilistic framework is that once the validation testing is completed, the data gathered would be used to update/validate/calibrate the models. In fact, any additional downstream testing, such as certification and substantiation testing, would also be used to update/validate/calibrate the framework. This ensures continuous improvement of the framework’s overall robustness and predictability.

The framework presented in Fig. 2 contains within the dotted line box the set of ICME tools needed to achieve rapid qualification. These include a micro-model, which describes the melting of the powder and solidification of the material to form the part, and a macro-model, which describes the residual stresses that build up as a result of the rapid cooling and solidification of the material. Both of these models were developed by ESI to simulate the powder-bed laser additive manufacturing process. QuesTek® developed the models to predict the material microstructure that develops as a result of the heat treatment process, along with a model to predict the tensile properties of the resulting microstructure. Sigma Labs and Stratonics developed techniques for in situ monitoring of the process. Sigma Labs uses a pyrometer to monitor the response of the melt pool, whereas Stratonics uses a digital imaging technique to capture the response of the melt pool. Furthermore, Sigma Labs has implemented their In-Process Quality Assurance™ (IPQA®) technologies to determine if and when the process may be out of control. Honeywell is implementing non-destructive evaluation (NDE) methods to determine whether defects that may develop in the part during the manufacturing process can be detected by inspection. SwRI® is developing the probabilistic design and uncertainty quantification tools needed to define the minimum predicted material property and the risk derived from the novel manufacturing process. Lastly, Honeywell is developing the overall rapid qualification framework to bring all these technologies together and demonstrate an acceptable means of compliance with extensive use of simulation models.

Discussion

Several different technologies form part of the tools being applied in the proposed rapid qualification approach. The sections described below are intended to describe the capabilities of the tools and how they are being used in the rapid qualification approach, not to describe the theory behind the tools. The theory and methods implemented in the tools and their capabilities are described to a greater extent in the references cited in each of the sections as appropriate. Results generated by the tools are illustrated in each of the sections.

Probabilistic design, uncertainty quantification, sensitivity analysis, and Bayesian calibration

In 1975, a series of papers by Haugen and Wirsching [2] appeared in Machine Design. To quote them: “Sooner or later you will have to use probabilistic design. It is coming. It’s inevitable. It is the only means for setting realistic definitions or bounds on problems involving costs, warrantees, and even the risk of life itself.” To that effect, we intend to embrace the possibilities that this technology brings and implement them in the proposed rapid qualification approach. Rapid qualification requires the integration of several seemingly unrelated activities; the advantage of probabilistic design [3] is that it is able to integrate them all into a single function based on risk. The probabilistic rapid qualification approach is compared to the traditional qualification approach in Fig. 3. The traditional qualification approach (Fig. 3a) typically involves the identification and execution of physical tests that are used to construct an empirical model to predict response over a range of input parameters. Similar to traditional qualification, the probabilistic rapid qualification approach (Fig. 3b) may also involve the identification/execution of physical tests and construction of empirical models to predict the response of a component. In addition, it involves the development of computational models to predict response, and the computational models also require identification tests. However, the tests associated with the computational models are virtual tests that can be executed in a fraction of the time and cost of physical tests. As a result, the analyst can consider a wider range of variables for assessment of failure, and the design can evolve more quickly than in the traditional qualification approach. The probabilistic approach also quantifies the sensitivities of the response with respect to the input variables, which helps the analyst to identify the parameters that have the most impact on the design and manufacturing processes.

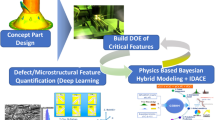

A very important requirement for the DARPA OM program is to predict the design curve for the material. This means that the model predictions must provide not only a mean value but also the tails of the distribution. It requires the prediction of these properties throughout the component in a location-specific sense that incorporates all submodels of the design process (see Fig. 4). In this section, we present a summary of uncertainty quantification, sensitivity, and Bayesian calibration analyses and how these have been used to provide insight into the workings and accuracy of the proposed ICME models.

The first example addresses uncertainty quantification for the residual stress prediction model. Five process or material parameters were explored to develop the response surface for the process: (a) yield strength of the material; (b) modulus of elasticity; (c) absorptivity of the powder; (d) thermal conductivity; and (e) heat capacity. Twenty-one simulations were performed, with the five parameters varied as defined by a Latin Hypercube sampling approach, and the computed residual stresses were used to generate a response surface. The uncertainty analysis was performed on a model of a beam with a rectangular cross section. The sensitivity results shown in Fig. 5 indicate that the residual stresses at the interface between the build and the plate are more sensitive to the conductivity of the material, while internal to the build they are more sensitive to the yield strength of the material, as indicated by the circled locations. The bar charts on the left of the figure show the sensitivity of the model to each of its input variables. The line charts on the right of the figure describe the scatter or variation of the predicted residual stress as a function of the scatter of each of the variables. Note that the variable with the highest sensitivity yields the largest scatter in the prediction.

The sensitivity analysis revealed that different properties are more relevant at different locations of the build process. Thermal conductivity is more important at the interface with the build plate, whereas yield strength is more important higher in the build. At the plate-part interface, the thermal conductivity acts to conduct heat away into the much larger and cooler build plate essentially quenching the material right at the interface, leading to very high residual stresses. The thermal difference between the plate and the part controls the residual stress and since the thermal conductivity controls the thermal gradient, it controls the sensitivity of the residual stresses. Higher up into the build, the material is not as cold as the plate due to the heat build-up that accumulates as the build progresses leading to a lower thermal gradient between the layer and the substrate. As the build height increases, the thermal gradient reaches a steady state and the residual stress is controlled by or is more sensitive to the yield strength of the material.

For rapid qualification, it can be deduced that attention should be paid to the accuracy of yield stress data; either obtained numerically or experimentally. The material conductivity is only important for a region that is not going to be part of the final product. We therefore are able to focus cost and time on the parameters most relevant for final product performance.
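
The sampling and sensitivity workflow of the first example can be sketched in a few lines. This is a minimal illustration only: the inputs are normalized to the unit interval, `toy_residual_stress` is a hypothetical stand-in for the finite element model (arbitrarily dominated by the first input), and sensitivity is approximated by a simple correlation coefficient in place of the response-surface analysis described above.

```python
import random

PARAMETERS = ["yield_strength", "elastic_modulus", "absorptivity",
              "thermal_conductivity", "heat_capacity"]

def latin_hypercube(n_runs, n_vars, rng):
    """Unit-cube Latin Hypercube: each variable's [0, 1) range is cut into
    n_runs equal strata and every stratum is sampled exactly once."""
    columns = []
    for _ in range(n_vars):
        strata = list(range(n_runs))
        rng.shuffle(strata)
        columns.append([(s + rng.random()) / n_runs for s in strata])
    return [list(row) for row in zip(*columns)]

def toy_residual_stress(x):
    """Hypothetical surrogate for the simulation (normalized inputs)."""
    return 3.0 * x[0] + 0.1 * x[1]

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / (var_a * var_b) ** 0.5

rng = random.Random(0)
samples = latin_hypercube(21, len(PARAMETERS), rng)   # 21 runs, 5 inputs
responses = [toy_residual_stress(x) for x in samples]

# Correlation of each input with the response as a crude sensitivity measure.
sensitivity = {
    name: pearson([x[j] for x in samples], responses)
    for j, name in enumerate(PARAMETERS)
}
most_influential = max(sensitivity, key=lambda k: abs(sensitivity[k]))
print(most_influential)
```

In practice each normalized sample would be scaled to the physical range of its parameter before being passed to the simulation. Those ranges are not given here, so they are left out.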

The second example presents the approach employed to calibrate the yield strength model using a Bayesian calibration framework. The intent of this example is to show that the model calibration approach can identify and correct for model inadequacies, establish updated ranges for the calibration parameters, and provide calibrated model predictions with quantified uncertainty. The framework goal is to calibrate the yield strength model to the variables in the functional relationship of the yield strength along with other known physical variables that are part of the experimental procedure used to generate the data, including discrepancy variables.

During development of the microstructure models, experiments were performed at different heat-treat temperatures and global energy densities (GEDs) to determine the effect of such variables on the material microstructure. Data were available at selected values of the solution temperature, and GED. Twenty-seven observations were applied to the calibration at three different solution temperatures. The solution temperature was set at the deterministic values associated with the selected experiment data, whereas GED was not an input variable in this model. A Gaussian process [4] response surface was used as a surrogate model in order to carry out the calibration analysis.

The resulting calibrated model is shown in Fig. 6 at fixed values of solution temperature and GED, respectively. Figure 6a illustrates that GED has a significant influence on the variability in yield strength. An analysis of the calibration data without accounting for GED therefore results in an overestimate in yield strength variance, and this error increases as GED values are increased. In both Fig. 6a, b, it can be observed that when a discrepancy term is not used, most of the experimental observations fell completely outside the entire range of values associated with the calibrated probabilistic model. When a discrepancy term was applied, nearly all of the experimental observations fell within the range of values associated with the calibrated probabilistic model. Such a calibration framework can help improve the accuracy of the predictive models both in the mean as well as the predictive variability of the property. It helps define a more accurate design limit curve [5].
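
The role of the discrepancy term can be illustrated with a deliberately simplified sketch. It assumes a one-parameter model, a constant additive discrepancy, flat priors, and a grid-based posterior. The study itself uses a Gaussian process surrogate, and nothing below reproduces its model or data. The observations are synthetic and all names are hypothetical.

```python
import math

# Synthetic observations (not data from the paper): the "true" process is
# y = 2.0 * x + 0.5, while the model below omits the constant offset.
X_OBS = [1.0, 2.0, 3.0]
Y_OBS = [2.5, 4.5, 6.5]
SIGMA = 0.1       # assumed measurement noise (standard deviation)
TRUE_THETA = 2.0

def model(x, theta):
    """Deliberately inadequate model: no offset term."""
    return theta * x

def log_likelihood(theta, delta):
    """Gaussian log-likelihood with an additive discrepancy delta."""
    return sum(
        -0.5 * ((y - model(x, theta) - delta) / SIGMA) ** 2
        for x, y in zip(X_OBS, Y_OBS)
    )

def posterior_mean_theta(theta_grid, delta_grid):
    """Posterior mean of theta on a grid, marginalizing delta (flat priors)."""
    weights = [
        sum(math.exp(log_likelihood(theta, d)) for d in delta_grid)
        for theta in theta_grid
    ]
    total = sum(weights)
    return sum(t * w for t, w in zip(theta_grid, weights)) / total

theta_grid = [1.0 + 0.01 * i for i in range(201)]   # 1.0 .. 3.0
delta_grid = [-1.0 + 0.05 * i for i in range(61)]   # -1.0 .. 2.0

theta_without = posterior_mean_theta(theta_grid, [0.0])      # no discrepancy
theta_with = posterior_mean_theta(theta_grid, delta_grid)    # with discrepancy

print(f"without discrepancy: {theta_without:.3f}")
print(f"with discrepancy:    {theta_with:.3f}")
```

Without the discrepancy term, the calibration parameter absorbs the missing physics and is biased away from its true value. With it, the bias is assigned to the discrepancy and the parameter estimate recovers.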

Manufacturing process

The rapid qualification framework is demonstrated for components manufactured using the Direct Metal Laser Sintering (DMLS®) or powder-bed process. DMLS® is a form of additive manufacturing (AM) that deposits very thin layers of metal powder (microns thick); each layer is melted by a laser beam that is on the order of 100 μm in diameter. The laser melts the metallic powder in the selected areas of the powder bed; these areas then solidify to become a section of the final build. Additional powder layers are added, and the process is repeated. The equipment is capable of producing laser travel speeds of up to a few meters per second and travel distances on the order of multiple kilometers. A complete build will typically have thousands of material deposit layers. The question is whether the process is controlled to such precision that the likelihood of producing anomalies in the build is consistent with aerospace quality standards, or whether the process is resilient enough that any mishaps are healed either by the adjacent laser pass or perhaps by the melting of subsequent layers.

Modeling the DMLS® process therefore involves a wide range of length and time scales that have to be accounted for in the rapid qualification framework. Modeling all length scales is necessary in order to predict work-piece material characteristics such as lack of fusion, boiling porosity, residual stresses, and deformations. Attempting to pursue all physics aspects in one model is prohibitively expensive from a computational standpoint, making it necessary to develop multi-scale models that exchange relevant information ensuring accurate predictions across all length scales.

Experimental effort to support rapid qualification

The experimental effort outlined in Fig. 7 supports optimum manufacturing process parameter setting as well as ICME model development, validation, calibration, and verification. The first task was the development of the optimum DMLS® processing conditions for the 718Plus alloy, such as laser power, laser speed, and hatch spacing. This was followed by a series of specimen builds to verify that the models included the important physics of the problem. Data were then generated to calibrate the ICME models wherever appropriate. Additionally, the effort included the development of process monitoring capabilities to differentiate between nominal and off-nominal processing conditions and to provide independent temperature measurements for calibration of the ICME process modeling tools. The empirical approach, experimental testing, and data collection were guided by the program metrics, the V&V checklist, and the initial UQ assessment. As a result, whenever possible, a concerted effort was made to generate quantitative data suitable for computational models and to ascertain variability. This approach differs significantly from our traditional material/process characterization because the majority of the testing, for example subscale and in-process data, was performed for model development. We believe the knowledge gained in this effort will lead to a reduction in the overall testing needed to certify a component by as much as 40 %, and an increase from 10 to 20 % in the overall certification-by-analysis potential.

Modeling components

To address the challenge of multiple length scales and multiple physics representations, the problem is subdivided into multiple length scale categories: micro- and macro-models. The micro-model characterizes the weld pool with length scales ranging from a few microns to a few millimeters. The micro-model delivers information about the lack of fusion, boiling porosity and quantifies the melt pool dimension and the thermal cycle. Macro-models utilize the heat distribution boundary conditions characterized by the micro-models to resolve the work-piece build process and determine the residual stresses and displacements. Metallurgical models analyze alloy components to predict microstructural phases and analyze the heat treatment processes to predict the volume content of each phase, which deliver the material properties required for design.

Micro-models

The micro-models resolve the weld pool physics including heat transfer, melting, surface tension forces, effect of thermal gradients leading to Marangoni forces, and solidification. The model is based on computational fluid dynamics (CFD) software suite CFD-ACE+ [6]. The Navier-Stokes equations are solved to determine the flow characteristics in the weld pool. The momentum equations are extended using source terms to account for gravitational body forces, and surface tension is deduced from free surface curvature and surface tension values. The energy equation accounts for conduction (diffusion term) and convection. It is complemented with source terms to account for the latent heat released or required during solidification or melting, respectively. Within the scope of this program, radiation source terms were developed that account for the position of the molten front. This enables accurate capture of all heat transfer mechanisms. Volume of fluid (VOF) tracks a scalar that indicates the position of the free surface between a liquid and gas. VOF calculates the free surface curvature using a second order reconstruction scheme (PLIC) to provide the surface tension forces required in the momentum equations. The details of the micro-model, corresponding validation, and coupling with macro-models are available in [7, 8]. Porosity predictions and how models are used to identify ideal process window towards fully dense material were presented by Mindt et al. [9].
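
For orientation, the ingredients listed above are often written in the following generic form. This is a textbook-style sketch and not the specific formulation implemented in CFD-ACE+. The symbols are assumptions introduced here: \(f_l\) is the liquid fraction, \(L\) the latent heat, \(F\) the VOF scalar, \(\sigma\) the surface tension, \(\kappa\) the free surface curvature, and \(S_{\mathrm{rad}}\) the radiation source term.

```latex
% Energy: convection and conduction plus latent-heat and radiation sources
\[
  \rho c_p \left( \frac{\partial T}{\partial t} + \mathbf{u} \cdot \nabla T \right)
  = \nabla \cdot \left( k \nabla T \right)
  - \rho L \frac{\partial f_l}{\partial t}
  + S_{\mathrm{rad}}
\]
% Volume of fluid: advection of the free-surface indicator
\[
  \frac{\partial F}{\partial t} + \mathbf{u} \cdot \nabla F = 0
\]
% Free-surface forces: capillary pressure jump (normal) and
% Marangoni stress (tangential)
\[
  \Delta p = \sigma \kappa, \qquad
  \boldsymbol{\tau}_s = \frac{d\sigma}{dT} \, \nabla_s T
\]
```

The sign of the latent-heat term follows the statement above: heat is absorbed as the liquid fraction rises during melting and released as it falls during solidification.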

Macro-models

The finite element program Sysweld is used to assess as-built residual stresses and final work-piece distortion [10]. The coupling between micro- and macro-models is based on transferring the process fingerprint from high resolution to lower resolution models [7, 11] (Fig. 8). The rapid qualification platform, however, provides additional flexibility by providing access to measurements acquired from the process, such as the pyrometer measurement shown in Fig. 9b. Figure 9a describes the relationship of the pyrometer field of view to the manufacturing parameters and provides the rationale for the observed temperature trace. The laser traverses the pyrometer field of view several times, and the pyrometer is able to detect a temperature rise even when the laser is outside but near its field of view. Details of macro-modeling and corresponding work-piece thermal history calculations, as well as the theoretical background of the mechanical analysis, are discussed in [8].

Based on the size and related temporal scales involved in the DMLS® process, it is not practical to simulate the entire laser path or the actual deposit layer thickness because of the large computational costs. The goal of the residual stress model was to determine an effective method to capture the heating and cooling rates on a real-scale model and apply those to the lumped deposits. The lumped deposits may represent stripes or multiple layers in the actual build. By lumping deposit layers, multiple deposit layers experience the heating and cooling rates from the laser simultaneously, which dramatically reduces the computation time. These lumped models are used to predict residual stress and deformation during and after the build. N’Dri et al. [7] summarized the validation methodology. Figure 10 shows a comparison between predicted and measured distortion along the centerline of a beam that was cut off the build plate without any stress relief. The beam specimens are 5 mm wide and 75 mm long, with thicknesses varying from 1.5 to 4 mm.

Rapid qualification is enriched with a large database of experimental data to support, verify, and validate the models. For example, a set of experiments was performed to understand the evolution of the residual stresses as the beam is built. Several beams of varying thickness were built and removed from the base without the application of the stress relief cycle. The curvature of the beams is plotted against the beam thickness in Fig. 11. It is well known that the curvature of a beam is proportional to the bending moment and inversely proportional to its flexural rigidity. The 1/x relationship between curvature and thickness in this figure therefore implies that the residual stresses at the outer layer of the beams are always of the same magnitude regardless of the beam thickness.
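
The link between the 1/x trend and a thickness-independent surface stress can be made explicit with elementary beam theory. The following is a minimal sketch, assuming a rectangular section of width \(b\) and thickness \(t\), elastic modulus \(E\), and a residual stress that varies linearly through the thickness with outer-fiber magnitude \(\sigma_s\). The linear profile is an idealization introduced here, not a result from the experiments.

```latex
\[
  \kappa = \frac{M}{EI}, \qquad
  I = \frac{b\,t^{3}}{12}, \qquad
  M = \frac{\sigma_s\, b\, t^{2}}{6}
  \quad\Longrightarrow\quad
  \kappa = \frac{2\,\sigma_s}{E\,t}
\]
```

Under these assumptions, curvature scales as \(1/t\) only if \(\sigma_s\) is the same for every beam thickness.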

Metallurgical models

The prediction of the microstructure evolution during the DMLS® build is not being pursued in this program. It is assumed that as the specimens or parts go through the HIPping cycle, the material is fully homogenized and that the microstructure of the final part depends exclusively on the solution and age cycle. The post-build processing for the ATI 718Plus alloy used in this program includes a stress relief followed by a hot isostatic pressing (HIP) cycle, a solution heat treat (SHT), and then a two-step age. Microstructural evolution of the material during the heat treatment process is characterized both experimentally and numerically. The experiments provide direct evidence of the microstructure under various processing conditions. The analytical approach is used to predict the microstructure [12], and the predictions are then compared with the experimental data to calibrate the models. The intent is to show how the concurrent use of the calibrated and verified analytical model and experimental methods yields a much more robust approach for qualification than the classical approach. Such robustness is possible because the analytical models bring much more knowledge into the process than is possible with the purely experimental methodology of the classical approach.

Alloy 718Plus possesses a complex microstructure with multiple phases present. Analytically, the equilibrium step diagram was computed to show the phase fraction of the primary phases, as well as the oxide (Al2O3), nitride (TiN), boride (Mo2CrB2), and carbides (NbC). The solution treatment temperature commonly used for wrought 718Plus, which lies in the subsolvus range of the \( \delta \)-Ni3Nb, was used with the current powder composition. The primary and secondary \( {\gamma}^{\prime } \) fractions have also been computed using an appropriate database. A simulation was performed using QuesTek’s proprietary PrecipiCalc® modeling tool to predict the size distribution, number density, and volume fraction of the secondary \( {\gamma}^{\prime } \) precipitates during the heat treatment at the beginning and end of the second aging. The predicted final \( {\gamma}^{\prime } \) precipitate radius of 14.6 nm agrees very well with literature data from TEM and LEAP for wrought 718Plus. The composition of the \( {\gamma}^{\prime } \) precipitate and the matrix has also been calculated, and the comparison with LEAP data shows an RMS composition error of less than 1 %, which indicates good accuracy of the prediction.

Experimentally, a full microstructural assessment of 718Plus processed by DMLS® was conducted to characterize the development of microstructures through the complex processing route. Samples were characterized at each stage of the heat treatment cycle using standard metallographic techniques. Light optical microscopy at low magnifications was used to observe the distinct as-solidified melt pool regions of the numerous laser tracks. Higher magnifications revealed the fine dendritic structure of the solidification segregation. SEM was used to observe several of the phases and verify their constitution; Fig. 12 shows a representative micrograph of the \( \delta \) phase. Other phases present in the alloy, such as the \( {\gamma}^{\prime } \), carbide, and oxide phases, were observed in similarly representative micrographs, and corresponding EDS spectral analyses validated their constitution. Carbide phases were identified to be primarily Nb-based, with a small amount of Ti. Oxide phases were found to be primarily Al-based, also with a minor amount of Ti. High-resolution SEM was needed to observe the highly refined secondary dendrite arm spacing (SDAS), which was measured at an average of 0.4 μm; the SDAS was then used to determine the cooling rate during solidification, and the result was fed back into the analytical simulations. Preliminary solidification micro-segregation and HIP homogenization simulations were performed using a Scheil model and the DICTRA code. This simplified simulation shows that the homogenization process is very rapid at the high HIP temperature. Figure 13 presents EBSD views of the microstructure for various processing conditions. This figure shows how grain orientation is affected by the DMLS® build and the various processing conditions studied.

Strength models

Methods have been developed to modify the yield strength, ductility, and toughness of materials, giving engineers the ability to tailor the mechanical properties to suit a variety of applications. For example, the favorable properties of steel result from interstitial incorporation of carbon into the iron lattice. Brass, a binary alloy of copper and zinc, has superior mechanical properties compared to its constituent metals due to solution strengthening. Work hardening has been used for centuries by blacksmiths to introduce dislocations into materials to increase yield strength.

Plastic deformation occurs through the generation and motion of dislocations, which results in macroscopic deformation. Hence, to enhance a material’s mechanical properties (i.e., to increase the yield and tensile strength), we need to introduce mechanisms that impede the motion of dislocations. Typical strengthening mechanisms are work hardening, solid solution hardening, precipitation hardening, grain boundary strengthening, and transformation hardening. The strength developed in \( \gamma /{\gamma}^{\prime } \) nickel-based superalloys comes primarily from precipitation hardening, but it is also influenced by solution hardening and grain boundary strengthening. Each mechanism contributes to the overall strength in varying amounts depending on the material composition and processing conditions. The microstructure of the 718Plus alloy consists mainly of a \( \gamma /{\gamma}^{\prime } \) structure, with the \( {\gamma}^{\prime } \) precipitate as the primary strengthening phase. The \( \delta \) phase, which is sometimes observed depending on processing conditions, also contributes to overall material strength. To model the strength of 718Plus, a simple linear superposition of the strengths of the different phases in the alloy is employed (AIM Model reference). Figure 14 compares the DMLS® yield strength data obtained with casting and forging properties. The comparison with the calibrated analytical predictions is not shown. Although the mean prediction is on target, the predicted scatter is not yet sufficiently accurate. Additional specimen testing is under way to better understand the data scatter. The goal is to attain a 40 % reduction in the overall testing needed to develop the databases required for certification.
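
As an illustration only, a linear superposition of the kind mentioned above could take the following generic form. The exact expression used in the program is not given in this paper, so the grouping of terms and all symbols below are assumptions introduced for this sketch:

\[ {\sigma}_y\approx {\sigma}_0+\Delta {\sigma}_{\mathrm{ss}}+\Delta {\sigma}_{\mathrm{gb}}+\Delta {\sigma}_{{\gamma}^{\prime }}+\Delta {\sigma}_{\delta } \]

Here \( {\sigma}_0 \) denotes a base (lattice) strength of the matrix, \( \Delta {\sigma}_{\mathrm{ss}} \) the solid solution contribution, \( \Delta {\sigma}_{\mathrm{gb}} \) the grain boundary contribution, and \( \Delta {\sigma}_{{\gamma}^{\prime }} \) and \( \Delta {\sigma}_{\delta } \) the contributions of the \( {\gamma}^{\prime } \) and \( \delta \) phases. In such a form, each term would be calibrated separately against the microstructure predicted for a given heat treatment.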

In-process sensors

In-process sensing, monitoring, and melt pool measurements have been highlighted as important development needs by NIST [13] for the qualification of components produced via the additive manufacturing process. The report notes that new sensors, integrated models, and measurement methods will be needed to enable integration of materials and processing control and feedback. Sensors are needed to monitor attributes of the process in order to ensure repeatability and assure uniformity of the manufactured parts. In this program, sensors also serve a second important need: they are being used to acquire process knowledge and understanding that has been missing due to the novelty and immaturity of the technology. The sensors help identify characteristics of the process that need to be controlled, and they also help identify system behavior or response that can be used as indicators of process quality and repeatability. These indicators include heating and cooling rate, peak temperature, and size and aspect ratio of the melt pool. They were used to sense, measure, and visualize the effect of changes to the processing conditions on the temperature distribution and geometry of the melt pool in order to verify that the ICME models incorporate the relevant physics of the problem. The capabilities highlighted in this section were implemented by two different companies, Sigma Labs and Stratonics.

In-process monitoring experiments were performed, and data was collected using high-speed pyrometers and a digital camera to capture the thermal response of the DMLS® process and to visualize the melt pool shape. In-process data under nominal “optimized” processing conditions was gathered to establish a baseline and used as a QualityMetric™ (“QM”) [14, 15]. The data was also used to compare the metrics under different processing conditions to determine if they could be differentiated and to provide independent temperature data to validate the ICME process models. A series of “tall column” builds were made that included up to eight separate build segments, each containing 250 layers, as shown in Fig. 15b. Segments were made using either nominal or off-nominal parameters. The global energy density (GED) levels used to make the builds ranged from 0.7 to 7.5 J/mm²; a value of 1.95 J/mm² was determined to be the “optimum” processing condition based on experimental data that showed minimum build porosity. The GED is defined as the ratio of the laser power (P) to the product of the laser speed (S) and hatch spacing (H): \( \mathrm{GED}=P/\left(H\cdot S\right) \).
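
The GED definition above can be sketched in a few lines of Python. The function name and the example parameter values are hypothetical (the paper does not list the individual power, speed, and hatch settings); they are chosen only so that the result matches the reported optimum of 1.95 J/mm².

```python
def global_energy_density(power_w: float, speed_mm_s: float, hatch_mm: float) -> float:
    """Return GED = P / (H * S) in J/mm^2 for P in W, S in mm/s, and H in mm."""
    if speed_mm_s <= 0 or hatch_mm <= 0:
        raise ValueError("laser speed and hatch spacing must be positive")
    return power_w / (hatch_mm * speed_mm_s)


# Hypothetical parameter set (not taken from the paper).
ged = global_energy_density(power_w=195.0, speed_mm_s=1000.0, hatch_mm=0.1)
print(f"GED = {ged:.2f} J/mm^2")
```

Because the GED is a ratio, many different combinations of power, speed, and hatch spacing give the same value, which is why builds at a constant GED can still differ in melt pool behavior.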

Applying Sigma Labs’ multivariate classification algorithms to the in-process data from the stationary pyrometer provided a means of separating the response of the material from the response of the process, categorized as QM material and QM process. QM material is a metric more closely related to the material response on a relatively longer time scale. QM process is a metric more closely related to the process response over shorter, melt pool, time scales. A QM is any in-process quantity collected by, or derived from, the pyrometer that can be used to characterize the process, such as peak temperature, heating rate, or cooling rate, among others. Since the pyrometers are stationary, in-process data can only be collected when the laser traverses the field of view of the pyrometer, as shown in Fig. 9b. Note that this in-process data can only be collected once per layer. Once all the QM features are derived for a specific set of layers, a statistically rigorous multivariate classifier is used to track the clustering of these multidimensional features. Next, a QM limit or cutoff value is determined to identify whether any specific candidate layer is a statistical outlier compared to the baseline sample population initially established. The QM limit is taken from the chi-square distribution, that is, the distribution of a sum of the squares of k independent standard normal random variables; for the following examples, it was set at the 95 % level. If the feature sets are normally distributed, the critical value of the distribution for the given number of degrees of freedom can serve as a single, objective arbiter (i.e., an upper control limit (UCL)) of nominal versus off-nominal. Furthermore, a layer is assumed to be off-nominal when any one of the QMs is off-nominal; a layer is considered nominal only if both QMs are in the nominal range. Figure 16 shows two comparisons of in-process data against a baseline standard.

Each dot represents the value of the QM for a single layer. The off-nominal condition is defined by the QM limit, shown as the red horizontal line. A green dot means the layer is considered nominal; a red dot means off-nominal. In Fig. 16a, only a few layers are off-nominal, while in Fig. 16b, all layers are considered off-nominal. One may ask whether the in-process data in the figure represents a good build or a bad build that should be rejected. Clearly, the in-process data alone cannot provide the complete answer. Quantitative post-process information, from metallography or mechanical property evaluations for example, must be used during the process characterization phase to help distinguish acceptable from suspect builds when viewing in-process data. In Fig. 16b, the QM process is off-nominal for all layers and the decision to reject the part may initially appear obvious. While the QM material in-process data in Fig. 16a has flagged most layers as nominal, a few are off-nominal, as expected. Should this part be rejected because a few layers are off-nominal? The decision at this point during process characterization and development is not yet clear. Statistically, with a 95 % QM limit, 5 % of layers from a nominal build are expected to be flagged as off-nominal since they reside in the tail of the distribution (beyond the UCL), and it is up to the end user to define how to use this in-process data, coupled with post-process quantitative data, to accept or buy parts. From the QM material standpoint, however, this may be a sound build even though the response is slightly off-nominal process-wise. A statistical threshold or UCL can be defined, but an overall material and process strategy will most likely be needed in order to accept or buy parts using in-process data. This is a topic of ongoing research.
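
The chi-square limit described above can be illustrated with a short Python sketch. It is not the Sigma Labs classifier, whose details are not given here. It assumes, for illustration only, that the k features of a layer are independent and normally distributed about a known baseline, so that the sum of squared standardized deviations follows a chi-square distribution with k degrees of freedom. All function names and the example numbers are hypothetical.

```python
import math


def chi2_cdf(x: float, k: int) -> float:
    """Chi-square CDF with k degrees of freedom, computed from the power
    series of the regularized lower incomplete gamma function P(k/2, x/2)."""
    if x <= 0.0:
        return 0.0
    a, z = k / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    for n in range(1, 10_000):
        term *= z / (a + n)
        total += term
        if term < total * 1e-16:
            break
    return total * math.exp(-z + a * math.log(z) - math.lgamma(a))


def chi2_ucl(k: int, confidence: float = 0.95) -> float:
    """Upper control limit: the chi-square critical value, found by bisection."""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    lo, hi = 0.0, k + 10.0
    while chi2_cdf(hi, k) < confidence and hi < 1000.0:
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if chi2_cdf(mid, k) < confidence:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def layer_statistic(features, baseline_mean, baseline_std) -> float:
    """Sum of squared standardized deviations of one layer from the baseline."""
    return sum(((x - m) / s) ** 2
               for x, m, s in zip(features, baseline_mean, baseline_std))


def flag_layers(layers, baseline_mean, baseline_std, confidence: float = 0.95):
    """Return True for each layer whose statistic exceeds the UCL (off-nominal)."""
    ucl = chi2_ucl(len(baseline_mean), confidence)
    return [layer_statistic(f, baseline_mean, baseline_std) > ucl for f in layers]


# Hypothetical baseline for two features: peak temperature and cooling rate.
mean, std = (2000.0, 1.0e6), (50.0, 1.0e5)
layers = [(2000.0, 1.0e6), (2300.0, 1.0e6)]
print(flag_layers(layers, mean, std))
```

With two features, the 95 % limit is about 5.99, so the first example layer (statistic 0) is nominal and the second (statistic 36) is flagged. By construction, roughly 5 % of layers from a truly nominal build would also exceed this limit, which is the false-alarm behavior discussed above.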

Digital imaging [16] of the melt pool is operated in parallel with pyrometer data acquisition. The 2D images provide information about melt pool size, shape, and temperature (Fig. 15c). Short-wavelength and long-wavelength images are recorded. The images are processed offline to determine the temperatures from the ratio of the short- and long-wavelength images. The camera is calibrated with a 2500 °C heat source. A total of 46 different build conditions were run, and data was acquired for analysis. The experiment focused on the effect of varying parameters at a constant GED on the melt pool temperature and dimensions.
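
The idea of recovering temperature from the ratio of two wavelength bands can be sketched as follows. This is a generic two-color (ratio) pyrometry calculation, not the processing actually used in the program, which is not described here. It assumes the Wien approximation to Planck's law and equal emissivity in both bands (gray body); the wavelengths of 750 nm and 900 nm are hypothetical placeholders.

```python
import math

C2 = 1.438777e-2  # second radiation constant, m*K


def wien_intensity(wavelength_m: float, temp_k: float) -> float:
    """Relative spectral intensity under the Wien approximation (arbitrary units)."""
    return wavelength_m ** -5 * math.exp(-C2 / (wavelength_m * temp_k))


def ratio_temperature(ratio: float, short_m: float, long_m: float) -> float:
    """Temperature in K from the short/long intensity ratio of a gray body."""
    if ratio <= 0.0:
        raise ValueError("intensity ratio must be positive")
    denom = 5.0 * math.log(long_m / short_m) - math.log(ratio)
    if denom <= 0.0:
        raise ValueError("ratio is not physical for these wavelengths")
    return C2 * (1.0 / short_m - 1.0 / long_m) / denom


# Hypothetical wavelengths; round trip at 2700 degC (2973.15 K).
short_m, long_m = 750e-9, 900e-9
true_temp_k = 2973.15
ratio = wien_intensity(short_m, true_temp_k) / wien_intensity(long_m, true_temp_k)
print(f"Recovered temperature: {ratio_temperature(ratio, short_m, long_m) - 273.15:.1f} degC")
```

A practical advantage of the ratio is that factors common to both bands, such as an unknown but wavelength-independent emissivity, cancel out. In a real system the camera response in each band would be fixed by calibration against a known source.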

Figure 17 presents an example image and the corresponding data extraction: peak temperature, cooling rate, area, length, and width of the melt pool. Peak melt pool temperatures on the order of 2700 °C are measured (the boiling point of 718Plus is in the 2750 °C range). The corresponding simulation conditions are shown in Fig. 18. The comparison shows very good agreement between the measurements and the numerical predictions, both in temperature values and in melt pool dimensions.

Fig. 18 Micro-model (numerical) result corresponding to the process conditions shown in Fig. 17

Non-destructive testing

Non-destructive evaluation is a very important tool during the qualification process. In the classical manufacturing process, raw materials such as ingots, pancakes, and castings, as well as final machined parts, are inspected for defects. Conventional NDE methods offer limited capabilities when inspecting additive manufacturing (AM) parts due to the small size, orientation, and shape of defects, the unique interfaces, and the extreme surface roughness. The relevant defects can be expected to be 5 μm or larger, and the surface roughness of the specimens can be expected to be on the order of 400 μm. Both of these make conventional NDE methods unsuitable for inspecting AM parts. Therefore, we have identified new emerging NDE methods, as echoed in the latest NIST (reference) roadmap recommendations, for characterizing AM parts with unique defect characteristics and microstructure. The identified NDE methods are acoustic microscopy, X-ray 3D computed tomography (CT), 2D X-ray digital radiography (XDR), and acoustic sensor testing (AST). Figure 19a shows the detection in one of two samples that were purposely contaminated with foreign material to produce defects. Some relatively large porosity formed in one of the samples, and it was detected with both X-ray CT and acoustic microscopy. The acoustic microscopy C-scan is shown in Fig. 19b. The NDE outcomes can be easily integrated into the probabilistic framework as shown in Fig. 2; the NDE outcome feeds into the risk calculation by attenuating the population of defects present in the component, since parts found to be defective are screened out.
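
The way inspection attenuates the defect population in a risk calculation can be sketched with a simple Bayes update. This is a minimal illustration, not the framework's actual risk function. It assumes a single defect type, a single probability of detection (POD), and no false calls on defect-free parts; the function name and all numbers are hypothetical.

```python
def risk_after_inspection(p_defect: float, pod: float, p_fail_given_defect: float) -> float:
    """Failure probability of an accepted part after NDE screening.

    Parts whose defect is detected are rejected, so among accepted parts
    P(defect | accepted) = p_defect * (1 - pod) / (1 - p_defect * pod).
    """
    for name, value in (("p_defect", p_defect), ("pod", pod),
                        ("p_fail_given_defect", p_fail_given_defect)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a probability in [0, 1]")
    p_accept = 1.0 - p_defect * pod
    if p_accept == 0.0:
        raise ValueError("no parts are accepted under these inputs")
    return p_defect * (1.0 - pod) / p_accept * p_fail_given_defect


# Hypothetical values: 1 % of parts contain a defect, 90 % POD,
# and 10 % of defective parts fail in service.
before = risk_after_inspection(0.01, 0.0, 0.1)
after = risk_after_inspection(0.01, 0.9, 0.1)
print(f"risk without NDE: {before:.2e}, with NDE: {after:.2e}")
```

In this sketch, a POD of 90 % lowers the risk by roughly a factor of ten, which shows why the detection capability for small defects in rough AM surfaces matters so much to the overall risk.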

Verification and validation

In order for the ICME models to be integrated into the design process, their accuracy and reliability must be demonstrated. The models must capture the relevant physics of the problem and must also be computationally efficient. Several experiments were performed to validate the models. The first experiment consisted of passing the laser over a flat single-crystal surface at several different powers, speeds, and hatch spacings to determine their influence on melt pool size. The second experiment consisted of using a powder bed to construct cube-shaped coupons for several different build conditions (i.e., power, laser speed, and hatch spacing). The coupons were sectioned, polished, and mounted. Macrographs were obtained from the sections, and the porosity levels were measured and catalogued. The experimental results were compared to the results of the micro-models that simulate the melting and solidification process. The computational results were found to be very similar to the experimental results. They even showed that some of the porosity previously attributed to keyhole welding was most likely entrapped gas.

Tool assessments were performed to obtain the tool maturity level (TML), as described by Cowles et al. [17], for each of the ICME computer codes in the framework. For micro-models, the maturity was estimated at four to five. This reflects the complexity of the phenomena that take place in the DMLS® process and must be modeled in order to capture the melting and solidification behavior of the powder. Macro-models calculating as-built residual stress were estimated to be in the three to four range. The physical models can be created, but the stress-strain models describing the very high temperature material behavior over a large temperature range are not always available. The TML for the material property models was estimated to be at level 4. These tools are ready for use in a production environment, albeit with the restriction that any new material and process will require calibration against experimental data. The overall ICME and rapid qualification platform is estimated to be at a TML of three to four, and the platform needs to be exercised several times to achieve higher maturity levels.

Conclusions

A probabilistic framework is proposed for rapid qualification of aerospace components. It leverages knowledge generated by ICME models regarding the manufacturing process and material behavior that can be used to identify testing needed for model development and for evidence of compliance. The proposed framework uses a variety of tools to minimize the risk of failure. It comprehensively quantifies model uncertainty, experimental testing, process monitoring, and non-destructive evaluation. It can even be used to calibrate tools to improve the accuracy of predictive models. It is able to bring together all of the knowledge generated by the models to identify those variables that are most important in demonstrating compliance.

A discussion of the different technologies that are needed in the rapid qualification process was presented, including the probabilistic design format, ICME models, and the quality assurance methods that form the framework. It was demonstrated how sensitivity analysis within a probabilistic format can be used to identify the important design variables and how model calibration can be used to improve model accuracy. The process models were able to identify the process parameter settings that lead to defect-free builds. The residual stress models were able to reasonably predict the residual stresses and deformation during the build process. The analytical models used to predict the alloy microstructure resulting from post-processing heat treatment were validated. These models were able to identify the type of experimental samples needed to characterize the material microstructure under the processing conditions of interest.

The current status of the computational codes is encouraging, but computational efficiency must improve to enable simulation of the larger build spaces associated with real-world components. The tools and models are now available to simulate the DMLS® process for many material and processing conditions. They can be used to predict the process parameters that will yield good material consolidation with low porosity and to estimate the top layer surface roughness from the predicted melt pool profiles. This makes it possible to define the process parameter window more narrowly, so that a much smaller confirmatory series of specimens is required, saving time and resources, especially during the metallographic analysis step, which typically takes a significant amount of time. In-process monitoring is used to validate the models as well as for In-Process Quality Assurance™ (IPQA®). The sensors and corresponding IPQA® provide the link from the specification and numerical process window selection to the actual build and final product. The uncertainty quantification tools provide a probabilistic foundation for decision making, enabling the assessment of overall process risk.

Lastly, while the models are described as fully integrated and somewhat sequential in the process, the use of them is independently valuable. For example, a process model can help define process limits for good integrity builds, independent of microstructure or strength. These models and this rapid qualification framework require, and will continue to require, a substantial amount of “informed testing” to continuously support the analytical predictions and ensure qualification/certification authorities can have confidence in the results.

References

1. FAA. [Online] [Cited: August 8, 2015.] www.faa.gov/aircraft/air_cert/design_approvals/media/CPI_guide_II.pdf

2. Haugen EB, Wirsching P (1975) Probabilistic design. Machine Design, April 17, pp 98–104

3. Gorelik M, Obayami J, Slovisky J, Frias D, Swanson H, McFarland J, Enright M, Riha D (2013) Effect of manufacturing variability on turbine engine performance: a probabilistic study. Proceedings of ASME Turbo Expo, San Antonio, TX, GT2013-95145

4. Enright MP, McClung JRC, Moody JP, McFarland JM, Wu WT, Shankar R (2013) Probabilistic integration of material process modeling and fracture risk assessment using Gaussian Process models. Proceedings of the 54th AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics, and Materials Conference, Boston, MA

5. McFarland JM (2015) Variance decomposition for statistical quantities of interest. Journal of Aerospace Information Systems 12:204–218, Special Section on Uncertainty Quantification

6. ESI Group (2014) CFD-ACE+ Theory and User Manuals. ESI Group

7. N’Dri N, Mindt H-W, Shula B, Megahed M, Peralta A, Kantzos P, Neumann J (2015) DMLS process modelling & validation. TMS 2015 144th Annual Meeting & Exhibition, Orlando. Wiley, ISBN 978-1-119-08241-5

8. Megahed M, Mindt H-W, N’Dri N, Duan H-Z, Desmaison O (2016) Metal additive manufacturing process and residual stress modelling. Integrating Materials and Manufacturing Innovation 5:4. Springer Verlag. https://doi.org/10.1186/s40192-016-0047-2

9. Mindt H-W, Megahed M, Peralta AD, Neumann J (2015) DMLM models: numerical assessment of porosity. 22nd ISABE Conference, Oct. 25–30, Phoenix, AZ, USA

10. ESI Group (2015) SYSWeld Theory and User Manuals. ESI Group

11. Vogel M, Khan M, Ibarra-Medina J, Pinkerton AJ, N’Dri N, Megahed M (2013) A coupled approach to weld pool, phase and residual stress modelling of Laser Direct Metal Deposition (LDMD) processes. 2nd World Congress on Integrated Computational Materials Engineering, Salt Lake City, 12 Jul 2013. ISBN 978-1-11876-689-7

12. Kuehmann CJ, Olson GB (2009) Computational materials design and engineering. Materials Science and Technology, Vol. 25, No. 4

13. NIST. [Online] [Cited: September 10, 2015.] www.nist.gov/el/isd/upload/NISTAdd_Mfg_Report_FINAL-2.pdf

14. Dave VR, Hartman DA, Cola MJ (2009) In-Process Quality Assurance for aerospace welding applications. Welding Journal 88:28–32, American Welding Society

15. Dave VR, Hartman DA, King WH, Cola MJ, Vaidya RU (2003) Strategy for small lot manufacturing: In-Process Monitoring and Control. LA Science 28:63–67, Los Alamos National Laboratory, Los Alamos, NM

16. Marshall GJ, Young WJ II, Craig J, Wakeman T, Thompson SM (2015) Dual thermographic monitoring of Ti-6Al-4V cylinders using direct laser deposition. 26th International Solid Freeform Fabrication Symposium - An Additive Manufacturing Conference, Austin, TX

17. Cowles B, Backman D, Dutton R (2012) Verification and validation of ICME methods and models for aerospace application. Integrating Materials and Manufacturing Innovation 1:2, SpringerOpen, 11 June 2012

Acknowledgements

This work was performed under the DARPA Open Manufacturing Program entitled “Rapid Low Cost Additive Manufacturing” contract number HR001-12-C-0037 to Honeywell International Inc. The authors acknowledge the financial support and the guidance of the managing panel.

We would also like to acknowledge our colleagues who have helped with different aspects of the program. From Honeywell: J. Neumann, H. Deutchman, B. Baughman, P. Kantzos, M. Kemp, S. Singh, B. Shula, and G. Levesque. From SwRI: J. McFarland. From ESI: N. N’Dri, H.-W. Mindt. From QuesTek: D. Snyder, G. Olson, J. Sebastien. From Sigma Labs: M. Cola, V. Dave. From Stratonics: T. Wakeman.

Funding

The work presented in this manuscript was performed under the DARPA Open Manufacturing Program entitled “Rapid Low Cost Additive Manufacturing” contract number HR001-12-C-0037 to Honeywell International Inc. The manuscript itself was prepared using DARPA funding.

Additional information

Competing interests

The authors declare that they have no competing interests. No other type of competing interests exists.

Authors’ contributions

AP is the Principal Investigator of the DARPA Open Manufacturing Program awarded to Honeywell under which the subject of this paper was developed. ME is the uncertainty quantification lead on the program team and developed the probabilistic framework. MM is the lead for the process modeling effort and leads code development. JG leads the microstructure and strength modeling effort for this program. MR leads the In-Process Quality Metrics effort for this program, and JC leads the melt pool monitoring effort for this program. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Peralta, A.D., Enright, M., Megahed, M. et al. Towards rapid qualification of powder-bed laser additively manufactured parts. Integr Mater Manuf Innov 5, 154–176 (2016). https://doi.org/10.1186/s40192-016-0052-5

DOI: https://doi.org/10.1186/s40192-016-0052-5