Abstract

This paper proposes a novel feature descriptor, named local quantized extrema patterns (LQEP), for content-based image retrieval. The standard local quantized patterns (LQP) collect the directional relationship between the center pixel and its surrounding neighbors, and the directional local extrema patterns (DLEP) collect directional information based on local extrema in the 0°, 45°, 90°, and 135° directions for a given center pixel in an image. In this paper, the concepts of LQP and DLEP are integrated to propose the LQEP for image retrieval. First, the directional quantized information is collected from the given image. Then, the directional extrema are collected from the quantized information. Finally, the RGB color histogram is integrated with the LQEP to generate the feature vector. The performance of the proposed method is tested by conducting three experiments on the Corel-1K, Corel-5K and MIT VisTex databases for natural and texture image retrieval, and is evaluated in terms of precision, recall, average retrieval precision and average retrieval rate. The results show a considerable improvement in these evaluation measures compared with existing methods on the respective databases.


Background

Data mining is an active area of research for mining or retrieving data/information from a large database or library. Image retrieval is the part of data mining in which visual information (images) is retrieved from a large database or library. Earlier, text-based retrieval was used for retrieving information: the images were annotated with text, and text-based database management systems were then used to perform image retrieval. Many advances, such as data modelling, multidimensional indexing, and query evaluation, have been made along this research direction. However, there are two major difficulties, especially when the image collection is very large (tens or hundreds of thousands of images). One is the vast amount of labor required to annotate the images manually. The other, more essential difficulty arises from the rich content of the images and the subjectivity of human perception; that is, different people may perceive the same image content differently. To address these issues, content-based image retrieval (CBIR) came into existence. CBIR uses visual content, such as color, texture and shape, for indexing and retrieving images from the database. A comprehensive and extensive literature on feature extraction for CBIR is available in [1–5].

Color not only adds beauty to images and video but also provides more information about the scene, and this information is used as a feature for retrieving images. Various color-based image search schemes have been proposed; some of them are discussed in this section. Swain and Ballard [6] introduced the color histogram feature and the histogram intersection distance metric to measure the distance between the histograms of images. The global color histogram, which gives the probability of occurrence of each unique color over the whole image, is extensively used for retrieval. It is fast and invariant to translation, rotation and scale, but it lacks spatial information, which leads to false retrieval results. Stricker and Orengo [7] proposed two color indexing schemes. The first uses the cumulative color histogram. The second, instead of storing the complete color distributions, uses the first three moments of each color channel of the image. Idris and Panchanathan [8] used vector quantization to compress the image and built a histogram of the codewords of each image, which was used as the feature vector. In the same manner, Lu and Burkhardt [9] proposed a feature for color image retrieval by combining the discrete cosine transform (DCT) and vector quantization. A well-known image compression method, block truncation coding (BTC), is used in [10] to extract two features: the block color co-occurrence matrix (BCCM) and the block pattern histogram (BPH). Image descriptors are also generated with the help of vector quantization. Because the global histogram lacks spatial information, Huang et al. [11] proposed the color correlogram, which includes the local spatial distribution of color information for image retrieval. 
Pass and Zabih [12] proposed color coherence vectors (CCV), a histogram-based approach that incorporates some spatial information. Rao et al. [13] modified the color histogram to capture spatial information, proposing three spatial color histograms: annular, angular and hybrid color histograms. Chang et al. [14] proposed a method that accounts for color changes caused by illumination, surface orientation and camera viewing geometry, with a shorter feature vector than the color correlogram.
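To make the histogram intersection of Swain and Ballard [6] concrete, the sketch below builds a global joint RGB histogram and computes the intersection match value, i.e. the sum of bin-wise minima normalized by the total count of the model histogram. The quantization into `levels` steps per channel and the function names are illustrative assumptions, not details taken from [6].

```python
def global_color_histogram(pixels, levels=4):
    """Joint RGB histogram with `levels` quantization steps per channel.

    `pixels` is an iterable of (R, G, B) tuples with 8-bit values; the
    histogram has levels**3 bins (the bin layout is an illustrative choice).
    """
    step = 256 // levels
    hist = [0] * (levels ** 3)
    for r, g, b in pixels:
        index = (r // step) * levels * levels + (g // step) * levels + (b // step)
        hist[index] += 1
    return hist


def histogram_intersection(image_hist, model_hist):
    """Swain-Ballard match value: sum of bin-wise minima over the model's total count."""
    overlap = sum(min(i, m) for i, m in zip(image_hist, model_hist))
    return overlap / sum(model_hist)


# Two images with the same colors in different positions match perfectly.
a = [(255, 0, 0), (0, 0, 255)]
b = [(0, 0, 255), (255, 0, 0)]
print(histogram_intersection(global_color_histogram(a), global_color_histogram(b)))  # 1.0
```

Because swapping pixel positions leaves the histogram unchanged, the match value is 1.0 here, which illustrates the lack of spatial information noted above.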

Texture is another important feature for CBIR. Smith et al. used the mean and variance of the wavelet coefficients as texture features for CBIR [15]. Moghaddam et al. proposed the Gabor wavelet correlogram (GWC) for CBIR [16]. Ahmadian et al. used the wavelet transform for texture classification [17]. Subrahmanyam et al. proposed the correlogram algorithm for image retrieval using wavelets and rotated wavelets (WC + RWC) [18]. Ojala et al. proposed local binary pattern (LBP) features for texture description [19], and these LBPs were converted to rotation-invariant LBPs for texture classification [20]. Pietikainen et al. proposed rotation-invariant texture classification using feature distributions [21]. Ahonen et al. [22] and Zhao et al. [23] used the LBP operator for facial expression analysis and recognition. Heikkila et al. proposed background modeling and detection using LBP [24]. Huang et al. proposed the extended LBP for shape localization [25]. Heikkila et al. used the LBP for interest region description [26]. Li et al. used a combination of the Gabor filter and LBP for texture segmentation [27]. Zhang et al. proposed the local derivative pattern for face recognition [28]; they considered LBP as a nondirectional first-order local pattern, i.e. the binary result of the first-order derivative in images. A block-based texture feature that uses LBP as the source of image description is proposed in [29] for CBIR. The center-symmetric local binary pattern (CS-LBP), a modified version of the well-known LBP feature, is combined with the scale invariant feature transform (SIFT) in [30] to describe regions of interest. Yao et al. [31] proposed two types of local edge pattern (LEP) histograms: LEPSEG for image segmentation and LEPINV for image retrieval. 
LEPSEG is sensitive to variations in rotation and scale, whereas LEPINV is resistant to them. The local ternary pattern (LTP) [32] was introduced for face recognition under different lighting conditions. Subrahmanyam et al. proposed various pattern-based features, such as local maximum edge binary patterns (LMEBP) [33], local tetra patterns (LTrP) [34] and directional local extrema patterns (DLEP) [35] for natural/texture image retrieval, and directional binary wavelet patterns (DBWP) [36], local mesh patterns (LMeP) [37] and local ternary co-occurrence patterns (LTCoP) [38] for biomedical image retrieval. Reddy et al. [39] extended the DLEP features by adding the magnitude information of the local gray values of an image. Hussain and Triggs [40] proposed local quantized patterns (LQP) for visual recognition.

Recently, the integration of color and texture features has been proposed for image retrieval. Jhanwar et al. [41] proposed the motif co-occurrence matrix (MCM) for content-based image retrieval. They also proposed the color MCM, which is calculated by applying MCM to the individual red (R), green (G) and blue (B) color planes. Lin et al. [42] combined a color feature, the k-means color histogram (CHKM), with texture features, the motif co-occurrence matrix (MCM) and the difference between the pixels of a scan pattern (DBPSP). Vadivel et al. [43] proposed the integrated color and intensity co-occurrence matrix (ICICM) for image retrieval. They first analyzed the properties of the HSV color space and then suggested suitable weight functions for estimating the relative contribution of the color and gray levels of an image pixel. Vipparthi et al. [44] proposed local quinary patterns for image retrieval.

The concepts of LQP [40] and DLEP [35] have motivated us to propose the local quantized extrema patterns (LQEP) for image retrieval. The main contributions of this work are summarized as follows. (a) The proposed method collects the directional quantized extrema information from the query/database image by integrating the concepts of LQP and DLEP. (b) To improve the performance of the CBIR system, the LQEP operator is combined with the RGB color histogram. (c) The performance of the proposed method is tested on benchmark databases for natural and texture image retrieval.

The paper is organized as follows. In “Background”, a brief review of image retrieval and related work is given. “Review of existing local patterns” presents a concise review of existing local pattern operators. The proposed system framework and similarity distance measures are illustrated in “Local quantized extrema patterns (LQEP)”. Experimental results and discussions are given in “Experimental results and discussion”. The conclusions and future scope are given in “Conclusions”.

Review of existing local patterns

Local binary patterns (LBP)

The LBP operator was introduced by Ojala et al. [19] for texture classification. Owing to its speed (there are no parameters to tune) and its performance, it has been used successfully in many research areas, such as texture classification [18–21], face recognition [22, 23], object tracking [33], image retrieval [33–39] and fingerprint recognition. Given a center pixel in a 3 × 3 pattern, the LBP value is calculated by comparing its gray value with those of its neighboring pixels, based on Eqs. (1) and (2):

\[ LBP_{P,R} = \sum_{p=1}^{P} 2^{(p-1)} \times f_{1}\left( I(g_{p}) - I(g_{c}) \right) \qquad (1) \]

\[ f_{1}(x) = \begin{cases} 1, & x \ge 0 \\ 0, & \text{otherwise} \end{cases} \qquad (2) \]

where \( I(g_{c} ) \) denotes the gray value of the center pixel, \( I(g_{p} ) \) represents the gray value of its neighbors, \( P \) stands for the number of neighbors and \( R \), the radius of the neighborhood.

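After computing the LBP pattern for each pixel (j, k), the whole image is represented by building a histogram as shown in Eq. (3).

\[ H_{LBP}(l) = \sum_{j=1}^{N_{1}} \sum_{k=1}^{N_{2}} f_{2}\left( LBP(j,k), l \right); \quad l \in [0, 2^{P} - 1] \qquad (3) \]

\[ f_{2}(x, y) = \begin{cases} 1, & x = y \\ 0, & \text{otherwise} \end{cases} \qquad (4) \]

where the size of the input image is \( N_{1} \times N_{2} \).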

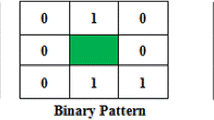

Figure 1 shows an example of obtaining an LBP from a given 3 × 3 pattern. The histograms of these patterns contain the information on the distribution of edges in an image.
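A minimal sketch of Eqs. (1)–(3) for P = 8 and R = 1, with the image given as a list of rows of gray values. The neighbor ordering (starting at the right-hand pixel and moving anticlockwise) is an assumption, since any fixed order yields an equivalent descriptor, and border pixels, which lack a full neighborhood, are skipped for simplicity.

```python
# Neighbors g_1..g_8 of a 3 x 3 pattern as (row, col) offsets, starting at the
# right-hand pixel and moving anticlockwise (an assumed, but fixed, ordering).
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def lbp_code(img, r, c):
    """LBP_{8,1} code of pixel (r, c), following Eqs. (1)-(2)."""
    center = img[r][c]
    code = 0
    for p, (dr, dc) in enumerate(OFFSETS):
        # f1(x) = 1 if x >= 0; the 0-based index p here is p + 1 in Eq. (1), weight 2^p
        if img[r + dr][c + dc] - center >= 0:
            code |= 1 << p
    return code


def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels (Eq. (3))."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
print(lbp_code(patch, 1, 1))  # 248 = 0b11111000
```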

Block based local binary patterns (BLK_LBP)

Takala et al. [29] proposed the block-based LBP for CBIR. The block division method is a simple approach that relies on subimages to address the spatial properties of images, and it can be used with any histogram descriptor, such as LBP. The method works as follows: first, it divides the model images into square blocks of arbitrary size that overlap. Then it calculates the LBP distribution for each block and combines the histograms into a single vector of sub-histograms representing the image.
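The block division can be sketched as follows, assuming the LBP_{8,1} histogram of the previous section and two hypothetical parameters: `block` (the block side) and `step` (the stride between block origins, so that `step < block` gives overlapping blocks). Each block's histogram is computed on its own pixels only, and the sub-histograms are concatenated in raster order.

```python
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def lbp_histogram(img):
    """256-bin LBP_{8,1} histogram over the interior pixels of `img`."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            code = 0
            for p, (dr, dc) in enumerate(OFFSETS):
                if img[r + dr][c + dc] >= img[r][c]:
                    code |= 1 << p
            hist[code] += 1
    return hist


def block_lbp_feature(img, block, step):
    """Concatenate the LBP histograms of square blocks of side `block`.

    Block origins are `step` pixels apart, so step < block gives overlapping
    blocks; both parameters are illustrative, not values from [29].
    """
    feature = []
    for r0 in range(0, len(img) - block + 1, step):
        for c0 in range(0, len(img[0]) - block + 1, step):
            sub = [row[c0:c0 + block] for row in img[r0:r0 + block]]
            feature.extend(lbp_histogram(sub))
    return feature


image = [[(3 * r + 5 * c) % 7 for c in range(6)] for r in range(6)]
feature = block_lbp_feature(image, block=4, step=2)
print(len(feature))  # 4 blocks x 256 bins = 1024
```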

Center-symmetric local binary patterns (CS_LBP)

Instead of comparing each pixel with the center pixel, Heikkila et al. [30] have compared center-symmetric pairs of pixels for CS_LBP as shown in Eq. (5):

After computing the CS_LBP pattern for each pixel (j, k), the whole image is represented by building a histogram in the same way as for the LBP.
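For P = 8 neighbors there are four center-symmetric pairs, so CS_LBP yields a 4-bit code and a 16-bin histogram. The sketch below reuses the LBP neighbor ordering assumed earlier, pairs \( g_{p} \) with \( g_{p+4} \), and applies the \( f_{1} \) comparison (difference ≥ 0). The small threshold that [30] applies to the pair difference to suppress noise in flat regions is omitted here for simplicity.

```python
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def cs_lbp_code(img, r, c):
    """4-bit CS_LBP code of pixel (r, c): compare g_p with its opposite g_{p+4}."""
    code = 0
    for p in range(4):
        (dr1, dc1), (dr2, dc2) = OFFSETS[p], OFFSETS[p + 4]
        if img[r + dr1][c + dc1] - img[r + dr2][c + dc2] >= 0:
            code |= 1 << p
    return code


def cs_lbp_histogram(img):
    """16-bin CS_LBP histogram over all interior pixels."""
    hist = [0] * 16
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[cs_lbp_code(img, r, c)] += 1
    return hist


patch = [[9, 8, 7],
         [6, 5, 1],
         [2, 3, 4]]
print(cs_lbp_code(patch, 1, 1))  # 14 = 0b1110
```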

Directional local extrema patterns (DLEP)

Subrahmanyam et al. [35] proposed directional local extrema patterns (DLEP) for CBIR. DLEP describes the spatial structure of the local texture based on the local extrema of the center gray pixel \( g_{c} \).

In DLEP, for a given image, the local extrema in the 0°, 45°, 90°, and 135° directions are obtained by computing the local differences between the center pixel and its neighbors as shown below:

The local extrema are obtained by Eq. (7).

The DLEP is defined (α = 0°, 45°, 90°, and 135°) as follows:
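The directional extremum test underlying DLEP can be sketched as below, assuming that the center is an extremum in direction α when its differences to the two neighbors on either side along α have the same sign, i.e. the center is a local maximum or minimum along that line. Mapping 45° to the upper-right/lower-left pair is an assumed convention, and the subsequent encoding of these binary maps into DLEP codes [35] is not shown.

```python
# Neighbor pairs on either side of the center along alpha, as (row, col) offsets.
# Rows grow downwards, so 45 degrees is taken as upper-right / lower-left (assumed).
DIRECTION_PAIRS = {
    0: ((0, 1), (0, -1)),
    45: ((-1, 1), (1, -1)),
    90: ((-1, 0), (1, 0)),
    135: ((-1, -1), (1, 1)),
}


def directional_extremum(img, r, c, alpha):
    """1 if pixel (r, c) is a local maximum or minimum along direction alpha, else 0."""
    (dr1, dc1), (dr2, dc2) = DIRECTION_PAIRS[alpha]
    diff1 = img[r][c] - img[r + dr1][c + dc1]
    diff2 = img[r][c] - img[r + dr2][c + dc2]
    # Same sign (or a zero difference): the center does not lie between its neighbors
    return 1 if diff1 * diff2 >= 0 else 0


def extrema_maps(img):
    """Binary extrema map for each direction, computed over the interior pixels."""
    rows, cols = len(img), len(img[0])
    return {
        alpha: [[directional_extremum(img, r, c, alpha) for c in range(1, cols - 1)]
                for r in range(1, rows - 1)]
        for alpha in DIRECTION_PAIRS
    }


patch = [[1, 5, 3],
         [4, 2, 6],
         [7, 0, 8]]
print([directional_extremum(patch, 1, 1, a) for a in (0, 45, 90, 135)])  # [1, 1, 0, 0]
```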

Local quantized patterns (LQP)

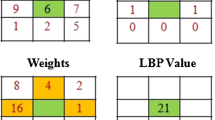

Hussain and Triggs [40] proposed the LQP operator for visual recognition. The LQP collects directional geometric features in horizontal (H), vertical (V), diagonal (D) and antidiagonal (A) strips of pixels; in combinations of these, such as horizontal-vertical (HV), diagonal-antidiagonal (DA) and horizontal-vertical-diagonal-antidiagonal (HVDA); and in traditional circular and disk-shaped regions. Figure 2 illustrates the possible directional quantized geometric structures for the LQP operator. More details about LQP are available in [40].
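A minimal sketch of how an HVDA neighborhood can be sampled, assuming that HVDA7 denotes the horizontal, vertical, diagonal and antidiagonal strips of seven pixels centered on the pixel of interest (consistent with the 7 × 7 pattern used for LQEP in the next section). Which diagonal is called D and which A is an assumed convention, and the quantization/look-up step of LQP [40] is not shown.

```python
# Unit steps (row, col) of the four strips; rows grow downwards. Assigning D to the
# upper-right diagonal and A to the upper-left one is an assumed convention.
STRIP_STEPS = {"H": (0, 1), "V": (-1, 0), "D": (-1, 1), "A": (-1, -1)}


def hvda_offsets(length=7):
    """Offsets of the HVDA strip pixels around a center, excluding the center itself.

    A strip of odd `length` extends (length - 1) // 2 pixels on each side,
    so length=7 (HVDA7) gives 4 strips x 6 pixels = 24 neighbors.
    """
    radius = (length - 1) // 2
    return {
        name: [(k * dr, k * dc) for k in range(-radius, radius + 1) if k != 0]
        for name, (dr, dc) in STRIP_STEPS.items()
    }


def sample_hvda(img, r, c, length=7):
    """Gray values of the HVDA strips around pixel (r, c), strip by strip."""
    return {name: [img[r + dr][c + dc] for dr, dc in offsets]
            for name, offsets in hvda_offsets(length).items()}


image = [[10 * r + c for c in range(7)] for r in range(7)]
print(sample_hvda(image, 3, 3)["H"])  # [30, 31, 32, 34, 35, 36]
```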

Local quantized extrema patterns (LQEP)

The DLEP [35] and LQP [40] operators have motivated us to propose the LQEP for image retrieval. The LQEP integrates the concepts of LQP and DLEP. First, the possible structures are extracted from the given pattern. Then, the extrema operation is performed on the directional geometric structures. Figure 3 illustrates the calculation of LQEP for a given 7 × 7 pattern. For ease of understanding, the 7 × 7 pattern in Fig. 3 is indexed with pixel positions, chosen so that the four directional extrema operator calculations can be written compactly. In this paper, the HVDA7 geometric structure is used for feature extraction. A brief description of the LQEP feature extraction is given as follows.

For a given center pixel (C) in an image I, the HVDA7 geometric structure is collected using Fig. 3. Then the four directional extrema (DE) in the 0°, 45°, 90°, and 135° directions are obtained as follows.

where,

The LQEP is defined by Eqs. (10)–(13) as follows.

Finally, the given image is converted to an LQEP map with values ranging from 0 to 4095 (a 12-bit code). After calculating the LQEP, the whole image is represented by building a histogram using Eq. (16).

where LQEP(i, j) represents the LQEP map value, ranging from 0 to 4095.
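The sketch below shows one plausible reading of the 12-bit LQEP; it is not the exact formulation of Eqs. (10)–(16). It assumes that, for each direction α ∈ {0°, 45°, 90°, 135°} and each distance k = 1, 2, 3 along the HVDA7 strip, one bit records whether the center C is an extremum with respect to the pair of pixels at distance k on either side (same-sign differences). Four directions times three distances give 12 bits, hence codes from 0 to 4095 and a 4096-bin histogram. The bit ordering is hypothetical.

```python
# Unit steps (row, col) for alpha = 0, 45, 90, 135 degrees; rows grow downwards.
DIRECTIONS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]
RADIUS = 3  # HVDA7: three pixels on each side of the center C


def lqep_code(img, r, c):
    """Hypothetical 12-bit LQEP code: one extremum bit per (direction, distance)."""
    center = img[r][c]
    code = 0
    for dr, dc in DIRECTIONS:
        for k in range(1, RADIUS + 1):
            forward = center - img[r + k * dr][c + k * dc]
            backward = center - img[r - k * dr][c - k * dc]
            # Append 1 if C is a maximum/minimum w.r.t. this symmetric pair
            code = (code << 1) | (1 if forward * backward >= 0 else 0)
    return code


def lqep_histogram(img):
    """4096-bin histogram of LQEP codes over pixels with a full 7 x 7 neighborhood."""
    hist = [0] * 4096
    for r in range(RADIUS, len(img) - RADIUS):
        for c in range(RADIUS, len(img[0]) - RADIUS):
            hist[lqep_code(img, r, c)] += 1
    return hist


ramp = [[10 * r + c for c in range(7)] for r in range(7)]  # monotone in every direction
flat = [[5] * 7 for _ in range(7)]                          # every pixel equal
print(lqep_code(ramp, 3, 3), lqep_code(flat, 3, 3))  # 0 4095
```

On the ramp no pixel is an extremum along any strip, so all bits are 0; on the flat patch every difference is zero, so all bits are 1.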

Proposed image retrieval system

In this paper, we integrate the concepts of DLEP and LQP for image retrieval. First, the image is loaded and converted into gray scale if it is RGB. Second, the four-directional HVDA7 structure is collected using the LQP geometric structures. Then, the four directional extrema in the 0°, 45°, 90°, and 135° directions are collected. Finally, the LQEP feature is generated by constructing the histogram. Further, to improve the performance of the proposed method, we integrate the LQEP with the RGB color histogram for image retrieval.

Figure 4 depicts the flowchart of the proposed technique, and the corresponding algorithm is given as follows.

Algorithm:

Input: Image; Output: Retrieval result

1. Load the image and convert it into gray scale (if it is RGB).
2. Collect the HVDA7 structure for a given center pixel.
3. Calculate the local extrema in the 0°, 45°, 90°, and 135° directions.
4. Compute the 12-bit LQEP from the four directional extrema.
5. Construct the histogram of the 12-bit LQEP.
6. Construct the RGB histogram from the RGB image.
7. Construct the feature vector by concatenating the RGB and LQEP histograms.
8. Compare the query image with the images in the database using Eq. (20).
9. Retrieve the images based on the best matches.
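Steps 1, 6 and 7 can be sketched as follows. The BT.601 luma weights used for the gray conversion, the 16 bins per RGB channel and the normalization of each histogram to unit sum are all assumptions made for illustration; the texture histogram (for example the 4096-bin LQEP histogram of Step 5) is passed in as a plain list.

```python
def to_gray(rgb):
    """Step 1: RGB (rows of (R, G, B) tuples) to gray, using BT.601 weights (assumed)."""
    return [[round(0.299 * R + 0.587 * G + 0.114 * B) for (R, G, B) in row] for row in rgb]


def rgb_histogram(rgb, bins_per_channel=16):
    """Step 6: per-channel histograms of the R, G and B planes, concatenated."""
    width = 256 // bins_per_channel
    hist = [0] * (3 * bins_per_channel)
    for row in rgb:
        for pixel in row:
            for channel, value in enumerate(pixel):
                hist[channel * bins_per_channel + value // width] += 1
    return hist


def normalize(hist):
    """Scale a histogram to unit sum so that images of different sizes compare fairly."""
    total = sum(hist)
    return [v / total for v in hist] if total else list(hist)


def combined_feature(rgb, texture_hist):
    """Step 7: feature vector = [normalized RGB histogram | normalized texture histogram]."""
    return normalize(rgb_histogram(rgb)) + normalize(texture_hist)


image = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]
print(to_gray(image))  # [[76, 150], [29, 255]]
print(len(combined_feature(image, [0] * 4095 + [1])))  # 48 + 4096 = 4144
```

Normalizing each part separately keeps the 48 color bins from being swamped by the much longer texture histogram; whether the original method weights the two parts differently is not stated.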

Query matching

The feature vector of the query image Q, obtained after feature extraction, is represented as \( f_{Q} = (f_{{Q_{1} }} ,f_{{Q_{2} }} , \ldots f_{{Q_{Lg} }} ) \). Similarly, each image in the database is represented by the feature vector \( f_{{DB_{j} }} = (f_{{DB_{j1} }} ,f_{{DB_{j2} }} , \ldots f_{{DB_{jLg} }} );\,j = 1,2, \ldots ,\left| {DB} \right| \). The goal is to select the n best images that resemble the query image. This involves selecting the n top-matched images by measuring the distance between the query image and each image in the database \( DB \). To match the images, we use four different similarity distance metrics, as follows.

where \( Lg \) represents the feature vector length, \( I_{1} \) is the database image and \( f_{{DB_{ji} }} \) is the \( i \)th feature of the \( j \)th image in the database \( DB \).
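A minimal sketch of the matching step, assuming that the four metrics are the Manhattan, Euclidean, Canberra and d1 distances commonly used with local-pattern descriptors, where d1 (the measure singled out in the experiments) sums \( |f_{DB_{ji}} - f_{Q_{i}}| / (1 + f_{DB_{ji}} + f_{Q_{i}}) \) over the \( Lg \) features. The helper `top_n` is a hypothetical name; it ranks the database by distance to the query, as in the retrieval step.

```python
import math


def manhattan(q, db):
    return sum(abs(b - a) for a, b in zip(q, db))


def euclidean(q, db):
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(q, db)))


def canberra(q, db):
    # Skip bins where both features are zero to avoid 0 / 0
    return sum(abs(b - a) / (abs(a) + abs(b)) for a, b in zip(q, db) if abs(a) + abs(b) > 0)


def d1(q, db):
    # |f_DB,i - f_Q,i| / (1 + f_DB,i + f_Q,i) summed over the Lg features;
    # assumes non-negative (histogram) features so the denominator stays positive
    return sum(abs(b - a) / (1 + a + b) for a, b in zip(q, db))


def top_n(query, database, n, distance=d1):
    """Indices of the n database feature vectors closest to `query`."""
    ranked = sorted(range(len(database)), key=lambda j: distance(query, database[j]))
    return ranked[:n]


database = [[5, 5], [1, 0], [0, 0]]
print(top_n([0, 0], database, 2))  # [2, 1]
```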

Experimental results and discussion

The performance of the proposed method is analyzed by conducting three experiments on benchmark databases: Corel-1K [45], Corel-5K [45] and MIT VisTex [46].

In all experiments, each image in the database is used as the query image. For each query, the system collects the n database images \( X = (x_{1}, x_{2}, \ldots, x_{n}) \) with the shortest image matching distance computed using Eq. (20). If a retrieved image \( x_{i}, i = 1, 2, \ldots, n \), belongs to the same category as the query image, the system is said to have appropriately identified the expected image; otherwise, the system has failed to find the expected image.

The performance of the proposed method is measured in terms of average precision/average retrieval precision (ARP), average recall/average retrieval rate (ARR) as shown below:

For the query image \( I_{q} \), the precision is defined as follows:
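A minimal sketch of the standard precision and recall definitions and their averages over all queries (ARP and ARR), assuming that every database image is used once as a query and that all images of the query's category, including the query itself, count as relevant. The function names are illustrative.

```python
def precision_recall(query_label, retrieved_labels, relevant_total):
    """Precision and recall for one query from the labels of its n retrieved images."""
    hits = sum(1 for label in retrieved_labels if label == query_label)
    return hits / len(retrieved_labels), hits / relevant_total


def arp_arr(labels, rankings, n):
    """ARP and ARR over all queries.

    labels[j]: category of database image j
    rankings[q]: database indices sorted by increasing distance to query q
    n: number of retrieved images per query
    """
    precisions, recalls = [], []
    for q, ranked in enumerate(rankings):
        retrieved = [labels[j] for j in ranked[:n]]
        relevant_total = labels.count(labels[q])  # includes the query image itself
        p, r = precision_recall(labels[q], retrieved, relevant_total)
        precisions.append(p)
        recalls.append(r)
    return sum(precisions) / len(precisions), sum(recalls) / len(recalls)


labels = ["a", "a", "b", "b"]
rankings = [[0, 1, 2, 3], [1, 2, 0, 3], [2, 3, 0, 1], [3, 0, 2, 1]]
print(arp_arr(labels, rankings, n=2))  # (0.75, 0.75)
```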

Experiment #1

In this experiment, the Corel-1K database [45] is used. This database consists of a large number of images of various contents, ranging from animals to outdoor sports to natural images. These images have been pre-classified by domain professionals into different categories of 100 images each. Some researchers consider that the Corel database meets all the requirements for evaluating an image retrieval system, owing to its large size and heterogeneous content. For experimentation, we selected 1000 images collected from 10 different domains, with 100 images per domain. The performance of the proposed method is measured in terms of ARP and ARR as shown in Eqs. (21)–(24). Figure 5 illustrates sample images from the Corel-1K database.

Table 1 and Fig. 6 illustrate the retrieval results of the proposed method and other existing methods in terms of ARP on the Corel-1K database. Table 2 and Fig. 7 illustrate the retrieval results of the proposed method and other existing methods in terms of ARR on the Corel-1K database. From Tables 1 and 2 and Figs. 6 and 7, it is clear that the proposed method shows a significant improvement over other existing methods in terms of precision, ARP, recall and ARR on the Corel-1K database. Figure 8a, b illustrate the analysis of the proposed method (LQEP) with various similarity distance measures on the Corel-1K database in terms of ARP and ARR, respectively. From Fig. 8, it is observed that the d1 distance measure outperforms the other distance measures in terms of ARP and ARR on the Corel-1K database. Figure 9 illustrates the query results of the proposed method on the Corel-1K database.

Experiment #2

In this experiment, the Corel-5K database [45] is used for image retrieval. The Corel-5K database consists of 5000 images collected from 50 different domains, with 100 images per domain. The performance of the proposed method is measured in terms of ARP and ARR as shown in Eqs. (21)–(24).

Table 3 illustrates the retrieval results of the proposed method and other existing methods on the Corel-5K database in terms of precision and recall. Figure 10a, b show the category-wise performance of the methods in terms of precision and recall on the Corel-5K database. The performance of all techniques in terms of ARP and ARR on the Corel-5K database can be seen in Fig. 10c, d, respectively. From Table 3 and Fig. 10, it is clear that the proposed method shows a significant improvement over other existing methods in terms of these evaluation measures on the Corel-5K database. The performance of the proposed method is also analyzed with various distance measures on the Corel-5K database, as shown in Fig. 11. From Fig. 11, it is observed that the d1 distance measure outperforms the other distance measures in terms of ARP and ARR on the Corel-5K database. Figure 12 illustrates the query results of the proposed method on the Corel-5K database.

Experiment #3

In this experiment, the MIT VisTex database, which consists of 40 different textures [46], is considered. Each texture of size 512 × 512 is divided into sixteen 128 × 128 non-overlapping sub-images, creating a database of 640 (40 × 16) images. In this experiment, each image in the database is used as the query image. The average retrieval recall, or average retrieval rate (ARR), given in Eq. (24) is the benchmark for comparing the results of this experiment.
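Building the VisTex query set amounts to simple tiling: a 512 × 512 texture cut into 128 × 128 blocks gives (512/128)² = 16 sub-images, and 40 textures give 640 images, each labeled with its source texture for relevance judgments. A minimal sketch, with a synthetic array standing in for real VisTex data and an illustrative function name:

```python
def split_into_tiles(texture, tile=128):
    """Cut an image (list of rows) into non-overlapping tile x tile sub-images.

    Assumes both image dimensions are multiples of `tile`; tiles are returned
    in raster order (left to right, top to bottom).
    """
    rows, cols = len(texture), len(texture[0])
    return [[row[c0:c0 + tile] for row in texture[r0:r0 + tile]]
            for r0 in range(0, rows, tile)
            for c0 in range(0, cols, tile)]


texture = [[(r * 512 + c) % 256 for c in range(512)] for r in range(512)]
tiles = split_into_tiles(texture)
print(len(tiles), len(tiles[0]), len(tiles[0][0]))  # 16 128 128
print(40 * len(tiles))  # 640 images for the 40 VisTex textures
```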

Figures 13, 14 illustrate the performance of various methods in terms of ARR and ARP on MIT VisTex database. From Figs. 13, 14, it is clear that the proposed method shows a significant improvement as compared to other existing methods in terms of ARR and ARP on MIT VisTex database. Figure 15 illustrates the performance of proposed method with different similarity distance measures in terms of ARR on MIT VisTex database. From Fig. 15, it is observed that d1 distance measure outperforms the other distance measures in terms of ARR on MIT VisTex database. Figure 16 illustrates the query results of proposed method on MIT VisTex database.

Conclusions

A new feature descriptor, named local quantized extrema patterns (LQEP), is proposed in this paper for natural and texture image retrieval. The proposed method integrates the concepts of local quantization geometric structures and local extrema for extracting features from a query/database image for retrieval. Further, the performance of the proposed method is improved by combining it with the standard RGB histogram. The performance of the proposed method is tested by conducting three experiments on benchmark image databases, and the retrieval results show a significant improvement in terms of their evaluation measures compared with other existing methods on the respective databases.

References

Aura C, Castro EMMM (2003) Image mining by content. Expert Syst Appl 23:377–383

Subrahmanyam M, Maheshwari RP, Balasubramanian R (2012) Expert system design using wavelet and color vocabulary trees for image retrieval. Expert Syst Appl 39:5104–5114

Vipparthi SK, Nagar SK (2014) Expert image retrieval system using directional local motif XoR patterns. Expert Syst Appl 41(17):8016–8026

Rui Y, Huang TS (1999) Image retrieval: current techniques, promising directions and open issues. J Vis Commun Image Represent 10:39–62

Smeulders AWM, Worring M, Santini S, Gupta A, Jain R (2000) Content-based image retrieval at the end of the early years. IEEE Trans Pattern Anal Mach Intell 22(12):1349–1380

Swain MJ, Ballard DH (1991) Color Indexing. Int J Comput Vision 7:11–32

Stricker M, Orengo M (1995) Similarity of color images. In: Proceedings SPIE Storage and Retrieval for Image and Video Databases III, San Jose, Wayne Niblack, pp 381–392

Idris F, Panchanathan S (1997) Image and video indexing using vector quantization. Mach Vis Appl 10:43–50

Lu ZM, Burkhardt H (2005) Colour image retrieval based on DCT domain vector quantisation index histograms. Electron Lett 41:956–957

Qiu G (2003) Color image indexing using BTC. IEEE Trans Image Process 12:93–101

Huang J, Kumar S, Mitra M, Zhu W, Zabih R (1997) Image indexing using color correlograms. In: Proceedings Computer Vision and Pattern Recognition, San Juan, Puerto Rico, pp 762–768

Pass G, Zabih R (1996) Histogram refinement for content-based image retrieval. In: Proceedings IEEE Workshop on Applications of Computer Vision, 1996, pp 96–102

Rao A, Srihari RK, Zhang Z (1999) Spatial color histograms for content-based image retrieval. In: Proceedings of the Eleventh IEEE International Conference on Tools with Artificial Intelligence, Chicago, IL, USA 1999, pp 183–187

Chang MH, Pyun JY, Ahmad MB, Chun JH, Park JA (2005) Modified color co-occurrence matrix for image retrieval. Lect Notes Comput Sci 3611:43–50

Smith JR, Chang SF (1996) Automated binary texture feature sets for image retrieval. In: Proceedings IEEE International Conference on Acoustics, Speech and Signal Processing, Columbia Univ., New York, pp 2239–2242

Moghaddam HA, Khajoie TT, Rouhi AH (2003) A new algorithm for image indexing and retrieval using wavelet correlogram. In: International Conference on Image Processing, K.N. Toosi Univ. of Technol., Tehran, Iran, vol. 2, pp 497–500

Ahmadian A, Mostafa A (2003) An efficient texture classification algorithm using Gabor wavelet. In: 25th Annual international conf. of the IEEE EMBS, Cancun, Mexico, pp 930–933

Murala S, Maheshwari RP, Balasubramanian R (2011) A correlogram algorithm for image indexing and retrieval using wavelet and rotated wavelet filters. Int J Signal Imaging Syst Eng 4(1):27–34

Ojala T, Pietikainen M, Harwood D (1996) A comparative study of texture measures with classification based on feature distributions. J Pattern Recogn 29(1):51–59

Ojala T, Pietikainen M, Maenpaa T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 24(7):971–987

Pietikainen M, Ojala T, Scruggs T, Bowyer KW, Jin C, Hoffman K, Marques J, Jacsik M, Worek W (2000) Overview of the face recognition using feature distributions. J Pattern Recogn 33(1):43–52

Ahonen T, Hadid A, Pietikainen M (2006) Face description with local binary patterns: applications to face recognition. IEEE Trans Pattern Anal Mach Intell 28(12):2037–2041

Zhao G, Pietikainen M (2007) Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans Pattern Anal Mach Intell 29(6):915–928

Heikkila M, Pietikainen M (2006) A texture based method for modeling the background and detecting moving objects. IEEE Trans Pattern Anal Mach Intell 28(4):657–662

Huang X, Li SZ, Wang Y (2004) Shape localization based on statistical method using extended local binary patterns. In: Proceedings of International Conference on Image and Graphics, pp 184–187

Heikkila M, Pietikainen M, Schmid C (2009) Description of interest regions with local binary patterns. J Pattern recognition 42:425–436

Li M, Staunton RC (2008) Optimum Gabor filter design and local binary patterns for texture segmentation. J Pattern Recogn 29:664–672

Zhang B, Gao Y, Zhao S, Liu J (2010) Local derivative pattern versus local binary pattern: Face recognition with higher-order local pattern descriptor. IEEE Trans Image Proc 19(2):533–544

Takala V, Ahonen T, Pietikainen M (2005) Block-based methods for image retrieval using local binary patterns. In: SCIA 2005, LNCS 3450, pp 882–891

Heikkila M, Pietikainen M, Schmid C (2009) Description of interest regions with local binary patterns. Pattern Recogn 42:425–436

Yao CH, Chen SY (2003) Retrieval of translated, rotated and scaled color textures. Pattern Recogn 36:913–929

Tan X, Triggs B (2010) Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 19(6):1635–1650

Murala S, Maheshwari RP, Balasubramanian R (2012) Local maximum edge binary patterns: a new descriptor for image retrieval and object tracking. Signal Process 92:1467–1479

Murala S, Maheshwari RP, Balasubramanian R (2012) Local tetra patterns: a new feature descriptor for content based image retrieval. IEEE Trans Image Processing 21(5):2874–2886

Murala S, Maheshwari RP, Balasubramanian R (2012) Directional local extrema patterns: a new descriptor for content based image retrieval. Int J Multimedia Inf Retrieval 1(3):191–203

Murala S, Maheshwari RP, Balasubramanian R (2012) Directional binary wavelet patterns for biomedical image indexing and retrieval. J Med Syst 36(5):2865–2879

Murala S, Jonathan Wu QM (2013) Local mesh patterns versus local Binary patterns: biomedical image indexing and retrieval. IEEE J Biomed Health Inform 18(3):929–938

Murala S, Jonathan Wu QM (2013) Local ternary co-occurrence patterns: a new feature descriptor for MRI and CT image retrieval. Neurocomputing 119(7):399–412

Reddy PVB, Reddy ARM (2014) Content based image indexing and retrieval using directional local extrema and magnitude patterns. Int J Electron Commun (AEÜ) 68(7):637–643

ul Hussain S, Triggs B (2012) Visual Recognition Using Local Quantized Patterns. ECCV 2012, Part II, LNCS 7573, Italy, pp 716–729

Jhanwar N, Chaudhuri S, Seetharaman G, Zavidovique B (2004) Content-based image retrieval using motif co-occurrence matrix. Image Vision Comput 22:1211–1220

Lin CH, Chen RT, Chan YKA (2009) Smart content-based image retrieval system based on color and texture feature. Image Vision Comput 27:658–665

Vadivel A, Sural S, Majumdar AK (2007) An integrated color and intensity co-occurrence matrix. Pattern Recognit Lett 28:974–983

Vipparthi SK, Nagar SK (2014) Color directional local quinary patterns for content based indexing and retrieval. Human Centric Comp Inform Sci 4:6. doi:10.1186/s13673-014-0006-x

Corel 1000 and Corel 10000 image database. [Online]. Available: http://wang.ist.psu.edu/docs/related.shtml

MIT Vision and Modeling Group, Vision Texture. [Online]. Available: http://vismod.media.mit.edu/pub/

Authors’ contributions

All authors contributed equally to the current research work. All authors read and approved the final manuscript.

Acknowledgements

The authors declare that they have no acknowledgements for the current research work.

Compliance with ethical guidelines

Competing interests: The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Koteswara Rao, L., Venkata Rao, D. Local quantized extrema patterns for content-based natural and texture image retrieval. Hum. Cent. Comput. Inf. Sci. 5, 26 (2015). https://doi.org/10.1186/s13673-015-0044-z


DOI: https://doi.org/10.1186/s13673-015-0044-z