Abstract

Let E be a real normed space with dual space \(E^{*}\) and let \(A:E\rightarrow2^{E^{*}}\) be any map. Let \(J:E\rightarrow2^{E^{*}}\) be the normalized duality map on E. A new class of mappings, J-pseudocontractive maps, is introduced and the notion of J-fixed points is used to prove that \(T:=(J-A)\) is J-pseudocontractive if and only if A is monotone. In the case that E is a uniformly convex and uniformly smooth real Banach space with dual \(E^{*}\), \(T: E\rightarrow2^{E^{*}}\) is a bounded J-pseudocontractive map with a nonempty J-fixed point set, and \(J-T :E\rightarrow2^{E^{*}}\) is maximal monotone, a sequence is constructed which converges strongly to a J-fixed point of T. As an immediate consequence of this result, an analog of a recent important result of Chidume for bounded m-accretive maps is obtained in the case that \(A:E\rightarrow2^{E^{*}}\) is bounded maximal monotone, a result which complements the proximal point algorithm of Martinet and Rockafellar. Furthermore, this analog is applied to approximate solutions of Hammerstein integral equations and is also applied to convex optimization problems. Finally, the techniques of the proofs are of independent interest.


1 Introduction

Let H be a real inner product space. A map \(A:H\rightarrow2^{H}\) is called monotone if for each \(x,y\in H\),

\[\langle\eta-\nu, x-y\rangle\ge0\quad\forall \eta\in Ax, \nu\in Ay. \tag{1.1}\]

Monotone mappings were first studied in Hilbert spaces by Zarantonello [1], Minty [2], Kačurovskii [3] and a host of other authors. Interest in such mappings stems mainly from their usefulness in applications. In particular, monotone mappings appear in convex optimization theory. Consider, for example, the following. Let \(g:H\rightarrow\mathbb{R}\cup\{\infty\}\) be a proper convex function. The subdifferential of g, \(\partial g:H\rightarrow2^{H}\), is defined for each \(x\in H\) by

\[\partial g(x)= \bigl\{x^{*}\in H: g(y)-g(x)\ge\langle y-x,x^{*}\rangle\ \forall y\in H \bigr\}.\]

It is easy to check that ∂g is a monotone operator on H, and that \(0\in\partial g(u)\) if and only if u is a minimizer of g. Setting \(\partial g\equiv A\), it follows that solving the inclusion \(0\in Au\), in this case, is solving for a minimizer of g.

Furthermore, the equation \(0\in Au\) when A is a monotone map from a real Hilbert space to itself also appears in evolution systems. Consider the evolution equation \(\frac{du}{dt} + Au=0\) where A is a monotone map from a real Hilbert space to itself. At an equilibrium state, \(\frac{du}{dt}=0\) so that \(Au=0\), whose solutions correspond to the equilibrium state of the dynamical system.

In particular, consider the following diffusion equation:

where Ω is an open subset of \({\mathbb{R}}^{n}\).

By a simple transformation, i.e., by setting \(v(t)=u(t,\cdot)\), where

is defined by \(v(t)(x)=u(t,x)\) and \(f(\varphi)(x)=g(\varphi(x))\), where

we see that equation (1.2) is equivalent to

where A is a nonlinear monotone-type mapping defined on \(L_{2}(\Omega )\). Setting f to be identically zero, at an equilibrium state (i.e., when the system becomes independent of time) we see that equation (1.3) reduces to

Thus, approximating zeros of equation (1.4) is equivalent to the approximation of solutions of the diffusion equation (1.2) at equilibrium state.

The notion of monotone mapping has been extended to real normed spaces. We now briefly examine two well-studied extensions of Hilbert space monotonicity to arbitrary normed spaces.

1.1 Accretive-type mappings

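Let E be a real normed space with dual space \(E^{*}\). A map \(J:E\rightarrow2^{E^{*}}\) defined by

\[Jx:= \bigl\{x^{*}\in E^{*}: \langle x,x^{*}\rangle=\|x\|\cdot\|x^{*}\|, \|x\|=\|x^{*}\| \bigr\}\]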

is called the normalized duality map on E. With \(J^{-1}=J^{*}\), we have \(JJ^{*}=I_{E^{*}}\) and \(J^{*}J =I_{E}\), where \(I_{E}\) and \(I_{E^{*}}\) are the identity mappings on E and \(E^{*}\), respectively.

A map \(A:E\rightarrow2^{E}\) is called accretive if for each \(x,y\in E\), there exists \(j(x-y)\in J(x-y)\) such that

\[\bigl\langle\eta-\nu, j(x-y)\bigr\rangle\ge0\quad\forall \eta\in Ax, \nu\in Ay. \tag{1.5}\]

A is called m-accretive if, in addition, the graph of A is not properly contained in the graph of any other accretive operator. It is m-accretive if and only if A is accretive and \(R(I+tA)=E\) for all \(t>0\).

In a Hilbert space, the normalized duality map is the identity map, and so, in this case, inequality (1.5) and inequality (1.1) coincide. Hence, accretivity is one extension of Hilbert space monotonicity to general normed spaces.

Accretive operators have been studied extensively by numerous mathematicians (see, e.g., the following monographs: Berinde [4], Browder [5], Chidume [6], Reich [7], and the references therein).

1.2 Monotone-type mappings in arbitrary normed spaces

Let E be a real normed space with dual \(E^{*}\). A map \(A:E\rightarrow 2^{E^{*}}\) is called monotone if for each \(x,y\in E\), the following inequality holds:

\[\langle x-y, \eta-\nu\rangle\ge0\quad\forall \eta\in Ax, \nu\in Ay. \tag{1.6}\]

It is called maximal monotone if, in addition, the graph of A is not properly contained in the graph of any other monotone operator. Also, A is maximal monotone if and only if it is monotone and \(R(J+tA)=E^{*}\) for all \(t>0\).

It is obvious that monotonicity of a map defined from a normed space to its dual is another extension of Hilbert space monotonicity to general normed spaces.

"The extension of the monotonicity condition from a Banach space into its dual has been the starting point for the development of nonlinear functional analysis…. The monotone mappings appear in a rather wide variety of contexts, since they can be found in many functional equations. Many of them appear also in calculus of variations, as subdifferential of convex functions" (Pascali and Sburlan [8], p.101).

Accretive mappings were introduced independently in 1967 by Browder [5] and Kato [9]. Interest in such mappings stems mainly from their firm connection with the existence theory for nonlinear equations of evolution in real Banach spaces. It is known (see, e.g., Zeidler [10]) that many physically significant problems can be modeled in terms of an initial-value problem of the form

where A is a multi-valued accretive map on an appropriate real Banach space. Typical examples of such evolution equations are found in models involving the heat, wave or Schrödinger equations (see, e.g., Browder [11], Zeidler [10]). Observe that in the model (1.7), if the solution u is independent of time (i.e., at the equilibrium state of the system), then \(\frac{du}{dt} = 0\) and (1.7) reduces to

\[0\in Au, \tag{1.8}\]

whose solutions then correspond to the equilibrium state of the system described by (1.7). Solutions of equation (1.8) can also represent solutions of partial differential equations (see, e.g., Benilan et al. [12], Khatibzadeh and Moroşanu [13], Khatibzadeh and Shokri [14], Showalter [15], Volpert [16], and so on).

In studying the equation \(0\in Au\), where A is a multi-valued accretive operator on a Hilbert space H, Browder introduced an operator T defined by \(T:= I-A\) where I is the identity map on H. He called such an operator pseudocontractive. It is clear that solutions of \(0\in Au\), if they exist, correspond to fixed points of T.

Within the past 35 years or so, methods for approximating solutions of equation (1.8) when A is an accretive-type operator have become a flourishing area of research for numerous mathematicians. Numerous convergence theorems have been published in various Banach spaces and under various continuity assumptions. Many important results have been proved, thanks to geometric properties of Banach spaces developed from the mid-1980s to the early 1990s. The theory of approximation of solutions of the equation when A is of the accretive-type reached a level of maturity appropriate for an examination of its central themes. This resulted in the publication of several monographs which presented in-depth coverage of the main ideas, concepts, and most important results on iterative algorithms for approximation of fixed points of nonexpansive and pseudocontractive mappings and their generalizations; approximation of zeros of accretive-type operators; iterative algorithms for solutions of Hammerstein integral equations involving accretive-type mappings; iterative approximation of common fixed points (and common zeros) of families of these mappings; solutions of equilibrium problems; and so on (see, e.g., Agarwal et al. [17]; Berinde [4]; Chidume [6]; Reich [18]; Censor and Reich [19]; William and Shahzad [20], and the references therein). Typical of the results proved for solutions of equation (1.8) is the following theorem.

Theorem 1.1

(Chidume [21])

Let E be a uniformly smooth real Banach space with modulus of smoothness \(\rho_{E}\), and let \(A:E\rightarrow2^{E}\) be a multi-valued bounded m-accretive operator with \(D(A)=E\) such that the inclusion \(0\in Au\) has a solution. For arbitrary \(x_{1}\in E\), define a sequence \(\{x_{n}\}\) by

where \(\{\lambda_{n}\}\) and \(\{\theta_{n}\}\) are sequences in \((0,1)\) satisfying the following conditions:

- (i) \(\lim_{n\rightarrow\infty}\theta_{n} =0\), \(\{\theta_{n}\}\) is decreasing;

- (ii) \(\sum\lambda_{n}\theta_{n} = \infty\); \(\sum\rho_{E}(\lambda_{n}M_{1})<\infty\), for some constant \(M_{1} > 0\);

- (iii) \(\lim_{n\rightarrow\infty} \frac{ [\frac{\theta _{n-1}}{\theta _{n}}-1 ]}{\lambda_{n}\theta_{n}}=0\).

There exists a constant \(\gamma_{0} > 0\) such that \(\frac{\rho _{E}(\lambda_{n})}{\lambda_{n}}\leq\gamma_{0}\theta_{n}\). Then the sequence \(\{x_{n}\}\) converges strongly to a zero of A.

Unfortunately, developing algorithms for approximating solutions of equations of type (1.8) when \(A:E\rightarrow2^{E^{*}}\) is of monotone type has not been very fruitful. Part of the difficulty seems to be that all efforts made to apply directly the geometric properties of Banach spaces developed from the mid 1980s to the early 1990s proved abortive. Furthermore, the technique of converting the inclusion (1.8) into a fixed point problem for \(T:= I-A : E\rightarrow E\) is not applicable since, in this case when A is monotone, A maps E into \(E^{*}\), and the identity map does not make sense.

Fortunately, Alber [22] (see also, Alber and Ryazantseva [23]) recently introduced a Lyapunov functional \(\phi:E\times E\rightarrow\mathbb{R}\), which signaled the beginning of the development of new geometric properties of Banach spaces which are appropriate for studying iterative methods for approximating solutions of (1.8) when \(A:E\rightarrow2^{E^{*}}\) is of monotone type. Geometric properties so far obtained have rekindled enormous research interest in iterative methods for approximating solutions of equation (1.8) where A is of monotone type, and other related problems (see, e.g., Alber [22]; Alber and Guerre-Delabriere [24]; Chidume [21, 25]; Chidume et al. [26]; Diop et al. [27]; Moudafi [28]; Moudafi and Tera [29]; Reich [30]; Sow et al. [31]; Takahashi [32]; Zegeye [33] and the references therein).

It is our purpose in this paper to apply the notion of J-fixed points (which has also been called a semi-fixed point (see, e.g., Zegeye [33]) and a duality fixed point (see, e.g., Liu [34])), together with a class of mappings which is new as far as we know, the J-pseudocontractive maps introduced here, to prove that \(T:=(J-A)\) is J-pseudocontractive if and only if A is monotone. Moreover, in the case that E is a uniformly convex and uniformly smooth real Banach space with dual \(E^{*}\), \(T: E\rightarrow2^{E^{*}}\) is a bounded J-pseudocontractive map with a nonempty J-fixed point set, and \(J-T :E\rightarrow2^{E^{*}}\) is maximal monotone, a sequence is constructed which converges strongly to a J-fixed point of T. As an immediate application of this result, an analog of Theorem 1.1 for bounded maximal monotone maps is obtained. This analog also complements the proximal point algorithm of Martinet [35] and Rockafellar [36], which has been studied by numerous authors (see, e.g., Bruck [37]; Chidume [38]; Chidume [21]; Chidume and Djitte [39]; Kamimura and Takahashi [40]; Lehdili and Moudafi [41]; Reich [42]; Reich and Sabach [43, 44]; Solodov and Svaiter [45]; Xu [46] and the references therein). Furthermore, this analog is applied to approximate solutions of Hammerstein integral equations and is also applied to convex optimization problems. Finally, our techniques of proof are of independent interest.

2 Preliminaries

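Let E be a real normed linear space of dimension ≥2. The modulus of smoothness of E, \(\rho_{E}:[0,\infty )\rightarrow[0,\infty)\), is defined by

\[\rho_{E}(\tau):=\sup \biggl\{ \frac{\|x+y\|+\|x-y\|}{2}-1: \|x\|=1, \|y\|=\tau \biggr\},\quad\tau>0.\]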

A normed linear space E is called uniformly smooth if

\[\lim_{\tau\rightarrow0^{+}}\frac{\rho_{E}(\tau)}{\tau}=0.\]

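It is well known (see, e.g., Chidume [6], p.16, also Lindenstrauss and Tzafriri [47]) that \(\rho_{E}\) is nondecreasing. If there exist a constant \(c>0\) and a real number \(q>1\) such that \(\rho_{E}(\tau)\leq c\tau^{q}\), then E is said to be q-uniformly smooth. Typical examples of such spaces are the \(L_{p}\), \(\ell_{p}\), and \(W^{m}_{p}\) spaces for \(1< p<\infty\) where

\[L_{p}\ (\mbox{or } \ell_{p}) \mbox{ or } W^{m}_{p} \mbox{ is } \begin{cases} 2\mbox{-uniformly smooth} & \mbox{if } 2\leq p< \infty, \\ p\mbox{-uniformly smooth} & \mbox{if } 1< p< 2. \end{cases}\]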

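A Banach space E is said to be strictly convex if

\[\|x\|=\|y\|=1,\quad x\neq y \quad\Longrightarrow\quad \biggl\Vert \frac{x+y}{2} \biggr\Vert < 1.\]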

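The modulus of convexity of E is the function \(\delta _{E}:(0,2]\rightarrow[0,1]\) defined by

\[\delta_{E}(\epsilon):=\inf \biggl\{ 1- \biggl\Vert \frac{x+y}{2} \biggr\Vert : \|x\|=\|y\|=1, \|x-y\|=\epsilon \biggr\}.\]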

The space E is uniformly convex if and only if \(\delta _{E}(\epsilon)>0\) for every \(\epsilon\in(0,2]\). It is also well known (see e.g., Chidume [6], p.34, Lindenstrauss and Tzafriri [47]) that \(\delta_{E}\) is nondecreasing. If there exist a constant \(c>0\) and a real number \(p>1\) such that \(\delta_{E}(\epsilon)\ge c\epsilon^{p}\), then E is said to be p-uniformly convex. Typical examples of such spaces are the \(L_{p}\), \(\ell_{p}\), and \(W^{m}_{p}\) spaces for \(1< p<\infty\) where

\[L_{p}\ (\mbox{or } \ell_{p}) \mbox{ or } W^{m}_{p} \mbox{ is } \begin{cases} p\mbox{-uniformly convex} & \mbox{if } 2\leq p< \infty, \\ 2\mbox{-uniformly convex} & \mbox{if } 1< p< 2. \end{cases}\]

The norm of E is said to be Fréchet differentiable if, for each \(x\in S:= \{u\in E: \|u\|=1\}\), the limit

\[\lim_{t\rightarrow0}\frac{\|x+ty\|-\|x\|}{t}\]

exists and is attained uniformly for \(y\in E\).

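For \(q>1\), let \(J_{q}\) denote the generalized duality mapping from E to \(2^{E^{\ast}}\) defined by

\[J_{q}(x):= \bigl\{ f\in E^{*}: \langle x,f\rangle=\|x\|^{q} \mbox{ and } \|f\|=\|x\|^{q-1} \bigr\},\]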

where \(\langle\cdot,\cdot\rangle\) denotes the generalized duality pairing. \(J_{2}\) is called the normalized duality mapping and is denoted by J. It is well known that if E is smooth, then \(J_{q}\) is single-valued.

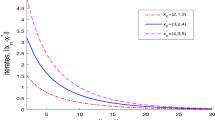

In the sequel, we shall need the following definitions and results. Let E be a smooth real Banach space with dual \(E^{*}\). The Lyapunov functional \(\phi:E\times E\to\mathbb{R}\), defined by

\[\phi(x,y)=\|x\|^{2}-2\langle x,Jy\rangle+\|y\|^{2}\quad\mbox{for } x,y\in E, \tag{2.1}\]

where J is the normalized duality mapping from E into \(E^{*}\) will play a central role in the sequel. It was introduced by Alber and has been studied by Alber [22], Alber and Guerre-Delabriere [24], Kamimura and Takahashi [48], Reich [18], and a host of other authors. If \(E=H\), a real Hilbert space, then equation (2.1) reduces to \(\phi(x,y)=\|x-y\|^{2}\) for \(x,y\in H\). It is obvious from the definition of the function ϕ that

\[\bigl(\|x\|-\|y\|\bigr)^{2}\le\phi(x,y)\le\bigl(\|x\|+\|y\|\bigr)^{2}\quad\mbox{for } x,y\in E.\]

Define a map \(V:X\times X^{*}\to\mathbb{R}\) by

\[V\bigl(x,x^{*}\bigr)=\|x\|^{2}-2\bigl\langle x,x^{*}\bigr\rangle+\bigl\|x^{*}\bigr\|^{2}.\]

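Then it is easy to see that

\[V\bigl(x,x^{*}\bigr)=\phi\bigl(x,J^{-1}\bigl(x^{*}\bigr)\bigr)\quad\forall x\in X, x^{*}\in X^{*}.\]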

Lemma 2.1

(Alber and Ryazantseva [23])

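Let X be a reflexive strictly convex and smooth Banach space with \(X^{*}\) as its dual. Then

\[V\bigl(x,x^{*}\bigr)+2\bigl\langle J^{-1}x^{*}-x,y^{*}\bigr\rangle\le V\bigl(x,x^{*}+y^{*}\bigr) \tag{2.5}\]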

for all \(x\in X\) and \(x^{*},y^{*}\in X^{*}\).
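The definitions of ϕ and V, and the inequality of Lemma 2.1, can be checked numerically in a finite-dimensional model. The sketch below is only an illustration under stated assumptions: it works in \(\mathbb{R}^{n}\) with the p-norm (a stand-in for \(\ell_{p}\)), uses the closed form of J on that space, takes \(\phi(x,y)=\|x\|^{2}-2\langle x,Jy\rangle+\|y\|^{2}\) and \(V(x,x^{*})=\|x\|^{2}-2\langle x,x^{*}\rangle+\|x^{*}\|^{2}\), and assumes Lemma 2.1 has the form \(V(x,x^{*})+2\langle J^{-1}x^{*}-x,y^{*}\rangle\le V(x,x^{*}+y^{*})\). The function names are hypothetical.

```python
import numpy as np

def norm_p(x, p):
    """p-norm of a vector in R^n."""
    return float(np.linalg.norm(x, ord=p))

def duality_map(x, p):
    """Normalized duality map on (R^n, ||.||_p), 1 < p < infinity."""
    n = norm_p(x, p)
    if n == 0.0:
        return np.zeros_like(x)
    return n ** (2.0 - p) * np.abs(x) ** (p - 1.0) * np.sign(x)

def phi(x, y, p):
    """Lyapunov functional phi(x, y) = ||x||^2 - 2<x, Jy> + ||y||^2."""
    return norm_p(x, p) ** 2 - 2.0 * float(x @ duality_map(y, p)) + norm_p(y, p) ** 2

def V(x, x_star, p):
    """V(x, x*) = ||x||^2 - 2<x, x*> + ||x*||^2, with x* in the dual q-norm."""
    q = p / (p - 1.0)
    return norm_p(x, p) ** 2 - 2.0 * float(x @ x_star) + norm_p(x_star, q) ** 2

def lemma_gap(x, x_star, y_star, p):
    """V(x, x*+y*) - V(x, x*) - 2<J^{-1}x* - x, y*>.

    Nonnegative whenever the assumed form of the Lemma 2.1 inequality holds.
    """
    q = p / (p - 1.0)
    j_inv = duality_map(x_star, q)  # J^{-1} is the duality map of the dual space
    return V(x, x_star + y_star, p) - V(x, x_star, p) - 2.0 * float((j_inv - x) @ y_star)

rng = np.random.default_rng(0)
x, y, x_star, y_star = (rng.standard_normal(5) for _ in range(4))
p = 3.0
print(phi(x, y, p), V(x, duality_map(y, p), p))  # the two values should agree
print(lemma_gap(x, x_star, y_star, p))           # should be nonnegative
```

For \(p=2\) the map J is the identity and `phi(x, y, 2.0)` reduces to the squared Euclidean distance, in line with the Hilbert space remark above.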

Lemma 2.2

(Alber and Ryazantseva [23], p.50)

Let X be a reflexive strictly convex and smooth Banach space with \(X^{*}\) as its dual. Let \(W:X\times X\rightarrow\mathbb{R}^{1}\) be defined by \(W(x,y)=\frac {1}{2}\phi(y,x)\). Then

i.e.,

and also

for all \(x, y, z\in X\).

Lemma 2.3

(Alber and Ryazantseva [23], p.45)

Let X be a uniformly convex Banach space. Then, for any \(R>0\) and any \(x, y\in X\) such that \(\|x\|\le R\), \(\|y\|\le R\), the following inequality holds:

where \(c_{2}=2\max\{1,R\}\), \(1< L<1.7\).

Define

Lemma 2.4

(Alber and Ryazantseva [23], p.46)

Let X be a uniformly smooth and strictly convex Banach space. Then for any \(R>0\) and any \(x, y\in X\) such that \(\|x\|\le R\), \(\|y\|\le R\) the following inequality holds:

where \(c_{2}=2\max\{1,R\}\), \(1< L<1.7\).

Let \(E^{*}\) be a real strictly convex dual Banach space with a Fréchet differentiable norm. Let \(A:E\rightarrow2^{E^{*}}\) be a maximal monotone operator with no monotone extension. Let \(z\in E^{*}\) be fixed. Then for every \(\lambda>0\), there exists a unique \(x_{\lambda}\in E\) such that \(Jx_{\lambda}+\lambda Ax_{\lambda}\ni z\) (see Reich [7], p. 342). Setting \(J_{\lambda}z=x_{\lambda}\), we have the resolvent \(J_{\lambda}:=(J+\lambda A)^{-1} :E^{*}\rightarrow E\) of A for every \(\lambda>0\). The following is a celebrated result of Reich.

Lemma 2.5

(Reich, [7]; see also, Kido, [49])

Let \(E^{*}\) be a strictly convex dual Banach space with a Fréchet differentiable norm, and let A be a maximal monotone operator from E to \(E^{*}\) such that \(A^{-1}0\ne\emptyset\). Let \(z\in E^{*}\) be arbitrary but fixed. For each \(\lambda>0\) there exists a unique \(x_{\lambda}\in E\) such that \(Jx_{\lambda}+ \lambda Ax_{\lambda}\ni z\). Furthermore, \(x_{\lambda}\) converges strongly to a unique \(p\in A^{-1}0\).

Lemma 2.6

From Lemma 2.5, setting \(\lambda_{n}:=\frac{1}{\theta_{n}}\) where \(\theta_{n} \rightarrow0\) as \(n\rightarrow\infty\), \(z=Jv\) for some \(v\in E\), and \(y_{n}:= (J+\frac{1}{\theta_{n}}A )^{-1}z\), we obtain

where \(A:E\rightarrow E^{*}\) is maximal monotone.

Remark 1

Let \(R>0\) such that \(\|v\|\le R\), \(\|y_{n}\|\le R\) for all \(n\ge1\). We observe that equation (2.7) yields

Taking the duality pairing of the LHS of this equation with \(y_{n-1}-y_{n}\), applying Cauchy-Schwarz, and using (2.8), we obtain

It follows that if E is uniformly convex and uniformly smooth, using Lemma 2.3 we obtain

which gives, using equation (2.6),

Similarly, using Lemma 2.4, we obtain

Remark 2

In p-uniformly convex spaces, we have (see, e.g., Chidume [6], p.34), for some constant \(c>0\),

From inequality (2.9), using inequality (2.12), we obtain

which gives

Also, we have from Lemma 2.4 that

Again, using inequality (2.12), we obtain

which gives

Lemma 2.7

(Kamimura and Takahashi [48])

Let X be a real smooth and uniformly convex Banach space, and let \(\{ x_{n}\}\) and \(\{y_{n}\}\) be two sequences of X. If either \(\{x_{n}\}\) or \(\{ y_{n}\}\) is bounded and \(\phi(x_{n},y_{n})\to0\) as \(n\to\infty\), then \(\| x_{n}-y_{n}\| \to0\) as \(n\to\infty\).

Lemma 2.8

(Xu [50])

Let \(\{a_{n}\}_{n=1}^{\infty}\) be a sequence of non-negative real numbers satisfying the following relation:

\[a_{n+1}\le(1-\sigma_{n})a_{n}+\sigma_{n}b_{n}+c_{n},\quad n\ge1,\]

where \(\{\sigma_{n}\}_{n=0}^{\infty}\), \(\{b_{n}\}_{n=1}^{\infty}\), and \(\{ c_{n}\}_{n=1}^{\infty}\) satisfy the conditions:

- (i) \(\{\sigma_{n}\}_{n=1}^{\infty}\subset[0,1]\), \(\sum_{n=1}^{\infty}\sigma_{n}=\infty\), or equivalently, \(\prod_{n=1}^{\infty}(1-\sigma_{n})=0\);

- (ii) \(\limsup_{n\rightarrow\infty}b_{n}\le0\);

- (iii) \(c_{n}\ge0\) (\(n\ge0\)), \(\sum_{n=1}^{\infty}c_{n}<\infty\).

Then \(\lim_{n\rightarrow\infty}a_{n}=0\).
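To see how Lemma 2.8 behaves in practice, one can simulate the recurrence with equality for a concrete choice of sequences. The sketch below assumes the relation has the form \(a_{n+1}\le(1-\sigma_{n})a_{n}+\sigma_{n}b_{n}+c_{n}\); the particular sequences \(\sigma_{n}=1/(n+1)\), \(b_{n}=1/n\), \(c_{n}=1/n^{2}\) are illustrative choices satisfying (i)-(iii), not taken from the source.

```python
def simulate(num_steps, a1=1.0):
    """Iterate a_{n+1} = (1 - sigma_n) a_n + sigma_n b_n + c_n with equality.

    Illustrative sequences: sigma_n = 1/(n+1) (sum diverges),
    b_n = 1/n (limsup <= 0), c_n = 1/n^2 (summable).
    Returns a_{num_steps + 1}.
    """
    a = a1
    for n in range(1, num_steps + 1):
        sigma = 1.0 / (n + 1)
        b = 1.0 / n
        c = 1.0 / n ** 2
        a = (1.0 - sigma) * a + sigma * b + c
    return a

for steps in (10, 1000, 100000):
    print(steps, simulate(steps))  # values decrease toward 0 as steps grows
```

The decay is slow (roughly like \(\log n/n\) for this choice), which reflects that the lemma guarantees convergence to zero without giving a rate.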

Definition 2.9

(J-fixed point)

Let E be an arbitrary normed space and \(E^{*}\) be its dual. Let \(T:E\rightarrow2^{E^{*}}\) be any mapping. A point \(x\in E\) will be called a J-fixed point of T if and only if there exists \(\eta\in Tx\) such that \(\eta\in Jx\).

Remark 3

The notion of J-fixed points, as far as we know, was first introduced by Zegeye [33] who called a point \(x^{*}\in E\) such that \(Tx^{*}=Jx^{*}\), a semi-fixed point of T. Later, Liu [34] called such a point a duality fixed point of T.

3 Main results

We introduce the following definition.

Definition 3.1

(J-pseudocontractive mappings)

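Let E be a normed space. A mapping \(T:E\rightarrow2^{E^{*}}\) is called J-pseudocontractive if for every \(x, y\in E\),

\[\langle x-y,\tau_{x}-\tau_{y}\rangle\le\langle x-y,jx-jy\rangle\quad\mbox{for all } \tau_{x}\in Tx, \tau_{y}\in Ty, jx\in Jx, jy\in Jy.\]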

Example 1

If \(E=H\), a real Hilbert space, then J is the identity map on H. Consequently, every pseudocontractive map on H is J-pseudocontractive.

For our next example, we need the following characterization of the normalized duality map on \(l_{p}\), \(1< p<\infty\).

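In \(l_{p}\) spaces, \(1< p<\infty\), for arbitrary \(x\in l_{p}\), \(x=(x_{1},x_{2},x_{3},\ldots)\),

\[Jx=\|x\|_{l_{p}}^{2-p}y\in l_{q},\quad y= \bigl(|x_{1}|^{p-2}x_{1},|x_{2}|^{p-2}x_{2},\ldots \bigr),\quad \frac{1}{p}+\frac{1}{q}=1\]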

(see, e.g., Alber and Ryazantseva [23], p.36).
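The characterization of J on \(l_{p}\) can be tried out on finite vectors. The following is a minimal sketch, assuming the formula \(Jx=\|x\|_{l_{p}}^{2-p}(|x_{i}|^{p-2}x_{i})_{i}\) and truncating to \(\mathbb{R}^{n}\); the function name `duality_map_lp` is hypothetical. It checks the two defining properties \(\langle x,Jx\rangle=\|x\|^{2}\) and \(\|Jx\|_{q}=\|x\|_{p}\).

```python
import numpy as np

def duality_map_lp(x, p):
    """Normalized duality map on (R^n, ||.||_p) for 1 < p < infinity.

    Implements Jx = ||x||_p^(2-p) * (|x_i|^(p-2) x_i)_i, with J0 = 0.
    """
    x = np.asarray(x, dtype=float)
    norm = np.linalg.norm(x, ord=p)
    if norm == 0.0:
        return np.zeros_like(x)
    # |x_i|^(p-2) x_i is written as |x_i|^(p-1) sign(x_i) so zero entries are safe.
    return norm ** (2.0 - p) * np.abs(x) ** (p - 1.0) * np.sign(x)

p = 3.0
q = p / (p - 1.0)                      # conjugate exponent, 1/p + 1/q = 1
x = np.array([1.0, -2.0, 0.5, 0.0])
jx = duality_map_lp(x, p)

pairing = float(x @ jx)                # <x, Jx>
norm_x = np.linalg.norm(x, ord=p)      # ||x||_p
norm_jx = np.linalg.norm(jx, ord=q)    # ||Jx||_q
print(pairing, norm_x ** 2, norm_jx)
```

Applying `duality_map_lp(jx, q)` recovers `x`, which matches the relation \(J^{-1}=J^{*}\) in this setting.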

Example 2

Let \(1< q< p<\infty\) and let \(\lambda\in\mathbb{R}\) be arbitrary. Define \(T:l_{p}\rightarrow l_{q}\) by

Then (i) T is J-pseudocontractive, (ii) \(x_{\lambda}:=(\lambda,0,0,\ldots)\) is a J-fixed point of T.

Remark 4

We observe that, assuming existence, a zero of a monotone mapping \(A:E\rightarrow2^{E^{*}}\) corresponds to a J-fixed point of a J-pseudocontractive mapping, T.

The following lemma asserts that \(A:E\rightarrow2^{E^{*}}\) is monotone if and only if \(T:=(J-A):E\rightarrow2^{E^{*}}\) is J-pseudocontractive.

Lemma 3.2

Let E be an arbitrary real normed space and \(E^{*}\) be its dual space. Let \(A:E\rightarrow2^{E^{*}}\) be any mapping. Then A is monotone if and only if \(T:=(J-A): E\rightarrow2^{E^{*}}\) is J-pseudocontractive.

Proof

Let \(x, y\in E\) be arbitrary. Suppose A is monotone. Then, for every \(\mu_{x} \in Ax\), \(\mu_{y}\in Ay\), \(jx\in Jx\), \(jy\in Jy\), \(\tau _{x}\in Tx\), \(\tau_{y}\in Ty\), such that \(\tau_{x}=jx-\mu_{x}\), \(\tau _{y}=jy-\mu_{y}\), we have

Hence, T is J-pseudocontractive.

Conversely, suppose \(T:= (J-A)\) is J-pseudocontractive, we prove \(A:= J-T\) is monotone. For all \(x, y\in E\), let \(\mu_{x}\in Ax\), \(\mu_{y}\in Ay\). Then \(\mu_{x}=jx-\zeta_{x}\) and \(\mu_{y}=jy-\zeta_{y}\) for some \(\zeta _{x}\in Tx\), \(\zeta_{y}\in Ty\), \(jx\in Jx\), and \(jy\in Jy\). We have

Hence, A is monotone. □
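The two computations in the proof can be written out as follows. This is a sketch that assumes J-pseudocontractivity of T means \(\langle x-y,\tau_{x}-\tau_{y}\rangle\le\langle x-y,jx-jy\rangle\) for all selections, which is the form the argument requires; the notation is that of the proof.

```latex
% Forward direction: A monotone, \tau_x = jx - \mu_x, \tau_y = jy - \mu_y.
\begin{align*}
\langle x-y, \tau_x-\tau_y\rangle
  &= \langle x-y, jx-jy\rangle - \langle x-y, \mu_x-\mu_y\rangle \\
  &\le \langle x-y, jx-jy\rangle
  \qquad\text{since } \langle x-y, \mu_x-\mu_y\rangle \ge 0 .
\end{align*}

% Converse: T J-pseudocontractive, \mu_x = jx - \zeta_x, \mu_y = jy - \zeta_y.
\begin{align*}
\langle x-y, \mu_x-\mu_y\rangle
  &= \langle x-y, jx-jy\rangle - \langle x-y, \zeta_x-\zeta_y\rangle \\
  &\ge 0
  \qquad\text{since } \langle x-y, \zeta_x-\zeta_y\rangle \le \langle x-y, jx-jy\rangle .
\end{align*}
```

Both directions use only the linearity of the duality pairing, which is why the equivalence holds in an arbitrary real normed space.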

We now prove the following lemma, which will be crucial in the sequel.

Lemma 3.3

Let E be a smooth real Banach space with dual \(E^{*}\). Let \(\phi :E\times E\to\mathbb{R}\) be the Lyapunov functional. Then

Proof

Let \(x, y\in E\), we have

But,

so that

and substituting in (3.1), the result follows. □
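The kind of computation behind Lemma 3.3 can be illustrated directly from the definition of ϕ. The derivation below is a sketch under the assumption that \(\phi(x,y)=\|x\|^{2}-2\langle x,Jy\rangle+\|y\|^{2}\); it shows how \(\phi(x,y)\) and \(\phi(y,x)\) differ, and the identity it reaches may be arranged differently from the display in the lemma.

```latex
\begin{align*}
\phi(x,y) &= \|x\|^{2} - 2\langle x, Jy\rangle + \|y\|^{2}, \\
\phi(y,x) &= \|y\|^{2} - 2\langle y, Jx\rangle + \|x\|^{2}, \\
\phi(x,y) - \phi(y,x) &= 2\langle y, Jx\rangle - 2\langle x, Jy\rangle .
\end{align*}
% Equivalent form, using <x, Jx> = ||x||^2 and <y, Jy> = ||y||^2:
\begin{align*}
\phi(y,x) &= \phi(x,y) - 2\langle x+y, Jx-Jy\rangle + 2\bigl(\|x\|^{2}-\|y\|^{2}\bigr).
\end{align*}
```

In a Hilbert space \(J=I\), the right-hand side of the first identity vanishes, and ϕ is symmetric.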

In Theorem 3.4 below, the sequences \(\{\lambda_{n}\}_{n=1}^{\infty}\) and \(\{\theta_{n}\}_{n=1}^{\infty}\) in \((0,1)\) satisfy the following conditions:

- (i) \(\sum_{n=1}^{\infty}\lambda_{n}=\infty\);

- (ii) \(\lambda_{n}M_{0}^{*}\le\gamma_{0}\theta_{n}\); \(\delta ^{-1}_{E}(\lambda _{n}M_{0}^{*}) \leq\gamma_{0}\theta_{n}\),

for all \(n\ge1\) and for some constants \(M_{0}^{*}>0\), \(\gamma_{0}>0\).

Theorem 3.4

Let E be a uniformly convex and uniformly smooth real Banach space and let \(E^{*}\) be its dual. Let \(T:E\to2^{E^{*}}\) be a multi-valued J-pseudocontractive and bounded map. Suppose \(F_{E}^{J}(T):=\{v\in E: Jv\in Tv\}\ne\emptyset\). For arbitrary \(u\in E\), define a sequence \(\{x_{n}\}\) iteratively by: \(x_{1}\in E\),

\[x_{n+1}=J^{-1} \bigl[Jx_{n}-\lambda_{n}(Jx_{n}-\eta_{n})-\lambda_{n}\theta_{n}(Jx_{n}-Ju) \bigr],\quad\eta_{n}\in Tx_{n}, n\ge1. \tag{3.2}\]

Then the sequence \(\{x_{n}\}\) is bounded.

Proof

Since \(F_{E}^{J}(T)\ne\emptyset\), let \(x^{*}\in F_{E}^{J}(T)\). Then there exists \(r>0\) such that \(\max \{\phi(x^{*},u), \phi(x^{*},x_{1})\}\le\frac{r}{8}\). Let \(B:=\{x\in E: \phi(x^{*},x)\le r\}\), and since T is bounded, we define:

Let \(M:=\max\{M_{2}M_{0}, c_{2}M_{0}, c_{2}M_{1}\}\), and

where \(c_{2}\) is the constant in Lemma 2.3. We show that \(\phi(x^{*},x_{n})\le r\) for all \(n\ge1\). We proceed by induction. Clearly, \(\phi(x^{*},x_{1})\le r\). Suppose \(\phi(x^{*},x_{n})\le r\) for some \(n\ge1\). We show \(\phi(x^{*},x_{n+1})\le r\). Suppose this is not the case, then \(\phi(x^{*},x_{n+1})>r\). Observe that

From Lemma 2.3 and the recurrence relation (3.2), we have

We hence obtain

Using inequality (2.5) with \(y^{*}=\lambda_{n} [Jx_{n}-\eta _{n}+\theta_{n}(Jx_{n}-Ju) ]\), we obtain using also inequality (3.4)

Since T is J-pseudocontractive, so that \((J-T)\) is monotone, and using the recursion formula, we have

We have from Lemma 2.2

Substituting this in inequality (3.5), we obtain

This is a contradiction. Hence, \(\{x_{n}\}_{n=1}^{\infty}\) is bounded. □

In Theorem 3.5 below, \(\lambda_{n}\) and \(\theta_{n}\) are real sequences in \((0,1)\) satisfying the following conditions:

- (i) \(\sum_{n=1}^{\infty}\lambda_{n}\theta_{n}=\infty\),

- (ii) \(\lambda_{n}M_{0}^{*}\le\gamma_{0}\theta_{n}\); \(\delta ^{-1}_{E}(\lambda_{n}M_{0}^{*}) \leq\gamma_{0}\theta_{n}\),

- (iii) \(\frac{\delta^{-1}_{E} (\frac{\theta_{n-1}-\theta _{n}}{\theta_{n}}K )}{\lambda_{n}\theta_{n}} \rightarrow0\), \(\frac{\delta ^{-1}_{E^{*}} (\frac{\theta_{n-1}-\theta_{n}}{\theta_{n}}K )}{\lambda_{n}\theta_{n}} \rightarrow0\), as \(n\rightarrow\infty\),

- (iv) \(\frac{1}{2} (\frac{\theta_{n-1}-\theta_{n}}{\theta _{n}}K )\in(0,1)\),

for some constants \(M_{0}^{*}>0\), and \(\gamma_{0}>0\); where \(\delta_{E}: (0,\infty)\rightarrow(0,\infty)\) is the modulus of convexity of E and \(K>0\) is as defined in Lemma 2.3.

Theorem 3.5

Let E be a uniformly convex and uniformly smooth real Banach space and let \(E^{*}\) be its dual. Let \(T:E\to2^{E^{*}}\) be a J-pseudocontractive and bounded map such that \((J-T)\) is maximal monotone. Suppose \(F_{E}^{J}(T)=\{v\in E: Jv\in Tv\}\ne\emptyset\). For arbitrary \(x_{1}, u\in E\), define a sequence \(\{x_{n}\}\) iteratively by:

\[x_{n+1}=J^{-1} \bigl[Jx_{n}-\lambda_{n}(Jx_{n}-\eta_{n})-\lambda_{n}\theta_{n}(Jx_{n}-Ju) \bigr],\quad\eta_{n}\in Tx_{n}, n\ge1, \tag{3.6}\]

where \(\{\lambda_{n}\}\) and \(\{\theta_{n}\}\) are sequences in \((0,1)\) satisfying conditions (i)-(iv) above. Then the sequence \(\{x_{n}\}\) converges strongly to a J-fixed point of T.

Proof

Setting \(y^{*}=\lambda_{n} [Jx_{n}-\eta_{n}+\theta _{n}(Jx_{n}-Ju) ]\in E^{*}\), applying inequality (2.5) and using Lemma 3.3, we compute as follows:

But we have from Lemma 2.6, \(y_{n}=J^{-1} [\tau _{n}-\theta _{n}(Jy_{n}-Ju) ]\) for some \(\tau_{n}\in Ty_{n}\) and thus obtain

Hence, substituting this in inequality (3.7) and using Lemma 3.3, we obtain

Furthermore, using Lemma 2.2, we obtain

Substituting this inequality in inequality (3.8), we thus have

Estimating the underlined terms, we obtain

We thus have

Now, setting

and

inequality (3.11) becomes

It now follows from Lemma 2.8 that \(\phi (y_{n-1},x_{n})\rightarrow0\) as \(n\rightarrow\infty\). From Lemma 2.7, we have \(\|x_{n}-y_{n-1}\|\rightarrow0\) and since \(y_{n}\rightarrow y^{*}\in(J-T)^{-1}0\), we obtain \(x_{n}\rightarrow y^{*}\in(J-T)^{-1}0\). This completes the proof. □

Example 3

We have (see, e.g., [23], p.47) for \(p>1\), \(q>1\), \(X=L^{p}\), \(X^{*}=L^{q}\),

and so obtain

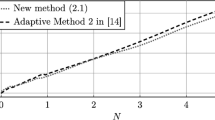

The prototypes for our theorems are the following:

In particular, without loss of generality, let \(r=p\). Then one can choose \(a:=\frac{1}{(p+1)}\) and \(b:= \min \{\frac{1}{2K},\frac {1}{2p(p+1)} \}\).

We now verify that, with these prototypes, the conditions (i)-(iv) of Theorem 3.5 are satisfied. Condition (i) and the first part of (ii) are easily verified.

For the second part of condition (ii), we have

For condition (iii), we have

Similarly, we obtain

Finally, for condition (iv), we have

This completes the verification.
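A numerical sanity check of the kind of estimate used in this verification can be sketched as follows. The prototype display is not reproduced above, so the code assumes sequences of the form \(\lambda_{n}=(n+1)^{-a}\), \(\theta_{n}=(n+1)^{-b}\) with \(a=\frac{1}{p+1}\) and \(b=\frac{1}{2p(p+1)}\) (the second term of the minimum; the constant K is not used). It checks that \(\frac{\theta_{n-1}-\theta_{n}}{\theta_{n}}=(1+\frac{1}{n})^{b}-1\le\frac{b}{n}\) and that the partial sums of \(\lambda_{n}\theta_{n}\) keep growing.

```python
def lam(n, a):
    """Assumed prototype step size lambda_n = (n + 1)^(-a)."""
    return (n + 1.0) ** (-a)

def theta(n, b):
    """Assumed prototype regularization parameter theta_n = (n + 1)^(-b)."""
    return (n + 1.0) ** (-b)

def ratio(n, b):
    """(theta_{n-1} - theta_n) / theta_n, which equals (1 + 1/n)^b - 1."""
    return theta(n - 1, b) / theta(n, b) - 1.0

def partial_sum(num_terms, a, b):
    """Partial sum of lambda_n * theta_n for n = 1..num_terms."""
    return sum(lam(n, a) * theta(n, b) for n in range(1, num_terms + 1))

p = 2.0
a = 1.0 / (p + 1.0)
b = 1.0 / (2.0 * p * (p + 1.0))

print(ratio(10, b), b / 10)          # the ratio stays below b / n
print(partial_sum(1000, a, b), partial_sum(10000, a, b))  # partial sums grow
```

Since \(a+b<1\) for these values, the series \(\sum\lambda_{n}\theta_{n}\) diverges, which is what the growing partial sums reflect.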

Remark 5

We remark, following Lindenstrauss and Tzafriri [47], that in applications, we do not often use the precise value of the modulus of convexity but only a power type estimate from below.

A uniformly convex space X has modulus of convexity of power type p if, for some \(0< K<\infty\), \(\delta_{X}(\epsilon)\ge K\epsilon^{p}\). For instance, \(L_{p}\) spaces have modulus of convexity of power type 2, for \(1< p\le2\), and of power type p, for \(p>2\) (see, e.g., [47], p.63). We observe that the condition for modulus of convexity of power type p corresponds to that of p-uniformly convex spaces. Thus \(L_{p}\) spaces are 2-uniformly convex, for \(1< p\le2\), and are p-uniformly convex, for \(p>2\). These lead us to prove the following corollary of Theorem 3.5, which will be crucial in several applications.

Corollary 3.6

For \(p>1\), \(q>1\), let E be a p-uniformly convex and q-uniformly smooth real Banach space and let \(E^{*}\) be its dual. Let \(T:E\to E^{*}\) be a J-pseudocontractive and bounded map. Suppose \(F_{E}^{J}(T):=\{u^{*}\in E: Tu^{*}=Ju^{*}\}\ne\emptyset\). For arbitrary \(x_{1}, u\in E\), define a sequence \(\{x_{n}\}\) iteratively by:

where \(\{\lambda_{n}\}\) and \(\{\theta_{n}\}\) are sequences in \((0,1)\) satisfying conditions (i)-(iii) of Theorem 3.4. Then the sequence \(\{x_{n}\}\) converges strongly to a J-fixed point of T.

Proof

We observe, for p-uniformly convex space, using Remark 2, that conditions (i)-(iv) of Theorem 3.5 reduce to:

- (i)∗ \(\lambda_{n}\le\gamma_{0}\theta_{n}\),

- (ii)∗ \(\sum_{n=1}^{\infty}\lambda_{n}\theta_{n}=\infty\),

- (iii)∗ \((\frac{\theta_{n-1}-\theta_{n}}{\theta_{n}} )^{1/p}\rightarrow0\), \(\frac{M^{*} (\frac{\theta_{n-1}-\theta _{n}}{\theta_{n}} )^{1/p}}{\lambda_{n}\theta_{n}}\rightarrow0\), \(\frac{ (\lambda _{n}^{(1/p)}M_{0}^{**} )}{\theta_{n}} \rightarrow0\), as \(n\rightarrow\infty\), for some \(M_{0}^{**}, M^{*}>0\),

and for p-uniformly convex spaces, we have from (3.3), using equation (2.12),

Following the proof of Theorem 3.5, we have from inequality (3.9), using (3.13):

Now, setting

and

It now follows from Lemma 2.8 that \(\phi (y_{n-1},x_{n})\rightarrow0\) as \(n\rightarrow\infty\). From Lemma 2.7, we have \(\|x_{n}-y_{n-1}\|\rightarrow0\), and since \(y_{n}\rightarrow y^{*}\in(J-T)^{-1}0\), this completes the proof. □

Example 4

Real sequences that satisfy the conditions (i)∗-(iii)∗ in Corollary 3.6 are the following:

For example, one can choose \(a:=\frac{1}{(p+1)}\) and \(b:= \frac {1}{2p(p+1)}\). We now check these prototypes.

Clearly conditions (i)∗-(ii)∗ are satisfied. We verify condition (iii)∗. Using the fact that \((1+x)^{s}\le1+sx\), for \(x>-1\) and \(0< s<1\), we have

Also,

and

4 Application to zeros of maximal monotone maps

Corollary 4.1

Let E be a uniformly convex and uniformly smooth real Banach space and let \(E^{*}\) be its dual. Let \(A:E\to2^{E^{*}}\) be a multi-valued maximal monotone and bounded map such that \(A^{-1}0\ne\emptyset\). For fixed \(u, x_{1}\in E\), let a sequence \(\{x_{n}\}\) be iteratively defined by

\[x_{n+1}=J^{-1} \bigl[Jx_{n}-\lambda_{n}\eta_{n}-\lambda_{n}\theta_{n}(Jx_{n}-Ju) \bigr],\quad\eta_{n}\in Ax_{n}, n\ge1, \tag{4.1}\]

where \(\{\lambda_{n}\}\) and \(\{\theta_{n}\}\) are sequences in \((0,1)\) satisfying conditions (i)-(iv) of Theorem 3.5. Then the sequence \(\{x_{n}\}\) converges strongly to a zero of A.

Proof

Recall that A is monotone if and only if \(T=(J-A)\) is J-pseudocontractive and that zeros of A correspond to J-fixed points of T. Now, if we replace A by \(J-T\) in equation (4.1), the equation reduces to (3.6) and hence the proof follows. □
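A minimal numerical sketch of an iteration of this type is given below for the Hilbert space \(\mathbb{R}^{2}\), where J is the identity. It assumes the recursion takes the form \(x_{n+1}=x_{n}-\lambda_{n}Ax_{n}-\lambda_{n}\theta_{n}(x_{n}-u)\) in that setting. The operator \(A(x)=M(x-c)\), the scaling factor 0.5, and the exponents in `lam` and `theta` are illustrative assumptions chosen so that the run is short; they are not claimed to satisfy all the conditions of Theorem 3.5.

```python
import numpy as np

def regularized_iteration(A, x1, u, num_steps, a=0.5, b=0.25):
    """Iterate x_{n+1} = x_n - lam_n * A(x_n) - lam_n * theta_n * (x_n - u).

    Hilbert-space form (J = identity) with illustrative parameters
    lam_n = 0.5 / (n + 1)^a and theta_n = 1 / (n + 1)^b.
    """
    x = np.asarray(x1, dtype=float)
    for n in range(1, num_steps + 1):
        lam = 0.5 / (n + 1.0) ** a
        theta = 1.0 / (n + 1.0) ** b
        x = x - lam * A(x) - lam * theta * (x - u)
    return x

# Monotone but non-symmetric operator: <M z, z> = |z|^2 >= 0; its unique zero is c.
M = np.array([[1.0, 1.0], [-1.0, 1.0]])
c = np.array([1.0, -2.0])

def A(x):
    return M @ (x - c)

u = np.zeros(2)
x_short = regularized_iteration(A, x1=[5.0, 5.0], u=u, num_steps=100)
x_long = regularized_iteration(A, x1=[5.0, 5.0], u=u, num_steps=20000)
err_short = np.linalg.norm(x_short - c)
err_long = np.linalg.norm(x_long - c)
print(err_short, err_long)  # the error shrinks as theta_n tends to 0
```

No resolvent \((J+\lambda A)^{-1}\) is evaluated at any step; only A itself is applied. The remaining error is of the order of \(\theta_{n}\), so it decays slowly with these exponents.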

5 Complement to proximal point algorithm

The proximal point algorithm of Martinet [35] and Rockafellar [36] was introduced to approximate a solution of \(0\in Au\) where A is the subdifferential of some convex functional defined on a real Hilbert space. A solution of this inclusion gives the minimizers of the convex functional. Let E be a real normed space with dual space \(E^{*}\) and let \(f:E\rightarrow\mathbb{R}\) be a convex functional. The subdifferential of f, \(\partial f:E\rightarrow2^{E^{*}}\) at \(u\in E\), is defined as follows:

\[\partial f(u)= \bigl\{u^{*}\in E^{*}: f(y)-f(u)\ge\bigl\langle y-u,u^{*}\bigr\rangle\ \forall y\in E \bigr\}.\]

It is well known that ∂f is a maximal monotone map on E and that \(0\in(\partial f)(u)\) if and only if u is a minimizer of f. Following this, the proximal point algorithm has been studied for minimizers of f in real Banach spaces more general than Hilbert spaces.

Rockafellar [36] proved that the proximal point algorithm defined as follows:

\[x_{k+1}=(I+\lambda_{k}A)^{-1}(x_{k}),\]

where \(\lambda_{k}>0\) is a regularizing parameter, converges weakly to a solution of \(0\in Au\) where A is the subdifferential of a convex functional on a Hilbert space, provided a solution exists. He then asked if the proximal point algorithm always converges strongly.
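For a concrete picture of the resolvent step \((I+\lambda_{k}A)^{-1}(x_{k})\), the sketch below runs the proximal point iteration in \(\mathbb{R}^{2}\) for the quadratic \(f(x)=\frac{1}{2}\langle Qx,x\rangle-\langle b,x\rangle\), whose subdifferential is \(A(x)=Qx-b\). The matrix Q, the vector b, the constant \(\lambda_{k}=1\), and the function name are illustrative assumptions. In finite dimensions weak and strong convergence coincide, so this only shows the mechanics of the step, not the weak-versus-strong distinction.

```python
import numpy as np

def proximal_point(Q, b, x0, lam, num_steps):
    """Proximal point iteration x_{k+1} = (I + lam * A)^{-1}(x_k) for A(x) = Qx - b.

    Each step solves the linear system (I + lam * Q) x_{k+1} = x_k + lam * b.
    """
    x = np.asarray(x0, dtype=float)
    identity = np.eye(len(x))
    for _ in range(num_steps):
        x = np.linalg.solve(identity + lam * Q, x + lam * b)
    return x

Q = np.array([[2.0, 0.5], [0.5, 1.0]])   # symmetric positive definite
b = np.array([1.0, -1.0])
x_star = np.linalg.solve(Q, b)            # the minimizer of f, i.e. the zero of A

x_approx = proximal_point(Q, b, x0=np.array([10.0, -10.0]), lam=1.0, num_steps=60)
print(np.linalg.norm(x_approx - x_star))
```

Every iteration requires solving an equation involving A, which is the cost that explicit schemes of the type in Corollary 4.1 avoid.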

This was resolved in the negative by Güler [51] who produced a proper closed convex function g in the infinite dimensional Hilbert space \(l_{2}\) for which the proximal point algorithm converges weakly but not strongly (see also Bauschke et al. [52]). Several authors modified the proximal point algorithm to obtain strong convergence (see, e.g., Bruck [37]; Kamimura and Takahashi [40]; Lehdili and Moudafi [41]; Reich [42]; Solodov and Svaiter [45]; Xu [46]). We remark that in every one of these modifications, the recursion formula developed involved either the computation of \((I+\lambda_{k} A)^{-1}(x_{k})\) at each point of the iteration process or the construction, at each iteration, of two subsets of the space, intersecting them and projecting the initial vector onto the intersection. As far as we know, the first iteration process to approximate a solution of \(0\in Au\) in real Banach spaces more general than Hilbert spaces and which does not involve either of these setbacks was given by Chidume and Djitte [39] who proved a special case of Theorem 1.1 in which the space E is a 2-uniformly smooth real Banach space. These spaces include \(L_{p}\) spaces, \(2\le p<\infty\), but do not include \(L_{p}\) spaces, \(1< p<2\). This result of Chidume and Djitte has recently been proved in uniformly convex and uniformly smooth real Banach spaces (which include \(L_{p}\) spaces, \(1< p<\infty\)); see Chidume [21] (Theorem 1.1 above).

Corollary 4.1 of this paper is an analog of Theorem 1.1 for the case in which \(A:E\rightarrow2^{E^{*}}\) is a bounded maximal monotone map. This result complements the proximal point algorithm in this setting: it yields strong convergence to a solution of \(0\in Au\) without requiring either the computation of \((J+\lambda A)^{-1}(z_{n})\) at each step of the iteration process, or the construction of two subsets of E and the projection of the initial vector onto their intersection at each stage of the iteration process.

6 Application to solutions of Hammerstein integral equations

Definition 6.1

Let \(\Omega\subset{\mathbb{R}}^{n}\) be bounded. Let \(k:\Omega\times \Omega\to\mathbb{R}\) and \(f:\Omega\times\mathbb{R} \to\mathbb{R}\) be measurable real-valued functions. An integral equation (generally nonlinear) of Hammerstein-type has the form

where the unknown function u and inhomogeneous function w lie in a Banach space E of measurable real-valued functions.

By a simple transformation, (6.1) can be put in the form

which, without loss of generality can be written as

Interest in Hammerstein integral equations stems mainly from the fact that several problems that arise in differential equations, for instance, elliptic boundary value problems whose linear parts possess Green’s functions, can, as a rule, be transformed into the form (6.1) (see, e.g., Pascali and Sburian [8], p.164).

Among the earliest results on the approximation of solutions of Hammerstein equations is the following result of Brézis and Browder.

Theorem 6.2

(Brézis and Browder [53])

Let H be a separable Hilbert space and C be a closed subspace of H. Let \(K:H \to C\) be a bounded continuous monotone operator and \(F:C\to H\) be an angle-bounded and weakly compact mapping. For a given \(f\in C\), consider the Hammerstein equation

and its nth Galerkin approximation given by

where \(K_{n}=P_{n}^{*}KP_{n}:H\to C\) and \(F_{n}=P_{n}FP_{n}^{*}:C_{n} \to H\), where the symbols have their usual meanings (see [8]). Then, for each \(n\in\mathbb{N}\), the Galerkin approximation (6.5) admits a unique solution \(u_{n}\) in \(C_{n}\) and \(\{u_{n}\}\) converges strongly in H to the unique solution \(u\in C\) of the equation (6.4).

Clearly, if an iterative algorithm can be developed for the approximation of solutions of equations of Hammerstein type (6.3), it will be preferred.

Attempts have been made to approximate solutions of equations of Hammerstein type using a Mann-type iteration scheme. However, the results obtained were not satisfactory (see, e.g., [54]). The recurrence formulas used in early attempts involved \(K^{-1}\), which is also required to be strongly monotone; apart from limiting the class of mappings to which such iterative schemes are applicable, this is also not convenient in applications. Part of the difficulty is the fact that the composition of two monotone operators need not be monotone.

The first satisfactory results on iterative methods for approximating solutions of Hammerstein equations in real Banach spaces more general than Hilbert spaces, as far as we know, were obtained by Chidume and Zegeye [55–57]. For the case of a real Hilbert space H, for \(F,K:H \to H\), they defined an auxiliary map on the Cartesian product \(E:=H\times H\), \(T:E\to E\) by

We note that

With this, they were able to obtain strong convergence of an iterative scheme defined in the Cartesian product space E to a solution of the Hammerstein equation (6.3). The method of proof used by Chidume and Zegeye provided the clue to the establishment of the following coupled explicit algorithm for computing a solution of the equation \(u+KFu=0\) in the original space X. With initial vectors \(u_{0}, v_{0}\in X\), sequences \(\{u_{n}\}\) and \(\{v_{n}\}\) in X are defined iteratively as follows:

where \(\alpha_{n}\) is a sequence in \((0,1)\) satisfying appropriate conditions.
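To make the idea of a coupled explicit scheme concrete, here is a hedged sketch on the real line. The recursion used, \(u_{n+1}=u_{n}-\alpha(Fu_{n}-v_{n})\), \(v_{n+1}=v_{n}-\alpha(Kv_{n}+u_{n})\), is an assumed form chosen so that its fixed points satisfy \(v=Fu\) and \(u+Kv=0\); it is not claimed to be the exact recursion of the cited works. The maps `F` and `K`, the constant step `alpha`, and the iteration count are likewise hypothetical choices for illustration.

```python
def F(u):
    # Hypothetical monotone (increasing) nonlinearity, chosen only for illustration.
    return u**3 + u - 1.0


def K(v):
    # Hypothetical monotone linear map (the identity on R).
    return v


def coupled_iteration(u0, v0, alpha, steps):
    """Assumed coupled explicit scheme; a fixed point has v = F(u) and u + K(v) = 0."""
    u, v = u0, v0
    for _ in range(steps):
        # Both components are updated simultaneously from the previous pair.
        u, v = u - alpha * (F(u) - v), v - alpha * (K(v) + u)
    return u, v


u, v = coupled_iteration(0.0, 0.0, alpha=0.05, steps=2000)
residual = u + K(F(u))  # residual of the Hammerstein equation u + KFu = 0
print(u, v, residual)
```

Note that neither \(K^{-1}\) nor any resolvent is evaluated; each step uses only one evaluation of F and one of K. A constant step is used here for simplicity, whereas the text works with a sequence \(\alpha_{n}\) in \((0,1)\).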

Some typical results obtained using the recursion formulas described above in approximating solutions of nonlinear Hammerstein equations involving monotone maps in Hilbert spaces can be found in [57, 58].

In a real Banach space X more general than a Hilbert space, where \(F,K: X\rightarrow X\) are of accretive type, Chidume and Zegeye considered an operator \(A:E\rightarrow E\) where \(E:= X\times X\) and were able to successfully approximate solutions of Hammerstein equations using the recursion formulas described above. These schemes have now been employed by Chidume and other authors to approximate solutions of Hammerstein equations in various Banach spaces under various continuity assumptions (see, e.g., [27, 31, 55–71]). This success has not carried over to the case of monotone-type mappings in Banach spaces where K and F map a space into its dual. In this section, we introduce a new iterative scheme and prove that a sequence generated by our scheme converges strongly to a solution of a Hammerstein equation under this setting. For this purpose, we begin with the following preliminaries and lemmas.

We now prove the following lemmas.

Lemma 6.3

Let X, Y be real uniformly convex and uniformly smooth spaces. Let \(E=X\times Y\) with the norm \(\|z\|_{E}=(\|u\|^{q}_{X} + \|v\|^{q}_{Y})^{\frac {1}{q}}\), for arbitrary \(z=[u,v]\in E\). Let \(E^{*}=X^{*}\times Y^{*}\) denote the dual space of E. For arbitrary \(x=[x_{1},x_{2}]\in E\), define the map \(j_{q}^{E}:E\rightarrow E^{*}\) by

so that for arbitrary \(z_{1}=[u_{1},v_{1}]\), \(z_{2}=[u_{2},v_{2}]\) in E, the duality pairing \(\langle\cdot,\cdot\rangle\) is given by

Then

(a) E is uniformly smooth and uniformly convex,

(b) \(j_{q}^{E}\) is a single-valued duality mapping on E.

Proof

(a) Let \(p>1\), \(q>1\). Let \(x=[x_{1},x_{2}]\), \(y=[y_{1},y_{2}]\) be arbitrary elements of E. Using Condition (iii)′ of Corollary 2′ in [72], we have

where \(g^{*}_{1}\), \(g^{*}_{2}\) are strictly increasing continuous and convex functions on \(\mathbb{R}^{+}\) and \(g^{*}_{1}(0)=g^{*}_{2}(0)=0\). It follows that

where \(g^{*}(\|x-y\|)=g^{*}_{1}(\|x_{1}-y_{1}\|) + g^{*}_{2}(\|x_{2}-y_{2}\|)\). Hence it follows from Corollary 2′ that E is uniformly smooth.

Also, using condition (iii) of Corollary 3 in [72], we have

where \(g_{1}\), \(g_{2}\) are strictly increasing continuous and convex functions on \(\mathbb{R}^{+}\) and \(g_{1}(0)=g_{2}(0)=0\). It follows that

where \(g(\|x-y\|)=g_{1}(\|x_{1}-y_{1}\|) + g_{2}(\|x_{2}-y_{2}\|)\). Hence it follows from Corollary 3 that E is uniformly convex. Since E is uniformly smooth, it is smooth and hence any duality mapping on E is single-valued.

(b) For arbitrary \(x=[x_{1},x_{2}]\in E\), let \(j_{q}^{E}(x)=j_{q}^{E}[x_{1},x_{2}] = \psi_{q}\). Then \(\psi_{q}=[j_{q}^{X}(x_{1}),j_{q}^{Y}(x_{2})]\in E^{*}\). We have, for \(p>1\) such that \(1/p + 1/q=1\),

Hence, \(\|\psi_{q}\|_{E^{*}}=\|x\|_{E}^{q-1}\). Furthermore,

Hence, \(j_{q}^{E}\) is a single-valued normalized duality mapping on E. □
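The two identities that characterize \(j_{q}^{E}\) in part (b), namely \(\langle x,\psi_{q}\rangle=\|x\|_{E}^{q}\) and \(\|\psi_{q}\|_{E^{*}}=\|x\|_{E}^{q-1}\), can be checked numerically in a simple finite-dimensional case. The sketch below assumes \(X=Y=\mathbb{R}^{2}\) with the Euclidean norm, where \(j_{q}(x)=\|x\|^{q-2}x\), and assumes that the dual of the q-sum norm on the product is the p-sum of the dual norms; the sample point and the value of q are arbitrary choices.

```python
import math


def norm2(x):
    """Euclidean norm on R^n."""
    return math.sqrt(sum(t * t for t in x))


def j_q(x, q):
    """Generalized duality map on Euclidean R^n: j_q(x) = ||x||^(q-2) * x."""
    n = norm2(x)
    if n == 0.0:
        return [0.0 for _ in x]
    return [n ** (q - 2) * t for t in x]


def product_norm(z, r):
    """Norm (||u||^r + ||v||^r)^(1/r) of a pair z = [u, v]."""
    u, v = z
    return (norm2(u) ** r + norm2(v) ** r) ** (1.0 / r)


q = 3.0
p = q / (q - 1.0)                      # conjugate exponent, 1/p + 1/q = 1
x = [[1.0, -2.0], [0.5, 3.0]]          # arbitrary sample point of E = X x Y
psi = [j_q(x[0], q), j_q(x[1], q)]     # componentwise duality map

# Duality pairing taken componentwise on the product.
pairing = sum(a * b for a, b in zip(x[0], psi[0])) + sum(a * b for a, b in zip(x[1], psi[1]))
norm_x = product_norm(x, q)            # norm of x in E
norm_psi = product_norm(psi, p)        # norm of psi in E*, using the p-sum
print(pairing, norm_x ** q, norm_psi, norm_x ** (q - 1))
```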

The following lemma will be needed in what follows.

Lemma 6.4

(Browder [73])

Let X be a strictly convex reflexive Banach space with a strictly convex conjugate space \(X^{*}\), \(T_{1}\) a maximal monotone mapping from X to \(X^{*}\), \(T_{2}\) a hemicontinuous monotone mapping of all of X into \(X^{*}\) which carries bounded subsets of X into bounded subsets of \(X^{*}\). Then the mapping \(T=T_{1}+T_{2}\) is a maximal monotone map of X into \(X^{*}\).

Using Lemma 6.4, we prove the following important lemma which will be used in the sequel.

Lemma 6.5

Let E be a Banach space. Let \(F:E\rightarrow E^{*}\) and \(K:E^{*}\rightarrow E\) be bounded and maximal monotone mappings with \(D(F)=E\) and \(D(K)=E^{*}\). Let \(T:E\times E^{*}\rightarrow E^{*}\times E\) be defined by

then the mapping \(A:=(J-T)\) is maximal monotone.

Proof

We show that the mapping \(A=(J-T):E\times E^{*}\rightarrow E^{*}\times E\) defined as

is maximal monotone. Let \(S,T:E\times E^{*}\rightarrow E^{*}\times E\) be defined as

Then \(A=S+T\). It suffices to show S, T are maximal monotone.

Observe that S is monotone. Let \(h=[h_{1},h_{2}]\in E^{*}\times E\). Since F, K are maximal monotone, take \(u=(J+\lambda F)^{-1}h_{1}\) and \(v=(J_{*}+\lambda K)^{-1}h_{2}\). Then \((J+\lambda S)w=h\), where \(w=[u,v]\). Hence, S is maximal monotone.

Clearly, T is bounded and monotone. Furthermore it is continuous. Hence, it is hemi-continuous. Therefore by Lemma 6.4, \(A=S+T\) is maximal monotone. □

Lemma 6.6

Let E be a uniformly convex and uniformly smooth real Banach space. Let \(F:E\rightarrow E^{*}\) and \(K:E^{*}\rightarrow E\) be monotone mappings with \(D(F)=E\) and \(D(K)=E^{*}\). Let \(T:E\times E^{*}\rightarrow E^{*}\times E\) be defined by \(T[u,v]=[Ju-Fu+v,J_{*}v-Kv-u]\) for all \((u,v)\in E\times E^{*}\). Then T is J-pseudocontractive. Moreover, if the Hammerstein equation \(u+KFu=0\) has a solution in E, then \(u^{*}\) is a solution of \(u+KFu=0\) if and only if \((u^{*},v^{*})\in F_{E}^{J}(T)\), where \(v^{*}=Fu^{*}\).

Proof

Using the monotonicity of F and K, we easily obtain \(\langle Tw_{1}-Tw_{2},w_{1}-w_{2}\rangle\le\langle Jw_{1}-Jw_{2},w_{1}-w_{2}\rangle\) for all \(w_{1}=[u_{1},v_{1}], w_{2}=[u_{2},v_{2}]\in E\times E^{*}\).
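The step labelled "easily obtain" can be spelled out as follows. This is a sketch under two assumptions not stated explicitly at this point: that the duality map on the product acts componentwise, \(J[u,v]=[Ju,J_{*}v]\), and that the pairing between \(E^{*}\times E\) and \(E\times E^{*}\) is the sum of the componentwise pairings, so that the two cross terms cancel.

```latex
\begin{align*}
\langle Tw_{1}-Tw_{2},\, w_{1}-w_{2}\rangle
 &= \langle Ju_{1}-Ju_{2},\, u_{1}-u_{2}\rangle
  + \langle J_{*}v_{1}-J_{*}v_{2},\, v_{1}-v_{2}\rangle \\
 &\quad - \langle Fu_{1}-Fu_{2},\, u_{1}-u_{2}\rangle
        - \langle Kv_{1}-Kv_{2},\, v_{1}-v_{2}\rangle \\
 &\quad + \langle v_{1}-v_{2},\, u_{1}-u_{2}\rangle
        - \langle u_{1}-u_{2},\, v_{1}-v_{2}\rangle \\
 &\le \langle Jw_{1}-Jw_{2},\, w_{1}-w_{2}\rangle .
\end{align*}
```

The last two terms on the right cancel, and the two terms involving F and K are subtracted nonnegative quantities by monotonicity, which gives the inequality.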

Moreover, we observe that

□

We now prove the following theorem.

Theorem 6.7

Let E be a uniformly smooth and uniformly convex real Banach space and let \(F:E\rightarrow E^{*}\), \(K:E^{*}\rightarrow E\) be bounded maximal monotone maps. For \((x_{1},y_{1}), (u_{1},v_{1})\in E\times E^{*}\), define the sequences \(\{u_{n}\}\) and \(\{v_{n}\}\) in E and \(E^{*}\), respectively, by

Assume that the equation \(u+KFu=0\) has a solution. Then the sequences \(\{u_{n}\} _{n=1}^{\infty}\) and \(\{v_{n}\}_{n=1}^{\infty}\) converge strongly to \(u^{*}\) and \(v^{*}\), respectively, where \(u^{*}\) is the solution of \(u+KFu=0\) with \(v^{*}=Fu^{*}\).

Proof

From Lemma 6.6 we see that \(T:E\times E^{*}\rightarrow E^{*}\times E\) defined by \(T[u,v]=[Ju-Fu+v,J_{*}v-Kv-u]\) for all \((u,v)\in E\times E^{*}\) is J-pseudocontractive, and from Lemma 6.5 that \(A:=(J-T)\) is maximal monotone.

By Lemma 6.3, \(X=E\times E^{*}\) is uniformly convex and uniformly smooth. Applying Theorem 3.4 in the space X, we obtain (6.8) and (6.9), and the proof follows. □

7 Application to convex optimization problem

The following lemma is well known (see, e.g., [74], p.23, for a similar proof in the Hilbert space case).

Lemma 7.1

Let X be a normed space. Let \(f:X\rightarrow\mathbb{R}\) be a convex function that is bounded on bounded subsets of X. Then the subdifferential, \(\partial f:X\rightarrow2^{X^{*}}\), is bounded on bounded subsets of X.

We now prove the following strong convergence theorem.

Theorem 7.2

Let E be a uniformly convex and uniformly smooth real Banach space with dual \(E^{*}\). Let \(f:E\rightarrow(-\infty,\infty]\) be a lower semicontinuous, Fréchet differentiable, convex and bounded functional such that \((\partial f)^{-1}0\ne\emptyset\). For given \(u,x_{1}\in E\), let \(\{x_{n}\}\) be generated by the algorithm

Then \(\{x_{n}\}\) converges strongly to some \(x^{*}\in(\partial f)^{-1}0\).

Proof

By Rockafellar [75, 76] (see also, e.g., Minty [2], Moreau [77]), \(\partial f\) is a maximal monotone mapping from E into \(E^{*}\), and \(0\in(\partial f)(v)\) if and only if \(f(v)=\min_{x\in E}f(x)\). Since f is convex and bounded, Lemma 7.1 shows that ∂f is bounded. Hence, the conclusion follows from Corollary 4.1. □

Remark 6

The analytical representations of duality mappings are known in a number of Banach spaces. For instance, in the spaces \(L^{p}(G)\) and \(W^{p}_{m}(G)\), \(p \in(1,\infty)\) we have, respectively,

and

where \(p^{-1}+q^{-1}=1\). (See, e.g., Alber and Ryazantseva [23], p.36.)
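For readers who want to experiment, the following sketch implements the finite-dimensional analog of the \(L^{p}\) formula, namely the normalized duality map on \(l_{p}^{n}\) given by \((Jx)_{i}=\|x\|_{p}^{2-p}|x_{i}|^{p-2}x_{i}\), and checks the defining identities \(\langle x,Jx\rangle=\|x\|_{p}^{2}\) and \(\|Jx\|_{q}=\|x\|_{p}\). It also checks that applying the same formula with exponent q inverts J. The function names and the sample vector are assumptions for illustration only.

```python
import math


def lp_norm(x, p):
    """The l_p norm of a finite real vector."""
    return sum(abs(t) ** p for t in x) ** (1.0 / p)


def duality_map(x, p):
    """Normalized duality map on l_p^n: (Jx)_i = ||x||_p^(2-p) |x_i|^(p-2) x_i."""
    n = lp_norm(x, p)
    if n == 0.0:
        return [0.0 for _ in x]
    # |t|^(p-1) with the sign of t equals |t|^(p-2) * t and is safe at t = 0.
    return [n ** (2 - p) * math.copysign(abs(t) ** (p - 1), t) for t in x]


p = 3.0
q = p / (p - 1.0)                 # conjugate exponent, 1/p + 1/q = 1
x = [1.0, -2.0, 0.5, 0.0]         # arbitrary sample vector
Jx = duality_map(x, p)            # element of the dual space l_q^n
x_back = duality_map(Jx, q)       # duality map of l_q^n, which inverts J

pairing = sum(a * b for a, b in zip(x, Jx))
print(pairing, lp_norm(x, p) ** 2, lp_norm(Jx, q), x_back)
```

Because both J and \(J^{-1}\) are available in closed form here, a recursion that only evaluates these maps and the operator itself avoids solving an inclusion at every step.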

8 Conclusion

Let E be a uniformly convex and uniformly smooth real Banach space with dual \(E^{*}\). Approximation of zeros of accretive-type maps of E to itself, assuming existence, has been studied extensively within the past 40 years or so (see, e.g., Agarwal et al. [17]; Berinde [4]; Chidume [6]; Reich [18]; Censor and Reich [19]; Kirk and Shahzad [20], and the references therein). The key tool for this study has been the study of fixed points of pseudocontractive-type maps.

Unfortunately, for approximating zeros of monotone-type maps from E to \(E^{*}\), the normal fixed point technique is not applicable. This motivated the study of the notion of J-pseudocontractive maps introduced in this paper. The main result of this paper is Theorem 3.5 which provides an easily applicable iterative sequence that converges strongly to a J-fixed point of T, where \(T:E\rightarrow2^{E^{*}}\) is a J-pseudocontractive and bounded map such that \(J-T\) is maximal monotone. The two parameters in the recursion formula of the theorem, \(\theta_{n}\) and \(\lambda_{n}\), are easily chosen in any possible application of the theorem (see Example 4 above).

The theorem is, in particular, applicable in \(L_{p}\) and \(l_{p}\) spaces, \(1< p<\infty\). In these spaces, the normalized duality maps J and \(J^{-1}\) which appear in the recursion formula of the theorem are precisely known (see Remark 6 above).

Consequently, while the proof of the theorem is very technical and nontrivial, with the simple choices of the iteration parameters and the exact explicit formula for J and \(J^{-1}\), the recursion formula of the theorem which does not involve the resolvent operator, \((J+\lambda A)^{-1}\), is extremely attractive and user friendly.

Theorem 3.5 is applicable in numerous situations. In this paper, it has been applied to approximate a zero of a bounded maximal monotone map \(A:E\rightarrow2^{E^{*}}\) with \(A^{-1}(0)\ne\emptyset\).

Furthermore, the theorem complements the proximal point algorithm by providing strong convergence to a zero of a maximal monotone operator A without involving the resolvent \(J_{r}:=(J+rA)^{-1}\) in the recursion formula. In addition, it is applied to approximate solutions of Hammerstein integral equations and also to approximate solutions of convex optimization problems. Theorem 3.5 continues to be applicable in approximating solutions of nonlinear equations. It has recently been applied to approximate a common zero of an infinite family of J-nonexpansive maps, \(T_{i}: E\rightarrow2^{E^{*}}\), \(i\ge1\) (see Chidume et al. [78]). In the case that \(E=H\) is a real Hilbert space, the result obtained in Chidume et al. [78] is a significant improvement of important known results. We strongly believe that the results of this paper will continue to be applied to approximate solutions of equilibrium problems in nonlinear operator theory.

References

Zarantonello, EH: Solving functional equations by contractive averaging. Tech. Rep. 160, U.S. Army Math. Research Center, Madison, Wisconsin (1960)

Minty, GJ: Monotone (nonlinear) operators in Hilbert space. Duke Math. J. 29(4), 341-346 (1962)

Kačurovskii, RI: On monotone operators and convex functionals. Usp. Mat. Nauk 15(4), 213-215 (1960)

Berinde, V: Iterative Approximation of Fixed Points. Lecture Notes in Mathematics. Springer, London (2007)

Browder, FE: Nonlinear mappings of nonexpansive and accretive-type in Banach spaces. Bull. Am. Math. Soc. 73, 875-882 (1967)

Chidume, CE: Geometric Properties of Banach Spaces and Nonlinear Iterations. Lecture Notes in Mathematics, vol. 1965. Springer, London (2009)

Reich, S: Constructive techniques for accretive and monotone operators. In: Applied Non-Linear Analysis, pp. 335-345. Academic Press, New York (1979)

Pascali, D, Sburian, S: Nonlinear Mappings of Monotone Type. Editura Academia, Bucuresti (1978)

Kato, T: Nonlinear semigroups and evolution equations. J. Math. Soc. Jpn. 19, 508-520 (1967)

Zeidler, E: Nonlinear Functional Analysis and Its Applications. Part II: Monotone Operators. Springer, Berlin (1985)

Browder, FE: Nonlinear equations of evolution and nonlinear accretive operators in Banach spaces. Bull. Am. Math. Soc. 73, 867-874 (1967)

Benilan, P, Crandall, MG, Pazy, A: Nonlinear evolution equations in Banach spaces. Preprint, Besançon (1994)

Khatibzadeh, H, Moroşanu, G: Strong and weak solutions to second order differential inclusions governed by monotone operators. Set-Valued Var. Anal. 22(2), 521-531 (2014)

Khatibzadeh, H, Shokri, A: On the first- and second-order strongly monotone dynamical systems and minimization problems. Optim. Methods Softw. 30(6), 1303-1309 (2015)

Showalter, RE: Monotone Operators in Banach Spaces and Nonlinear Partial Differential Equations. Mathematical Surveys and Monographs, vol. 49. Am. Math. Soc., Providence (1997)

Volpert, V: Elliptic Partial Differential Equations: Volume 2: Reaction-Diffusion Equations. Monographs in Mathematics, vol. 104. Springer, Berlin (2014)

Agarwal, RP, Meehan, M, O’Regan, D: Fixed Point Theory and Applications. Cambridge Tracts in Mathematics, vol. 141. Cambridge University Press, Cambridge (2001)

Reich, S: A weak convergence theorem for the alternating methods with Bregman distance. In: Kartsatos, AG (ed.) Theory and Applications of Nonlinear Operators of Accretive and Monotone Type. Lecture Notes in Pure and Appl. Math., vol. 178, pp. 313-318. Dekker, New York (1996)

Censor, Y, Reich, S: Iterations of paracontractions and firmly nonexpansive operators with applications to feasibility and optimization. Optimization 37(4), 323-339 (1996)

Kirk, WA, Shahzad, N: Fixed Point Theory in Distance Spaces. Springer, Berlin (2014)

Chidume, CE: Strong convergence theorems for bounded accretive operators in uniformly smooth Banach spaces. In: Mordukhovich, S, Reich, S, Zaslavski, AJ (eds.) Nonlinear Analysis and Optimization. Contemporary Mathematics, vol. 659. Am. Math. Soc., Providence (2016)

Alber, Y: Metric and generalized projection operators in Banach spaces: properties and applications. In: Kartsatos, AG (ed.) Theory and Applications of Nonlinear Operators of Accretive and Monotone Type, pp. 15-50. Dekker, New York (1996)

Alber, Y, Ryazantseva, I: Nonlinear Ill Posed Problems of Monotone Type. Springer, London (2006)

Alber, Y, Guerre-Delabriere, S: On the projection methods for fixed point problems. Analysis 21(1), 17-39 (2001)

Chidume, CE: An approximation method for monotone Lipschitzian operators in Hilbert-spaces. J. Aust. Math. Soc. Ser. A 41, 59-63 (1986)

Chidume, CE, Chidume, CO, Bello, AU: An algorithm for computing zeros of generalized phi-strongly monotone and bounded maps in classical Banach spaces. Optimization 65(4), 827-839 (2016). doi:10.1080/02331934.2015.1074686

Diop, C, Sow, TMM, Djitte, N, Chidume, CE: Constructive techniques for zeros of monotone mappings in certain Banach spaces. SpringerPlus 4(1), 383 (2015)

Moudafi, A: Proximal methods for a class of bilevel monotone equilibrium problems. J. Glob. Optim. 47(2), 45-52 (2010)

Moudafi, A, Thera, M: Finding a zero of the sum of two maximal monotone operators. J. Optim. Theory Appl. 94(2), 425-448 (1997)

Reich, S: The range of sums of accretive and monotone operators. J. Math. Anal. Appl. 68(1), 310-317 (1979)

Sow, TMM, Diop, C, Djitte, N: Algorithm for Hammerstein equations with monotone mappings in certain Banach spaces. Creative Math. Inform. 25(1), 101-114 (2016)

Takahashi, W: Proximal point algorithms and four resolvents of nonlinear operators of monotone type in Banach spaces. Taiwan. J. Math. 12(8), 1883-1910 (2008)

Zegeye, H: Strong convergence theorems for maximal monotone mappings in Banach spaces. J. Math. Anal. Appl. 343, 663-671 (2008)

Liu, B: Fixed point of strong duality pseudocontractive mappings and applications. Abstr. Appl. Anal. 2012, Article ID 623625 (2012). doi:10.1155/2012/623625

Martinet, B: Régularisation d’inéquations variationnelles par approximations successives. Rev. Fr. Autom. Inform. Rech. Opér. 4, 154-158 (1970)

Rockafellar, RT: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877-898 (1976)

Bruck, RE Jr.: A strong convergent iterative solution of \(0\in U(x)\) for a maximal monotone operator U in Hilbert space. J. Math. Anal. Appl. 48, 114-126 (1974)

Chidume, CE: The iterative solution of the equation \(f\in x + Tx\) for a monotone operator T in \(L^{p}\) spaces. J. Math. Anal. Appl. 116(2), 531-537 (1986)

Chidume, CE, Djitte, N: Strong convergence theorems for zeros of bounded maximal monotone nonlinear operators. Abstr. Appl. Anal. 2012, Article ID 681348 (2012). doi:10.1155/2012/681348

Kamimura, S, Takahashi, W: Strong convergence of a proximal-type algorithm in a Banach space. SIAM J. Optim. 13(3), 938-945 (2003)

Lehdili, N, Moudafi, A: Combining the proximal algorithm and Tikhonov regularization. Optimization 37, 239-252 (1996)

Reich, S: Strong convergence theorems for resolvents of accretive operators in Banach spaces. J. Math. Anal. Appl. 75(1), 287-292 (1980)

Reich, S, Sabach, S: A strong convergence theorem for a proximal-type algorithm in reflexive Banach spaces. J. Nonlinear Convex Anal. 10(3), 471-485 (2009)

Reich, S, Sabach, S: Two strong convergence theorems for a proximal method in reflexive Banach spaces. Numer. Funct. Anal. Optim. 31(1-3), 22-44 (2010)

Solodov, MV, Svaiter, BF: Forcing strong convergence of proximal point iterations in a Hilbert space. Math. Program., Ser. A 87, 189-202 (2000)

Xu, HK: A regularization method for the proximal point algorithm. J. Glob. Optim. 36(1), 115-125 (2006)

Lindenstrauss, J, Tzafriri, L: Classical Banach Spaces II: Function Spaces. Ergebnisse Math. Grenzgebiete, vol. 97. Springer, Berlin (1979)

Kamimura, S, Takahashi, W: Strong convergence of a proximal-type algorithm in a Banach space. SIAM J. Optim. 13(3), 938-945 (2002)

Kido, K: Strong convergence of resolvents of monotone operators in Banach spaces. Proc. Am. Math. Soc. 103(3), 755-758 (1988)

Xu, HK: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. (2) 66(1), 240-256 (2002)

Güler, O: On the convergence of the proximal point algorithm for convex minimization. SIAM J. Control Optim. 29, 403-419 (1991)

Bauschke, HH, Matoušková, E, Reich, S: Projection and proximal point methods: convergence results and counterexamples. Nonlinear Anal. 56, 715-738 (2004)

Brézis, H, Browder, FE: Nonlinear integral equations and systems of Hammerstein type. Bull. Am. Math. Soc. 82, 115-147 (1976)

Chidume, CE, Osilike, MO: Iterative solution of nonlinear integral equations of Hammerstein-type. J. Niger. Math. Soc. 11, 9-18 (1992)

Chidume, CE, Zegeye, H: Iterative approximation of solutions of nonlinear equation of Hammerstein-type. Abstr. Appl. Anal. 6, 353-367 (2003)

Chidume, CE, Zegeye, H: Approximation of solutions of nonlinear equations of monotone and Hammerstein-type. Appl. Anal. 82(8), 747-758 (2003)

Chidume, CE, Zegeye, H: Approximation of solutions of nonlinear equations of Hammerstein-type in Hilbert space. Proc. Am. Math. Soc. 133(3), 851-858 (2005)

Chidume, CE, Shehu, Y: Approximation of solutions of equations of Hammerstein type in Hilbert spaces. Fixed Point Theory 16(1), 91-102 (2015)

Chidume, CE, Djitte, N: Iterative approximation of solutions of nonlinear equations of Hammerstein type. Nonlinear Anal. 70(11), 4086-4092 (2009)

Chidume, CE, Djitte, N: Approximation of solutions of Hammerstein equations with bounded strongly accretive nonlinear operators. Nonlinear Anal. 70(11), 4071-4078 (2009)

Chidume, CE, Ofoedu, EU: Solution of nonlinear integral equations of Hammerstein type. Nonlinear Anal. 74(13), 4293-4299 (2011)

Chidume, CE, Djitte, N: Approximation of solutions of nonlinear integral equations of Hammerstein type. ISRN Math. Anal. 2012, Article ID 169751 (2012). doi:10.1155/2012/16975

Chidume, CE, Djitte, N: Convergence theorems for solutions Hammerstein equations with accretive type nonlinear operators. Panam. Math. J. 22(2), 19-29 (2012)

Chidume, CE, Shehu, Y: Approximation of solutions of generalized equations of Hammerstein type. Comput. Math. Appl. 63, 966-974 (2012)

Chidume, CE, Shehu, Y: Strong convergence theorem for approximation of solutions of equations of Hammerstein type. Nonlinear Anal. 75, 5664-5671 (2012)

Chidume, CE, Shehu, Y: Iterative approximation of solution of equations of Hammerstein type in certain Banach spaces. Appl. Math. Comput. 219, 5657-5667 (2013)

Chidume, CE, Djitte, N: Iterative method for solving nonlinear integral equations of Hammerstein type. Appl. Math. Comput. 219, 5613-5621 (2013)

Chidume, CE, Shehu, Y: Iterative approximation of solutions of generalized equations of Hammerstein type. Fixed Point Theory 15(2), 427-440 (2014)

Chidume, CE, Bello, AU: An iterative algorithm for approximating solutions of Hammerstein equations with monotone maps in Banach spaces. Appl. Math. Comput. (to appear)

Djitte, N, Sene, M: An iterative algorithm for approximating solutions of Hammerstein integral equations. Numer. Funct. Anal. Optim. 34, 1299-1316 (2012)

Djitte, N, Sene, M: Approximation of solutions of nonlinear integral equations of Hammerstein type with Lipschitz and bounded nonlinear operators. ISRN Appl. Math. 2012, Article ID 963802 (2012). doi:10.5402/2012/963802

Xu, HK: Inequalities in Banach spaces with applications. Nonlinear Anal. 16(12), 1127-1138 (1991)

Browder, FE: Existence and perturbation theorems for nonlinear maximal monotone operators in Banach spaces. Bull. Am. Math. Soc. 73(3), 322-327 (1967)

Vasin, VV, Eremin, II: Operators and Iterative Processes of Fejer Type: Theory and Applications, 1st edn. Inverse and Ill-Posed Problems Series. de Gruyter, Berlin (2009)

Rockafellar, RT: Characterization of subdifferentials of convex functions. Pac. J. Math. 17, 497-510 (1966)

Rockafellar, RT: On the maximal monotonicity of subdifferential mappings. Pac. J. Math. 33, 209-216 (1970)

Moreau, JJ: Proximité et dualité dans un espace Hilbertien. Bull. Soc. Math. Fr. 93, 273-299 (1965)

Chidume, CE, Otubo, EE, Ezea, CG: Strong convergence theorem for a common fixed point of an infinite family of J-nonexpansive maps with applications. Aust. J. Math. Anal. Appl. 13(1), 1-13 (2016)

Acknowledgements

Research was supported from ACBF Research Grant Funds to AUST.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chidume, C.E., Idu, K.O. Approximation of zeros of bounded maximal monotone mappings, solutions of Hammerstein integral equations and convex minimization problems. Fixed Point Theory Appl 2016, 97 (2016). https://doi.org/10.1186/s13663-016-0582-8

DOI: https://doi.org/10.1186/s13663-016-0582-8