Abstract

In this letter, a surround suppression variational Retinex enhancement algorithm (SSVR) is proposed for non-uniform illumination images. Instead of a gradient module, a surround suppression mechanism is used to provide spatial information in order to constrain the total variation regularization strength of the illumination and reflectance. The proposed strategy preserves the boundary areas in the illumination so that halo artifacts are prevented. It also preserves textural details in the reflectance to prevent from illumination compression, which further contributes to the contrast enhancement in the resulting image. In addition, strong regularization strength is enforced to eliminate uneven intensities in the homogeneous areas. The split Bregman optimization algorithm is employed to solve the proposed model. Finally, after decomposition, a contrast gain is added to reflectance for contrast enhancement, and a Laplacian-based gamma correction is added to illumination for prevent color cast. The recombination of the modified reflectance and illumination become the final result. Experimental results demonstrate that the proposed SSVR algorithm performs better than other methods.

Similar content being viewed by others

1 Introduction

Image enhancement techniques have been widely used in various applications in the past few decades, including face recognition [1, 2], micro-imaging [3] and intelligent video surveillance system [4] etc.. The primary purpose of image enhancement is to improve the contrast or perception of an image without losing details or introducing novel artifacts. In generally, many classical enhancement methods have been proposed, including sigmoid based algorithms [5], logarithmic domain algorithms [6], histogram equalization (HE) algorithms [7, 8], unsharp masking algorithms [9], and Retinex algorithms [10]. Sigmoid and logarithmic based methods are simple and effective for global brightness and contrast enhancement, but spatial information of images are not considered. HE algorithm is simple and widely used. But it is limited for the uneven illumination images, especially for dark areas. For unsharp masking algorithms, images are decomposed into high-frequency and low-frequency terms, which are processed respectively. Result images by this method well preserve details, but introduce unnatural looking.

Amongst the various enhancement methods, Retinex has received much attention due to its simplicity and effectiveness in enhancing non-uniform illumination images [11]. Land and McCann first proposed Retinex algorithm, which is a model of color and luminance perception of human visual system (HVS). To simulate the mechanism of HVS, it is an ill-posed problem that computes illumination or reflectance from a single observed image. To overcome this problem, many modified Retinex theories have been proposed. Retinex algorithms are basically categorized into path-based methods [12,13,14], center-/surround-based methods [15,16,17,18], recursive methods [19,20,21], PDE-based methods [22,23,24], and variational methods [11, 25,26,27,28,29,30]. Path-based Retinex methods are the simplest, but they usually necessitate high computational complexity. For the center-/surround-based methods, Gaussian filtering is usually used as a low pass filter to estimate the illumination. In order to get better performance for RGB images, Jobson et al. had put forward multi-scale Retinex (MSR) [16, 17] algorithm and color restored multi-scale Retinex (CRMSR) [18] algorithm. These algorithms are easy to implement but too many parameters need to be manually set. Large numbers of iterations lower recursive methods’ efficiency. In 1974, Horn introduced partial differential equation (PDE) as a novel mathematical model to the Retinex algorithm [22]. PDE-based methods are usually based on the assumption that the illumination varies smoothly, while the reflectance changes at sharp edges. So, reflectance component can be estimated by solving Poisson equation. In 2010, Morel proposed a new PDE-based Retinex method that computed the divergence by thresholding the components of the gradient prior instead of the scalar Laplacian operator [23]. However, the hard thresholding operator in PDE-based Retinex will cause extra artifacts when solving the Poisson equations. In [24], Zosso presented a unifying framework for Retinex in which existing Retinex algorithms can be represented within a single model. He defined Retinex model in more general two steps: first, looking for a filtered gradient to solve the optimazation problem consisting of sparsity prior and quadratic fidelity prior of the reflectance; second, finding a reflectance whose actual gradient comes close. Based on the same assumption of PDE, a variational framework for the Retinex algorithm has been proposed. In 2003, Kimmel indicated that the illumination estimation problem can be formulated as a quadratic programming optimization problem [25]. M. Ng et al. [26] proposed a total variational model for Retinex in which both illumination and reflectance components are considered. X. Lan et al. [28] introduced the concept of spatial information for the uneven intensity correction. Different regularization strength of the reflectance is enforced to get more accurate results for non-uniform illumination images. And the split Bregman algorithm is employed to solve the proposed adaptive Retinex variational model. In 2014, L. Wang et al. [11] proposed variational bayesian model for Retinex by combining the variational Retinex and Beyesian theory. Due to the shortage of the traditional variational method on limiting the scope of reflectance and illumination components, Wei Wang [30] proposed a variational model with barrier functionals for Retinex. They built a new energy function by adding two barriers for getting a better output.

In this paper, a novel image enhancement algorithm for non-uniform illumination images is proposed. First, the variational Retinex model estimates the reflectance and illumination components simultaneously. A surround suppression mechanism, which is a human visual property, is used to constrain the TV regularization strength of both reflectance and illumination. Moreover, the Split Bregman algorithm is used to solve the proposed variational model. Second, after decomposition, a contrast gain is added to reflectance for contrast enhancement, and a Laplacian-based gamma correction is added to illumination for prevent color cast. The recombination of the modified reflectance and illumination become the final result.

The remainder of this paper is organized as follows. The Retinex theory and the proposed SSVR algorithm are introduced in Section 2. Experimental results and comparison of SSVR with other methods are devoted in Section 3. Finally, Section 4 concludes the paper.

2 The proposed algorithm

2.1 Retinex theory

Recently, more and more attention has been paid to color image processing. However, general enhancement algorithms process the image in greyscale values that do not consider the color information. Retinex methods have been proposed for color images based on human visual system (HVS).

Retinex theory was first proposed by Land and McCann in 1971 [10]. According to Retinex model, a given image can be decomposed into two parts: the illumination and the reflectance components. It is defined as follows:

For easy calculation, equation (1) is usually converted into the logarithmic form, as shown:

where s = log(S), l = log(L), r = log(R). The Retinex obtains the reflectance component channel by channel [6].

2.2 A spatially adaptive retinex variational model

Amongst the various Retinex-based contrast enhancement methods, variational Retinex model has received much attention due to its effectiveness in enhancing images with non-uniform lighting. Li et al. [29] proposed a perceptually inspired variational model to directly restore the reflectance and to adjust the uneven intensity distribution in remote sensing images. In this work, the relationship and the fidelity term between the illumination and reflectance are not considered. Besides, Li et al. [29] divided the spatial domain into edges and non-edges in the regularization term, which is a kind of hard segmentation. To overcome these problems, Lan X et al. [28] proposed a spatially adaptive Retinex variational model in which a weighted gradient parameter controls the TV regularization strength of the reflectance. This can be shown as follows:

where α, μ, β is parameters that control each item in this model, w is a weight parameter that controls the TV regularization strength of the reflectance component, it is defined as equation (4). In equation (4), K is the threshold that differentiates the boundary edges from the suppressed texture edges. In this paper, K is set to be equal to the 90% value of the cumulative distribution function.

However, directly applying equation (3–4) cannot effectively obtain the expected reflectance and illumination. The reason for this is that the criterion for selective smoothing depends on the gradient module, which is unable to fully demarcate between texture edges and boundary edges in real scenes. Some of the textures could have higher gradients than some boundaries and, hence, weaker diffusivities. In this paper, we propose a novel adaptive Retinex variational model. Instead of the gradient module, a surround suppression mechanism, which is a human visual property, is introduced to achieve this goal. The proposed strategy preserves the boundary areas in the illumination so that halo artifacts are prevented. It also preserves textural details in the reflectance to prevent from illumination compression, which further contributes to the contrast enhancement in the resulting image. In addition, strong regularization strength is enforced to eliminate uneven intensities in the homogeneous areas. The split Bregman optimization algorithm was employed to solve the proposed model.

2.3 Surround suppression mechanism

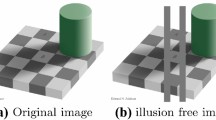

When recognizing objects by judging their contours, the human visual system has a surround suppression mechanism to suppress textural information. This mechanism and its effects are shown in Fig. 1. As shown in Fig. 1a, it is basically divided into two areas: an annular inhibitory surround called NCRF (non-classical receptive field) and a central region called CRF (classical receptive field). The stimulus sensed by NCRF always weakens the CRF’s response to the stimulus in the central region. In Fig. 1b, the sensations of the central points in region A, B, and C are suppressed by their surroundings [31].The effect is strong in texture region C because its surrounding is full of textures, whereas the boundary regions A and B are less affected. Thus, the boundaries of A and B are highlighted. The effects of surround suppression are shown in Fig. 2.

Herein, we extract some critical steps of the method in the literature [32] to suppress the texture gradients for our variational Retinex model. The extracted suppression procedure for the gradient module |∇ s (x, y)| of an image is expressed as follows:

-

First, a weighting function ω σ is defined as follows:

where DoG σ (x, y) is the difference of two Gaussian functions with σ:

-

Then, the suppression term t (x, y) for each pixel is calculated by convolving the gradient module with the weighting function ω σ (x, y):

-

Finally, we define the suppressed gradient value B(x, y) and T(x, y) as follows:

In equations (9–10), suppression term t(x, y) is small for the boundary edges and large for the texture edges. Here, α t is the suppression strength factor, which directly influences the suppression effect. B(x, y) can successfully assign high values in the boundary areas, T(x, y) assigns high values in the texture areas. So, B(x, y) and T(x, y) are regarded as boundary and texture templates, respectively.

2.4 Surround suppression variational retinex model

TV regularization strength of both illumination and reflectance should be associated with the spatial information of the surround suppression mechanism. This strategy preserves the boundary areas in the illumination so that the halo artifacts are prevented. It preserves the textural details in the reflectance to prevent illumination compression, which contributes to the contrast enhancement in the result. Besides, strong regularization strength is enforced to eliminate the uneven intensity in the homogeneous areas. The proposed adaptive surround suppression based variational Retinex model can be shown as follows:

where α, μ, and β in equation (11) are the same as in equation (3), w and v are weight parameters, which control the TV regularization strength of the reflectance and illumination, and are defined as follows:

Here, θ(x) is a monotone decreasing function. We refer to the analysis in [33] and define the modified function:

where K is the threshold that differentiates the boundary edges from the suppressed texture edges. In this paper, K is set to be equal to the 90% value of the cumulative distribution function of B k(x, y) or T k(x, y).

2.5 Split Bregman algorithm for the proposed model

Since two unknown variables exist in equation (11), an alternating minimization scheme is used to minimize the cost function (11). The minimization problem (11) is converted into two subproblems as follows:

The split Bregman algorithm [26, 34] is a very efficient way to solve the minimization subproblem in (14) and (15). By introducing a new variable, the subproblem (14) is converted into the following constrained problem:

In order to solve the constrained problem, an L2 penalty term is added to get an unconstrained problem:

where λ is a nonnegative parameter, and b 1 is the Bregman parameter. The computation procedure is detailed in Algorithm 1.

3 Algorithm 1

Step 1: Initialize \( {u}^0=0,\ j=0,\ \mathrm{and}\ {b}_1^0=\left({b}_{1 h}^0,{b}_{1 v}^0\right)=0, \) where “h” and “v” stands for the horizontal axis and the vertical axis, respectively.

Step 2: Firstly, given u j and \( {b}_1^j \), update d j + 1 as follows:

where shrinkage is the soft shrinkage operator, defined as

Secondly, update u j + 1 by minimizing the differentiable optimization problem in the following:

which can be solved by the Fourier transform and Gauss-Seidel method, etc. Here, we use the Fourier transform to solve it by

where F is Fourier transformation, F –1 is the inverse Fourier transformation, and F * is conjugated Fourier transformation. P is denoted as

Thirdly, update as follows:

Step 3: If (‖u j + 1 − u j‖/‖u j + 1‖) ≤ ε u , r i + 1/2 = u j + 1, r i + 1 = min(r i + 1/2, 0); otherwise, go to Step 2.

The solution of minimizing subproblem in (15) is same as the solution of minimizing subproblem in (14). By introducing a new variable, the subproblem (15) is converted into the following constrained problem:

In order to solve the constrained problem, an L2 penalty term is added to get an unconstrained problem:

where λ is a nonnegative parameter, and b 2 is the Bregman parameter. The computation procedure is detailed in Algorithm 2.

4 Algorithm 2

Step 1: Initialize \( {w}^0=0,\ j=0,\ \mathrm{and}\ {b}_2^0=\left({b}_{2 h}^0,{b}_{2 v}^0\right)=0, \) where “h” and “v” stand for the horizontal axis and the vertical axis, respectively.

Step 2: Firstly, given w j and \( {b}_2^j \), update t j + 1 as follows:

where shrinkage is the soft shrinkage operator, defined as

Secondly, update w j + 1 by minimizing the differentiable optimization problem in the following:

Here, we use the Fourier transform to solve it by

where F is Fourier transformation, F -1 is the inverse Fourier transformation, and F * is conjugated Fourier transformation. Q is denoted as

Thirdly, update as follows:

Step 3: If (‖w j + 1 − w j‖/‖w j + 1‖) ≤ ε w , l i + 1/2 = w j + 1, l i + 1 = max(l i + 1/2, s); otherwise, go to Step 2.

Finally, we give the overall procedure for solving the proposed model in the following:

-

1.

Given that the input image s, initialize l 0 = s. For i = 0, 1, 2,……

-

2.

Given l i, solve the subproblem (14) to get r i + 1/2 by using Algorithm 1. Then, update r i + 1 by r i + 1 = min(r i + 1/2, 0)

Given r i + 1, solve the subproblem (15) to get l i + 1/2. Then, update l i + 1 by l i + 1 = max(l i + 1/2, s).

-

3.

Go back to (2) until (‖r j + 1 − r j‖/‖r j + 1‖) ≤ ε r and (‖l j + 1 − l j‖/‖l j + 1‖) ≤ ε l are satisfied.

4.1 Contrast gain and gamma correction

Most Retinex based enhancement algorithms estimate the reflectance component as the final result. However, reflectance should be within [0~1], which means that it cannot completely contains the whole information of input image. Moreover, illumination component represents ambience information [35, 36].

In order to preserve the naturalness as well as enhance details, we add a contrast gain for reflectance and a gamma correction operation for illumination after the decomposition. These two steps are processed channel by channel. Contrast gain is defined as follows:

In this step, input image is first divided into non-overlapping 12*12 sub-blocks. σ(x, y) is corresponding variance within current sub-block, σ max is maximum variance of all sub-blocks. R max is maximum pixel value. λ is an adjusted parameter which is set 0.1 empirically.

Due to lighting geometry and illuminant color, images captured by cameras may introduce color cast. We proposed an assumption that color variance is Laplacian-based distributed. So, gamma correction is defined as follows:

where W is the white value (it is equal to 255 in an 8-bit image), (i, j) is corresponding illumination location in region S, S represents a region which is selected from the top 0.1% brightest values in its dark channel, D (i, j) is the color difference. max and min represent maximum and minimum values, μ and b are the location and scale parameters of Laplacian distribution. Here and the final result is then given as follows:

5 Experimental results and evaluation

5.1 Subjective assessment

In our experiments, a large number of images were tested. Due to space limitations, we have only shown some of the test images in Figs. 3, 4, 5, 6, 7, and 8. Moreover, the experimental results were calculated using MATLAB R2011a under Windows 7. The parameters were set as α = 4, β = 0.06, μ = 0.04, λ = 0.02, and γ = 0.02. In this paper, the proposed algorithm is compared to the existing MSR [13], LHE [7], AL [6], ALTM [37], GUM [9], SARV [28], and NPE [36] methods. Clearly, MSR, LHE, AL, and GUM gave over enhanced images, simultaneously saturating the resulting images much further and causing color distortion. In addition, MSR and AL introduced serious color distortion. GUM caused serious halo artifacts on the boundary edges, especially for passage.bmp and settingsun.bmp. SARV method produced unnatural looking results with some of the shadows having higher brightness values than some of the naturally brighter areas. And block artifacts are apparent on some texture edges. Compared with the above methods, NPE and SSVR give very natural-looking images. The enhanced image reveals a lot of details in the background regions as well as other interesting areas.

5.2 Objective assessment

Tables 1, 2, and 3 show quantitative comparisons on the six test images. In this paper, the average values of contrast values, discrete entropy values and COE values by each method are considered as the parameter of objective assessment. From Table 1, the proposed SSVR gets the highest contrast. It means that proposed SSVR obtains the highest visibility level from the original images. The discrete entropy indicates the ability of extracting information from an image. In Table 2, the entropy value of LHE and GUM outperform other algorithms. NPE has the lowest entropy values among the five algorithms, in accordance with its performance on detail enhancement. However, higher entropy values do not always get better enhanced performance, both objective assessment and subjective assessment should be concomitantly considered [38, 39]. Observer intuitive feelings are the most direct method to evaluate the image quality. In order to get a more comprehensive evaluation of the quality,we defined a new evaluation index named color-order-error (COE), which is used to measure the level of color constancy preservation. It is defined as:

where σ is standard deviation, t and s are target image and source image, a and b are channels of CIELab color space. In this paper, the original images are source images, the enhanced images by different methods are target images. From the definition of COE, we can see that the smaller the COE value is, the better the color order is preserved. Table 3 shows our algorithm can most successfully preserve the color constancy.

6 Conclusions

This paper proposes a surround suppression variational Retinex enhancement algorithm for image enhancement of non-uniform illumination images, which not only enhances the contrast of the image but also preserves the color constancy. Surround suppression mechanism, which performs well in accordance with constraining the TV regularization strength of the reflectance and illumination. Moreover, in order to prevent light flickering caused by varying apparently scenes, a Laplacian-based gamma correction is conducted on the estimated illumination, which contributes to the color constancy preservation in the output image result. Experimental results demonstrate that the proposed algorithm is better than the existing algorithms.

References

L Tao, Tompkins R, Asari V K. An illuminance-reflectance model for nonlinear enhancement of color images[C]//, 2005, p. 159

T Celik, T Tjahjadi, Contextual and variational contrast enhancement.[J]. IEEE Trans Image Process A Publication of the IEEE Signal Processing Soc 20(12), 3431–3441 (2011)

Y Lu, F Xie, Y Wu et al., No reference uneven illumination assessment for dermoscopy images[J]. Signal Process Letters IEEE 22(5), 534–538 (2015)

J. Tao, Y. Pingxian, G. Peng, et al. An enhancement algorithm for video surveillance image based on multi-scale Retinex[C]// International Conference on Computational and Information Sciences. IEEE Comput Soc, 22-25 (2011)

C Wen, J Lee, Y Liao, Adaptive quartile sigmoid function operator for color image contrast enhancement color and imaging conference. Soc Imaging Sci Tech 2001, 280–285 (2001)

F. Drago, K. Myszkowski, T. Annen. Adaptive logarithmic mapping for displaying high contrast scenes. Computer Graphics Forum. Blackwell Publishing, Inc, 2003, 22, 419-426.

TK Kim, JK Paik, BS Kang, Contrast enhancement system using spatially adaptive histogram equalization with temporal filtering. IEEE Trans Consum Electron 44, 82–87 (1998)

CC Ting, BF Wu, ML Chung et al., Visual contrast enhancement algorithm based on histogram equalization. Sensors 15, 16981–16999 (2015)

G Deng, A generalized unsharp masking algorithm. IEEE Trans Image Process 20, 1249–1261 (2011)

E Land, J Mccann, Lightness and Retinex theory. J Opt Soc Am 61, 1–11 (1971)

L Wang, L Xiao, H Liu, Z Wei, Variational Bayesian method for retinex. IEEE Trans Image Process 23, 3381–3396 (2014)

E Provenzi, LD Carli, A Rizzi, D Marini, Mathematical definition and analysis of the retinex algorithm. J Opt Soc Amer A 22, 2613–2621 (2005)

E Provenzi, M Fierro, A Rizzi, LD Carli, D Gadia, D Marini, Random spray retinex: a new retinex implementation to investigate the local properties of the model. IEEE Trans Image Process 16, 162–171 (2007)

G Gianini, A Rizzi, E Damiani, A Retinex model based on absorbing Markov chains. Inf Sci 327, 149–174 (2015)

DJ Jobson, Z Rahman, GA Woodell, Properties and performance of the center/surround retinex. IEEE Trans Image Process 6, 451–462 (1997)

Z Rahman, DJ Jobson, GA Woodell, Multi-scale retinex for color image enhancement, in Proc. ICIP, 1996, pp. 1003–1006

ZU Rahman, DJ Jobson, GA Woodell, Retinex processing for automatic image enhancement. J Electron Imag 13, 100–110 (2004)

ZU Rahman, DJ Jobson, GA Woodell, Investigating the relationship between image enhancement and image compression in the context of the multi-scale retinex. J Vis Commun Image Represent 22, 237–250 (2011)

J Frankle, J McCann, Method and apparatus for lightness imaging. U.S. Patent 4 384 336, 1983, p. 17

JJ McCann, Lessons learned from Mondrians applied to real images and color gamuts, in Proc. 7th Color Imag. Conf, 1999, pp. 1–8

B Funt, F Ciurea, J McCann, Retinex in MATLABTM. J Electron Imag 13, 48–57 (2004)

K Horn, Determining lightness from an image. Comput Graphics Image Process 3, 277–299 (1974)

JM Morel, AB Petro, C Sbert, A PDE formalization of retinex theory. IEEE Trans Image Process 19, 2825–2837 (2010)

D Zosso, A unifying retinex model based on non-local differential operators. IS&T/SPIE Electronic Imaging. Int Soc Optics Photonics 8657, 865702–865702 (2013)

R Kimmel, M Elad, D Shaked, R Keshet, I Sobel, A variational framework for retinex. Int J Comput Vis 52, 7–23 (2003)

MK Ng, W Wang, A total variation model for retinex. SIAM J Imag Sci 4, 345–365 (2011)

Y Lu, F Xie, Y Wu, No reference uneven illumination assessment for dermoscopy images. IEEE Signal Processing Letters 22, 534–538 (2015)

X Lan, H Shen, L Zhang, A spatially adaptive retinex variational model for the uneven intensity correction of remote sensing images. Signal Process 101, 19–34 (2014)

H Li, L Zhang, H Shen, A perceptually inspired variational method for the uneven intensity correction of remote sensing images. IEEE Trans Geosci Remote Sens 50, 3053–3065 (2012)

W Wang, C He, A Variational model with barrier functionals for Retinex. Siam Journal on Imaging Sciences 8, 1955–1980 (2015)

G Papari, P Campisi, A Neri, A multiscale approach to contour detection by texture suppression. Proceedings of SPIE - The International Society for Optical Engineering 6064, 60640D–60640D-12 (2006)

C Grigorescu, N Petkov, MA Westenberg, Contour and boundary detection improved by surround suppression of texture edges. Image & Vision Computing 22, 609–622 (2004)

C Tsiotsios, M Petrou, On the choice of the parameters for anisotropic diffusion in image processing. Pattern Recogn 46, 1369–1381 (2013)

T Goldstein, S Osher, The split Bregman method for L1-regularized problems. Siam Journal on Imaging Sciences 2, 323–343 (2009)

B. Li, S. Wang, Y. Geng. Image enhancement based on Retinex and lightness decomposition. IEEE Int Conf Image Process, 3417-3420 (2011)

S Wang, J Zheng, HM Hu, Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans Image Process 22, 3538–48 (2013)

H Ahn, B Keum, D Kim, Adaptive local tone mapping based on retinex for high dynamic range images. Consumer Electronics (ICCE), 2013 IEEE International Conference on, 2013, pp. 153–156

C Chung-Cheng, T Chih-Chung, Contrast enhancement algorithm based on gap adjustment for histogram equalization. Sensors 16, 936 (2016)

C Ting, B Wu, M Chung, C Chiu, Y Wu, Visual contrast enhancement algorithm based on histogram equalization. Sensors 15, 16981–16999 (2015)

Acknowledgements

The authors would like to thank Image Engineering andVideo Technology Lab for the support.

Funding

This work was supported by the Major Science Instrument Program of the National Natural Science Foundation of China under Grant 61527802, the General Program of National Nature Science Foundationof China under Grants 61371132, and 61471043, and the International S&T Cooperation Program of China under Grants 2014DFR10960.

Authors’ contributions

ZR and TX came up with the algorithm and improved the algorithm. In addition, ZR wrote and revised the paper. HW implemented the algorithm of LHE, AL, and ALTM for image enhancement and recorded the data. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Rao, Z., Xu, T. & Wang, H. Mission-critical monitoring based on surround suppression variational Retinex enhancement for non-uniform illumination images. J Wireless Com Network 2017, 88 (2017). https://doi.org/10.1186/s13638-017-0872-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13638-017-0872-9