Abstract

We present an interactive, immersive, authentic role-play simulation designed to teach tertiary geoscience students in New Zealand to forecast and mitigate a volcanic crisis. Half of the participating group (i.e., the Geoscience Team) focuses on interpreting real volcano monitoring data (e.g., seismographs and gas output), while the other half (i.e., the Emergency Management Team) forecasts and manages likely impacts, and communicates emergency response decisions and advice to local communities. These authentic learning experiences were aimed at enhancing upper-year undergraduate students’ transferable and geologic reasoning skills. A specific goal of the simulation was to improve students’ science communication through interdisciplinary team discussions, jointly prepared and delivered media releases, and real-time, high-pressure press conferences.

By playing roles, students experienced the specific responsibilities of a professional within authentic organisational structures. A qualitative, design-based educational research study was carried out to assess the overall student experience and self-reported learning of skills. A pilot and four subsequent iterations were investigated.

Results from this study indicate that students found these role-plays a highly challenging and engaging learning experience, and that they reported improved skills. Data from classroom observations and interviews indicate that the students valued the authenticity and challenging nature of the role-play, although personal experiences and team dynamics (within and between the teams) varied with the students’ background, preparedness, and personality.

During early iterations, observations of, and interviews with, students and instructors indicated that some of the goals of the simulation were not fully achieved because of: A) lack of preparedness, B) insufficient time to respond appropriately, C) problems with the appropriateness of roles and team structure, and D) poor communication skills. Small modifications to the design in Iterations 3 and 4 were associated with an overall improvement in the students’ skills and in the extent to which the goals were reached.

A communication skills instrument, the self-perceived communication competence scale (SPCC), was used to measure self-reported communication competence before and after the simulation in the last two iterations. The instrument recorded positive shifts in all categories of self-perceived ability, with the largest shifts seen in students who participated in press conferences. Future research will aim to adapt this curriculum to new volcanic and earthquake scenarios.


Introduction

During natural hazards crises, geoscientists and emergency managers work together to assess the scientific, commercial, environmental, political and cultural interests of their community (Fiske 1984; Voight 1990). Events such as the 1985 Nevado del Ruiz lahar (Sigurdsson and Carey 1986), the 1991–1992 eruptions of Pinatubo (e.g., Tayag et al. 1996), the ash impacts of the 2010 eruptions of Eyjafjallajökull, Iceland (Harris et al. 2012), and the 2009 L’Aquila earthquake (Jordan et al. 2011) highlight the difficult and crucial role that scientists play in disaster communication and mitigation. Effective communication is important during volcanic crises (IAVCEI Subcommittee for Crisis Protocols 1999; Haynes et al. 2007) and has been identified as a key disaster risk reduction practice (i.e., a skill or competency) (Integrated Research on Disaster Risk (IRDR) 2011).

The geoscience and engineering communities have long recognized the deficit of quality teamwork and communication skills in their graduates (Dannels 2002; Heath 2000, 2003; Ireton et al. 1996; McMasters and Matsch 1996; Sageev and Romanowski 2001; Seat et al. 2001). These competency gaps in geoscience and engineering students specifically concern information sharing and cooperation, as well as ethical decision-making and behaviour (Meier et al. 2000). To acquire and refine these skills, research suggests that students need to practise them in authentic scenarios (Cox et al. 2012).

In this paper, we report on and discuss the iterative design of the Volcanic Hazards Simulation (i.e., an authentic, scenario-based role-play simulation) which was designed with the explicit purpose of teaching the skills and concepts necessary to predict and mitigate a volcanic eruption (Table 1). Our research aims focused on the evaluation of the simulation using a design-based research method (Barab and Squire 2004; Edelson 2002; Sandoval and Bell 2004). Design-based research provides a flexible, interconnected, complex line of inquiry that is required to understand the environment, input, social dynamics and outputs of complex learning activities (Brown 1992). The design method is guided by theoretical and practical considerations and supported by student and instructor feedback in order to develop a learning activity which challenges students’ knowledge and skills, but does not overwhelm them to the point of failure.

Background

The effectiveness of role-play and simulation for learning has been reported in a number of studies (e.g., DeNeve and Heppner 1997; van Ments 1999). Both have been found to require more active participation from students than traditional, lecture-based teaching techniques, and both are intended to teach practical and theoretical skills that transfer to different future situations (e.g., Lunce 2006; Roth and Roychoudhury 1993). In this section, we define role-play and simulation, review previous studies, and outline the basic design of the Volcanic Hazards Simulation.

Previous studies

We define ‘simulation’ as a learning experience that occurs within an imaginary or virtual system or world (van Ments 1999), and ‘role-play’ as a learning experience that emphasises the importance and interactivity of roles within pre-defined scenarios (Errington 1997, 2011). Research shows that role-play and simulation improve student attitudes towards learning (DeNeve and Heppner 1997; Livingstone 1999; Shearer and Davidhizar 2003); interpersonal interactions (Blake 1987; van Ments 1999; Shearer and Davidhizar 2003); generic transferable skills, including problem-solving and decision-making (Errington 1997; Barclay et al. 2011), communication (Bales 1976; Hales and Cashman 2008; van Ments 1999) and teamwork (Maddrell 1994; Harpp and Sweeney 2002); discipline-specific knowledge (DeNeve and Heppner 1997; Livingstone 1999); and volcanic eruption forecasting skills (Hales and Cashman 2008; Harpp and Sweeney 2002).

This simulation is a capstone activity that emulates and integrates pedagogical elements (Table 2) from many of the above studies. The research presented here shows how a design-based approach can be used to iteratively improve and assess a simulation in which student learning is a product of many elements. Table 2 lists the scenarios, settings, conditions and accompanying design variables that can be used in any role-play; the italicised parts of the table were identified as relevant to the design of the Volcanic Hazards Simulation.

Basic design of the volcanic hazards simulation

Our original design of the simulation was based on Harpp and Sweeney’s (2002) Eruption Simulation. Multiple volcanic monitoring datasets were sourced, ‘streamed’ in real time, and presented in several labelled tabs on a custom-designed website interface (Figure 1). These datasets included seismic activity, ground deformation, visual surveillance of eruptions (including imagery and auditory data), ash distribution and thickness, volcanic gases (CO2 and SO2), weather conditions, and social media. We adapted the Eruption Simulation to focus more specifically on the roles, protocols and challenges from an authentic New Zealand geoscience and emergency management perspective.

Our understanding of these roles and protocols was obtained via interviews and consultation with volcanic hazards professionals at the Institute of Geological and Nuclear Sciences (GNS Science) and the Civil Defence and Emergency Management sector (CDEM) of New Zealand.

It should be noted here that emergency management protocols, roles and responsibilities of scientists, cultural and societal expectations, and other important aspects of disaster management are unique to each nation and setting. This simulation models the New Zealand organisational structure and responsibilities, largely because the participating students are potential future employees of these New Zealand organisations. A review of the New Zealand emergency management paradigm can be found in Britton and Clark (2000) and Hudson-Doyle et al. (this issue).

The Tongariro Volcanic Complex (e.g., Cole 1978; Hobden et al. 1999) was chosen as the host volcano because it is a primary field site for students enrolled in a fourth-year physical volcanology field trip (see Table 3: Pilot, Iterations 2 and 3). As in the Eruption Simulation (Harpp and Sweeney 2002), the volcanic activity progresses from a quiescent stage, through small eruptions (i.e., ‘unrest’), to a very large climactic event based on the 1991 Mt Pinatubo eruptions (e.g., Wolfe and Hoblitt 1996). This eruption scenario was chosen because existing monitoring data were available to stream in ‘real time’, because it progresses through forecasting stages marked by precursors that students can identify, and because it ends in a ‘blockbuster’ climax that excites the participants.

The simulation consists of a series of complex, interconnected team tasks. One team records, processes and interprets the monitoring data described above, while the other manages and mitigates the effects of the volcanic eruptions on local communities. This requires effective use of the students’ cognitive skills (Bloom et al. 1956; Isaacs 1996; Lord and Baviskar 2007) and effective communication within each team, between the two teams, and to the local communities. For example, one task might require students to weigh the impacts of a volcanic event (e.g., an ash-producing eruption that deposits ash on road networks) and judge which impact(s) have the highest priority. The simulation is typically run in a single 4- to 6-hour session with 15 to 40 students. At various stages during the simulation, injects (scripted messages or events) are introduced to trigger group tasks. The target audience is upper-year undergraduate (300-level) and postgraduate (400-level and Masters) geoscience and hazards management students. Assessment of the simulation differs slightly between the courses in which it is offered; it typically comprises 5–10% of the final course mark. Suggestions for assessment can be found in the Instruction Manual, available on the VHUB website (https://vhub.org/resources/3395).
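The paper does not describe how the website released data or injects during a session. As a purely hypothetical sketch of the pacing idea, the Python below merges time-stamped monitoring records and scripted injects into one schedule, mapping scenario time onto classroom time with an assumed compression factor. The `Event` type, `build_timeline`, `released_by` and the `compression` parameter are illustrative names, not part of the simulation.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Event:
    sim_time_h: float  # hours since the start of the scenario (hypothetical field)
    kind: str          # "data" for a monitoring record, "inject" for a scripted prompt
    payload: str


def build_timeline(data: List[Event], injects: List[Event],
                   compression: float) -> List[Tuple[float, Event]]:
    """Merge records and injects into (classroom_minute, event) pairs.

    `compression` is an assumed speed-up: scenario hours per classroom hour.
    """
    if compression <= 0:
        raise ValueError("compression must be positive")
    merged = sorted(data + injects, key=lambda e: e.sim_time_h)
    return [(e.sim_time_h / compression * 60.0, e) for e in merged]


def released_by(timeline: List[Tuple[float, Event]], minute: float) -> List[Event]:
    """Events a team would have received by a given classroom minute."""
    return [e for t, e in timeline if t <= minute]


if __name__ == "__main__":
    data = [Event(0, "data", "seismic"), Event(24, "data", "SO2"), Event(48, "data", "tilt")]
    injects = [Event(36, "inject", "media enquiry")]
    timeline = build_timeline(data, injects, compression=24)  # one scenario day per hour
    for minute, event in timeline:
        print(f"{minute:6.1f} min  {event.kind:6s}  {event.payload}")
```

With one scenario day per classroom hour, the inject at scenario hour 36 is released at classroom minute 90. Changing a single compression factor is one simple way the pace of a session could be tuned, although the real simulation may have handled pacing differently.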

Over a three-year period (2010 to 2012), we developed and adapted the simulation iteratively, guided by a design-based method and by educational theory and practice, to achieve the desired learning goals (Table 1).

Methods I. Theoretical framework

The design of the simulation was guided by three main areas of science education and educational psychology research:

1. Motivational theories, in particular the concept of subjective task value (e.g., Eccles and Wigfield 2002; Eccles 2005; Ryan and Deci 2000);
2. Cognitive load theory (e.g., Chandler and Sweller 1991; Sweller et al. 1998); and
3. Team and group behaviours (e.g., Michaelsen and Sweet 2008; Turner 2014) and organisational theory (e.g., Argote et al. 2000).

In the later iterations of the simulation we also focused on the development and assessment of communication skills and utilised research from the risk communication literature to guide our design process (e.g., Morgan et al. 2002; Todesco 2012; Tully 2007).

Motivation

An educational psychology concept relevant to our design is the theory of motivation. At its simplest, motivation is the drive to strive for something. An individual’s motivation in educational endeavours stems primarily from feelings of recognition, responsibility, personal growth, autonomy and overcoming challenges (Beard 1972). Situated learning experiences, which include authentic contexts and activities, multiple roles and perspectives, and supported collaborative construction of knowledge, are motivating and effective means of teaching complex tasks (Herrington and Oliver 1995). If the learning activity is realistic and the tasks are perceived as personally useful, each student will (consciously or subconsciously) weigh the value of participating as part of his or her long-term academic and professional development (Eccles and Wigfield 2002; Eccles 2005). The design components most concerned with motivation were the perceived challenge and authenticity of the tasks, roles and social interactions.

Cognitive load

The theory of how an individual manages a set of tasks in working memory is referred to as cognitive load theory (Chandler and Sweller 1991; Sweller 2003). Research shows that when students undertake learning tasks, many intrinsic and extrinsic factors affect their motivation, perceptions and performance (Eccles and Wigfield 2002; Matsumoto and Sanders 1988). Characteristics of the task itself, such as its complexity (van Merriënboer et al. 2006; Kirschner et al. 2009), perceived difficulty (Kuhl and Blankenship 1979; Slade and Rush 1991), length (Peterson and Peterson 1959) and interconnectivity (van Merriënboer et al. 2006), all affect the cognitive load of a given learning activity. Authentic, complex learning tasks are often overwhelming for novice learners (i.e., students who are learning a new concept or skill; van Merriënboer and Sweller 2005), because they commonly require the learner to integrate multiple interconnected tasks. The design components of the simulation most concerned with cognitive load were those developed to manage the pace of the simulation and to prepare students so that the tasks presented were not overwhelming.

Organisational behaviour

Another major influence on our design stemmed from management and organisational studies of team-based learning and performance. As a complex simulation, the learning activity is heavily influenced by the behaviour of individuals within an interactive team environment. An effective team must have several key interdependent elements: 1) team members must be aware of and share task- and value-based goals (Chou et al. 2008); 2) team members must be worthy of trust (trustworthiness) and show trust in other members (trustfulness) (Webber 2002; Chou et al. 2008); and 3) members need to feel satisfied with the other members’ work (Chou et al. 2008), which results in a joint potency, or belief in the team (Campion et al. 1996). Negative team behaviour arises when there is a substantial rift in any of these elements. The design components concerned with team dynamics were preparing students for the teamwork skills needed (through preparation activities) and structuring and clarifying the tasks presented so that students could tackle the challenge in a manageable way.

Iterative design research – identifying and manipulating design variables

Design-based research (‘design experiment’ or ‘development research’) is theoretically framed, empirical research of learning and teaching based on particular designs for instruction (Barab and Squire 2004; Sandoval and Bell 2004; Edelson 2002). Design-based research provides a flexible, interconnected, complex line of inquiry that is required to understand the environment, input, social dynamics and outputs of the simulation (Brown 1992) and allows the participants’ behaviour (actions, decisions) and their perceptions of these behaviours to be incorporated into the development of the learning activity (e.g., Barab and Squire 2004). We adopted this approach, as the simulation’s design was new and its effects were untested.

Like other design-based projects, the simulation aimed to achieve multiple goals, and the evaluation component of this project was aimed at enabling students to better achieve the intended learning goals (Table 1). Because of the complex nature of the simulation, each design modification was a compromise between scenario authenticity and the learning goals of the simulation. Figure 2 shows the evolution of the design foci across the iterations of the simulation.

Figure 2. The Volcanic Hazards Simulation design and iteration evolution. The development of the simulation focused on three major components: reducing the individual and collective cognitive load of the students (Iterations 1 and 2), increasing role and team authenticity (Iterations 1 to 3), and improving the students’ communication skills (Iterations 3 and 4).

Communication competence

Research shows that methods such as role-playing, modelling, coaching, rehearsal, reinforcement and feedback enable individuals to improve their communication competence (Richmond et al. 2013). Feedback, modelling and rehearsal are all integral to the simulation, and it became increasingly clear that communication competence was vital to the simulation’s success. Communication draws on content knowledge and interpersonal skills to convey information appropriately. Our understanding of communication competence and communication apprehension was guided by the work of McCroskey (e.g., McCroskey et al. 1977; McCroskey 1982). McCroskey and McCroskey (1988) investigated communication competence through self-reported evaluation of one’s ability to communicate (self-perceived communication competence), and developed a self-perceived communication competence scale (SPCC) to obtain information about how competent people feel when communicating. The instrument considers two dimensions of communication: communication contexts (public, meeting, group, and dyad, or pair) and receivers of the communication (strangers, acquaintances, and friends). Previous research has shown that a person’s self-perceived communication competence is substantially correlated with, and a reliable measure of, actual communication competence (McCroskey and McCroskey 1988).

Methods II. Evaluation of the volcanic hazards simulation

The many interacting variables at play in the simulation required careful data collection and analysis. We opted for a mixed-methods approach, involving both qualitative and quantitative data and analysis, to generate a rich dataset and to minimise the effects of confounds and biases in any one data collection method.

Study participants

Data were collected from both facilitators (i.e., instructors) and students in the simulation. Student participants (n = 108) were recruited from third- and fourth-year physical volcanology and hazards management courses that hosted the Volcanic Hazards Simulation as part of their curricula. The cohorts mixed American study-abroad students (Pilot and Iterations 2 and 3) and New Zealand students (Iterations 1–4) attending the University of Canterbury. Students varied in age, gender, nationality, race and geoscience background. All students were encouraged to participate, but were told explicitly that the study was confidential and that opting not to participate would not affect them negatively in any way. Instructors and facilitators of the simulation were study participants in that they provided data through formal interviews and informal discussions with the research team. Informed consent was obtained from all participants in line with the University of Canterbury Human Ethics protocols.

Data collection

The data collection is summarised in Table 2. To ascertain whether our design was effective, we collected and analysed student feedback (through interviews and questionnaires) and behaviour (self-reported through feedback and questionnaires, and observed by the researchers). This allowed us to characterise the success or failure of our design, judged from observed positive individual and team behaviours (sophisticated decision-making and effective communication and teamwork strategies) and from self-reported indications of engagement, enjoyment and changed perceptions. All iterations included a short demographic questionnaire that asked students basic questions about their nationality, level of study, gender, age, and geology background.

Observations

We collected approximately 37 hours of observation footage, including 17 hours of simulation activities and 20 hours of pre-simulation activities such as lectures and group exercises. Data collection was overt (Jorgensen 1989): observers were introduced to the students and the purpose of their presence was explained. Almost all iterations were run in a multi-room setting and required multiple observers. Observation summary notes (explained in more detail below) were taken in all iterations, with ‘checks’ at approximately 2-minute intervals. Written observations were relied upon for the Pilot and Iteration 2, as the video files were damaged during the 22 February 2011 Christchurch earthquake.

Observations of the Pilot were aimed at reviewing student and instructor behaviour with regard to the core elements of the simulation pedagogy. Several questions were set out that would help us characterise the learning experience: 1. What is the simulation trying to achieve? 2. What are the individual students’ behaviours? 3. How are the students behaving as a team? 4. How are the instructors behaving? 5. Are they supporting the learning goals that were set out? 6. What elements support learning and what elements may be detrimental to learning?

Observations of the remaining iterations focused more on specific design variables: 1. How does the pace of the tasks and data streaming affect the success of the simulation? 2. Are students prepared for the tasks presented to them? 3. Does the use of role-play positively affect the students’ learning experience and ensure a successful simulation? 4. Do the use and assignment of roles and the structure of the teams ensure a successful simulation? Are more or different roles needed? 5. How can the students’ teamwork and communication skills be characterised? For example, what elements of the simulation create learning opportunities for teamwork and communication? 6. Do the instructors support learning in these new designs?

Interviews

Because of the exploratory nature of the Pilot, we used informal, unstructured interviews immediately after the simulation to gather reflective experiences from both instructors and students. The interviews used a focus-group format, and some were conducted with the instructors present alongside the students. The two interviews lasted between 10 and 25 minutes. Several open questions were posed to the participants: 1. What do you think went well? 2. What did not go so well? 3. What can we improve on in the future? and 4. What would you keep the same? Unscripted follow-up questions were used to probe valuable lines of inquiry.

In Iteration 1 we used semi-structured one-on-one interviews (see Appendix 1A), with questions set out before the simulation. The interview topics were aimed at eliciting each student’s characterisation of their experience (in their team and assigned role). The interviews (n = 22) were conducted by two researchers (Dohaney and Hearne), audio-recorded, and later transcribed for analysis.

Two feedback questionnaires were designed for this study (see Appendix 1B for the Iteration 2 questionnaire (n = 26), and Appendix 1C for the questionnaire used in Iteration 3 (n = 22) and Iteration 4 (n = 20)). Both were administered directly after the simulation activities, and students were given unlimited time to complete them. The questions probed specific aspects of the pedagogy. Our focus in Iteration 2 was to understand the effects of the pace, the nature of the role-play, and the team structures.

Our focus in the questionnaires for Iteration 3 and 4 was to gather final feedback and probe into the students’ perceptions of how they communicated within the simulation.

Communication assessment

We used McCroskey and McCroskey’s (1988) self-perceived communication competence instrument (12-item questionnaire; Appendix 1D) to establish baseline measures of students’ self-perceived communication competence and to determine whether these changed after the simulation. The simulation targeted and included examples of the different communication contexts (e.g., public speaking and meetings), aside from interpersonal (dyadic) communication, so that we could investigate how successful each element of our design was.
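The SPCC crosses four communication contexts with three receiver types, so each of its 12 items can be treated as one context–receiver pair. The Python sketch below illustrates scoring under stated assumptions: responses are recorded as 0–100 competence estimates keyed by (context, receiver), each subscore is the mean of the items sharing that context or receiver, and the total is the mean of the three receiver subscores. Item wording, numbering and published norms should be taken from McCroskey and McCroskey (1988), not from this sketch; `spcc_scores` is a hypothetical helper.

```python
from itertools import product
from statistics import mean
from typing import Dict, Tuple

CONTEXTS = ("public", "meeting", "group", "dyad")
RECEIVERS = ("stranger", "acquaintance", "friend")


def spcc_scores(responses: Dict[Tuple[str, str], float]) -> Dict[str, float]:
    """Context, receiver and total subscores from 12 (context, receiver) responses."""
    expected = set(product(CONTEXTS, RECEIVERS))  # 4 contexts x 3 receivers = 12 cells
    if set(responses) != expected:
        raise ValueError("need exactly one response per context-receiver pair")
    if any(not 0 <= v <= 100 for v in responses.values()):
        raise ValueError("responses are assumed to lie on a 0-100 scale")
    scores: Dict[str, float] = {}
    for c in CONTEXTS:  # each context subscore averages 3 items
        scores[c] = mean(responses[(c, r)] for r in RECEIVERS)
    for r in RECEIVERS:  # each receiver subscore averages 4 items
        scores[r] = mean(responses[(c, r)] for c in CONTEXTS)
    scores["total"] = mean(scores[r] for r in RECEIVERS)
    return scores
```

Because every receiver subscore averages the same number of items, this total also equals the mean of all 12 responses. Rejecting incomplete response sets, rather than averaging whatever is present, keeps subscores comparable between the pre- and post-simulation administrations.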

Data analysis

Written observation summaries from each iteration were independently transcribed, collated, and analysed for specific lines of inquiry and themes. The primary goal was to document ‘what happened’ and to construct a timeline of student behaviours, with the accompanying perceived successes and failures of individuals and teams. These observations were then cross-referenced with the interview and questionnaire data, in order to match what we observed with how the students perceived those circumstances.

Analysis of the interviews focused on assessing overall student experiences and identifying quotations that were representative of the range and majority of perceptions and observed behaviour. Specifically, we aimed to characterise the design variables that influenced the overall success of the simulation and allowed students to achieve the primary learning goals.

In the Pilot, because of the low number of participants (n = 8), saturation (i.e., the point at which data analysis yields no new themes or insights; Bowen 2008; Corbin and Strauss 1990) was not achieved. However, based on experience with data from the successive iterations, we consider the feedback collected from the Pilot likely to be representative.

With a larger number of participants, Iteration 1 allowed us to explore the design and themes in more depth through interview data. Thematic and response saturation for these students was achieved through constant comparison of the original themes that emerged from the Pilot with each subsequent dataset. In Iteration 1, analysis of the 22 interviews began by characterising and grouping the responses to each question; these were categorised by the student’s role and team, and matched to their observed behaviour in the simulation. These results were later collated, compared and combined with the post-questionnaire results from Iterations 2, 3 and 4. In the subsequent iterations (2–4), themes were identified, sorted and further refined using the questionnaires. Data saturation for the specific themes (i.e., design variables) was reached by probing specific qualities of the simulation’s design and the students’ perceptions of their experiences.
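Coding itself is interpretive and was done by the researchers, but the saturation criterion behind constant comparison (stop when a new dataset yields no new themes) can be made concrete. The hypothetical Python helpers below take the set of theme labels coded in each successive dataset, in collection order, and report which themes are new at each step and where saturation is first reached. Theme labels are the analyst's, and the `run` parameter (how many consecutive datasets must add nothing new) is an assumption, not a criterion stated in this study.

```python
from typing import List, Optional, Sequence, Set


def new_themes(coded: Sequence[Set[str]]) -> List[Set[str]]:
    """For each dataset in order, the themes not seen in any earlier dataset."""
    seen: Set[str] = set()
    fresh_per_set = []
    for themes in coded:
        fresh = set(themes) - seen
        fresh_per_set.append(fresh)
        seen |= fresh
    return fresh_per_set


def saturation_index(coded: Sequence[Set[str]], run: int = 1) -> Optional[int]:
    """Index of the first dataset that begins `run` consecutive datasets adding no new themes.

    Dataset 0 only establishes the initial themes, so the search starts at index 1.
    Returns None if saturation is not reached.
    """
    if run < 1:
        raise ValueError("run must be at least 1")
    counts = [len(f) for f in new_themes(coded)]
    for i in range(1, len(counts) - run + 1):
        if all(n == 0 for n in counts[i:i + run]):
            return i
    return None
```

For five toy datasets whose last two add no new labels, `saturation_index` returns 3 (the fourth dataset); demanding three consecutive empty datasets instead returns `None`.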

Results of the SPCC measure in Iterations 3 and 4 were assessed against the criteria set out by McCroskey and McCroskey (1988; e.g., scoring, thresholds between high and low categories, and reliability and validity). Individual students’ pre- and post-simulation SPCC scores were examined, and factors that may have contributed to changes in efficacy were explored. The small cohorts in Iterations 3 and 4 (n = 18 and 19, respectively), together with their differing treatments regarding communication, meant that effect-size and t-test analyses were not appropriate. Further study will allow us to capture communication-focused data from larger populations of students.
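Without effect-size or t-test analyses, pre/post changes can still be tabulated descriptively. The hypothetical Python sketch below pairs each student's pre- and post-simulation scores by an assumed student identifier, computes post-minus-pre shifts per SPCC category, and summarises each category by the number of paired students, the median shift, and how many students increased. It performs no significance testing, and the data layout is an assumption.

```python
from statistics import median
from typing import Dict, Optional

Scores = Dict[str, Dict[str, float]]  # student id -> {category: score}


def spcc_shifts(pre: Scores, post: Scores) -> Scores:
    """Post-minus-pre change per category, for students with both measurements."""
    paired = sorted(set(pre) & set(post))
    return {
        s: {cat: post[s][cat] - pre[s][cat] for cat in pre[s] if cat in post[s]}
        for s in paired
    }


def summarise(shifts: Scores, category: str) -> Dict[str, Optional[float]]:
    """Descriptive summary only: n, median shift, and count of positive shifts."""
    values = [d[category] for d in shifts.values() if category in d]
    if not values:
        return {"n": 0, "median_shift": None, "n_increased": 0}
    return {
        "n": len(values),
        "median_shift": median(values),
        "n_increased": sum(v > 0 for v in values),
    }
```

Students with only one measurement are dropped from the pairing rather than imputed, and the median is used so that a single large shift in a small cohort does not dominate the summary.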

Results & discussion: evolution of the volcanic hazards simulation

In this section we present our results and discussion together, organised by theme. We do this for ease of reading, as results and discussion from one iteration are relevant to other iterations. Excerpts indicate the iteration from which the data were derived and the role the respondent played. Note that excerpts are typical or majority responses.

Generally, student feedback on the simulation experience illustrated that students valued its challenging nature and authenticity although their personal experience varied according to the roles they played and groups they worked with. One student recalled the benefits of the role-play itself: “I think it’s a really good simulation. I think you get put in a position to make super important decisions and I think, with having all the different teams and having to communicate with them in other rooms. It really adds to the realistic aspects of it”. (Iteration 1, Group Controller).

Other students noted that they enjoyed taking on roles that were new to them, which allowed them to explore new topics and gain new perspectives: “… [this role] was different and it opened my eyes to a different perspective” (Iteration 2, Ministry of Transport). Students reported an ability to see the bigger picture and how their role fit into it: “It was great for understanding how each small role is vital to the bigger picture understanding and management” (Iteration 2, Meteorologist).

From our observations and interviews, four key variables were found to be crucial for the success of the simulation: 1. student pre-simulation preparation; 2. the pace of the simulation; 3. role assignment; and 4. the effectiveness of the students’ teamwork and communication skills. The following sections describe each of these variables, the theoretical underpinnings of the design, and the instructor and student data that supported design improvements leading to more successful simulations. A summary of the design research and supporting evidence is shown in Table 4.

Pre-simulation preparation

Results

In Table 1A we identified several fundamental concepts and skills that students must bring into the simulation in order to play their roles effectively and perform its basic tasks. Because items 3–6 in Table 1A were unlikely to have been covered in previous geology courses, we anticipated this gap and created preparatory activities (i.e., additional readings, lectures and exercises) for the Pilot to give students the necessary background. The main preparatory exercise was the Hazard Map Activity, in which students created a detailed volcanic hazards map of the Tongariro Volcanic Complex from the literature. This activity was designed to give students the needed background on the region, community, place names, landscape, and geologic history of the volcano. Students were also required to designate and explain hazard zones on their maps with reference to the New Zealand Volcanic Alert Levels (GeoNet 2014), which the activity also introduced. In addition to the Hazard Map Activity, students were given a short lecture with basic instructions on what to do during the simulation.

Feedback from instructors and students in the Pilot indicated that more ‘background’ was required to help students communicate better and perform the simulation tasks (e.g., Instructor quote, Table 4). As a result, the background lectures became more detailed. As the complexity of the role-play increased (Iterations 1–4), student feedback indicated the need for more preparation materials, particularly regarding roles and responsibilities: “I [would have liked] a better definition of the role expectations beforehand, the simple descriptions did not do much for me” (Iteration 1, Public Information Officer). This led to a set of additional preparation materials that accompany the simulation: the Student Library (containing additional geology and emergency management background readings), Flow of Information Maps (see Figure 3), and Science Communication Best Practices (see Section Improving Students’ Communication Competence). Table 5 summarises the evolution of preparation activities in the simulation.

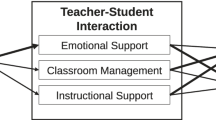

Figure 3. Flow of Information Maps. The roles, team structures, and ‘flow of information’ used in the volcanic hazards simulation: A. the Geoscience (a.k.a. GNS Science) team; B. the CDEM (Civil Defence and Emergency Management) team. Student quotes within the manuscript are matched to the roles shown here.

In Iteration 1, students were assigned roles and were expected to research their job titles, organisational protocols, and media release writing using online resources that we provided. With these preparation readings and instructional materials in place, preparation-related feedback in Iteration 1 improved relative to the Pilot. Improvements included better quality and efficiency in writing media releases and greater general awareness of what the roles and teams were intended to do during the crisis.

To set clearer expectations of role responsibilities, team structure, and required skill sets, we developed Role Profiles. These were implemented in Iterations 3 and 4 and were modelled on the New Zealand Civil Defence and Emergency Management Role Maps used for professionals (Ministry of Civil Defence and Emergency Management 2013). Each Role Profile lists the job’s purpose, its duties, whom the role reports to, whom it is responsible for, and the key competencies (i.e., skills) needed. One Iteration 4 student noted how the Role Profiles helped: “The description of the role was most helpful, [I had a] clear understanding of my role, responsibilities and whom I needed to communicate with” (Iteration 4, Duty Manager).

To improve preparedness and match the preparation readings to specific roles and responsibilities, we created a Student Library. Several students reported finding it helpful: “I think the literature was the most helpful because it provided us with the necessary background information for our roles” (Iteration 3, Department of Conservation). The other preparation activities (i.e., lectures and the Hazard Map Activity) also helped students develop expectations for their roles and tasks: “I felt very prepared. All my readings and researching beforehand really helped as I then had a better understanding of the team dynamics and the science” (Iteration 3, Volcanic Section Manager).

In Iteration 2, most students still showed both specific and general gaps in preparedness that affected their ability to achieve the learning outcomes. For example, students were unaware of the importance and sensitivity of Alert Levels (i.e., the general consequences of raising and lowering them), and some students could not perform the main tasks to which they were assigned (e.g., the Ash Specialist could not draw accurate ash maps, and the Infrastructure Manager was not aware of the main transport routes of the North Island of New Zealand). In Iterations 3 and 4, however, after we increased students’ prior knowledge through preparation activities, we observed fewer instances of cognitive overload, and students who completed the preparatory activities reported feeling prepared (e.g., the Iteration 3 Volcanic Section Manager quoted above).

Discussion

In the early iterations of development, we observed that some students lacked the content knowledge and skills needed for the simulation. We therefore inferred that preparation was a crucial part of the overall pedagogy, supporting all of the learning goals. An effective approach is to prepare students by scaffolding the discrete topics and skill sets before and during the simulation. Scaffolds include all devices or strategies that support students’ learning (Rosenshine and Meister 1992; Wood et al. 1976). Such support reduces a learner’s cognitive load and helps them achieve their goals; it can then be withdrawn gradually as students learn to cope with increased cognitive load (van Merriënboer et al. 2003). Preparation activities also served motivational purposes: to improve students’ self-efficacy (Schunk 1991) and to foster positive expectations before the simulation.

Despite the pre-simulation preparation activities, some students still showed weak geologic reasoning and content knowledge. We observed that this limited content knowledge inhibited the sophisticated problem-solving and synthesis needed to support the team’s complex reasoning. In Iteration 4, for example, the team discussing possible outcomes of the volcanic unrest needed to look up the definition of ‘Plinian’ (i.e., a classification term describing a very large-scale eruption). This gap contributed to the team’s inability to form a working hypothesis of the volcanic activity, and an instructor had to step in to assist the group. The team’s collective reasoning was therefore limited by the preparation of some of its individual members. This led us to examine role assignment more closely (Section Role Assignment).

The pace of the simulation

Results

The Pilot was largely improvised, with little structure (e.g., in roles, teams, and tasks). Its pace was very quick, with a simulated day lasting about one minute of real time. The pace of the simulation was defined by the rate of the streaming data, the number and length of pauses in the monitoring tasks, and the number and spacing of events (e.g., volcanic eruptive events).

While it was our intention for the simulation to be fast-paced, we did not want it to be so fast as to inhibit students’ ability to react to the scenarios.

Students and instructors made many suggestions for decreasing the pace and making the experience less overwhelming. In the Pilot, the students interviewed said that the pace severely affected their decision-making and their ability to react to events. During the Pilot, the instructors improvised two ‘pauses’ that gave students more time to prepare and organise their thoughts and strategies so that they could make comprehensive, measured decisions. For example, student-led working models of the volcano and evacuation plans require multiple datasets to be discussed, weighed, and scrutinised as a team and then presented to a critical audience during press conferences. Such higher-level cognitive tasks require time and careful consideration if they are to lead to well-thought-out decisions and actions. In none of the simulations we observed did a student or team make a drastically wrong decision.

Based on these observations and feedback, we slowed the pace by reducing the number of events (from 30 to 26) and adding pauses (from 2 to 4), allowing students more time to make quality decisions and communications. However, even at this ‘slower’ pace, many students continued to struggle to ‘follow along’ and missed important events. One student explained: “Yea, it was really fast. I would go off and talk to one of the [Emergency Management] people and then I would come back and there had been 2 cm of ash and we would be so stuck into what we were doing, that we would miss that” (Iteration 1, Public Information Officer 1). Other students suggested that the quality of their work suffered: “It was so rushed, sometimes, that it was like ‘OK, someone grab a pen and just write something’” (Iteration 1, Public Information Officer 2).

In Iteration 1, observations and feedback indicated that students needed more time when an event (e.g., an eruption) occurred, but not between events (i.e., during routine monitoring tasks); we therefore kept the data-streaming speed the same in all subsequent iterations. Based on the results from Iteration 1, we shortened the simulated running time by 24 days, reduced the number of events (from 26 to 24), and increased both the number (from 4 to 6) and the duration (from ~5–10 minutes to 10–20 minutes) of pauses.

Pauses were placed after important events to allow students to perform tasks, and their number and length were increased to give students more time to complete those tasks. In Iteration 2, the pauses became a respite from the quick pace, offering moments to reflect, respond and ‘enjoy’: “[The simulation] flew by. I was shocked, especially after the eruptions started. The pauses were awesome!” (Iteration 2, Field Geologist); “It was always nice to pause when something exciting happened” (Iteration 2, Ash Specialist). However, several students reported continued difficulty keeping up with the simulation and its demands. Optimising the pace ultimately came down to assessing how much time students needed to react to a given event. As one student noted: “The compressed time period was good, but, maybe more time was needed for processing each event. Maybe pause after every event?” (Iteration 2, Meteorologist).

In Iterations 1 and 2, several students noted that they performed ‘just fine’ under the circumstances: “It was good having a lot thrown at us” (Iteration 1, Welfare Officer) and “I thought it was perfect for me, because I was kept thinking the whole time, but not uncomfortably overwhelmed” (Iteration 2, Ministry of Economic Development). This suggests that, for some students, their skills were well matched to the tasks they needed to carry out.

In the final design of the simulation (used in Iterations 3 and 4), we reduced the number of events (from 26 to 24) and increased the duration of pauses (to 15–30 minutes) to provide more time for adequate reasoning. When asked specifically how the pace affected their learning and their abilities during the simulation, fewer students than in previous iterations (7 of 27) stated that they needed more time. We observed that the new pace allowed most students (20 of 27) to enjoy the excitement of the simulation, cope with the number of tasks, and respond accordingly. Many students noted ‘the excitement’: “It kept the adrenaline going and it was exciting” (Iteration 3, Group Controller); “… the feeling of being thrown into the thick of it, added to the overall experience” (Iteration 2, Ministry of Agriculture and Forestry).

Discussion

Authentic educational activities can be very powerful learning and motivational tools. We set out to design an exercise that was fast-paced and immersive, in which students react and make decisions under pressure, much as professionals do during a volcanic crisis. To determine whether the pace was optimal or detrimental to student learning, we explored how it affected students’ behaviours and perceptions.

Flow theory states that a task (or set of tasks) whose level of challenge is matched to the individual’s skills and capabilities can lead to highly motivating, immersive experiences (Csikszentmihalyi et al. 2005). Cognitive load theory suggests that an individual’s working memory can hold about seven elements but can manipulate only two to four at any given time (Kirschner et al. 2011; Sweller et al. 1998). When the processing capacity of a student’s working memory is exceeded, their cognitive system may become overloaded by the number of interacting elements to be processed (e.g., Paas et al. 2003). This manifests as an inability to react effectively to the situation (i.e., self-reported or observed signs of being ‘overwhelmed’ or ‘stressed out’) and results in poor performance. By increasing the time students were given to carry out the tasks, we aimed to decrease both the cognitive load of the individual student and the collective cognitive load of the team. The final design therefore included longer pauses and fewer volcanic events (24 in total) to provide more time for reasoning, reactions and decision-making.

Role assignment

An integral aspect of the design was the authenticity of the roles and teams (i.e., real-life science and emergency management protocols, structures, and responsibilities during volcanic crises, modelled on New Zealand roles and teams). These were fundamental to creating an authentic experience, and introducing and reinforcing how these disciplines interact during a crisis was an explicit learning goal. The final team and role structures are shown in Figure 3A and B. These structures were included in the instructions as a Flow of Information Map, which illustrated the roles, teams, and communication pathways. A more detailed description of communication and team dynamics is given in Section Structuring Communication & Teamwork Tasks.

Results

In the Pilot, there were no predefined roles. We observed that, to cope with the stress, students self-organized into roles that included ‘Data’ people and ‘Press’ people. Before this self-organization, the group was visibly disorganized and inefficient: students had no defined tasks or responsibilities, so no one led the group or divided the workload. The simulation was in danger of getting off track, and the instructors had to intervene to help students delegate tasks and responsibilities. These observations were the primary motivation for introducing a structured role-play.

Role-play-related feedback collected in the subsequent iterations showed that students valued the role and team structure of the exercise. This is highlighted by the following themes and representative excerpts.

1. Individual responsibilities: “I loved that we had roles… everyone had roles, they knew what they were supposed to be doing, like where to draw the line between you and your mate” (Iteration 1, Welfare Officer), and dividing the workload: “We were good at dividing up the tasks and finishing up our own responsibilities” (Iteration 2, Ministry of Health);
2. Role immersion: “it was good having different teams and everyone having a different role so that you could really, like, get into one aspect” (Iteration 1, Volcano geophysicist); and
3. Helping students to focus and stay on task: “Yes playing roles was helpful because we could focus just on that job and understand it” (Iteration 2, Public Information Officer).

Beginning in Iteration 1, we observed that some roles were more ‘pivotal’ than others (i.e., they greatly affected the success of the simulation). Pivotal roles were those involving leadership (Group Controller, Volcanic Section Manager), more quantitative tasks (Ash Specialist), and the communication links or liaisons between the teams (Public Information Managers, Infrastructure Manager and Duty Manager). To assign students to roles matched to their personality and skills, we designed and implemented a Role Assignment Questionnaire (Iteration 2; Appendix 2), which asked students about their background, interests, and comfort with leadership and communication situations. The main criteria of interest in the questionnaire were students’ self-reported abilities to lead, perform quantitative tasks, and communicate (i.e., the qualities required by the pivotal roles). Instructors used the questionnaire to assign roles that challenged students appropriately while keeping the exercise running smoothly, although they acknowledged that they did not always succeed.

Students in pivotal roles made major decisions and acted as the bridges through which information flowed between the teams; these tasks are crucial working parts of the simulation. These roles were carefully assigned in Iterations 3 and 4, which resulted in observed and self-reported positive team dynamics (e.g., “We all provided our individual ideas of events, contributing to all aspects” (Iteration 4, Infrastructure Manager)). The team leaders had many responsibilities. One team leader recalled all the tasks that he/she needed to perform: “[I had to] make final decisions, to hold meetings and synthesize the various datasets, to keep the team organized and running smoothly, to communicate with the media and the public officials” (Iteration 2, Volcanic Section Manager). We observed that students assigned to pivotal roles became overwhelmed during the simulations, and we concluded that some roles carried too many tasks for one student to carry out simultaneously. A Duty Manager role was therefore introduced in Iterations 3 and 4 to help manage and delegate tasks (Ministry of Civil Defence and Emergency Management 2002). The Duty Manager manages the staff and controls the information going to the Group Controller (the Emergency Management team leader), taking on responsibilities previously assigned to the Group Controller and thereby reducing the overall stress of that position. The instructors involved in Iterations 3 and 4 noticed a marked improvement in the effectiveness of communication within the Emergency Management team after this role was introduced.

We did not anticipate that the degree to which students became immersed in their roles could have a negative impact. The drawback of role immersion is that some students became inward-looking and ‘put their blinders on’: “It helped me learn more about my specific role, but I did not know what the others did” (Iteration 2, Volcano Geophysicist) and “I did well at focusing on my specific role, but I didn’t do well at thinking about other people’s roles” (Iteration 4, Department of Conservation).

The data collected for this study suggest that many students experienced role immersion (i.e., getting into the learning experience; e.g., Lessiter et al. 2001) and role identification (i.e., relating to the role, with a sense of belonging and commitment; e.g., Handley et al. 2006). For example: “… it was easy to slip into the role for the game” (Iteration 3, Department of Conservation); “People got really into their roles” (Iteration 2, Public Information Officer); “[My role] just seemed to fit naturally with me and I sort of eased my way in and ‘got stuck right in’, as the activity increased” (Iteration 2, Visual Surveillance). One student explained why this experience could be negative: “I think sometimes that if you get really into your role, you think ‘Oh yea, like everything depends on me and my role’, rather than looking about at what everyone else is doing, the effects of their roles and what they are monitoring” (Iteration 1, Gas Geochemist). While retaining the positive aspects of role immersion, we made efforts in later iterations to emphasize the team structure and best practices of teamwork.

Discussion

Students from every iteration reported that the opportunity to play a professional geologist or emergency manager for the afternoon was a positive aspect of the activity. Our observations suggest that assigning students to roles tailored to their interests and capabilities (using the Role Questionnaire) made them more likely to identify with their role and to operate successfully in it. Self-actualization (cf. Maslow 1943; Maslow 1970) is a powerful motivator; playing the role of a potential future career should therefore produce a highly motivating experience. Additionally, overcoming challenges encourages a sense of self-efficacy and autonomy in students, who may then choose to take on increasing challenges in the future (Kuhl and Blankenship 1979).

Student feedback indicated that students immersed themselves in these roles by researching them beforehand and ‘getting into it’ during the simulation. The roles were progressively better defined (with customized readings, skill sets, and responsibilities) with each iteration. Role assignment also helped address team-wide issues such as reducing conflict, balancing team workloads, and improving communication within and between the teams. These issues are discussed below.

Structuring communication & teamwork tasks

Results

Communication and teamwork were identified as primary learning goals of the volcanic hazards simulation (Table 1). We aimed to design a crisis scenario that provided a platform on which students could discover the quality, efficiency, urgency, and importance of these skills. In the Pilot, the instructors recognized the need for communication and teamwork skills: “Seeing the chain of command appearing and taking charge of what needed doing and seeing [the students] effectively disseminate the information. I think as soon as they realized that teamwork and communication was important, it worked a lot better” (Pilot, Instructor 2).

In Iterations 1–4, students described times when communication within and between teams was effective and times when it was not. When asked about teamwork, students frequently mentioned the ability of the team and its leaders to compile everyone’s thoughts and to listen to one another. Students also noted that good teamwork required supporting, and being supported by, the team leader: “We listened to the [Group] Controller and went with his/her calls” (Iteration 2, Public Information Officer); “We had a definitive leader in our team” (Iteration 2, Gas Geochemist); “It was a real collaboration, the leader didn’t just override us, he/she considered our opinions” (Iteration 2, Public Information Officer).

Other students disagreed, saying that team members frequently talked over one another and listened poorly, which made decisions harder to reach: “There were intense points when everyone was talking all at the same time. This obviously made it impossible to think and to make good decisions” (Iteration 2, Planning and Intelligence).

A primary source of stress was the amount of information that students in leadership roles needed to process. This occurred in both teams, across iterations. For example: “There were six people yelling [information] to [the team leader] and he/she would write it down, but I don’t think there was enough time for him/her to react to what was happening” (Iteration 3, Field Geologist). Effective communication pathways, or information ‘infrastructure’ (Celik and Corbacioglu 2010), are vital for a team to work efficiently. We aimed to engineer more effective communication pathways (through specific roles, increased awareness of team structure, and preparation activities) so that information transfer would be more straightforward, supporting successful decision-making and mitigation of the impending disaster. By scaffolding (i.e., providing guidance, support and advice) students’ communication skills before the simulation (delivery of best practices) and during it (instructor interventions), we observed higher-quality communications in later iterations. The best practices presented to students focused specifically on being terse and contextual, but many students reported that ‘packaging’ only the relevant information into a communiqué was a major challenge. Students were observed to achieve this learning outcome only through meaningful practice during the simulation (“I relayed only the ‘need-to-know’ information” (Iteration 3, Volcano geophysicist)). Providing strong team and role structure (through role assignment and pre-simulation preparation) improved communication pathways and efficiency, allowing team members to think about the quality of their communications.

When we provided more nodes (in our case, more students and more roles), we observed that students worked more efficiently, which improved the flow of information and reduced ‘bottlenecks’. This prevented major oral miscommunications, although inefficiency persisted during stressful periods, when information was not passed along quickly enough: “[The Emergency Management] team didn’t get stuff from the scientists fast enough” (Iteration 3, Infrastructure).

However, negative group dynamics persisted in some teams in later iterations (3 and 4), mostly because of the strong or overbearing personalities of individual students. Team leaders were chosen for their self-reported teamwork, leadership and confidence with public speaking, but some of them exhibited domineering behaviour. For example: “I wasn’t able to [speak at the press conferences] because of my ‘Boss’, who wanted to be in the spotlight the entire time” (Iteration 3, Volcano geophysicist). Other issues arose during team discussions: “Initially, I struggled slightly in discussion involvement as there were several more dominant team members” (Iteration 4, Infrastructure).

Communication efficiency improved over the course of each simulation: in every iteration, we observed students gradually becoming familiar with the correct protocols and comfortable with this structure. Nevertheless, students reported frustration with the inefficiency of their communications, particularly where information ‘bottlenecks’ formed: “The communication between us and the other geologists was hard because we had to communicate through [the team leader]. And [the team leader] was too busy with everything sometimes to relay it” (Iteration 1, Field Geologist). Building awareness of the importance and inherent difficulties of information transmission was one of our major learning goals (Table 1, goal 5), and students in all iterations reported this awareness.

The simulation consists of interdependent tasks (in which team members rely on one another to complete a task or outcome; Wageman 2000), which require a collaborative approach (Rousseau et al. 2006; Wageman 1995). We aimed to establish clear, transparent boundaries between roles, which allowed students to focus on their own tasks and broke complex work into discrete, manageable tasks.

In later iterations (2, 3 and 4), we used the Role Questionnaire to identify students with strong leadership skills and/or well-established geological knowledge and assign them to pivotal roles, so that the teams could manage tasks more efficiently and effectively and the interconnectivity of discrete tasks became less burdensome. Improving students’ awareness of what each role is responsible for and whom it should interact with (through the Role Profiles and the Flow of Information Maps (Figure 3), used in Iterations 3 and 4) reduced the time (and, consequently, the cognitive load) students spent acclimatising at the start, freeing them to tackle the tasks at hand.

Discussion

Effective teamwork skills were found to be fundamental to the success of the simulation, and there is a call for geoscience graduates to excel at communication and teamwork (e.g., Ireton et al. 1996). The two teams are large (8 to 20 members each, depending on class size) and rely on all members performing optimally. Group work is common in higher education, but activities with large teams (more than 8 or 10 students) are rarer, as they are structurally and logistically more difficult to facilitate.

A strong motivational aspect is the general attraction of working with others (i.e., relatedness; Ryan and Deci 2000) in an interdependent and supportive learning environment. Recognition among colleagues is a documented motivator (Maslow 1943) and generally creates some pressure to perform at one’s best (Cruz and Pil 2011; Hamilton et al. 2003; Slavin 1984). Peers, team leaders and instructors all observe each student’s contribution to the collective effort, and holding each student accountable in this way provides an incentive to teach and learn with one another.

Research shows that team learning can be more effective when it is structured and scripted (e.g., Dillenbourg 2002). We therefore implemented task division, job sharing, and the assignment of more students to pivotal roles, which appeared to reduce individual students’ cognitive load and free up resources for making and communicating decisions. We observed, and accepted, that some roles were less ‘important’ than others and that this may have created differing levels of motivation, accountability and group cohesiveness. More time working together as a team before the simulation allowed students to better assess one another’s capabilities. In general, student feedback was very positive about job sharing, helping each other out, and their interdependence.

Effective collaboration and crisis mitigation require team members to actively communicate and interact with each other, with the intention of establishing a common focus and achieving a common goal (Beers et al. 2006; Akkerman et al. 2007). Effective decision-making requires all team members to have access to all relevant information (Beers et al. 2006; Sellnow et al. 2009). To create and disseminate successful communications, valuable knowledge and information held by each team member must actively be shared (i.e., retrieving and explicating information), discussed (i.e., processing the information) and remembered (i.e., personalizing and storing the information) (Kirschner et al. 2009). The leader of the team was responsible for coordinating this effort.

There were examples of both strong and poor team leadership across the iterations. Team leaders who displayed poor leadership skills negatively affected the team’s decision-making ability, likely because they could not guide the decision-making process or help team members reach a consensus. In the future, we plan to improve team structure and cohesiveness through team-bonding preparatory activities. If team structure and norms are established before this intense experience, we may observe more positive and sophisticated behaviours.

Improving Students’ communication competence

Results

A primary learning goal of the Volcanic Hazards Simulation is to improve students’ communication skills. We propose that communication competence is influenced by several variables: A) one’s self-perceived ability to communicate (i.e., confidence); B) content knowledge of the geoscience topics being discussed; C) knowledge of communication best practices; D) appropriate perceptions (a.k.a., conceptions) of communication best practices; and E) communication experience.

This study used a self-report instrument, the Self-Perceived Communication Competence scale (SPCC; McCroskey and McCroskey 1988), as a proxy for communication competence. Figure 4A shows the overall pre- and post-simulation SPCC scores. Most students in both iterations scored within the average range of communication competence before the simulation (Iteration 3 = 11/19; Iteration 4 = 13/18). We examined several demographic factors for correlations with reported competence. Previous studies have indicated a possible relationship between gender and competence (university women report lower competence; Donovan and MacIntyre 2004). However, no correlations were found between competence and gender, age, geology background (i.e., number and nature of previous geology courses), or nationality.

Figure 4. Students’ self-reported communication competence scores from Iterations 3 and 4 of the simulation. A. Overall scores from both iterations, including the average score of each cohort. Thresholds for High (scores > 87) and Low (scores < 59) competence are indicated, as well as a line representing no change between pre- and post-scores (i.e., students above the line scored higher in the post-test). Iteration 3 had a wider range of student scores and more students with larger shifts. Students who participated in press conferences (outlined symbols) showed mostly positive, and some negative, changes, while students who did not participate showed no change in competence between pre- and post-tests. B. The SPCC instrument (Self-Perceived Communication Competence; McCroskey and McCroskey 1988) allows student responses to be broken down into subcategories. All subcategories, on average, showed positive shifts from pre-test to post-test in both iterations. Iteration 3 showed larger shifts in most categories, and both iterations showed notable shifts in the public speaking category.

By examining the changes between pre- and post-simulation scores, we can assess any effect the simulation may have had on students’ perceived communication competence. Overall, students reported improved competence following the simulation, with an average change of 4 points (±13), but 40% of the students from all iterations reported negative shifts. The large shifts from low to average or high competence (6 students from Iteration 3 and 4 from Iteration 4) occurred mostly amongst students who participated in press conferences (not all roles within the simulation are called upon to present information in front of the entire class; see Figure 4A). Conversely, the largest negative shifts (Iteration 3 = 2; Iteration 4 = 4) were also from students asked to present information at the press conferences. Students who exhibited little or no change in competence were predominantly those who did not participate in the press conferences. This suggests that students must experience the public speaking aspects of the simulation for their competence to be affected, a line of inquiry we will follow in further research. While these changes may seem small, previous studies that analysed changes in competence over an entire semester-long communication course reported changes of similar magnitude (e.g., Rubin et al. 1997).
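The change-score analysis above can be sketched in a few lines. This is a minimal illustration, not the authors' analysis code: it uses the High (> 87) and Low (< 59) thresholds quoted in the Figure 4 caption, and it assumes, based on our reading of the McCroskey and McCroskey (1988) scoring instructions, that a total SPCC score is the mean of the stranger, acquaintance and friend subscores. The function names and the example scores are hypothetical and are not data from this study.

```python
from statistics import mean, stdev

# Competence thresholds quoted in the Figure 4 caption
HIGH_THRESHOLD = 87  # total scores above this are "High"
LOW_THRESHOLD = 59   # total scores below this are "Low"


def spcc_total(stranger: float, acquaintance: float, friend: float) -> float:
    """Hypothetical helper. ASSUMPTION: the total SPCC score is the mean
    of the three receiver subscores (our reading of the published scoring)."""
    return (stranger + acquaintance + friend) / 3


def classify(score: float) -> str:
    """Return the competence band for a total SPCC score."""
    if score > HIGH_THRESHOLD:
        return "High"
    if score < LOW_THRESHOLD:
        return "Low"
    return "Average"


def summarise_shifts(pairs):
    """pairs: list of (pre, post) total SPCC scores, one tuple per student.

    Returns the mean and standard deviation of the post-minus-pre shifts,
    the fraction of students with a negative shift, and each student's
    (pre band, post band) pair.
    """
    shifts = [post - pre for pre, post in pairs]
    negative_fraction = sum(1 for s in shifts if s < 0) / len(shifts)
    band_changes = [(classify(pre), classify(post)) for pre, post in pairs]
    return {
        "mean_shift": mean(shifts),
        "sd_shift": stdev(shifts),
        "negative_fraction": negative_fraction,
        "band_changes": band_changes,
    }


# Hypothetical (pre, post) scores for illustration only
example = [(55.0, 68.0), (70.0, 66.0), (80.0, 80.0), (62.0, 90.0)]
summary = summarise_shifts(example)
print(summary["mean_shift"], summary["negative_fraction"])
```

Reporting the band change alongside the raw shift separates students who crossed a threshold (e.g., Low to Average) from those whose scores moved within a band, which is the distinction drawn in the paragraph above.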

Figure 4B illustrates the individual categories (communication context and receiver) for Iterations 3 and 4, pre- and post-simulation. Both iterations show positive shifts in every category, with the largest shifts in the public speaking context. Iteration 3 shows larger shifts in all categories.

Discussion

Iteration 3 was embedded in a field course that spent extensive time on the day before the simulation covering volcanic hazards topics and the best practices of science communication. Iteration 4 students received a lecture on science communication best practices, but it did not cover these practices at the same length or depth as Iteration 3. This is likely why Iteration 3 shows more positive shifts than Iteration 4, both for individual students and by category; however, more research is needed to confirm whether increased preparation affects perceived improvement.

The amplification (i.e., large shifts in competence) in some students’ scores may be due to a peer comparison effect, in which students compare their ability to communicate with that of their peers and give themselves a more negative or positive self-appraisal following the simulation. In addition, during the simulation students are given feedback and then additional opportunities to improve. Feedback is noted as vital for communication improvement in fields such as medicine (Maguire et al. 1996; Maguire and Pitceathly 2002), and it is likely that some participants received more meaningful feedback during the simulation than others.

The SPCC scale and other communication instruments (e.g., PRCA-24; McCroskey et al. 1985) are typically used in communication training courses, where participants receive extensive coaching in communication. Because students with high confidence in their abilities are more likely to engage in communication experiences (McCroskey et al. 1977), even a small number of positive shifts in perceived competence is a success. Future research will involve more students and will explore design and cohort factors that may affect students’ SPCC scores.

Improving students’ transferable skills for the professional sector and volcanic crisis management

Transferable skills (such as communication) are essential in the natural hazards sector, and they align with the needs of other sectors such as engineering, medical sciences and management sciences. Effective communication is identified as a key practice in creating more disaster-resilient communities (Bryner et al. 2012; Tully 2007). Crisis communication research and practice have focused on how the message is delivered and distributed (accurately and in a timely manner) and on its relevance during an event (Garcia and Fearnley 2012; Fearnley et al. 2012; Seeger 2006; Valenti and Wilkins 1995).

This study evaluated students’ abilities to communicate orally within their teams, between teams, and to the public in press conferences. The focus was on feedback-rich opportunities to establish best practices of communication (in content and delivery), not on other important aspects of risk communication such as trust-building or participatory and dialogic formats (e.g., town halls and community meetings). However, during and after press conferences there are opportunities to reflect on how communication can build resilience before or in response to these events, and we plan to develop new simulations that explicitly address the use of communication to build the trust, awareness and resilience of communities.

To acquire and perfect these skills, students need to practise them in authentic scenarios. Cox et al. (2012) suggest “engaging students in authentic projects that will allow them to explore the implications of their work for engineering and for other sectors (e.g., the larger society)… [to] engage in projects that relate to engagement with diverse stakeholders” (Cox et al. 2012, p. 68). The interconnected tasks in the role-play required the coordination and integration of constituent skills from the very beginning and pushed learners to quickly develop a holistic view of the whole task (a more expert-like strategy). Self-reported and actual communication skills are also linked to academic success (McCroskey et al. 1989), high self-esteem (McCroskey et al. 1977) and employment success (Krzystofik and Fein 1988). We therefore suggest that learning communication skills through authentic challenges can build confidence, performance and resilience in students who are likely to become part of the geologic and emergency management community.

Further research arising from this study will consider the connection (if any) between communication, geology expertise (i.e., content knowledge), geology and communication experience (i.e., number and nature of previous field, class and communication experiences) and other factors.

The results of our evidence-based educational study of a role-play simulation indicate that such exercises can be a robust and powerful tool for training entry-level geologists in the tertiary sector. They also offer an opportunity for the professional development of volcano monitoring scientists and emergency managers. Such exercises both convey the importance of communication during a disaster scenario and simultaneously up-skill this workforce in communication. Their design and efficacy warrant further research by our community.

Limitations of the study

Design-based research presents difficulties different to those of controlled or clinical experimental research. The interconnectivity of participants and outcomes makes segregation of causal relationships difficult. Further study is required to isolate and address specific causal relationships around student success at achieving specific learning goals.

In addition, it is important to acknowledge that the lead author (Dohaney) was heavily embedded in the learning environment (as a teacher, colleague and peer to the participants and the instructors). Validity is difficult to achieve if the researcher cannot identify their own biases and their control over which results are deemed relevant to the study (Creswell and Miller 2000). Because the researcher was intimately involved in the conceptualization, design, development, implementation and research of the pedagogical approach, ensuring trustworthy assumptions is a challenge (Barab and Squire 2004).

There were, however, safeguards to minimise bias and gain an objective perspective on the results and interpretations:

1. With the exception of the Pilot, the lead author (the researcher) did not participate directly (as a role-player) in the simulations. Dohaney was a passive observer and did not interrupt, alter or otherwise intervene in the activity.

2. In Iterations 3 and 4, Dohaney was the primary instructor of the communication best practices (provided to students before the simulation), as no other instructor with the necessary expertise was available to implement this component. However, the primary research question concerned not the delivery and transfer of information and skills in these preparation activities, but how that information was used during the simulation.

3. To avoid bias in data selection, representative quotes were chosen that illustrated both majority and minority perceptions. They were selected based on data ‘richness’ and how well they characterized the identified themes. For example, when students were asked about teamwork, those who described any range of experiences (positive, negative, descriptive, experiential, etc.) were identified and included in a ‘first pass’ of the data. Once saturation had been reached (i.e., no new items or themes emerged; Bowen 2008), the responses were culled and grouped by pedagogical theme, based on assessments of ‘majority’, ‘minority’ and depth.

Significant logistical factors not discussed in this manuscript are important for instructors intending to use a simulation. Major considerations include: A. the location, time and space available to the instructor; B. students’ background content knowledge and their group and communication skills; and C. the time commitment required from students and instructors, including all preparation activities (van Ments 1999).

Conclusions and recommendations

Our methodology and results suggest that pedagogy and design grounded in real-world expertise and best practices can lead to educationally useful learning strategies. This work shows that the simulation helped students develop transferable skills while simultaneously demonstrating their importance. In the role-play, students challenged themselves and moved outside their ‘academic comfort zone’ when required to rapidly synthesize new information and prior knowledge at an appropriate level of task difficulty. These types of learning activities can be of great value for teaching and learning transferable skills and for promoting students’ self-efficacy and motivation. This study concludes that:

1. The method of design and evaluation used in this study can act as a guide for other researchers and instructors to build, test and refine complex role-play simulations, allowing researchers to develop flexible, adaptive theory applicable to new contexts.

2. To achieve learning goals that are transferable to future careers, an authentic, immersive role-play must ensure that students are sufficiently prepared, that the pace of the role-play is appropriate, and that roles and team structure are well defined.

3. The simulation can elicit large shifts in individual students’ self-perceived communication competence and, overall, has a positive influence on students’ communication in different contexts (public speaking, meetings).

Designing and evaluating this role-play exercise was a time-intensive process. Few geoscience educators or practitioners can dedicate themselves entirely to exercise development; however, this work demonstrates how effective and motivating these activities are for students. We recommend that educators and researchers make a long-term investment in these kinds of learning activities, which add higher-level challenges for students who will soon enter the workforce and promote greater learning of skills than more static teaching methods.

Although the simulation was designed around a stratovolcano in the New Zealand context, similar simulations based on other hazards and contexts could be used to teach transferable skills in the geosciences anywhere. A companion simulation we developed, based on an eruption in the monogenetic Auckland Volcanic Field, has also been a very popular component of postgraduate courses at the University of Auckland. These simulations teach transferable skills in a more explicit and structured manner that is context-apparent and feedback-rich (i.e., scenario-based learning). Such scenarios allow students to practise new skills before entering the workplace, where they will be expected to have these graduate attributes. An open-source digital version of the full instruction manual and files can be found on the VHUB website (https://vhub.org/resources/3395). The instruction manual discusses the materials, facilitator notes, teachable moments, assessment, and pre- and post-simulation activities in detail, and provides practical ways to get the most out of the role-play. Every simulation produces meaningful, teachable moments, but owing to the complexity of our study we do not discuss them in detail here; please refer to the instruction manual. Further information and assistance with running a simulation can be obtained by corresponding with the primary author (Dohaney).

References

Akkerman S, Van den Bossche P, Admiraal W, Gijselaers W, Segers M, Simons R-J, Kirschner P (2007) Reconsidering group cognition: from conceptual confusion to a boundary area between cognitive and socio-cultural perspectives? Educ Res Rev 2(1):39–63, doi:10.1016/j.edurev.2007.02.001

Argote L, Ingram P, Levine JM, Moreland RL (2000) Knowledge transfer in organizations: learning from the experience of others. Organ Behav Hum Decis Process 82:1–8, doi:10.1006/obhd.2000.2883

Bales RF (1976) Interaction process analysis: a method for the study of small groups. 203. University of Chicago Press, Chicago

Barab S, Squire K (2004) Design-based research: putting a stake in the ground. J Learn Sci 13:1–14, doi:10.1207/s15327809jls1301_1

Barclay EJ, Renshaw CE, Taylor HA, Bilge AR (2011) Improving decision making skill using an online volcanic crisis simulation: impact of data presentation format. J Geosci Educ 59:85, doi:10.5408/1.3543933

Beard R (1972) Teaching and learning in higher education. 253. Penguin

Beers PJ, Boshuizen HPA, Kirschner PA, Gijselaers WH (2006) Common ground, complex problems and decision making. Gr Decis Negot 15:529–556, doi:10.1007/s10726-006-9030-1

Blake M (1987) Role play and inset. J Furth High Educ 11:109–119