Abstract

Background

Publication and outcome reporting bias is often caused by researchers selectively choosing which scientific results and outcomes to publish. This behaviour is ethically significant as it distorts the literature used for future scientific or clinical decision-making. This study investigates the practicalities of using ethics applications submitted to a UK National Health Service (NHS) research ethics committee to monitor both types of reporting bias.

Methods

As part of an internal audit we accessed research ethics database records for studies submitting an end of study declaration to the Hampshire A research ethics committee (formerly Southampton A) between 1st January 2010 and 31st December 2011. A literature search was used to establish the publication status of studies. Primary and secondary outcomes stated in application forms were compared with outcomes reported in publications.

Results

Out of 116 studies, the literature search identified 57 publications for 37 studies, giving a publication rate of 32 %. Original Research Ethics Committee (REC) applications could be obtained for 28 of the published studies. Outcome inconsistencies were found in 16 (57 %) of these 28 studies.

Conclusions

This study showed that the problem of publication and outcome reporting bias is still significant in the UK. The method described here demonstrates that UK NHS research ethics committees are in a good position to detect such bias due to their unique access to original research protocols. Data gathered in this way could be used by the Health Research Authority to encourage higher levels of transparency in UK research.


Background

Reporting bias occurs when the decision of how to publish a study is influenced by the direction of its results [1]. It is a well-recognized issue [2] that has recently come to the forefront of the public agenda in the UK due to the activities of the international “AllTrials” petition [3], and the writings of populist science authors such as Ben Goldacre [4]. Authoritative academic analyses have been conducted through the long-running work of organizations such as the Cochrane Collaboration and British Medical Journal (among others) who continue to generate significant public and media pressure [5].

In December 2011 the Health Research Authority (HRA) was established in the UK to protect and promote “the interests of patients and the public in health research” [6]. As part of this remit, the HRA was challenged to formulate proposals to improve transparency in health research [7]. The HRA incorporates the National Research Ethics Service (NRES), whose research ethics committees (RECs) review and provide ethical opinions on all research using NHS patients. It therefore seemed logical for the HRA to play a greater role in monitoring publication outcomes. In recognition of this, the HRA business plan for 2013–2014 contains the stated aim of “working with all the relevant partners to help create an environment where clinical trials are registered and research results get published” [8].

To investigate the logistics and effectiveness of NHS research ethics committees monitoring reporting bias we have determined the publication status of all projects submitting an end of study declaration to a single research ethics committee over a defined timeframe. We have also looked for discrepancies between outcomes stated in the original ethics application and those reported in the final academic papers.

Methods

Cohort of studies

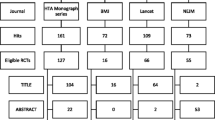

As part of an internal audit we accessed Integrated Research Application System (IRAS) records of research projects that submitted an end of study notification to the South Central, Hampshire A research ethics committee (formerly Southampton A) between 1st January 2010 and 31st December 2011. Studies were stratified according to the definitions given in the REC forms (see Table 1).

Literature search

Publications were located through a literature search in three bibliographic databases: Web of Science, PubMed and Google Scholar. Search queries used the chief investigator’s last name and keywords from the study title. The literature search began in October 2013, and new publications were monitored until August 2014. A publication was defined as a peer-reviewed paper published in an academic journal. Further analysis was carried out on clinical trials and Clinical Trials of Investigational Medicinal Products (CTIMPs) to see whether they had been registered (and had initial results published) on ClinicalTrials.gov, the European Clinical Trials Register or isrctn.org. These searches used the sponsor’s protocol number, the European Clinical Trials Database (EudraCT) number, the International Standard Randomised Controlled Trial Number (ISRCTN) and/or keywords from the study title. Researchers were not contacted directly. This made it possible to determine how much information could be gained from publicly available databases alone.
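The searches above were run by hand in three databases. As an illustration only, the PubMed part of such a search could be scripted through NCBI's public E-utilities `esearch` endpoint. The sketch below has several assumptions that are ours and not the authors': combining the surname with `[au]` and each title keyword with `[tiab]`, joining the keywords with AND, and the `retmax` value. It only builds the request URL and does not fetch it. Web of Science and Google Scholar are not covered.

```python
from urllib.parse import urlencode

# NCBI E-utilities search endpoint (returns matching PubMed IDs)
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_query(ci_surname: str, title_keywords: list[str]) -> str:
    """Combine the chief investigator's surname with keywords from the study title.

    [au] restricts a term to the author field and [tiab] to title/abstract.
    ASSUMPTION: every keyword must match (AND); a looser search could use OR.
    """
    author = f"{ci_surname}[au]"
    keywords = " AND ".join(f"{kw}[tiab]" for kw in title_keywords)
    return f"{author} AND ({keywords})" if keywords else author


def esearch_url(term: str, max_results: int = 50) -> str:
    """Return an esearch URL that would list matching PubMed IDs as JSON."""
    params = {"db": "pubmed", "term": term, "retmode": "json", "retmax": max_results}
    return f"{ESEARCH_URL}?{urlencode(params)}"


# Hypothetical study: investigator "Smith", keywords taken from the REC form title
term = build_pubmed_query("Smith", ["asthma", "inhaler"])
print(term)
print(esearch_url(term))
```

Every hit would still need to be checked by hand against the study, because surname and keyword matches produce false positives.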

Outcome reporting bias

Outcome reporting was examined by comparing primary and secondary outcomes originally stated in the REC application with those reported in the final publications. Discrepancies were divided into three categories: 1) a missing outcome not reported in the final publication, 2) change of an outcome from primary to secondary (or vice versa), 3) addition of an outcome in the final publication.
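The three discrepancy categories can be expressed as a comparison between two mappings from outcome to status. This is a minimal sketch under one assumption: outcomes in the REC form and the paper have already been matched by hand to a common name, since protocols and publications rarely word an outcome identically. The outcome names below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Discrepancies:
    missing: list[str] = field(default_factory=list)   # 1) planned but not reported
    switched: list[str] = field(default_factory=list)  # 2) primary <-> secondary
    added: list[str] = field(default_factory=list)     # 3) reported but not planned

    def has_discrepancy(self) -> bool:
        return bool(self.missing or self.switched or self.added)


def compare_outcomes(planned: dict[str, str], reported: dict[str, str]) -> Discrepancies:
    """Each dict maps a (hand-matched) outcome name to "primary" or "secondary"."""
    result = Discrepancies()
    for name, status in planned.items():
        if name not in reported:
            result.missing.append(name)
        elif reported[name] != status:
            result.switched.append(name)
    result.added = [name for name in reported if name not in planned]
    return result


# Hypothetical outcomes for a single study
planned = {"FEV1 at 12 weeks": "primary", "quality of life": "secondary",
           "hospital admissions": "secondary"}
reported = {"FEV1 at 12 weeks": "primary", "quality of life": "primary",
            "subgroup by age": "secondary"}
d = compare_outcomes(planned, reported)
print(d.missing, d.switched, d.added)
```

Under this scheme, a study counts as "entirely consistent" only when `has_discrepancy()` is false.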

Data analysis

Data were analysed using descriptive statistics, and odds ratios were calculated using MedCalc 13 [9].
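For readers without MedCalc, an odds ratio for a 2×2 table (for example, industry versus other sponsors, by published versus unpublished) can be computed directly. This sketch uses the standard log (Woolf) confidence interval and a Wald z-test. We assume these match MedCalc's defaults closely, but we have not verified this. The counts in the usage line are hypothetical and are not the study's data.

```python
import math


def odds_ratio(a: int, b: int, c: int, d: int, z_crit: float = 1.959964):
    """Odds ratio with a 95 % log (Woolf) CI and a two-sided Wald p-value.

    a: exposed group, published      b: exposed group, unpublished
    c: comparison group, published   d: comparison group, unpublished
    """
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: use a continuity correction or an exact test")
    or_ = (a * d) / (b * c)
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)   # standard error of ln(OR)
    lower = math.exp(math.log(or_) - z_crit * se)
    upper = math.exp(math.log(or_) + z_crit * se)
    z = math.log(or_) / se
    p = math.erfc(abs(z) / math.sqrt(2))            # equals 2 * (1 - Phi(|z|))
    return or_, (lower, upper), p


# Hypothetical 2x2 table
or_, (lo, hi), p = odds_ratio(10, 20, 30, 40)
print(f"OR = {or_:.2f}, 95 % CI {lo:.2f}-{hi:.2f}, p = {p:.2f}")
```

With small cell counts, such as a handful of industry-sponsored studies, the Wald approximation is rough, and Fisher's exact test is a common alternative.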

Ethics and data-access

Ethical approval was not required from a statutory committee for this work. Raw data are not available because the researchers had unique access to the research ethics database as members of a REC.

Results

Included studies

A total of 116 studies were included in this study. As the inclusion criteria were based upon end of study notifications, original ethics applications were dated between 2003 and 2011. Characteristics of the included studies are shown in Table 1. The type of study design was unclear for 16 studies, the type of sponsor unknown for 12 studies, the number of centres unknown for 16 studies and the sample size unknown for 21 studies due to lack of information in the REC application. Thirty studies in the cohort contributed towards an educational degree.

Publication outcomes

The literature search identified 57 publications for 37 studies, giving an overall publication rate of 32 %. Twenty-one studies had been presented as a conference abstract, 11 of which also had a journal publication. In line with previous studies, a conference abstract alone was not considered a publication because of the limited information inherent in this type of report [10]. Twenty-five studies had more than one publication (range 1–6). Industry-sponsored studies were significantly less likely to be published than studies sponsored by academic institutions, the NHS or Health and Personal Social Services (HPSS) (OR = 0·25, p = 0·04, CI 0·07–0·92). Attempts to stratify the data in other ways produced no significant results using simple odds-ratio calculations. Of the 37 studies that published at least one paper, the mean time to publication (from the date of ethics submission) was 3·9 years, with the largest number of studies (11) publishing between 3 and 4 years (Fig. 1a). Of the 37 clinical trials in this cohort, 26 were registered on the ClinicalTrials.gov website, and five of these (19 %) had posted summary results. Twenty-two of the 27 CTIMPs were registered through EudraCT; of the five that were not, only one was on ClinicalTrials.gov. Only 5 of the 37 clinical trials were registered on the ISRCTN registry, four of which were on ISRCTN only and one on all three databases. This left 7 of the 37 clinical trials (including 4 CTIMPs) not registered on any of the three main trial registration databases, although these trials had commenced before registration became a requirement.
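The registration counts above amount to set operations across three registries. Per-trial data are not published, so the identifiers in this sketch are hypothetical placeholders. It only shows how "unregistered", "ISRCTN only" and "on all three" could be derived once each trial's registry entries are known.

```python
# Hypothetical trial identifiers; the study's per-trial data are not available
all_trials = {"T1", "T2", "T3", "T4", "T5", "T6"}
clinicaltrials_gov = {"T1", "T2", "T3"}
eudract = {"T1", "T4"}
isrctn = {"T1", "T5"}

registered_anywhere = clinicaltrials_gov | eudract | isrctn   # union of registries
unregistered = all_trials - registered_anywhere               # on none of the three
isrctn_only = isrctn - clinicaltrials_gov - eudract
on_all_three = clinicaltrials_gov & eudract & isrctn

print(sorted(unregistered), sorted(isrctn_only), sorted(on_all_three))
print(f"unregistered: {len(unregistered)}/{len(all_trials)}")
```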

Consistency of outcomes

Full REC applications were available for 28 studies for analysis of outcome reporting bias (Table 1). A total of 141 outcomes (50 primary and 91 secondary) were identified across the 28 studies from the original REC applications. The median number of primary outcomes was 1 (range 1–6) and the median number of secondary outcomes was 3 (range 1–11). Four studies did not state any secondary outcomes, whilst twelve studies had more than one primary outcome. Of the 141 planned outcomes, 32 (23 %) were missing from the final publications, including 6 unreported primary outcomes. Twelve studies (43 %) were entirely consistent with their original REC form, whilst 16 (57 %) had discrepancies (Fig. 1b). Fourteen studies had missing outcomes, including 3 clinical trials, 8 questionnaire/mixed methodology studies and 2 qualitative studies. One study categorised as “other” reported a statistically significant secondary outcome as a primary outcome (Fig. 1c). One tissue study reported a new subgroup analysis that was not mentioned in the REC form.

Amongst the studies with unreported outcomes, six had at least one unreported primary outcome and thirteen had at least one unreported secondary outcome. The median proportion of unreported outcomes per study was 38 % (CI 25 %–75 %). There was no significant correlation between the number of papers a study produced and the percentage of reported outcomes (p = 0·94). There was also no correlation between the number of outcomes and the percentage of reported outcomes (p = 0·62).
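The per-study summary above can be reproduced from three counts per study. The paper does not name the correlation method it used, so this sketch assumes Spearman's rank correlation, which suits small, skewed samples. It computes only the coefficient. A p-value would need a further step, such as `scipy.stats.spearmanr`. All study data below are hypothetical.

```python
from statistics import median

# Hypothetical per-study data: (planned outcomes, unreported outcomes, papers)
studies = [(4, 1, 1), (8, 3, 2), (5, 0, 1), (6, 3, 3)]


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation undefined when one variable is constant")
    return cov / (sx * sy)


unreported_share = [u / n for n, u, _ in studies]
percent_reported = [100 * (1 - s) for s in unreported_share]
papers = [p for _, _, p in studies]

median_unreported = median(unreported_share)
rho = spearman_rho(papers, percent_reported)
print(f"median unreported: {median_unreported:.0%}, rho = {rho:.2f}")
```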

Discussion

If the reporting of research is a fundamentally ethical issue [11–13], it seems well within the remit of research ethics committees to encourage researchers to commit to publishing results as a condition of ethical approval, and also to monitor subsequent publications [14]. Research can be reported in a number of different ways. This study looked only at the peer-reviewed scientific literature, as this is the main source of information for the expert community best placed to use research outcomes. The publication rate of 32 % is consistent with previous studies conducted by research ethics committees, which have found publication rates of 20–76 % [15–18]. In many countries, such studies can only provide snapshots from individual hospitals or regions. By contrast, extending the method described here across the whole HRA ethics system could capture national data and perhaps provide a continuous monitoring service for the UK.

The main weakness of this study was the short time available to researchers for data analysis and publication (a maximum of three years since the end of study notification). This matters because the literature suggests that clinical drug trials in particular publish on average between 4 and 8 years after a study [14]. However, this widely quoted timeframe is ambiguous, because it is unclear whether it is measured from when the studies started or when they were completed. If the original date of submission to the Hampshire A research ethics committee is used, the studies analysed in this paper had between 2 and 10 years to publish at least some sort of peer-reviewed result or commentary. The percentage of projects publishing may improve as time goes on. However, the studies that did publish showed a mean time of 3·9 years between initial ethics application and publication (Fig. 1a), which suggests that the timeframe used here is not unreasonable. Other weaknesses included limiting this study to a single research ethics committee and not contacting researchers to determine reasons for non-publication. However, the purpose of this study was to establish a simple method that individual RECs could reasonably apply without extensive research funding.

Attempts to stratify the studies did not give significant p values for most study characteristics based upon a simple odds ratio calculation [19]. The only significant difference was for industry-sponsored studies. These showed a publication rate of only 12 %, compared with around 30 % for other types of sponsor. The reason for this cannot be determined here, but it is consistent with the perception that industry suppresses results [20].

Unreported outcomes were the main reporting discrepancy identified between original REC applications and final publications. The overall discrepancy rate of 57 % between the originally planned outcomes and those reported in the final publications is consistent with previous literature, where discrepancies in the reporting of primary outcomes were found in 62–66 % of clinical trials [21, 22]. Again, conducting this study from within the HRA system proved particularly powerful, as original REC application forms could be analysed. However, because of administrative changes since 2003, electronic copies of REC forms were available for only a small number of studies, and some paper REC forms had already been discarded. As a result, not all published studies could be included in this analysis. It was also not clear whether additional information from all study amendments had been located.

Conclusion

The results described here demonstrate that NHS research ethics committees are able to effectively monitor publication and outcome reporting bias. This is significant because these committees hold complete records of all human research that is subject to certain legal regulations or conducted within the NHS. This places them in a stronger position than individual sponsors or research funders when it comes to auditing or monitoring reporting bias. However, such monitoring is not currently part of their remit. At present, committees are composed of up to eighteen volunteer members and one REC manager, and each committee reviews approximately 45 full applications per year. This project included just a subset of studies from one committee, yet it occupied a full-time master's student for most of a year. Comprehensive monitoring identical to this study, carried out for all studies submitted to all 68 committees currently overseen by the HRA, would therefore have significant resource implications for RECs and the HRA. An alternative might be to conduct smaller studies such as the one described here on a regular basis. The aim would not be to police researchers. Instead, it would be to monitor how well various approaches encourage researchers to publish both their projects as a whole and the outcomes they originally committed to measure.
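The resource argument above can be made concrete with a rough back-of-the-envelope estimate. The committee count, application rate and cohort size come from the text. Three assumptions are ours: "most of a year" is treated as 0.75 of a full-time post, effort scales linearly with the number of studies, and each full application eventually yields one study to audit.

```python
COMMITTEES = 68              # committees overseen by the HRA (from the text)
APPLICATIONS_PER_YEAR = 45   # approximate full applications per committee (from the text)
AUDITED_STUDIES = 116        # studies audited in this project (from the text)
AUDIT_PERSON_YEARS = 0.75    # ASSUMPTION: "most of a year" of one full-time student

studies_per_year = COMMITTEES * APPLICATIONS_PER_YEAR
effort_per_study = AUDIT_PERSON_YEARS / AUDITED_STUDIES   # person-years per study
person_years_needed = studies_per_year * effort_per_study  # ASSUMPTION: linear scaling

print(studies_per_year, round(person_years_needed, 1))
```

Under these assumptions, comprehensive monitoring would need on the order of twenty full-time staff each year. This illustrates why periodic sampling may be more practical.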

References

1. Song F, Parekh S, Hooper L, Loke YK, Ryder J, Sutton AJ, et al. Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess. 2010;14(8):1.

2. Dwan K, Gamble C, Williamson PR, Kirkham JJ, Reporting Bias Group. Systematic review of the empirical evidence of study publication bias and outcome reporting bias: an updated review. PLoS One. 2013;3(8):1–31.

3. AllTrials Campaign. http://www.alltrials.net. Accessed 6 November 2014.

4. Goldacre B. Bad pharma: how drug companies mislead doctors and harm patients. 1st American ed. 2012.

5. Drug companies have a year to publish their data, or we'll do it for them. The Conversation. http://theconversation.com/drug-companies-have-a-year-to-publish-their-data-or-well-do-it-for-them-15184. Accessed 6 November 2014.

6. Health Research Authority. http://www.hra.nhs.uk. Accessed 6 November 2014.

7. The HRA interest in good research conduct. http://www.hra.nhs.uk/documents/2013/08/transparent-research-report.pdf. Accessed 6 November 2014.

8. Health Research Authority Business Plan 2013–2014. http://www.hra.nhs.uk/documents/2013/09/hra-business-plan-2013-2014.pdf. Accessed 6 November 2014.

9. MedCalc 13. http://www.medcalc.org/index.php. Accessed 5 May 2015.

10. Egger M, Smith GD, Altman DG. Systematic reviews in health care. 2nd ed. London: BMJ Books; 2001.

11. Pearn J. Publication: an ethical imperative. BMJ. 1995;310(6990):1313–5.

12. Antes G, Chalmers I. Under-reporting of clinical trials is unethical. Lancet. 2003;361(9362):978–9.

13. World Medical Association. World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA. 2013;310(20):2191–4.

14. Hopewell S, Loudon K, Clarke MJ, Oxman AD, Dickersin K. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev. 2009;(1):1–23.

15. Sune-Martin P, Montoro-Ronsano JB. Role of a research ethics committee in follow-up and publication of results. Lancet. 2003;361(9376):2245–6.

16. Turer AT, Mahaffey KW, Compton KL, Califf RM, Schulman KA. Publication or presentation of results from multicenter clinical trials: evidence from an academic medical center. Am Heart J. 2007;153(4):674–80.

17. Stern JM, Simes RJ. Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997;315(7109):640–5.

18. Dickersin K, Min YI, Meinert CL. Factors influencing publication of research results. Follow-up of applications submitted to two institutional review boards. JAMA. 1992;267(3):374–8.

19. Bland JM, Altman DG. Statistics notes: the odds ratio. BMJ. 2000;320(7247):1468.

20. Bekelman JE, Li Y, Gross CP. Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA. 2003;289(4):454–65.

21. Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004;291(20):2457–65.

22. Vedula SS, Bero L, Scherer RW, Dickersin K. Outcome reporting in industry-sponsored trials of gabapentin for off-label use. N Engl J Med. 2009;361(20):1963–71.

Acknowledgements

We thank the HRA and members of the Hampshire A research ethics committee for supporting this work. Work in SEK’s laboratory is funded by the BBSRC grant BB/L019442/1.

Additional information

Competing interests

SEK is chair of the South Central, Hampshire A, NHS research ethics committee. The HRA sponsored RB’s Master of Research degree. The HRA provided access to the Research Ethics Database but had no role in the study design or in the collection and analysis of the data.

Authors’ contributions

SEK conceived the project. RB collected and analysed the data. SEK and RB interpreted the data and wrote the paper. Both authors read and approved the final manuscript.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Begum, R., Kolstoe, S. Can UK NHS research ethics committees effectively monitor publication and outcome reporting bias?. BMC Med Ethics 16, 51 (2015). https://doi.org/10.1186/s12910-015-0042-8
