Abstract

Background

The law enforcement officer profession requires performance of arduous occupational tasks while carrying an external load, consisting of, at minimum, a chest rig, a communication system, weaponry, handcuffs, personal protective equipment and a torch. The aim of this systematic review of the literature was to identify and critically appraise the methodological quality of published studies that have investigated the impacts of body armour on task performance and to synthesize and report key findings from these studies to inform law enforcement organizations.

Methods

Several literature databases (Medline, CINAHL, SPORTDiscus, EMBASE) were searched using key search words and terms to identify appropriate studies. Studies meeting the inclusion criteria were critically evaluated using the Downs and Black protocol with inter-rater agreement determined by Cohen’s Kappa.

Results

Sixteen articles were retained for evaluation with a mean Downs and Black score of 73.2 ± 6.8% (k = 0.841). Based on the research quality and findings across the included studies, this review determined that while effects of body armour on marksmanship and physiological responses have not yet been adequately ascertained, body armour does have significant physical performance and biomechanical impacts on the wearer, including: a) increased ratings of perceived exertion and increased time to complete functional tasks, b) decreased work capability (indicated by deterioration in fitness test scores), c) decreased balance and stability, and d) increased ground reaction forces.

Conclusions

Given the physical performance and biomechanical impacts on the wearer, body armour should be carefully selected, with consideration of the physical fitness of the wearers and the degree to which the armour systems can be ergonomically optimized for the specific population in question.

Background

While a large percentage of a Law Enforcement Officer’s (LEO) time on duty is spent in sedentary activities, a high level of physical fitness remains necessary to effectively respond to many incidents [1]. Although incident responses are sporadic, a LEO will inevitably be required to run, jump, crawl, balance while moving quickly, engage in combat, climb, lift, and push or pull significant weights in the execution of their duties, often without warning [1, 2]. Additionally, the LEO profession requires performance of these arduous occupational tasks while carrying an external load, consisting of, at minimum, a chest rig, a communication system, weaponry, handcuffs, personal protective equipment (PPE) and a torch [3].

With an increasing focus on minimizing the risks associated with the performance of these demanding tasks in unpredictable and potentially hostile environments, the weight of these external loads carried by LEO has been steadily increasing [4]. Total weight of external loads can range from approximately 3.5 kg [3] for the general duty officer to 22 kg in specialist police [5] and may even exceed 40 kg in certain circumstances [6]. This upward trend in carried loads is due not only to the increasing complexity of modern tactical engagements [7], but also to the increased emphasis on improving survival without reducing unit mobility [8]. Given that additional load in the form of body armour is known to be effective in reducing fatalities in military environments, use of body armour is becoming more widespread amongst LEO. For example, one Police Force in New Zealand is citing the escalation of violent crimes as evidence of the need to regularly equip LEOs with body armour, particularly for protection against stab threats [3].

While increasing numbers of law enforcement organizations have been making the decision to issue body armour systems, the additional external load associated with this equipment has been associated with increased rates of injury and reduced operational capabilities in tactical populations [9, 10]. In research, primarily on military personnel, load carriage has been found to cause musculoskeletal injuries (e.g. back pain, lower limb stress fractures), neurological injuries (e.g. brachial plexus palsy) and integumentary injuries (e.g. chafing, blisters) [11]. Chronic increases in levels of physical exertion due to additional occupational load carriage may even be a causative factor in illness, as a result of depressed immune function [12], especially in LEO with a lower body mass or lower level of physical fitness [13]. Impairments to mission capability and performance can also result from load carriage, due to reduced carrier mobility [14] and an increase in time required to complete functional tasks [3, 15]. These impairments can impact on aspects of marksmanship [5, 16,17,18,19,20] and attention-to-task [21, 22] and increase the physiological cost to complete a task [23] when on duty. Essentially, while body armour provides protection to the wearer, it will, like other types of loads, also impart both risks and physiological costs.

With the majority of body armour research having been conducted in military populations, and despite the increasing use of body armour systems by LEO, published research that has examined the extent to which body armour can impact the performance capabilities of LEO during physically demanding occupational tasks has not been comprehensively and critically reviewed. The aim of this systematic review of the literature was therefore to identify and critically appraise the methodological quality of published studies that have investigated the impacts of body armour on task performance in tactical populations, and to synthesize and report key findings from these studies to inform law enforcement organizations.

Methods

In order to identify and obtain relevant original research for review, key literature databases were systematically searched using specific keywords of relevance to the topic. The selection of keywords to be used in the systematic search was guided by review of keywords used in a sample of relevant articles. The databases searched, specific key words, and search strategies employed are detailed in Table 1. To improve the relevance of search results, filters that reflected study eligibility criteria were applied in each database, where available. In databases where these filters were unavailable or were only partially available, the study eligibility criteria were applied manually through screening of study titles and abstracts. The eligibility criteria were subsequently applied to the full-text of identified articles that were not excluded during the screening of titles and abstracts, to select a final set of eligible articles for inclusion in the literature review. The results of the search, screening and selection processes were documented in a PRISMA flow diagram [24].

The specific inclusion criteria applied for this review were: a) human subjects; b) English language availability; c) peer reviewed publications reporting original research for the first time; d) publication date between 1 January 1991 and 17 June 2016; and e) investigated effects of body armour on at least one of the following outcomes of direct relevance to LEO: occupationally-relevant physical performance; levels of task-related physical exertion; mobility; balance; biomechanics; cognitive performance; or marksmanship. Subject matter experts were consulted in determining the date range. Studies published prior to 1991 were excluded because they preceded the Gulf War (which commenced in 1991), a conflict that substantially changed individual combat systems (increased use of body armour) and changed operating conditions from those of previous conflicts. Identified publications that fell outside the date range of the review were excluded from the critical review but used to provide context, where useful. The exclusion criteria employed in assessing eligibility of individual studies for inclusion in the literature review were: a) studies that did not measure at least one aspect of human performance; b) studies that involved testing personal protective equipment (PPE) used in Chemical, Biological, Radiological, Nuclear, and high-yield Explosive (CBRNE) environments, concealable or soft body armour only, or a complete load carriage or fighting load; c) studies in extreme environments (high altitude, very cold, etc.); and d) studies in which use of body armour was not a primary independent variable.

Eligible publications identified through the literature search, screening and selection processes were then critically appraised to assess methodological quality using the Downs and Black scoring system [25]. This approach to critical appraisal was chosen for the following reasons: a) it was initially developed using psychometrics, b) repeated testing has demonstrated good reliability and validity of this approach, and c) its results are amenable to translation into a methodological quality rating such as the Kennelly grading system used in this review [26], as detailed below. To assess each article with the Downs and Black protocol, 25 of 27 questions, covering statistical methods, validity, bias, data reporting, power analysis, and organization, were scored as either ‘0’ points (if the methodological details requested in the question were absent or undeterminable) or ‘1’ point (if the methodological details were reported correctly). Two further questions, Question 5 (relating to potential confounding variables) and Question 27 (relating to sample size calculations and statistical power analysis), are typically scored across a range. Question 5 is scored on a scale from ‘0’ points to ‘2’ points (based on the quality of reporting of confounding factors) while Question 27 is scored on a scale from ‘0’ points to ‘5’ points (based on the extent to which statistical power analyses have informed sample size decisions). While the ‘0’ to ‘2’ scale was retained in this review for Question 5, the review utilized a modification to Question 27 of the Downs and Black protocol first proposed by Eng, et al. [27]. Eng, et al. [27] suggest that Question 27 is ambiguously worded, and so recommend scoring this question regarding statistical power calculations dichotomously, as a correction. This modification reduces the impact this question can have on a publication’s final total raw score, limiting the impact of subjectivity in the assessment.
For comparison purposes and to improve reporting, the critical appraisal score (CAS) for each included study was converted to a percentage score and reported to one decimal place. The CAS was calculated by dividing the actual raw modified Downs and Black score by the highest possible raw score (modified to 28 points) and then multiplying this figure by 100 to convert this proportion to a percentage.
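The conversion described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' actual tooling: the function name `critical_appraisal_score` and the example raw scores of 17 and 24 are assumptions chosen for illustration, and the 28-point maximum follows from 25 one-point items plus Question 5 (0 to 2) and the dichotomous Question 27 (0 to 1).

```python
# Maximum raw score under the modified protocol (assumed breakdown):
# 25 one-point items + Question 5 (0-2) + Question 27 scored dichotomously (0-1).
MAX_MODIFIED_SCORE = 28


def critical_appraisal_score(raw_score: int, max_score: int = MAX_MODIFIED_SCORE) -> float:
    """Convert a raw modified Downs and Black score to a percentage (one decimal place)."""
    if not 0 <= raw_score <= max_score:
        raise ValueError(f"raw_score must lie between 0 and {max_score}")
    return round(100 * raw_score / max_score, 1)


# Illustrative raw scores only; the source reports percentages, not raw totals.
print(critical_appraisal_score(17))  # 60.7
print(critical_appraisal_score(24))  # 85.7
```

Expressing each score as a percentage of the same 28-point maximum is what allows studies to be compared on a common scale.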

To obtain a quality grade for each research paper, the CAS was then compared to the grading system for methodological quality proposed by Kennelly [26]. Kennelly’s grades are categorized as ‘poor’, ‘fair’ and ‘good’, with the methodological quality rating determined by the scores assigned in the modified Downs and Black protocol. The grade categories and associated ranges of raw Downs and Black scores employed by Kennelly are: 14 or fewer points, classified as ‘poor’ quality research, 15–19 points, classified as ‘fair’ quality research, and 20 or more points, classified as ‘good’ quality research. However, as the Kennelly system was based on the original Downs and Black protocol (which had a maximum possible score of 32 points), in this review (in which the total possible score from the modified Downs and Black protocol was 28 points) the Downs and Black scores associated with each of Kennelly’s grades were converted to percentages. On this basis, the final grading system employed in this review for grading methodological quality of each of the included studies was as follows: Downs and Black score <45.4% - study classified as being of ‘poor’ methodological quality, score of 45.4–61.0% - study classified as being of ‘fair’ methodological quality, and score >61.0% - study classified as being of ‘good’ methodological quality.
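As a hedged sketch, the percentage-based grading rule stated above could be applied as follows. The function name `kennelly_grade` is hypothetical, and the thresholds (45.4% and 61.0%) are taken directly from the text; the source does not spell out how the boundary values were derived from Kennelly's raw cut-offs, so no derivation is attempted here.

```python
def kennelly_grade(cas_percent: float) -> str:
    """Map a critical appraisal score (percent) to the review's quality grade.

    Thresholds are those stated in the text: <45.4% poor, 45.4-61.0% fair, >61.0% good.
    """
    if not 0.0 <= cas_percent <= 100.0:
        raise ValueError("cas_percent must lie between 0 and 100")
    if cas_percent < 45.4:
        return "poor"
    if cas_percent <= 61.0:
        return "fair"
    return "good"


# Illustrative inputs only.
for score in (40.0, 50.0, 73.2):
    print(score, kennelly_grade(score))
```

Keeping the boundaries in one function makes it straightforward to regrade all studies if a different maximum raw score or a different grading scheme is adopted.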

All studies were independently critically appraised using the Downs and Black protocol by two authors (CT, RO), with the level of agreement between the appraisers measured using a Cohen’s Kappa (k) analysis of all raw scores (27 scores per paper). For final scores, any disagreements between the two authors (CT, RO) in scores awarded were settled by consensus or, if necessary, adjudication by the third author (RP).
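For readers unfamiliar with the statistic, Cohen's Kappa compares the observed proportion of agreement between two raters with the agreement expected by chance from each rater's marginal score frequencies. The sketch below is a minimal standard-library implementation; the function name `cohens_kappa` and the two example score lists are hypothetical and do not reproduce the review's appraisal data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's Kappa for two raters scoring the same items on a categorical scale."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: proportion of items given identical scores.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if expected == 1:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical item-level scores from two appraisers (not data from the review).
appraiser_1 = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]
appraiser_2 = [1, 1, 0, 1, 1, 1, 1, 0, 1, 0]
print(round(cohens_kappa(appraiser_1, appraiser_2), 3))  # 0.524
```

In this toy example the raters agree on 8 of 10 items, yet Kappa is only about 0.52 because much of that agreement would be expected by chance alone.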

Following the critical appraisal process, key data were extracted from the included papers and compiled in tables to provide a concise and systematic overview of key attributes of, and findings from, included studies. The key table headings were a) title, lead author and publication date, b) demographics of the participants, c) the equipment used and worn, d) key independent and dependent variables, e) the occupational task or test employed to assess performance and f) the CAS, expressed as a percentage. Key outcomes were also extracted and compiled, although only those outcomes which reached statistical significance were included, for brevity, in the table, as indicators of significant impacts of the body armour on the occupationally-relevant task performance of the wearer. Study findings from across the included studies were then critically synthesized to generate a clear summary of key findings and identify the strength of evidence supporting these key findings.

Results

The PRISMA diagram (Fig. 1) details the results of the literature search, screening, and selection processes. In total, the initial search yielded 704 publications, from which 24 duplicates were removed. Of the remaining 680 publications, 37 were considered to be of potential relevance following initial screening and were examined in full text to further assess eligibility. Ultimately, 16 eligible studies were retained and formed the basis for this review.

The research reported in this review originated from six countries. Specifically, six studies were from the US [23, 28,29,30,31,32], five publications were from Australia [5, 14, 33,34,35], two from New Zealand [3, 36], one from India [37], one from Poland [38], and one from the UK [39]. Three distinct subject populations were represented, consisting of university students (n = 7) [29, 31,32,33,34,35, 39], LEO (n = 4) [3, 5, 14, 36], and military personnel (n = 5) [23, 28, 30, 37, 38]. Additionally, ten of the publications studied only males [3, 5, 14, 33,34,35,36,37,38,39] and six included both males and females [23, 28,29,30,31,32]. The mean ± SD age across all studies was determined to be 28.85 ± 2.32 years, ranging from 21.9 ± 2.4 years [29] to 37 ± 9.16 years [3, 36].

The mean ± SD CAS, derived from critical appraisal of the methodological quality of each included study, was 73.2 ± 6.8%, ranging from 60.7% [35] to 85.7% [36]. This mean indicates that most studies were of at least ‘good’ (62.5%) methodological quality. The kappa statistic for inter-tester agreement of the methodological quality of the studies indicated an ‘almost perfect’ agreement (k = 0.841) [40]. When applying the Downs and Black protocol for critical appraisal of each publication, Questions 1–14, concerning how data was reported, generally scored highly across all studies. Low external validity scores (Questions 11–13) were attributed to those studies which did not recruit participants from a tactical population [29, 31,32,33,34,35, 39]. Additionally, one study recruited subjects from a population of military personnel (US Airborne Infantry Soldiers) that had been trained at a level substantially beyond their peers (US Infantry Soldiers). Questions 15–19, relating to internal validity of the study results, showed overall poor scores with respect to blinding, because in most cases, the test condition was a loaded state and was compared to an unloaded control state. The fact that these conditions did not allow for blinding of either the participants or the researchers who conducted and recorded measurements, accounts for the low scores in this area. However, those studies using within-subject measures were able to eliminate selection bias. Scores relating to whether there was adequate consideration of statistical power were generally low across the included studies, due either to insufficient reporting of power or sample size analyses, or low participant numbers.

The key data extracted from the included study reports are summarized in Table 2. All but one included study compared outcomes from a loaded condition with those from an unloaded control condition [38]. The anomalous study, by Majchrzycka et al. [38], used the current Polish military armour plate as a control for comparison with new alternative plates. Additional independent variables were also incorporated in some of the included studies, including a fatigued test subject state [3, 36, 39] and the comparison of different armour configurations [35, 38]. Seven unique categories were identified among included studies based on the outcomes they assessed. These categories were: physiological measures (n = 8) [23, 28, 29, 33, 34, 36, 37, 39], marksmanship measures (n = 1) [5], mobility measures (n = 2) [3, 14], kinetic and kinematic measures (n = 6) [3, 30, 31, 32, 35, 36], cognitive measures (n = 3) [33, 38, 39], subjective measures (n = 4) [28, 33, 34, 36], and thermal measures (n = 2) [33, 34]. Because some studies reported multiple outcome measures, they fitted into multiple categories and thus are referenced more than once in the categorized results presented below.

Physiological studies

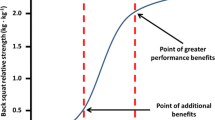

For those studies investigating the physiological effects of wearing body armour, no clear consensus on the independent impacts of wearing body armour emerged from the quantitative data presented in the reports of these studies, as no objective measures were uniformly utilized across all six studies. Nevertheless, the related measures of cardiovascular strain [33], pulmonary strain [37] and heart rate [36, 37] were all reported as elevated beyond control levels in participants wearing body armour, suggesting that workload increased when wearing body armour. The study by Swain, et al. [29] differed from the others in that it considered the potential for wearing body armour during training to increase the benefit of training. After 6 weeks of a United States Marine Corps (USMC) style program, the study showed small improvements in the respiratory exchange ratio (RER) (p = 0.01) and maximal heart rate (HRmax) (p = 0.01) in both the experimental group, which wore body armour during training, and the control group. Likewise, maximal oxygen uptake (VO2max) increased in both groups; however, whilst mean VO2max increased approximately twice as much in the vest group as in the control group, this difference between groups did not reach statistical significance (p = 0.16).

Marksmanship studies

Carbone et al. [5] found no statistically significant difference in marksmanship scores between participants in a body armour condition and those in a minimally loaded control condition, during various marksmanship trials. This study tested specialist police officers in both a tactically-loaded condition (ballistic vest, helmet, and primary (M4) and secondary (Glock) weapons of approximately 22 kg) and an unloaded condition (dressed in fatigues only). Although no statistically significant differences were found in marksmanship measures (5-bullet impact distance from centre of target, on the vertical and horizontal axis), the authors did report that there was a trend towards horizontal target groupings being superior in the loaded condition, during both the static and mobile shooting (25 m pursuit) tasks. While this result did not reach statistical significance, the authors hypothesize that because the participants train primarily in the loaded state, their accuracy may be better when loaded due to a practice effect and the potential stabilizing effect of the body armour.

Mobility studies

Of the two studies [3, 14] investigating changes in mobility associated with wearing body armour, one [14] reported a statistically significant difference between a group wearing a tactically loaded specialist police officer body armour configuration (mean ± SD: 22.8 ± 1.8 kg total equipment weight) and an unloaded control group when testing participants on a 10 m sprint, 25 m simulated patrol, and dummy drags. Loads that exceeded 25% of body weight resulted in a significantly greater effect than lower loads. With respect to task completion, the dummy drag was most severely impacted by load (unloaded mean ± SD time to complete = 9.29 ± 0.53 s; loaded = 10.25 ± 0.77 s). In the other study [3], the researchers found that a stab resistant body armour configuration (mean ± SD weight: 7.65 ± 0.73 kg), when compared to no load, significantly increased time off balance (mean time 8.12 s loaded, 5.7 s unloaded, when using the stabilometer, p < 0.001), time to completion during a simulated vehicle exit/sprint (mean time 1.95 s loaded, 1.67 s unloaded, p < 0.001), and time to completion of a mobility battery (mean time 18.16 s loaded, 15.85 s unloaded, p < 0.001).
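To put the reported mean times on a common footing, the relative increase from the unloaded to the loaded condition can be computed as 100 × (loaded − unloaded) / unloaded. The sketch below applies this to the means quoted above; the percentages are simple arithmetic on those means and were not reported by the included studies, and because the two studies used different loads, tasks and participants, the values should not be read as a direct comparison between them. The function and variable names are illustrative.

```python
def percent_increase(unloaded: float, loaded: float) -> float:
    """Relative increase of the loaded mean over the unloaded mean, in percent."""
    if unloaded <= 0:
        raise ValueError("unloaded mean must be positive")
    return 100 * (loaded - unloaded) / unloaded


# Mean times in seconds as quoted in the text: (unloaded, loaded).
reported_means_s = {
    "dummy drag [14]": (9.29, 10.25),
    "time off balance [3]": (5.7, 8.12),
    "vehicle exit/sprint [3]": (1.67, 1.95),
    "mobility battery [3]": (15.85, 18.16),
}

for task, (unloaded, loaded) in reported_means_s.items():
    print(f"{task}: +{percent_increase(unloaded, loaded):.1f}%")
```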

Kinematic and kinetic studies

Five studies [3, 30,31,32, 35] reporting on trunk mechanics found that wearing body armour either compromised trunk posture [32] (by increasing flexion or extension during the test activities) [31, 32, 35], reduced range of motion [35], or reduced stability [3, 30]. These impacts of wearing body armour (total weight ranging from 6.4–12.5 kg) reached statistical significance (p ≤ 0.05). Additional observed kinematic impacts of wearing body armour included: increased time to occupational task completion, ranging from 1.89 s extra for grappling tasks (p < 0.001) [3] to 0.5 s extra for both box drop and prone to standing tasks (p = 0.03) [32]; reduced jump height (p < 0.001) [36]; and increased ground reaction forces (GRF) (6–19% over control figures) (p ≤ 0.02) [32, 36].

Cognition

Of the three included studies that considered the impacts of wearing body armour on cognitive function [33, 38, 39], only the study by Roberts, et al. [39] found a loss in cognitive function when wearing body armour. In that study, the researchers used a verbal fluency task and a controlled order word association task as outcome measures, and observed changes in cognitive strategy. As participants became fatigued, executive function decreased and non-executive function increased (p < 0.05). For this reason, the authors attributed cognitive function decline to fatigue over time, and stated that body armour (S203 Tactical vest and PASGT helmet) did not mediate cognitive impairment, even though clearly it may have contributed to fatigue. Majchrzycka, et al. [38] found no statistically significant differences between body armour and no-armour groups when comparing the effects of a variety of ballistic chest plates only (2.1–3.2 kg) on cognitive performance, measured via the Grandjean scale, tests of attention and perceptiveness and complex reaction time tests for cognition assessment. Caldwell et al. [33] also found no statistically significant relationship between cognitive performance (assessed using the Mini-Cog rapid assessment battery) and wearing body armour (7.36 kg, vest and helmet) (p > 0.05).

Subjective outcomes

All studies considering the rating of perceived exertion (RPE) [28, 33, 34, 36] observed statistically significant increases in RPE during activities undertaken by participants when they were wearing body armour (with loads ranging from 7.8 to 17.48 kg) while performing tasks including shooting accuracy, vaulting, crawling, box lifting and graded exercise testing. One study, using a 7.8–11 kg interceptor plate vest, noted that females reported a higher RPE (1 level of perceived exertion higher on average) than males for the same given tasks [28].

Thermal outcomes

Two studies [33, 34] found that the loaded state (7.36 kg [33] to 19.48 kg [34]) elevated body temperature during activity (by 0.41 to 0.50 °C) beyond control levels in an environment of 21.3 °C (no relative humidity provided) to 36 °C (60% relative humidity) [33]. Body temperature data were obtained either via the auditory canal [33] or from the rectum or an ingested radio-telemetry pill [34]. Another study [39] found that body temperature was not affected by body armour, but used oral temperature, rather than temperature in the gastro-intestinal tract or temperature assessed via the auditory canal. In this study the weight of the body armour, environmental temperature and humidity were not provided.

Discussion

The aim of this systematic review of the literature was to identify and critically appraise the methodological quality of published studies that have investigated the impacts of body armour on task performance in tactical populations, and to synthesize and report key findings from these studies to inform law enforcement organizations. In total, 16 publications were critically reviewed, achieving a mean ± SD critical appraisal score of 73.2 ± 6.8% (range 60.7 to 85.7%). Seven categories, based on reported outcome measures, emerged to form the basis of the themes reported in the preceding results section and are now discussed in the context of findings from the broader tactical research literature. These seven themes, discussed below, are physiological effects, marksmanship effects, mobility effects, kinetic and kinematic effects, cognitive effects, subjective measures, and thermal effects.

Physiological effects of wearing body armour

The wide variety of loads (ranging from 7.36 kg [33] to 17.48 kg [34]) and test conditions (e.g., hot/humid exercise conditions [33, 37], simulated functional task circuit [34], graded treadmill testing [28], physical fitness testing [23, 28] and running tasks [39]) resulted in heterogeneous results across studies considered in the physiological study category. Additionally, the available studies in this category did not always use comparable outcome measures – for example, studies used related but distinct measures of cardiovascular strain [33], pulmonary strain [37], body temperature and heart rate [36, 37]. With this variability in outcome measures likely contributing to the observed variability in study findings, specific conclusions that can be drawn from this review about physiological effects of wearing body armour are limited. Nevertheless, the included studies, which analyzed HR, pulmonary function, blood lactate levels, VO2max, and RER, did indicate overall that workload increased when wearing body armour, and this is not surprising since body armour adds load. This finding is consistent with findings of military studies, such as the article by Polcyn, et al. [41], which found increased energy expenditure in female soldiers during a loaded (12–50 kg) 3.2 km land navigation course. Therefore, the physiological data reported in this review, as well as the subjective data (discussed later in this report) and related outcome measures including mobility, balance, time to complete tasks and other occupationally relevant measures reported in the included studies all indicate that wearing body armour results in additional increases in exertion when LEO perform physically demanding tasks, and this finding is consistent with findings in military populations.

Marksmanship effects of wearing body armour

Only one study in the marksmanship category assessed the effects of body armour on marksmanship of tactical populations [5]. In this study, using an M4 rifle and Glock handgun, no significant effect of wearing the body armour was found. The authors cited low numbers of participants (n = 6) and limited marksmanship data as major contributors to the inconclusive result. Similar challenges were reported by Orr, et al. [42], who also considered tactical police officers in their research. A further study investigated participants drawn from a military population performing exercise (repetitive 20.5 kg/1.55 m box lift) while wearing a combat load (29.9 kg) [43] and found that while the load did not decrease rifle accuracy, an increase in the time to engage targets occurred. This increase in time to engage targets could impact fighting effectiveness and survivability in a combat environment, regardless of occupation. In other military load carriage studies, such as two investigations by Knapik et al. [20] and Hanlon [44], a decrease in M16 rifle shooting performance was found after a 20 km road march under loads up to 61 kg, and after a 2 mile run for time. These findings, when taken together, suggest that the independent effect of load carriage on marksmanship is likely to be negative but is also still not well understood, especially when different weapon systems are used (e.g. rifle versus handgun), warranting further research in this area.

Mobility effects of wearing body armour

This review found that balance is decreased and time to complete functional tasks is increased as a result of wearing body armour. Additionally, some occupationally relevant tasks may be significantly impaired by body armour. These findings are further supported by the results of previous military research [45] showing increased road march time on completion of a functional task (US Army obstacle course) by subjects wearing a full fighting load (14–27 kg). Additional military-focused research showed significant effects of body armour on road march time and the incidence of load-incited blisters [44]. Moreover, a study investigating body armour and full fighting loads also reported that biomechanical disadvantage (decreased trunk range of motion) resulted from increasing the external load. Body armour may contribute to development of musculoskeletal injury and chronic low back pain, both of which are reported as being substantial factors in lost time on duty in tactical settings [10, 46].

Kinematic and kinetic effects of wearing body armour

The type of body armour selected for use is characterised by three primary factors: level of protection, actuarial concerns, and degree of functional impairment exerted by the system [47, 48]. Since neither level of protection nor cost is enhanced by systems with potential to improve mobility [49], effective body armour has been repeatedly shown to be ergonomically detrimental, specifically with respect to trunk posture [3, 30,31,32, 35]. GRF [3, 32, 36] universally deteriorated when participants in the included studies were wearing body armour. That is to say, both fatigue and being in a loaded condition elevated GRF, with the combination of the two resulting in the most impact. With this in mind, a survey of 863 US Soldiers in Iraq [50] found a significant positive correlation between the duration for which Soldiers wore body armour each day and rates of musculoskeletal complaints, such that those who wore the body armour for four hours or more per day were at significantly greater risk. The musculoskeletal complaints ranged from neck and back to upper extremity musculoskeletal pain. For this reason, the weight and ergonomics of body armour systems should be closely evaluated when making equipment decisions.

Cognitive effects of wearing body armour

Heterogeneity limits cross-study comparisons of cognitive effects of wearing body armour in the broad tactical literature and across the studies included in this review. The studies included in this review used a variety of body armour types and configurations and measures of cognitive performance (e.g., unique word association tests, a variety of measures of fatigue levels, and wide-ranging equipment and loads). Nevertheless, one key finding from the included cognition studies is the finding that time and fatigue induced deteriorations in cognitive performance when personnel were carrying loads, including body armour [39]. If an armoured state can bring about fatigue more rapidly than a control state, as has been suggested by several pertinent studies included in this review [3, 23, 28, 36], then relationships between the armoured state, fatigue and cognition can be established. Other research, such as one study of ROTC cadets carrying an external load in the form of all-purpose, lightweight, individual, carrying equipment (ALICE) backpacks [51], has reported significant deterioration in executive, higher-level, mental processing when carrying load (examining tests of situational awareness wearing 30% of body weight). This finding, in conjunction with the aforementioned findings of this review, could be significant for both LEO and military populations, where critical thinking and decision making skills are vital, often for extended periods of time and under significant levels of stress.

Perceived impacts of body armour

Increases in RPE reported by participants wearing body armour, compared with their unloaded peers or unloaded time periods, revealed that even in the absence of quantitative measurement of physiological effects, individuals equipped with armour perceived that more effort was required to complete a task, regardless of whether or not the subjects recruited were from a tactical background. These results were consistent with other research within the general field of load carriage, where increased loading increases subjective ratings of effort [52]. In contrast, one review by Larsen et al. [53] found no consensus across reviewed study reports regarding exertion effects of wearing body armour, but this is likely because research considering non-tactical (Emergency Medical Services, firefighting) populations was included in the search strategy.

Thermal effects of wearing body armour

While increases observed in the included studies in core temperature due to wearing body armour were mild (0.5 °C), it should be emphasized that these outcomes were assessed independently of the physical activities within the study protocol. Specifically, time exposed to the hot-humid test condition and loaded state proved to be the variables most strongly associated with temperature deviation from the control values [33]. Therefore, although core temperatures associated with heat illness were not found in data obtained through this review, personnel operating in high heat and high humidity environments should receive special consideration with respect to exertional heat illness and limits on time on duty when equipped with body armour.

Limitations

One notable limitation of this review was the generally small number of participants in each study (ranging from 6 to 52 subjects, mean = 22.16, median = 17). Furthermore, when each subfield was considered in isolation, participant numbers decreased further (e.g., the thermal effects studies had 9 [33] and 11 [34] participants). On this basis, the results should be considered with caution until larger studies (or a greater summation of smaller studies) can provide further supporting evidence.

Conclusions

Based on research quality and agreement across studies, this review determined that while effects of wearing body armour on marksmanship and various physiological parameters are still uncertain and deserving of further research, body armour does have significant biomechanical and physical performance impacts on the wearer, including: a) increased workload as assessed by a range of different physiological measures, b) decreased work capacity (measured as fitness test score deterioration), c) increased time to complete functional tasks, d) decreased balance and stability, e) increased GRF, f) increased RPE, and g) mild elevation of core temperatures. These occupationally relevant performance decrements may also lead to decreased cognitive capability and increased injury risk. For this reason, body armour should be carefully selected, with consideration of the levels of physical conditioning of the wearers and the degree to which the armour system can be ergonomically optimized for the individual wearer.

Abbreviations

- ALICE: All-purpose, lightweight, individual, carrying equipment
- CAS: Critical appraisal score
- CBRNE: Chemical, biological, radiological, nuclear, and high-yield explosive
- GRF: Ground reaction forces
- HRmax: Heart rate maximum
- LEO: Law enforcement officer
- PPE: Personal protective equipment
- RER: Respiratory exchange ratio
- ROTC: Reserve officer training corps
- RPE: Rate/Rating of perceived exertion

References

Bonneau J, Brown J. Physical ability, fitness and police work. J Clin Forensic Med. 1995;2:157–64.

Bock C, Stierli M, Hinton B, Orr R. The Functional Movement Screen as a predictor of police recruit occupational task performance. J Bodyw Mov Ther. 2016;20:310–5.

Dempsey PC, Handcock PJ, Rehrer NJ. Impact of police body armour and equipment on mobility. Appl Ergon. 2013;44:957–61.

Stubbs D, David G, Woods V, Beards S. Problems associated with police equipment carriage with body armour, including driving. Contemp Ergon. 2008;2008:23.

Carbone PD, Carlton SD, Stierli M, Orr RM. The impact of load carriage on the marksmanship of the tactical police officer: a pilot study. J Aust Strength and Cond. 2014;22:50–7.

Pryor RR, Colburn D, Crill MT, Hostler DP, Suyama J. Fitness characteristics of a suburban special weapons and tactics team. J Strength Cond Res. 2012;26:752–7.

Orr R. The history of the soldier’s load. Aust Army J. 2010;7:67–88.

Gawande A. Casualties of war — military care for the wounded from Iraq and Afghanistan. N Engl J Med. 2004;351:2471–5.

Orr RM, Pope R, Johnston V, Coyle J. Soldier occupational load carriage: a narrative review of associated injuries. Int J Inj Control Saf Promot. 2014;21:388–96.

Knapik JJ, Reynolds KL, Harman E. Soldier load carriage: historical, physiological, biomechanical, and medical aspects. Mil Med. 2004;169:45–56.

Orr R, Pope R, Johnston V, Coyle J. Soldier self-reported reductions in task performance associated with operational load carriage. J Aust Strength Cond. 2013;21:39–46.

Gleeson M. Immune function in sport and exercise. J Appl Physiol. 2007;103:693–9.

Orr R, Pope R, Peterson S, Hinton B, Stierli M. Leg power as an indicator for risk of injury or illness in police recruits. Int J Environ Res Public Health. 2016;13:237–47.

Carlton SD, Carbone PD, Stierli M, Orr RM. The impact of occupational load carriage on the mobility of the tactical police officer. J Aust Strength Cond. 2014;22:32–7.

Carlton SD, Orr RM. The impact of occupational load carriage on carrier mobility: a critical review of the literature. Int J Occup Saf Ergon. 2014;20:33–41.

Knapik JJ, Staab J, Bahrke M, Reynolds KL, Vogel JA, O’Connor J. Soldier performance and mood states following a strenuous road march. Mil Med. 1991;156:197–200.

Knapik JJ, Ang P, Meiselman H, et al. Soldier performance and strenuous road marching: influence of load mass and load distribution. Mil Med. 1997;162:62–7.

Rice VJ, Sharp M, Tharion WJ, Williamson T. Effects of a shoulder harness on litter carriage performance and post-carry fatigue of men and women. Natick: Military Performance Division US Army Research Institute of Environmental Medicine; 1999. p. 76.

Knapik JJ, Bahrke M, Staab J, Reynolds KL, Vogel JA, O'Connor J. Frequency of loaded road march training and performance on a loaded road march. T13-90. Natick: Military Performance Division US Army Research Institute of Environmental Medicine; 1990. p. 52.

Knapik J, Johnson R, Ang P, Meiselman H, Bensel C. Road march performance of special operations soldiers carrying various loads and load distributions. T14-93. Natick: Military Performance Division US Army Research Institute of Environmental Medicine; 1993. p. 136.

Johnson RF, Knapik JJ, Merullo DJ. Symptoms during load carrying: effects of mass and load distribution during a 20-km road march. Percept Mot Skills. 1995;81:331–8.

Mahoney CR, Hirsch E, Hasselquist L, Lesher LL, Lieberman HR. The effects of movement and physical exertion on soldier vigilance. Aviat Space Environ Med. 2007;78:B51–7.

Ricciardi R, Deuster PA, Talbot LA. Metabolic demands of body armor on physical performance in simulated conditions. Mil Med. 2008;173:817–24.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009;6(7):e1000097.

Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health. 1998;52:377–84.

Kennelly J. Methodological approach to assessing the evidence. Reducing racial/ethnic disparities in reproductive and perinatal outcomes. Springer; 2011. pp. 7-19.

Eng JJ, Teasell RW, Miller WC, Wolfe DL, Townson AF, Aubut J-A, et al. Spinal cord injury rehabilitation evidence: method of the SCIRE systematic review. Aust J Phys. 2002;48:43–9.

Ricciardi R, Deuster PA, Talbot LA. Effects of gender and body adiposity on physiological responses to physical work while wearing body armor. Mil Med. 2007;172:743–8.

Swain DP, Onate JA, Ringleb SI, Naik DN, DeMaio M. Effects of training on physical performance wearing personal protective equipment. Mil Med. 2010;175:664–70.

Sell TC, Pederson JJ, Abt JP, et al. The addition of body armor diminishes dynamic postural stability in military soldiers. Mil Med. 2013;178:76–81.

Phillips M, Bazrgari B, Shapiro R. The effects of military body armour on the lower back and knee mechanics during toe-touch and two-legged squat tasks. Ergonomics. 2015;58:492–503.

Phillips MP, Shapiro R, Bazrgari B. The effects of military body armour on the lower back and knee mechanics during box drop and prone to standing tasks. Ergonomics. 2016;59:682–91.

Caldwell JN, Engelen L, van der Henst C, Patterson MJ, Taylor NAS. The interaction of body armor, low-intensity exercise, and hot-humid conditions on physiological strain and cognitive function. Mil Med. 2011;176:488–93.

Larsen B, Netto K, Skovli D, Vincs K, Vu S, Aisbett B. Body armor, performance, and physiology during repeated high-intensity work tasks. Mil Med. 2012;177:1308–15.

Lenton G, Aisbett B, Neesham-Smith D, Carvajal A, Netto K. The effects of military body armour on trunk and hip kinematics during performance of manual handling tasks. Ergonomics. 2015;59(6):808–16.

Dempsey PC, Handcock PJ, Rehrer NJ. Body armour: the effect of load, exercise and distraction on landing forces. J Sports Sci. 2014;32:301–6.

Majumdar D, Srivastava KK, Purkayastha SS, Pichan G, Selvamurthy W. Physiological effects of wearing heavy body armour on male soldiers. Int J Ind Ergon. 1997;20:155–61.

Majchrzycka K, Brochocka A, Luczak A, Lężak K. Ergonomics assessment of composite ballistic inserts for bullet- and fragment-proof vests. Int J Occup Saf Ergon. 2013;19:387–96.

Roberts APJ, Cole JC. The effects of exercise and body armor on cognitive function in healthy volunteers. Mil Med. 2013;178:479–86.

Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;1:159–74.

Polcyn AF, Bessel CK, Harman EA, Obusek JP. The effects of load weight: a summary analysis of maximal performance, physiological, and biomechanical results from four studies of load-carriage systems, in: RTO meeting proceedings 56: soldier mobility: innovations in load carriage system design and evaluation. Kingston: Research and Technology Organisation/North Atlantic Treaty Organization; 2000.

Orr R, Poke D, Stierli M, Hinton B. The perceived effect of load carriage on marksmanship in the tactical athlete. J Sci Med Sport. 2015;18(6):98.

Frykman PN, Merullo DJ, Banderet LE, Gregorczyk K, Hasselquist L. Marksmanship deficits caused by an exhaustive whole-body lifting task with and without torso-borne loads. J Strength Cond Res. 2012;26:S30–6.

Hanlon W. Soldier performance and strenuous road marching: influence of load mass and load distribution. Mil Med. 1997;162:62–7.

Frykman PN, Harman E, Pandorf CE. Correlates of obstacle course performance among female soldiers carrying two different loads, in: RTO meeting proceedings 56: soldier mobility: innovations in load carriage system design and evaluation. Research and Technology Organisation/North Atlantic Treaty Organization: Kingston; 2000.

Roy TC, Knapik JJ, Ritland BM, Murphy N, Sharp MA. Risk factors for musculoskeletal injuries for soldiers deployed to Afghanistan. Aviat Space Environ Med. 2012;83:1060–6.

MacLeish KT. Armor and anesthesia: exposure, feeling, and the soldier’s body. Med Anthropol Q. 2012;26:49–68.

Taylor NAS, Burdon CA, van den Heuvel AMJ, et al. Balancing ballistic protection against physiological strain: evidence from laboratory and field trials. Appl Physiol Nutr Metab. 2016;41:117–24.

Wells J, Rupert N, Neal M. Impact damage analysis in a level III flexible body armor vest using XCT diagnostics. Ceram Eng Sci Proc. 2009;30(5):171.

Konitzer LN, Fargo MV, Brininger TL, Lim RM. Association between back, neck, and upper extremity musculoskeletal pain and the individual body armor. J Hand Ther. 2008;21:143–8.

May B, Tomporowski PD, Ferrara M. Effects of backpack load on balance and decisional processes. Mil Med. 2009;174:1308–12.

Vaananen M. The effects of a 4-day march on the lower extremities and hormone balance. Mil Med. 1997;162:118–22.

Larsen B, Netto K, Aisbett B. The effect of body armor on performance, thermal stress, and exertion: a critical review. Mil Med. 2011;176:1265–73.

Acknowledgements

We have no acknowledgements to add.

Funding

This critical review formed part of a larger scope of work which was partially funded by an Australian state law enforcement agency.

Availability of data and materials

The data is available from the corresponding author.

Authors’ contributions

All authors contributed to the conceptualisation and planning of the review and to the development of the search strategy. CT carried out the systematic search, study selection, data extraction and critical appraisal, and drafted the manuscript. RO participated in the study selection and critical appraisal, cross-checked data extraction and contributed to drafting the manuscript. RP settled any disagreements in critical appraisal and contributed to drafting the manuscript. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable

Ethics approval and consent to participate

Not applicable

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Tomes, C., Orr, R.M. & Pope, R. The impact of body armor on physical performance of law enforcement personnel: a systematic review. Ann of Occup and Environ Med 29, 14 (2017). https://doi.org/10.1186/s40557-017-0169-9
