Abstract

A search for a standard model Higgs boson produced in association with a top-quark pair and decaying to bottom quarks is presented. Events with hadronic jets and one or two oppositely charged leptons are selected from a data sample corresponding to an integrated luminosity of 19.5\(\,\text {fb}^\text {-1}\) collected by the CMS experiment at the LHC in \(\mathrm {p}\mathrm {p}\) collisions at a centre-of-mass energy of 8\(\,\hbox {TeV}\). In order to separate the signal from the larger \(\hbox {t}\overline{\hbox {t}}\) + jets background, this analysis uses a matrix element method that assigns a probability density value to each reconstructed event under signal or background hypotheses. The ratio between the two values is used in a maximum likelihood fit to extract the signal yield. The results are presented in terms of the measured signal strength modifier, \(\mu \), relative to the standard model prediction for a Higgs boson mass of 125\(\,\hbox {GeV}\). The observed (expected) exclusion limit at a 95 % confidence level is \(\mu <4.2\) (3.3), corresponding to a best fit value \(\hat{\mu }=1.2^{+1.6}_{-1.5}\).

1 Introduction

Following the discovery of a new boson with mass around 125\(\,\hbox {GeV}\) by the ATLAS and CMS Collaborations [1–3] at the CERN LHC, the measurement of its properties has become an important task in particle physics. The precise determination of its quantum numbers and couplings to gauge bosons and fermions will answer the question whether the newly discovered particle is the Higgs boson (\(\mathrm{H} \)) predicted by the standard model (SM) of particle physics, i.e. the quantum of the field responsible for the spontaneous breaking of the electroweak symmetry [4–9]. Conversely, any deviation from SM predictions will represent evidence of physics beyond our present knowledge, thus opening new horizons in high-energy physics. While the measurements performed with the data collected so far indicate overall consistency with the SM expectations [3, 10–13], it is necessary to continue improving on the measurement of all possible observables.

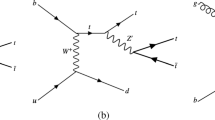

In the SM, the Higgs boson couples to fermions via Yukawa interactions with strength proportional to the fermion mass. Direct measurements of decays into bottom quarks and \(\tau \) leptons have provided the first evidence that the 125\(\,\hbox {GeV}\) Higgs boson couples to down-type fermions with SM-like strength [14]. Evidence of a direct coupling to up-type fermions, in particular to top quarks, is still lacking. Indirect constraints on the top-quark Yukawa coupling can be inferred from measuring either the production or the decay of Higgs bosons through effective couplings generated by top-quark loops. Current measurements of the Higgs boson cross section via gluon fusion and of its branching fraction to photons are consistent with the SM expectation for the top-quark Yukawa coupling [3, 10–12]. Since these effective couplings occur at the loop level, they can be affected by beyond-standard model (BSM) particles. In order to disentangle the top-quark Yukawa coupling from a possible BSM contribution, a direct measurement of the former is required. This can be achieved by measuring observables that probe the top-quark Yukawa interaction with the Higgs boson already at the tree-level. The production cross section of the Higgs boson in association with a top-quark pair (\(\hbox {t}\overline{\hbox {t}} \mathrm{H} \)) provides an example of such an observable. A sample of tree-level Feynman diagrams contributing to the partonic processes \(\hbox {q} \overline{\hbox {q}} ,\hbox {g} \hbox {g} \rightarrow \hbox {t}\overline{\hbox {t}} \mathrm{H} \) is shown in Fig. 1 (left and centre). 
The inclusive next-to-leading-order (NLO) \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) cross section is about \(130\,\text {fb} \) in \(\mathrm {p}\mathrm {p}\) collisions at a centre-of-mass energy \(\sqrt{s}=8\,\hbox {TeV} \) for a Higgs boson mass (\(m_\mathrm{H} \)) of 125\(\,\hbox {GeV}\) [15–24], which is approximately two orders of magnitude smaller than the cross section for Higgs boson production via gluon fusion [23, 24].

Fig. 1 Tree-level Feynman diagrams contributing to the partonic processes: (left) \(\hbox {q} \overline{\hbox {q}} \rightarrow \hbox {t}\overline{\hbox {t}} \mathrm{H} \), (centre) \(\hbox {g} \hbox {g} \rightarrow \hbox {t}\overline{\hbox {t}} \mathrm{H} \), and (right) \(\hbox {g} \hbox {g} \rightarrow \hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \)

The first search for \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) events used \(\mathrm {p}\mathrm {\overline{p}}\) collision data at \(\sqrt{s}=1.96\,\hbox {TeV} \) collected by the CDF experiment at the Tevatron collider [25]. Searches for \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) production at the LHC have previously been published for individual decay modes of the Higgs boson [26, 27]. The first combination of \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) searches in different final states has been published by the CMS Collaboration based on the full data set collected at \(\sqrt{s}=7\) and 8\(\,\hbox {TeV}\) [28]. Assuming SM branching fractions, the results of that analysis set a 95 % confidence level (CL) upper limit on the \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) signal strength at 4.5 times the SM value, while an upper limit of 1.7 times the SM is expected from the background-only hypothesis. The median expected exclusion limit for \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) production in the \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) channel alone is 3.5 in the absence of a signal.

The results of a search for \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) production in the decay channel \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) are presented in this paper based on \(\mathrm {p}\mathrm {p}\) collision data at \(\sqrt{s}=8\,\hbox {TeV} \) collected with the CMS detector [29] and corresponding to an integrated luminosity of 19.5\(\,\text {fb}^\text {-1}\). The analysis described here differs from that of Ref. [28] in the way events are categorized and in its use of an analytical matrix element method (MEM) [30, 31] for improving the separation of signal from background. Within the MEM technique, each reconstructed event is assigned a probability density value based on the theoretical differential cross section \(\sigma ^{-1}\hbox {d}\sigma /\hbox {d}\mathbf {y}\), where \(\mathbf {y}\) denotes the four-momenta of the reconstructed particles. Particle-level quantities that are either unknown (e.g. neutrino momenta, jet-parton associations) or poorly measured (e.g. quark energies) are marginalised by integration. The ratio between the probability density values for signal and background provides a discriminating variable suitable for testing the compatibility of an event with either of the two hypotheses [32].

The MEM has already been successfully used at the Tevatron collider in the context of Higgs boson searches [33, 34], although for simpler final states. A phenomenological feasibility study for a \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) measurement in the \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) decay channel at the LHC using the MEM was pioneered in Ref. [35], based on the MadWeight package [36] for automatised matrix-element calculations. The present paper makes use of an independent implementation of the MEM, specifically optimised for the final state of interest. This is the first time that the MEM has been applied to a search for \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) events. The final states typical of \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) events with \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \), which are characterised by a large combinatorial background, the presence of nonreconstructed particles, and small signal-to-background ratios, provide an ideal case for the deployment of the MEM. The analysis strategy is designed to maximise the separation between \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) and \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background events, in order to reduce the systematic uncertainty on the signal extraction related to the modelling of this challenging background.

This paper is organised as follows. Section 2 describes the main features of the CMS detector. Section 3 presents the data and simulation samples, while Sects. 4 and 5 discuss the reconstruction of physics objects and the event selection, respectively. Section 6 describes the signal extraction. The treatment of systematic uncertainties and the statistical interpretation of the results are discussed in Sects. 7 and 8, respectively. Section 9 summarises the results.

2 CMS detector

The central feature of the CMS apparatus is a superconducting solenoid of 6 \(\text {m}\) internal diameter, providing a magnetic field of 3.8 \(\text {T}\). Within the field volume are a silicon pixel and strip tracker, a lead tungstate crystal electromagnetic calorimeter (ECAL), and a brass and scintillator hadron calorimeter (HCAL), each composed of a barrel and two endcap sections. Muons are measured in gas-ionization detectors embedded in the steel flux-return yoke outside the solenoid. Extensive forward calorimetry complements the coverage provided by the barrel and endcap detectors. The first level of the CMS trigger system, composed of custom hardware processors, uses information from the calorimeters and muon detectors to select the most interesting events in a time interval of less than 4\(\,\upmu \text {s}\). The high-level trigger processor farm further decreases the event rate from around 100 \(\text {kHz}\) to around 1 \(\text {kHz}\), before data storage. A more detailed description of the CMS detector, together with a definition of the coordinate system used and the relevant kinematic variables can be found in Ref. [29].

3 Data and simulated samples

The data sample used in this search was collected with the CMS detector in 2012 from \(\mathrm {p}\mathrm {p}\) collisions at a centre-of-mass energy of 8\(\,\hbox {TeV}\), using single-electron, single-muon, or dielectron triggers. The single-electron trigger requires the presence of an isolated electron with transverse momentum (\(p_{\mathrm {T}}\)) in excess of 27\(\,\hbox {GeV}\). The single-muon trigger requires an isolated muon candidate with \(p_{\mathrm {T}}\) above 24\(\,\hbox {GeV}\). The dielectron trigger requires two isolated electrons with \(p_{\mathrm {T}}\) thresholds of 17 and 8\(\,\hbox {GeV}\).

Signal and background processes are modelled with Monte Carlo (MC) simulation programs. The CMS detector response is simulated by using the Geant4 software package [37]. Simulated events are required to pass the same trigger selection and offline reconstruction algorithms used on collision data. Correction factors are applied to the simulated samples to account for residual differences in the selection and reconstruction efficiencies with respect to those measured.

The \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \), \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) signal is modelled by using the pythia 6.426 [38] leading-order (LO) event generator normalised to the NLO theoretical cross section [15–24], and assuming the SM Higgs boson with a mass of 125\(\,\hbox {GeV}\). The main background in the analysis stems from \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) production. This process has been simulated with the MadGraph 5.1.3 [39] tree-level matrix element generator matched to pythia for the parton shower description, and normalised to the inclusive next-to-next-to-leading-order (NNLO) cross section with soft-gluon resummation at next-to-next-to-leading logarithmic accuracy [40]. The \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) sample has been generated in a five-flavour scheme with tree-level diagrams for two top quarks plus up to three extra partons, including both charm and bottom quarks. An additional correction factor to the \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) samples is applied to account for the differences observed in the top-quark \(p_{\mathrm {T}}\) spectrum when comparing the MadGraph simulation with data [41]. The interference between the \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \), \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) diagrams and the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background diagrams is negligible and is not considered in the MC simulation. Minor backgrounds come from the Drell–Yan production of an electroweak boson with additional jets (\(\mathrm {W}+\text {jets}\), \(\hbox {Z} +\text {jets}\)), and from the production of a top-quark pair in association with a \(\mathrm {W}^\pm \) or \(\hbox {Z} \) boson (\(\hbox {t}\overline{\hbox {t}} \mathrm {W}\), \(\hbox {t}\overline{\hbox {t}} \hbox {Z} \)). These processes have been generated by MadGraph matched to the pythia parton shower description. 
The Drell–Yan processes have been normalised to the NNLO inclusive cross section from fewz 3.1 [42], while the NLO calculations from Refs. [43, 44] are used to normalise the \(\hbox {t}\overline{\hbox {t}} \mathrm {W}\) and \(\hbox {t}\overline{\hbox {t}} \hbox {Z} \) samples, respectively. Single top quark production is modelled with the NLO generator powheg 1.0 [45–50] combined with pythia. Electroweak diboson processes (\(\mathrm {W}\mathrm {W}\), \(\mathrm {W}\hbox {Z} \), and \(\hbox {Z} \hbox {Z} \)) are simulated by using the pythia generator normalised to the NLO cross section calculated with mcfm 6.6 [51]. Processes that involve top quarks have been generated with a top-quark mass of 172.5\(\,\hbox {GeV}\). Samples generated at LO use the CTEQ6L1 parton distribution function (PDF) set [52], while samples generated with NLO programs use the CTEQ6.6M PDF set [53].

Effects from additional \(\mathrm {p}\mathrm {p}\) interactions in the same bunch crossing (pileup) are modelled by adding simulated minimum bias events (generated with pythia) to the generated hard interactions. The pileup multiplicity in the MC simulation is reweighted to reflect the luminosity profile observed in \(\mathrm {p}\mathrm {p}\) collision data.

4 Event reconstruction

The global event reconstruction provided by the particle-flow (PF) algorithm [54, 55] seeds the reconstruction of the physics objects deployed in the analysis. To minimise the impact of pileup, charged particles are required to originate from the primary vertex, which is identified as the reconstructed vertex with the largest value of \(\sum p_{\mathrm {T},i}^2\), where \(p_{\mathrm {T},i}\) is the transverse momentum of the \(i\)th charged particle associated with the vertex. The missing transverse momentum vector \({\mathbf {p}}_{\mathrm {T}}^{\text {miss}} \) is defined as the negative vector sum of the transverse momenta of all neutral particles and of the charged particles coming from the primary vertex. Its magnitude is referred to as \(E_{\mathrm {T}}^{\text {miss}} \).
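
The primary-vertex choice described above amounts to a simple maximisation. The following sketch picks the vertex with the largest \(\sum p_{\mathrm {T},i}^2\); the function name `select_primary_vertex` and the toy track lists are assumptions made for this example and do not reflect the CMS reconstruction software.

```python
def select_primary_vertex(vertices):
    """Return the index of the vertex with the largest sum of track pT^2.

    `vertices` is a list of lists; each inner list holds the transverse
    momenta (in GeV) of the charged particles associated with one vertex.
    """
    sums = [sum(pt * pt for pt in tracks) for tracks in vertices]
    return max(range(len(vertices)), key=lambda i: sums[i])


# Toy event with three reconstructed vertices (hypothetical values).
vertices = [
    [1.0, 2.0, 1.5],        # soft pileup-like vertex
    [35.0, 28.0, 4.0],      # hard-scatter candidate
    [3.0, 3.0, 3.0, 3.0],   # another soft vertex
]
primary_index = select_primary_vertex(vertices)
```

Squaring the transverse momenta favours vertices with a few hard tracks over vertices with many soft ones, which is why a track multiplicity criterion alone would be less effective.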

Muons are reconstructed from a combination of measurements in the silicon tracker and in the muon system [56]. Electron reconstruction requires the matching of an energy cluster in the ECAL with a track in the silicon tracker [57]. Additional identification criteria are applied to muon and electron candidates to reduce instrumental backgrounds. An isolation variable is defined starting from the scalar \(p_{\mathrm {T}}\) sum of all particles contained inside a cone around the track direction, excluding the contribution from the lepton itself. The amount of neutral pileup energy is estimated as the average \(p_{\mathrm {T}}\) density calculated from all neutral particles in the event multiplied by an effective area of the isolation cone, and is subtracted from the total sum.
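
A minimal sketch of the pileup-corrected isolation sum is given below. It follows the description above literally (cone sum minus density times effective area); the cone size of 0.4, the function names, and the toy inputs are assumptions, since the text does not quote them.

```python
import math


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance in the (eta, phi) plane, with phi wrapped to [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def corrected_isolation(lepton, particles, rho_neutral, effective_area, cone=0.4):
    """Scalar pT sum in a cone around the lepton, minus the pileup estimate.

    lepton:    (eta, phi) of the lepton track
    particles: list of (pt, eta, phi), with the lepton itself already excluded
    rho_neutral * effective_area is the estimated neutral pileup contribution.
    The cone size is an assumed value, not taken from the text.
    """
    cone_sum = sum(
        pt for pt, eta, phi in particles
        if delta_r(lepton[0], lepton[1], eta, phi) < cone
    )
    return cone_sum - rho_neutral * effective_area


# Toy inputs (hypothetical): two particles inside the cone, one far away.
lepton = (0.5, 1.0)
particles = [(2.0, 0.6, 1.1), (3.0, 0.5, 1.3), (10.0, 2.0, -2.0)]
isolation = corrected_isolation(lepton, particles, rho_neutral=5.0, effective_area=0.1)
```

Whether the corrected sum is clipped at zero or divided by the lepton \(p_{\mathrm {T}}\) is not specified in the text, so neither step is applied here.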

Jets are reconstructed by using the anti-\(k_{\mathrm {T}}\) clustering algorithm [58], as implemented in the FastJet package [59, 60], with a distance parameter of 0.5. Each jet is required to have pseudorapidity (\(\eta \)) in the range \([-2.5,2.5]\), to have at least two tracks associated with it, and to have electromagnetic and hadronic energy fractions of at least 1 % of the total jet energy. Jet momentum is determined as the vector sum of the momenta of all particles in the jet. An offset correction is applied to take into account the extra energy clustered in jets because of pileup. Jet energy corrections are derived from the simulation, and are confirmed with in situ measurements of the energy balance of dijet and \(\hbox {Z}/\gamma \) + jet events [61]. Additional selection criteria are applied to each event to remove spurious jet-like features originating from isolated noise patterns in a few HCAL regions.

The combined secondary vertex (CSV) \(\hbox {b}\)-tagging algorithm is used to identify jets originating from the hadronisation of bottom quarks [62]. This algorithm combines the information about track impact parameters and secondary vertices within jets into a likelihood discriminant to provide separation of \(\hbox {b}\)-quark jets from jets that originate from lighter quarks or gluons. The CSV algorithm assigns to each jet a continuous value that can be used as a jet flavour discriminator. Large values of the discriminator correspond preferentially to \(\hbox {b}\)-quark jets, so that working points of increasing purity can be defined by requiring higher values of the CSV discriminator. For example, the CSV medium working point (CSVM) is defined in such a way as to provide an efficiency of about 70 % (20 %) to tag jets originating from a bottom (charm) quark, and of approximately 2 % for jets originating from light quarks or gluons. Scale factors are applied to the simulation to match the distribution of the CSV discriminator measured with a tag-and-probe technique [63] in data control regions. The scale factors have been derived as a function of the jet flavour, \(p_{\mathrm {T}} \), and \(|\eta |\), as described in Ref. [28].

5 Event selection

The experimental signature of \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) events with \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) is affected by a large multijet background which can be reduced to a negligible level by only considering the semileptonic decays of the top quark. The selection criteria are therefore optimised to accept events compatible with a \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) signal where \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) and at least one of the top quarks decays to a bottom quark, a charged lepton, and a neutrino. Events are divided into two exclusive channels depending on the number of charged leptons (electrons or muons), which can be either one or two. Top quark decays in final states with tau leptons are not directly searched for, although they can still satisfy the event selection criteria when the tau lepton decays to an electron or muon, plus neutrinos. Channels of different lepton multiplicities are analysed separately. The single-lepton (SL) channel requires one isolated muon with \(p_{\mathrm {T}} >30\,\hbox {GeV} \) and \(|\eta | < 2.1\), or one isolated electron with \(p_{\mathrm {T}} >30\,\hbox {GeV} \) and \(|\eta | < 2.5\), excluding the \(1.44 < |\eta | < 1.57\) transition region between the ECAL barrel and endcap. Events are vetoed if additional electrons or muons with \(p_{\mathrm {T}} \) in excess of 20\(\,\hbox {GeV}\), the same \(|\eta |\) requirement, and passing some looser identification and isolation criteria are found. The dilepton (DL) channel collects events with a pair of oppositely charged leptons satisfying the selection criteria used to veto additional leptons in the SL channel. To reduce the contribution from Drell–Yan events in the same-flavour DL channel, the invariant mass of the lepton pair is required to be larger than 15\(\,\hbox {GeV}\) and at least 8\(\,\hbox {GeV}\) away from the \(\hbox {Z} \) boson mass. 
Figure 2 (top) shows the jet multiplicity in the SL (left) and DL (right) channels, while the bottom left panel of the same figure shows the multiplicity of jets passing the CSVM working point in the SL channel.
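
The Drell–Yan rejection in the same-flavour DL channel can be sketched as follows. This is an illustrative example only: leptons are treated as massless, the \(\hbox {Z} \) boson mass value is an assumption (the text does not quote it), and the function names are hypothetical.

```python
import math

Z_MASS = 91.19  # GeV; assumed value, not quoted in the text


def invariant_mass(lep1, lep2):
    """Invariant mass of two massless leptons, each given as (pt, eta, phi)."""
    pt1, eta1, phi1 = lep1
    pt2, eta2, phi2 = lep2
    m2 = 2.0 * pt1 * pt2 * (math.cosh(eta1 - eta2) - math.cos(phi1 - phi2))
    return math.sqrt(max(m2, 0.0))


def passes_dilepton_mass_cuts(lep1, lep2, same_flavour):
    """Apply the mass requirements to same-flavour pairs only.

    Requires m > 15 GeV and |m - m_Z| >= 8 GeV; pairs of different flavour
    are accepted without a mass requirement in this sketch.
    """
    if not same_flavour:
        return True
    mass = invariant_mass(lep1, lep2)
    return mass > 15.0 and abs(mass - Z_MASS) >= 8.0


# Back-to-back leptons with pT = 30 GeV each give m = 60 GeV.
accepted = passes_dilepton_mass_cuts((30.0, 0.0, 0.0), (30.0, 0.0, math.pi), True)
```

Applying both requirements only to same-flavour pairs is one reading of the sentence above; an analysis that also imposes the 15 GeV threshold on electron-muon pairs would need a small change to `passes_dilepton_mass_cuts`.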

Fig. 2 Top row: distribution of the jet multiplicity in (left) single-lepton and (right) dilepton events, after requiring that at least two jets pass the CSVM working point. Bottom left: distribution of the multiplicity of jets passing the CSVM working point in single-lepton events with at least four jets. Bottom right: distribution of the selection variable \(\mathcal {F}\) defined in Eq. (2) for single-lepton events with at least six jets after requiring a loose preselection of at least one jet passing the CSVM working point. The plots at the bottom of each panel show the ratio between the observed data and the background expectation predicted by the simulation. The shaded and solid green bands correspond to the total statistical plus systematic uncertainty in the background expectation described in Sect. 7. More details on the background modelling are provided in Sect. 6.3.

The optimisation of the selection criteria in terms of signal-to-background ratio requires a stringent demand on the number of jets. At least five (four) jets with \(p_{\mathrm {T}} >30\,\hbox {GeV} \) and \(|\eta |<2.5\) are requested in the SL (DL) channel. A further event selection is required to reduce the \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) background, which at this stage exceeds the signal rate by more than three orders of magnitude. For this purpose, the CSV discriminator values are calculated for all jets in the event and collectively denoted by \(\mathbf {\xi }\). For SL (DL) events with seven or more (five or more) jets, only the six (four) jets with the largest CSV discriminator value are considered. The likelihood to observe \(\mathbf {\xi }\) is then evaluated under the alternative hypotheses of \(\hbox {t}\overline{\hbox {t}}\) plus two heavy-flavour jets (\(\hbox {t}\overline{\hbox {t}} +\mathrm {hf}\)) or \(\hbox {t}\overline{\hbox {t}}\) plus two light-flavour jets (\(\hbox {t}\overline{\hbox {t}} +\mathrm {lf}\)). For example, for SL events with six jets, and neglecting correlations among different jets in the same event, the likelihood under the \(\hbox {t}\overline{\hbox {t}} +\mathrm {hf}\) hypothesis is estimated as:

\[ f(\mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {hf}) = \sum _{i_1}\sum _{i_2\ne i_1}\ldots \sum _{i_6\ne i_1,\ldots ,i_5} \Bigg \{ \prod _{k\in \{i_1,i_2,i_3,i_4\}} f_{\mathrm {hf}}(\xi _{k}) \prod _{m\in \{i_5,i_6\}} f_{\mathrm {lf}}(\xi _{m}) \Bigg \}, \qquad (1) \]

where \(\xi _{i}\) is the CSV discriminator for the \(i\)th jet, and \(f_{\mathrm {hf}\mathrm {(lf)}}\) is the probability density function (pdf) of \(\xi _{i}\) when the \(i\)th jet originates from heavy- (light-)flavour partons. The latter include \(\hbox {u} \), \(\hbox {d} \), \(\hbox {s} \) quarks and gluons, but not \(\hbox {c} \) quarks. Charm quarks are not treated separately for the sake of simplicity: the likelihood in Eq. (1) is therefore rigorous for \(\mathrm {W}\rightarrow \hbox {u} \overline{\hbox {d}} (\overline{\hbox {s}})\) decays, whereas it is only approximate for \(\mathrm {W}\rightarrow \hbox {c} \overline{\hbox {s}} (\overline{\hbox {d}})\) decays, since the CSV discriminator pdf for charm quarks differs from \(f_\mathrm {lf}\) [62]. Equation (1) can be extended to the case of SL events with five jets, or DL events with at least four jets, by considering that in both cases four of the jets are associated with heavy-flavour partons, and the remaining jets with light-flavour partons. The likelihood under the alternative hypothesis, \(f(\mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {lf})\), is given by Eq. (1) after replacing \(f_{\mathrm {hf}}\) with \(f_{\mathrm {lf}}\) for two of the four heavy-flavour jets. The variable used to select events is then defined as the likelihood ratio

\[ \mathcal {F}(\mathbf {\xi }) = \frac{f(\mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {hf})}{f(\mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {hf}) + f(\mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {lf})}. \qquad (2) \]

The distribution of \(\mathcal {F}\) for SL events with six jets is shown in Fig. 2 (bottom right).
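
To make the construction of \(\mathcal {F}\) concrete, the sketch below evaluates it for a six-jet SL event. It is an illustration under stated assumptions rather than the analysis code: the pdfs `f_hf` and `f_lf` are toy shapes invented for this example (the real ones are derived from simulation), and the sum runs over unordered jet assignments, a choice made here that for six jets rescales both likelihoods by the same constant and therefore leaves the ratio unchanged.

```python
from itertools import combinations
from math import prod


def f_hf(x):
    """Toy CSV pdf for heavy-flavour jets on [0, 1] (hypothetical shape)."""
    return 3.0 * x * x


def f_lf(x):
    """Toy CSV pdf for light-flavour jets on [0, 1] (hypothetical shape)."""
    return 3.0 * (1.0 - x) ** 2


def likelihood(xi, n_heavy):
    """Sum over all ways of labelling n_heavy of the jets as heavy flavour.

    Correlations among jets are neglected, so each term is a plain product
    of single-jet pdf values.
    """
    jets = range(len(xi))
    total = 0.0
    for heavy in combinations(jets, n_heavy):
        total += prod(f_hf(xi[i]) if i in heavy else f_lf(xi[i]) for i in jets)
    return total


def selection_variable(xi):
    """F = L(tt+hf) / (L(tt+hf) + L(tt+lf)) for a six-jet single-lepton event."""
    l_hf = likelihood(xi, n_heavy=4)  # two b jets from top decays plus two extra
    l_lf = likelihood(xi, n_heavy=2)  # only the two b jets from top decays
    return l_hf / (l_hf + l_lf)


# Toy event with four b-like and two light-like discriminator values.
f_value = selection_variable([0.95, 0.90, 0.92, 0.97, 0.10, 0.05])
```

With these toy pdfs, an event with four jets at high discriminator values yields \(\mathcal {F}\) close to one, whereas an event with only two such jets yields \(\mathcal {F}\) close to zero.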

In the following, events are retained if \(\mathcal {F}\) is larger than a threshold value \(\mathcal {F}_{\mathrm {L}}\) ranging between 0.85 and 0.97, depending on the channel and jet multiplicity. The selected events are further classified as high-purity (low-purity) if \(\mathcal {F}\) is larger (smaller) than a value \(\mathcal {F}_{\mathrm{H}}\), with \(\mathcal {F}_{\mathrm {L}}<\mathcal {F}_{\mathrm{H}}<1.0\). The low-purity categories serve as control regions for \(\hbox {t}\overline{\hbox {t}} +\mathrm {lf}\) jets, providing constraints on several sources of systematic uncertainty. The high-purity categories are enriched in \(\hbox {t}\overline{\hbox {t}} +\mathrm {hf}\) events, and drive the sensitivity of the analysis. The thresholds \(\mathcal {F}_{\mathrm {L}}\) and \(\mathcal {F}_{\mathrm{H}}\) are optimised separately for each of the analysis categories defined in Sect. 6. The exact values are reported in Table 1.
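
The two-threshold categorisation can be summarised in a few lines. In this sketch the threshold values passed in the example call are placeholders, not the values of Table 1, and assigning an event with \(\mathcal {F}\) exactly equal to \(\mathcal {F}_{\mathrm{H}}\) to the low-purity category is an arbitrary convention chosen here.

```python
def classify_event(f_value, f_low, f_high):
    """Return 'rejected', 'low-purity' or 'high-purity' for one event.

    f_low and f_high play the roles of F_L and F_H and must satisfy
    F_L < F_H < 1.
    """
    if not f_low < f_high < 1.0:
        raise ValueError("thresholds must satisfy F_L < F_H < 1")
    if f_value <= f_low:
        return "rejected"
    return "high-purity" if f_value > f_high else "low-purity"


# Placeholder thresholds for illustration only.
category = classify_event(0.95, f_low=0.90, f_high=0.98)
```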

After requiring a lower threshold on the selection variable \(\mathcal {F}\), the background is dominated by \(\hbox {t}\overline{\hbox {t}} +\text {jets}\), with minor contributions from the production of a single top quark plus jets, \(\hbox {t}\overline{\hbox {t}}\) plus vector bosons, and \(\mathrm {W}/\hbox {Z} \) + jets; the expected purity for a SM Higgs boson signal is only at the percent level. By construction, the selection criteria based on Eq. (2) enhance the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) subprocess compared to the otherwise dominant \(\hbox {t}\overline{\hbox {t}} +\mathrm {lf}\) production. The \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background has the same final state as the signal whenever the two \(\hbox {b}\) quarks are resolved as individual jets. Therefore, this background cannot be effectively reduced by means of the \(\mathcal {F}\) discriminant. The cross section for \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) production with two resolved \(\hbox {b}\)-quark jets is larger than that of the signal by about one order of magnitude and is affected by sizable theoretical uncertainties [64], which hampers the possibility of extracting the signal via a counting experiment. A more refined approach, which thoroughly uses the kinematic properties of the reconstructed event, is therefore required to improve the separation between the signal and the background.

6 Signal extraction

As in other resonance searches, the invariant mass reconstructed from the \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) decay provides a natural discriminating variable to separate the narrow Higgs boson dijet resonance from the continuum mass spectrum expected from the \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) background. However, in the presence of additional \(\hbox {b}\) quarks from the decay of the top quarks, an ambiguity in the Higgs boson reconstruction is introduced, leading to a combinatorial background. The distribution of the experimental mass estimator built from a randomly selected jet pair is much broader than the detector resolution, since wrongly chosen jet pairs are only mildly or not at all correlated with \(m_\mathrm{H} \). Unless a selection rule is introduced to filter out the wrong combinations, such a combinatorial background suppresses the statistical power of the mass estimator, and this suppression grows as the factorial of the jet multiplicity. Multivariate techniques that exploit the correlation between several observables in the same event are naturally suited to deal with signal extraction in such complex final states.

In this paper, a likelihood technique based on the theoretical matrix elements for the \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) process and the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background is applied for signal extraction. This method utilises the kinematics and dynamics of the event, providing a powerful discriminant between the signal and background. The \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) matrix elements are considered as the prototype to model all background processes. This choice guarantees optimal separation between the signal and the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background, which is a desirable property given the large rate and theoretical uncertainty in the latter. The performance on the other \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) subprocesses might not be necessarily optimal, even though some separation power is still preserved; indeed, the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) matrix elements describe these processes better than the signal matrix elements do, as it has been verified a posteriori with the simulation. More specifically, the shapes of the matrix element discriminant predicted by the simulation for the different \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) subprocesses are found to be similar to each other, with a slightly better separation power for the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background. The approximate degeneracy in shape between several processes can be ascribed to a smearing effect of the combinatorial background, as well as to the impact of the Higgs boson mass constraint on the calculation of the event likelihood under the signal hypothesis. The latter provides a similar discrimination against all \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) subprocesses. 
A slightly worse separation power is instead observed for minor backgrounds, such as single top quark or \(\hbox {t}\overline{\hbox {t}} \hbox {Z} \) events, for which neither of the two matrix elements tested really applies. However, all of the background processes analysed are found to yield discriminant shapes that can be well distinguished from that for the signal. Also, it is found that most of the statistical power attained by this method in separating \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \), \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) from \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) events relies on the different correlation and kinematic distributions of the two \(\hbox {b}\)-quark jets not associated with the top quark decays.

6.1 Construction of the MEM probability density functions

The MEM probability density functions under the signal and background hypotheses are constructed at LO assuming for simplicity that in both cases the reactions proceed via gluon fusion. At \(\sqrt{s}=8\,\hbox {TeV} \), the fraction of the gluon-gluon initiated subprocesses is about 55 % (65 %) of the inclusive LO (NLO) cross section, and it grows with the centre-of-mass energy [21]. Examples of diagrams entering the calculation are shown in the middle and right panels of Fig. 1. All possible jet-quark associations in the reconstruction of the final state are considered. For each event, the MEM probability density function \(w(\mathbf {y}|\mathcal {H})\) under the hypothesis \(\mathcal {H}=\hbox {t}\overline{\hbox {t}} \mathrm{H} \) or \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) is calculated as:

\[ w(\mathbf {y}|\mathcal {H}) = \sum _{i=1}^{N_{a}} \int \frac{\hbox {d}x_{a}\,\hbox {d}x_{b}}{2x_{a}x_{b}s} \int \prod _{k=1}^{8} \left( \frac{\hbox {d}^3\mathbf {p}_{k}}{(2\pi )^3\,2E_{k}} \right) (2\pi )^4\, \delta ^{(E,z)} \left( p_{a}+p_{b}-\sum _{k=1}^{8}p_{k} \right) \mathcal {R}^{(x,y)} \left( \mathbf {\rho }_{\mathrm {T}}, \sum _{k=1}^{8}\mathbf {p}_{k} \right) g(x_{a},\mu _{\mathrm {F}})\, g(x_{b},\mu _{\mathrm {F}})\, \left| \mathcal {M}_{\mathcal {H}}(p_{a},p_{b},p_{1},\ldots ,p_{8}) \right| ^2\, W(\mathbf {y},\mathbf {p}), \qquad (3) \]

where \(\mathbf {y}\) denotes the set of observables for which the matrix element pdf is constructed, i.e. the momenta of jets and leptons. The sum extends over the \(N_{a}\) possibilities of associating the jets with the final-state quarks. The integration on the right-hand side of Eq. (3) is performed over the phase space of the final-state particles and over the gluon energy fractions \(x_{a,b}\) by using the vegas [65] algorithm. The four-momenta of the initial-state gluons \(p_{a,b}\) are related to the four-momenta of the colliding protons \(P_{a,b}\) by the relation \(p_{a,b}=x_{a,b}P_{a,b}\). The delta function enforces the conservation of longitudinal momentum and energy between the incoming gluons and the \(k=1,\hdots ,8\) outgoing particles with four-momenta \(p_{k}\). To account for the possibility of initial- or final-state radiation, the total transverse momentum of the final-state particles, which should be identically zero at LO, is instead loosely constrained by the resolution function \(\mathcal {R}^{(x,y)}\) to the measured transverse recoil \(\mathbf {\rho }_{\mathrm {T}}\), defined as the negative of the total transverse momentum of jets and leptons, plus the missing transverse momentum.

The remaining part of the integrand in Eq. (3) contains the product of the gluon PDFs in the protons (\(g\)), the square of the scattering amplitude (\(\mathcal {M}\)), and the transfer function (\(W\)). For \(\mathcal {H}=\hbox {t}\overline{\hbox {t}} \mathrm{H} \), the factorisation scale \(\mu _{\mathrm {F}}\) entering the PDF is taken as half of the sum of twice the top-quark mass and the Higgs boson mass [20], while for \(\mathcal {H}=\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) a dynamic scale equal to the quadratic sum of the transverse masses of all coloured partons is used [66]. The scattering amplitude for the hard process is evaluated numerically at LO accuracy by the program OpenLoops [67]; all resonances are treated in the narrow-width approximation [68], and spin correlations are neglected. The transfer function \(W\left( \mathbf {y} , \mathbf {p} \right) \) provides a mapping between the measured set of observables \(\mathbf {y}\) and the final-state particle momenta \( \mathbf {p}=(\mathbf {p}_1, \ldots , \mathbf {p}_8)\). Given the good angular resolution of jets, the direction of quarks is assumed to be perfectly measured by the direction of the associated jets. Also, since the energies of leptons are measured more precisely than those of jets, their momenta are considered perfectly measured. Under these assumptions, the total transfer function reduces to the product of the quark energy transfer function times the probability for the quarks that are not reconstructed as jets to fail the acceptance criteria. The quark energy transfer function is modelled by a single Gaussian function for jets associated with light-flavour partons, and by a double Gaussian function for jets associated with bottom quarks; the latter is constructed by superimposing two Gaussian functions with different mean and standard deviation.
Such an asymmetric parametrisation provides a good description of both the core of the detector energy response and the low-energy tail arising from semileptonic B hadron decays. The parametrisation of the transfer functions has been derived from MC simulated samples.
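The single- and double-Gaussian energy transfer functions described above can be illustrated with a minimal sketch. All numerical parameters (relative resolutions, the core fraction, the shift of the second Gaussian) are hypothetical placeholders chosen for illustration; the analysis derives its own parametrisation from simulation.

```python
import math

def gaussian(x, mean, sigma):
    """Normalised Gaussian density."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def tf_light(e_jet, e_quark, rel_sigma=0.15):
    """Single Gaussian: density of measuring e_jet given a light quark of energy e_quark.
    rel_sigma is a hypothetical relative resolution."""
    return gaussian(e_jet, e_quark, rel_sigma * e_quark)

def tf_bottom(e_jet, e_quark, frac_core=0.7, core=(1.0, 0.12), tail=(0.85, 0.25)):
    """Double Gaussian: a core plus a second, wider Gaussian shifted to lower energy.
    core and tail hold hypothetical (mean/e_quark, sigma/e_quark) pairs."""
    (m1, s1), (m2, s2) = core, tail
    return (frac_core * gaussian(e_jet, m1 * e_quark, s1 * e_quark)
            + (1.0 - frac_core) * gaussian(e_jet, m2 * e_quark, s2 * e_quark))

def integrate(f, lo, hi, n=20000):
    """Midpoint-rule integral, used only to check the normalisation."""
    h = (hi - lo) / n
    return sum(f(lo + (i + 0.5) * h) for i in range(n)) * h
```

With these placeholder values, `tf_bottom` is asymmetric around the quark energy: a jet measured 20 GeV below a 100 GeV bottom quark is more likely than one measured 20 GeV above it, which mimics the low-energy tail from semileptonic B hadron decays.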

6.2 Event categorisation

To aid the evaluation of the MEM probability density functions at LO, events are classified into mutually exclusive categories based on different parton-level interpretations. Firstly, the set of jets yielding the largest contribution to the sum defined by Eq. (1) determines the four (tagged) jets associated with bottom quarks; the remaining \(N_\text {untag}\) (untagged) jets are assumed to originate either from \(\mathrm {W}\rightarrow \hbox {q} \overline{\hbox {q}} '\) decays (SL channel) or from initial- or final-state gluon radiation (SL and DL channels). There still remains a twelve-fold ambiguity in the determination of the parton matched to each jet, which is reflected by the sum in Eq. (3). Indeed, without distinguishing between \(\hbox {b}\) and \(\overline{\hbox {b}}\) quarks, there exist \(4!/(2!\,2!) = 6\) combinations for assigning two jets out of four to the Higgs boson decay (\(\mathcal {H}=\hbox {t}\overline{\hbox {t}} \mathrm{H} \)), or to the bottom quark-pair radiation (\(\mathcal {H}=\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \)); for each of these possibilities, there are two more ways of assigning the remaining tagged jets to either the \(\hbox {t}\) or \(\overline{\hbox {t}}\) quark, thus giving a total of twelve associations. In the SL channel, an event can be classified in one of three possible categories. The first category (Cat-1) is defined by requiring at least six jets; if there are exactly six jets, the mass of the two untagged jets is required to be in the range \(\left[ 60,100\right] \) \(\,\hbox {GeV}\), i.e. compatible with the mass of the \(\mathrm {W}\) boson. If the number of jets is larger than six, the mass range is tightened to compensate for the increased ambiguity in selecting the correct \(\mathrm {W}\) boson decay products.
In the event interpretation, the \(\mathrm {W}\rightarrow \hbox {q} \overline{\hbox {q}} '\) decay is assumed to be fully reconstructed, with the two quarks identified with the jet pair satisfying the mass constraint. The definition of the second category (Cat-2) differs from that of Cat-1 by the inversion of the dijet mass constraint. This time, the event interpretation assumes that one of the quarks from the \(\mathrm {W}\) boson decay has failed the reconstruction. The integration on the right-hand side of Eq. (3) is extended to include the phase space of the nonreconstructed quark. The other untagged jet(s) is (are) interpreted as gluon radiation, and do not enter the calculation of \(w(\mathbf {y}|\mathcal {H})\). The total number of associations considered is twelve times the multiplicity of untagged jets eligible to originate from the \(\mathrm {W}\) boson decay: \(N_{a}=12 N_\text {untag}\). In the third category (Cat-3), exactly five jets are required, and an incomplete \(\mathrm {W}\) boson reconstruction is again assumed. In the DL channel, only one event interpretation is considered, namely that each of the four bottom quarks in the decay is associated with one of the four tagged jets.
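The counting of jet-quark associations described above can be checked by brute-force enumeration. The sketch below is illustrative only; the jet labels and dictionary keys are hypothetical names, not part of the analysis code.

```python
from itertools import combinations, permutations

def tagged_associations(tagged):
    """Enumerate the ways to assign four tagged jets: an unordered pair goes to the
    Higgs boson decay (or to the extra b-quark pair), and the other two jets are
    assigned, in order, to the b quark from the t and from the tbar decay."""
    out = []
    for pair in combinations(tagged, 2):                    # 4!/(2! 2!) = 6 choices
        rest = [j for j in tagged if j not in pair]
        for b_from_t, b_from_tbar in permutations(rest, 2):  # 2 orderings
            out.append({"bb_pair": pair, "b_from_t": b_from_t, "b_from_tbar": b_from_tbar})
    return out

def cat2_associations(tagged, untagged):
    """Cat-2 style interpretation: each untagged jet in turn is taken as the single
    reconstructed quark from the W boson decay, giving 12 * N_untag associations."""
    return [dict(a, w_quark=u) for a in tagged_associations(tagged) for u in untagged]

if __name__ == "__main__":
    tagged = ["b1", "b2", "b3", "b4"]
    print(len(tagged_associations(tagged)))                    # 12
    print(len(cat2_associations(tagged, ["u1", "u2", "u3"])))  # 36
```

The first count reproduces the twelve-fold ambiguity for four tagged jets, and the second reproduces \(N_{a}=12 N_\text {untag}\) for three eligible untagged jets.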

Finally, two event discriminants, denoted by \(P_{\mathrm {s/b}}\) and \(P_{\mathrm {h/l}}\), are defined. The former encodes only information from the event kinematics and dynamics via Eq. (3), and is therefore suited to separate the signal from the background; the latter contains only information related to \(\hbox {b}\) tagging, thus providing a handle to distinguish between the heavy- and the light-flavour components of the \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) background. They are defined as follows:

\[P_{\mathrm {s/b}} = \frac{w(\mathbf {y} | \hbox {t}\overline{\hbox {t}} \mathrm{H})}{w(\mathbf {y} | \hbox {t}\overline{\hbox {t}} \mathrm{H}) + k_{\mathrm {s/b}}\, w(\mathbf {y} | \hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}})}\]

and

\[P_{\mathrm {h/l}} = \frac{f( \mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {hf})}{f( \mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {hf}) + k_{\mathrm {h/l}}\, f( \mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {lf})}\]

where the functions \(f( \mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {hf})\) and \(f( \mathbf {\xi } | \hbox {t}\overline{\hbox {t}} +\mathrm {lf})\) are defined as in Eq. (1) but restricting the sum only to the jet-quark associations considered in the calculation of \(w(\mathbf {y})\); the coefficients \(k_{\mathrm {s/b}}\) and \(k_{\mathrm {h/l}}\) in the denominators are positive constants that can differ among the categories and are treated as optimisation parameters, as described below.

The joint distribution of the \((P_{\mathrm {s/b}},P_{\mathrm {h/l}})\) discriminants is used in a two-dimensional maximum likelihood fit to search for events resulting from Higgs boson production. By construction, the two discriminants satisfy the constraint \(0 \le P_{\mathrm {s/b}}, P_{\mathrm {h/l}} \le 1\). Because of the limited size of the simulated samples, the distributions of \(P_{\mathrm {s/b}}\) and \(P_{\mathrm {h/l}}\) are binned. A finer binning is used for the former, which carries the largest sensitivity to the signal, while the latter is divided into two equal-sized bins. The coefficient \(k_{\mathrm {s/b}}\) appearing in the definition of \(P_{\mathrm {s/b}}\) is introduced to adjust the relative normalisation between \(w( \mathbf {y} | \hbox {t}\overline{\hbox {t}} \mathrm{H})\) and \(w(\mathbf {y} | \hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}})\); likewise for \(k_{\mathrm {h/l}}\). A redefinition of either of the two coefficients would change the corresponding discriminant monotonically, and thus has no impact on its separation power. However, since both variables are analysed in bins of fixed size, an optimisation procedure, based on minimising the expected exclusion limit on the signal strength as described in Sect. 8, is carried out to choose the values that maximise the sensitivity of the analysis. More specifically, the coefficients \(k_{\mathrm {s/b}}\) are first set to the values that remove any local maximum for the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) distribution around \(P_{\mathrm {s/b}}\sim 1\), a condition that is found to provide coefficients that are already close to optimal. Then, starting from this initial point, several values of \(k_{\mathrm {s/b}}\) are scanned and the \(P_{\mathrm {s/b}}\) distributions are recomputed accordingly. An expected upper limit on the signal strength is then evaluated for each choice of \(k_{\mathrm {s/b}}\) using the simulated samples.
This procedure is repeated until a minimum in the expected limit is obtained. A similar procedure is applied for choosing the optimal \(k_{\mathrm {h/l}}\) coefficients.
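The argument that a change of \(k_{\mathrm {s/b}}\) preserves the event ordering but changes the content of fixed-size bins can be illustrated with a toy example. The sketch takes the discriminant to be the signal weight divided by the sum of the signal weight and \(k\) times the background weight; the event weights and the four-bin histogram are hypothetical.

```python
def p_sb(w_sig, w_bkg, k):
    """Ratio-type discriminant in [0, 1]; k rescales the background weight."""
    return w_sig / (w_sig + k * w_bkg)

def histogram(values, n_bins):
    """Fixed-width bins on [0, 1]; a value of exactly 1.0 goes in the last bin."""
    counts = [0] * n_bins
    for v in values:
        counts[min(int(v * n_bins), n_bins - 1)] += 1
    return counts

# Hypothetical (w_sig, w_bkg) pairs for five events.
EVENTS = [(1.0, 9.0), (2.0, 6.0), (3.0, 3.0), (6.0, 2.0), (9.0, 1.0)]

def ranking(k):
    """Event indices sorted by increasing discriminant value."""
    scores = [p_sb(ws, wb, k) for ws, wb in EVENTS]
    return sorted(range(len(EVENTS)), key=lambda i: scores[i])

def binned(k, n_bins=4):
    """Histogram of the discriminant for a given k."""
    return histogram([p_sb(ws, wb, k) for ws, wb in EVENTS], n_bins)

if __name__ == "__main__":
    for k in (0.1, 1.0, 10.0):
        print(k, ranking(k), binned(k))
```

The ranking is the same for every \(k\), whereas the histogram moves from `[1, 1, 1, 2]` at \(k=1\) to `[0, 0, 1, 4]` at \(k=0.1\). This is why the choice of \(k\) matters once the distribution is binned, and why it can be tuned against the expected limit.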

6.3 Background modelling

The background normalisation and the distributions of the event discriminants are derived by using the MC simulated samples described in Sect. 3. In light of the large theoretical uncertainty that affects the prediction of \(\hbox {t}\overline{\hbox {t}}\) plus heavy-flavour [64, 69], the MadGraph sample is further divided into subsamples based on the quark flavour associated with the jets generated in the acceptance region \(p_{\mathrm {T}} >20\,\hbox {GeV} \), \(|\eta |<2.5\). Events are labelled as \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) if at least two jets are matched within \(\sqrt{{(\Delta \eta )^2+(\Delta \phi )^2}}<0.5\) to bottom quarks not originating from the decay of a top quark. If only one jet is matched to a bottom quark, the event is labelled as \(\hbox {t}\overline{\hbox {t}} +\hbox {b} \). These cases typically arise when the second extra \(\hbox {b}\) quark in the event is either too far forward or too soft to be reconstructed as a jet, or because the two extra \(\hbox {b}\) quarks are emitted almost collinearly and end up in a single jet. Similarly, if at least one reconstructed jet is matched to a \(\hbox {c}\) quark, the event is labelled as \(\hbox {t}\overline{\hbox {t}} +\hbox {c}\overline{\hbox {c}} \). In the latter case, single- and double-matched events are treated as one background. If none of the above conditions is satisfied, the event is classified as \(\hbox {t}\overline{\hbox {t}}\) plus light-flavour. Table 1 reports the number of events observed in the various categories, together with the expected signal and background yields. The latter are obtained from the signal-plus-background fit described in Sect. 8.
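The labelling rules above can be summarised in a short sketch. The input format (dictionaries with `pt`, `eta`, `phi`, and `flavour` keys) is a hypothetical choice, and the caller is assumed to pass only quarks that do not originate from a top-quark decay.

```python
import math

def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance sqrt(d_eta^2 + d_phi^2), with d_phi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)

def n_matched_jets(jets, quarks, max_dr=0.5):
    """Number of jets lying within max_dr of at least one of the given quarks."""
    return sum(
        any(delta_r(j["eta"], j["phi"], q["eta"], q["phi"]) < max_dr for q in quarks)
        for j in jets
    )

def label_ttjets(jets, extra_quarks):
    """Classify a tt+jets event from generator-level jets and extra (non-top) quarks."""
    accepted = [j for j in jets if j["pt"] > 20.0 and abs(j["eta"]) < 2.5]
    b_quarks = [q for q in extra_quarks if q["flavour"] == "b"]
    c_quarks = [q for q in extra_quarks if q["flavour"] == "c"]
    n_b = n_matched_jets(accepted, b_quarks)
    if n_b >= 2:
        return "tt+bb"
    if n_b == 1:
        return "tt+b"   # includes two collinear b quarks merged into one jet
    if n_matched_jets(accepted, c_quarks) >= 1:
        return "tt+cc"  # single- and double-matched events are not distinguished
    return "tt+lf"
```

Counting matched jets rather than matched quarks is what sends two almost collinear extra \(\hbox {b}\) quarks to the \(\hbox {t}\overline{\hbox {t}} +\hbox {b} \) label.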

Distribution of the \(P_{\mathrm {s/b}}\) discriminant in the two \(P_{\mathrm {h/l}}\) bins for the high-purity (H) categories. The signal and background yields have been obtained from a combined fit of all nuisance parameters with the constraint \(\mu =1\). The bottom panel of each plot shows the ratio between the observed and the overall background yields. The solid blue line indicates the ratio between the signal-plus-background and the background-only distributions. The shaded and solid green bands correspond to the \(\pm 1\sigma \) uncertainty in the background prediction after the fit

Distribution of the \(P_{\mathrm {s/b}}\) discriminant in the two \(P_{\mathrm {h/l}}\) bins for the low-purity (L) categories. The signal and background yields have been obtained from a combined fit of all nuisance parameters with the constraint \(\mu =1\). The bottom panel of each plot shows the ratio between the observed and the overall background yields. The solid blue line indicates the ratio between the signal-plus-background and the background-only distributions. The shaded and solid green bands correspond to the \(\pm 1\sigma \) uncertainty in the background prediction after the fit

7 Systematic uncertainties

There are a number of systematic uncertainties of experimental and theoretical origin that affect the signal and the background expectations. Each source of systematic uncertainty is associated with a nuisance parameter that modifies the likelihood function used to extract the signal yield, as described in Sect. 8. The prior knowledge on the nuisance parameter is incorporated into the likelihood in a frequentist manner by interpreting it as a posterior arising from a pseudo-measurement [70]. Nuisance parameters can affect either the yield of a process (normalisation uncertainty), or the shape of the \(P_{\mathrm {s/b}}\) and \(P_{\mathrm {h/l}}\) discriminants (shape uncertainty), or both. Multiple processes across several categories can be affected by the same source of uncertainty. In that case the related nuisance parameters are treated as fully correlated.

The uncertainty in the integrated luminosity is estimated to be 2.6 % [71]. The lepton trigger, reconstruction, and identification efficiencies are determined from control regions by using a tag-and-probe procedure. The total uncertainty is evaluated from the statistical uncertainty of the tag-and-probe measurement, plus a systematic uncertainty in the method, and is estimated to be 1.6 % per muon and 1.5 % per electron. It is conservatively approximated by a constant 2 % per charged lepton. The uncertainty in the jet energy scale (JES) ranges from 1 % up to about 8 % of the expected energy scale depending on the jet \(p_{\mathrm {T}} \) and \(|\eta |\) [61]. For each simulated sample, two alternative distributions of the \(P_{\mathrm {s/b}}\) and \(P_{\mathrm {h/l}}\) discriminants are obtained by varying the energy scale of all simulated jets up or down by their uncertainty, and the fit is allowed to interpolate between the nominal and the alternative distributions with a Gaussian prior [70]. A similar procedure is applied to account for the uncertainty related to the jet energy resolution (JER), which ranges between about 5 and 10 % of the expected energy resolution depending on the jet direction. Since the analysis categories are defined in terms of the multiplicity and kinematic properties of the jets, a variation of either the scale or the resolution of the simulated jets can induce a migration of events in or out of the analysis categories, as well as migrations among different categories. The fractional change in the event yield induced by a shift of the JES (JER) ranges from 4 to 13 % (0.5 to 2 %), depending on the process type and on the category. When the JES and JER are varied from their nominal values, the \({\mathbf {p}}_{\mathrm {T}}^{\text {miss}} \) vector is recomputed accordingly. The scale factors applied to correct the CSV discriminator, as described in Sect. 4, are affected by several sources of systematic uncertainty.
In the statistical interpretation, the fit can interpolate between the nominal and the two alternative distributions constructed by varying each scale factor up or down by its uncertainty.
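The idea of letting the fit interpolate between a nominal template and its up/down variations can be sketched as follows. The text does not specify the interpolation scheme; the piecewise-linear, bin-by-bin form used here is an assumption made for illustration, and the bin contents are hypothetical.

```python
def morph(nominal, up, down, theta):
    """Piecewise-linear per-bin interpolation controlled by a nuisance parameter theta:
    theta = 0 gives the nominal template, +1 the 'up' template, -1 the 'down' template.
    Values beyond +-1 extrapolate linearly; bin contents are clipped at zero."""
    target = up if theta >= 0.0 else down
    return [max(n + abs(theta) * (t - n), 0.0) for n, t in zip(nominal, target)]

# Hypothetical bin contents of a discriminant histogram and its JES-shifted versions.
NOMINAL = [10.0, 20.0, 5.0]
JES_UP = [12.0, 19.0, 6.0]
JES_DOWN = [8.0, 21.0, 4.0]

if __name__ == "__main__":
    for theta in (-1.0, 0.0, 0.5, 1.0):
        print(theta, morph(NOMINAL, JES_UP, JES_DOWN, theta))
```

In a fit, `theta` would be a free parameter constrained by its prior, so that both the shape and the yield of the template respond to a single nuisance parameter.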

Top: observed 95 % CL UL on \(\mu \), compared to the median expected limits under the background-only and the signal-plus-background hypotheses. The former are shown together with their \({\pm }1\sigma \) and \({\pm }2\sigma \) CL intervals. Results are shown separately for the individual channels and for their combination. Middle: best-fit value of the signal strength modifier \(\mu \) with its \(\pm 1\sigma \) CL interval obtained from the individual channels and from their combination. Bottom: distribution of the decimal logarithm \(\log (\mathrm {S}/\mathrm {B})\), where \(\mathrm {S}\) (\(\mathrm {B}\)) indicates the total signal (background) yield expected in the bins of the two-dimensional histograms, as obtained from a combined fit with the constraint \(\mu =1\)

Theoretical uncertainties are treated as process-specific if they impact the prediction of one simulated sample at a time. They are instead treated as correlated across several samples if they are related to common aspects of the simulation (e.g. PDF, scale variations). The modelling of the \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) background is affected by a variety of systematic uncertainties. The uncertainty due to the top-quark \(p_{\mathrm {T}}\) modelling is evaluated by varying the reweighting function \(r_{\hbox {t}}(p_{\mathrm {T}} ^{\hbox {t}})\), where \(p_{\mathrm {T}} ^{\hbox {t}}\) is the transverse momentum of the generated top quark, between one (no correction at all) and \(2r_{\hbox {t}}-1\) (the relative correction is doubled). This results in both a shape and a normalisation uncertainty. The latter can be as large as 20 % for a top quark \(p_{\mathrm {T}}\) around 300\(\,\hbox {GeV}\), and corresponds to an overall normalisation uncertainty of about 3–8 % depending on the category. To account for uncertainties in the \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) acceptance, the factorisation and renormalisation scales used in the simulation are varied in a correlated way by factors of \(1/2\) and 2 around their central value. The scale variation is assumed uncorrelated among \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \), \(\hbox {t}\overline{\hbox {t}} +\hbox {b} \), and \(\hbox {t}\overline{\hbox {t}} +\hbox {c}\overline{\hbox {c}} \). In a similar way, independent scale variations are introduced for events with exactly one, two, or three extra partons in the matrix element.
To account for possibly large \(\mathrm {K}\)-factors due to the usage of a LO MC generator, the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \), \(\hbox {t}\overline{\hbox {t}} +\hbox {b} \), and \(\hbox {t}\overline{\hbox {t}} +\hbox {c}\overline{\hbox {c}} \) normalisations predicted by the MadGraph simulation are assigned a 50 % uncertainty each. This value can be seen as a conservative upper limit to the theoretical uncertainty in the \(\hbox {t}\overline{\hbox {t}} +\mathrm {hf}\) cross section achieved to date [64]. Essentially, the approach followed here is to assign large a priori normalisation uncertainties to the different \(\hbox {t}\overline{\hbox {t}} +\text {jets}\) subprocesses, thus allowing the fit to simultaneously adjust their rates. Scale uncertainties in the inclusive theoretical cross sections used to normalise the simulated samples range from a few percent up to 20 %, depending on the process. The PDF uncertainty is treated as fully correlated for all processes that share the same dominant initial state (i.e. \(\hbox {g} \hbox {g} \), \(\hbox {g} \hbox {q} \), or \(\hbox {q} \hbox {q} \)); it ranges between 3 and 9 %, depending on the process. Finally, the effect of the limited size of the simulated samples is accounted for by introducing one nuisance parameter for each bin of the discriminant histograms and for each sample, as described in Ref. [72]. Table 2 summarises the various sources of systematic uncertainty with their impact on the analysis.

8 Results

The statistical interpretation of the results is performed by using the same methodology employed for other CMS Higgs boson analyses and extensively documented in Ref. [2]. The measured signal rate is characterised by a strength modifier \(\mu =\sigma /\sigma _{\mathrm {SM}}\) that scales the Higgs boson production cross section times branching fraction with respect to its SM expectation for \(m_\mathrm{H} =125\) \(\,\hbox {GeV}\). The nuisance parameters, \(\theta \), are incorporated into the likelihood as described in Sect. 7. The total likelihood function \(\mathcal {L}\left( \mu ,\theta \right) \) is the product of a Poissonian likelihood spanning all bins of the \((P_{\mathrm {s/b}},P_{\mathrm {h/l}})\) distributions for all the eight categories, times a likelihood function for the nuisance parameters. Based on the asymptotic properties of the profile likelihood ratio test statistic \(q(\mu ) = -2\ln [\mathcal {L}(\mu ,\hat{\theta }_{\mu })/\mathcal {L}(\hat{\mu },\hat{\theta })]\), confidence intervals on \(\mu \) are set, where \(\hat{\theta }\) and \(\hat{\theta }_{\mu }\) indicate the best-fit value for \(\theta \) obtained when \(\mu \) is floating in the fit or fixed at a hypothesised value, respectively.
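The profile likelihood ratio test statistic \(q(\mu )\) can be illustrated with a toy model. The sketch below assumes a three-bin Poisson likelihood with a single background-normalisation nuisance parameter, a Gaussian constraint term, and a grid-based minimisation. All yields and grid ranges are hypothetical, and the real analysis uses many more bins and nuisance parameters.

```python
import math

def nll(mu, theta, data, sig, bkg, rel_unc=0.5):
    """Negative log-likelihood (up to a constant) for Poisson-distributed bins.
    theta shifts the background normalisation by rel_unc per unit and carries a
    unit-width Gaussian constraint."""
    scale = 1.0 + rel_unc * theta
    if scale <= 0.0:
        return math.inf
    total = 0.5 * theta ** 2
    for n, s, b in zip(data, sig, bkg):
        lam = mu * s + scale * b
        if lam <= 0.0:
            return math.inf
        total += lam - n * math.log(lam)  # the ln(n!) term is constant and dropped
    return total

def profiled_nll(mu, data, sig, bkg, thetas):
    """Minimise over the nuisance parameter at fixed mu (theta-hat_mu)."""
    return min(nll(mu, t, data, sig, bkg) for t in thetas)

def q(mu, data, sig, bkg, mus, thetas):
    """q(mu) = -2 ln [ L(mu, theta-hat_mu) / L(mu-hat, theta-hat) ] on a grid."""
    best = min(profiled_nll(m, data, sig, bkg, thetas) for m in mus)
    return 2.0 * (profiled_nll(mu, data, sig, bkg, thetas) - best)

# Hypothetical yields: the data are set equal to signal plus background.
SIG = [2.0, 5.0, 10.0]
BKG = [100.0, 50.0, 20.0]
DATA = [102.0, 55.0, 30.0]
MUS = [0.1 * i for i in range(0, 51)]         # mu from 0 to 5
THETAS = [0.05 * i for i in range(-60, 61)]   # theta from -3 to 3

if __name__ == "__main__":
    for mu in (0.0, 1.0, 2.0, 3.0):
        print(mu, round(q(mu, DATA, SIG, BKG, MUS, THETAS), 3))
```

Because the toy data equal the signal-plus-background expectation, \(q(\mu )\) vanishes at \(\mu =1\) and grows as the hypothesised \(\mu \) moves away from it. In the asymptotic regime, the \(\pm 1\sigma \) interval on \(\mu \) corresponds approximately to \(q(\mu )\le 1\).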

Figures 3 and 4 show the binned distributions of \((P_{\mathrm {s/b}}, P_{\mathrm {h/l}})\) in the various categories and for the two channels. For visualisation purposes, the two-dimensional histograms are projected onto one dimension by showing first the distribution of \(P_{\mathrm {s/b}}\) for events with \(P_{\mathrm {h/l}}<0.5\) and then for \(P_{\mathrm {h/l}}\ge 0.5\). The observed distributions are compared to the signal-plus-background expectation obtained from a combined fit to all categories with the constraint \(\mu =1\). No evidence of a \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) signal over the background is observed. The statistical interpretation is performed both in terms of exclusion upper limits (UL) at a 95 % CL, where the modified \(\mathrm {CL}_\mathrm {s}\) prescription [73, 74] is adopted to quote confidence intervals, and in terms of the maximum likelihood estimator of the strength modifier (\(\hat{\mu }\)).

Figure 5 (top) shows the observed 95 % CL UL on \(\mu \), compared to the signal-plus-background and to the background-only expectation. Results are shown for the SL and DL channels alone, and for their combination. The observed (background-only expected) exclusion limit is \(\mu <4.2\) (3.3). The best-fit value of \(\mu \) obtained from the individual channels and from their combination is shown in Fig. 5 (middle). A best-fit value \(\hat{\mu }=1.2^{+1.6}_{-1.5}\) is measured from the combined fit. Table 3 summarises the results.

Overall, a consistent distribution of the nuisance parameter pulls is obtained from the combined fit. In the signal-plus-background (background-only) fit, the nuisance parameters that account for the 50 % normalisation uncertainty in the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \), \(\hbox {t}\overline{\hbox {t}} +\hbox {b} \), and \(\hbox {t}\overline{\hbox {t}} +\hbox {c}\overline{\hbox {c}} \) backgrounds are pulled by \(+0.2\) (\(+0.5\)), \(-0.4\) (\(-0.3\)), and \(+0.8\) (\(+0.8\)), respectively, where the pull is defined as the shift of the best-fit estimator from its nominal value in units of its a priori uncertainty. The correlation between the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) normalisation nuisance parameter and the \(\hat{\mu }\) estimator is found to be \(\rho \approx -0.4\), and is the largest entry in the correlation matrix. From an a priori study (i.e. before fitting the nuisance parameters with the likelihood function of the data), the nuisance parameter corresponding to the 50 % normalisation uncertainty in the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background has the largest impact on the median expected limit, which would be around 4 % smaller if that uncertainty were not taken into account. Such a small impact on the expected limit implies that the sensitivity of the analysis is only mildly affected by the lack of a stringent a priori constraint on the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background normalisation; this is also consistent with the observation that the fit effectively constrains the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) rate, narrowing its normalisation uncertainty down to about 25 %.

For illustration, Fig. 5 (bottom) shows the distribution of the decimal logarithm \(\log (\mathrm {S}/\mathrm {B})\), where \(\mathrm {S}/\mathrm {B}\) is the ratio between the signal and background yields in each bin of the two-dimensional histograms, as obtained from a combined fit with the constraint \(\mu =1\). Agreement between the data and the SM expectation is observed over the whole range of this variable.

9 Summary

A search for Higgs boson production in association with a top-quark pair with \(\mathrm{H} \rightarrow \hbox {b}\overline{\hbox {b}} \) has been presented. A total of 19.5\(\,\text {fb}^\text {-1}\) of \(\mathrm {p}\mathrm {p}\) collision data collected by the CMS experiment at \(\sqrt{s}=8\,\hbox {TeV} \) has been analysed. Events with one lepton and at least five jets or two opposite-sign leptons and at least four jets have been considered. Jet \(\hbox {b}\)-tagging information is exploited to suppress the \(\hbox {t}\overline{\hbox {t}}\) plus light-flavour background. A probability density value under either the \(\hbox {t}\overline{\hbox {t}} \mathrm{H} \) or the \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background hypothesis is calculated for each event using an analytical matrix element method. The ratio of probability densities under these two competing hypotheses allows a one-dimensional discriminant to be defined, which is then used together with \(\hbox {b}\)-tagging information in a likelihood analysis to set constraints on the signal strength modifier \(\mu =\sigma /\sigma _{\mathrm {SM}}\).

No evidence of a signal is found. The expected upper limit at a 95 % CL is \(\mu <3.3\) under the background-only hypothesis. The observed limit is \(\mu <4.2\), corresponding to a best-fit value \(\hat{\mu }=1.2^{+1.6}_{-1.5}\). With the present data sample, the analysis documented in this paper yields competitive results compared to those obtained on the same data set and for the same final state by using non-analytical multivariate techniques [28]. However, the matrix element method applied for a maximal separation between the signal and the dominant \(\hbox {t}\overline{\hbox {t}} +\hbox {b}\overline{\hbox {b}} \) background allows for a better control of the systematic uncertainty due to this challenging background. This method represents a promising strategy towards a precise determination of the top quark Yukawa coupling. Once the statistical uncertainty is reduced by the inclusion of the upcoming \(13\,\hbox {TeV} \) collision data, systematic uncertainties will start to play a more important role. By incorporating experimental and theoretical model parameters into an event likelihood, the matrix element method offers a natural handle to minimise the impact of systematic uncertainties on the extraction of the signal.

References

ATLAS Collaboration, Observation of a new particle in the search for the Standard Model Higgs boson with the ATLAS detector at the LHC. Phys. Lett. B 716, 1 (2012). doi:10.1016/j.physletb.2012.08.020. arXiv:1207.7214

CMS Collaboration, Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC. Phys. Lett. B 716, 30 (2012). doi:10.1016/j.physletb.2012.08.021. arXiv:1207.7235

CMS Collaboration, Observation of a new boson with mass near 125 GeV in pp collisions at \(\sqrt{s}\) = 7 and 8 TeV. JHEP 06, 081 (2013). doi:10.1007/JHEP06(2013)081. arXiv:1303.4571

F. Englert, R. Brout, Broken symmetry and the mass of gauge vector mesons. Phys. Rev. Lett. 13, 321 (1964). doi:10.1103/PhysRevLett.13.321

P.W. Higgs, Broken symmetries, massless particles and gauge fields. Phys. Lett. 12, 132 (1964). doi:10.1016/0031-9163(64)91136-9

P.W. Higgs, Broken symmetries and the masses of gauge bosons. Phys. Rev. Lett. 13, 508 (1964). doi:10.1103/PhysRevLett.13.508

G.S. Guralnik, C.R. Hagen, T.W.B. Kibble, Global conservation laws and massless particles. Phys. Rev. Lett. 13, 585 (1964). doi:10.1103/PhysRevLett.13.585

P.W. Higgs, Spontaneous symmetry breakdown without massless bosons. Phys. Rev. 145, 1156 (1966). doi:10.1103/PhysRev.145.1156

T.W.B. Kibble, Symmetry breaking in non-Abelian gauge theories. Phys. Rev. 155, 1554 (1967). doi:10.1103/PhysRev.155.1554

CMS Collaboration, Study of the mass and spin-parity of the Higgs boson candidate via its decays to Z boson pairs. Phys. Rev. Lett. 110, 081803 (2013). doi:10.1103/PhysRevLett.110.081803. arXiv:1212.6639

ATLAS Collaboration, Measurements of Higgs boson production and couplings in diboson final states with the ATLAS detector at the LHC. Phys. Lett. B 726, 88 (2013). doi:10.1016/j.physletb.2013.08.010. arXiv:1307.1427

ATLAS Collaboration, Evidence for the spin-0 nature of the Higgs boson using ATLAS data. Phys. Lett. B 726, 120 (2013). doi:10.1016/j.physletb.2013.08.026. arXiv:1307.1432

CDF and D0 Collaborations, Higgs boson studies at the Tevatron. Phys. Rev. D 88, 052014 (2013). doi:10.1103/PhysRevD.88.052014. arXiv:1303.6346

CMS Collaboration, Evidence for the direct decay of the 125 GeV Higgs boson to fermions. Nature Phys. 10, 557 (2014). doi:10.1038/nphys3005. arXiv:1401.6527

R. Raitio, W.W. Wada, Higgs-boson production at large transverse momentum in quantum chromodynamics. Phys. Rev. D 19, 941 (1979). doi:10.1103/PhysRevD.19.941

J.N. Ng, P. Zakarauskas, QCD-parton calculation of conjoined production of Higgs bosons and heavy flavors in \(p\bar{p}\) collisions. Phys. Rev. D 29, 876 (1984). doi:10.1103/PhysRevD.29.876

Z. Kunszt, Associated production of heavy Higgs boson with top quarks. Nucl. Phys. B 247, 339 (1984). doi:10.1016/0550-3213(84)90553-4

W. Beenakker et al., Higgs radiation off top quarks at the Tevatron and the LHC. Phys. Rev. Lett. 87, 201805 (2001). doi:10.1103/PhysRevLett.87.201805. arXiv:hep-ph/0107081

W. Beenakker et al., NLO QCD corrections to \({\rm t}\bar{\rm t}{\rm H}\) production in hadron collisions. Nucl. Phys. B 653, 151 (2003). doi:10.1016/S0550-3213(03)00044-0. arXiv:hep-ph/0211352

S. Dawson, L.H. Orr, L. Reina, D. Wackeroth, Next-to-leading order QCD correction to \(pp\rightarrow t \bar{t} h\) at the CERN Large Hadron Collider. Phys. Rev. D 67, 071503 (2003). doi:10.1103/PhysRevD.67.071503. arXiv:hep-ph/0211438

S. Dawson et al., Associated Higgs production with top quarks at the large hadron collider: NLO QCD corrections. Phys. Rev. D 68, 034022 (2003). doi:10.1103/PhysRevD.68.034022. arXiv:hep-ph/0305087

M.V. Garzelli, A. Kardos, C.G. Papadopoulos, Z. Trocsanyi, Standard model Higgs boson production in association with a top anti-top pair at NLO with parton showering. Europhys. Lett. 96, 11001 (2011). doi:10.1209/0295-5075/96/11001. arXiv:1108.0387

LHC Higgs Cross Section Working Group, Handbook of LHC Higgs cross sections: 1. inclusive observables. CERN report CERN-2011-002 (2011). doi:10.5170/CERN-2011-002. arXiv:1101.0593

LHC Higgs Cross Section Working Group, Handbook of LHC Higgs cross sections: 3. Higgs properties. CERN report CERN-2013-004 (2013). doi:10.5170/CERN-2013-004. arXiv:1307.1347

CDF Collaboration, Search for the standard model Higgs boson produced in association with top quarks using the full CDF data set. Phys. Rev. Lett. 109, 181802 (2012). doi:10.1103/PhysRevLett.109.181802. arXiv:1208.2662

ATLAS Collaboration, Search for \({\rm H}\rightarrow \gamma \gamma \) produced in association with top quarks and constraints on the Yukawa coupling between the top quark and the Higgs boson using data taken at 7 TeV and 8 TeV with the ATLAS detector. Phys. Lett. B 740, 222 (2015). doi:10.1016/j.physletb.2014.11.049. arXiv:1409.3122

CMS Collaboration, Search for the standard model Higgs boson produced in association with a top-quark pair in pp collisions at the LHC. JHEP 05, 145 (2013). doi:10.1007/JHEP05(2013)145

CMS Collaboration, Search for the associated production of the Higgs boson with a top-quark pair. JHEP 09, 087 (2014). doi:10.1007/JHEP09(2014)087. [Erratum: doi:10.1007/JHEP10(2014)106]

CMS Collaboration, The CMS experiment at the CERN LHC. JINST 3, S08004 (2008). doi:10.1088/1748-0221/3/08/S08004

D0 Collaboration, A precision measurement of the mass of the top quark. Nature 429, 638 (2004). doi:10.1038/nature02589. arXiv:hep-ex/0406031

D0 Collaboration, Helicity of the \(W\) boson in lepton + jets \(t \bar{t}\) events. Phys. Lett. B 617, 23 (2005). doi:10.1016/j.physletb.2005.04.069. arXiv:hep-ex/0404040

L. Neyman, Outline of a theory of statistical estimation based on the classical theory of probability. Philos. Trans. R. Soc. Lond. 236, 333 (1937). doi:10.1098/rsta.1937.0005

CDF Collaboration, Search for a standard model Higgs boson in \(WH \rightarrow \ell \nu \bar{b}\) in \(p\bar{p}\) collisions at \(\sqrt{s} = 1.96\) TeV. Phys. Rev. Lett. 103, 101802 (2009). doi:10.1103/PhysRevLett. 103.101802. arXiv:0906.5613

CDF Collaboration, Search for standard model Higgs boson production in association with a \(W\) boson using a matrix element technique at CDF in \(p\bar{p}\) collisions at \(\sqrt{s} =19.6\) TeV. Phys. Rev. Lett. D 85, 072001 (2012). doi:10.1103/PhysRevD.85.072001. arXiv:1112.4358

P. Artoisenet, P. de Aquino, F. Maltoni, O. Mattelaer, Unravelling \(t\bar{t}\) via the matrix element method. Phys. Rev. Lett. 111, 091802 (2013). doi:10.1103/PhysRevLett. 111.091802. arXiv:1304.6414

P. Artoisenet, V. Lemaître, F. Maltoni, O. Mattelaer, Automation of the matrix element reweighting method. JHEP 12, 068 (2010). doi:10.1007/JHEP12(2010)068. arXiv:1007.3300

S. Agostinelli et al., GEANT4: a simulation toolkit. Nucl. Instrum. Meth. A 506, 250 (2003). doi:10.1016/S0168-9002(03)01368-8

T. Sjöstrand, S. Mrenna, P. Skands, PYTHIA 6.4 physics and manual. JHEP 05, 026 (2006). doi:10.1088/1126-6708/2006/05/026. arXiv:hep-ph/0603175

J. Alwall et al., MadGraph 5: going beyond. JHEP 06, 128 (2011). doi:10.1007/JHEP06(2011)128. arXiv:1106.0522

M. Czakon, P. Fiedler, A. Mitov, Total top-quark pair production cross-section at hadron colliders through O(\(\alpha _S^4\)). Phys. Rev. Lett. 110, 252004 (2013). doi:10.1103/PhysRevLett.110.252004. arXiv:1303.6254

CMS Collaboration, Measurement of differential top-quark-pair production cross sections in pp collisions at \(\sqrt{s} = 7\text{ TeV }\). Eur. Phys. J. C 73, 2339 (2013). doi:10.1140/epjc/s10052-013-2339-4

R. Gavin, Y. Li, F. Petriello, S. Quackenbush, FEWZ 2.0: a code for hadronic Z production at next-to-next-to-leading order. Comput. Phys. Commun. 182, 2388 (2011). doi:10.1016/j.cpc.2011.06.008. arXiv:1011.3540

M.V. Garzelli, A. Kardos, C.G. Papadopoulos, Z. Trocsanyi, \({\rm t}\bar{{\rm t}}{\rm W}\) and \({\rm t}\bar{{\rm t}}{\rm Z}\) hadroproduction at NLO accuracy in QCD with parton shower and hadronization effects. JHEP 11, 056 (2012). doi:10.1007/JHEP11(2012)056. arXiv:1208.2665

J.M. Campbell, R.K. Ellis, \({\rm t}\bar{{\rm t}}{\rm W}^{\pm }\) production and decay at NLO. JHEP 07, 052 (2012). doi:10.1007/JHEP07(2012)052. arXiv:1204.5678

P. Nason, A new method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). doi:10.1088/1126-6708/2004/11/040. arXiv:hep-ph/0409146

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. JHEP 11, 070 (2007). doi:10.1088/1126-6708/2007/11/070. arXiv:0709.2092

S. Alioli, P. Nason, C. Oleari, E. Re, A general framework for implementing NLO calculations in shower Monte Carlo programs: the POWHEG BOX. JHEP 06, 043 (2010). doi:10.1007/JHEP06(2010)043. arXiv:1002.2581

T. Melia, P. Nason, R. Rontsch, G. Zanderighi, \({\rm W}^+{\rm W}^-\), WZ and ZZ production in the POWHEG BOX. JHEP 11, 078 (2011). doi:10.1007/JHEP11(2011)078. arXiv:1107.5051

E. Re, Single-top \(Wt\)-channel production matched with parton showers using the POWHEG method. Eur. Phys. J. C 71, 1547 (2011). doi:10.1140/epjc/s10052-011-1547-z. arXiv:1009.2450

S. Alioli, P. Nason, C. Oleari, E. Re, NLO single-top production matched with shower in POWHEG: \(s\)- and \(t\)-channel contributions. JHEP 09, 111 (2009). doi:10.1088/1126-6708/2009/09/111. arXiv:0907.4076. [Erratum: doi:10.1007/JHEP02(2010)011]

J.M. Campbell, R.K. Ellis, MCFM for the Tevatron and the LHC. Nucl. Phys. Proc. Suppl. 205–206, 10 (2010). doi:10.1016/j.nuclphysbps.2010.08.011. arXiv:1007.3492

J. Pumplin et al., New generation of parton distributions with uncertainties from global QCD analysis. JHEP 07, 012 (2002). doi:10.1088/1126-6708/2002/07/012. arXiv:hep-ph/0201195

P.M. Nadolsky et al., Implications of CTEQ global analysis for collider observables. Phys. Rev. D 78, 013004 (2008). doi:10.1103/PhysRevD.78.013004. arXiv:0802.0007

CMS Collaboration, Particle-flow event reconstruction in CMS and performance for jets, taus, and \(E_{\rm {T}}^{{\rm miss}}\). CMS physics analysis summary CMS-PAS-PFT-09-001 (2009)

CMS Collaboration, Commissioning of the particle-flow event reconstruction with the first LHC collisions recorded in the CMS detector. CMS physics analysis summary CMS-PAS-PFT-10-001 (2010)

CMS Collaboration, Performance of CMS muon reconstruction in pp collision events at \(\sqrt{s} = 7\) TeV. JINST 7, P10002 (2012). doi:10.1088/1748-0221/7/10/P10002. arXiv:1206.4071

S. Baffioni et al., Electron reconstruction in CMS. Eur. Phys. J. C 49, 1099 (2007). doi:10.1140/epjc/s10052-006-0175-5

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_t\) jet clustering algorithm. JHEP 04, 063 (2008). doi:10.1088/1126-6708/2008/04/063. arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). doi:10.1140/epjc/s10052-012-1896-2. arXiv:1111.6097

M. Cacciari, G.P. Salam, Dispelling the \(N^{3}\) myth for the \(k_t\) jet-finder. Phys. Lett. B 641, 57 (2006). doi:10.1016/j.physletb.2006.08.037. arXiv:hep-ph/0512210

CMS Collaboration, Determination of jet energy calibration and transverse momentum resolution in CMS. JINST 6, P11002 (2011). doi:10.1088/1748-0221/6/11/P11002. arXiv:1107.4277

CMS Collaboration, Identification of b-quark jets with the CMS experiment. JINST 8, P04013 (2013). doi:10.1088/1748-0221/8/04/P04013. arXiv:1211.4462

CMS Collaboration, Measurements of inclusive W and Z cross sections in pp collisions at \(\sqrt{s} = 7\) TeV. JHEP 01, 080 (2011). doi:10.1007/JHEP01(2011)080

F. Cascioli et al., NLO matching for \({\rm t}\bar{{\rm t}}{\rm b}\bar{{\rm b}}\) production with massive b-quarks. Phys. Lett. B 734, 210 (2014). doi:10.1016/j.physletb.2014.05.040. arXiv:1309.5912

G.P. Lepage, A new algorithm for adaptive multidimensional integration. J. Comput. Phys. 27, 192 (1978). doi:10.1016/0021-9991(78)90004-9

J. Alwall, S. de Visscher, F. Maltoni, QCD radiation in the production of heavy colored particles at the LHC. JHEP 02, 017 (2009). doi:10.1088/1126-6708/2009/02/017. arXiv:0810.5350

F. Cascioli, P. Maierhöfer, S. Pozzorini, Scattering amplitudes with open loops. Phys. Rev. Lett. 108, 111601 (2012). doi:10.1103/PhysRevLett.108.111601. arXiv:1111.5206

N. Kauer, Narrow-width approximation limitations. Phys. Lett. B 649, 413 (2007). doi:10.1016/j.physletb.2007.04.036. arXiv:hep-ph/0703077

A. Bredenstein, A. Denner, S. Dittmaier, S. Pozzorini, Next-to-leading order QCD corrections to \(pp\rightarrow t\bar{t}b\bar{b} +X\) at the LHC. Phys. Rev. Lett. 103, 012002 (2009). doi:10.1103/PhysRevLett.103.012002. arXiv:0905.0110

ATLAS and CMS Collaborations, LHC Higgs Combination Group, Procedure for the LHC Higgs boson search combination in Summer 2011. ATL-PHYS-PUB/CMS NOTE 2011–11/2011-005 (2011)

CMS Collaboration, CMS luminosity based on pixel cluster counting—summer 2013 update. CMS physics analysis summary CMS-PAS-LUM-13-001 (2013)

R. Barlow, C. Beeston, Fitting using finite Monte Carlo samples. Comput. Phys. Commun. 77, 219 (1993). doi:10.1016/0010-4655(93)90005-W

A.L. Read, Presentation of search results: the \(CL_s\) technique. J. Phys. G 28, 2693 (2002). doi:10.1088/0954-3899/28/10/313

T. Junk, Confidence level computation for combining searches with small statistics. Nucl. Instrum. Meth. A 434, 435 (1999). doi:10.1016/S0168-9002(99)00498-2. arXiv:hep-ex/9902006

Acknowledgments

We congratulate our colleagues in the CERN accelerator departments for the excellent performance of the LHC and thank the technical and administrative staffs at CERN and at other CMS institutes for their contributions to the success of the CMS effort. In addition, we gratefully acknowledge the computing centres and personnel of the Worldwide LHC Computing Grid for delivering so effectively the computing infrastructure essential to our analyses. Finally, we acknowledge the enduring support for the construction and operation of the LHC and the CMS detector provided by the following funding agencies: the Austrian Federal Ministry of Science, Research and Economy and the Austrian Science Fund; the Belgian Fonds de la Recherche Scientifique, and Fonds voor Wetenschappelijk Onderzoek; the Brazilian Funding Agencies (CNPq, CAPES, FAPERJ, and FAPESP); the Bulgarian Ministry of Education and Science; CERN; the Chinese Academy of Sciences, Ministry of Science and Technology, and National Natural Science Foundation of China; the Colombian Funding Agency (COLCIENCIAS); the Croatian Ministry of Science, Education and Sport, and the Croatian Science Foundation; the Research Promotion Foundation, Cyprus; the Ministry of Education and Research, Estonian Research Council via IUT23-4 and IUT23-6 and European Regional Development Fund, Estonia; the Academy of Finland, Finnish Ministry of Education and Culture, and Helsinki Institute of Physics; the Institut National de Physique Nucléaire et de Physique des Particules / CNRS, and Commissariat à l’Énergie Atomique et aux Énergies Alternatives / CEA, France; the Bundesministerium für Bildung und Forschung, Deutsche Forschungsgemeinschaft, and Helmholtz-Gemeinschaft Deutscher Forschungszentren, Germany; the General Secretariat for Research and Technology, Greece; the National Scientific Research Foundation, and National Innovation Office, Hungary; the Department of Atomic Energy and the Department of Science and Technology, India; the Institute for Studies in Theoretical Physics and Mathematics, Iran; the Science Foundation, Ireland; the Istituto Nazionale di Fisica Nucleare, Italy; the Ministry of Science, ICT and Future Planning, and National Research Foundation (NRF), Republic of Korea; the Lithuanian Academy of Sciences; the Ministry of Education, and University of Malaya (Malaysia); the Mexican Funding Agencies (CINVESTAV, CONACYT, SEP, and UASLP-FAI); the Ministry of Business, Innovation and Employment, New Zealand; the Pakistan Atomic Energy Commission; the Ministry of Science and Higher Education and the National Science Centre, Poland; the Fundação para a Ciência e a Tecnologia, Portugal; JINR, Dubna; the Ministry of Education and Science of the Russian Federation, the Federal Agency of Atomic Energy of the Russian Federation, Russian Academy of Sciences, and the Russian Foundation for Basic Research; the Ministry of Education, Science and Technological Development of Serbia; the Secretaría de Estado de Investigación, Desarrollo e Innovación and Programa Consolider-Ingenio 2010, Spain; the Swiss Funding Agencies (ETH Board, ETH Zurich, PSI, SNF, UniZH, Canton Zurich, and SER); the Ministry of Science and Technology, Taipei; the Thailand Center of Excellence in Physics, the Institute for the Promotion of Teaching Science and Technology of Thailand, Special Task Force for Activating Research and the National Science and Technology Development Agency of Thailand; the Scientific and Technical Research Council of Turkey, and Turkish Atomic Energy Authority; the National Academy of Sciences of Ukraine, and State Fund for Fundamental Researches, Ukraine; the Science and Technology Facilities Council, UK; the US Department of Energy, and the US National Science Foundation. Individuals have received support from the Marie-Curie programme and the European Research Council and EPLANET (European Union); the Leventis Foundation; the A. P. Sloan Foundation; the Alexander von Humboldt Foundation; the Belgian Federal Science Policy Office; the Fonds pour la Formation à la Recherche dans l’Industrie et dans l’Agriculture (FRIA-Belgium); the Agentschap voor Innovatie door Wetenschap en Technologie (IWT-Belgium); the Ministry of Education, Youth and Sports (MEYS) of the Czech Republic; the Council of Science and Industrial Research, India; the HOMING PLUS programme of Foundation for Polish Science, cofinanced from European Union, Regional Development Fund; the Compagnia di San Paolo (Torino); the Consorzio per la Fisica (Trieste); MIUR project 20108T4XTM (Italy); the Thalis and Aristeia programmes cofinanced by EU-ESF and the Greek NSRF; and the National Priorities Research Program by Qatar National Research Fund.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3.

Khachatryan, V., Sirunyan, A.M., Tumasyan, A. et al. Search for a standard model Higgs boson produced in association with a top-quark pair and decaying to bottom quarks using a matrix element method. Eur. Phys. J. C 75, 251 (2015). https://doi.org/10.1140/epjc/s10052-015-3454-1

DOI: https://doi.org/10.1140/epjc/s10052-015-3454-1