Abstract

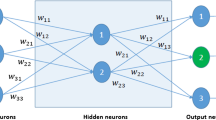

Motivated by the problem of training multilayer perceptrons in neural networks, we consider the problem of minimizing $E(x)=\sum_{i=1}^{n} f_i(\xi_i \cdot x)$, where $\xi_i \in \mathbb{R}^s$, $1 \le i \le n$, and each $f_i(\xi_i \cdot x)$ is a ridge function. We show that when $n$ is small the problem of minimizing $E$ can be treated as one of minimizing univariate functions, and we use the gradient algorithms for minimizing $E$ when $n$ is moderately large. For large $n$, we present the online gradient algorithms and especially show the monotonicity and weak convergence of the algorithms.
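As a rough illustration of the two gradient schemes named in the abstract, the sketch below contrasts a batch gradient step, which uses the full gradient $\nabla E(x)=\sum_{i=1}^{n} f_i'(\xi_i \cdot x)\,\xi_i$, with an online pass that updates $x$ after each single term. It is not the authors' algorithm or analysis. The choice $f_i(t)=\tfrac12(\tanh t - y_i)^2$, the data `xis`, the point `x_true`, the constant step size `eta`, and all function names are assumptions made only for this example.

```python
import math

def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def make_terms(targets):
    """Build (f_i, f_i') pairs for the ASSUMED choice f_i(t) = 0.5*(tanh(t) - y_i)**2."""
    terms = []
    for y in targets:
        f = lambda t, y=y: 0.5 * (math.tanh(t) - y) ** 2
        df = lambda t, y=y: (math.tanh(t) - y) * (1.0 - math.tanh(t) ** 2)
        terms.append((f, df))
    return terms

def energy(x, xis, terms):
    """E(x) = sum_i f_i(xi_i . x)."""
    return sum(f(dot(xi, x)) for xi, (f, _) in zip(xis, terms))

def batch_gradient_step(x, xis, terms, eta):
    """One step x <- x - eta * grad E(x), using all n terms at once."""
    grad = [0.0] * len(x)
    for xi, (_, df) in zip(xis, terms):
        g = df(dot(xi, x))  # scalar derivative f_i'(xi_i . x)
        for k in range(len(x)):
            grad[k] += g * xi[k]
    return [xk - eta * gk for xk, gk in zip(x, grad)]

def online_gradient_epoch(x, xis, terms, eta):
    """One cyclic pass: update x after each individual term f_i."""
    x = list(x)
    for xi, (_, df) in zip(xis, terms):
        g = df(dot(xi, x))
        x = [xk - eta * g * xik for xk, xik in zip(x, xi)]
    return x

# Hypothetical toy data in R^2 (s = 2, n = 4); targets come from x_true so min E = 0.
xis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
x_true = [0.5, -0.3]
terms = make_terms([math.tanh(dot(xi, x_true)) for xi in xis])

x0 = [0.0, 0.0]
eta = 0.05  # assumed constant step size, chosen small for this toy problem
x_batch, x_online = x0, x0
batch_energies = [energy(x0, xis, terms)]
for _ in range(100):
    x_batch = batch_gradient_step(x_batch, xis, terms, eta)
    x_online = online_gradient_epoch(x_online, xis, terms, eta)
    batch_energies.append(energy(x_batch, xis, terms))

print("E(x0)       =", batch_energies[0])
print("E(x_batch)  =", batch_energies[-1])
print("E(x_online) =", energy(x_online, xis, terms))
```

Each online update touches a single $\xi_i$ and costs O(s) rather than O(ns), which is the usual reason for preferring the online variant when $n$ is large. Whether the error decreases monotonically depends on the step size and on the $f_i$; the sketch does not reproduce the conditions under which the paper proves this.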

Cite this article

Wu, W., Feng, G. & Li, X. Training Multilayer Perceptrons Via Minimization of Sum of Ridge Functions. Advances in Computational Mathematics 17, 331–347 (2002). https://doi.org/10.1023/A:1016249727555
