Abstract

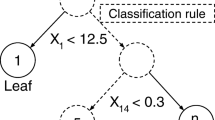

Classification of large datasets is an important data mining problem. Many classification algorithms have been proposed in the literature, but studies have shown that so far no algorithm uniformly outperforms all others in terms of quality. In this paper, we present a unifying framework called RainForest for classification tree construction that separates the scalability aspects of algorithms for constructing a tree from the central features that determine the quality of the tree. The generic algorithm is easy to instantiate with specific split selection methods from the literature (including C4.5, CART, CHAID, FACT, ID3 and extensions, SLIQ, SPRINT and QUEST).

In addition to its generality, in that it yields scalable versions of a wide range of classification algorithms, our approach also improves performance by more than a factor of three over the SPRINT algorithm, the fastest scalable classification algorithm proposed previously. In contrast to SPRINT, however, our generic algorithm requires a certain minimum amount of main memory, proportional to the number of distinct values in a column of the input relation. Given current main memory costs, this requirement is readily met in most if not all workloads.
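The separation described above can be pictured with a minimal Python sketch. It is an illustration under stated assumptions, not the paper's implementation: the function names (`build_count_tables`, `information_gain`, `choose_split`), the toy rows, and the choice of an ID3-style information gain criterion are all hypothetical. The data-access step makes one scan and keeps, per attribute, a table of class counts for each distinct value, so its size grows with the number of distinct values in that column. The split criterion sees only those counts, so a different criterion could be swapped in without touching the scan.

```python
from collections import defaultdict
from math import log2


def build_count_tables(rows, attributes, label):
    """Scalability side: one scan, counting class labels per attribute value.

    Memory per attribute grows with its number of distinct values,
    not with the number of rows.
    """
    tables = {a: defaultdict(lambda: defaultdict(int)) for a in attributes}
    for row in rows:
        for a in attributes:
            tables[a][row[a]][row[label]] += 1
    return tables


def entropy(class_counts):
    """Shannon entropy (in bits) of a class-count dictionary."""
    total = sum(class_counts.values())
    return -sum((c / total) * log2(c / total)
                for c in class_counts.values() if c)


def information_gain(table):
    """Quality side (assumed criterion): ID3-style gain from counts only."""
    parent = defaultdict(int)
    for class_counts in table.values():
        for cls, c in class_counts.items():
            parent[cls] += c
    total = sum(parent.values())
    remainder = sum(sum(cc.values()) / total * entropy(cc)
                    for cc in table.values())
    return entropy(parent) - remainder


def choose_split(tables, criterion):
    """Pick the attribute whose count table scores highest."""
    return max(tables, key=lambda a: criterion(tables[a]))


# Toy data, invented for illustration only.
rows = [
    {"outlook": "sunny", "windy": "no", "play": "no"},
    {"outlook": "sunny", "windy": "yes", "play": "no"},
    {"outlook": "rainy", "windy": "no", "play": "yes"},
    {"outlook": "rainy", "windy": "yes", "play": "yes"},
]
tables = build_count_tables(rows, ["outlook", "windy"], "play")
best = choose_split(tables, information_gain)
print(best)
```

Passing a different function as `criterion` changes which tree is grown while the scan over the data stays the same, which is the kind of decoupling the abstract describes.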




Cite this article

Gehrke, J., Ramakrishnan, R. & Ganti, V. RainForest—A Framework for Fast Decision Tree Construction of Large Datasets. Data Mining and Knowledge Discovery 4, 127–162 (2000). https://doi.org/10.1023/A:1009839829793


DOI: https://doi.org/10.1023/A:1009839829793